As part of our ongoing analysis of Quito’s public transportation, we reconstructed the on-the-ground experience of traveling through the city by public bus and then used machine learning to analyze the attributes of individual bus stops.

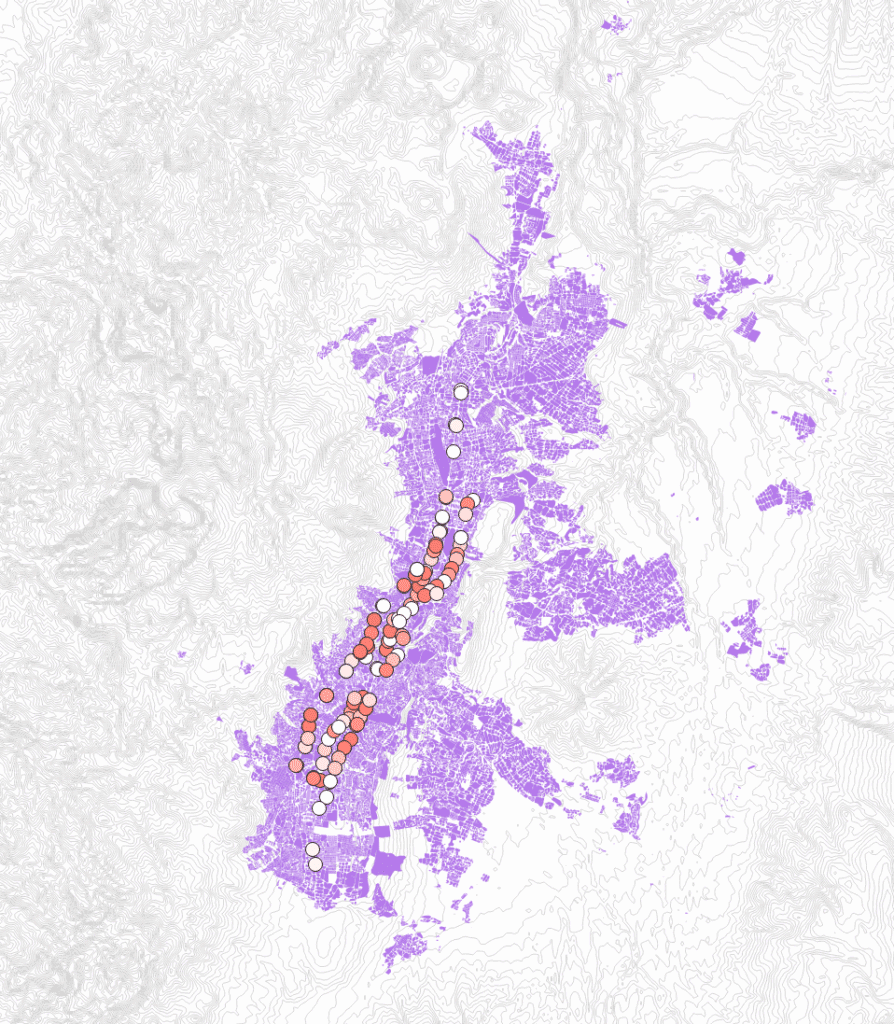

We began by creating a trajectory that plausibly represents how a woman caregiver might move through the city, given her care tasks and the different needs of her family. We based this representation on demographic data from the city-wide Quito Como Vamos surveys and on personas created by the study researchers to show typical characteristics of Quito residents, paired with findings from women’s mobility studies conducted in particular neighborhoods of Quito and with results of mobility, safety, and security research.

Once we had planned the routes, we georeferenced them by drawing them into their own shapefile.

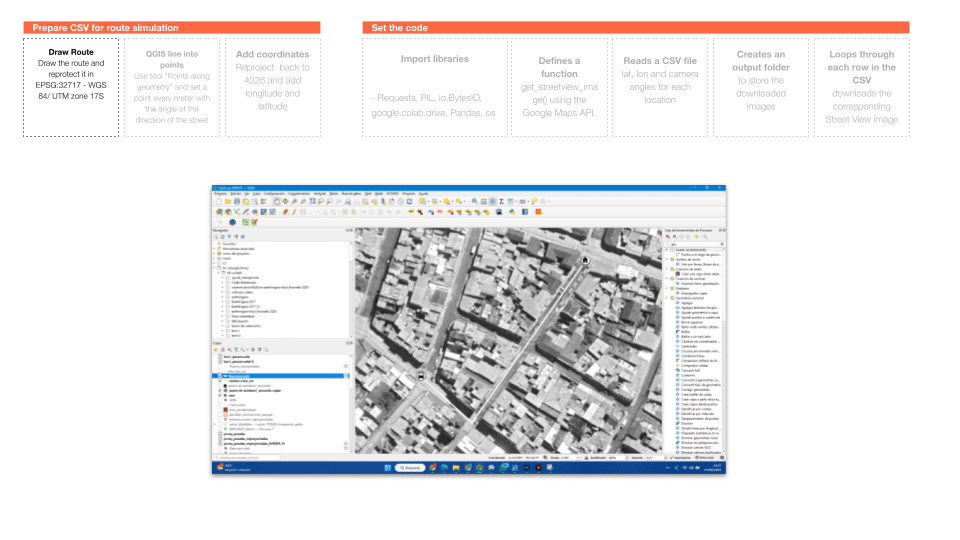

We next divided the paths into a series of points in QGIS.

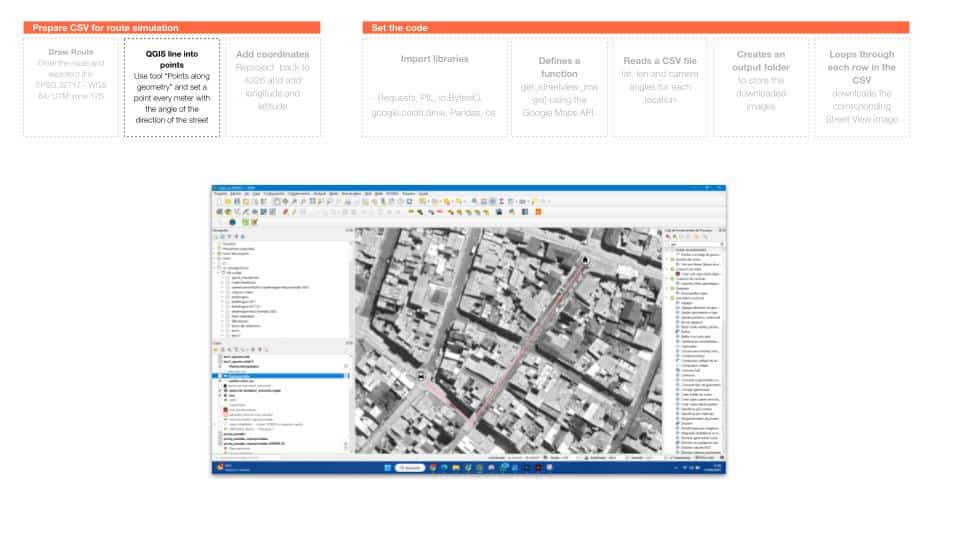

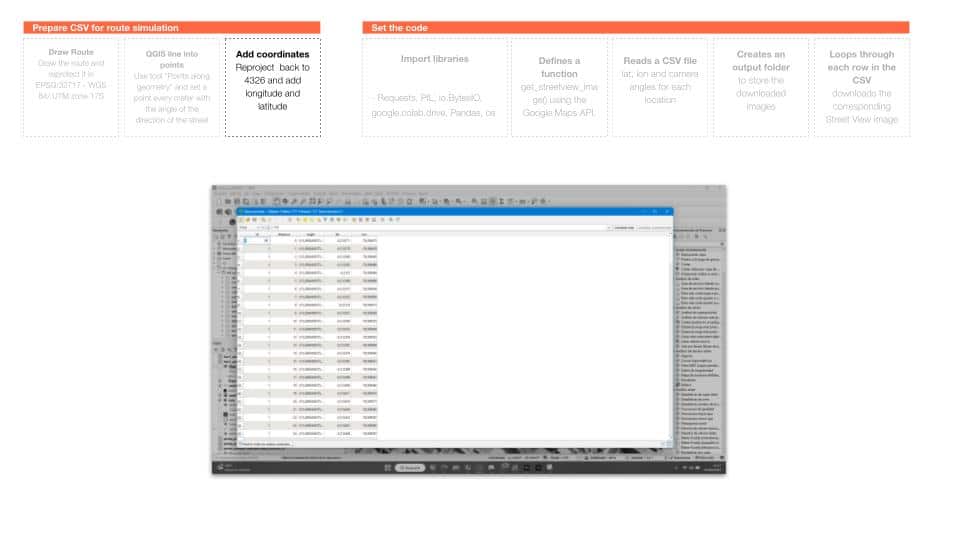
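The division itself was done in QGIS, but the underlying idea can be sketched in a few lines of Python. This is a minimal illustration rather than the workflow we used: it assumes the path is a list of `(x, y)` vertices in a projected CRS with metre units, and the function name `points_along_path` and the spacing value are hypothetical.

```python
import math


def points_along_path(vertices, spacing):
    """Return points every `spacing` units along a polyline of (x, y) vertices.

    Assumes planar (projected) coordinates, so distances are Euclidean.
    """
    if spacing <= 0:
        raise ValueError("spacing must be positive")
    points = [vertices[0]]
    next_at = spacing  # distance along the path of the next point to emit
    travelled = 0.0    # distance along the path at the start of this segment
    for (x0, y0), (x1, y1) in zip(vertices, vertices[1:]):
        seg_len = math.hypot(x1 - x0, y1 - y0)
        while seg_len > 0 and next_at <= travelled + seg_len:
            t = (next_at - travelled) / seg_len  # fraction along this segment
            points.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
            next_at += spacing
        travelled += seg_len
    return points
```

For example, `points_along_path(route_vertices, 25)` would place a viewpoint roughly every 25 metres along a route, where `route_vertices` stands in for the vertices of one digitized path.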

Taking these points, we then reprojected them to the correct CRS and added the longitude and latitude of each point to the attribute table. This allowed us to turn a path into a sequence of specific viewpoints along the route.

We then wrote a script that connects to the Google Maps API to download a street view image for each point, using its latitude and longitude together with a camera angle. Because we wanted the view our persona would have while traveling along the road, the camera had to point along the path of the road.
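One way to obtain a camera angle that follows the road is to take the compass bearing from each point to the next point on the path. The sketch below illustrates that idea and builds a request URL for Google's Street View Static API; it is not our original script. The function names and default values are assumptions, and `api_key` is a placeholder for your own key.

```python
import math
from urllib.parse import urlencode

STREETVIEW_ENDPOINT = "https://maps.googleapis.com/maps/api/streetview"


def bearing_degrees(lat1, lon1, lat2, lon2):
    """Initial compass bearing from point 1 to point 2 (0 = north, 90 = east)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    x = math.sin(dlon) * math.cos(phi2)
    y = (math.cos(phi1) * math.sin(phi2)
         - math.sin(phi1) * math.cos(phi2) * math.cos(dlon))
    return math.degrees(math.atan2(x, y)) % 360


def streetview_url(lat, lon, heading, api_key, size="640x640", fov=90, pitch=0):
    """Build a Street View Static API URL for one viewpoint (no request is sent)."""
    params = {
        "size": size,                  # image size in pixels
        "location": f"{lat},{lon}",    # viewpoint coordinates
        "heading": f"{heading:.1f}",   # camera direction in degrees from north
        "fov": fov,                    # horizontal field of view (assumed value)
        "pitch": pitch,                # 0 keeps the camera level
        "key": api_key,
    }
    return f"{STREETVIEW_ENDPOINT}?{urlencode(params)}"
```

Given consecutive points `a` and `b` on a route, `streetview_url(a_lat, a_lon, bearing_degrees(a_lat, a_lon, b_lat, b_lon), api_key)` yields the URL of an image looking from `a` toward `b`.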

This gave us the following result:

Bus stops are an important transition point in our persona’s trajectory, yet they are not always well equipped, so we wanted to better understand them as a public infrastructure network through the lens of a woman commuter. To automate the detection of bus stop typologies in Quito, we began with the coordinates of all municipal and cooperative (private) bus stops in the city, more than 4,000 in total. We ran these through the same script, this time capturing views around the full panorama: the camera took six pictures at 60° intervals, which together cover the full 360° view.
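The six camera directions follow directly from dividing the circle into equal steps. A minimal sketch, with a hypothetical function name and an assumed starting direction of due north:

```python
def panorama_headings(n_views=6, start=0.0):
    """Return evenly spaced camera headings (degrees from north) covering 360°."""
    step = 360.0 / n_views
    return [(start + i * step) % 360 for i in range(n_views)]


# Six views at 60° intervals, starting from north (an assumed starting point).
headings = panorama_headings()
```

Each heading can then be passed to the image request for a bus stop, producing six images per stop.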

For some detection scripts, these separate images were sufficient. But because adjacent images overlap, we wrote another script that uses OpenCV image-processing routines to merge all six into a single, undistorted panoramic image.
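OpenCV offers more than one way to merge overlapping images. The sketch below uses its high-level `Stitcher` class as one possibility; it is not necessarily the routine our script relied on, and the file naming scheme (`<stop_id>_<heading>.jpg`) and both function names are hypothetical.

```python
from pathlib import Path


def view_paths(image_dir, stop_id, headings=(0, 60, 120, 180, 240, 300)):
    """List the six image files for one stop, ordered by camera heading.

    Assumes a hypothetical layout: <image_dir>/<stop_id>_<heading>.jpg
    """
    return [Path(image_dir) / f"{stop_id}_{h:03d}.jpg" for h in headings]


def stitch_views(paths):
    """Merge overlapping views into one panorama with OpenCV's Stitcher."""
    import cv2  # imported here so view_paths works without OpenCV installed

    images = [cv2.imread(str(p)) for p in paths]
    if any(img is None for img in images):
        raise FileNotFoundError("at least one view could not be read")
    stitcher = cv2.Stitcher_create(cv2.Stitcher_PANORAMA)
    status, panorama = stitcher.stitch(images)
    if status != cv2.Stitcher_OK:
        # Stitching can fail when neighbouring views share too few features.
        raise RuntimeError(f"stitching failed with status {status}")
    return panorama
```

A call such as `stitch_views(view_paths("images", "stop_0012"))` would return the merged image as an array, which can be saved with `cv2.imwrite`. Stops where stitching fails can fall back to the six separate images.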

We developed another script that used OpenAI's GPT-4o mini model to analyze each image and flag conditions related to the safety and comfort of each bus stop. The script output a JSON file that could be joined to the attribute table of the bus stops, allowing the characteristics of each stop to be mapped in QGIS.
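The join between the model output and the attribute table can be sketched as a simple key-based merge. This illustration assumes both tables are lists of records that share an identifier field; the field names (`stop_id`, `has_shelter`, `has_lighting`) and the function name are hypothetical, not the ones in our data.

```python
import json


def merge_attributes(stops, conditions, key="stop_id"):
    """Attach detected conditions to each bus stop record by a shared key.

    Stops without a matching conditions record are kept unchanged.
    """
    by_id = {row[key]: row for row in conditions}
    merged = []
    for stop in stops:
        extra = by_id.get(stop[key], {})
        merged.append({**stop, **{k: v for k, v in extra.items() if k != key}})
    return merged


# Hypothetical example records, standing in for the real attribute table
# and for the JSON produced by the image-analysis script.
stops = [
    {"stop_id": 1, "lat": -0.18, "lon": -78.47},
    {"stop_id": 2, "lat": -0.21, "lon": -78.50},
]
conditions = json.loads('[{"stop_id": 1, "has_shelter": true, "has_lighting": false}]')
merged = merge_attributes(stops, conditions)
```

The merged records can be written to CSV or GeoJSON and loaded into QGIS, where each detected condition becomes a field that can be styled or filtered.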