Global Problem

Buildings account for around 40% of global energy-related carbon emissions.

The majority of these emissions come from operational carbon, of which heating, cooling, and lighting are a significant component.

Passive design strategies can cut these carbon and financial costs by reducing the need for mechanical heating and cooling and for electric lighting.

Passive strategies and building performance can be assessed in early design stages through climate and environmental performance simulation.

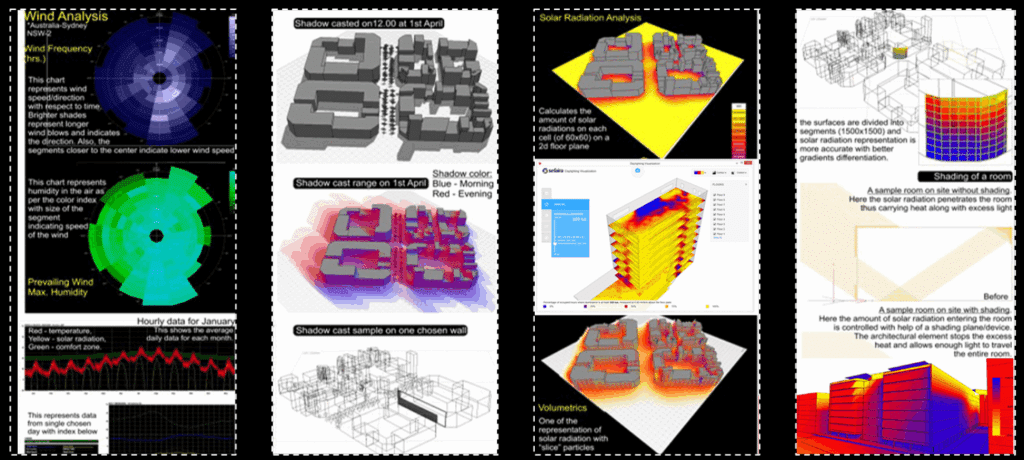

Figure 1 shows examples of climate and environmental performance simulations.

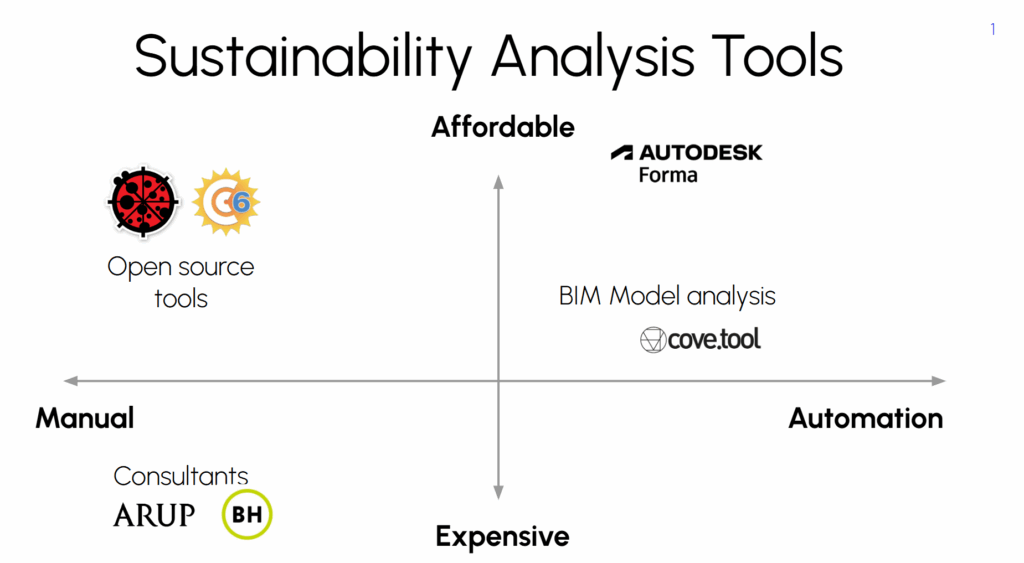

However, climate simulations are complicated and require expertise that many architects don’t have. Architects who don’t know how to perform their own analyses either skip them or outsource them, which leaves a choice between complicated open-source tools and expensive consultants. Some tools allow these analyses to be run on BIM models, but such analyses take place later in the design process, once a BIM model exists. Adapt_ai targets designers in the early concept design stages.

Figure 2 shows the existing landscape of tools for architectural sustainability analysis.

Meanwhile, generative AI is spreading rapidly through architectural practice and education: 70% of architects use genAI in early design stages to iterate through different design styles and languages.

Figure 3 shows some examples of buildings made using generative AI. Examples like these are easy to find online.

However, these images are generated completely independently of their context. The results can be as absurd as an igloo in the desert.

Figure 4 shows an image made in Stable Diffusion. Generative AI can produce wholly inappropriate designs for the location.

These generative AI algorithms produce striking pictures, but architects have no way of knowing how the designs would perform, or whether they make sense in their specific geographical or cultural context.

Problems with Image Generation in Architectural Context

70% of architects are using diffusion models like Stable Diffusion, Midjourney, Dall-e and Flux to generate images of buildings.

These images do not reflect their context in visual style, passive strategies, or climate data.

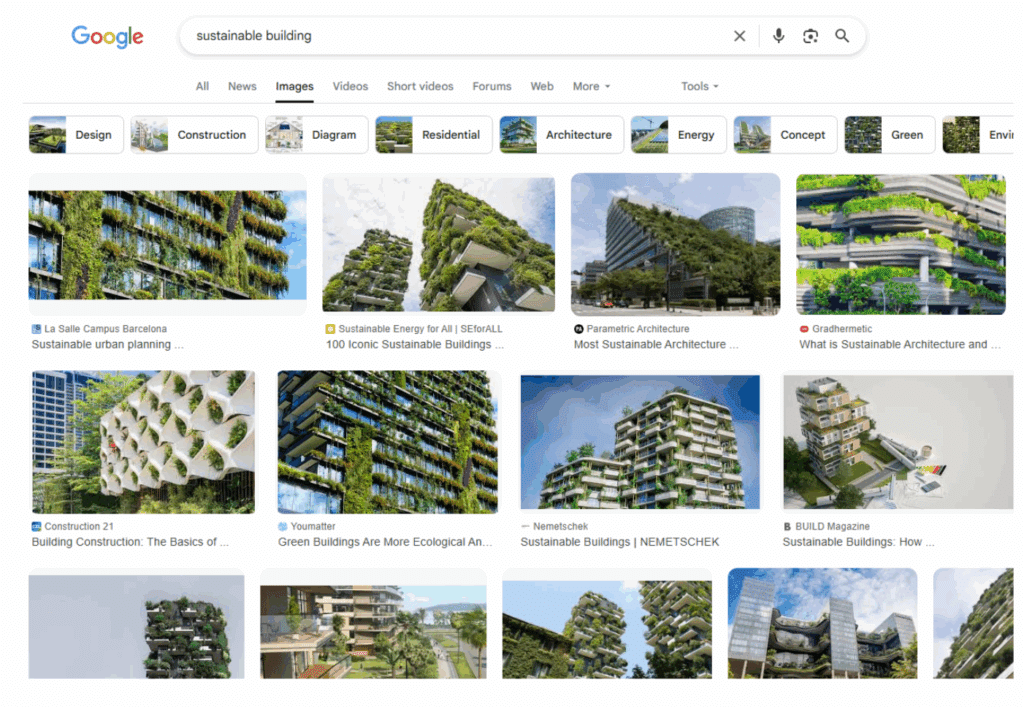

And when prompted to create ‘sustainable architecture’, a diffusion model generates a heavily greenwashed building that makes no meaningful sustainability improvements.

Figure 5 shows a Dall-e generated image. The prompt was “Generate an image of a sustainable building in Barcelona”.

This is because image generation models are trained on greenwashed datasets. Large image generation models learn from images like those tagged ‘sustainable building’ (Figure 6). As a result, when prompted to create ‘sustainable architecture’ they typically produce buildings with greenery on the outside but no other meaningful design changes to reduce carbon impact.

Figure 6 shows the images that are tagged as ‘sustainable building’.

Moreover, reliable climate simulation cannot be performed directly on images of buildings. Researchers have had some success with large language models tuned to perform basic assessments of building images. However, these models cannot perform the detailed sustainability analysis that requires a 3D model [1].

To convert these images into 3D models, architects must spend several hours modelling them in software such as Rhino or Revit. This limits the number of ideas architects can explore and evaluate. It also adds frustration, because the modelling is tedious and time-consuming and takes time away from creative design work.

Recent developments in video game technology offer a way to accelerate this process. Microsoft and Tencent have released ML models, Trellis and Hunyuan respectively, that let digital artists convert uploaded drawings or images of assets into 3D models [2, 3]. These models represent impressive leaps forward in AI and machine learning for digital design. However, the base models are not well suited to converting raw images of buildings into 3D models. The images must first be preprocessed for Trellis and Hunyuan to produce reliable 3D models.

Figure 7 shows some examples of drawings that have been converted into 3D assets in Trellis for video game development.

Figure 8.1 shows an image of a building created by Flux, prompted to create a ‘midrise sustainable building in Milan’.

Figure 8.2 is the model generated by Trellis from the image in Figure 8.1.

Figure 8.3 is a solar radiation analysis performed on the model generated by Trellis in Figure 8.2.

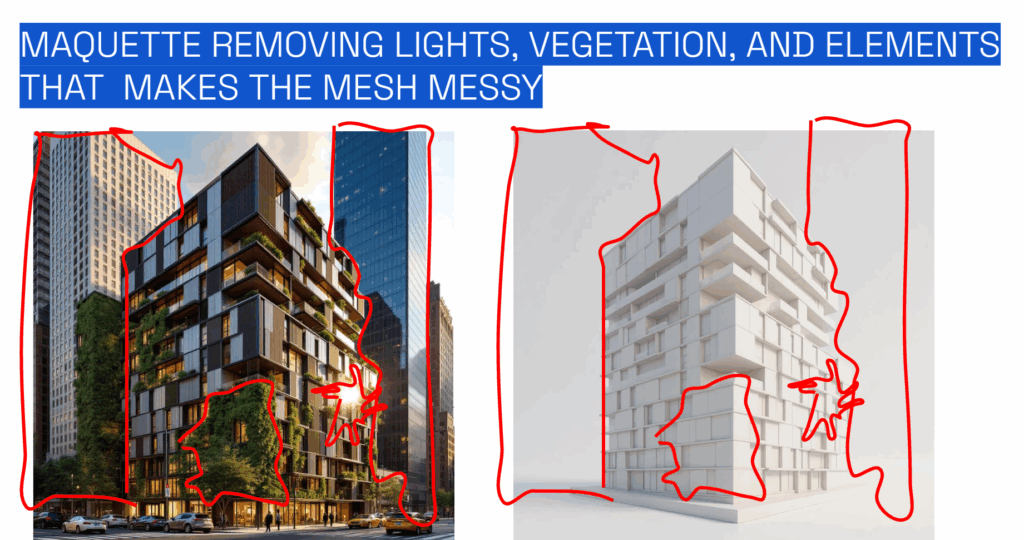

The climate simulation in Figure 8.3 shows a messy model that is suitable for video games and visualization but not accurate enough for architectural simulation and environmental validation. This is a result of noise (excessive greenery, glare, and background) in the image produced by Flux. However, we can reduce this noise in a preprocessing step (discussed later).
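A solar radiation analysis like the one in Figure 8.3 scores each mesh face by how directly it faces the sun. This is why a fragmented, jittery mesh produces a speckled result. The following is a minimal direct-beam sketch, not the method used by Adapt.ai or any particular simulation tool. It makes several assumptions: a triangle mesh given as vertex and face lists, a single sun direction, and a direct normal irradiance (DNI) value. It ignores diffuse sky radiation, reflections, and shading.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def _length(v: Vec3) -> float:
    return math.sqrt(v[0] * v[0] + v[1] * v[1] + v[2] * v[2])


def face_irradiance(
    vertices: Sequence[Vec3],
    faces: Sequence[Tuple[int, int, int]],
    sun_dir: Vec3,
    dni: float,
) -> List[float]:
    """Direct-beam irradiance (W/m^2) on each triangle: DNI * max(0, n . s).

    Assumes sun_dir points from the building toward the sun and that faces are
    wound counter-clockwise when seen from outside, so the cross product of two
    edges gives the outward normal. Diffuse sky, reflections and shading are ignored.
    """
    s_len = _length(sun_dir)
    s = (sun_dir[0] / s_len, sun_dir[1] / s_len, sun_dir[2] / s_len)
    result = []
    for i, j, k in faces:
        n = _cross(_sub(vertices[j], vertices[i]), _sub(vertices[k], vertices[i]))
        n_len = _length(n)
        if n_len == 0.0:  # zero-area sliver, common in noisy reconstructions
            result.append(0.0)
            continue
        cos_incidence = (n[0] * s[0] + n[1] * s[1] + n[2] * s[2]) / n_len
        result.append(dni * max(0.0, cos_incidence))
    return result
```

The score depends only on each face's orientation. A roof reconstructed as many small, randomly tilted facets therefore yields a scattered pattern of values even where the real roof is one flat plane.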

Finally, at the time of writing, no existing platform lets users go from an image to a 3D model to a climate simulation. An architect who wants to follow this (flawed) process must do it manually.

Problem Validation

We found two research papers that discuss the use of image generation and climate simulation in an integrated architectural design process [4, 5]. Their processes were manual, which limited how far they could iterate on their designs, and the need for automation is implicit in both papers. The papers also show that architectural designers want, and need, to produce climate-informed genAI buildings.

More broadly, there is a large and growing trend among architects globally towards environmentally conscious design. Indeed, the market for sustainability consulting in AEC exceeds $9 billion per year, and climate simulation makes up a large part of it. Digital tools are emerging to help architects perform their own simulations, including free tools such as Ladybug [6] and Climate Consultant [7]. However, surveys and interviews with practicing architects confirmed that these tools are often complex for architects to navigate. Companies also provide similar services for specific use cases, such as urban-scale analysis or analysis based on BIM models. However, to our knowledge no company serves this niche but growing use case: going from an image to a sustainability analysis.

Solution and Product

Adapt_ai is a website that helps architects who use AI image generation to design more sustainably.

You can try it at adapt.ai.

Video 1 shows a demo of adapt.ai.

Users can begin with a location, proceed through concept development, image generation, and view the 3D model placed in context and validated with climate simulation.

Figure 9 shows the high level concept for Adapt_ai. Start with a location anywhere in the world, develop an architecture concept, and receive climate simulations of the 3D model.

Adapt.ai eliminates tedious 3D modelling and complex climate simulation so that architects can do what they do best: designing. Better still, their designs are informed by real-world data.

How it works

Adapt.ai addresses every problem that was identified with generative AI in the architectural context.

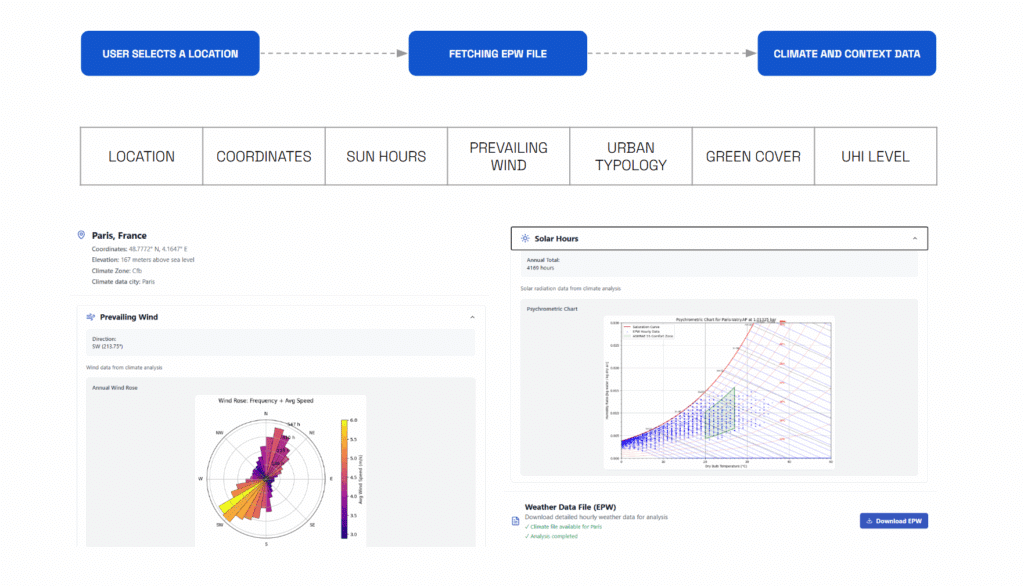

Adapt.ai parses real-world climate data to provide users with synthesized, analytical insights about the local climate.

Figure 10 shows the climate data dashboard. It dynamically fetches climate data from an online database, then parses and visualizes the data for actionable insights. The dashboard shown here includes the wind rose and psychrometric charts, and the table lists all of the data given to users.
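Both charts reduce hourly weather records to a few derived quantities. The source does not name the database or the data format. This sketch assumes hourly records of wind direction (degrees clockwise from north), wind speed (m/s), dry-bulb temperature (°C), and relative humidity (%), such as those found in an EnergyPlus EPW weather file. The wind rose bins directions into 16 compass sectors. The psychrometric chart plots each hour by temperature against humidity ratio. The humidity ratio is computed here with the Magnus approximation for saturation vapour pressure. The 0.5 m/s calm threshold is an illustrative choice.

```python
import math
from collections import Counter
from typing import Dict, Iterable, Tuple

SECTORS = ["N", "NNE", "NE", "ENE", "E", "ESE", "SE", "SSE",
           "S", "SSW", "SW", "WSW", "W", "WNW", "NW", "NNW"]


def wind_rose(records: Iterable[Tuple[float, float]], calm_speed: float = 0.5) -> Dict[str, float]:
    """Share of hours per compass sector from (direction_deg, speed_m_s) records.

    Hours with speed below calm_speed are reported separately as "calm".
    """
    width = 360.0 / len(SECTORS)  # 22.5 degrees per sector
    counts: Counter = Counter()
    total = 0
    for direction, speed in records:
        total += 1
        if speed < calm_speed:
            counts["calm"] += 1
            continue
        # Shift by half a sector so "N" spans 348.75 to 11.25 degrees.
        index = int(((direction + width / 2) % 360.0) // width) % len(SECTORS)
        counts[SECTORS[index]] += 1
    if total == 0:
        return {}
    return {name: counts[name] / total for name in SECTORS + ["calm"]}


def saturation_pressure_pa(t_c: float) -> float:
    """Saturation vapour pressure over water in Pa (Magnus approximation)."""
    return 610.94 * math.exp(17.625 * t_c / (t_c + 243.04))


def humidity_ratio(t_c: float, rh_percent: float, pressure_pa: float = 101325.0) -> float:
    """kg of water vapour per kg of dry air, the vertical axis of a psychrometric chart."""
    vapour_pressure = rh_percent / 100.0 * saturation_pressure_pa(t_c)
    return 0.622 * vapour_pressure / (pressure_pa - vapour_pressure)
```

For example, 20 °C at 50% relative humidity at sea-level pressure gives a humidity ratio of roughly 7 g of water per kg of dry air.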

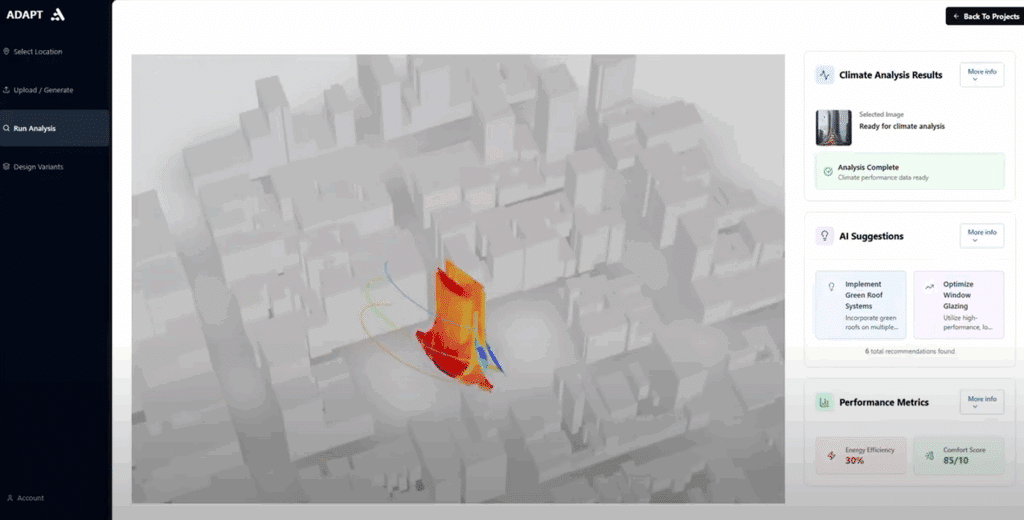

Adapt.ai uses geospatial data to place buildings in their context and runs climate simulations to produce industry-quality analysis.

Figure 11 shows a building with the simulation visualization placed in context. This screenshot is taken in the Adapt.ai interface.
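Placing a generated building among its surroundings requires a single local, metric coordinate frame. The source does not say which geospatial data source is used. This minimal sketch assumes context footprints arrive as latitude/longitude pairs and uses a local equirectangular approximation, which is adequate over a site a few kilometres across. A production tool would more likely use a proper map projection such as UTM. The `extrude` helper is a hypothetical stand-in for building simple context massing from a footprint and a height.

```python
import math
from typing import List, Sequence, Tuple

EARTH_RADIUS_M = 6_371_008.8  # mean Earth radius in metres


def to_local_xy(lat: float, lon: float, origin_lat: float, origin_lon: float) -> Tuple[float, float]:
    """East (x) and north (y) offsets in metres from the site origin.

    Local equirectangular approximation: fine for a site a few kilometres
    across, not for large areas or locations near the poles.
    """
    x = math.radians(lon - origin_lon) * EARTH_RADIUS_M * math.cos(math.radians(origin_lat))
    y = math.radians(lat - origin_lat) * EARTH_RADIUS_M
    return x, y


def footprint_to_local(
    footprint: Sequence[Tuple[float, float]], origin: Tuple[float, float]
) -> List[Tuple[float, float]]:
    """Convert a ring of (lat, lon) points into local metric coordinates."""
    return [to_local_xy(lat, lon, origin[0], origin[1]) for lat, lon in footprint]


def extrude(footprint_xy: Sequence[Tuple[float, float]], height_m: float) -> List[Tuple[float, float, float]]:
    """Bottom ring followed by top ring of a simple prismatic context building."""
    bottom = [(x, y, 0.0) for x, y in footprint_xy]
    top = [(x, y, height_m) for x, y in footprint_xy]
    return bottom + top
```

Keeping the generated model and its neighbours in the same metric frame means shading from adjacent buildings falls where it would on the real site.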

Adapt.ai preprocesses images to create higher-quality mesh models. Before an image is turned into a 3D mesh, Adapt.ai sends it to Replicate to convert the building into a maquette, which removes excess foliage, glare, and background.

Figure 12 highlights an image with the noise from foliage and glare, and shows the maquette version of the building.
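The key design choice is ordering: the image is cleaned up first, so the image-to-3D model only ever sees a simplified maquette. The source does not say which Replicate models or parameters are used. For that reason, the sketch passes both model calls in as functions. `restyle_image`, `reconstruct_mesh`, and the prompt text are hypothetical placeholders. In practice, each could wrap a hosted-model call, such as `replicate.run(...)` from the Replicate Python client.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical prompt asking an image-to-image model for a clean physical-model rendering.
MAQUETTE_PROMPT = (
    "white architectural maquette of the same building, same massing and openings, "
    "no trees or planting, no reflections, plain neutral background"
)


@dataclass
class ReconstructionResult:
    source_image: bytes
    maquette_image: bytes
    mesh: bytes  # e.g. a GLB file returned by the reconstruction stage


def image_to_mesh(
    image: bytes,
    restyle_image: Callable[[bytes, str], bytes],
    reconstruct_mesh: Callable[[bytes], bytes],
) -> ReconstructionResult:
    """Preprocess first, then reconstruct; keep intermediates for inspection."""
    # Stage 1: intended to strip foliage, glare and background from the image.
    maquette = restyle_image(image, MAQUETTE_PROMPT)
    # Stage 2: image-to-3D reconstruction (e.g. a Trellis- or Hunyuan-style model).
    mesh = reconstruct_mesh(maquette)
    return ReconstructionResult(image, maquette, mesh)
```

Returning the intermediate maquette lets the interface show it alongside the original, as in Figure 12.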

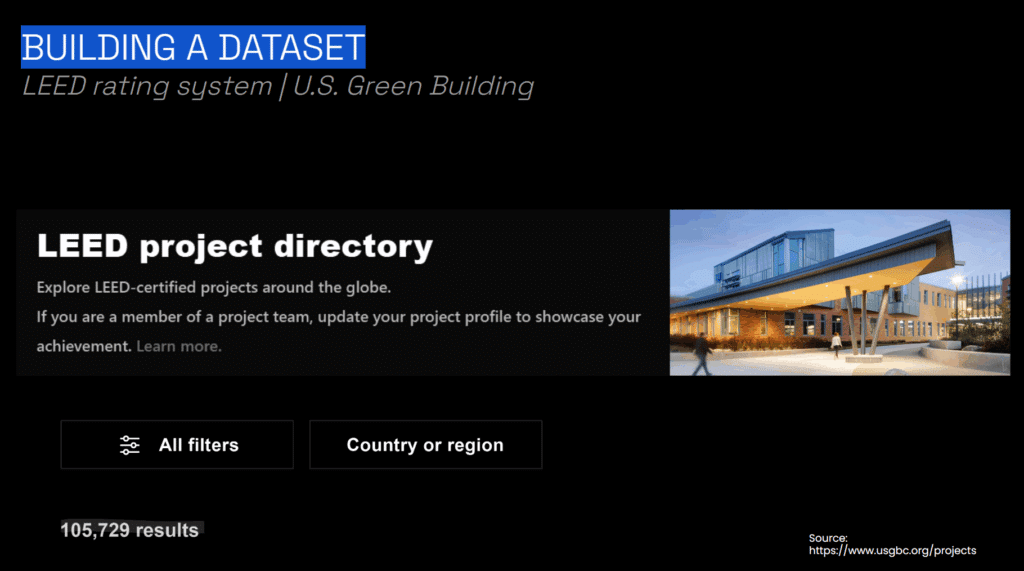

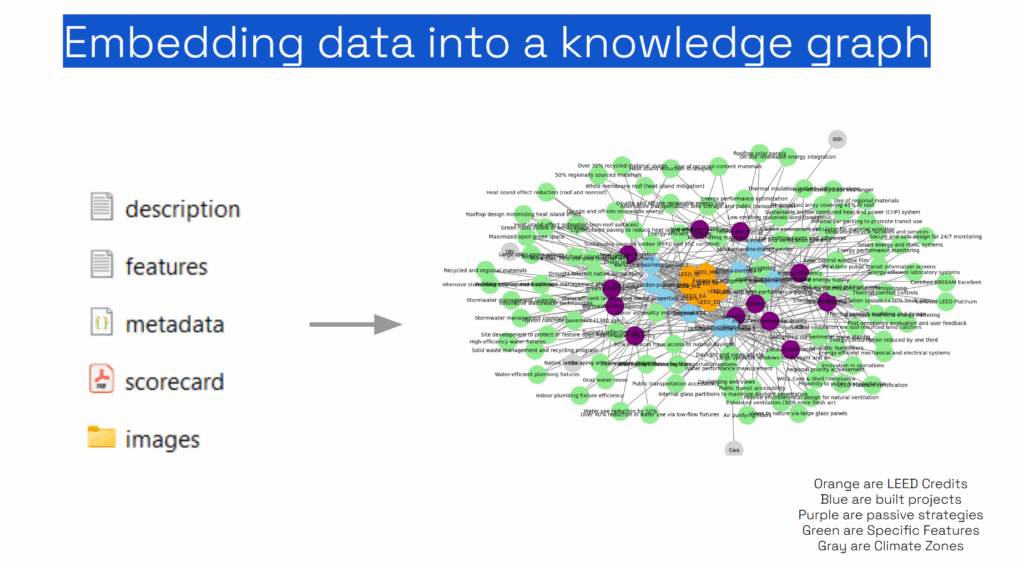

The Adapt.ai prompt engine enriches the design with data scraped from LEED-certified projects. The scraped data is aggregated into a knowledge graph for more accurate retrieval, so designers receive insights from real, verified sustainable projects and avoid the pitfalls of greenwashing. These insights are layered into the prompts so that users can see which features they can add to improve the environmental performance of their building.

Figure 13.1 shows the LEED project directory, from which data was scraped for the knowledge graph.

Figure 13.2 shows the high level data schema for graph creation. The nodes are color coded as follows: Orange are LEED Credits; Blue are built projects; Purple are passive strategies; Green are Specific Features; Gray are Climate Zones.
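One way to retrieve guidance from a graph with this schema is a three-step walk. Start at the user's climate zone, move to the LEED projects built there, and count which passive strategies those projects use. The small in-memory sketch below follows that approach. The node-type keys and the edge names `LOCATED_IN` and `USES_STRATEGY` are hypothetical, and a production graph would more likely live in a graph database and be queried there.

```python
from collections import Counter, defaultdict
from typing import DefaultDict, Dict, List, Set, Tuple

# Node types mirroring the schema in Figure 13.2 (keys are illustrative).
NODE_TYPES = {"leed_credit", "project", "passive_strategy", "feature", "climate_zone"}


class KnowledgeGraph:
    """Tiny directed graph: (source, relation) -> targets, plus a reverse index."""

    def __init__(self) -> None:
        self.node_type: Dict[str, str] = {}
        self.out_edges: DefaultDict[Tuple[str, str], Set[str]] = defaultdict(set)
        self.in_edges: DefaultDict[Tuple[str, str], Set[str]] = defaultdict(set)

    def add_node(self, node_id: str, node_type: str) -> None:
        if node_type not in NODE_TYPES:
            raise ValueError(f"unknown node type: {node_type}")
        self.node_type[node_id] = node_type

    def add_edge(self, source: str, relation: str, target: str) -> None:
        self.out_edges[(source, relation)].add(target)
        self.in_edges[(target, relation)].add(source)

    def strategies_for_zone(self, zone: str, top_n: int = 3) -> List[Tuple[str, int]]:
        """Passive strategies ranked by how many LEED projects in `zone` use them."""
        counts: Counter = Counter()
        for project in self.in_edges.get((zone, "LOCATED_IN"), set()):
            counts.update(self.out_edges.get((project, "USES_STRATEGY"), set()))
        # Sort by count, then by name, so ties come out in a stable order.
        return sorted(counts.items(), key=lambda item: (-item[1], item[0]))[:top_n]
```

Because every suggestion is traced back to built, certified projects, a strategy only surfaces if real buildings in that climate actually used it.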

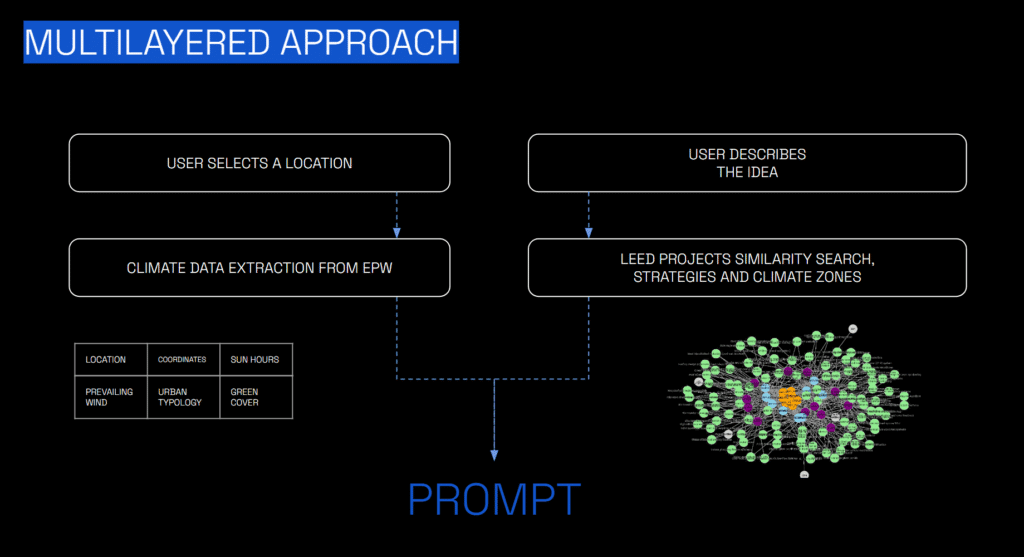

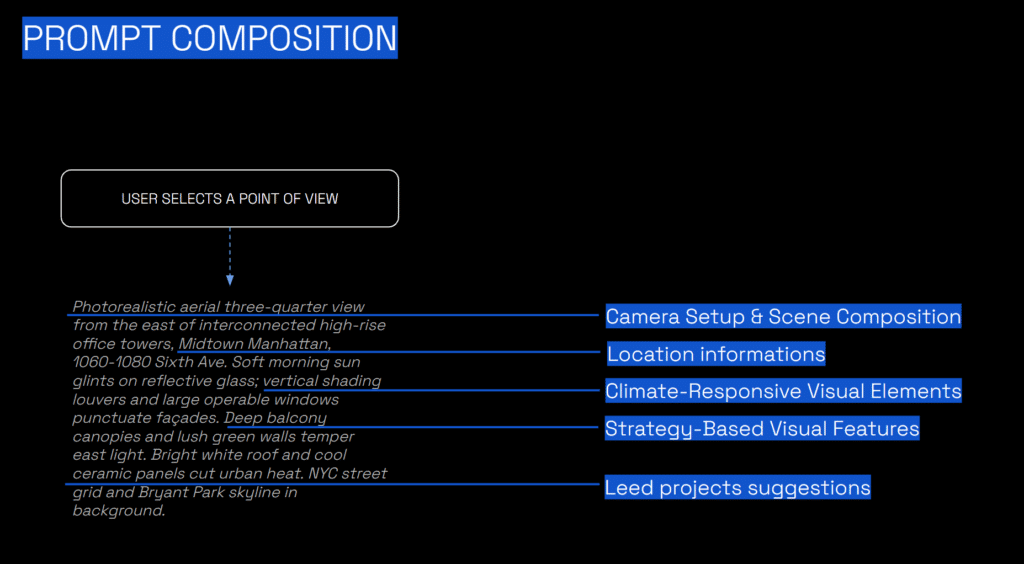

Figures 13.3 and 13.4 show how climate data and LEED project data are combined with user input to provide a rich, multilayered prompt for more effective image generation.
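Figures 13.3 and 13.4 describe a multilayered prompt; a minimal sketch of that layering follows. It assumes the climate summary and the retrieved strategies and features are already available as plain values. The `ClimateSummary` fields and the prompt wording are hypothetical, not Adapt.ai's actual template.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ClimateSummary:
    location: str
    climate_zone: str
    prevailing_wind: str        # e.g. "south-west"
    mean_summer_temp_c: float


def build_prompt(
    user_input: str,
    climate: ClimateSummary,
    strategies: List[str],
    features: List[str],
) -> str:
    """Layer user intent, site climate and LEED-derived guidance into one prompt."""
    layers = [
        user_input.strip().rstrip(".") + ".",
        f"Site: {climate.location}, climate zone {climate.climate_zone}, "
        f"prevailing wind from the {climate.prevailing_wind}, "
        f"mean summer temperature {climate.mean_summer_temp_c:.0f} °C.",
    ]
    if strategies:
        layers.append(
            "Apply passive strategies used by LEED-certified projects in this climate: "
            + ", ".join(strategies) + "."
        )
    if features:
        layers.append("Show these features clearly: " + ", ".join(features) + ".")
    # Counter the greenwashing bias: greenery only where it has a climatic role.
    layers.append("Photorealistic architectural rendering; no decorative greenery without a climatic purpose.")
    return " ".join(layers)
```

Each layer is optional and appended in a fixed order, so the user's own intent always leads the prompt and the data-driven guidance refines it.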

The images Adapt.ai produces with this method are far superior to those produced by the raw image generation models.

Figure 14 shows one use case of Adapt.ai. The prompt in the top left was the user input given to an image generation model and to Adapt.ai. The top images show what was produced by the ‘raw’ image generation model, and the image on the bottom was the image produced when augmented by climate data, LEED data, and other Adapt.ai enhancements.

Adapt.ai has a deliberately simple, user-friendly interface that automatically guides users through the early design stages and lets them iterate through many concept ideas.

The result is more informed AI-assisted design.

References

1. Bentley, P. J., Lim, S. L., Mathur, R., & Narang, S. (2024). Automated real-world sustainability data generation from images of buildings. In 2024 4th International Conference on Electrical, Computer, Communications and Mechatronics Engineering (ICECCME) (pp. 1-6). Malé, Maldives. https://doi.org/10.1109/ICECCME62383.2024.10797127

2. Xiang, J., Lv, Z., Xu, S., Deng, Y., Wang, R., Zhang, B., Chen, D., Tong, X., & Yang, J. (2024). Structured 3D latents for scalable and versatile 3D generation. arXiv preprint arXiv:2412.01506. https://arxiv.org/abs/2412.01506

3. Lai, Z., Zhao, Y., Liu, H., Zhao, Z., Lin, Q., Shi, H., Yang, X., Yang, M., Yang, S., Feng, Y., Zhang, S., Huang, X., Luo, D., Yang, F., Yang, F., Wang, L., Liu, S., Tang, Y., Cai, Y., He, Z., Liu, T., Liu, Y. Y., Jiang, J., Huang, J., Guo, C., … Tencent Hunyuan3D Team. (2025, June 19). Hunyuan3D 2.5: Towards high-fidelity 3D assets generation with ultimate details. arXiv preprint arXiv:2506.16504. https://arxiv.org/abs/2506.16504

4. Zhong, C., Shi, Y., Cheung, L. H., & Wang, L. (2024). AI-enhanced performative building design optimization and exploration: A design framework combining computational design optimization and generative AI. Xi’an Jiaotong-Liverpool University.

5. Andreou, A., Kontovourkis, O., Solomou, S., & Savvides, A. (n.d.). Rethinking architectural design process using integrated parametric design and machine learning principles. University of Cyprus & Manchester Metropolitan University.

6. Roudsari, M., Pak, M., & Scambos, A. (n.d.). Ladybug Tools [Computer software]. GitHub. https://github.com/ladybug-tools

7. Milne, M., & Liggett, R. (n.d.). Climate Consultant (Version 6.0) [Computer software]. UCLA Energy Design Tools. https://www.energy-design-tools.aud.ucla.edu/climate-consultant/request-climate-consultant.php