Abstract:

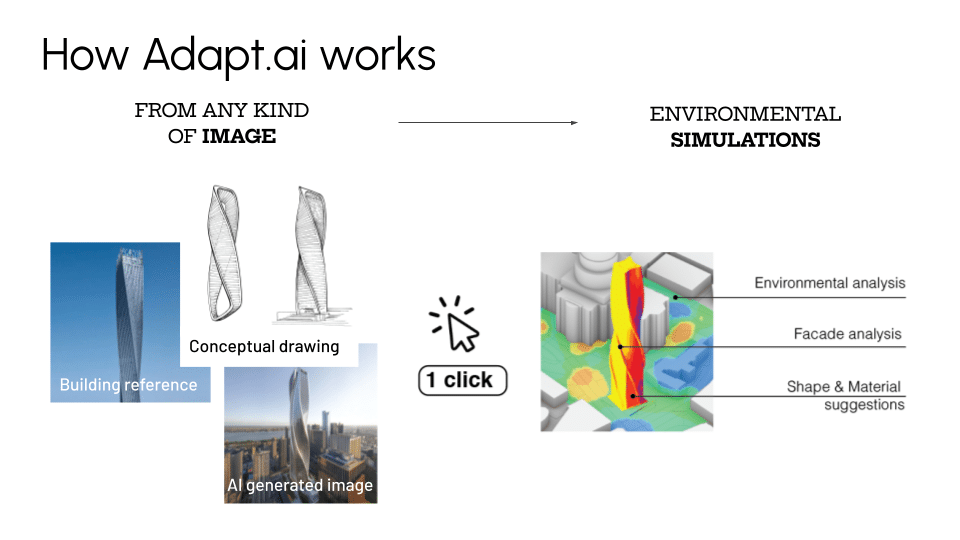

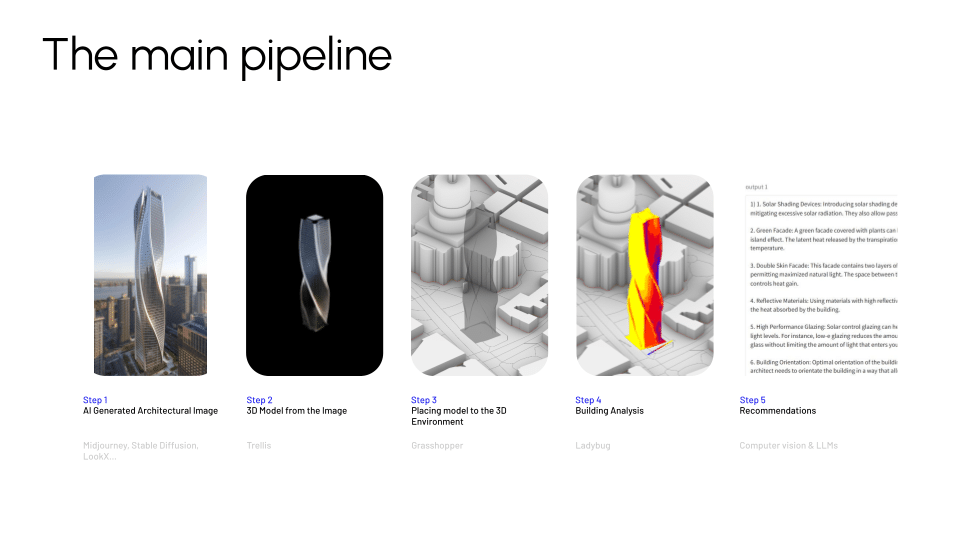

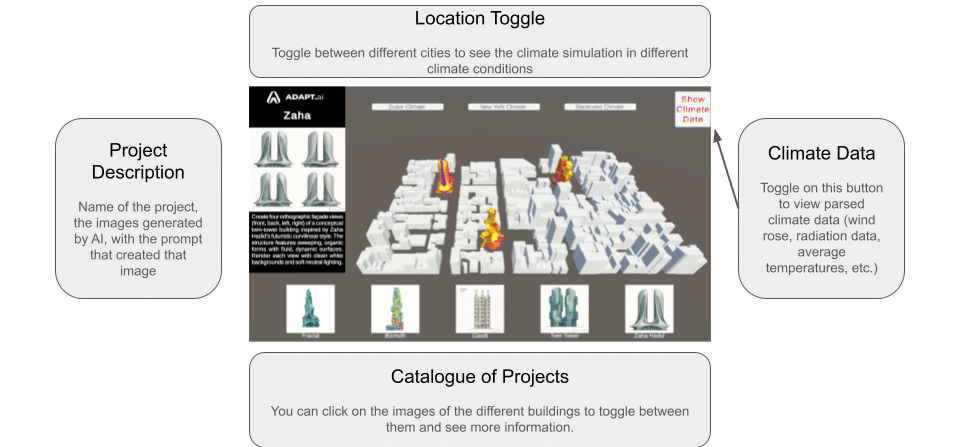

For the Interactive Interfaces class with Professor Daniil Koshelyuk, we were introduced to the Unity game engine, a powerful tool for creating all kinds of 3D applications and environments. Our group decided to build an app that continues our Research Studio project, Adapt AI, in which we set out to create a copilot for sustainable design that supports architects during the conceptual design phase. Here is how it works. For a given location, we take an image or sketch of a building, convert it into 3D, place it in its context, and run a solar radiation simulation on the building within its environment. We also provide insights about the local environment, along with AI suggestions for improving the design. Together, these give architects as much useful information as possible at the start of a design, so they can make their buildings as sustainable as possible.

For this exercise, we created a Unity app that takes some of those AI-generated 3D models, places them in a context, and provides a solar radiation analysis for each building in three different climates. Users can explore how different shapes, positioned differently in different climates, can influence design decisions.

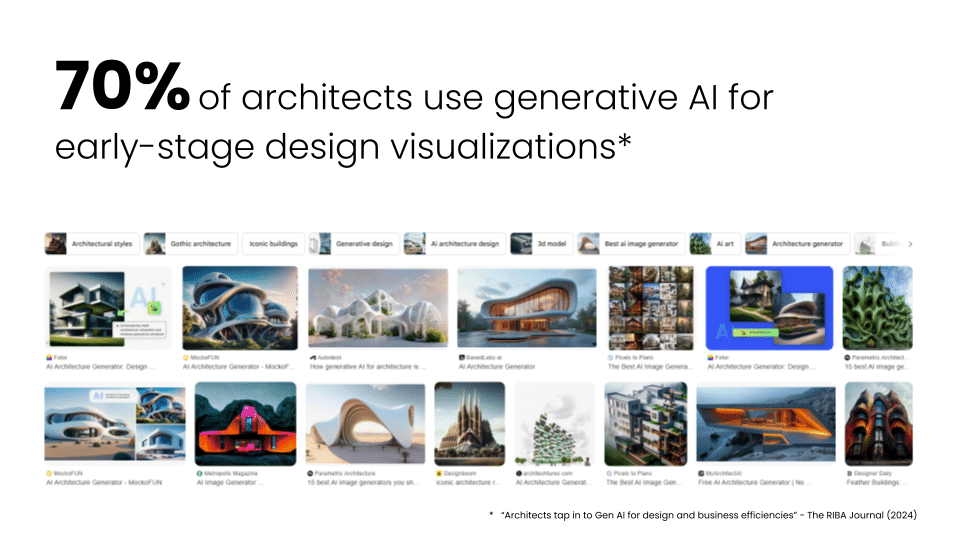

Problem:

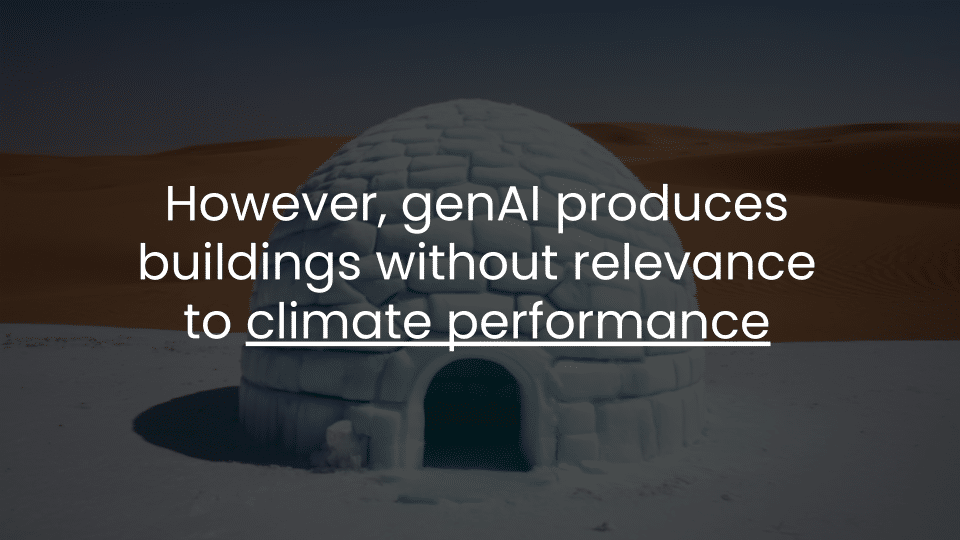

Our Solution:

The Physical Prototype:

Context:

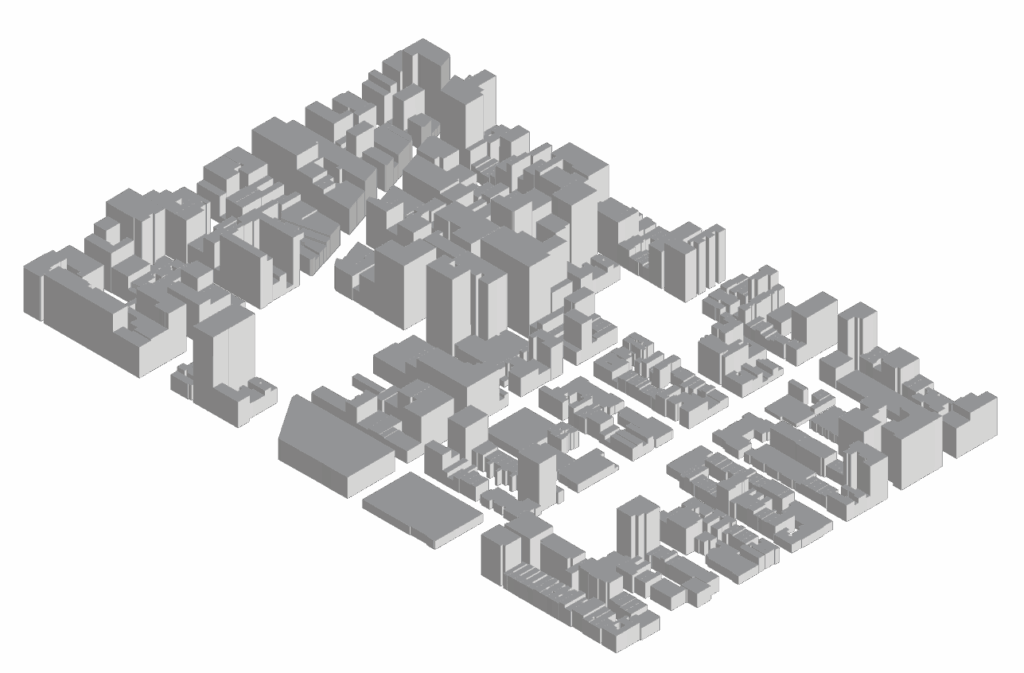

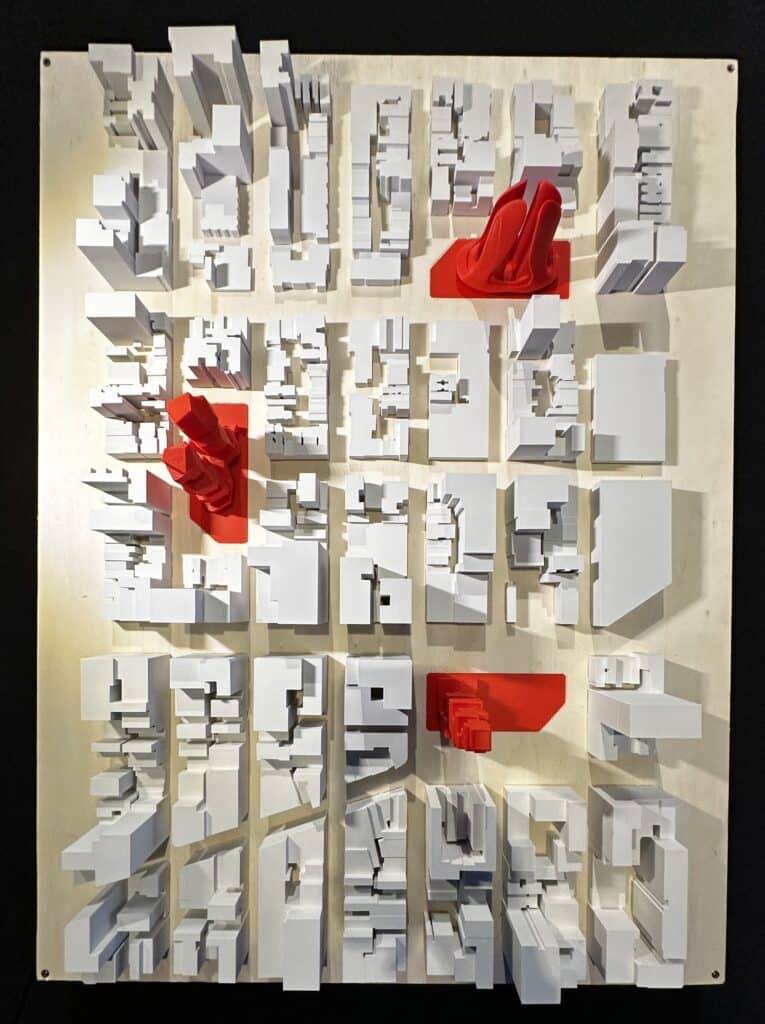

As part of our Research Studio submission, we had to produce a physical prototype of our project, which is not straightforward for a purely virtual project. Our idea was to build an urban context with designated spaces for the new AI-generated 3D models. Users could then swap and interact with the models physically before moving to the app. First, we set up the context.

Within this context, we then defined three areas where users can place the AI-generated models.

Images and Prompts:

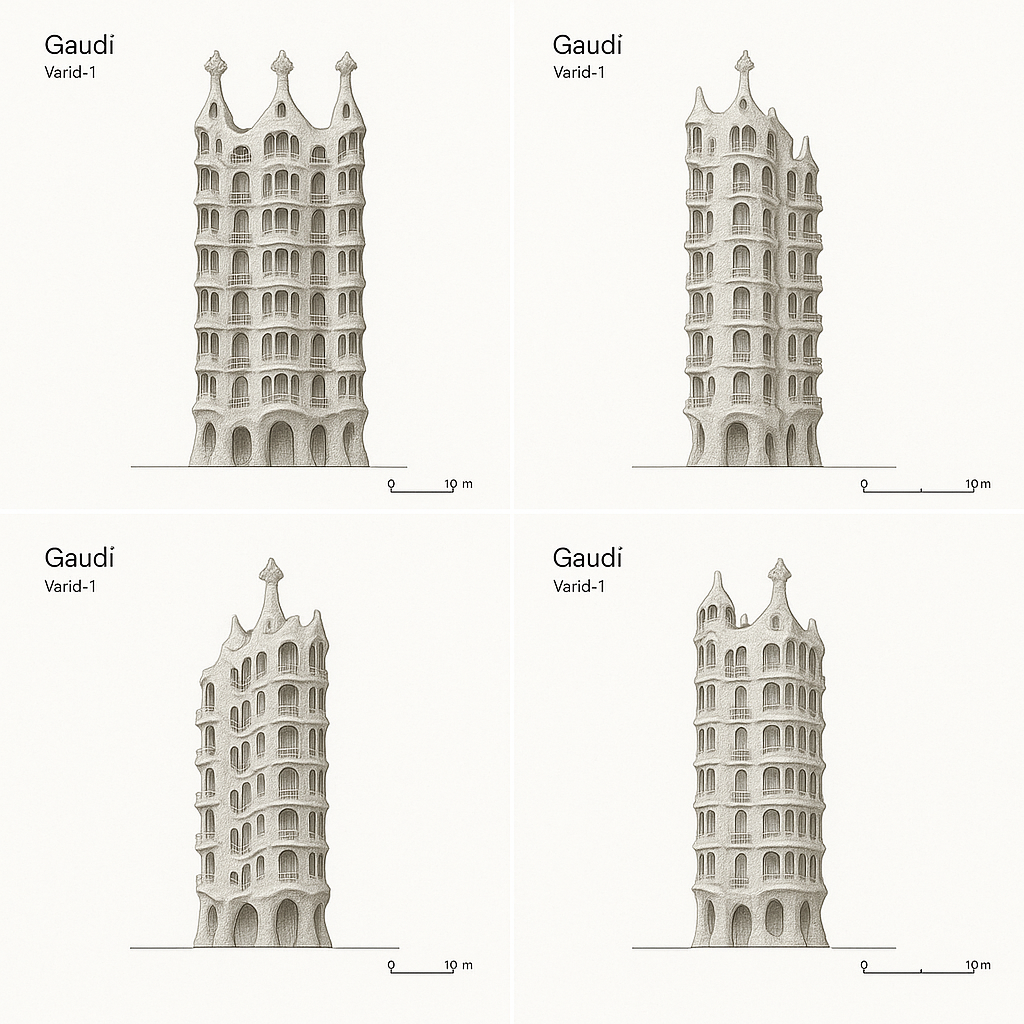

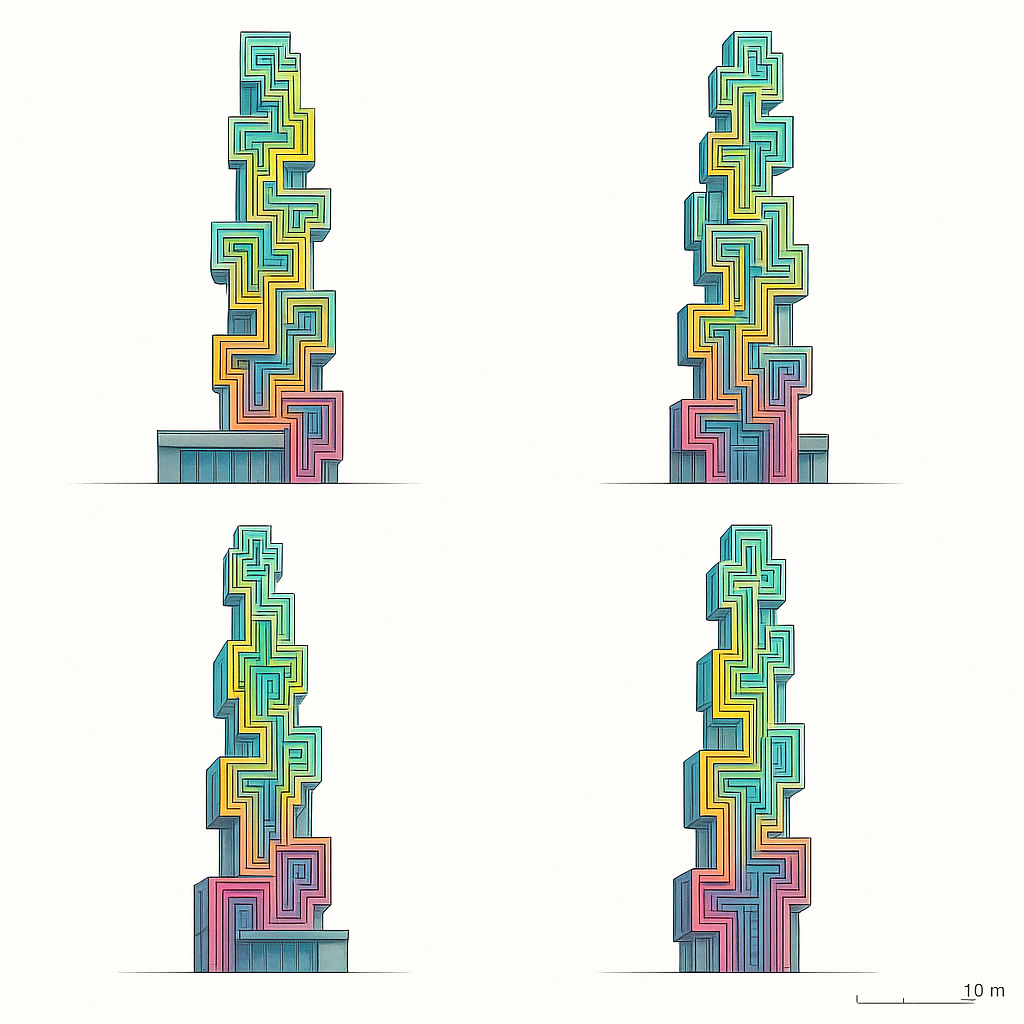

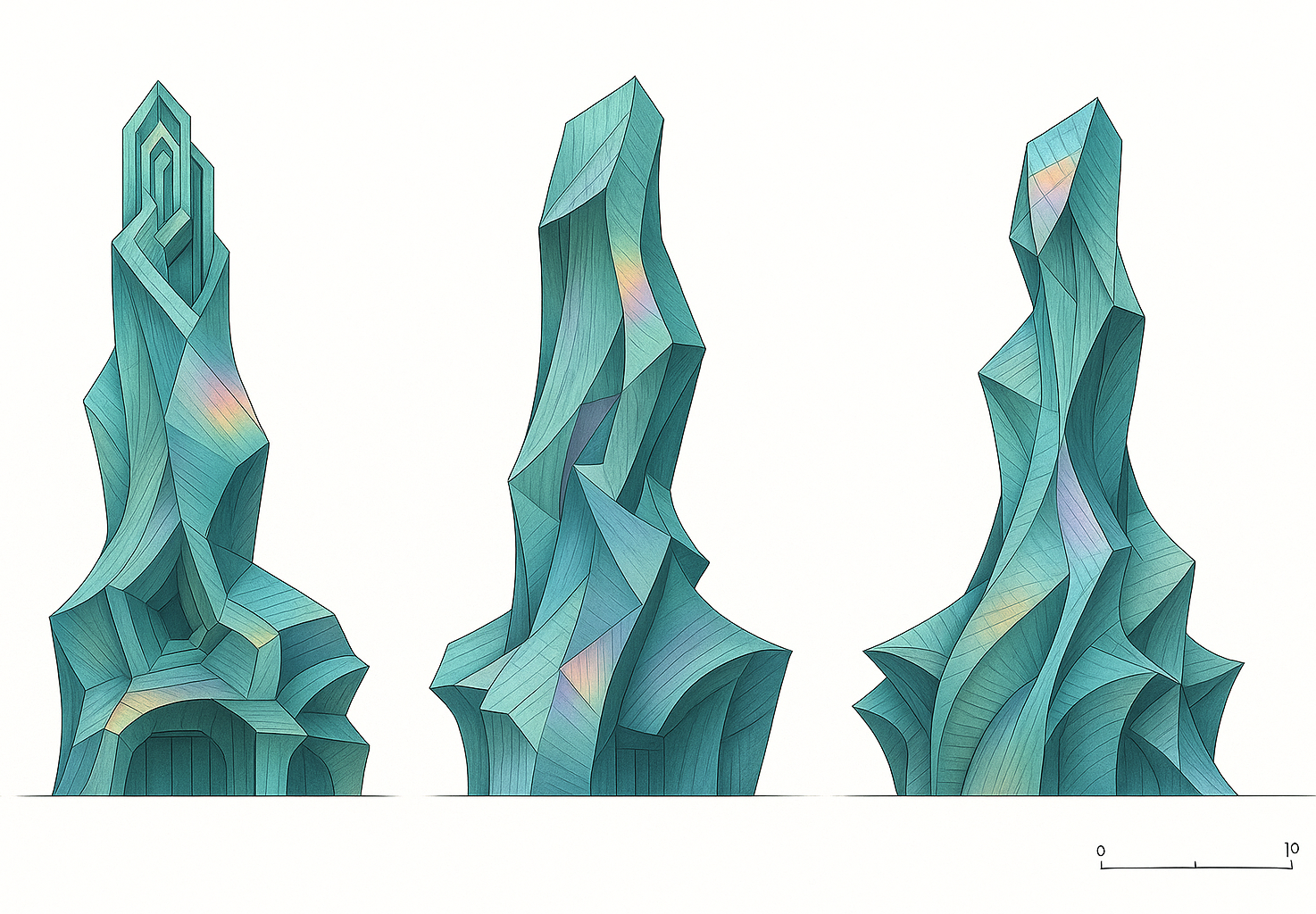

For our prototype, we created five different models. We first generated five images using the GPT-4o model in ChatGPT with the following prompts:

Create four orthographic façade views (North, South, East, West) of a high-rise building inspired by Antoni Gaudí’s architecture. The tower should feel organic and expressive—like a Gothic cathedral melted into a forest. Think fluid stonework, colorful mosaics, bone-like structure, and nature-driven geometry. The form should rise with sculptural intensity, full of symmetry and ornate detail, as if it grew out of the Earth.

Create four orthographic façade views (front, back, left, right) of a very high rise thin tower inspired by the structure of bismuth and fractals inspired by the style of MVRDV. Render each view with clean white backgrounds and soft neutral lighting.

Create four orthographic façade views (front, back, left, right) a fragmented low-rise museum inspired by the structure of bismuth and fractals that looks like a Franck Gehry design if he took LSD. The building should appear sculptural, with warped metallic-looking forms. Generate four distinct views from different sides for design exploration.

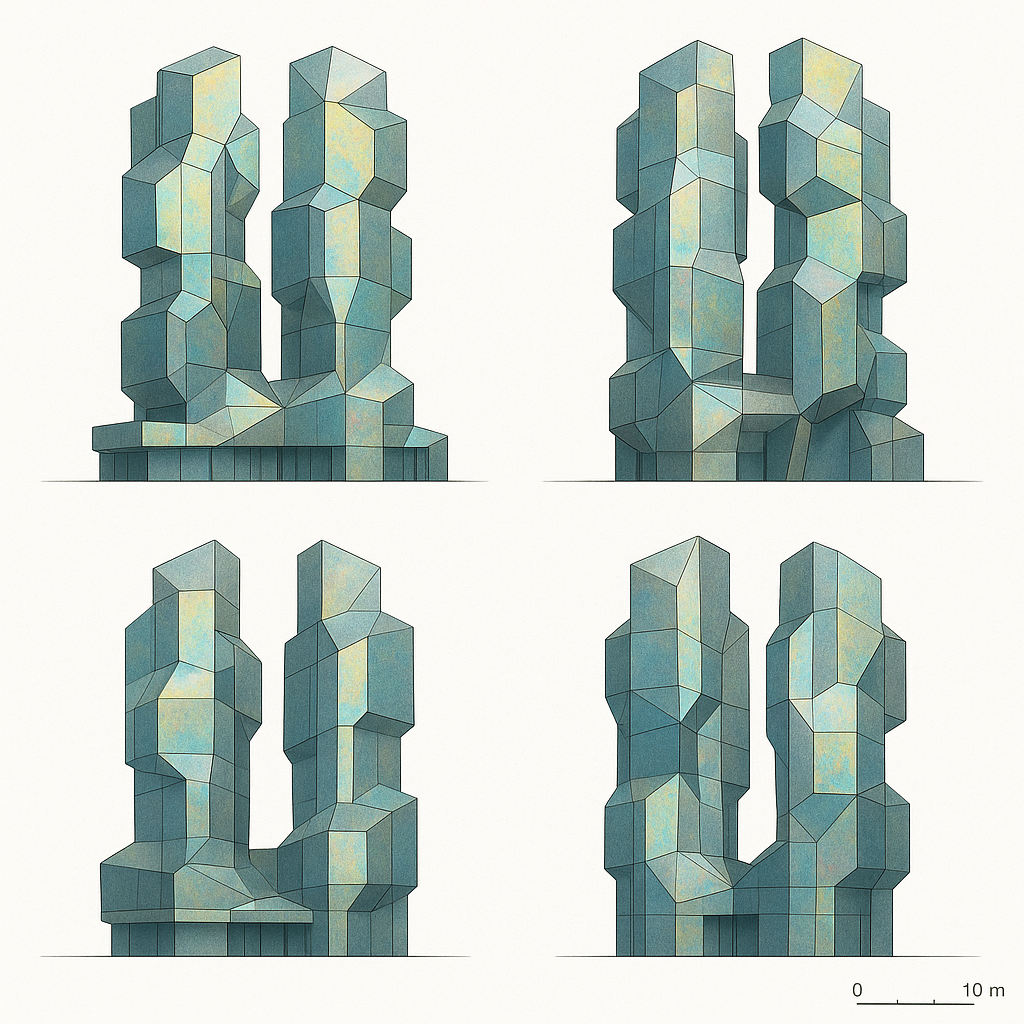

Create four orthographic façade views (front, back, left, right) of a conceptual twin-tower building inspired by Rem Koolhaas’s style. The structure features orthogonal shapes like pyrite clusters with dynamic metal coded holographic surfaces. Render each view with clean white backgrounds and soft neutral lighting.

3D models:

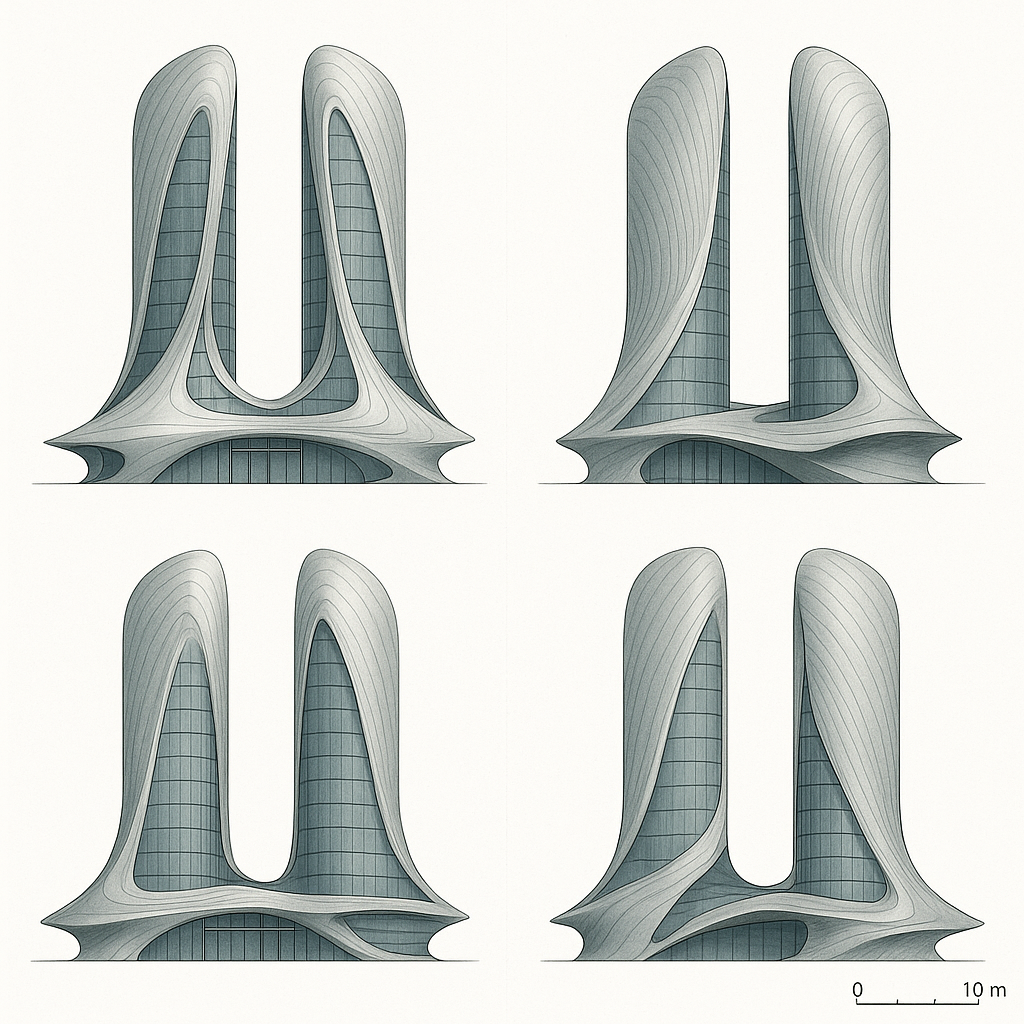

Create four orthographic façade views (front, back, left, right) of a conceptual twin-tower building inspired by Zaha Hadid’s futuristic curvilinear style. The structure features sweeping, organic forms with fluid, dynamic surfaces. Render each view with clean white backgrounds and soft neutral lighting.

We then took these models and converted them into 3D using the Hunyuan Tencent model, and we got some very detailed results

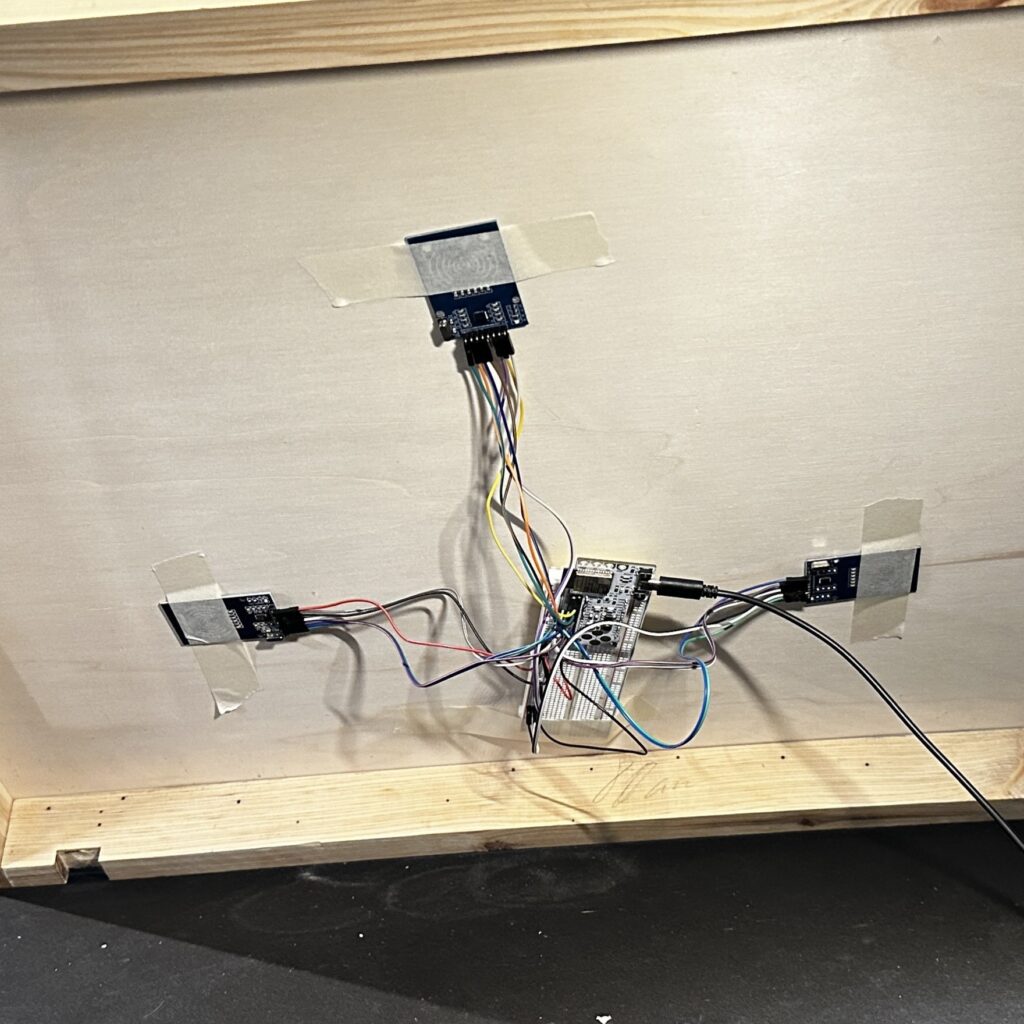

We then 3D-printed the context in white PLA and the five models in red PLA, and used a 60 × 80 cm board as the base.

The Unity App:

Target Audience:

Our ideal user for adapt.ai is a concept designer at a medium-to-large design firm. Our interactive interface is therefore aimed at demonstrating the full pipeline and the potential of our web-based tool to concept designers.

We highlighted building projects with a high degree of formal complexity, set in dense urban environments, in three cities with vastly different climates: New York, Dubai, and Stockholm.

We want to show how a user can go from an image to a 3D model (however complex) placed in an urban context, and then receive climate simulation data for any city in the world.

Prototype Workflow:

Digital Twin:

The App:

Challenges & Future Directions:

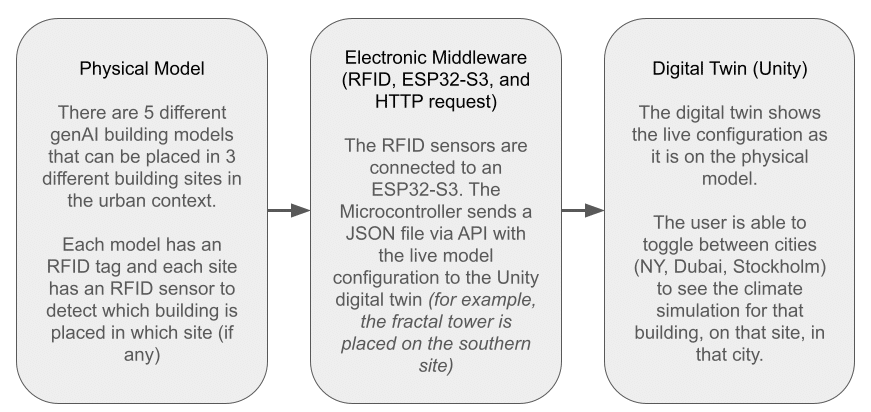

After printing the context and assembling everything, our goal, as mentioned earlier, was to connect the physical prototype to the virtual app. To do so, we started experimenting with MFRC522 RFID sensors and tags to detect our 3D models on the context.

We first tried an Arduino Uno. It comfortably handled one RFID sensor but struggled to detect three, mainly because of the power supply to the sensors. We also needed internet connectivity, which the Arduino Uno lacks, so we quickly switched to a Raspberry Pi 5.

Since the Pi is a full computer, it had no problem making HTTP requests and sending JSON payloads with the detected tag IDs and sensor IDs. Unfortunately, the Raspberry Pi 5 with its Linux OS does not currently support the MFRC522 Python packages needed to read the sensors. A quick workaround is to create a virtual environment and install the packages there. Even then, the Pi read the RFID sensors very unreliably: it worked occasionally and failed most of the time.

As a last resort, we tried the same workflow with an ESP32-S3, which turned out to be ideal. It is a simple microcontroller that we can program from the Arduino IDE, and it has built-in Wi-Fi and Bluetooth. After many hours of trial and error, we finally connected three RFID sensors to the ESP32. It read all the tags continuously and sent them to a local Flask server that we set up.
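The receiving side has to decide when a site counts as empty. This assumes the ESP32 keeps re-sending a tag for as long as it stays on a reader, so a removed model simply stops appearing. Below is a minimal Python sketch of one way to handle this: a reader's last read counts as stale after a timeout. The class and function names, the 2-second default, and the site and tag IDs in the usage example are hypothetical and not part of our prototype.

```python
from typing import Dict, Iterable, Optional, Tuple


class SiteOccupancy:
    """Tracks which RFID tag (i.e. which printed model) sits on each reader/site.

    Assumption: a reader only reports a tag while it is in range, so a model
    counts as removed once its last read is older than `timeout_s` seconds.
    """

    def __init__(self, reader_ids: Iterable[str], timeout_s: float = 2.0) -> None:
        self.timeout_s = timeout_s
        # Last (tag_id, seen_at) per reader; None until that reader reports anything.
        self._last: Dict[str, Optional[Tuple[str, float]]] = {r: None for r in reader_ids}

    def record(self, reader_id: str, tag_id: str, seen_at: float) -> None:
        """Store a single read, e.g. one JSON message sent by the ESP32."""
        self._last[reader_id] = (tag_id, seen_at)

    def current(self, now: float) -> Dict[str, Optional[str]]:
        """Return the tag currently on each site, or None if the site is empty."""
        state: Dict[str, Optional[str]] = {}
        for reader_id, last in self._last.items():
            if last is not None and now - last[1] <= self.timeout_s:
                state[reader_id] = last[0]
            else:
                state[reader_id] = None
        return state


def models_on_sites(state: Dict[str, Optional[str]],
                    tag_to_model: Dict[str, str]) -> Dict[str, Optional[str]]:
    """Translate tag IDs into model names; empty sites and unknown tags map to None."""
    return {site: tag_to_model.get(tag) if tag else None for site, tag in state.items()}
```

For example, the occupancy could be built as `SiteOccupancy(["site_1", "site_2", "site_3"])`, with `record(...)` called for every incoming message. In practice, the server's own arrival time (for instance `time.monotonic()`) may be a safer `seen_at` than the ESP32's `timeStamp`, whose format we have not assumed here.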

Here is a video of the Arduino IDE (on the right) showing that the ESP32 reads the RFID tags and goes through each step of the process. It reads a tag, connects to the Flask server, and prepares a JSON payload (tagId, readerId, readerName, timeStamp). It then sends the POST request and receives an HTTP response. On the left, the Flask server receives all of this information. Unfortunately, we did not manage to connect the server to the Unity app.
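Our receiving server was a Flask app. Below is a minimal sketch of an equivalent receiver that uses only Python's standard library (`http.server`) so it runs without installing Flask. It accepts a POST with the four fields listed above, keeps the latest read per `readerId` in memory, and exposes that state on a GET endpoint. The routes `/rfid` and `/state`, port 5000, and all helper names are our assumptions. The GET endpoint is only one possible way for the Unity app to poll the state (for instance with `UnityWebRequest`), which we have not implemented or tested.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from typing import Any, Dict

# The four fields the ESP32 puts in each JSON payload.
REQUIRED_FIELDS = ("tagId", "readerId", "readerName", "timeStamp")

# Latest read per readerId, kept in memory only (lost on restart).
latest_reads: Dict[str, Dict[str, Any]] = {}
_lock = threading.Lock()  # the threading server may handle requests concurrently


def validate_read(payload: Any) -> Dict[str, Any]:
    """Check that a decoded JSON body has all required fields; drop anything else."""
    if not isinstance(payload, dict):
        raise ValueError("payload must be a JSON object")
    missing = [name for name in REQUIRED_FIELDS if name not in payload]
    if missing:
        raise ValueError("missing fields: " + ", ".join(missing))
    return {name: payload[name] for name in REQUIRED_FIELDS}


class TagHandler(BaseHTTPRequestHandler):
    def _send_json(self, status: int, body: Any) -> None:
        data = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def do_POST(self) -> None:
        # Hypothetical route the ESP32 would POST each read to.
        if self.path != "/rfid":
            self._send_json(404, {"error": "not found"})
            return
        try:
            length = int(self.headers.get("Content-Length", 0))
            # json.JSONDecodeError is a subclass of ValueError, so one except covers both.
            read = validate_read(json.loads(self.rfile.read(length)))
        except ValueError as exc:
            self._send_json(400, {"error": str(exc)})
            return
        with _lock:
            latest_reads[str(read["readerId"])] = read
        self._send_json(200, {"status": "ok"})

    def do_GET(self) -> None:
        # Hypothetical route a client such as the Unity app could poll.
        if self.path != "/state":
            self._send_json(404, {"error": "not found"})
            return
        with _lock:
            snapshot = dict(latest_reads)
        self._send_json(200, snapshot)

    def log_message(self, fmt: str, *args: Any) -> None:
        pass  # silence the default per-request logging to stderr


def run(host: str = "0.0.0.0", port: int = 5000) -> None:
    """Serve until interrupted; 0.0.0.0 makes the server reachable from the ESP32 on the LAN."""
    ThreadingHTTPServer((host, port), TagHandler).serve_forever()
```

To try it, call `run()` on the laptop and point the ESP32's POST request at `http://<laptop-ip>:5000/rfid`. Keeping only the latest read per reader keeps the state small: a client only needs to know what is on each site now, not the full history of reads.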

For future development, we would like to implement augmented reality (AR) synchronized with the physical model, so that users can interact more with the prototype. AR could also help firms present their prototypes to clients. We are also considering deploying it as a standalone app connected to our main web platform, giving users AR/VR capabilities for the projects they create in adapt.ai.

Thank you, Daniil!