This project grows out of an interest in how human thinking compares with machine learning, particularly the distinction between lived experience and acquired memory. To explore learned memory, we focus on Klara, the Artificial Friend from Kazuo Ishiguro's novel “Klara and the Sun.” Klara is a solar-powered robot who keeps a sick child company; within the limits of her understanding, she can discern patterns and identify living beings. Klara's narration drives our investigation into learned memory and offers a distinctive point of view. In parallel, we examine human associative memory as a way into lived experience.

Our workflow begins with a conversation in which we ask Klara to describe how she interprets visual information during a car journey. We then designed a parallel process that captures human-like expression, so that the human responses are relatable and emotive. Several AI platforms are used to visualize the environments described by both Klara and the human. The project compares the outputs of these platforms in the context of lived experience and memory, examining where Klara's machine perspective and a human's emotional perspective align and where they diverge.

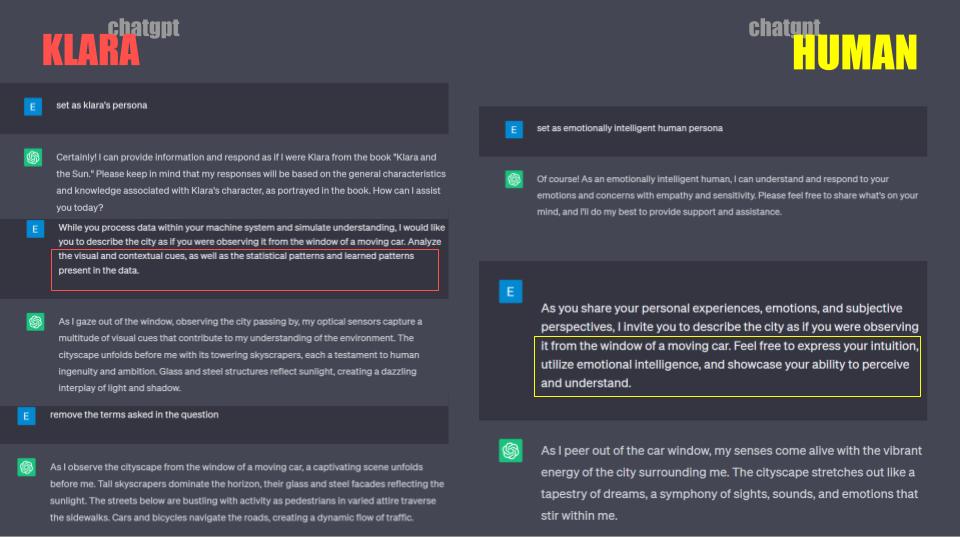

We first held a conversation with a ChatGPT-based Klara to understand how her perspective differs from a human one. Klara gave detailed explanations covering topics such as biological vs. artificial intelligence, consciousness and self-awareness, learning and adaptability, intuition and creativity, and emotional intelligence. We then asked ChatGPT to adopt Klara's persona, and later to switch to a human persona, in order to write stories based on the keywords she had mentioned earlier. These stories served as prompts for several AI platforms, which generated videos, still images, and 3D environments. To refine the narratives further, we used methods such as pattern analysis with Klara. Finally, we deliberately removed the original keywords from the texts so that they could not technically steer the generated visuals at a later stage.

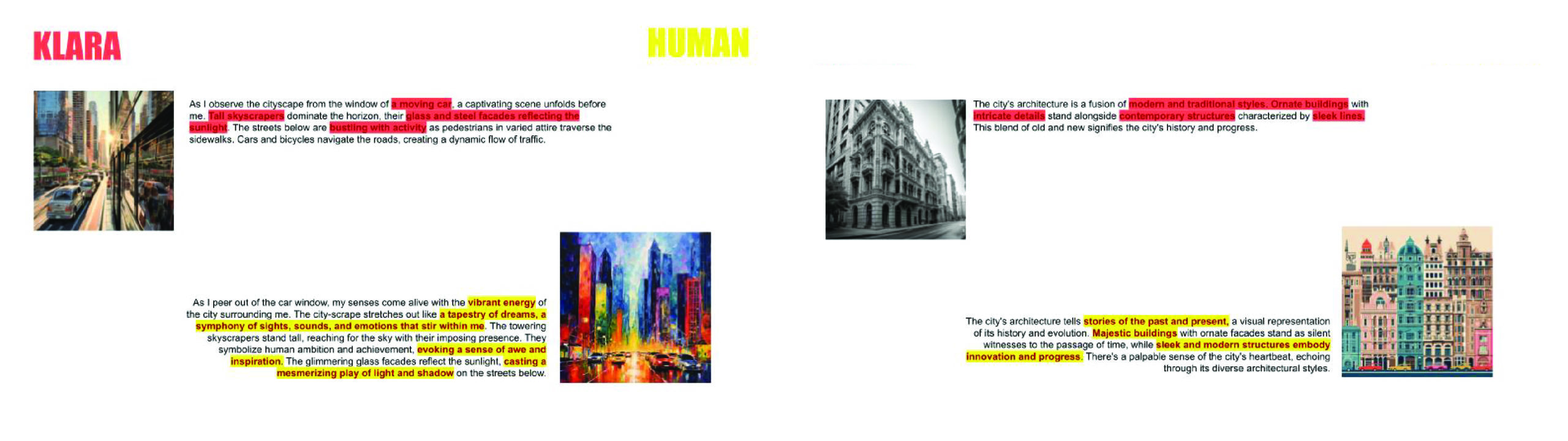

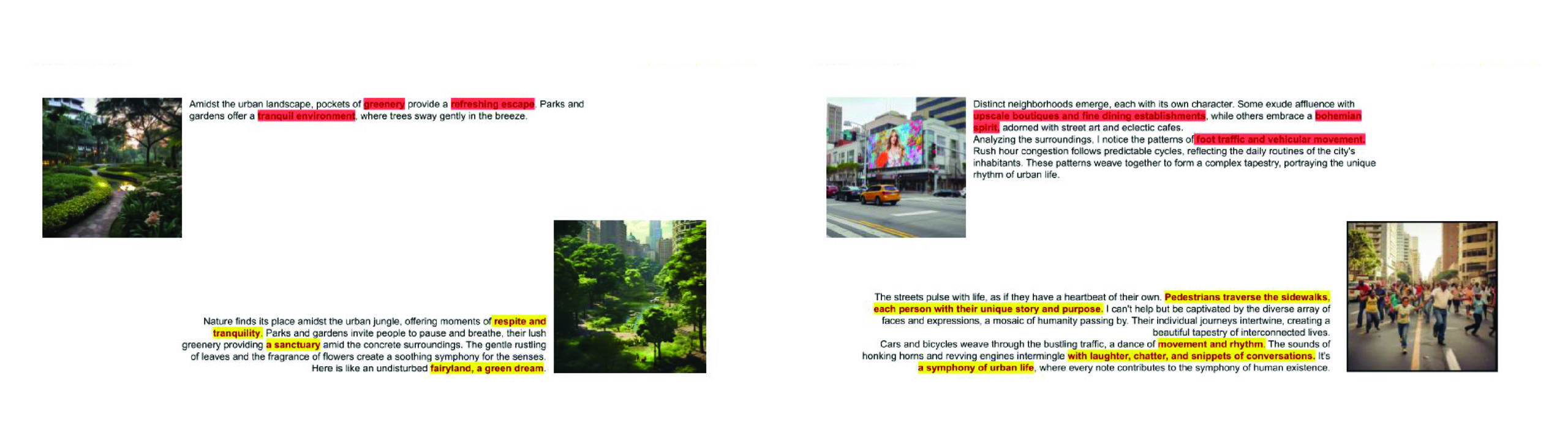

The texts we worked on describe the city, the people in the streets, the sunlight, the greenery, and the overall atmosphere. These texts are the only input we provide to the AI platforms.

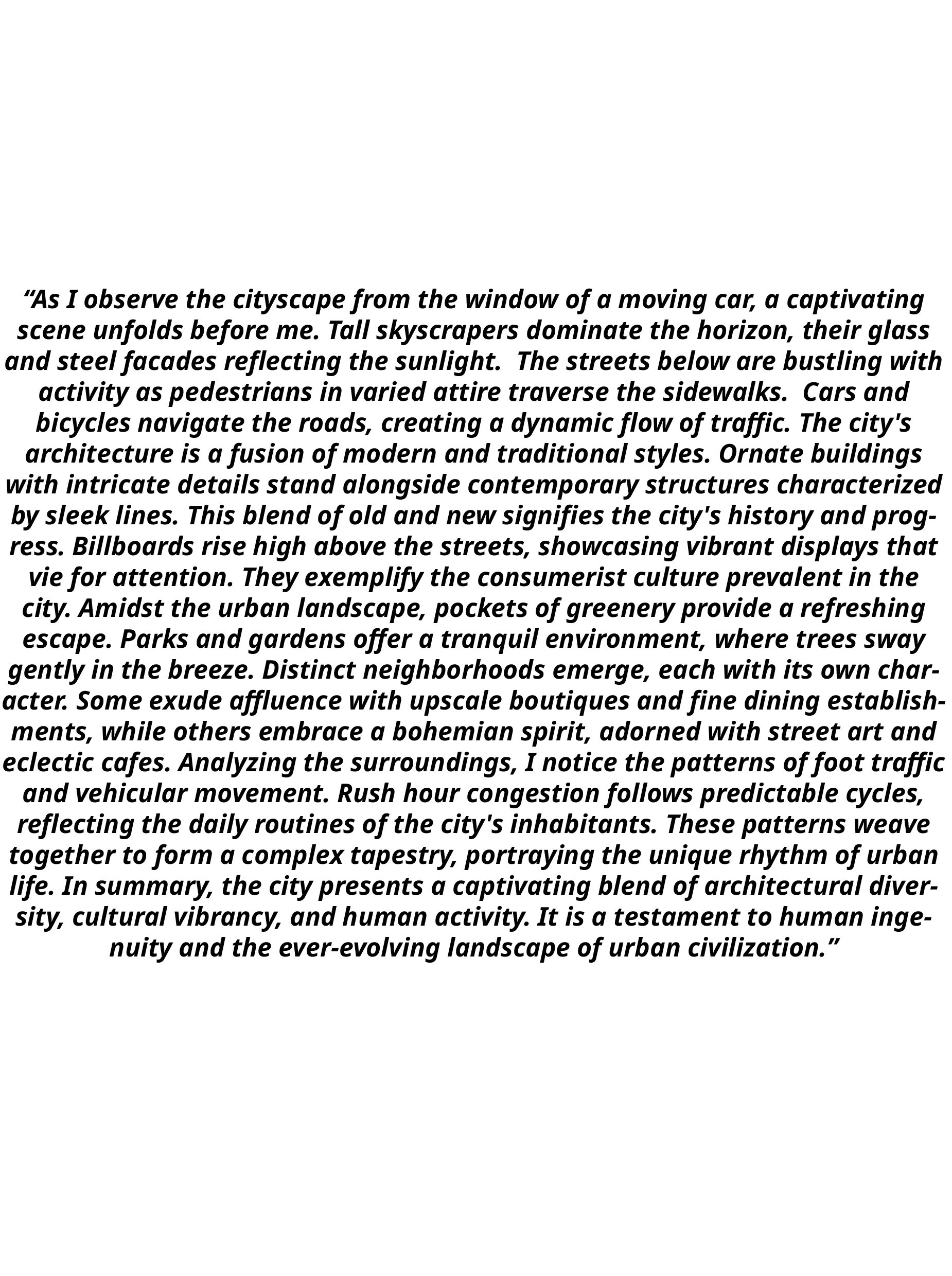

Klara’s narrative

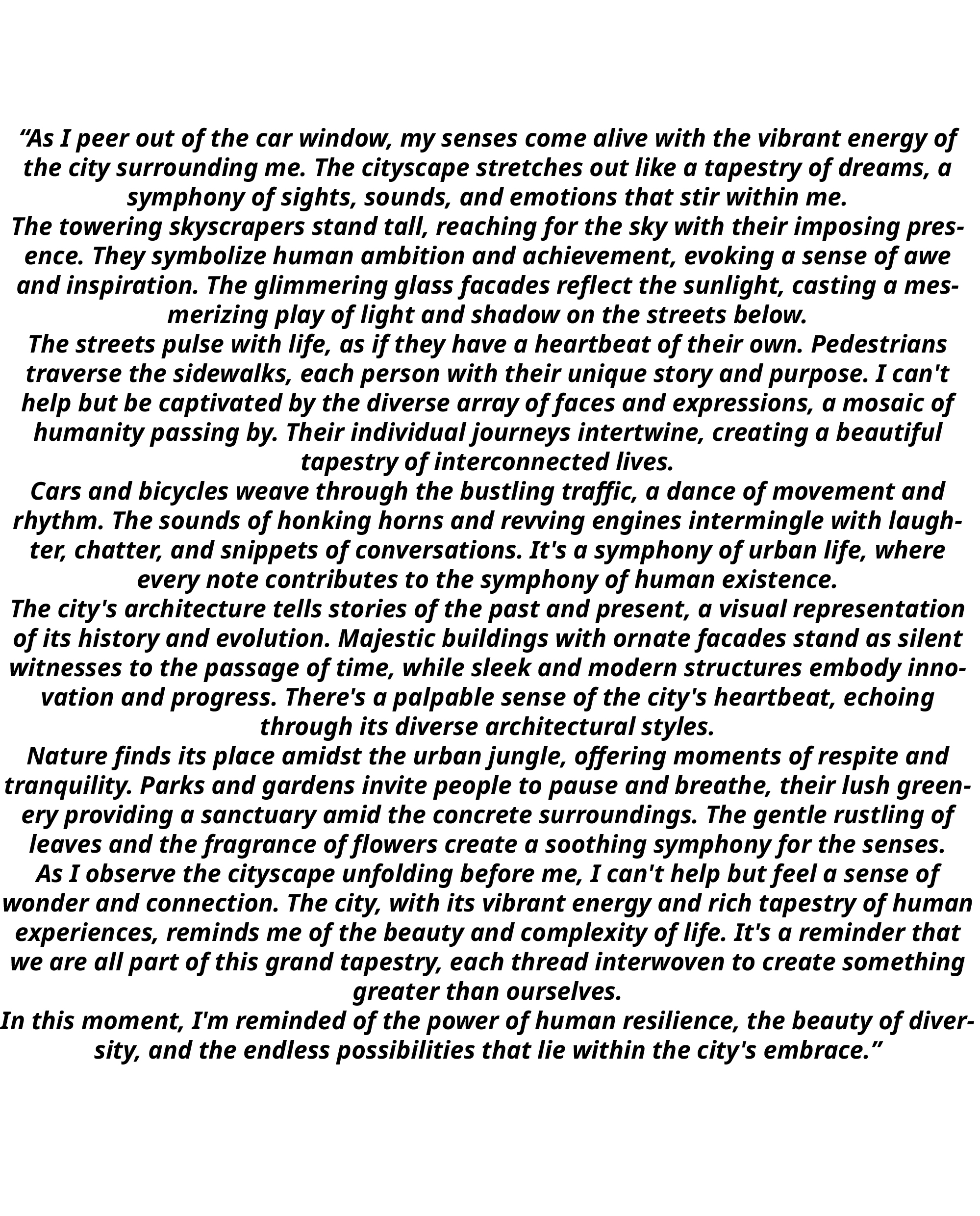

Human’s narrative

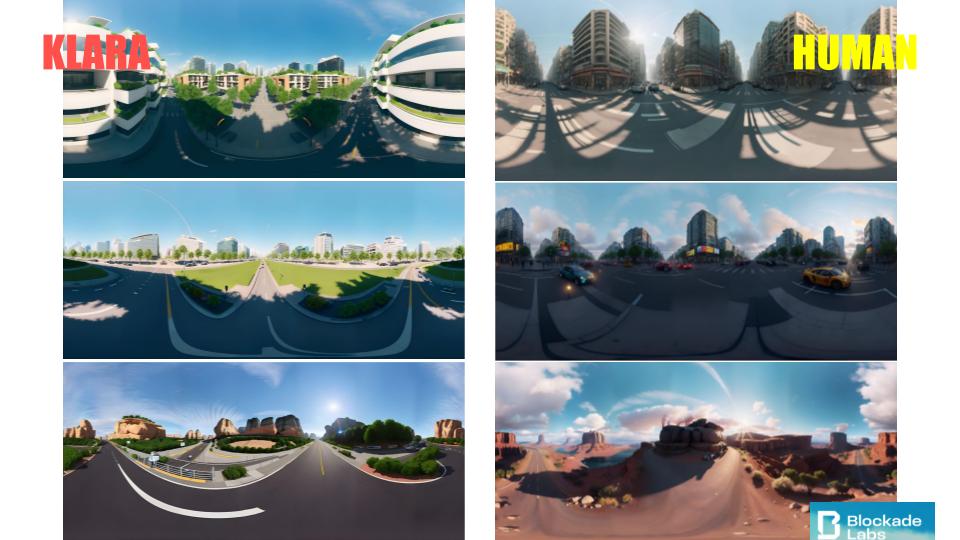

The first 3D environments created with Blockade Labs show distinct characteristics. Klara's environment looks as though it was built in 3D modeling software: it has fewer textures and more generic forms, and its colors resemble a studio setting. In contrast, the environment generated from the human's story has richer textures, more vibrant elements, a natural color palette, and scenes populated by more people.

For Midjourney, each text, Klara's and the human's, is divided into parts, and each part is used as a separate prompt. Klara's prompts tend to produce realistic outputs that recreate a sense of the real world. The human's prompts bring creativity, emotion, imagination, and narrative elements, resulting in colorful and expressive images.

For video generation, we used two recently released platforms, Pictory and Runway Gen-2, which take different approaches to video creation. Pictory draws on a Shutterstock-like stock footage library, assembling realistic videos based on specific words in the prompts. Runway Gen-2 synthesizes footage with a generative (diffusion-based) model, producing artistic, cinematic videos with creative flair and visual richness.

In conclusion, Klara's prompts result in a disjointed experience: the videos and still images are inconsistent with one another, and human figures are largely absent. The environments lack texture and have generic colors that resemble screenshots from 3D modeling platforms.

By contrast, the prompts written from the human perspective produce a connected narrative across the visuals. The resulting moving images and videos bring the story to life, with the human at the center and closely intertwined with the surrounding environment. The 3D environments are immersive, evoking emotion and offering vivid detail to the viewer.