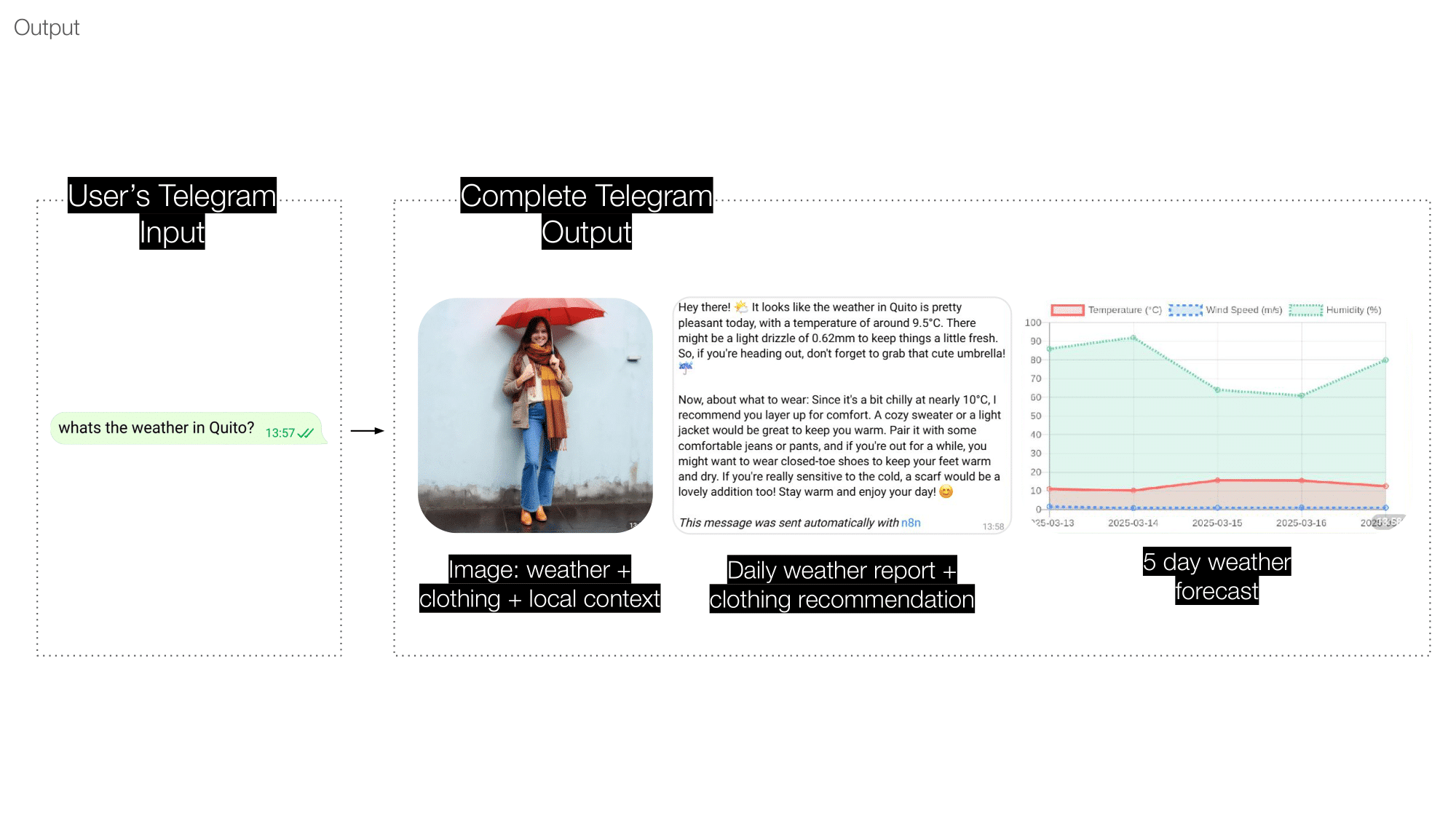

As part of a collaborative exploration into creative automation and human-AI interaction, our team developed an AI-powered weather assistant that integrates live data, generative visuals, and contextual language processing into a single, responsive system. The assistant operates via a Telegram bot and delivers three core outputs: current weather information, clothing recommendations, and an AI-generated image visualizing the described conditions.

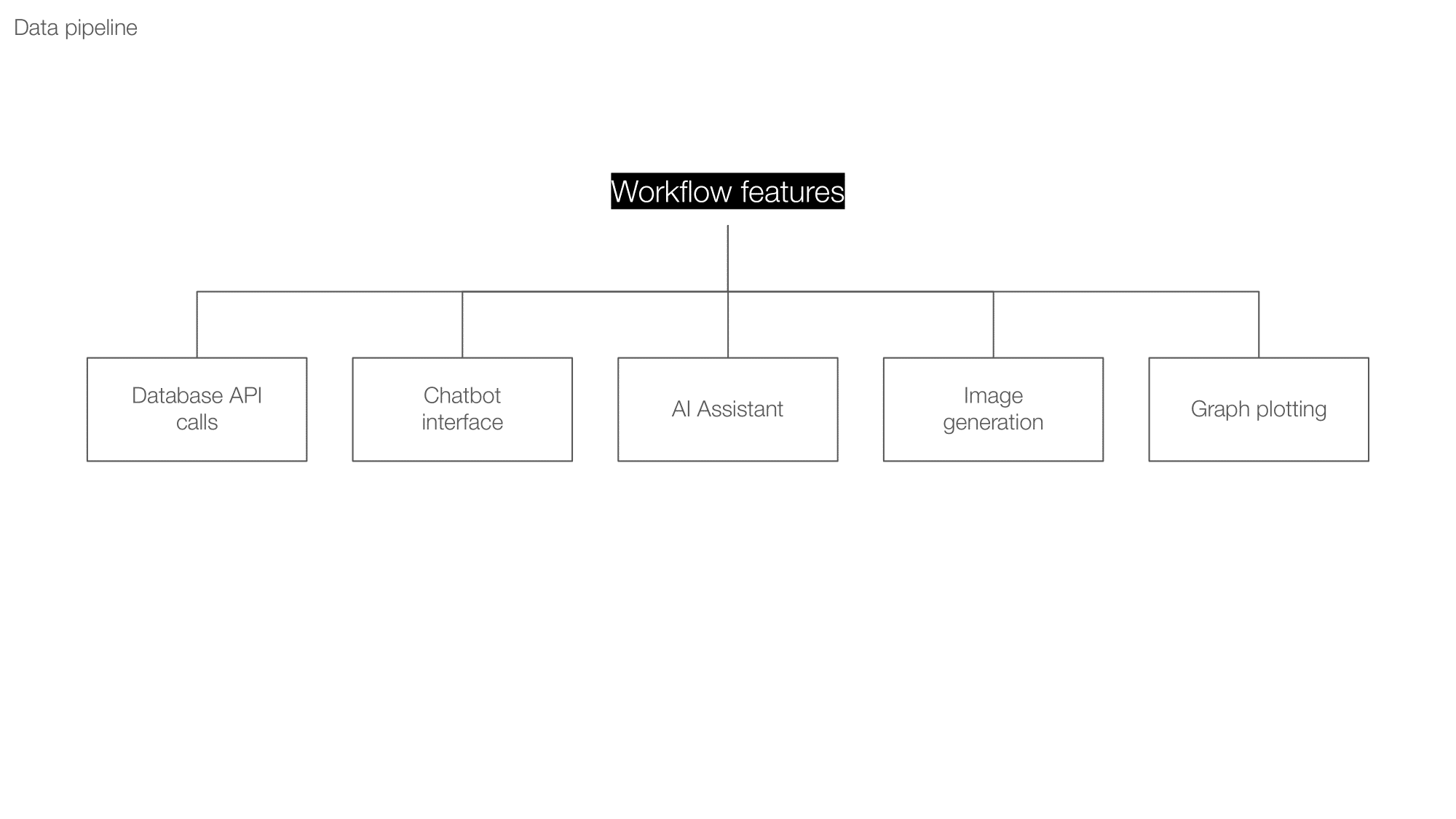

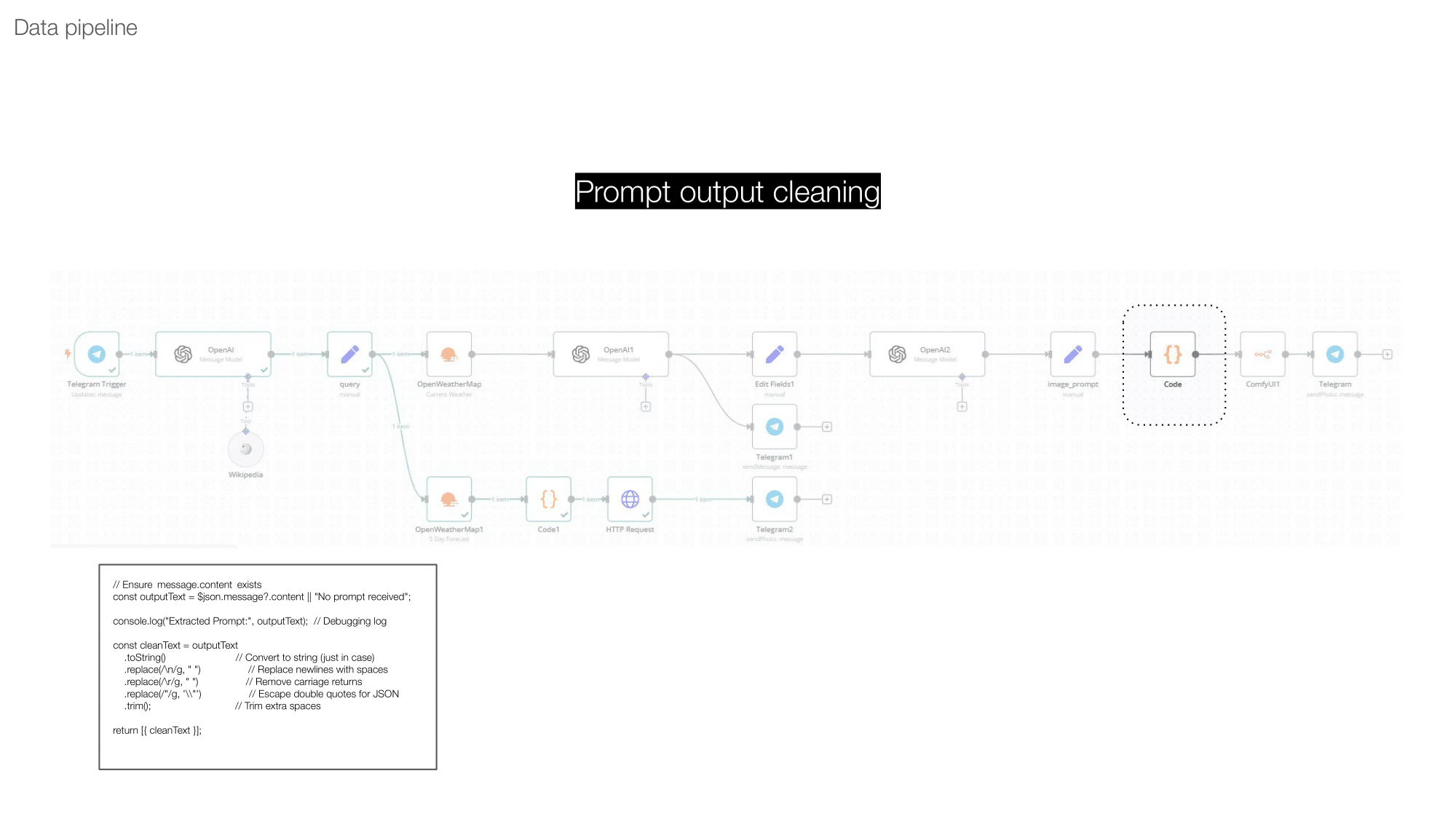

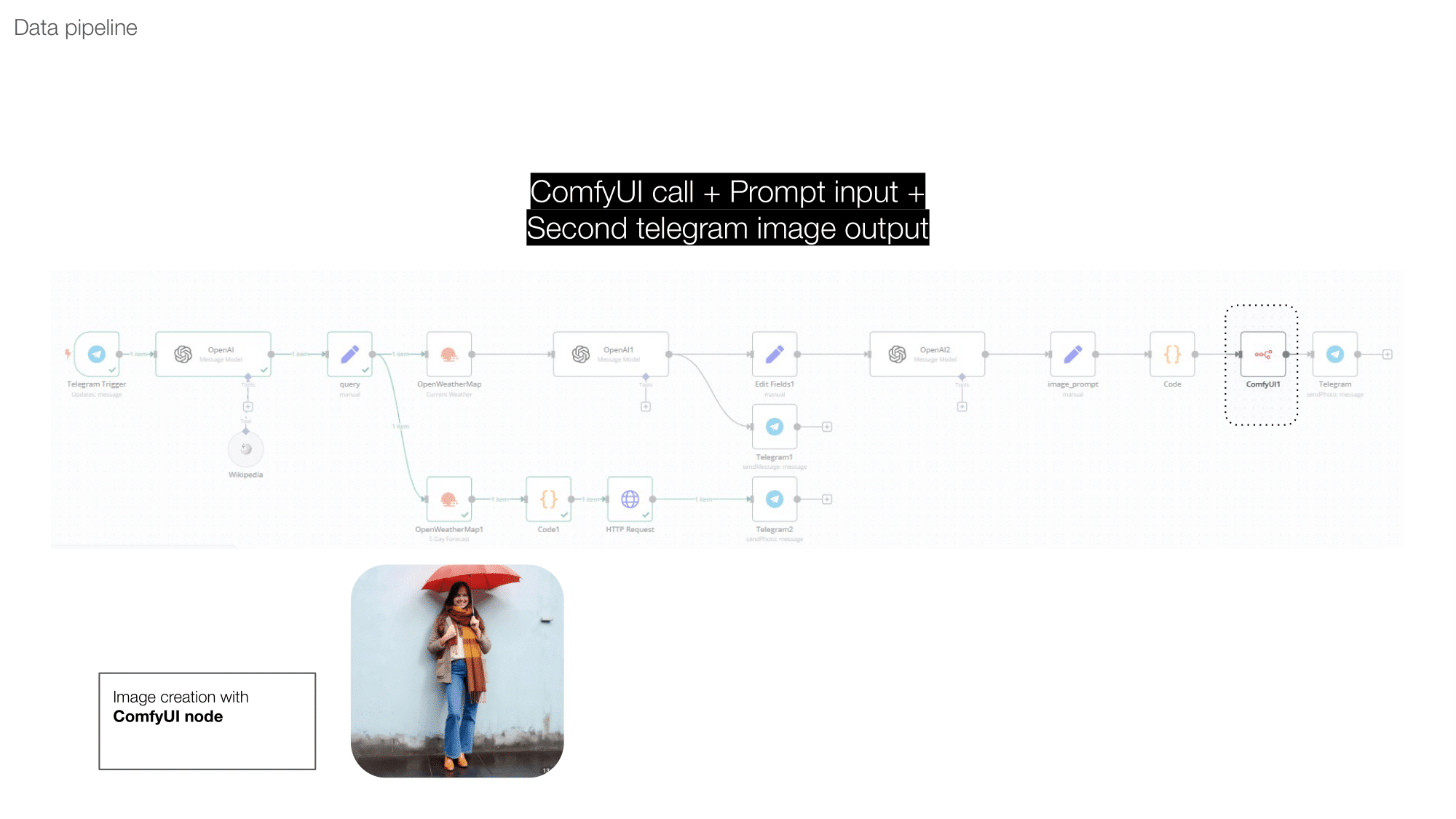

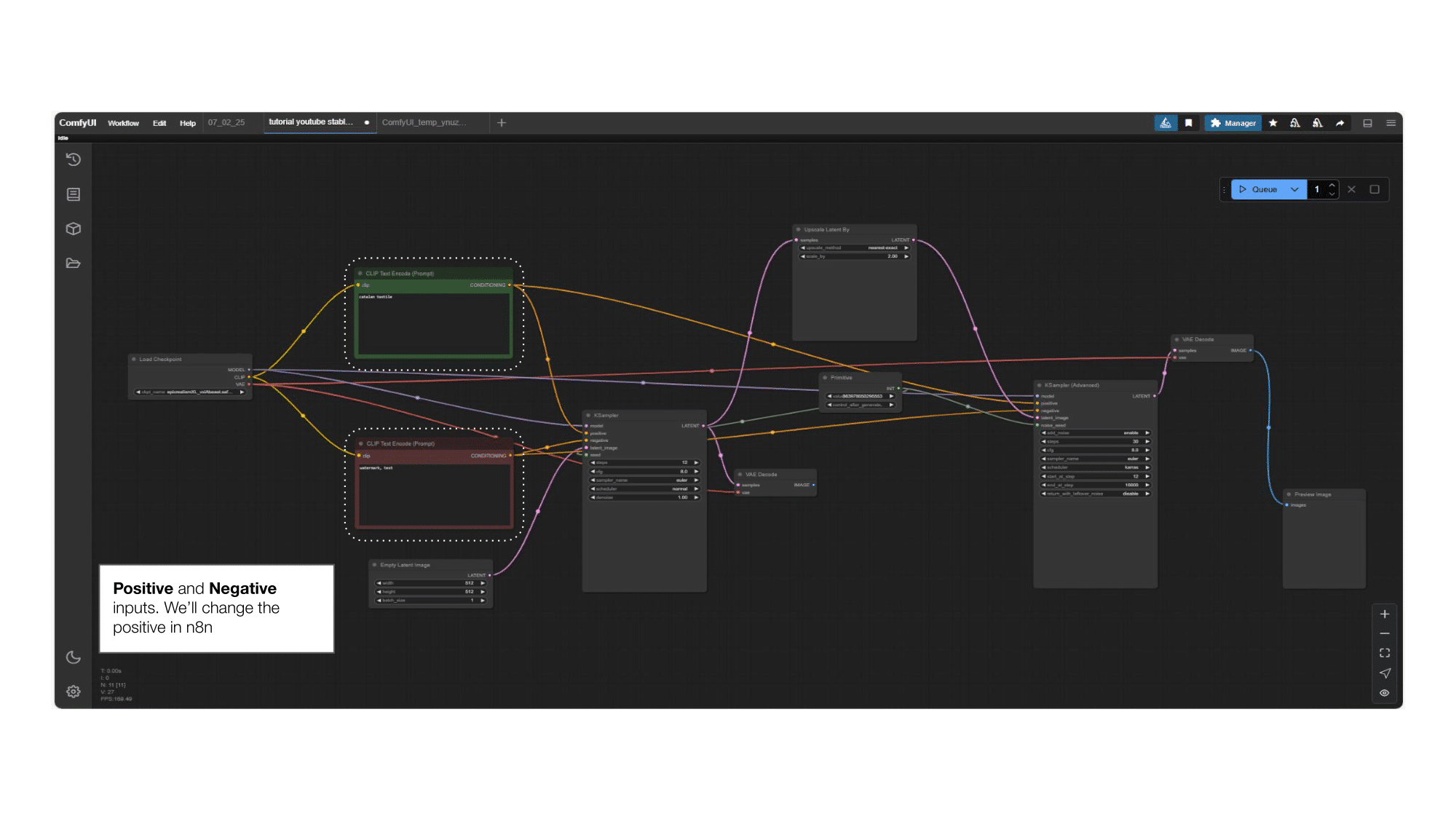

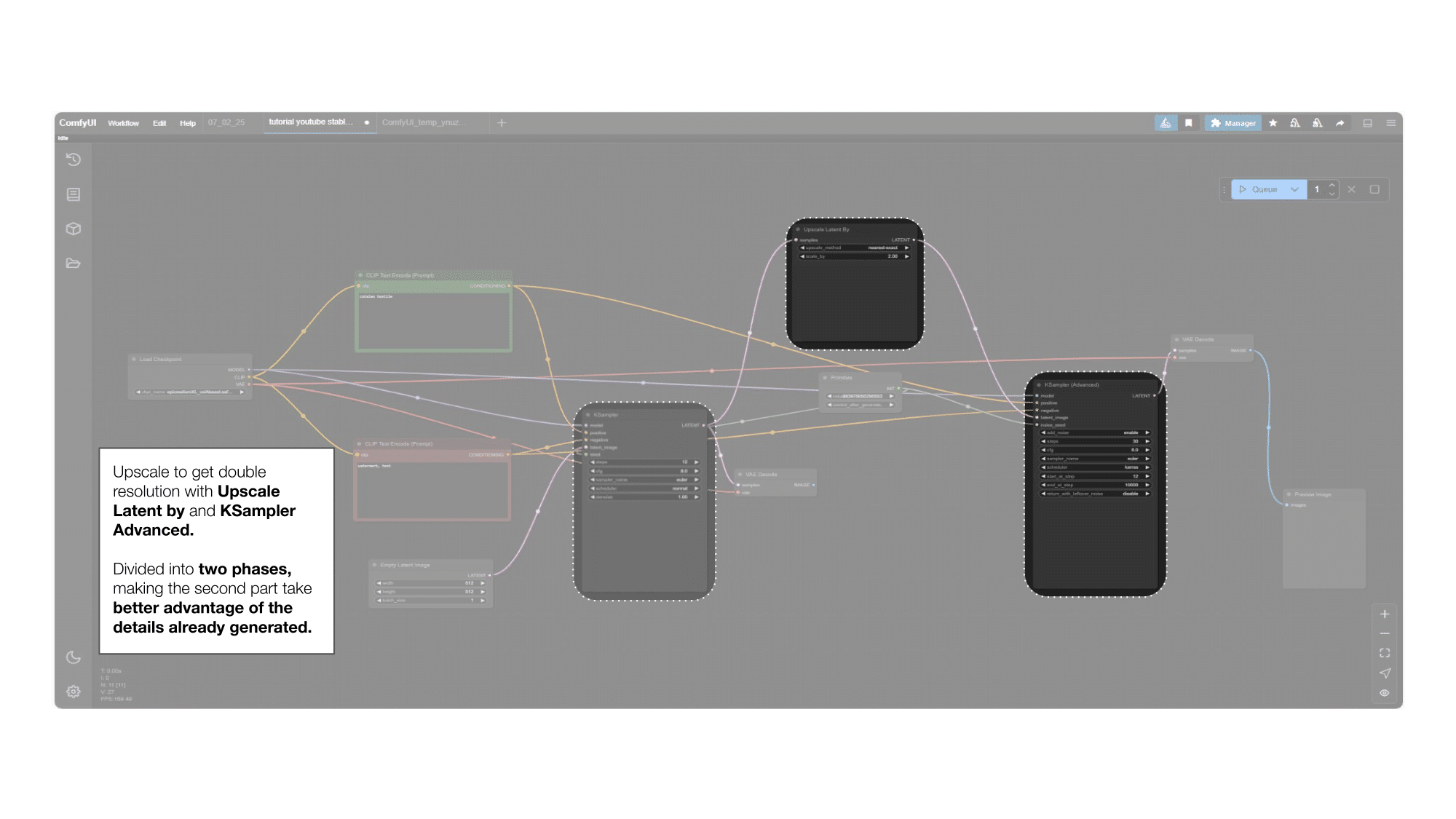

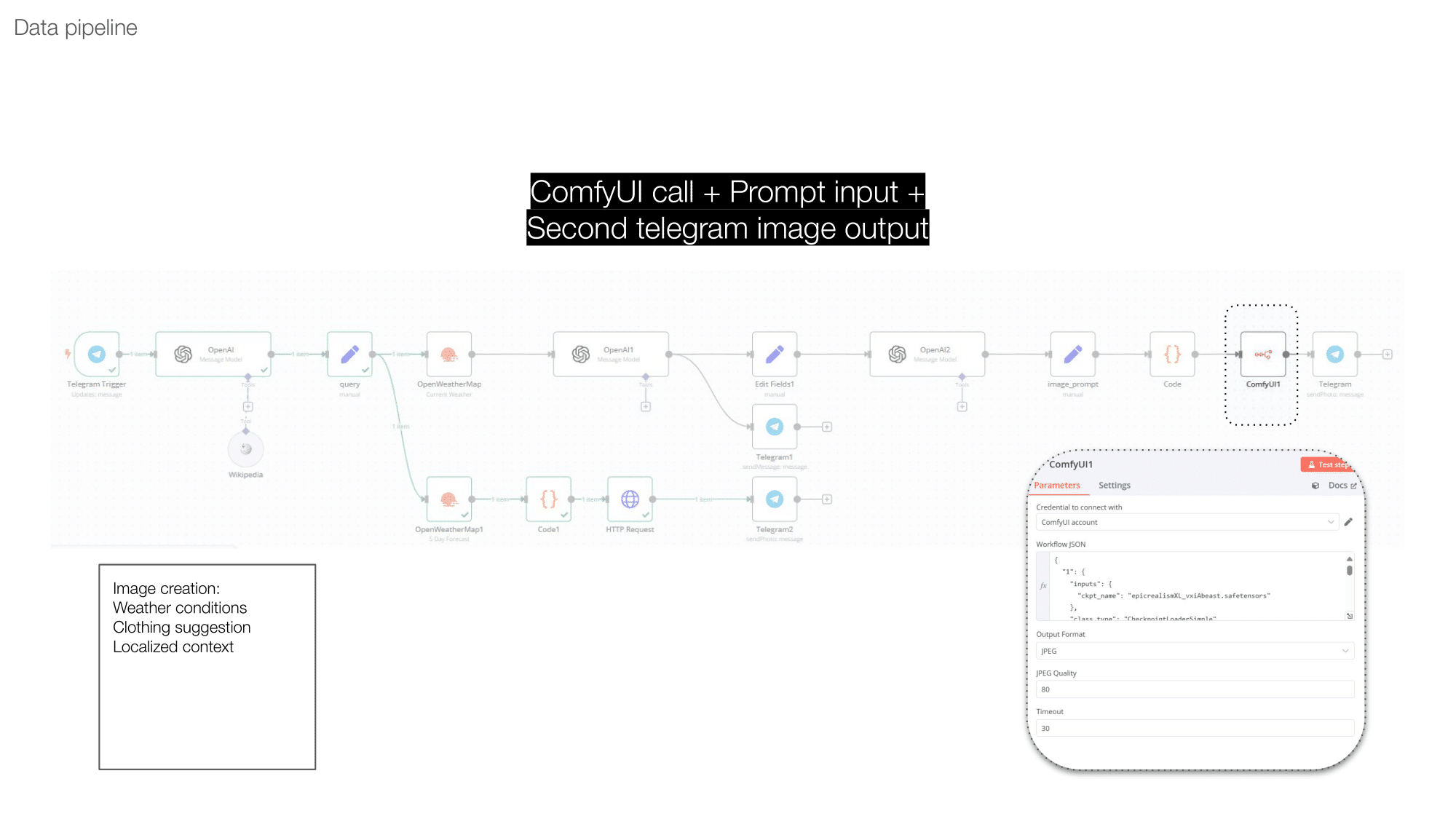

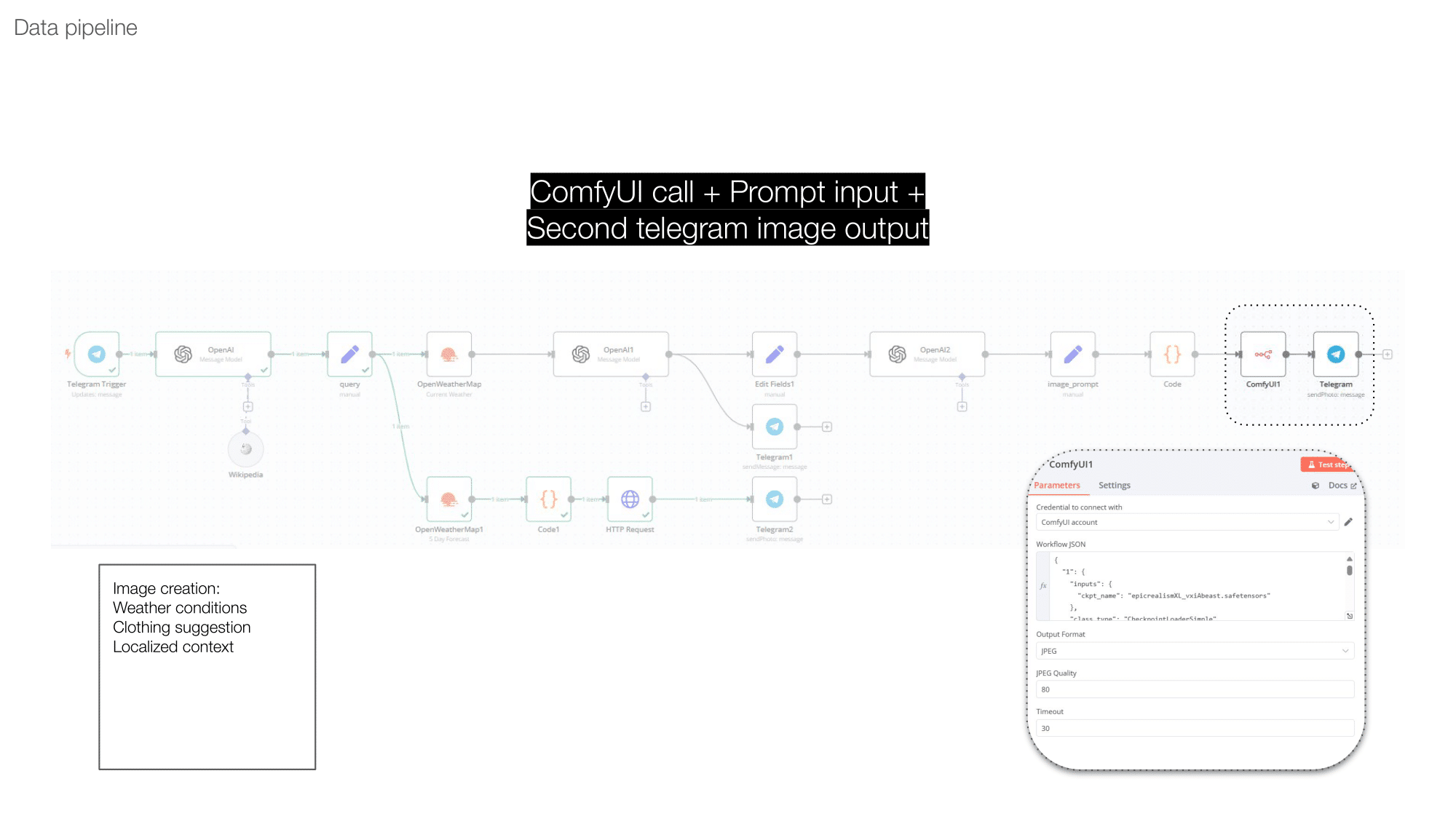

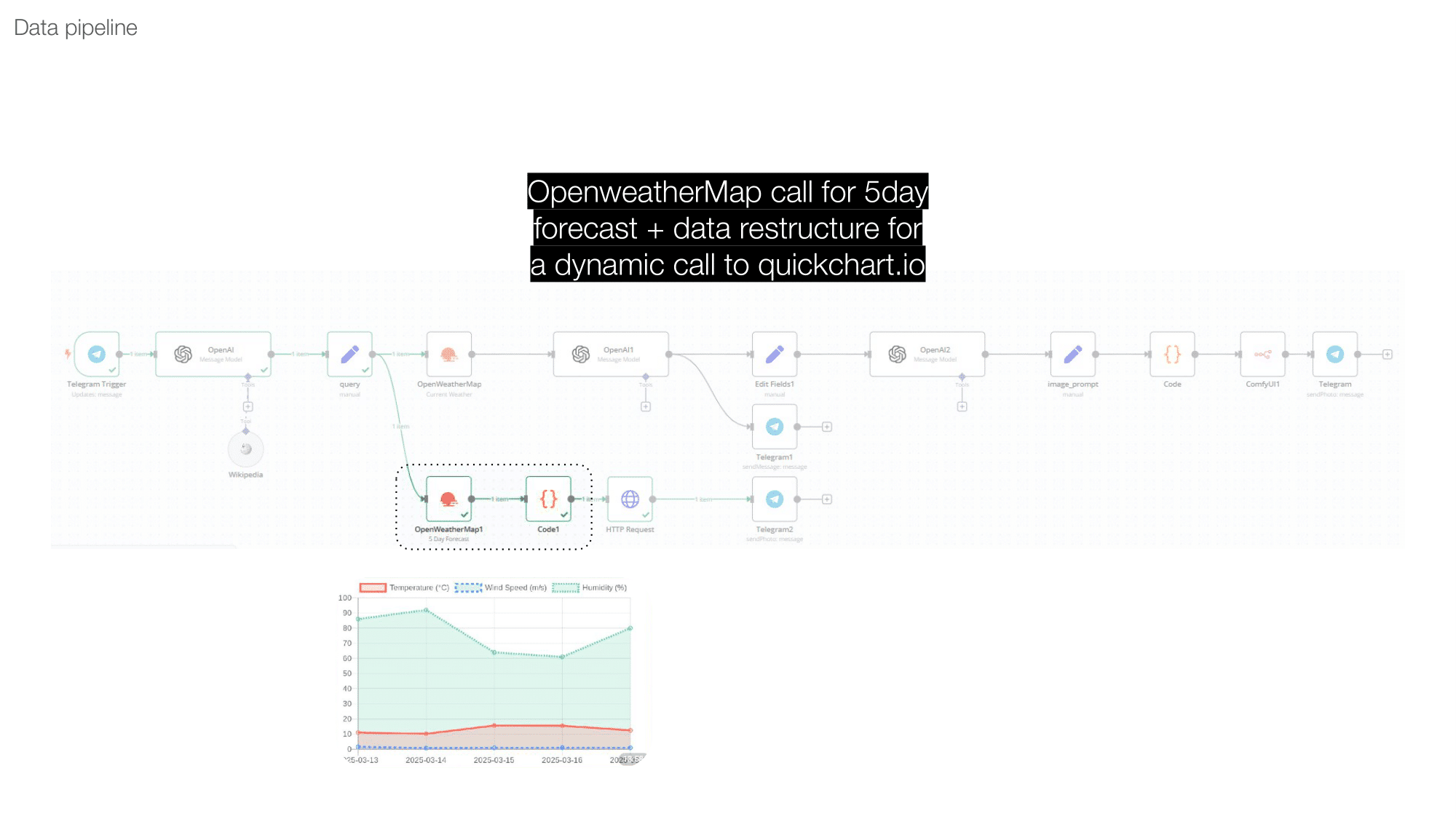

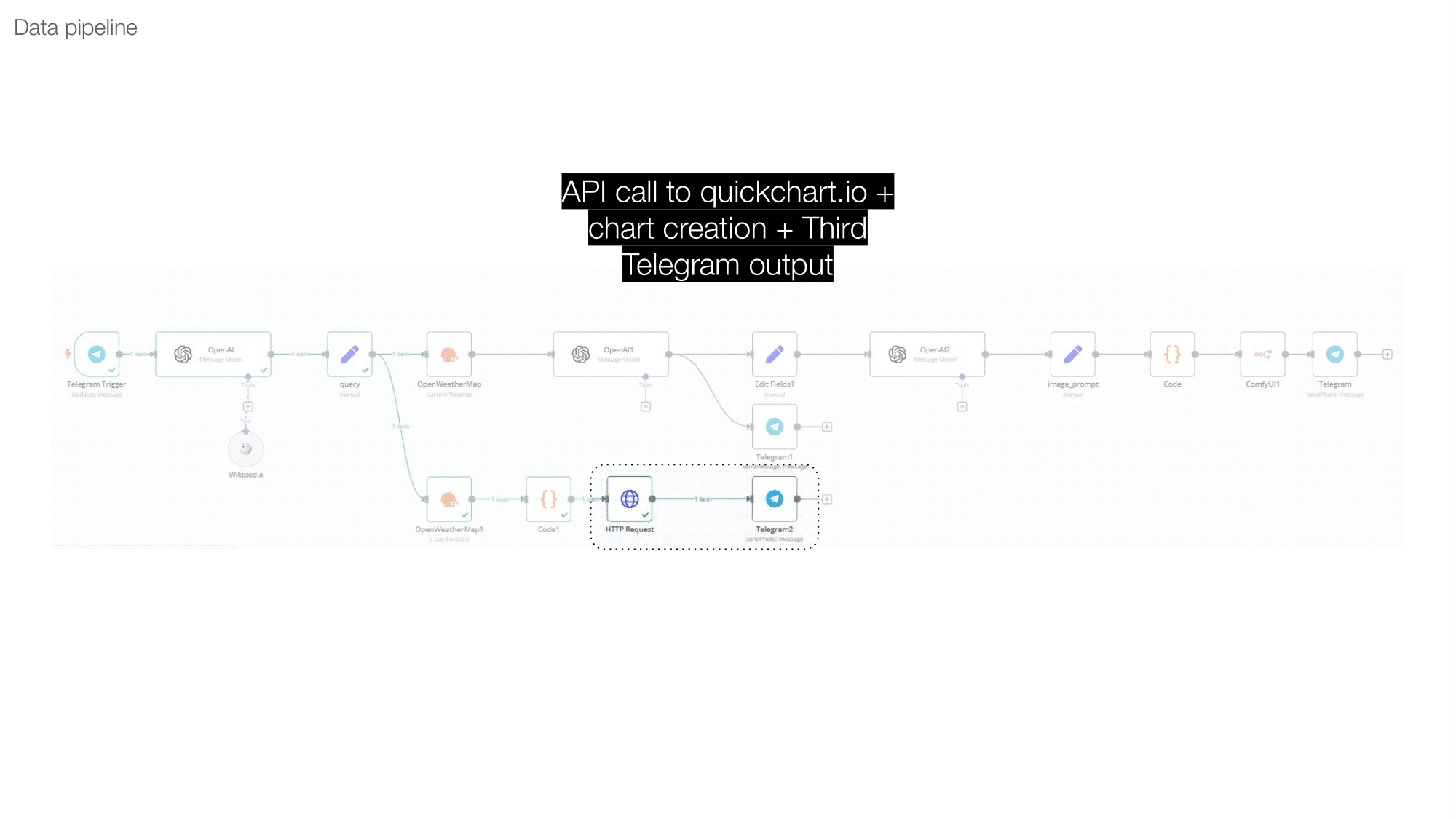

The system was prototyped with n8n as the orchestration engine and ComfyUI as the generative image pipeline. Its modular design also incorporates the OpenWeatherMap API for real-time meteorological data and OpenAI’s GPT-4 model for contextual recommendations.

System Architecture and Workflow

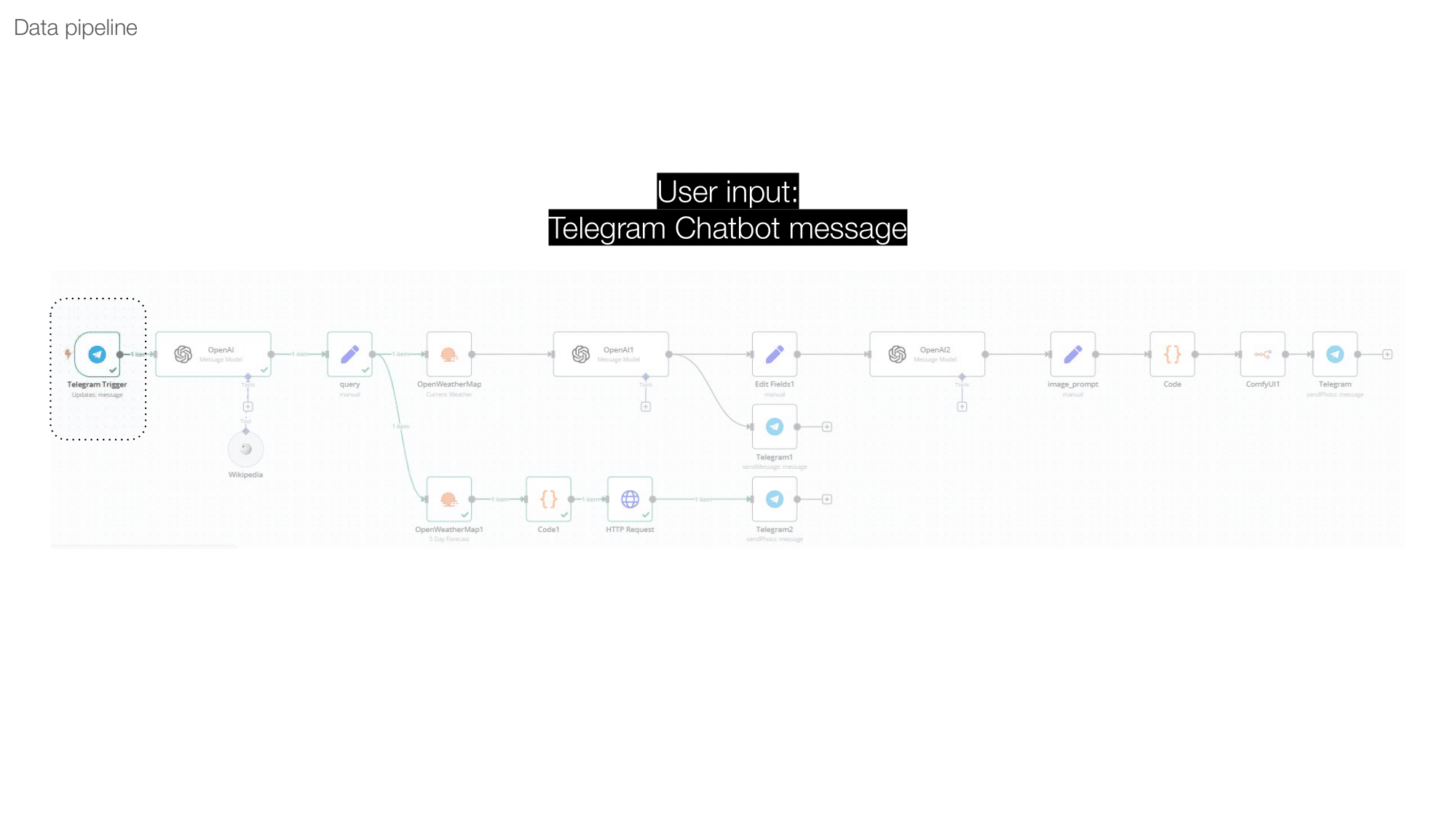

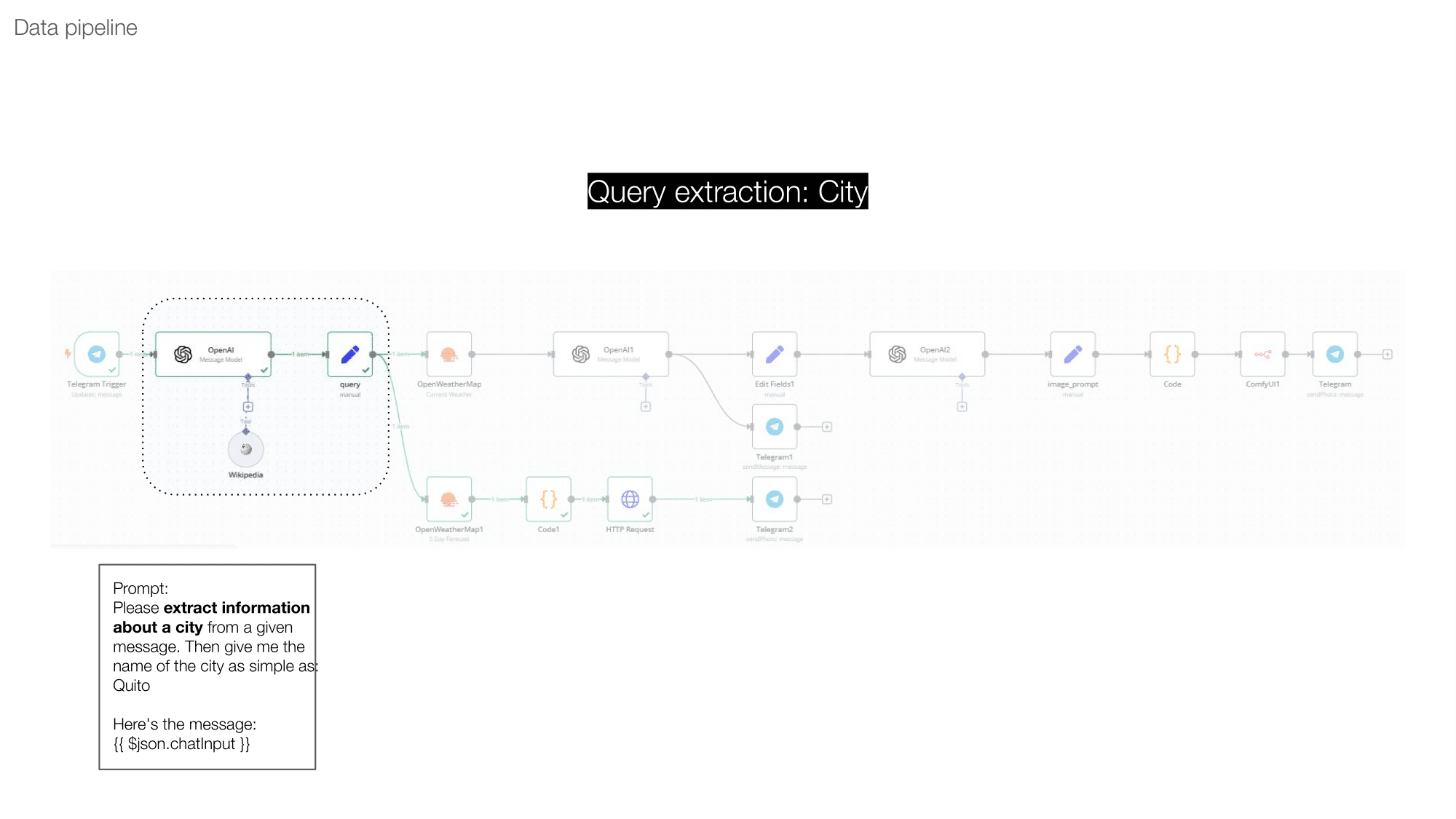

The workflow begins when a user sends a message to the Telegram bot—for example, “What’s the weather in Berlin?” The input is processed as follows:

- Natural Language Parsing

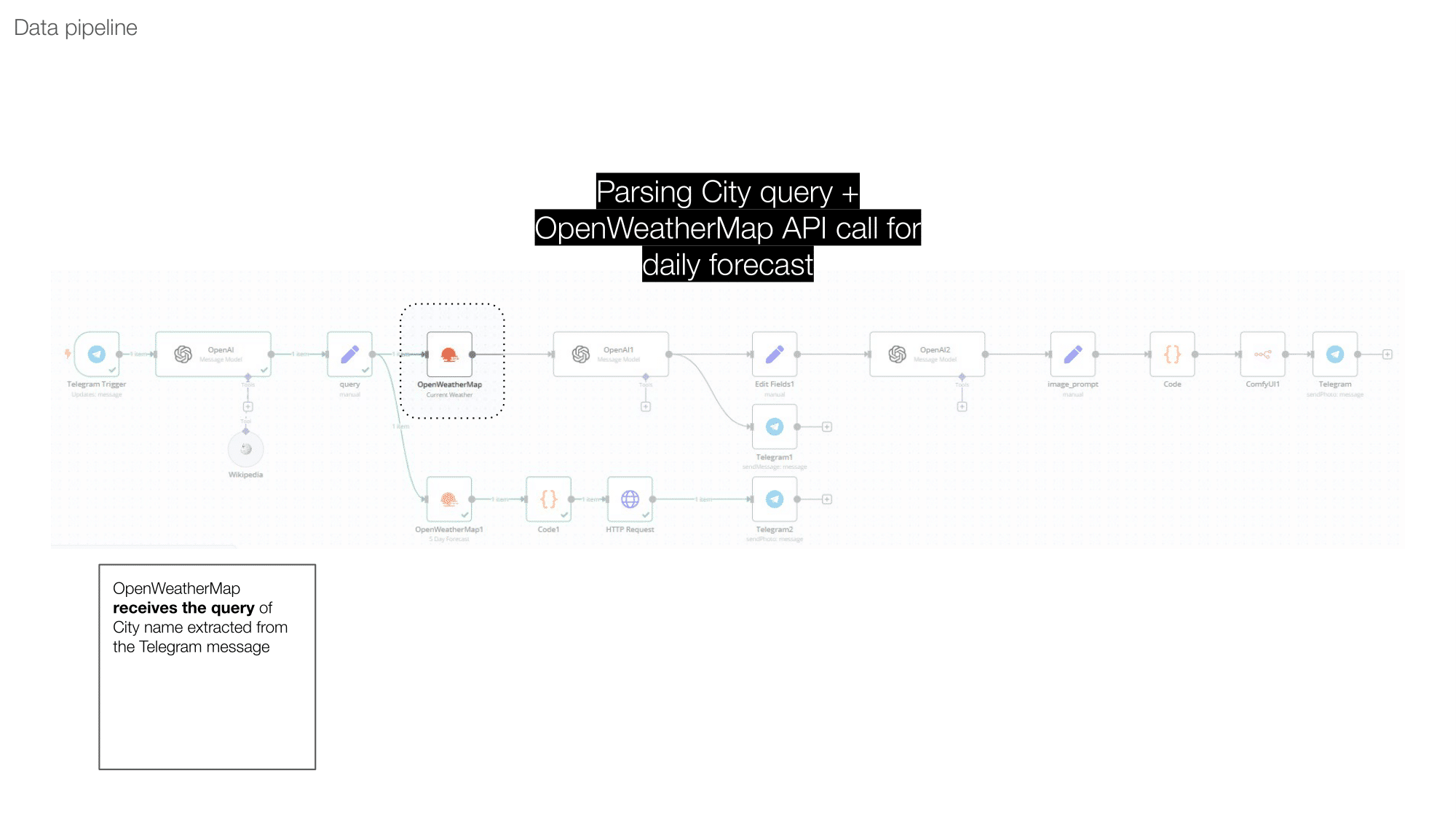

n8n receives the message and extracts the city name using a lightweight parsing function. The system is designed to handle casual, conversational input.

- Weather Data Retrieval

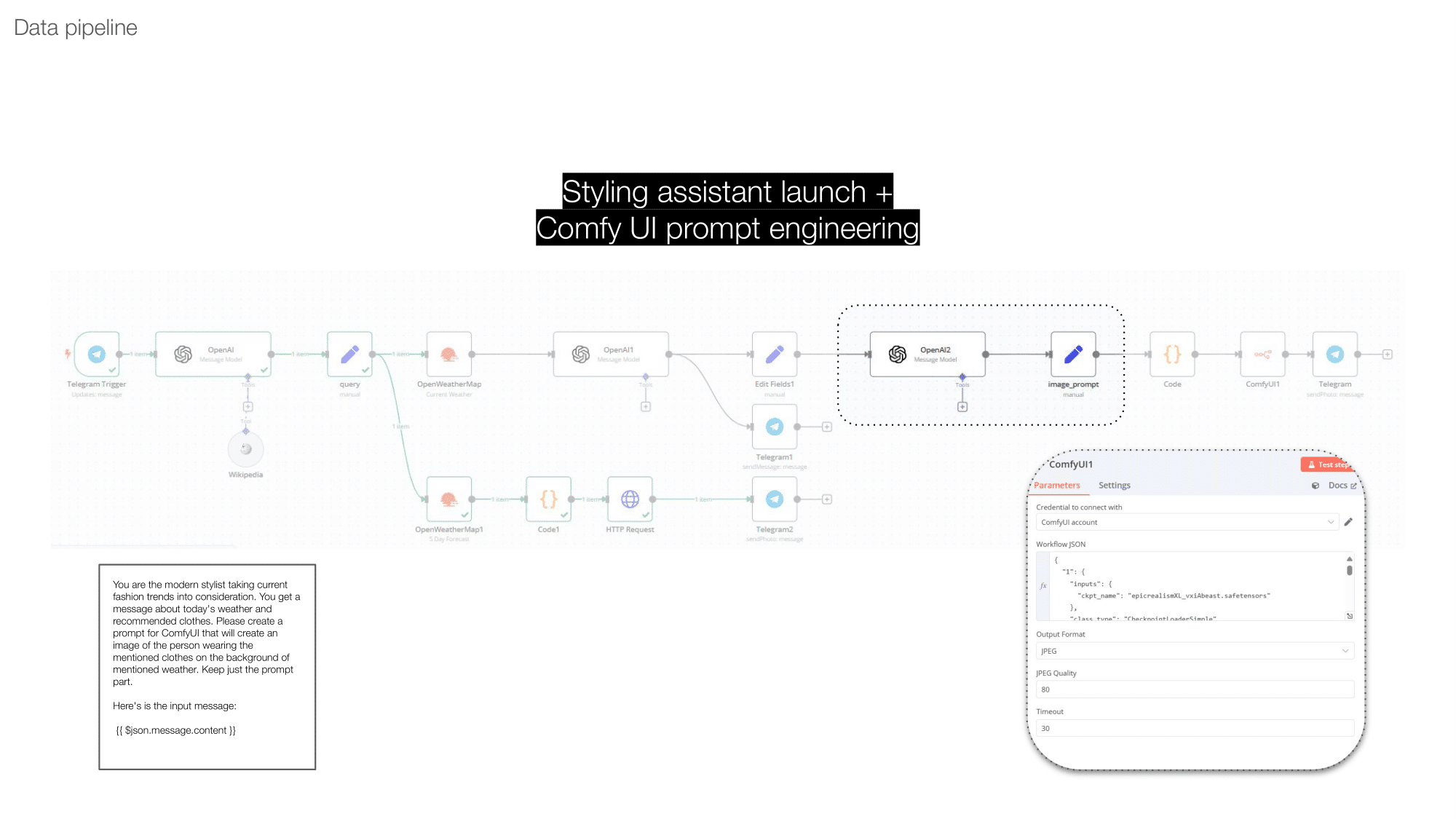

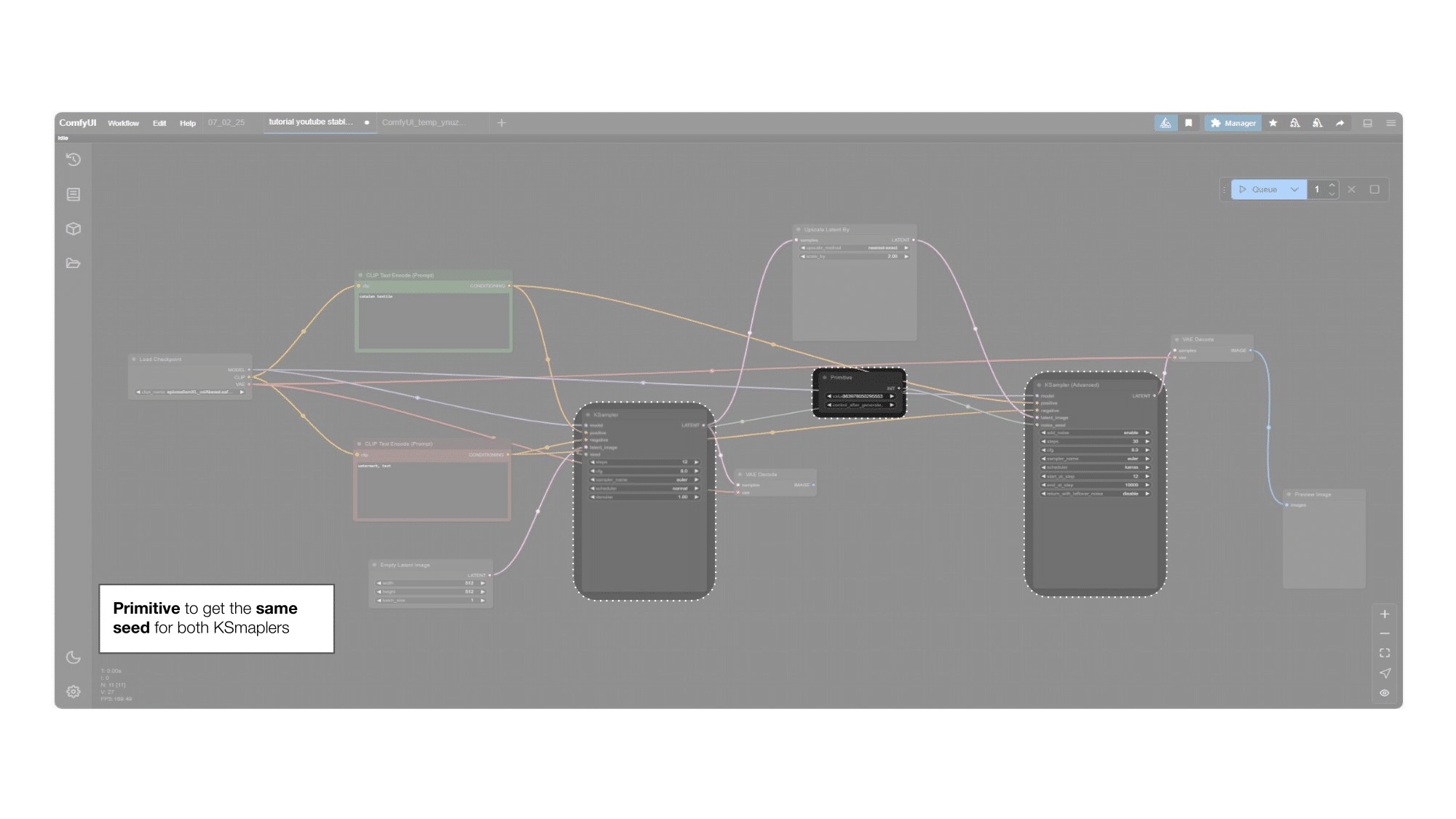

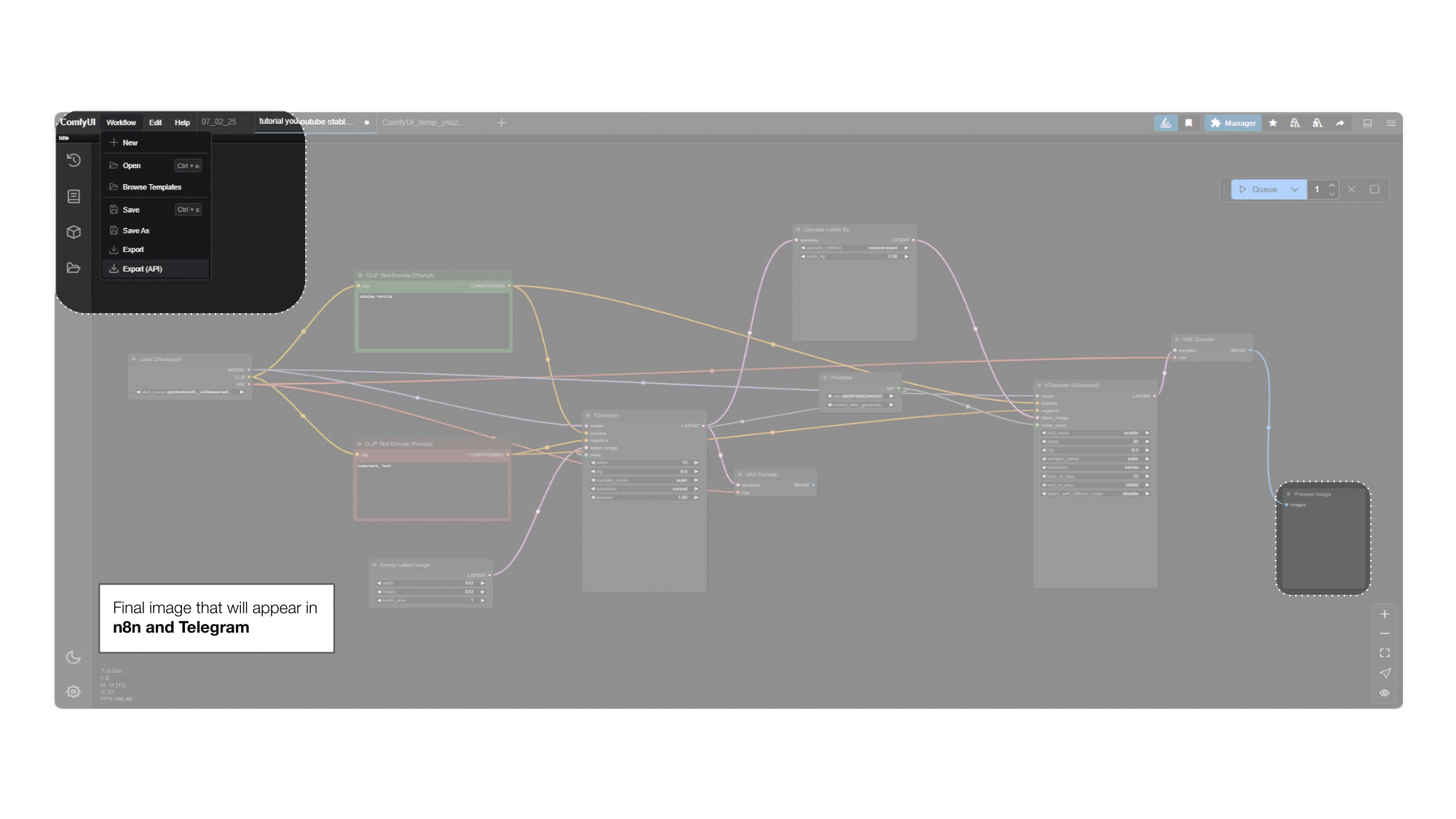

The extracted location is sent to the OpenWeatherMap API, which returns current temperature, conditions (e.g., “light rain”), and other relevant metrics.

- Generative Visualization via ComfyUI

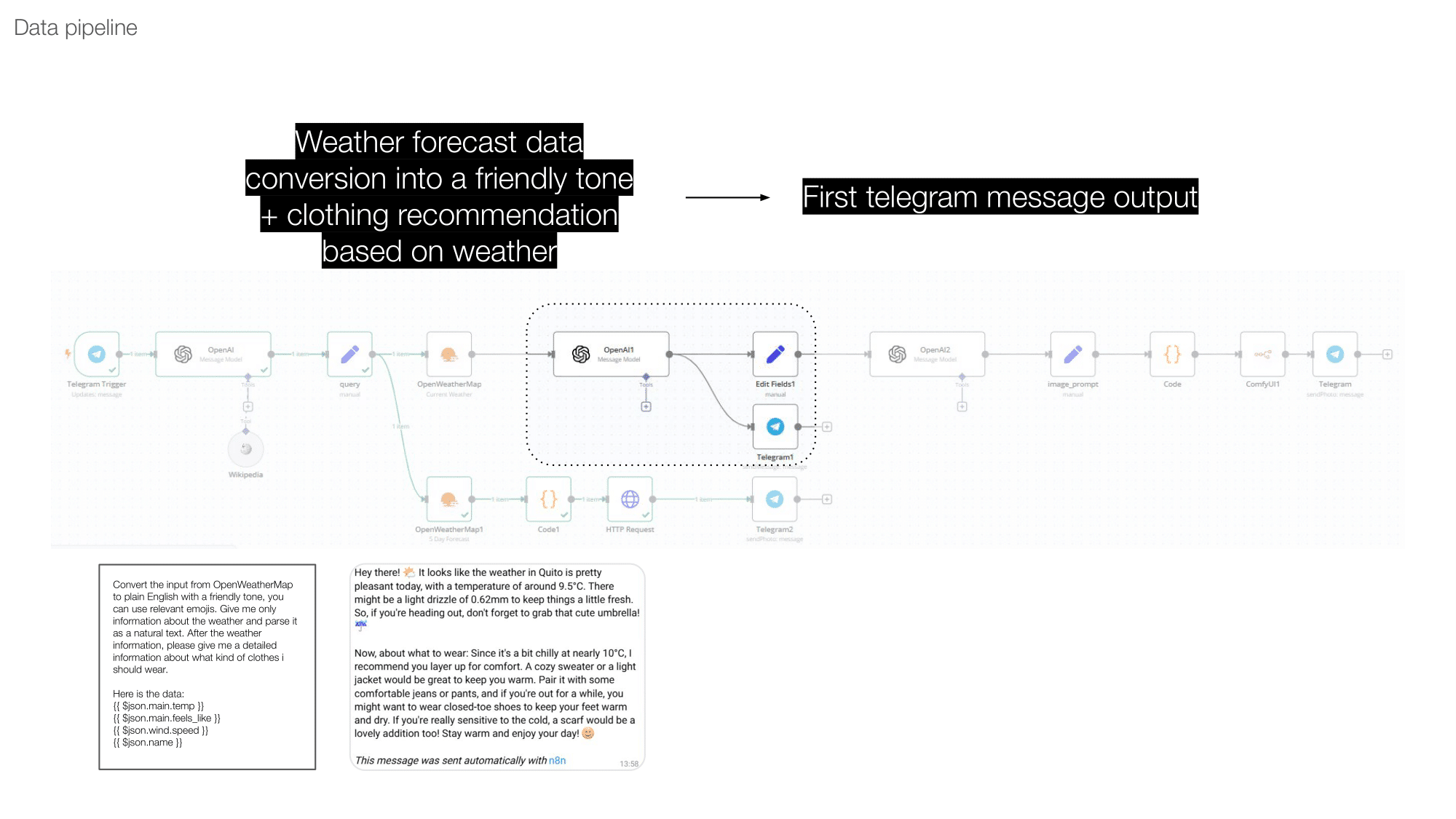

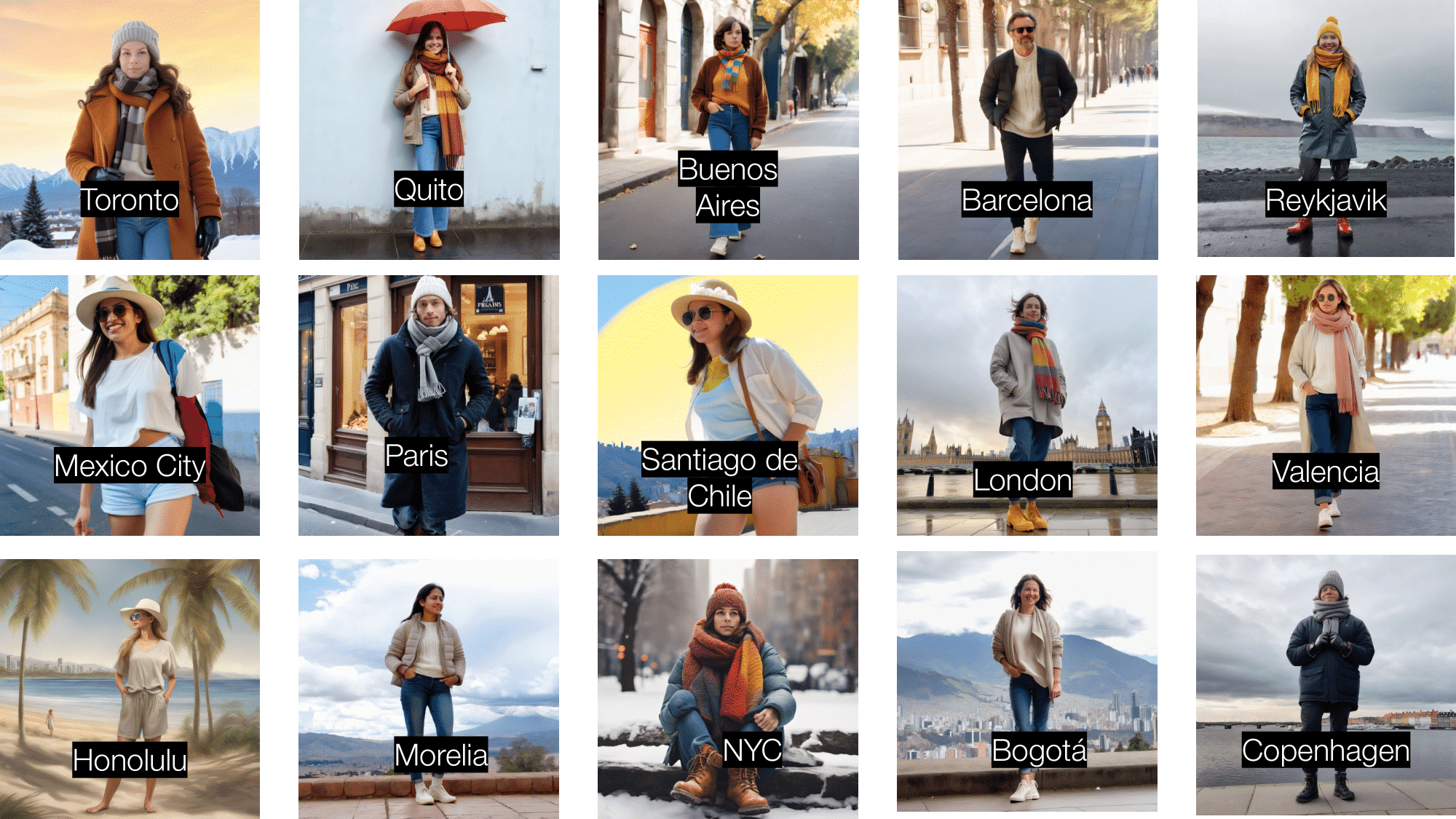

Weather data is transformed into a descriptive prompt (e.g., “A scenic view of Berlin with overcast skies and 12°C”), which is passed to a local ComfyUI pipeline. The output is a unique image reflecting the live conditions.

- Contextual Clothing Recommendations

In parallel, a prompt is sent to GPT-4 to generate a short recommendation based on the current weather. For example: “Wear a waterproof jacket and closed shoes.”

- Telegram Response

The system sends the generated image and clothing advice back to the user via Telegram, completing the loop in near real time.
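The data-shaping steps above can be sketched in Python as a few small, pure functions. This is a minimal sketch under stated assumptions, not the n8n workflow itself: every function name is hypothetical, the regular expression is only one guess at what a "lightweight parsing function" might do, and the wording of the clothing prompt is invented for illustration. The request URL and response fields (`name`, `main.temp`, `weather[0].description`) follow OpenWeatherMap's current weather endpoint. The actual calls to OpenWeatherMap, ComfyUI, GPT-4, and Telegram are left out, since in the prototype they are handled by n8n nodes.

```python
import re
from typing import Optional
from urllib.parse import urlencode

# OpenWeatherMap current weather endpoint.
OWM_ENDPOINT = "https://api.openweathermap.org/data/2.5/weather"


def extract_city(message: str) -> Optional[str]:
    """Hypothetical parser: pull a city out of casual input.

    Looks for "in <city>" first, then falls back to treating a
    short bare message (up to three words) as the city name.
    """
    match = re.search(r"\bin\s+([^?.!,]+)", message, flags=re.IGNORECASE)
    if match:
        return match.group(1).strip()
    text = message.strip(" ?.!")
    return text if text and len(text.split()) <= 3 else None


def build_weather_url(city: str, api_key: str) -> str:
    """Build the request URL; units=metric asks for degrees Celsius."""
    query = urlencode({"q": city, "appid": api_key, "units": "metric"})
    return f"{OWM_ENDPOINT}?{query}"


def summarize_weather(payload: dict) -> dict:
    """Keep only the fields the later steps need from the API response."""
    return {
        "city": payload["name"],
        "temp_c": round(payload["main"]["temp"]),
        "conditions": payload["weather"][0]["description"],
    }


def build_image_prompt(weather: dict) -> str:
    """Descriptive prompt for the image pipeline, in the style quoted above."""
    return (
        f"A scenic view of {weather['city']} with "
        f"{weather['conditions']} and {weather['temp_c']}°C"
    )


def build_clothing_prompt(weather: dict) -> str:
    """Assumed wording for the language-model request; not the original prompt."""
    return (
        f"The weather in {weather['city']} is {weather['conditions']} "
        f"at {weather['temp_c']}°C. "
        "Recommend what to wear in one short sentence."
    )
```

Keeping these steps free of network calls makes each one easy to check in isolation: `extract_city("What's the weather in Berlin?")` yields `"Berlin"`, and the two prompt builders can be exercised with a hand-written response dictionary before any API key is involved.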

Reflections and Future Directions

This project was conceived as an experiment in bridging automation, real-time data, and generative media. Although built as a functional prototype, it revealed unexpected affordances for personalization and interaction. Users respond not only to the practicality of the assistant but also to the expressiveness of the AI-generated images.

Future iterations may include support for multiple languages, stylistic variation in visual outputs (e.g., seasonal aesthetics or art styles), and scheduled updates. This work demonstrates the potential of low-code platforms and modular AI tools in rapidly prototyping expressive, data-driven applications.
