Generative AI

MaCAD Digital Tools for Generative AI Seminar

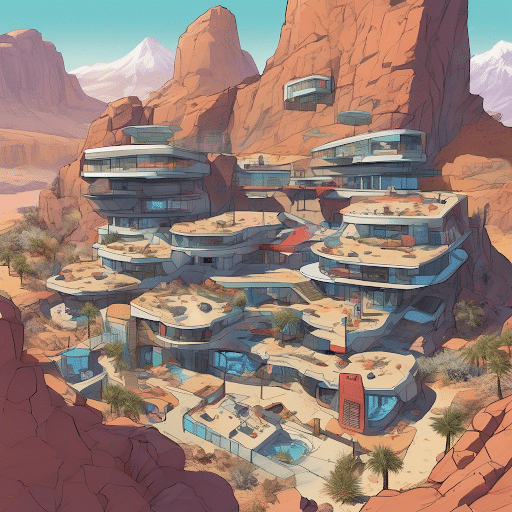

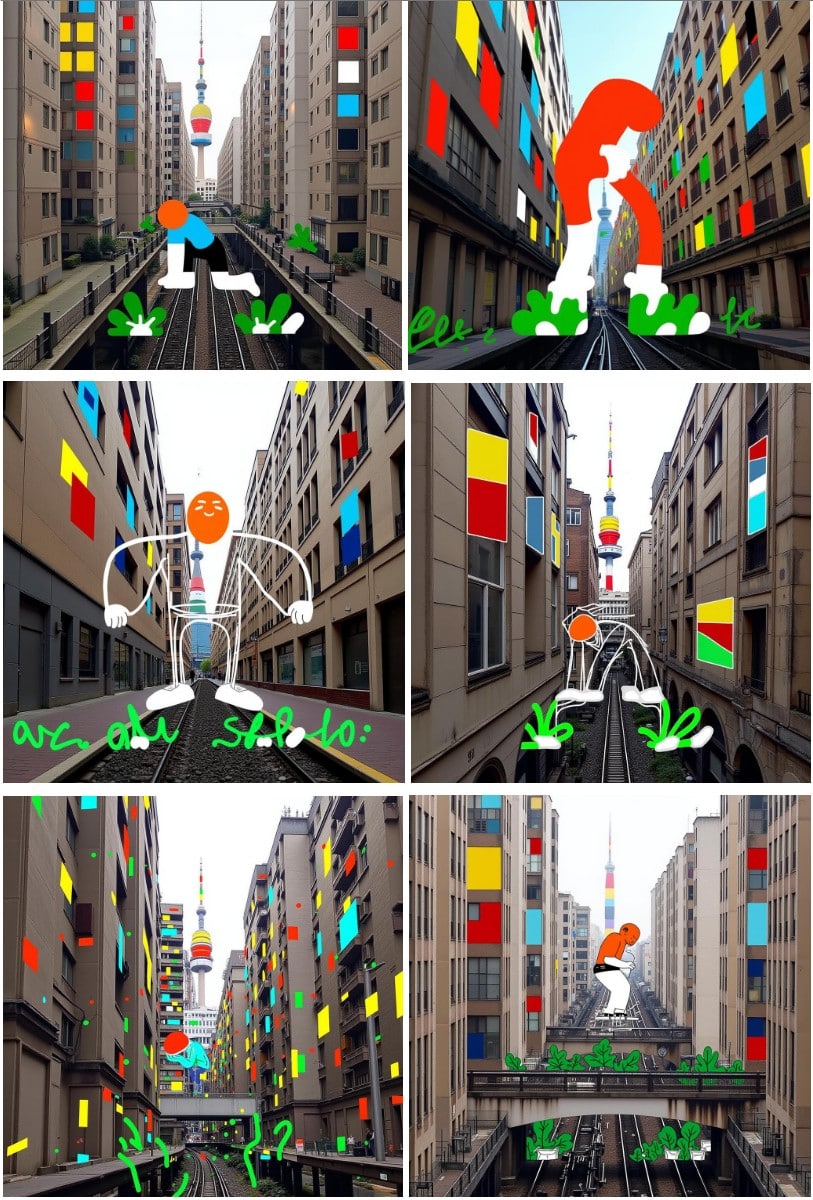

SDXL image with text inputs by Michael Walsh. MaCAD 2023/24

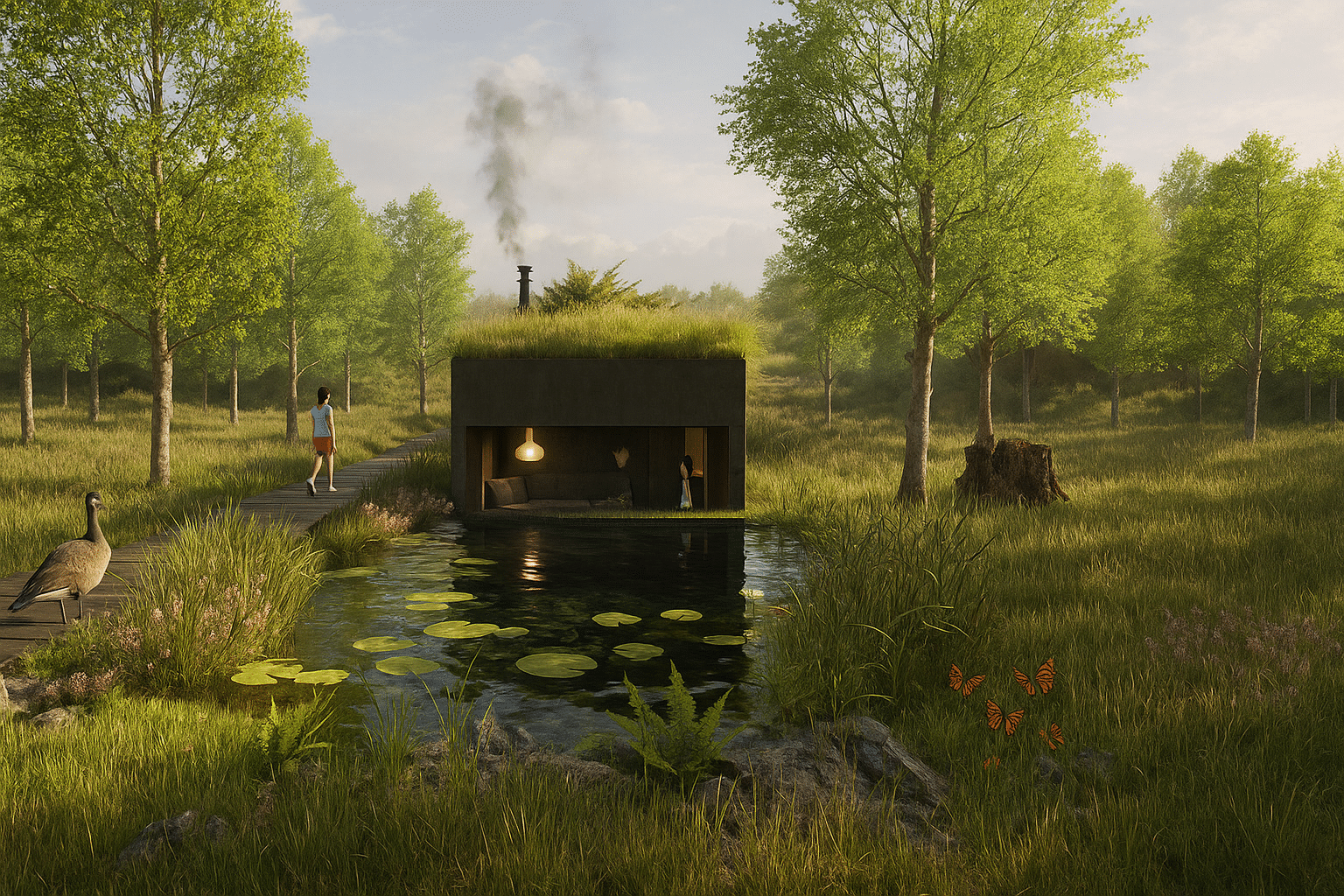

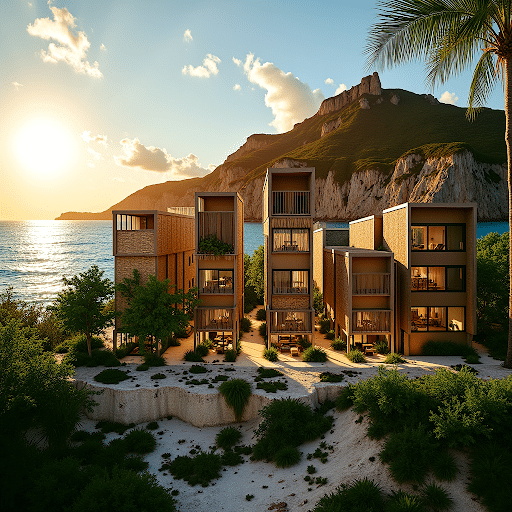

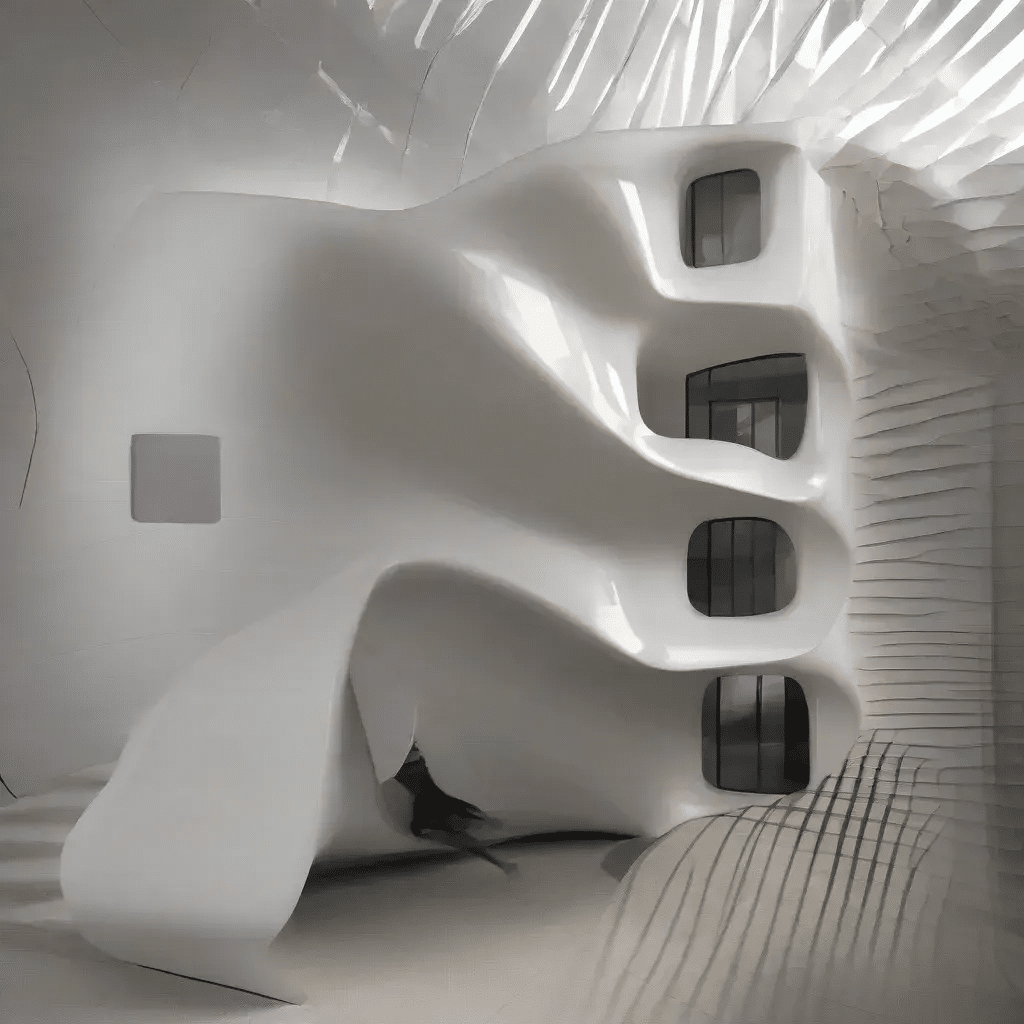

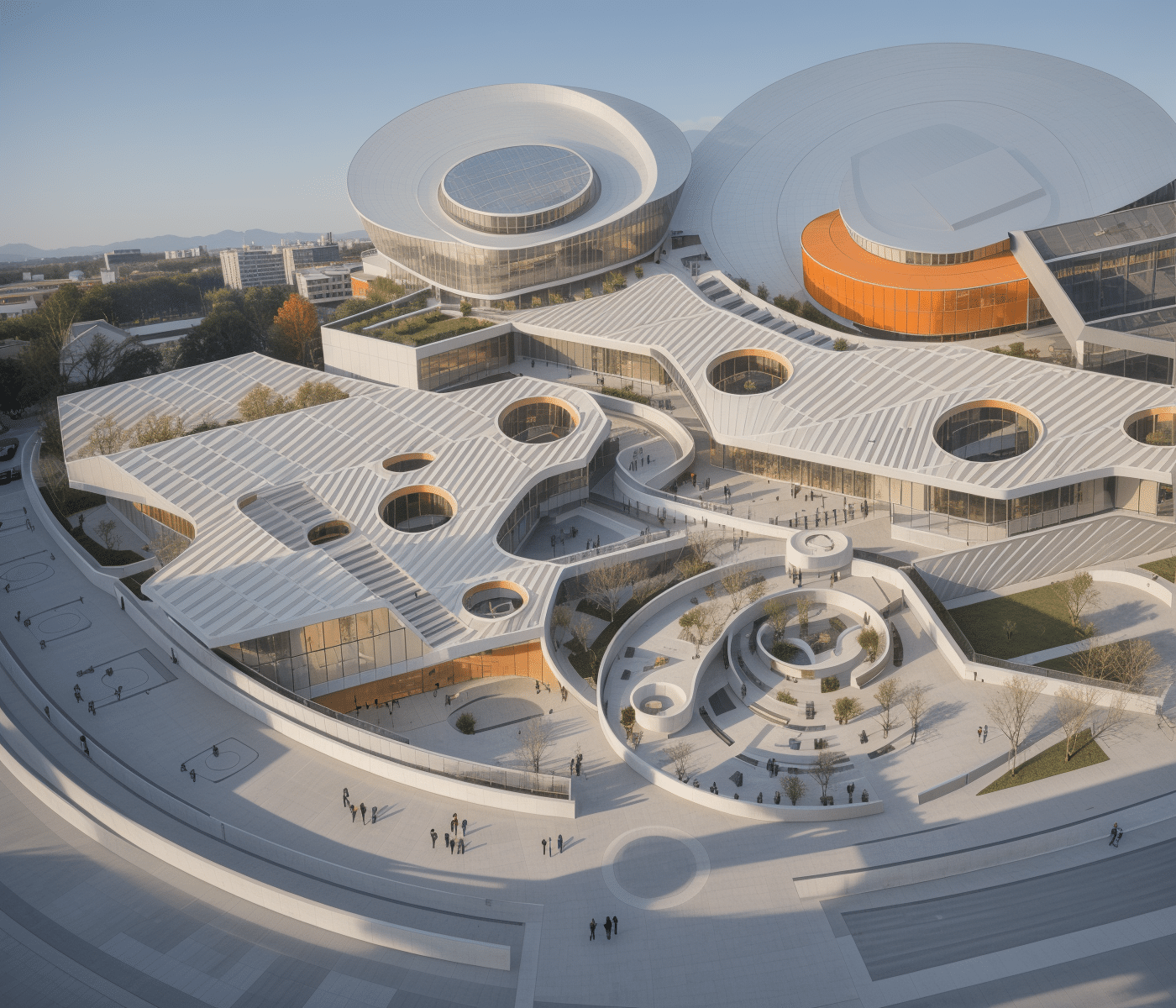

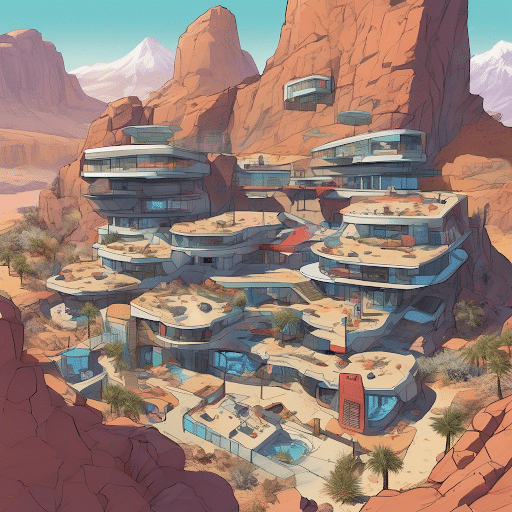

The Digital Tools for Generative AI seminar explores practical uses of image generation in the Architecture, Engineering, and Construction (AEC) industry. Integrating these tools into design practice changes how established workflows operate: architects, engineers, and designers can use generative AI to explore more design options, streamline repetitive processes, and approach complex challenges in new ways.

Students will work with state-of-the-art image generation and editing techniques based on generative models. They will learn how these models create high-quality images from text descriptions and how they apply to conceptual design and architectural visualization. The seminar covers generating visuals with existing models, fine-tuning them with Low-Rank Adaptation (LoRA) on custom datasets, and experimenting with model parameters to understand their influence on output image quality.
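To give a sense of why LoRA fine-tuning is practical on custom datasets, here is a minimal sketch of the parameter arithmetic. LoRA keeps a pretrained weight matrix frozen and trains two small matrices whose product has rank `rank`. The function name and the layer sizes below are illustrative assumptions, not values taken from the seminar or from any specific model.

```python
def lora_param_counts(d_out, d_in, rank):
    """Compare trainable parameters for one weight matrix.

    Full fine-tuning updates all d_out * d_in entries.
    LoRA trains B (d_out x rank) and A (rank x d_in) instead.
    """
    full = d_out * d_in
    lora = rank * (d_out + d_in)
    return full, lora


# Hypothetical layer size and rank, chosen only for illustration.
full, lora = lora_param_counts(d_out=1024, d_in=1024, rank=8)
print(f"full fine-tuning: {full} parameters")
print(f"LoRA (rank 8):    {lora} parameters")
print(f"fraction trained: {lora / full:.4f}")
```

For this hypothetical layer, LoRA trains 16,384 parameters instead of 1,048,576, which is under 2% of the original matrix.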

By the end of the course, students will integrate their results into interactive applications using Gradio, enabling seamless exploration and presentation of their AI-generated visuals.

Learning Objectives

- Learn about the history of machine learning for image generation.

- Understand key concepts of the image generation process, including embeddings, latent space, network architecture, denoising, sampling, conditional generation, guidance, training, and fine-tuning.

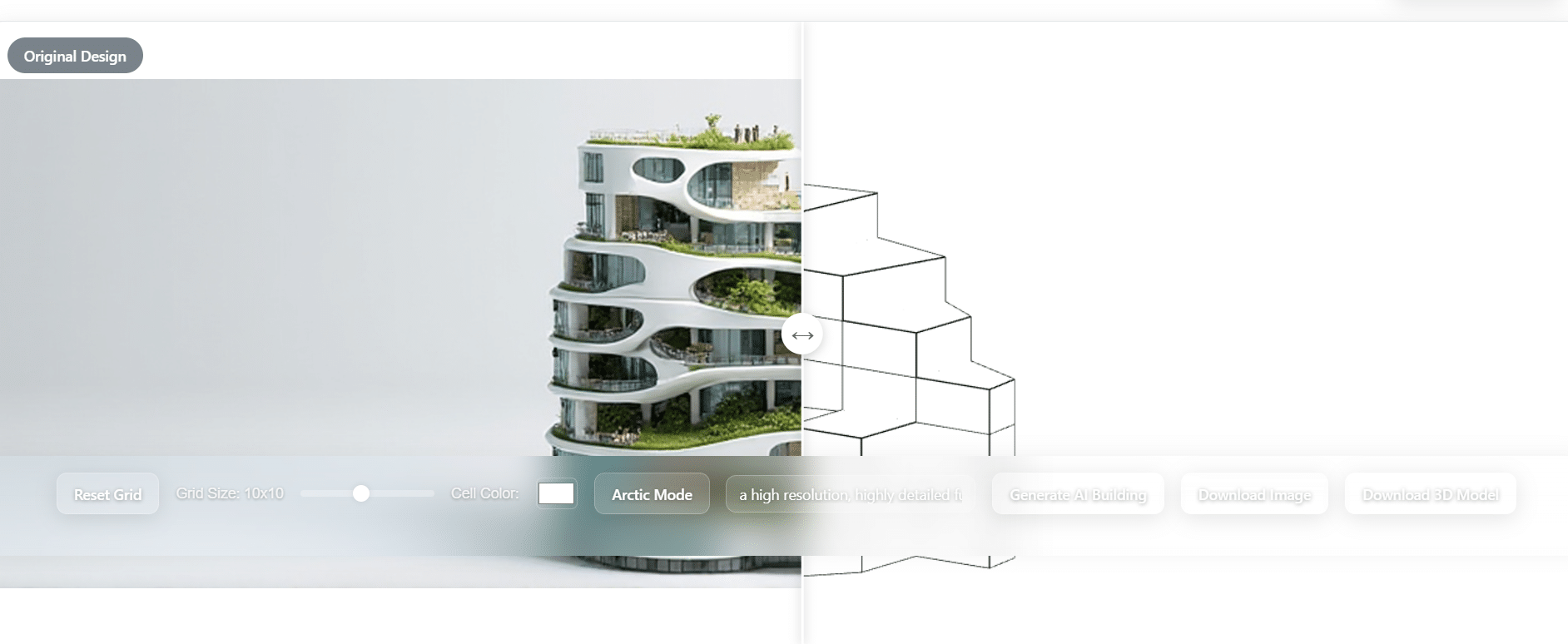

- Generate high-quality images from both text prompts and image inputs.

- Edit and expand images while maintaining stylistic coherence.

- Efficiently fine-tune models using custom datasets.

- Control image generation with additional inputs (sketches, edges, and depth maps).

- Utilize batch prompting strategies to iterate on images with a structured approach.

- Create interfaces to present and interact with generative workflows.
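As one possible reading of the batch prompting objective above, the sketch below expands a prompt template into every combination of a few controlled variables, so that images can be compared along one axis at a time. The helper name `build_prompt_batch`, the template, and the option values are hypothetical and serve only as an illustration. The resulting prompts could then be passed to whichever image generation model is in use.

```python
from itertools import product


def build_prompt_batch(template, options):
    """Return one prompt per combination of the option values.

    `template` uses str.format placeholders whose names match the
    keys of `options`; each key maps to a list of alternatives.
    """
    keys = list(options)
    prompts = []
    for values in product(*(options[key] for key in keys)):
        prompts.append(template.format(**dict(zip(keys, values))))
    return prompts


# Illustrative template and variables (assumptions, not course material).
template = "{building} in a {style} style, {lighting}, architectural rendering"
options = {
    "building": ["timber pavilion", "concrete library"],
    "style": ["brutalist", "parametric"],
    "lighting": ["golden hour", "overcast sky"],
}

prompts = build_prompt_batch(template, options)
for index, prompt in enumerate(prompts):
    print(f"{index:02d}: {prompt}")
```

Because the combinations come out in a fixed order, each generated image can be traced back to the exact variable values that produced it.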

KEYWORDS

image generation, text-to-image, image-to-image, generative flow and diffusion models, ControlNet, low-rank adaptation (LoRA), interfaces, Python, Google Colab, ComfyUI, Gradio.