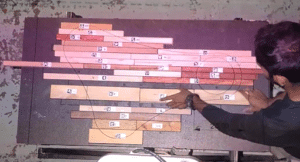

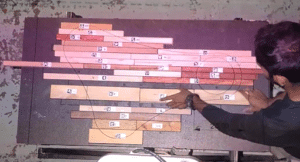

Interactive Projection is a hands-on seminar where students explore human-in-the-loop fabrication using dynamic projection and sensing. Participants will design interactive systems that combine projectors and sensors (e.g., depth cameras, motion trackers) to guide users through physical tasks or immersive experiences. The focus is on creating visual symbols, rule-based feedback, and real-time adaptations—projecting instructions, corrections, or dynamic visuals that respond to user actions. Students will prototype workflows where sensors validate interactions and trigger projected cues, blending digital precision with tangible engagement. The seminar encourages experimentation with tools like TouchDesigner, Unity, or custom code, though no prior expertise is required. Projects could range from practical fabrication aids (e.g., woodworking guides, interactive manuals) to artistic installations or collaborative games. By the end, attendees will have built a functional system that rethinks how projection can mediate between humans and machines—enhancing creativity, accuracy, and play in making processes.

Learning Objectives

Through prototyping and critique, students will leave with a framework for designing interactive projection as a mediating tool between humans and machines.

- Conceptualize & Design

- Integrate Sensing & Projection

- Prototype Human-in-the-Loop Workflows

- Finite State Machines & Behavior Trees
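
As a rough illustration of how a finite state machine can structure a workflow in which sensors validate interactions and trigger projected cues, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the `Step` states, the cue texts in `CUES`, the 5-degree tolerance, and the `(part_detected, angle_error_deg)` readings, which stand in for whatever a depth camera or tracker would actually report. It is not tied to TouchDesigner, Unity, or any specific sensor API.

```python
from enum import Enum, auto


class Step(Enum):
    """Hypothetical states of a single guided assembly step."""
    PLACE_PART = auto()
    ALIGN_PART = auto()
    DONE = auto()


# Hypothetical cue text a projector could display in each state.
CUES = {
    Step.PLACE_PART: "Place the part inside the outline",
    Step.ALIGN_PART: "Rotate the part to match the arrow",
    Step.DONE: "Step complete",
}


def next_step(step, part_detected, angle_error_deg, tolerance_deg=5.0):
    """Return the next state given one simulated sensor reading."""
    if step is Step.PLACE_PART:
        return Step.ALIGN_PART if part_detected else Step.PLACE_PART
    if step is Step.ALIGN_PART:
        if not part_detected:
            return Step.PLACE_PART  # part was removed, so start over
        if abs(angle_error_deg) <= tolerance_deg:
            return Step.DONE
        return Step.ALIGN_PART
    return Step.DONE  # DONE is terminal


def run(readings):
    """Feed readings through the machine; collect each cue that gets shown."""
    step = Step.PLACE_PART
    shown = [CUES[step]]
    for part_detected, angle_error_deg in readings:
        new_step = next_step(step, part_detected, angle_error_deg)
        if new_step is not step:
            shown.append(CUES[new_step])  # state changed: project a new cue
        step = new_step
    return step, shown


if __name__ == "__main__":
    # Simulated readings: no part, part badly rotated, closer, within tolerance.
    readings = [(False, 0.0), (True, 30.0), (True, 12.0), (True, 3.0)]
    final_step, shown = run(readings)
    for cue in shown:
        print(cue)
```

In a real prototype, the simulated readings would be replaced by live sensor data and the cue strings by projected graphics; a behavior tree could express the same logic when steps need to be composed or prioritized.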