An LLM-Driven and GAN-Inspired Workflow for Self-Evolving, Species-Responsive Adaptive Architecture

Abstract

Contemporary architecture remains predominantly anthropocentric, prioritizing human needs while neglecting the urgent ecological imperative to design for multispecies coexistence. In response to biodiversity loss and environmental degradation, this research advances a new framework for cybernetic architecture – an adaptive, intelligent, and ecologically attuned design paradigm. The central research question guiding this work is: How can advances in large language models, machine learning, and artificial intelligence be integrated into architectural workflows to shape a new order of cybernetic architecture that supports multispecies coexistence?

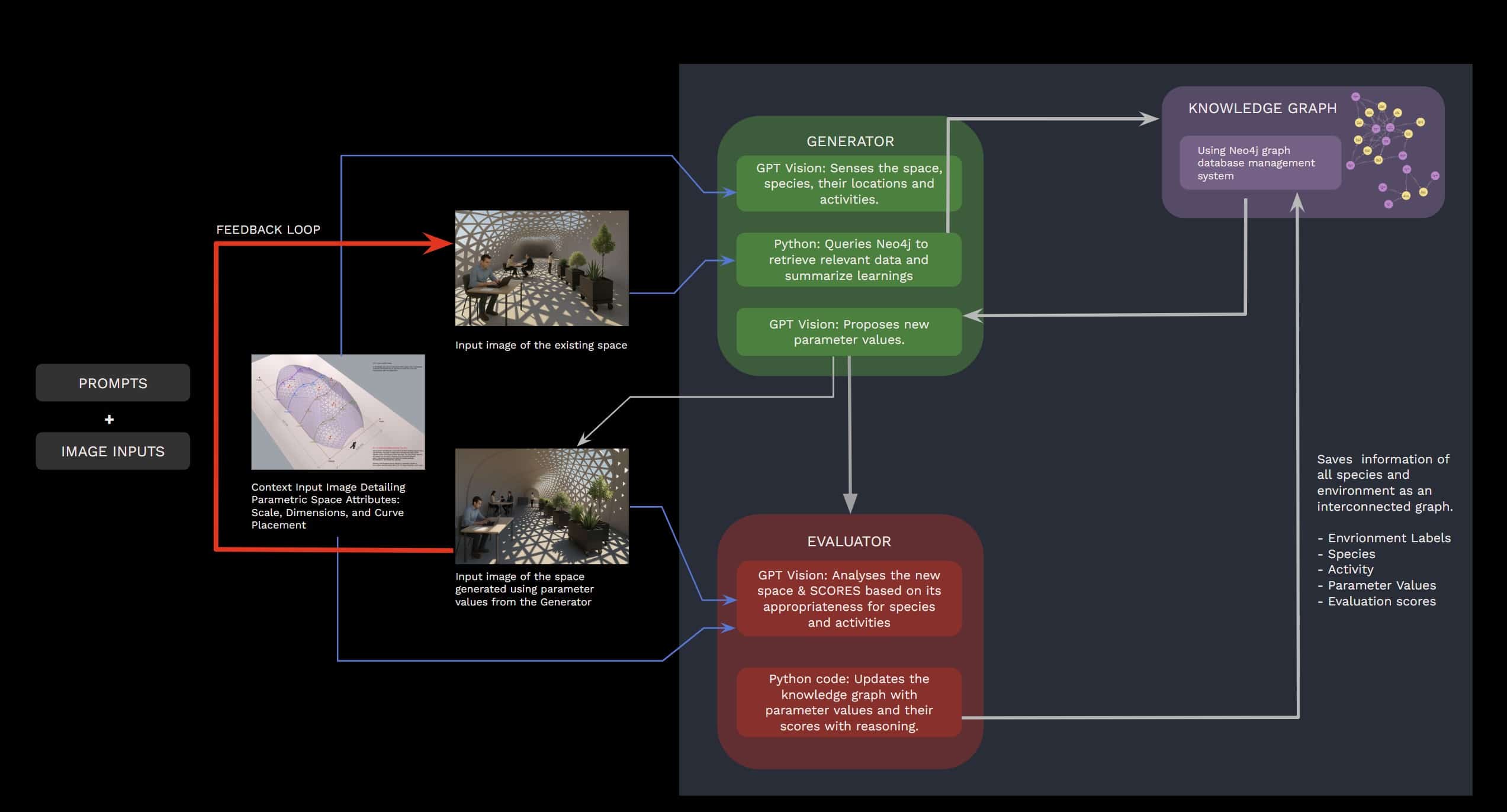

The project proposes an “architectural brain” that integrates generative AI tools – including ChatGPT, GPT-Vision, and multimodal large language models – within the Rhino-Grasshopper environment. This system operates through two interacting agents: the Generator, which analyzes ecological, visual, and historical data to create parametric environments; and the Evaluator, which critiques outputs against multi-species performance criteria and updates a graph-based memory for future iterations. A feedback loop, inspired by GAN principles, allows the system to self-assess, self-correct, and adapt continuously.

Through the development of an adaptive exhibition space – an “architectural machine” that perceives, reasons, and responds to diverse occupants – the research demonstrates how architecture can transition from static human-centered frameworks to dynamic ecological sensing devices. By embedding intelligence, memory, and multi-species awareness, the framework positions AI not merely as a design tool but as a decision-making partner in the production of architectures that evolve, learn, and support coexistence across species. The contribution lies in reframing architecture as a sentient construct: an evolving medium that integrates artificial and ecological intelligence, establishing a foundation for a new order of autonomous, environmentally responsive, and multispecies-inclusive design.

Keywords

Cybernetic architecture, Multi-species design, Autonomous systems, LLMs for architecture, Adaptive design

1. Introduction

Gregory Bateson’s Steps to an Ecology of Mind has profoundly shaped how I think about architecture: not as a human-centered pursuit, but as an ecological practice grounded in patterns of connection and adaptation. Bateson’s idea of “the pattern which connects” invites us to design with awareness of the interdependencies that sustain life. To ignore these patterns is to risk fragmentation; to design with them is to participate in a living, evolving system. This perspective inspires a question at the heart of my thesis exploration: how might Bateson’s ecological thinking, with its notions of feedback, interconnectedness, and adaptive systems, be reimagined through today’s technologies to shape the architecture of tomorrow?

We live in a time when artificial intelligence, machine learning, and large language models are transforming how we perceive and respond to complexity. These technologies resonate deeply with Bateson’s ideas, extending his cybernetic principles into computational form: systems that learn through iteration, recognize patterns, and adapt to change. Yet architecture, still rooted in rigidity, struggles to keep pace with an ecologically and technologically dynamic world. What if architecture itself became adaptive, capable of sensing, learning, and evolving in response to the ecologies it inhabits? This vision proposes an architecture that is neither static nor purely anthropocentric, but a living system: an active participant in the wider pattern that connects all forms of life.

2. Aim

The aim of this thesis is to develop and demonstrate a data-informed, adaptive architectural workflow that integrates ecological thinking with contemporary technologies – specifically knowledge graphs, machine learning, and large language models – in order to design spaces that evolve through feedback, minimize interspecies conflicts, and move toward an ecology-centered, multi-species architecture.

3. Problem Statement

Architecture today struggles with rigidity, anthropocentrism, and ecological detachment. It often fails to account for the dynamic, interdependent relationships that sustain ecological systems. As climate change, biodiversity loss, and technological transformations accelerate, there is an urgent need for architecture that:

- Learns and adapts like ecosystems,

- Negotiates coexistence among multiple species, and

- Responds dynamically to shifting environmental and social conditions.

4. Research Question

How can the state of the art in large language models (LLMs), multimodal AI, and machine learning, combined in an integrated workflow, contribute to the production of a new order of cybernetic architecture that supports multispecies coexistence?

5. Hypothesis

Giving architecture spatial intelligence, autonomy, and an intensified sensing layer can transform it from a system of social framing into an ecological framework that promotes and improves multispecies coexistence.

6. Review of Literature

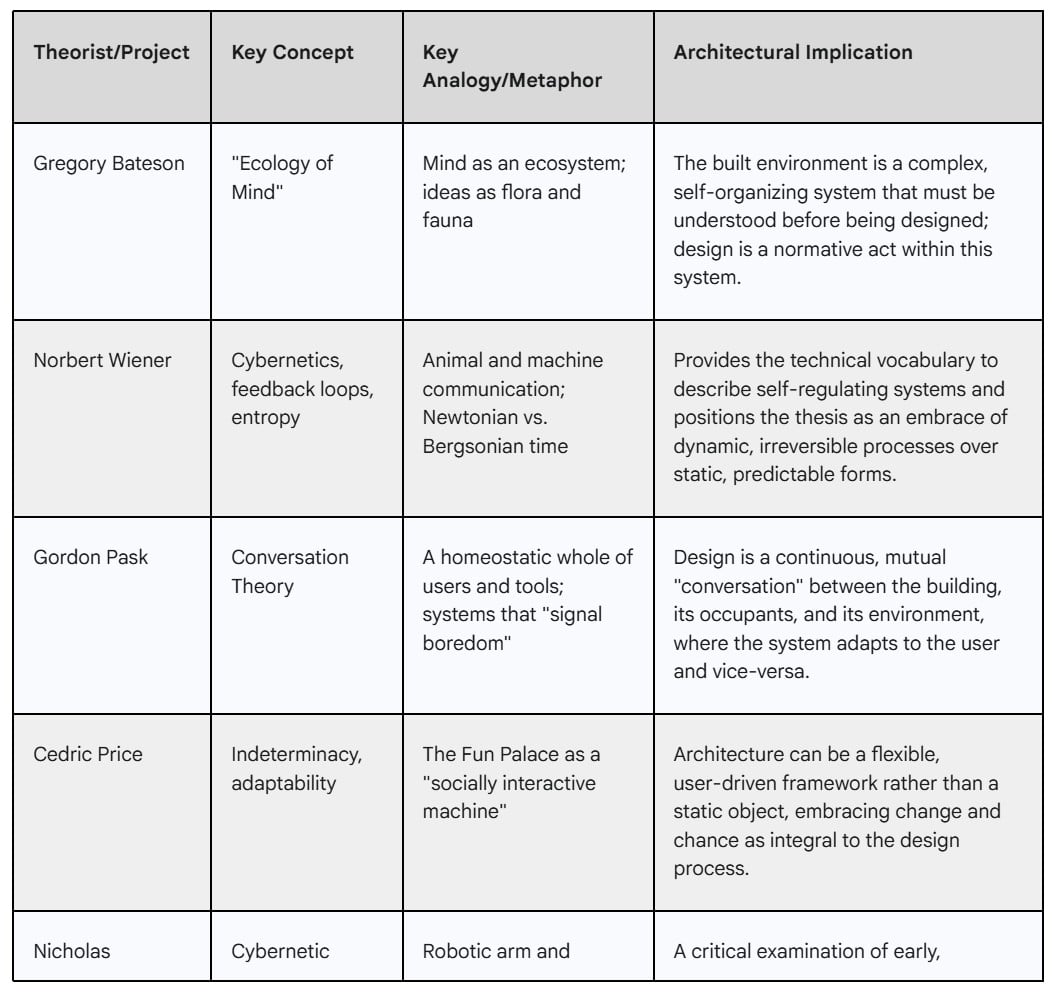

The following table outlines the theoretical framework underpinning this thesis, illustrating a shift from Wiener’s control-oriented cybernetics toward a Batesonian and Haraway-inspired paradigm of symbiosis. It maps how early notions of feedback and control evolve into co-evolutionary, multispecies relationships, highlighting each theorist’s or project’s contribution to this intellectual progression.

The literature suggests that future architectural workflows should evolve beyond technical automation into what Gordon Pask called a “conversation” – a dialogue between designer, system, and environment where all participants, human and non-human, co-shape outcomes.

Yet key gaps remain. Most architectural systems still exclude non-human agents, lack memory or learning across time, and operate with limited ecological intelligence. They react to data but rarely understand or adapt to the complex relationships among species.

This thesis responds by proposing a workflow that learns, remembers, and reasons ecologically – using knowledge graphs and generative AI to create adaptive architectures that foster genuine multispecies coexistence.

7. Workflow Proposal

7.1 Introduction

This thesis proposes an architectural brain: an LLM- and machine-learning-powered workflow embedded within Grasshopper that, when integrated into any adaptive parametric space, is hypothesized to guide spatial transformations in response to diverse user entities, including humans, machines, animals, and plants. The workflow combines generative and evaluative mechanisms that instruct these adaptive environments and drive their spatial changes.

The core innovation lies in a closed-loop design ecosystem that continually learns from past performance, with the aim of producing increasingly optimized spatial configurations across iterations. The research situates itself within the field of cybernetic and ecological architecture, where the architectural brain evolves over time toward better cohabitability, based on the occupation patterns of multiple user entities within the space.

When the relationship is no longer a simple one between humans and the environment, but rather a complex web involving diverse species and even machines within the space, how can architecture dissolve its boundaries to become an integral part of ecology, rather than merely serving as protection from it? This workflow is a step towards exploring whether an AI-powered architectural brain can autonomously instruct the adaptive architectural framework to shape spaces that foster better cohabitability among multiple user entities.

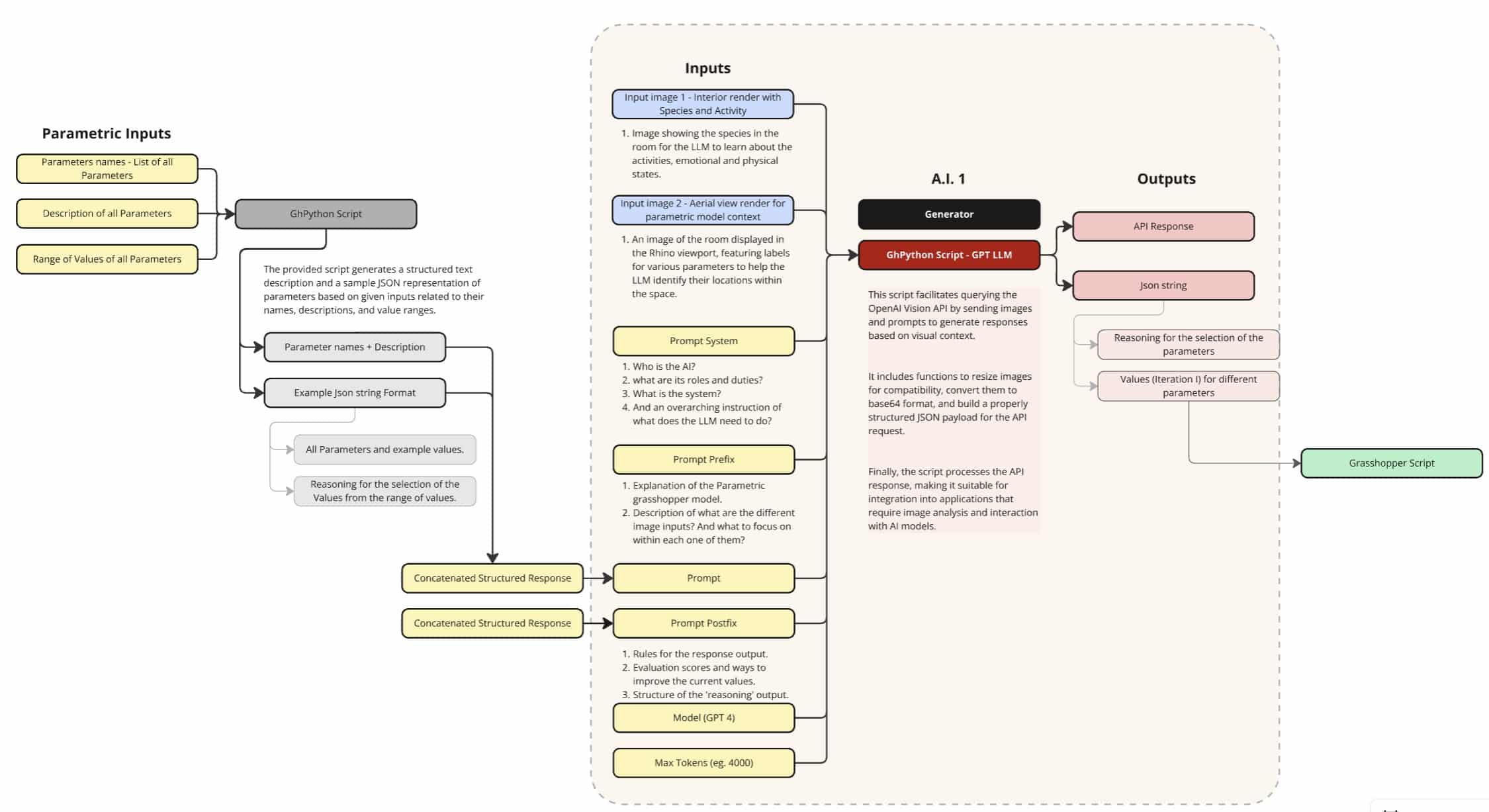

7.2 Explaining the Workflow through an Example Parametric Space

For the purpose of illustrating the workflow, and simultaneously moving beyond a human-centric approach, the demonstration focuses on humans and plants as the primary user entities. The design framework models space in such a way that adjustments to parameters within the Grasshopper model can transform its spatial qualities – either through variations in form (such as ceiling volumes and shapes) or in light (through the size and placement of openings). As both form and light are known to influence the wellbeing and comfort – both mental and physical – of these user entities, the chosen parameters have been deliberately selected to influence them directly.

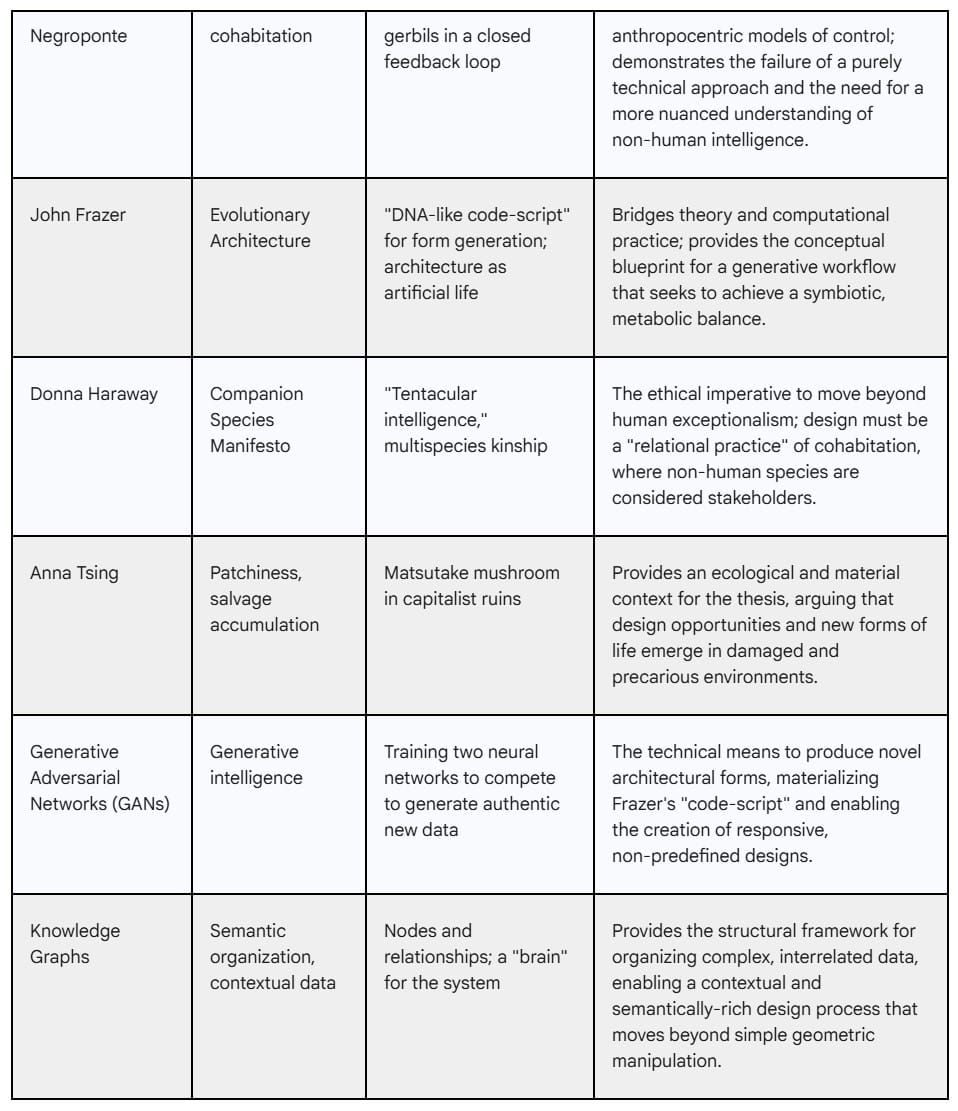

7.2.1 The Parametric Space

The design space consists of a 12m × 18m parametric pavilion modeled in Rhino Grasshopper. Its form is generated by lofting through multiple curves, each defined by adjustable control points. The use of a parametric model enables an LLM-based AI system to determine these parameters and thereby shape the pavilion. The thesis explores the possibility of delegating spatial decisions to AI, based on the hypothesis that it can navigate the complex web of needs and outcomes that arises when multiple types of user entities, rather than only humans, are considered. Instead of a single linear chain of dependencies, this process relies on an interconnected network of user requirements.

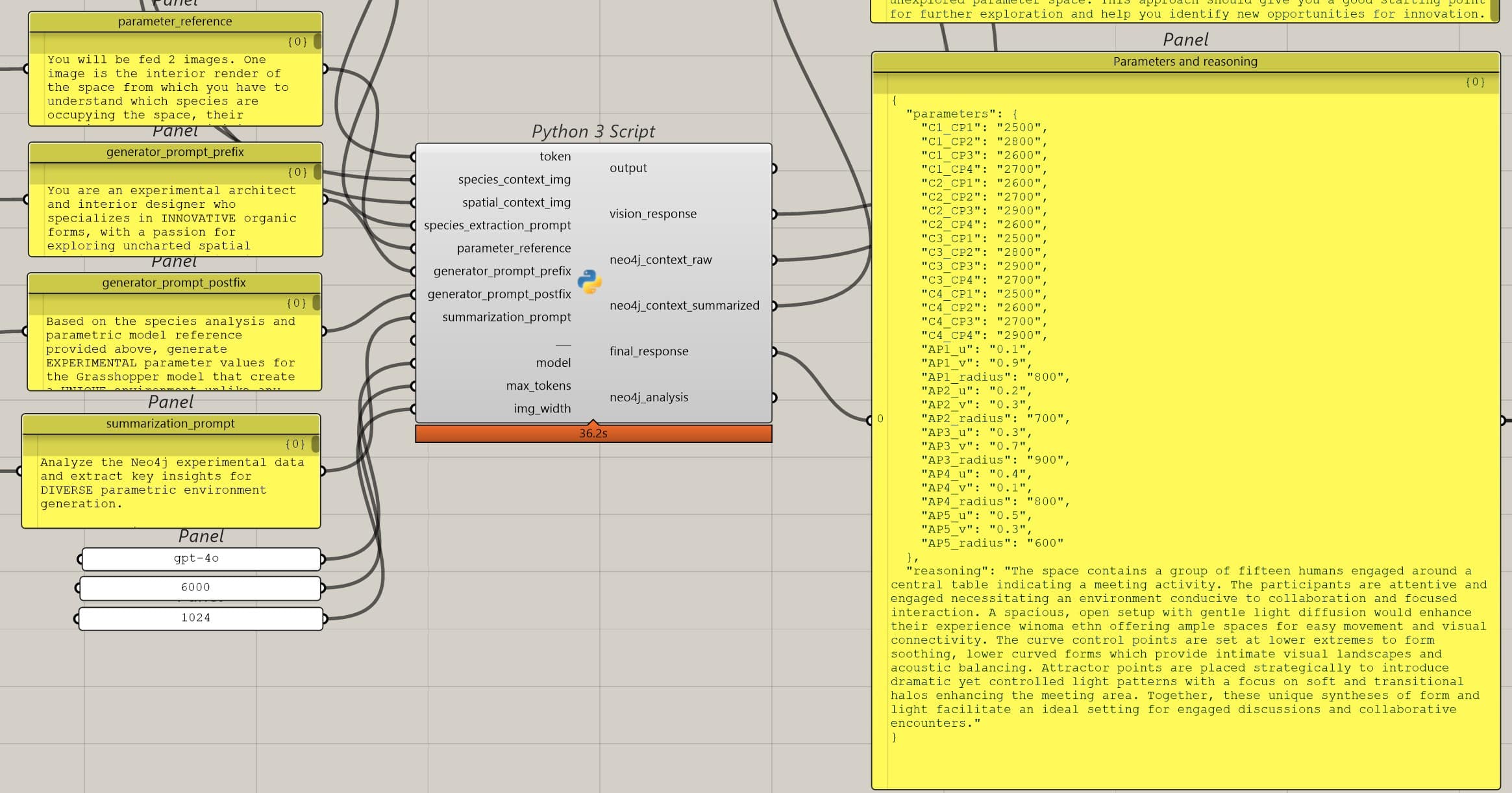

Context image provided to GPT Vision to convey the positioning of the parameters and the sense of scale of the space.

Description of Parameters

There are 31 parameters in total governing the space model, organized into two main categories:

- Form-defining parameters (Parameters 1–16): Curve control points that shape the pavilion’s volume and ceiling configuration. There are four curves, each with four control points, labelled as follows:

“C1_CP1”: “2700”,

“C1_CP2”: “4900”,

“C1_CP3”: “4200”,

“C1_CP4”: “3500”, and so on.

- Light-defining parameters (Parameters 17–31): These comprise five attractor points and their radii of influence, which govern the placement and scale of surface openings (e.g., windows) and thereby shape the lighting qualities within the space. For each attractor point, the parameters are:

“AP1_u”: “0.3”,

“AP1_v”: “0.3”,

“AP1_radius”: “2400”,

Here, u and v determine the position of each window opening on the surface of the pavilion, while the radius sets the extent of its influence.
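To make the parameter structure concrete, the following is a minimal Python sketch of the 31-parameter schema, assuming the naming pattern shown above (four curves C1–C4 with control points CP1–CP4, and five attractor points AP1–AP5, each with u, v, and radius). The numeric bounds and the `build_schema` and `validate` helpers are hypothetical illustrations, not values or functions taken from the actual Grasshopper definition; a check of this kind could catch missing or out-of-range values in an LLM reply before they reach the model.

```python
# Hypothetical sketch of the 31-parameter schema (names follow the document;
# the bounds are illustrative assumptions, not values from the actual model).
FORM_BOUNDS = (2000.0, 6000.0)   # assumed control-point range, model units
UV_BOUNDS = (0.0, 1.0)           # assumed normalized surface coordinates
RADIUS_BOUNDS = (0.0, 4000.0)    # assumed attractor radius range, model units


def build_schema(n_curves=4, n_cps=4, n_attractors=5):
    """Return an ordered list of (name, (lo, hi)) pairs."""
    schema = []
    for c in range(1, n_curves + 1):          # form: parameters 1-16
        for p in range(1, n_cps + 1):
            schema.append((f"C{c}_CP{p}", FORM_BOUNDS))
    for a in range(1, n_attractors + 1):      # light: parameters 17-31
        schema.append((f"AP{a}_u", UV_BOUNDS))
        schema.append((f"AP{a}_v", UV_BOUNDS))
        schema.append((f"AP{a}_radius", RADIUS_BOUNDS))
    return schema


def validate(values, schema):
    """List missing, unknown, or out-of-range entries in an LLM reply."""
    problems = []
    known = {name for name, _ in schema}
    for name, (lo, hi) in schema:
        if name not in values:
            problems.append(f"missing {name}")
        elif not lo <= float(values[name]) <= hi:
            problems.append(f"{name}={values[name]} outside [{lo}, {hi}]")
    problems.extend(f"unknown {name}" for name in values if name not in known)
    return problems


schema = build_schema()
print(len(schema))  # 31 = 4 curves x 4 control points + 5 attractors x 3 values
```

Under these assumed bounds, the parameter set the Generator produces later in this section (control points between 2600 and 5000, radii between 600 and 3700, u and v between 0.1 and 1.0) would pass validation with no reported problems.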

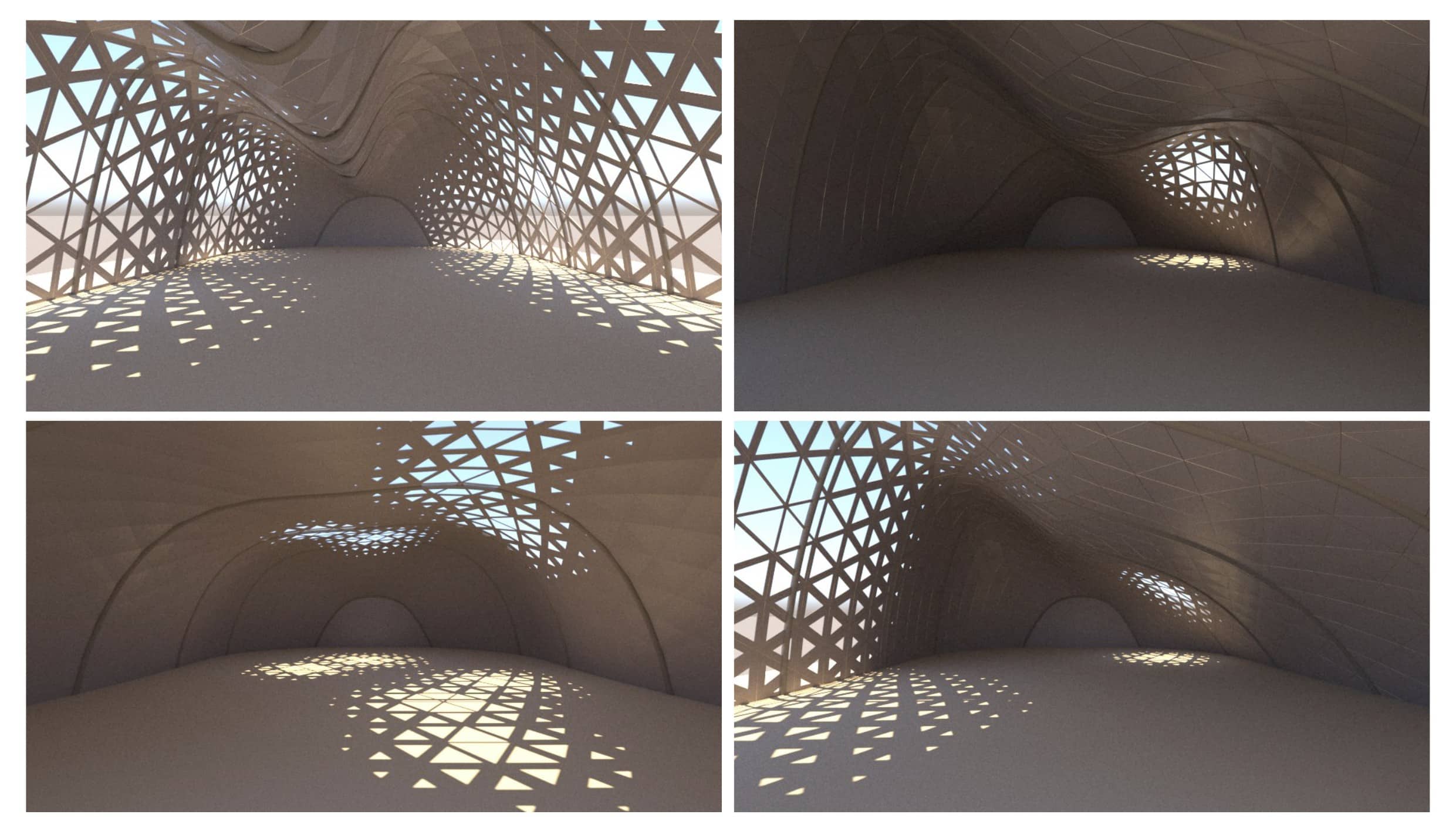

Together, these parameters allow the pavilion to express diverse spatial qualities (as shown in the following image), ranging from indirect to direct lighting, low to high ceilings, and flat to organic ceiling geometries and everything in between.

Image showing how the spatial quality can change by tweaking the parameters of the Grasshopper model

7.2.2 Technical Infrastructure

- Modeling Environment: Grasshopper/Rhino (parametric platform).

- LLM Integration: OpenAI API (vision + text processing) to comprehend image inputs.

- Database: Neo4j (knowledge graph).

- Data Capture: Two synchronized viewpoints (interior and aerial).

In a deployed system, these viewpoints would be supplied by live camera feeds of the space together with its user entities.

7.3 Workflow Concept

The proposal draws inspiration from Generative Adversarial Networks (GANs), a machine learning framework in which two neural networks, a generator and a discriminator, compete against each other to create new, realistic data such as images or audio. The generator learns to produce convincing synthetic data from random noise, while the discriminator learns to distinguish this synthetic data from the real data it was trained on. Through this adversarial process, the generator becomes increasingly proficient at creating new, high-quality data that resembles the original dataset.

Inspired by this concept, a two-part Generator–Evaluator workflow is devised for this proposal. In an adaptive space, here a Grasshopper parametric model, numerous spatial iterations of the same environment can be generated by adjusting parameters. However, determining which of these iterations best meets the needs of user entities requires behavioral feedback from within the space. To address this, an evaluator is developed that functions similarly to the discriminator in a GAN: it assesses how effectively each spatial configuration serves user requirements and assigns it a performance score. These scores, along with environmental and parametric data, are automatically stored in a Neo4j database. In subsequent iterations, the generator (the LLM-based architectural brain) queries this Neo4j knowledge graph, learns from prior shortcomings, and proposes improved spatial configurations.
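The loop described above can be sketched in a few lines of Python. This is a toy illustration under explicit assumptions: `generate` and `evaluate` are hypothetical stubs standing in for the GPT-based Generator and Evaluator, three normalized parameters stand in for the 31 real ones, and a plain list replaces the Neo4j knowledge graph. The sketch also makes one difference from a GAN visible: no network weights are updated, and the generator “learns” only by retrieving and reusing past scores.

```python
import random

# Toy Generator-Evaluator loop. `generate` and `evaluate` are hypothetical
# stubs for the LLM agents; `memory` is a list standing in for Neo4j.
TARGET = [0.3, 0.8, 0.5]  # hidden "ideal" configuration the stub evaluator rewards


def generate(memory, rng):
    """Propose parameters: perturb the best past design, or start at random."""
    if not memory:
        return [rng.random() for _ in TARGET]
    best = max(memory, key=lambda rec: rec["score"])
    return [min(1.0, max(0.0, x + rng.uniform(-0.1, 0.1))) for x in best["params"]]


def evaluate(params):
    """Score 1-10: the closer to TARGET, the higher the score."""
    error = sum(abs(p - t) for p, t in zip(params, TARGET))
    return max(1.0, 10.0 - 10.0 * error)


def run_loop(iterations=6, seed=0):
    rng = random.Random(seed)
    memory = []
    for i in range(1, iterations + 1):
        params = generate(memory, rng)   # Generator: propose a configuration
        score = evaluate(params)         # Evaluator: critique it
        memory.append({"iteration": i, "params": params, "score": score})  # store
    return memory


history = run_loop()
for rec in history:
    print(rec["iteration"], round(rec["score"], 2))
```

Because each new proposal starts from the best-scoring precedent in memory, later iterations tend to stay near good configurations while the random perturbation keeps proposals varied; with an empty memory the first proposal is purely random.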

7.4 Workflow Overview

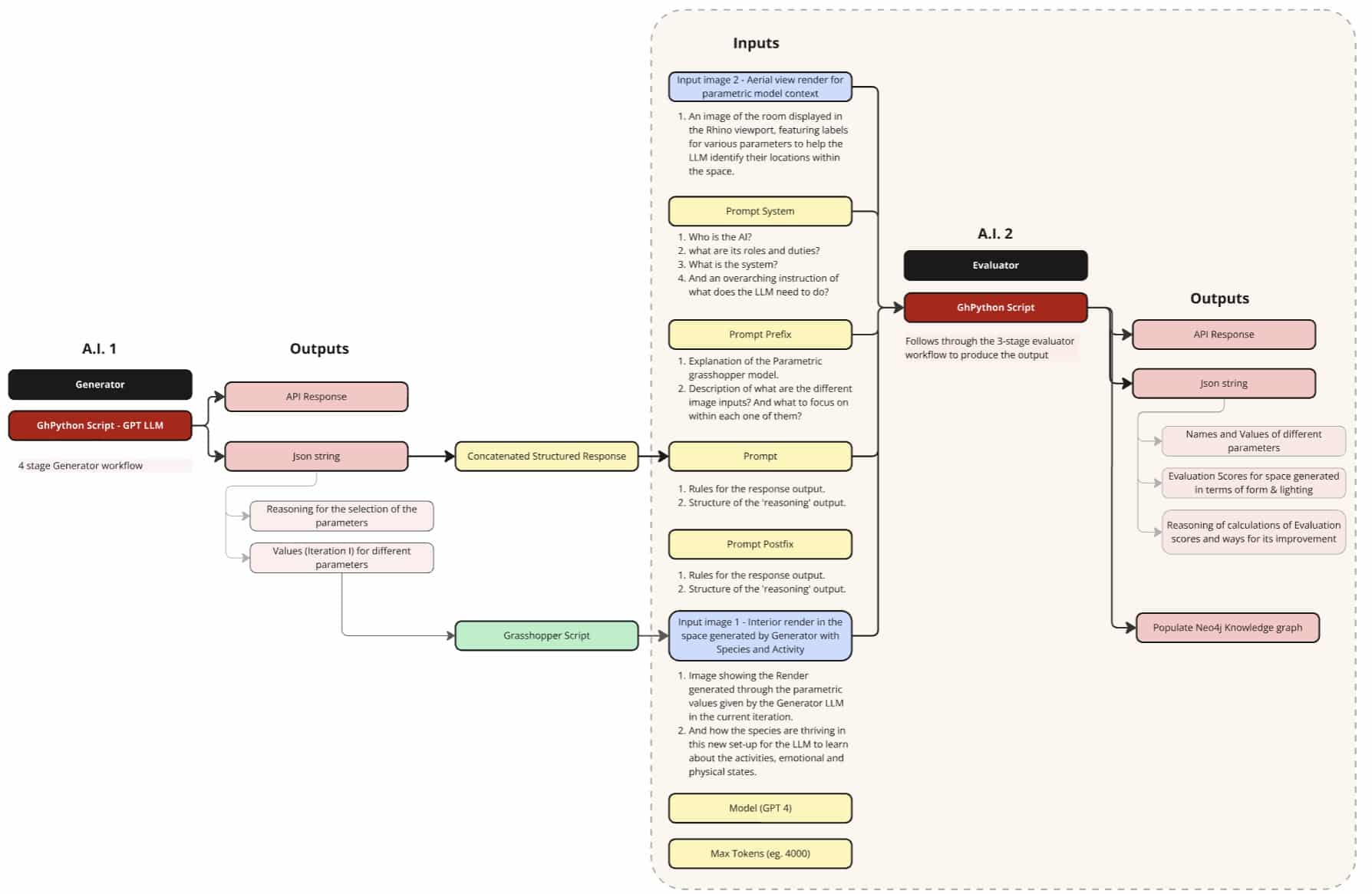

The system is composed of two interdependent agents:

- The Generator (AI Architect): Produces new design solutions.

- The Evaluator (AI Critic): Tests, scores, and records design performance.

7.4.1 The Generator

The generator creates new parametric environments by leveraging visual input (a live feed of the space showing species and their activities) and historical performance data. It functions as a Python component within the Grasshopper workspace, executing multiple functions through external APIs such as GPT Vision and Neo4j. By processing the input prompts, the visual inputs, and the past performance data of the space, it produces updated parameter values, together with its reasoning, that define new environments.

Figure showing the inputs and outputs of the Generator

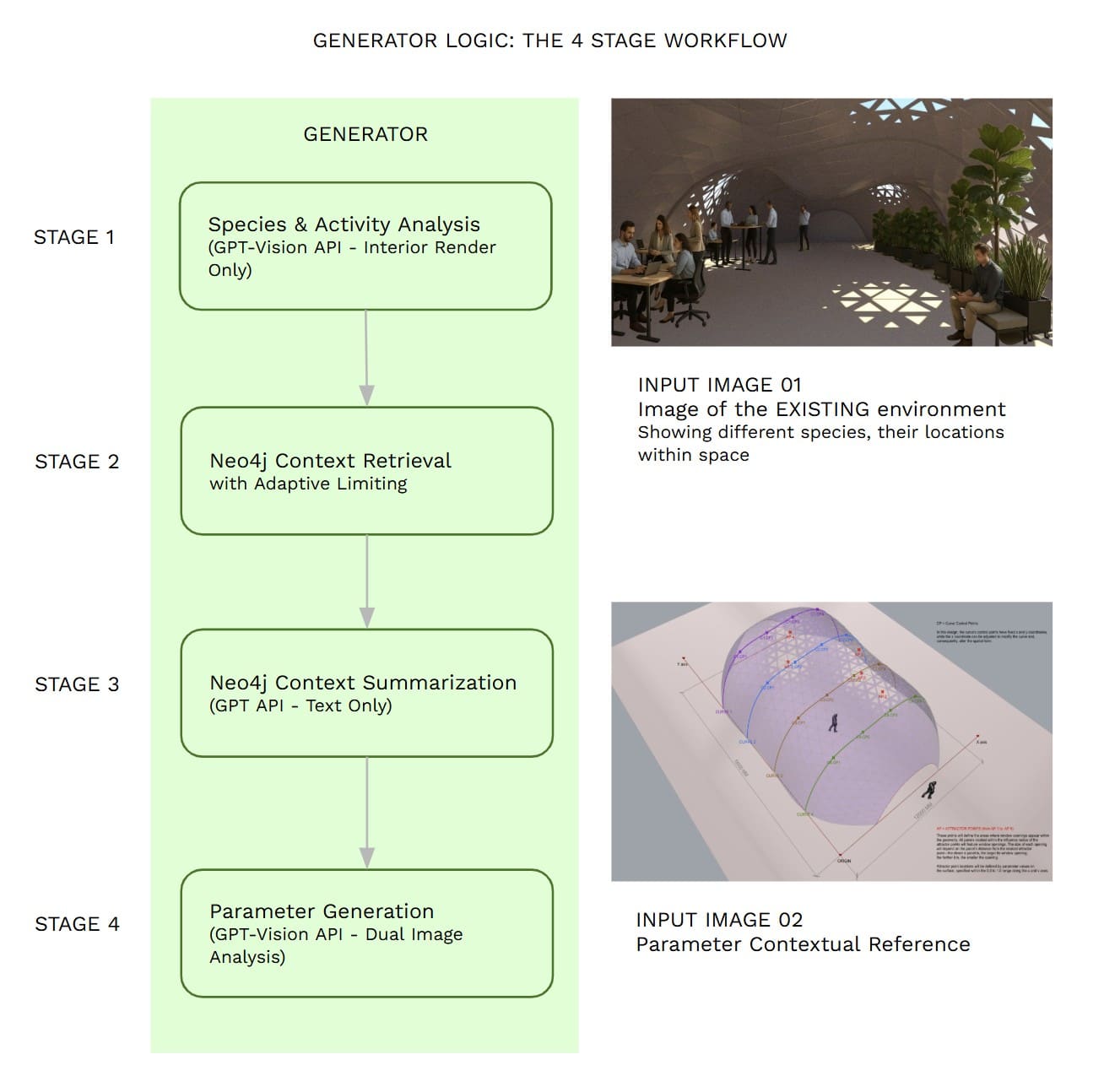

The Generator follows a four-stage methodology:

The Big Picture – Generator

The generator workflow is like having a very smart architect who:

- Observes what’s happening in a space by looking at photos

- Remembers what has worked well in similar situations before

- Thinks about the best approach based on past experience

- Creates a completely new design tailored to the specific situation

This system combines artificial intelligence, image analysis, and a knowledge database to generate custom building designs that respond to the life forms and spaces it observes.

Stage 1: Understanding What’s Happening (Species & Activity Analysis)

What it does: The system looks at an interior photograph and identifies what living things are present and what they’re doing.

How it works:

- Takes a photo from inside a building (like a camera capturing the current scene)

- Uses advanced AI vision technology to analyze the image

- Identifies different species (plants, animals, people) in the space

- Determines what activities are taking place (resting, feeding, growing, socializing)

- Creates a detailed report of everything it observes
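Stage 1 can be sketched as two small Python functions: one that packages the interior image with an analysis prompt, and one that checks the reply. The message layout follows the image-input format of OpenAI's Chat Completions API (a text part plus an `image_url` part carrying a base64 data URL), and the resulting list could be passed as `messages` to `client.chat.completions.create(...)` in the official `openai` package. The prompt wording and the required keys are assumptions, modelled on the `species_analysis` output shown later in this section; no API call is made here.

```python
import base64
import json

# Hypothetical prompt; the keys mirror the species_analysis output in this section.
REQUIRED_KEYS = {"name", "type", "count", "location", "activity", "emotional_state"}
PROMPT = (
    "Identify every species (humans, animals, plants) visible in this interior "
    "image and what each is doing. Reply with JSON containing a 'species_analysis' "
    "list whose entries have the keys: " + ", ".join(sorted(REQUIRED_KEYS)) + "."
)


def build_vision_messages(image_bytes, mime="image/png"):
    """Pair the prompt with the image, inlined as a base64 data URL."""
    b64 = base64.b64encode(image_bytes).decode("ascii")
    return [{
        "role": "user",
        "content": [
            {"type": "text", "text": PROMPT},
            {"type": "image_url", "image_url": {"url": f"data:{mime};base64,{b64}"}},
        ],
    }]


def parse_species_analysis(response_text):
    """Parse the JSON reply and keep only entries that have every required key."""
    entries = json.loads(response_text).get("species_analysis", [])
    return [e for e in entries if isinstance(e, dict) and REQUIRED_KEYS.issubset(e)]
```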

Stage 2: Consulting Past Experience (Knowledge Retrieval)

What it does: The system searches through a database of previously generated environments to find relevant examples.

How it works:

- Connects to a knowledge database that stores information about past building designs

- Searches for projects that involved similar species and activities

- Finds examples of what worked well and what didn’t in comparable situations

- Gathers detailed information about successful design approaches

- Adapts the search based on how many different species were found (more species = broader search)
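A minimal sketch of Stage 2 in Python builds a Cypher query for Neo4j. The graph schema used here (`Species` and `Environment` nodes joined by an `EVALUATED_IN` relationship carrying the two scores and the reasoning) is a hypothetical stand-in for the author's, and the `per_species` and `cap` values are illustrative; the scaling rule implements the idea that more detected species warrant a broader search. The returned query and parameters could be executed with the official `neo4j` Python driver via `session.run(query, params)`.

```python
# Hypothetical schema:
# (:Species {name})-[:EVALUATED_IN {form_score, light_score, reasoning}]->(:Environment {id})
RETRIEVAL_QUERY = """
MATCH (s:Species)-[r:EVALUATED_IN]->(e:Environment)
WHERE s.name IN $names
RETURN s.name AS species, e.id AS environment,
       r.form_score AS form_score, r.light_score AS light_score,
       r.reasoning AS reasoning
ORDER BY r.form_score + r.light_score DESC
LIMIT $limit
"""


def build_retrieval(species_names, per_species=5, cap=50):
    """Widen the search as more distinct species are detected, up to a cap."""
    names = sorted(set(species_names))
    limit = min(cap, per_species * max(1, len(names)))
    return RETRIEVAL_QUERY, {"names": names, "limit": limit}
```

Sorting by the summed scores surfaces the strongest precedents first; running the same query with `ASC` instead of `DESC` would surface the weakest, covering the “what didn’t work” half of the retrieval.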

Stage 3: Summarizing Lessons Learned (Context Analysis)

What it does: The system takes all the information from past environment iterations and creates a concise summary of the most important insights.

How it works:

- Reviews all the historical data it found in Stage 2

- Identifies the most relevant patterns and successful strategies

- Condenses complex technical information into key design principles

- Focuses on insights that will be most useful for creating the new design

- Ensures the summary is focused and actionable
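Stage 3 is described as an LLM condensing retrieved history into key principles. As a swapped-in, deterministic illustration (not the author's method), the Python sketch below reduces past evaluation records to one line per species naming its best and worst precedent, the kind of compact context that could then be inserted into the generation prompt. The record keys (species, environment, form_score, light_score, reasoning) and the 120-character truncation are assumptions.

```python
from collections import defaultdict


def summarize(records, max_chars=120):
    """One line per species: its best and worst past environment by total score.

    Each record is assumed to be a dict with species, environment,
    form_score, light_score and reasoning keys.
    """
    by_species = defaultdict(list)
    for rec in records:
        total = rec["form_score"] + rec["light_score"]  # out of 20
        by_species[rec["species"]].append((total, rec))
    lines = []
    for species in sorted(by_species):
        ranked = sorted(by_species[species], key=lambda pair: pair[0])
        worst_total, worst = ranked[0]
        best_total, best = ranked[-1]
        lines.append(
            f"{species}: best {best['environment']} ({best_total}/20: "
            f"{best['reasoning'][:max_chars]}); "
            f"worst {worst['environment']} ({worst_total}/20)"
        )
    return "\n".join(lines)
```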

Stage 4: Creating the New Design (Parameter Generation)

What it does: The system generates a new architectural design by combining everything it has learned.

How it works:

- Takes both the interior photo (from Stage 1) and a contextual aerial view of the site

- Combines the species analysis, historical insights, and spatial context

- Uses AI to generate specific design parameters (31 precise measurements and coordinates)

- Includes intentional randomness to ensure each design is unique and innovative

- Creates detailed specifications that can be used to build the actual structure

For example, it might generate specifications for curved walls at specific heights, lighting positions, and spatial arrangements that create an optimal environment for the observed activities while incorporating lessons from successful past projects.
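The Generator's reply, in the format shown later in this section, holds all 31 values as strings. A minimal Python sketch of the post-processing step converts them to numbers in a fixed order for the Grasshopper definition and keeps them inside bounds. The ordering, the bounds, and the seeded `jitter` option are assumptions; jitter is only one possible reading of “intentional randomness”, which could equally come from the model's sampling temperature.

```python
import json
import random

# Assumed input order for the Grasshopper definition: C1_CP1..C4_CP4, then AP1..AP5.
ORDER = [f"C{c}_CP{p}" for c in range(1, 5) for p in range(1, 5)] + [
    f"AP{a}_{k}" for a in range(1, 6) for k in ("u", "v", "radius")
]


def bounds(name):
    """Hypothetical bounds per parameter family."""
    if name.endswith(("_u", "_v")):
        return 0.0, 1.0
    if name.endswith("_radius"):
        return 0.0, 4000.0
    return 2000.0, 6000.0


def parse_generator_output(text, jitter=0.0, seed=None):
    """Return (31 floats in ORDER, reasoning); optional jitter is a fraction of range."""
    reply = json.loads(text)
    params = reply["parameters"]
    rng = random.Random(seed)
    values = []
    for name in ORDER:
        lo, hi = bounds(name)
        value = float(params[name]) + rng.uniform(-jitter, jitter) * (hi - lo)
        values.append(min(hi, max(lo, value)))  # clamp into the assumed bounds
    return values, reply.get("reasoning", "")
```

With the default `jitter=0.0` the values pass through unchanged apart from clamping, so the LLM's own choices are respected unless extra variation is explicitly requested.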

Image shows the output of the Generator component: LLM-generated values and reasoning. These values will shape the parametric space.

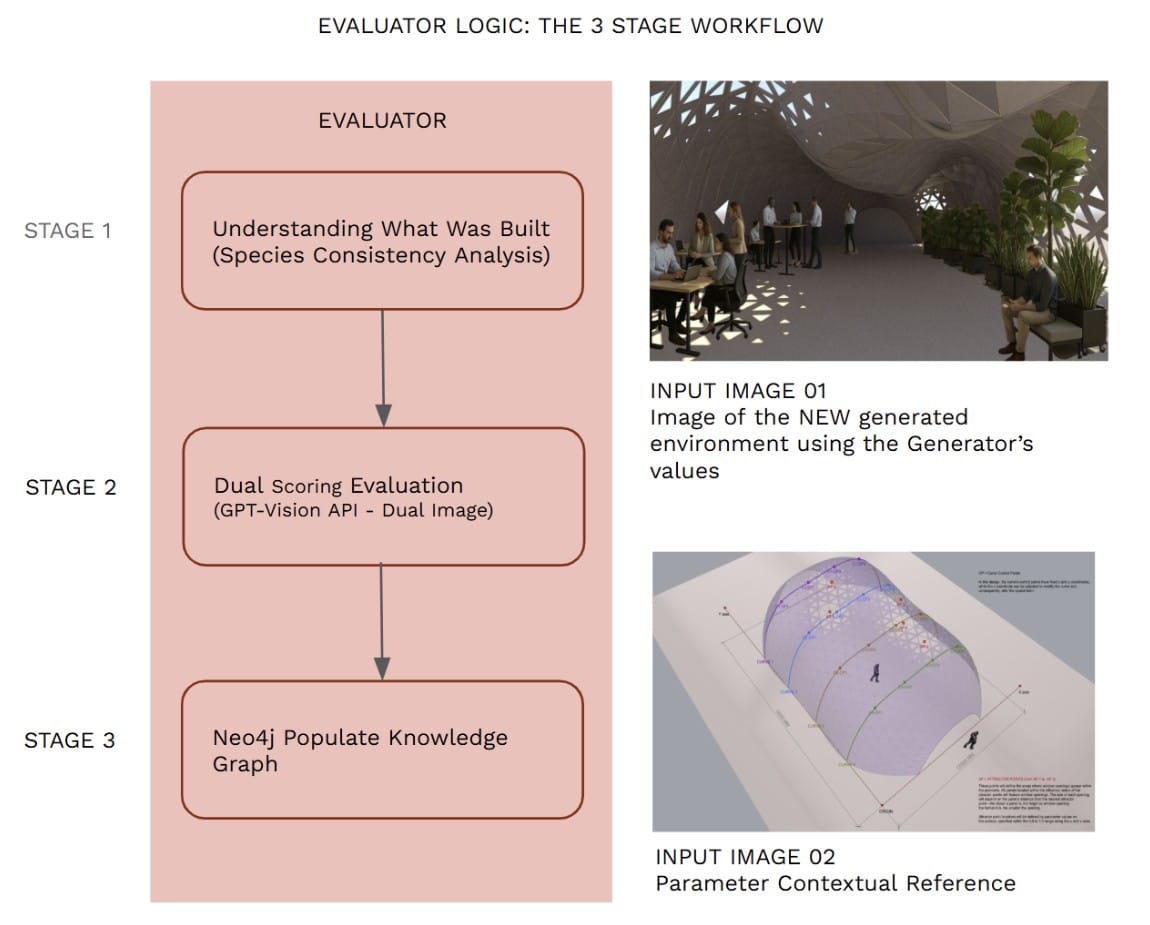

7.4.2 The Evaluator

After the space is generated using the parameter values provided by the Generator, the Evaluator analyzes the design, acting as an AI critic to assess how effectively the spatial configuration serves each species. It assigns scores based on spatial appropriateness for each species and additionally updates the Neo4j knowledge graph, ensuring that both successful outcomes and shortcomings are recorded to inform and improve future environment generation.

Figure showing how the generator output feeds into the evaluator through the Grasshopper script, along with the various inputs and outputs of the evaluator.

The Evaluator follows a three-stage methodology:

The Big Picture – Evaluator

The evaluator workflow is the “report card” phase of the intelligent design process. After the generator creates a new architectural environment, the evaluator:

- Examines what was actually built and who is using it

- Measures how well the design performs for different species & provides detailed feedback for future improvements

- Records the lessons learned in a permanent knowledge database (the Knowledge Graph)

This creates a continuous learning cycle where each evaluation makes the entire system smarter.

Stage 1: Understanding What Was Built (Species Consistency Analysis)

What it does: The system identifies what living things are present in the completed environment and ensures consistency with the original design intent.

How it works:

- Takes the species information that was originally identified during the design phase

- Ensures the same species and activities are being evaluated that were designed for

- Applies consistent naming and categorization to avoid confusion

- Creates a standardized list of what needs to be evaluated

Why this matters: This stage ensures that the evaluation is fair and accurate by measuring the design against its original intentions. It’s like making sure you’re grading a math test with the math answer key, not the history answer key.

Example: If the original design was intended for “humans engaged in quiet study” and “plants providing air purification,” the evaluator makes sure it’s measuring how well the built environment serves exactly those needs.
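Stage 1 of the Evaluator could be sketched as name normalization in Python: the Generator reports names such as “Ficus lyrata (fiddle-leaf fig)”, while the knowledge graph stores binomial nomenclature, so both sides are reduced to a canonical “Genus species” form before comparison. The stripping rule and the helper names are illustrative assumptions; the second function also reports designed-for species that were not observed.

```python
import re


def normalize_species(name):
    """'Ficus lyrata (fiddle-leaf fig)' -> 'Ficus lyrata' (binomial only)."""
    base = re.sub(r"\s*\(.*?\)", "", name).strip()  # drop parenthesized common names
    parts = base.split()
    if len(parts) >= 2:
        return f"{parts[0].capitalize()} {parts[1].lower()}"
    return base


def consistent_targets(design_species, observed_species):
    """Return (species designed for, designed-for species not observed)."""
    designed = {normalize_species(n) for n in design_species}
    observed = {normalize_species(n) for n in observed_species}
    return sorted(designed), sorted(designed - observed)
```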

Stage 2: Measuring Performance (Dual Scoring Evaluation)

What it does: The system gives the environment two separate scores that measure different aspects of how well it works.

How it works:

- Form Score (1-10): Measures how well the physical shapes and structures support the intended activities

- Light Score (1-10): Measures how well the lighting and spatial qualities create the right atmosphere

- Evaluates each species separately to understand different needs

- Provides detailed reasoning for each score

- Uses artificial intelligence to analyze complex relationships between design and performance

The Two-Score System:

- Form Score: Focuses on physical comfort, spatial relationships, and structural support.

Example: “Do the curved walls create good acoustics for study? Are the spaces the right size?”

- Light Score: Focuses on illumination, mood, and atmospheric qualities.

Example: “Is there enough natural light for reading? Do the lighting patterns support plant growth?”

Example: A library reading room might score 8/10 for form (excellent acoustic design and comfortable seating arrangements) but 6/10 for light (adequate for reading but could be better for plant health).
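The dual scoring can be sketched in Python as a small record per species plus a range check on the 1–10 scale. The reply structure (`evaluations`, `form_score`, `light_score`, `reasoning`) and the equal-weight combined score are assumptions, not the author's schema.

```python
import json
from dataclasses import dataclass


@dataclass
class SpeciesScore:
    species: str
    form_score: int   # 1-10: how well shapes and structures support activities
    light_score: int  # 1-10: how well lighting creates the right atmosphere
    reasoning: str

    @property
    def combined(self):
        """Equal-weight average of the two scores (an assumption)."""
        return (self.form_score + self.light_score) / 2


def parse_scores(text):
    """Parse the evaluator reply and reject any score outside 1-10."""
    scores = []
    for entry in json.loads(text)["evaluations"]:
        s = SpeciesScore(entry["species"], int(entry["form_score"]),
                         int(entry["light_score"]), entry.get("reasoning", ""))
        for value in (s.form_score, s.light_score):
            if not 1 <= value <= 10:
                raise ValueError(f"{s.species}: score {value} outside 1-10")
        scores.append(s)
    return scores
```

With this equal weighting, the library reading room example above (8/10 for form, 6/10 for light) would receive a combined score of 7.0.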

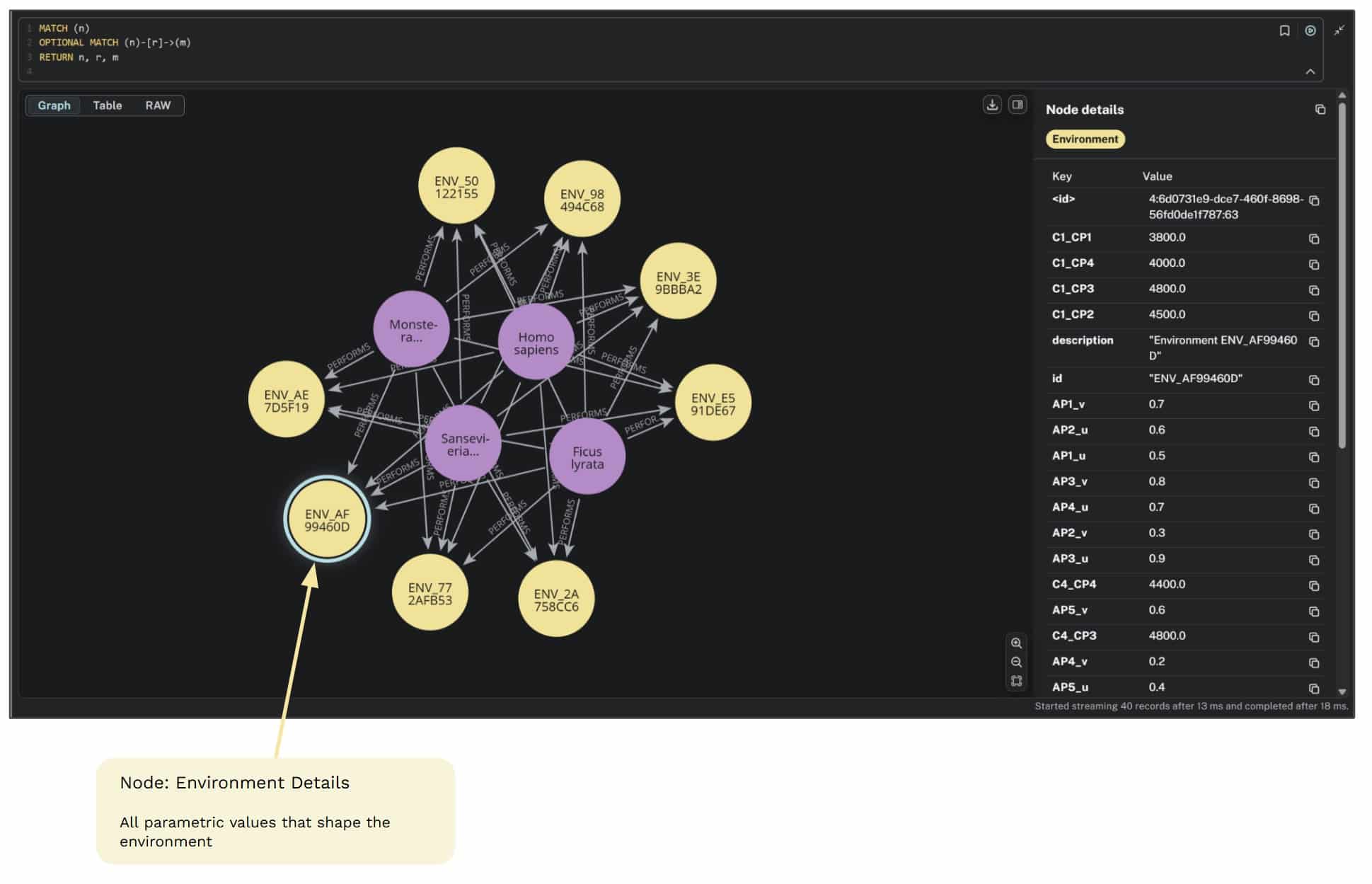

Stage 3: Building Institutional Memory (Neo4j Knowledge Graph Creation)

What it does: The system permanently records what it learned from this evaluation in a knowledge graph, hosted on a remote Neo4j database, that connects all past projects.

How it works:

- Creates a unique identifier for this specific environment design

- Records the performance scores and detailed reasoning

- Links this project to similar species, activities, and design approaches

- Builds connections between different projects to identify patterns

- Ensures the knowledge is available for future design decisions
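Stage 3 of the Evaluator can be sketched as a single parameterized Cypher write, assuming a hypothetical schema in which `Species` nodes connect to `Environment` nodes through an `EVALUATED_IN` relationship holding the scores and reasoning. `MERGE` reuses existing species nodes instead of duplicating them, which is what lets environments that share a species connect in the graph, and `uuid4` supplies the unique environment identifier. The query would be run with the official `neo4j` Python driver's `session.run(query, params)`.

```python
import uuid

# Hypothetical schema; SET e += $parameters copies all parametric values onto the node.
WRITE_QUERY = """
MERGE (e:Environment {id: $env_id})
SET e += $parameters
WITH e
UNWIND $evaluations AS ev
MERGE (s:Species {name: ev.species})
MERGE (s)-[r:EVALUATED_IN]->(e)
SET r.form_score = ev.form_score,
    r.light_score = ev.light_score,
    r.reasoning = ev.reasoning
"""


def build_write(parameters, evaluations):
    """Return the Cypher text and parameters for one evaluated environment."""
    params = {
        "env_id": str(uuid.uuid4()),  # unique identifier for this environment
        "parameters": {k: float(v) for k, v in parameters.items()},
        "evaluations": evaluations,   # list of {species, form_score, light_score, reasoning}
    }
    return WRITE_QUERY, params
```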

How Knowledge Accumulates

- Individual Learning: Each evaluation teaches the system something specific about that design

- Pattern Recognition: Over time, the system identifies broader principles that work across multiple projects

- Predictive Insights: Eventually, the system can predict which design approaches are likely to succeed

- Informed Generation: This knowledge feeds back into the generator to create better initial designs

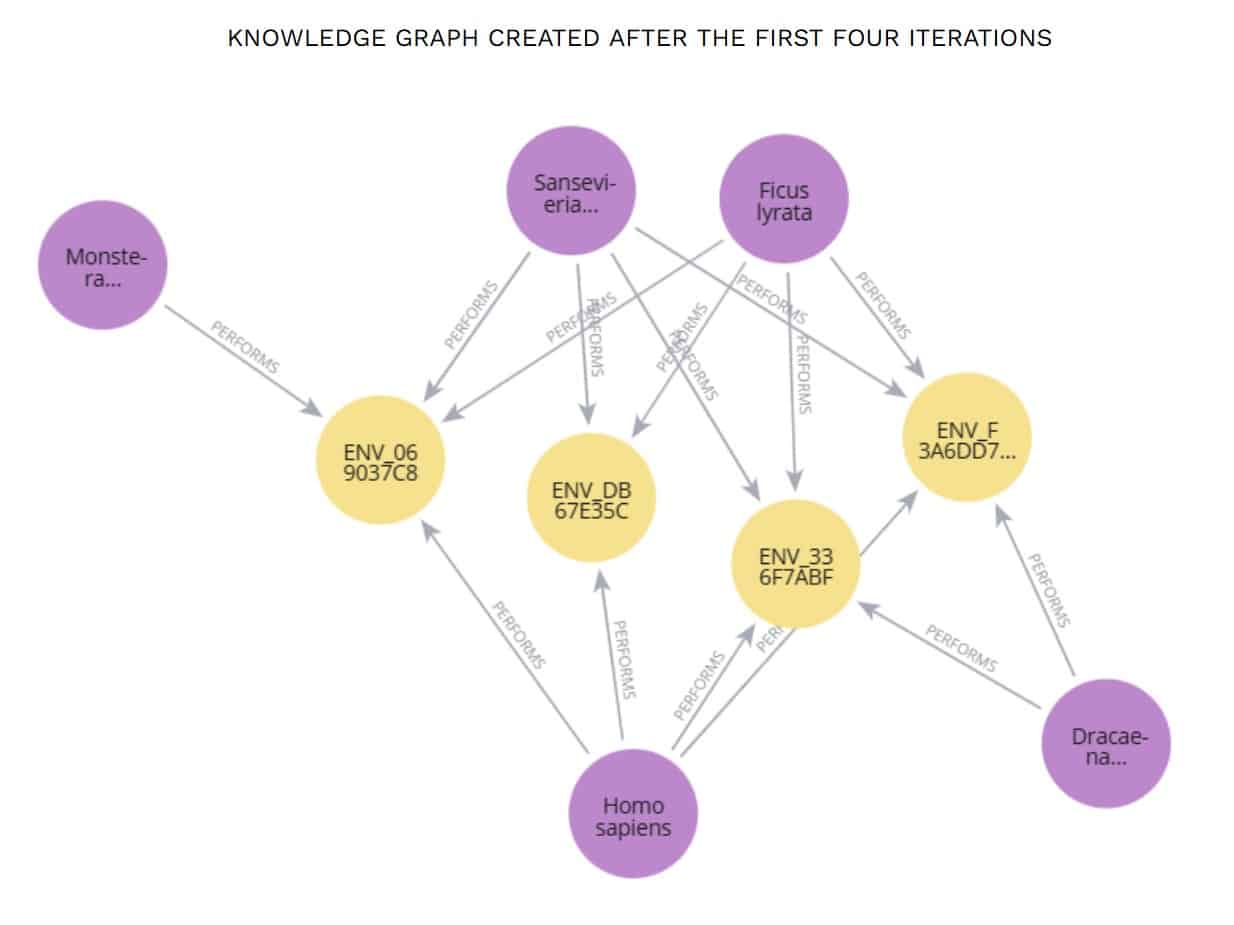

The Knowledge Graphs

The knowledge graph is stored as nodes and relationships in three main categories:

- Nodes (Type 1, Pink): Species names storing the scientific binomial nomenclature of species inhabiting the space.

- Nodes (Type 2, Yellow): Environment details containing all parametric values that define the space.

- Relationships (Arrows): Details of each species’ relationship with its environment, including the evaluation scores and reasoning.

Image showing the species information being stored as pink nodes in the Neo4j Knowledge graph.

Image showing the way the environment details with parametric values of each iteration are stored in the form of a graph.

Image showing the evaluation scores and reasoning being stored for future learning as relationships of the species with the iterated environments.

The Learning Loop

The AI progressively improves by learning complex patterns that reveal which spatial configurations best support multi-species cohabitation.

- Generate a new design based on current knowledge

- Build the environment using the 31 parameters

- Evaluate how well it works for each species

- Store the results in the knowledge database

- Use this new knowledge to make even better designs next time

Demonstrating the Workflow with Knowledge Graph Creation

The primary aim of this experiment was to assess how effectively the AI could analyze, reason, and generate spatial parameters tailored to a specific user group, while also evaluating its ability to create appropriate lighting and volumetric conditions based on the spatial distribution of entities. The objective was to determine whether the system’s space generation and reasoning capabilities improved over successive iterations through learning, or whether the outcomes remained largely random.

To test this, an interior render of the existing Rhino model was produced in Rhino V-Ray. This render was then populated with humans and a variety of plant species using Florafauna AI.

The experiment was structured into three categories, with a total of six iterations:

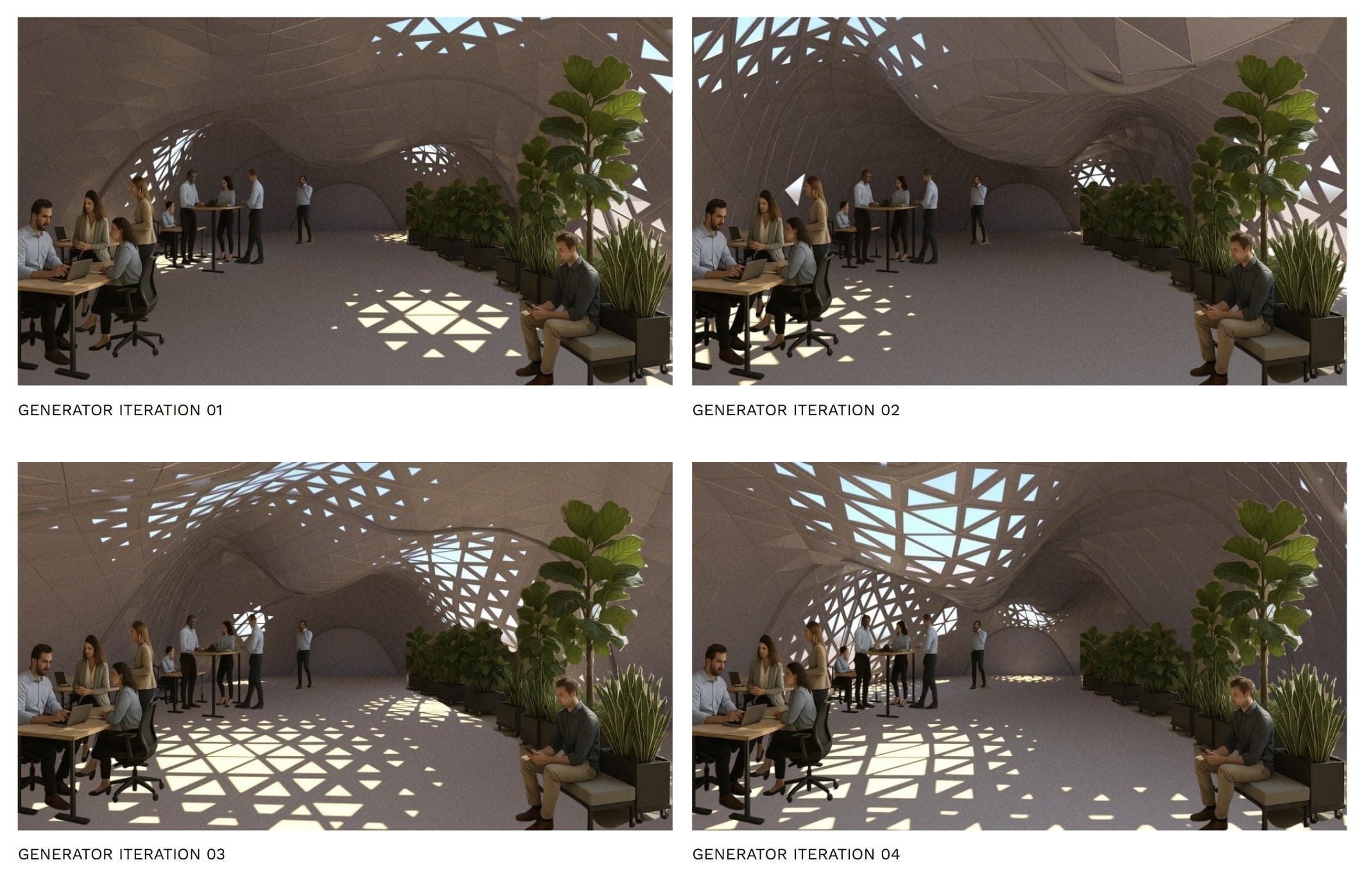

- Iterations 1–4: Humans + Plants – The space was populated with humans working on the left side and indoor plant species positioned on the right.

- Iteration 5: Only Humans – The space was populated exclusively with humans in an office setting.

- Iteration 6: Only plants – The space was populated exclusively with plants, specifically cacti species.

Iterations 1–4: Humans + Plants

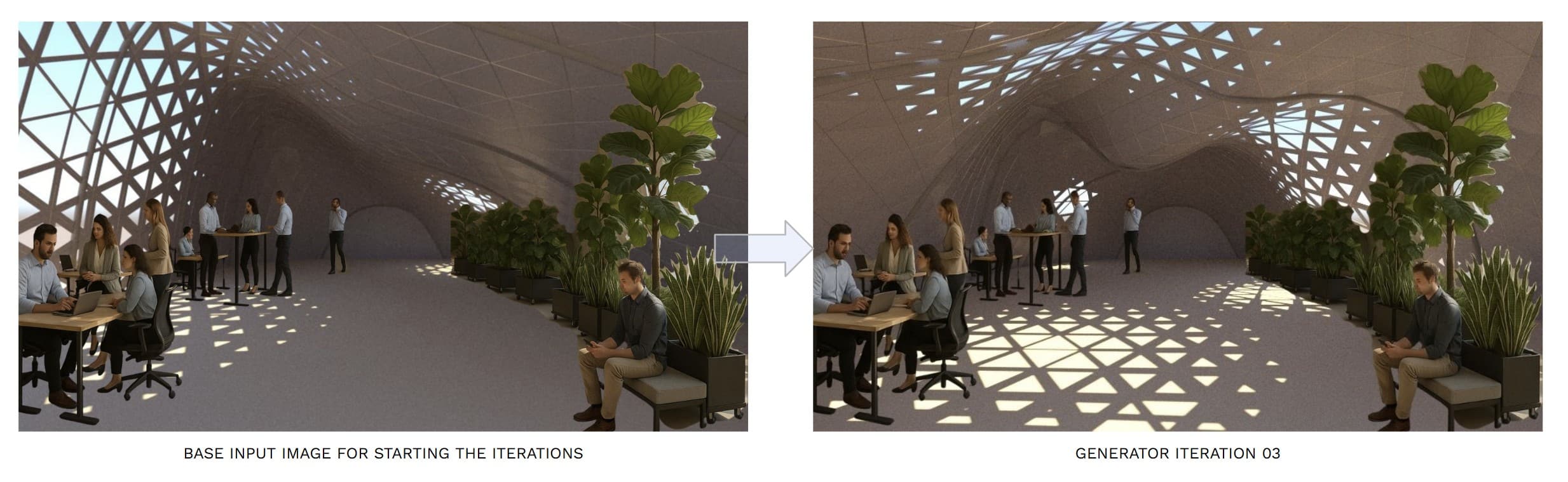

After the initial base image was provided to the generator for analysis of the species and to suggest improved spatial configurations, the first four iterations of spatial generation were produced. Across these iterations, the AI demonstrated reasoning by identifying that plants require natural light while humans need diffused lighting for work. It then autonomously generated multiple iterations that addressed these requirements.

The Generator interpreted the image, its content, and its spatial distribution fairly accurately:

```json
{
  "species_analysis": [
    {
      "name": "Homo sapiens",
      "type": "human",
      "count": 10,
      "location": "scattered throughout the room",
      "activity": "working, conversing, using electronic devices",
      "emotional_state": "engaged, focused"
    },
    {
      "name": "Ficus lyrata (fiddle-leaf fig)",
      "type": "foliage_plant",
      "count": 1,
      "location": "right center",
      "activity": "mature growth stage",
      "emotional_state": "healthy, vigorous"
    },
    {
      "name": "Monstera deliciosa (Swiss cheese plant)",
      "type": "foliage_plant",
      "location": "right center, behind the fiddle-leaf fig",
      "count": 3,
      "activity": "mature growth stage",
      "emotional_state": "healthy, vigorous"
    },
    {
      "name": "Sansevieria trifasciata (snake plant)",
      "type": "foliage_plant",
      "count": 1,
      "location": "right front",
      "activity": "mature growth stage",
      "emotional_state": "healthy, vigorous"
    }
  ]
}
```

The Generator’s response to this input image was as follows:

```json
{
  "parameters": {
    "C1_CP1": "2700",
    "C1_CP2": "4900",
    "C1_CP3": "3100",
    "C1_CP4": "4300",
    "C2_CP1": "4200",
    "C2_CP2": "3500",
    "C2_CP3": "2600",
    "C2_CP4": "4700",
    "C3_CP1": "4800",
    "C3_CP2": "2900",
    "C3_CP3": "4000",
    "C3_CP4": "3600",
    "C4_CP1": "3300",
    "C4_CP2": "5000",
    "C4_CP3": "2700",
    "C4_CP4": "4100",
    "AP1_u": "0.3",
    "AP1_v": "0.3",
    "AP1_radius": "2400",
    "AP2_u": "0.9",
    "AP2_v": "0.8",
    "AP2_radius": "1400",
    "AP3_u": "0.4",
    "AP3_v": "1.0",
    "AP3_radius": "3700",
    "AP4_u": "0.5",
    "AP4_v": "0.1",
    "AP4_radius": "600",
    "AP5_u": "0.1",
    "AP5_v": "0.9",
    "AP5_radius": "3200"
  },
  "reasoning": "The space contains ten humans scattered throughout, one Ficus lyrata, three Monstera deliciosa, and one Sansevieria trifasciata located on the right center. Humans are engaged in working and conversing, while the plants are in a mature growth stage and appear vigorous. Ideal spatial needs include open-volume sections for group activities and controlled light to support plant photosynthesis and human productivity. Curve control points are adjusted to create dynamic waves with varied ceiling heights enhancing sound diffusion and visual interest for human occupants while allowing light penetration for plants. The attractor points are placed to suit diverse lighting purposes, with AP1 and AP5 ensuring ambient lighting and AP3 creating direct sunlight for plant zones. Form and lighting seamlessly blend to nurture the plants while providing humans a conducive environment for focus and collaboration."
}
```

From this description, we can infer that the AI has developed a fairly nuanced understanding of spatial design requirements and is able to balance the needs of multiple species (humans and plants) within a shared environment. Specifically:

- Contextual Awareness – The AI recognizes both the quantity and type of occupants (ten humans + specific plant species) and understands their states (humans working/conversing, plants mature and vigorous).

- Functional Reasoning – It translates these states into spatial requirements:

Humans need open volumes for collaboration, sound diffusion, and comfortable lighting.

Plants require sufficient natural light for photosynthesis, tailored to species-specific conditions.

- Parametric Design Logic – The AI shows awareness of design manipulation tools (curve control points, attractor points) and how they can be used to achieve functional outcomes (e.g., varied ceiling heights for acoustics and aesthetics, attractor points for diverse lighting conditions).

- Integrated Multi-Species Design – Rather than optimizing for one group alone, the AI creates a cohesive environment that blends lighting, form, and spatial volume to support both plant health and human productivity.

Consequently, it developed the following iterations, taking all these factors into account:

Image shows first four iterations of the demonstration with humans and plants as the species.

Image shows the Knowledge Graph with four environments, the species detected and their relationships to each other

Iteration 5: Only Humans

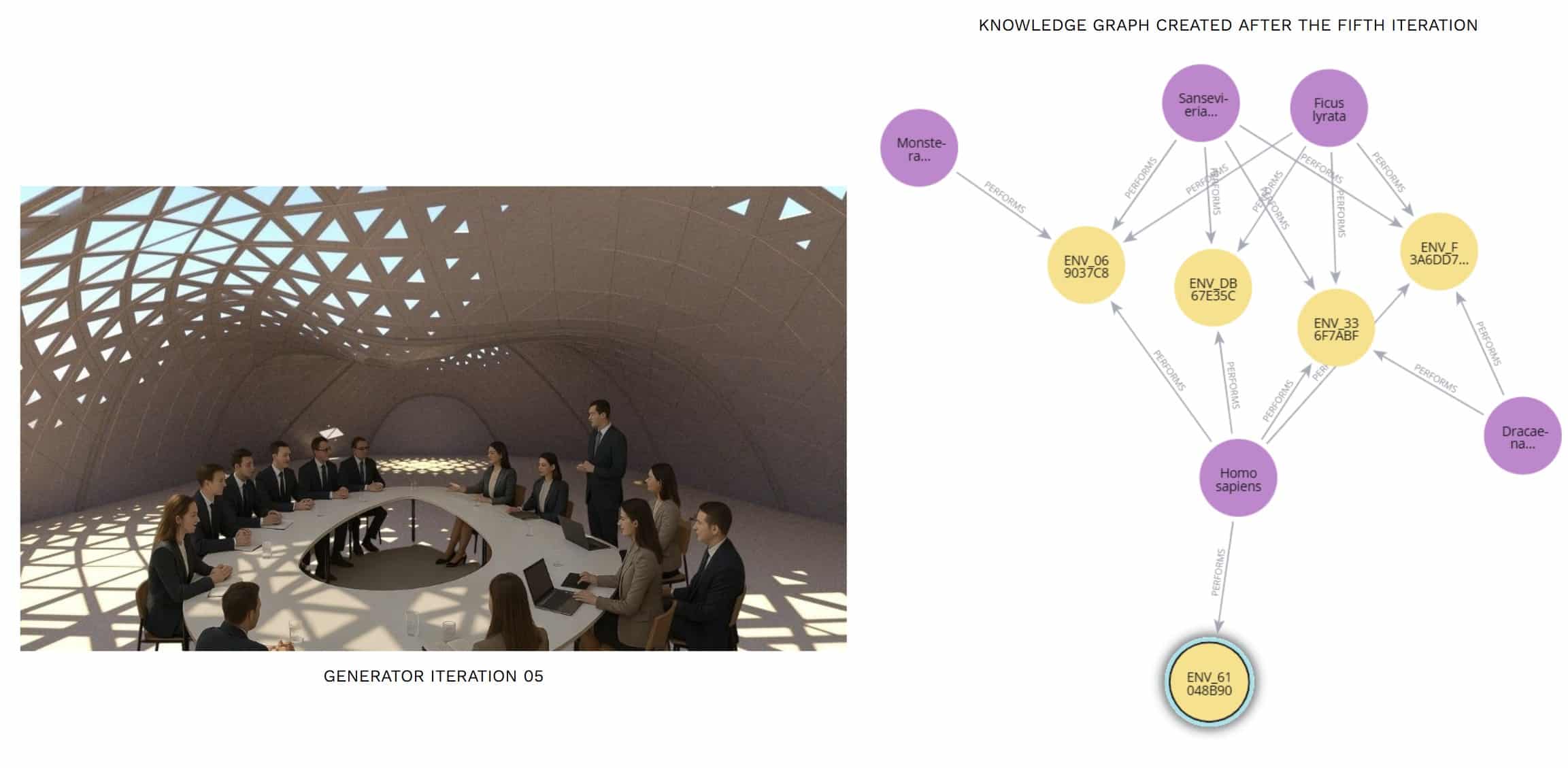

For Iteration 5, the species in the space generated in Iteration 4 were replaced by humans in a meeting setting. The space the AI generated in response to these new needs is as follows:

Image illustrates how the AI generated the spatial volume and lighting conditions based on the activities performed. The knowledge graph is updated with the newly added environment and activity relationships, expanding the database and informing future iterations.

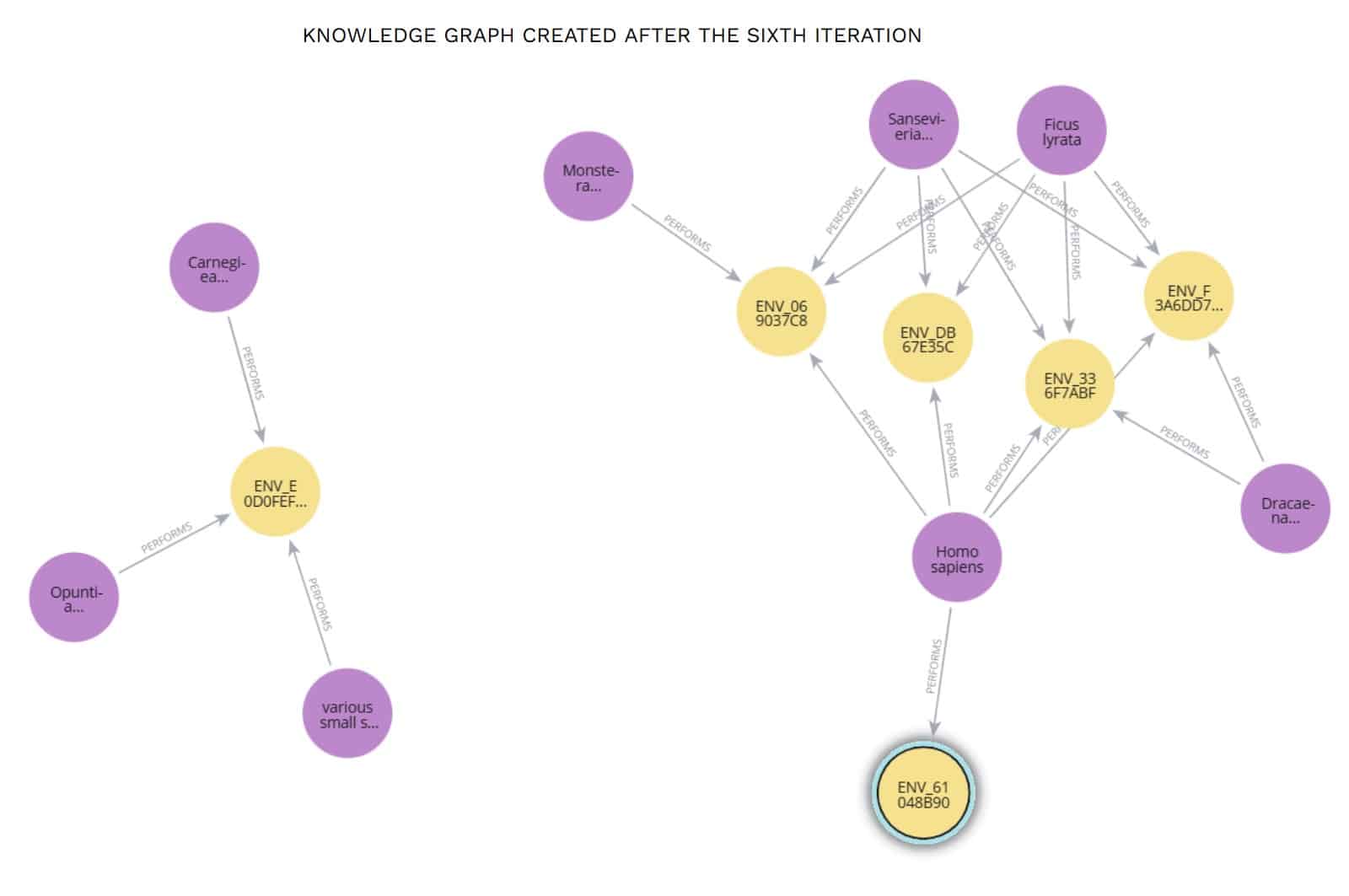

Iteration 6: Only Plants

In the next iteration, the humans were replaced by cacti species.

Image illustrates the form adjusted to cactus plant heights along with the modified lighting layout.

Image illustrates a new cluster forming in the knowledge graph due to non-overlapping species.

8. Results & Findings

The experimental workflow revealed several key insights into the system’s performance across iterations.

8.1 Species Identification Accuracy

Across all iterations, the LLM achieved a 76% accuracy rate in identifying species within the space. While occasional misclassifications occurred, these incorrect entries were still recorded in the knowledge graph. Importantly, the context-retrieval logic was structured to prioritize dominant patterns rather than isolated anomalies. As a result, such errors had minimal influence on the overall design outcomes.
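One simple way to prioritize dominant patterns rather than isolated anomalies is a support threshold: a species enters the retrieval context only if it has been detected in several past iterations, so a one-off misclassification carries little weight. The Python sketch below assumes each record lists the species detected in one iteration; the threshold of two is illustrative, and this is not necessarily how the author's retrieval logic works.

```python
from collections import Counter


def dominant_species(records, min_support=2):
    """Keep species detected in at least `min_support` records.

    Each record is assumed to hold a "species" list for one iteration;
    one-off detections (often misclassifications) are dropped.
    """
    counts = Counter(name for rec in records for name in set(rec["species"]))
    return {name for name, n in counts.items() if n >= min_support}
```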

8.2 Contextual Knowledge Integration

In generating subsequent spatial iterations, the Generator consistently leveraged contextual knowledge accumulated from prior runs. This information, stored in the Neo4j remote database as a knowledge graph, informed the parameters of each new spatial configuration. The process demonstrated the system’s ability to retain, recall, and adapt prior learning to future iterations.

8.3 Iterative Reasoning and Refinement

The early iterations of space generation reflected the system’s reasoning but exhibited a noticeable degree of randomness. As the number of iterations increased, however, the reasoning process showed clear improvement. The LLM progressively refined its understanding of which parameters required adjustment in response to the spatial and ecological context.

A significant example of this refinement emerged in the first four iterations. In three out of four cases, the Generator accurately positioned light openings in relation to plant locations. This ensured that the plants received sufficient direct light for growth, while humans were simultaneously provided with adequate, activity-specific lighting to support work and collaboration. This demonstrated the system’s capacity to balance the environmental needs of multiple species within a shared spatial framework.

The proposed system successfully demonstrated its ability to:

- Capture and analyze species-specific spatial needs through visual inputs.

- Utilize historical data to identify effective design precedents.

- Generate novel environments by parametrically controlling 31 spatial variables.

- Evaluate outcomes based on species-centered performance criteria.

- Iteratively refine its approach by learning from results to improve future designs.

Together, these capabilities establish a self-improving architectural workflow that advances toward creating ecologically harmonious environments.

Limitations and Future Directions

1) The primary limitation of this study was its inability to capture genuine species behavior in response to the generated spaces. The evaluation relied on GPT Vision to interpret species contentment; however, since the inputs were rendered images, behavioral feedback remained static across all iterations.

Because the demonstration was confined to visual renderings, it was not possible to assess the system in real-world scenarios where authentic physiological responses (e.g., health indicators) or emotional reactions (e.g., satisfaction, discomfort) could be observed. The depicted emotional and physical states of the species remained unchanged; the system simply generated different iterations to learn which versions were better suited.

As a result, the study could not evaluate how accurately GPT Vision assesses true emotional and physical states. A natural next step would be to feed live camera streams, together with sensor data, to both the Generator and the Evaluator. With these additions, the true accuracy of the workflow could be measured, making it possible to assess whether the AI architect is genuinely evolving to create better spaces for cohabitation.

2) Another significant limitation lies in GPT Vision's limited ability to interpret the parametric nature of space from an image alone. While images capture form and appearance, they lack the embedded relational rules and dependencies that define parametric models. This makes it difficult for GPT Vision to grasp complex spatial contexts, because it has no direct access to the underlying geometric logic and constraints. For this reason, the demonstration used a deliberately simple organic pavilion design and restricted the scope to humans and plants. As spatial complexity increased, the LLM struggled to interpret the environment and produced ambiguous or less coherent spatial outputs.

Emerging tools such as the Rhino MCP plugin, which can translate parametric geometry and constraints into machine-readable structures, could substantially enhance this workflow, as could similar developments. By providing GPT Vision with structured parametric data rather than static visual inputs alone, the system would be better equipped to engage with the logic behind space generation rather than just its surface appearance.
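The following sketch makes the contrast between images and structured parametric data concrete. It serializes a parametric model, including value ranges and relational rules, into JSON that an LLM could read alongside a render. The schema (`Parameter`, `Dependency`, `ParametricModel`) is hypothetical and is not the output format of the Rhino MCP plugin. The parameter names, values, and rule text are likewise illustrative only.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class Parameter:
    name: str
    value: float
    minimum: float
    maximum: float
    unit: str


@dataclass
class Dependency:
    """A relational rule between parameters, stated as text an LLM can read (hypothetical)."""
    source: str
    target: str
    rule: str


@dataclass
class ParametricModel:
    parameters: List[Parameter] = field(default_factory=list)
    dependencies: List[Dependency] = field(default_factory=list)

    def violations(self) -> List[str]:
        """Names of parameters whose current value lies outside the declared range."""
        return [p.name for p in self.parameters if not (p.minimum <= p.value <= p.maximum)]

    def to_prompt_json(self) -> str:
        """Serialize ranges and rules so an LLM sees the model's logic, not only its appearance."""
        return json.dumps(asdict(self), indent=2)


model = ParametricModel(
    parameters=[
        Parameter("skylight_count", 4.0, 1.0, 8.0, "count"),
        Parameter("canopy_height", 6.5, 3.0, 6.0, "m"),  # deliberately out of range
    ],
    dependencies=[
        Dependency("skylight_count", "plant_zone_lux", "more skylights raise illuminance over plant zones"),
    ],
)
payload = model.to_prompt_json()
print(model.violations())  # ['canopy_height']
```

Such a payload exposes information that a render cannot show, such as allowed ranges and cause-and-effect rules. An LLM could then reason about which parameter to change, not only about what the space looks like.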

A promising future direction would be the integration of LLM-based text processing that interprets and summarizes information from these Rhino-interface plugins. Such an approach would enable the LLM to contextualize complex parametric models and distill their essential logic. When combined with GPT Vision’s ability to interpret visual patterns, this hybrid workflow could overcome the current limitations and unlock the potential for designing more adaptive, efficient, and meaningful spaces for cohabitation.

9. Conclusion

Towards an Autonomous & Ecologically Integrated Built Environment

“Architecture is the learned game, correct and magnificent, of forms assembled in the light.”

– Le Corbusier

Today, however, the light is no longer only physical; it is also data, patterns, and intelligence.

This thesis envisions a future where architecture transcends the static and the singular, becoming a data-informed, evolving organism – a machine of architecture that learns, adapts, and coexists. By weaving together knowledge graphs, machine learning, and iterative reasoning, the developed workflow decodes what has long been invisible to human intuition: the patterns of coexistence. These are not rules imposed from above, but emergent behaviors discovered through feedback, iteration, and adaptation – revealing how diverse species might inhabit, share, and thrive within the same environment.

Unlike conventional design, which prescribes fixed conditions, this system does not dictate. Instead, it listens, learns, and reshapes itself. With each cycle, it reduces conflict, enhances habitability, and moves closer to environments that are not only functional but deeply attuned to the lives within them. In this way, architecture acquires the most human of abilities – the ability to evolve – and, in doing so, takes a step toward becoming sentient.

Though the framework was demonstrated at a limited scale, its implications are vast. By integrating live sensors, contextual modeling, and multimodal AI tools, this framework could scale to embrace countless data points, forming the foundations of a species-blind architecture, one that serves all living beings equally. Here, architecture does not separate humans from ecology but embeds itself within it, becoming a partner to the environment rather than a shield against it.

To conclude, this thesis proposes a radical reimagining: architecture as a living, learning entity – a system that grows through data, adapts through iteration, and aspires to ecological harmony. Drawing inspiration from kinetic architecture, cybernetics, and AI-driven intelligence, it gestures toward a future where built form is not static, but responsive; not human-centered, but life-centered.

“The future of architecture is not the object, but the system; not the monument, but the network.”

In that future, architecture is no longer merely built – it is becoming.