“To use mobile robotics to improve the accuracy of construction and installation planning by creating a new visual communication link based on scanned data from the built environment.”

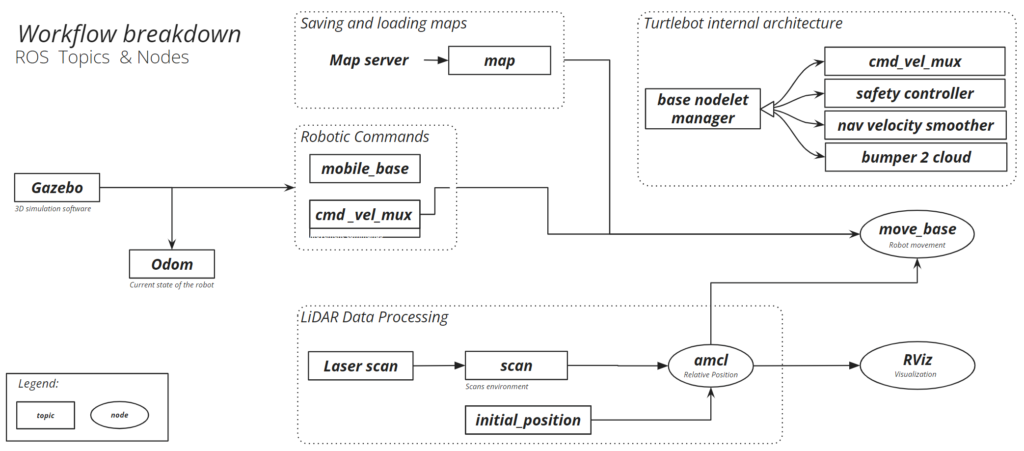

The Site Assistant is a mobile robotic assistant designed to make work on construction sites more efficient. It addresses a common challenge: locating specific objects inside walls or pinpointing the exact area where a task has to be carried out. Using the Building Information Modeling (BIM) data provided by architects and engineers, the Site Assistant projects the relevant information directly onto surfaces. This projection, produced by a top-mounted projector, offers a practical alternative to Augmented Reality (AR) for displaying data. The project was developed as part of the Software II class, which focuses on programming with the Robot Operating System (ROS). We used the Turtlebot robot, which is programmable via ROS. Its adaptable design allowed us to equip it with the tools we needed, although, because of limited hardware availability, we only fitted it with a 2D lidar sensor and an Intel NUC PC.
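To project BIM information onto the correct spot of a wall, each 3D point has to be mapped to a pixel of the projector image. A common way to model this is to treat the projector as an inverse pinhole camera. The sketch below shows that mapping; the intrinsic parameters (`fx`, `fy`, `cx`, `cy`) and the `ProjectorIntrinsics`/`project_point` names are hypothetical, since the source does not describe how the projector was calibrated.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class ProjectorIntrinsics:
    """Hypothetical pinhole parameters of the top-mounted projector, in pixels."""
    fx: float  # focal length along x
    fy: float  # focal length along y
    cx: float  # principal point x
    cy: float  # principal point y
    width: int  # image width
    height: int  # image height


def project_point(
    k: ProjectorIntrinsics, point: Tuple[float, float, float]
) -> Optional[Tuple[float, float]]:
    """Map a 3D point (x, y, z) in the projector frame to a pixel (u, v).

    Frame convention assumed here: z points forward, x right, y down.
    Returns None if the point is behind the projector or falls outside the image.
    """
    x, y, z = point
    if z <= 0:
        return None  # point is behind the projector
    u = k.fx * x / z + k.cx
    v = k.fy * y / z + k.cy
    if not (0 <= u < k.width and 0 <= v < k.height):
        return None  # point would be projected outside the image
    return (u, v)


k = ProjectorIntrinsics(1000.0, 1000.0, 640.0, 360.0, 1280, 720)
print(project_point(k, (0.5, 0.0, 2.0)))  # (890.0, 360.0)
```

In a real setup, points from the wall model would first be transformed into the projector frame using the robot's pose before calling a function like this.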

The main idea is to support the workforce on a construction site with this system. When a construction worker arrives at the site, they can command the robot to move to the working area. For this to work, the environment must be scanned beforehand and compared with the architectural project: the BIM model, furniture, paintings, and MEP (Mechanical, Electrical, and Plumbing) infrastructure.
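Commanding the robot to a working area usually means sending it a goal pose in the map frame. Assuming the standard ROS navigation stack is used (the source does not say which planner was used), a goal's orientation is expressed as a quaternion, which for a ground robot reduces to a rotation about the vertical axis. The sketch below converts named working areas into such goals; the area names and coordinates in `WORKING_AREAS` are hypothetical.

```python
import math
from typing import Dict, NamedTuple, Tuple


class GoalPose(NamedTuple):
    """Planar goal in the map frame with its orientation as a quaternion (qx = qy = 0)."""
    x: float
    y: float
    qz: float
    qw: float


def yaw_to_goal(x: float, y: float, yaw: float) -> GoalPose:
    # A pure rotation about z by `yaw` has quaternion (0, 0, sin(yaw/2), cos(yaw/2)).
    return GoalPose(x, y, math.sin(yaw / 2.0), math.cos(yaw / 2.0))


# Hypothetical working areas in the map frame: (x [m], y [m], yaw [rad]).
WORKING_AREAS: Dict[str, Tuple[float, float, float]] = {
    "kitchen_wall": (2.5, 1.0, math.pi / 2),
    "corridor": (0.0, -3.0, 0.0),
}


def goal_for_area(name: str) -> GoalPose:
    """Look up a working area and return the goal the robot should drive to."""
    x, y, yaw = WORKING_AREAS[name]
    return yaw_to_goal(x, y, yaw)


print(goal_for_area("corridor"))  # GoalPose(x=0.0, y=-3.0, qz=0.0, qw=1.0)
```

In a ROS node, these fields would fill the position and orientation of a `geometry_msgs/PoseStamped` message sent to the navigation stack.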

Programming/Testing


We started by creating a map of the room we wanted to scan. To do this, we drove the Turtlebot manually through the room so that its 2D lidar could build a map. Ideally, we would also have mounted a 3D lidar sensor, but none was available at the time.
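Building the 2D map boils down to converting each lidar scan into obstacle positions in the map frame. The sketch below uses the same quantities as a ROS `sensor_msgs/LaserScan` message (`ranges`, `angle_min`, `angle_increment`) and marks the grid cell hit by each beam. It is a simplification: a SLAM package would also estimate the robot pose and trace free space along each beam. The default `range_min`, `range_max`, and `resolution` values are assumptions loosely modeled on a small 2D lidar, not values from the project.

```python
import math
from typing import Sequence, Set, Tuple


def scan_to_occupied_cells(
    ranges: Sequence[float],
    angle_min: float,
    angle_increment: float,
    pose: Tuple[float, float, float],
    resolution: float = 0.05,  # metres per grid cell (assumed)
    range_min: float = 0.12,  # shortest valid reading in metres (assumed)
    range_max: float = 3.5,  # longest valid reading in metres (assumed)
) -> Set[Tuple[int, int]]:
    """Return the grid cells (i, j) hit by the beams of one 2D lidar scan.

    `pose` is the robot pose (x, y, theta) in the map frame. Only beam
    endpoints are marked as occupied; free space along the beam is ignored.
    """
    x0, y0, theta = pose
    cells: Set[Tuple[int, int]] = set()
    for k, r in enumerate(ranges):
        if not math.isfinite(r) or r < range_min or r > range_max:
            continue  # drop invalid or out-of-range returns
        angle = theta + angle_min + k * angle_increment
        hit_x = x0 + r * math.cos(angle)
        hit_y = y0 + r * math.sin(angle)
        cells.add((math.floor(hit_x / resolution), math.floor(hit_y / resolution)))
    return cells


print(scan_to_occupied_cells([1.0, float("inf"), 0.05], 0.0, math.pi / 2, (0.0, 0.0, 0.0), 0.5))
# {(2, 0)}
```

Accumulating these cells over many scans taken while driving through the room yields a rough occupancy map.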

To project content onto the walls, we need a 3D model of the room. We created this model with Polycam and then rebuilt the scan in Rhino so that we could simulate the room again. All of these steps were only necessary because we did not have a 3D lidar scanner.
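For the rebuilt model to line up with the robot's lidar map, points from the model frame have to be expressed in the map frame. The source does not describe how this registration was done; the sketch below assumes both frames share the vertical axis and the same unit (metres), so the alignment reduces to a rotation `yaw` about z plus a planar translation (`tx`, `ty`). The function name and parameters are hypothetical. If the Rhino model is in millimetres, it would need to be scaled first.

```python
import math
from typing import Iterable, List, Tuple

Point3 = Tuple[float, float, float]


def model_to_map(points: Iterable[Point3], tx: float, ty: float, yaw: float) -> List[Point3]:
    """Transform 3D points from the model frame into the robot's map frame.

    Applies a rotation by `yaw` about the z axis, then a translation (tx, ty).
    Heights (z) are unchanged because both frames are assumed to share the floor plane.
    """
    c, s = math.cos(yaw), math.sin(yaw)
    return [(c * x - s * y + tx, s * x + c * y + ty, z) for x, y, z in points]


print(model_to_map([(1.0, 0.0, 2.0)], 0.5, 0.0, 0.0))  # [(1.5, 0.0, 2.0)]
```

A fuller pipeline might estimate `tx`, `ty`, and `yaw` by matching wall segments from the lidar map against the model, but that step is outside this sketch.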

After rescanning the room, we could give the robot specific positions where it should start projecting. The animation below is a simulated demonstration of this capability.
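Before projecting at one of these positions, the robot has to confirm that it has actually arrived there, since a small pose error shifts the projected image on the wall. The sketch below checks the current pose against a target within a position and heading tolerance, wrapping the heading difference so that angles just either side of ±π count as close. The tolerance values and function names are hypothetical.

```python
import math
from typing import NamedTuple


class Pose2D(NamedTuple):
    x: float  # metres, map frame
    y: float  # metres, map frame
    yaw: float  # radians


def angle_diff(a: float, b: float) -> float:
    """Smallest signed difference a - b, wrapped to [-pi, pi)."""
    return (a - b + math.pi) % (2 * math.pi) - math.pi


def ready_to_project(
    current: Pose2D,
    target: Pose2D,
    pos_tol: float = 0.05,  # metres (assumed)
    yaw_tol: float = math.radians(3),  # radians (assumed)
) -> bool:
    """True when the robot is close enough to a projection position to start projecting."""
    dist = math.hypot(current.x - target.x, current.y - target.y)
    return dist <= pos_tol and abs(angle_diff(current.yaw, target.yaw)) <= yaw_tol


print(ready_to_project(Pose2D(1.0, 2.0, 0.0), Pose2D(1.02, 2.0, 0.0)))  # True
```

Tighter tolerances give more accurate projections but may require the navigation stack to make several small corrections before projection can start.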