A Critical Analysis of AI-Driven Emotional Computing and Mechanization of Human Affect

1. Abstract

This critical analysis examines the theoretical implications of “Automating Scientists,” a project that explores the intersection of artificial intelligence and human emotional recognition. Through the lens of affective computing, this study interrogates the fundamental question:

“Can a machine sense us deeply enough to create for the heart, not just the eye?”

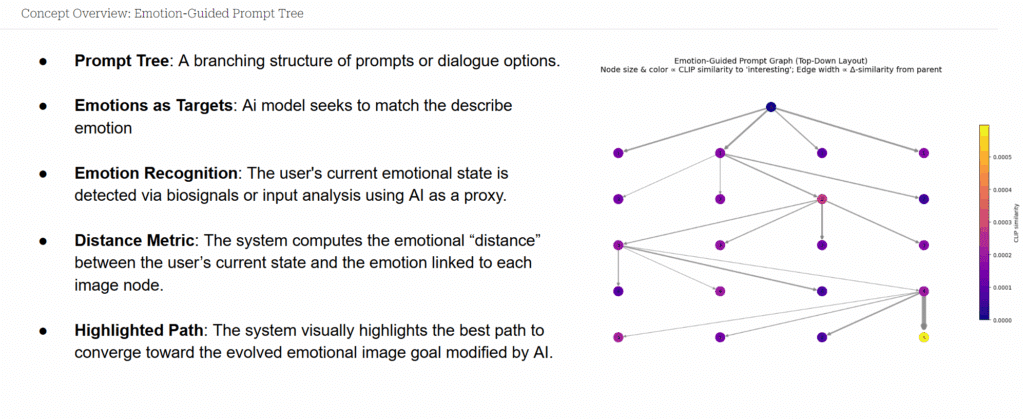

The project challenges traditional boundaries between human subjectivity and computational objectivity, proposing emotion-guided prompt trees as a mechanism for AI systems to navigate and respond to human emotional states. This analysis critically examines the theoretical foundations, methodological approaches, and broader implications of mechanizing human affect within computational systems.

2. Background Study

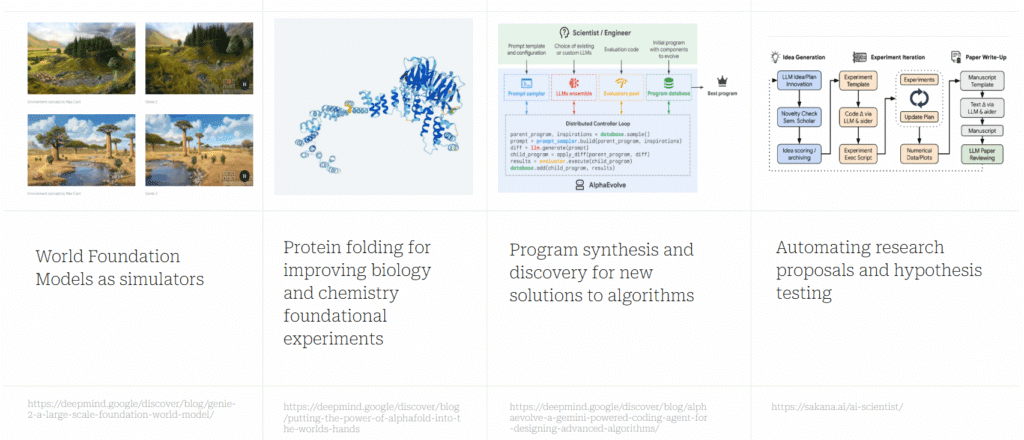

In the rapidly evolving landscape of scientific discovery, large-scale AI systems are not only accelerating innovation but also reimagining the very process of research itself. From foundation world models like Genie 2 that simulate rich, dynamic environments, to AlphaFold which revolutionized biology through accurate protein folding predictions, these tools serve as computational engines of imagination and experimentation. AI agents like AlphaEvolve go further, autonomously generating novel algorithms through iterative synthesis and evaluation, while platforms like Sakana’s AI Scientist automate the entire research cycle—from ideation to experimentation and manuscript generation. Together, they represent a shift from AI as a tool to AI as a scientific collaborator, capable of hypothesis generation, experimental reasoning, and potentially, discovery itself.

3. Literature Review

- Foundational texts in Affective Computing:

- Rosalind Picard’s “Affective Computing” (1997) remains a seminal text in the field. It argues that for computers to be truly intelligent, they must be able to recognize and respond to human emotions. Picard’s work establishes a framework for emotion recognition systems that laid the groundwork for later developments.

- Antonio Damasio’s “Descartes’ Error” (1994) provided a crucial understanding of the relationship between emotion and cognition, demonstrating that emotional processes are essential to decision making.

- Sherry Turkle’s “Alone Together” (2011) offers a critical examination of human-computer relationships, exploring how digital technologies are reshaping human experience and social connection.

- Phenomenological Approaches:

- Hubert Dreyfus’s “Being-in-the-World” (1991) provides a critique of AI, arguing that human understanding involves a kind of embodied know-how that cannot be captured by computational systems.

4. Theoretical Framework

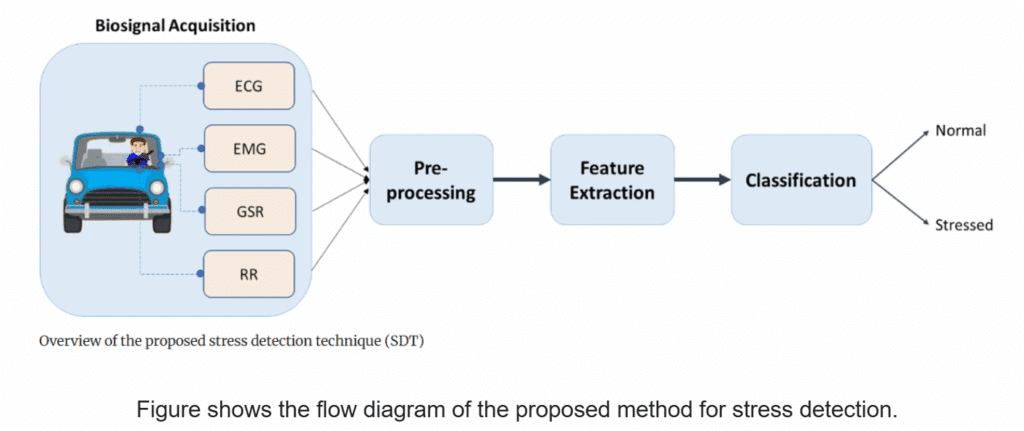

a. Automatic Driver Stress Detection

One study developed a system to detect driver stress in real time using multiple biosignals: ECG, EMG, GSR, and respiration rate. The signals undergo preprocessing, feature extraction, and classification through machine-learning models such as Random Forest. The system reportedly achieved 98.2% accuracy, with 97% sensitivity and 100% specificity, suggesting that such a system could be integrated into Driver Assistance Systems to trigger calming interventions such as playing relaxing music.
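To make the three reported figures concrete, the following is a minimal sketch of how accuracy, sensitivity, and specificity are computed from binary predictions. The function name `binary_metrics` and the example labels are illustrative assumptions; they are not data or code from the cited study.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Return accuracy, sensitivity, and specificity for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("both classes must appear in y_true")
    return {
        "accuracy": (tp + tn) / len(pairs),
        "sensitivity": tp / (tp + fn),  # share of stressed windows that were flagged
        "specificity": tn / (tn + fp),  # share of calm windows that were left alone
    }


# Made-up labels for illustration only: 1 = stressed, 0 = not stressed.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
metrics = binary_metrics(y_true, y_pred)
```

With these toy labels, one stressed window is missed and no calm window is misclassified, so sensitivity falls below 100% while specificity stays at 100%, the same pattern as in the reported results.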

b. Empowering Emotion-Aware Control for People with Disabilities

Neuralink is an advanced AI-driven brain–computer interface (BCI) that interprets neural signals to enable direct communication between the brain and external devices. By translating electrical patterns from the brain into actionable commands, it allows users—especially those with paralysis—to control computers, type, or play games using only their thoughts. As an application of AI using emotions, Neuralink opens the door to real-time emotional state detection and response, where future systems could adapt environments, communication, or digital content based on the user’s cognitive and affective states, bridging human emotion with machine intelligence.

The interface works by placing electrodes near neurons in order to detect action potentials. Recording from many neurons allows us to decode the information represented by those cells.

In the movement-related areas of the brain, for example, neurons represent intended movements. There are neurons in the brain that carry information about everything we see, feel, touch, or think.

“I wrote this with my brain” – Bard

Reference: Neuralink

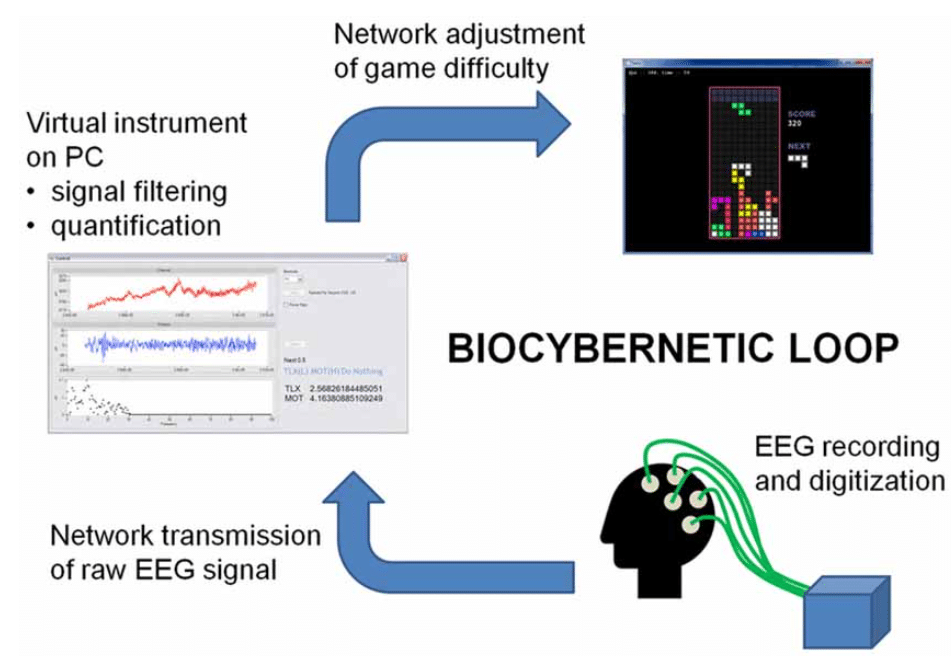

c. Affective Gaming & Difficulty Adjustment

Using EEG headsets (e.g., Emotiv, Muse), adaptive gaming platforms monitor players’ emotional arousal and engagement. One study with Tetris used alpha/theta EEG bands to dynamically adjust game difficulty in response to player stress or boredom. Other research in car-racing simulations incorporated EEG data to model emotions and adapt gameplay, improving the interactive experience.

Figure: Components of the biocybernetic loop.
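The difficulty-adjustment idea described above can be sketched as a simple rule on the alpha/theta power ratio. This is a hedged illustration only: the function `adjust_difficulty`, the thresholds `low` and `high`, and the direction of the mapping (a high ratio read as boredom, a low ratio read as overload) are assumptions made for the example and are not taken from the cited studies.

```python
def adjust_difficulty(level, alpha_power, theta_power,
                      low=0.8, high=1.5, min_level=1, max_level=10):
    """Return a new difficulty level based on the alpha/theta power ratio."""
    if theta_power <= 0:
        return level  # unusable reading: keep the current level
    ratio = alpha_power / theta_power
    if ratio > high:
        # Assumed interpretation: player is relaxed or bored, so raise difficulty.
        return min(level + 1, max_level)
    if ratio < low:
        # Assumed interpretation: player is overloaded, so lower difficulty.
        return max(level - 1, min_level)
    return level  # ratio in the comfortable band: no change


# Hypothetical band powers (arbitrary units) for three moments of play.
bored = adjust_difficulty(5, alpha_power=2.0, theta_power=1.0)
stressed = adjust_difficulty(5, alpha_power=0.5, theta_power=1.0)
steady = adjust_difficulty(5, alpha_power=1.0, theta_power=1.0)
```

A real system would calibrate the thresholds per player and smooth the ratio over time; the sketch only shows how the sensing, interpretation, and adaptation steps of the loop connect.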

5. Case studies: Emotion x AI

Continuing our exploration of emotion, we focused on emotion-centric projects that foreground human response. The most striking case studies we encountered came from the portfolio of Ninon Lizé-Masclef. Most of her experiments are situated in speculative fiction and affective feedback environments. The key experiments we considered during our exploration are listed below:

- Strange Loops (2022-23): A wearable brain-computer interface that visualizes the wearer’s emotional state using fiber optics and EEG inputs. The project bridges neuroscience, performance, and emotional computation by translating brainwave activity (gamma, beta, alpha, and theta bands) into visual displays. The process creates an externalized form of inner emotional experience. The project does not just visualize feeling but operationalizes it, making emotion a medium.

- Hyperdream (2023): A project that constructs dreamlike spatial landscapes from EEG signals collected during hypnagogic (pre-sleep) states. It transforms subconscious signals into 3D mesh geometries, offering a model for how emotional and cognitive signals can be translated into spatial form. It relies extensively on affective feedback loops, translating internal states into visual outputs.

6. The transition: why emotions?

In our own research, these projects served as critical references in our exploration of how affect might become a central axis of design. Initially, our experiments with computational tools focused on generation—parametric scripts, form-finding algorithms, and material optimizations. However, these methods consistently lacked resonance: the outputs, though innovative, often felt sterile. We began asking what made a space feel alive or emotionally charged. This question led us toward neuroscience, affective computing, and artists like Lizé-Masclef, whose work bypassed formal novelty in favor of psychological presence.

We narrowed our inquiry to emotion because it provided the clearest departure from conventional architectural metrics. Unlike form, scale, or efficiency, emotion resists quantification—but demands engagement. Projects like Strange Loops and Hyperdream revealed that emotion could be captured not only metaphorically but also computationally. They demonstrated that biosensory input could be designed with and for, not around. Their success in externalizing internal states inspired us to create systems that respond not just to the program or environment, but to the feeling itself.

7. From insight to experiment: why experiment?

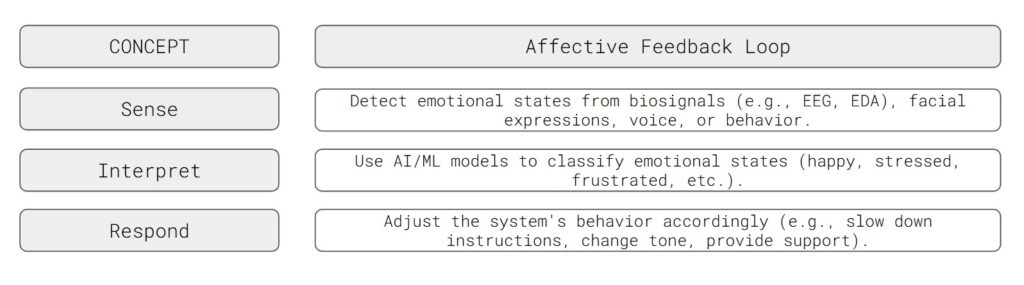

Having identified emotional computation as a critical gap in both architectural AI and design discourse, and having drawn insight from a range of pioneering case studies, we decided to move from observation to practice. We resolved to conduct our own design experiment to test and develop the Affective Feedback Loop in a tangible way. The aim was not to simulate emotion superficially, but to create a generative system that could interpret, iterate, and respond to targeted emotional states using visual design. This meant moving from theory to application: building a prototype pipeline where inputs (emotions) could be transformed into outputs (form, color, texture, composition), with embedded evaluative feedback mechanisms.

The next sections comprise the structure, methodology and process of the experiment.

8. Experimental Framework: how?

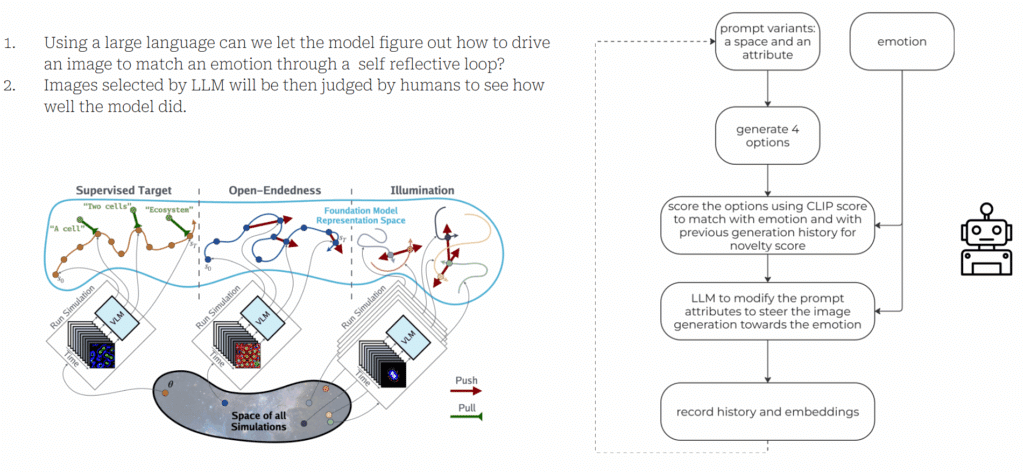

Our experiment is designed around a core question: can a machine generate imagery that feels emotionally resonant, and can it learn to improve this through feedback? To test this, we developed a pipeline in which emotional states act as generative prompts.
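As a rough illustration of what such a pipeline might look like in code, the sketch below loops over generate, evaluate, and refine steps until an emotional rating passes a threshold. It is not the implementation used in the experiment: the function `affective_feedback_loop`, its parameters, the starting prompt, and the toy stand-in functions are all hypothetical, and the image generator and evaluator are passed in as plain callables so that no specific model or API is assumed.

```python
from typing import Callable, List, Tuple


def affective_feedback_loop(
    target_emotion: str,
    generate: Callable[[str], str],
    score: Callable[[str, str], float],
    refine: Callable[[str, str, float], str],
    threshold: float = 0.8,
    max_rounds: int = 5,
) -> List[Tuple[str, float]]:
    """Iterate prompt -> image -> rating until the rating reaches the threshold."""
    prompt = f"An interior space that feels {target_emotion}"
    history = []
    for _ in range(max_rounds):
        image = generate(prompt)               # e.g. a text-to-image model
        rating = score(image, target_emotion)  # e.g. a human or model rating in [0, 1]
        history.append((prompt, rating))
        if rating >= threshold:
            break
        prompt = refine(prompt, target_emotion, rating)
    return history


# Toy stand-ins so the sketch runs without any model; all are hypothetical.
def fake_generate(prompt):
    return f"<image for: {prompt}>"


def fake_score(image, emotion):
    return min(1.0, 0.3 + 0.3 * image.count("warm"))


def fake_refine(prompt, emotion, rating):
    return prompt + ", warm light"


history = affective_feedback_loop(
    "lighthearted", fake_generate, fake_score, fake_refine
)
```

Keeping the evaluator as a separate callable makes it straightforward to swap a human rating for a model-based or biosensor-based score without changing the loop itself.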

9. The experiment: Steps and Explorations: what did we do?

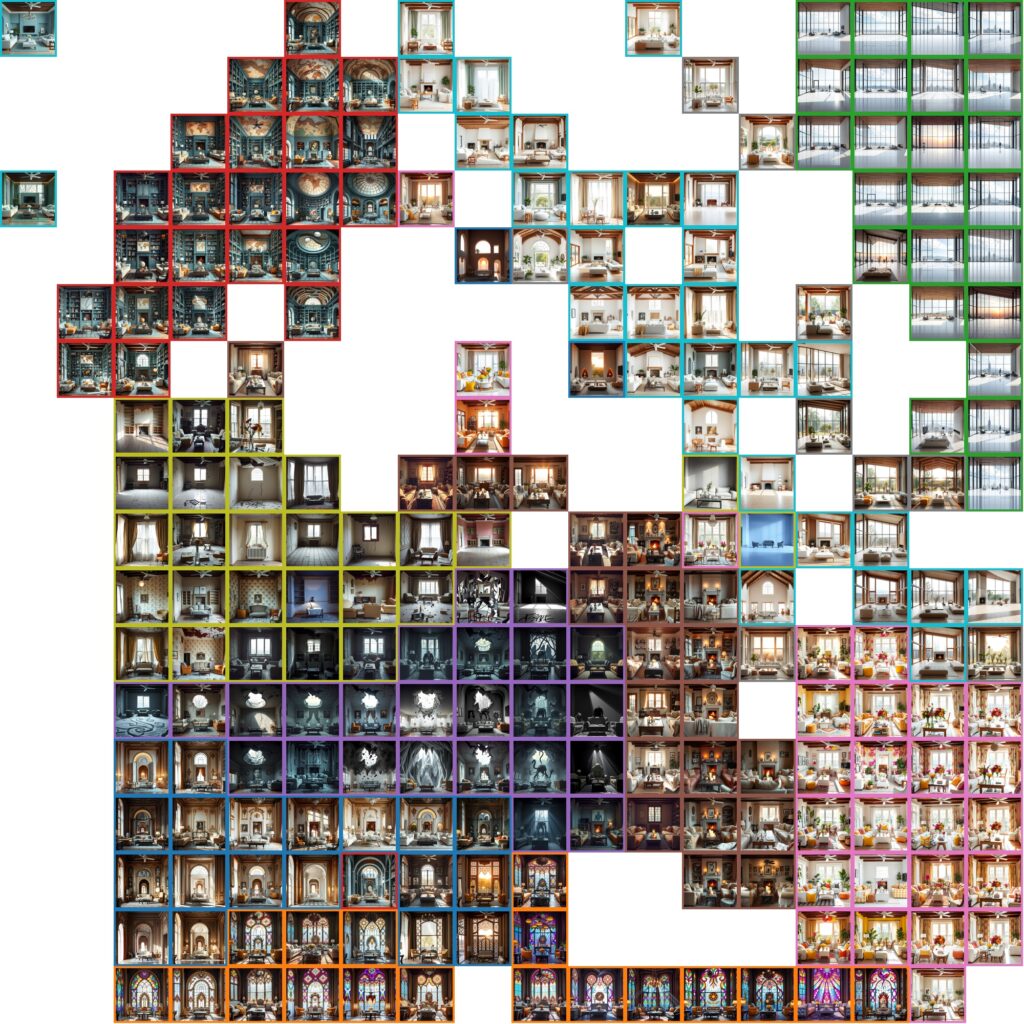

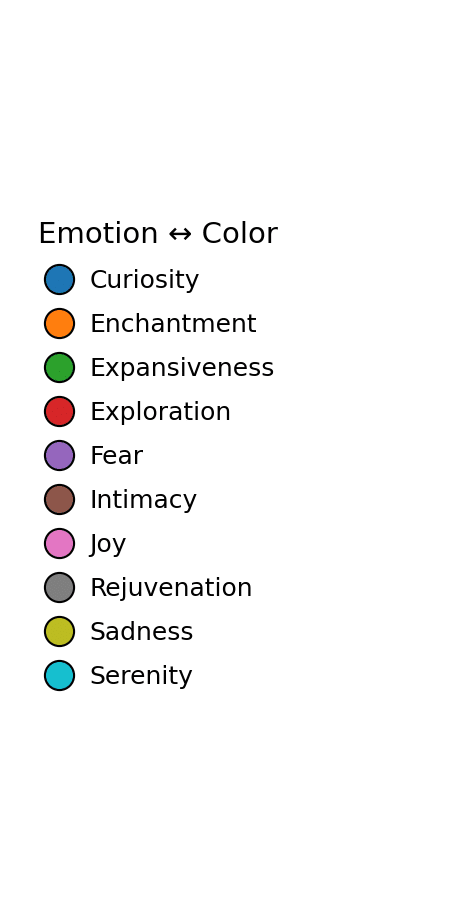

10. Experiment Results Analysis: Learnings?

- Iteration 1: Lighthearted Emotion: When targeting a lighthearted emotion, this iteration showcased the system’s ability to navigate towards emotional targets. However, there were a few key concerns:

- Subjective Validation: How do we verify that the AI’s understanding of ‘lighthearted’ matches the human understanding of it?

- Emotional Authentication: Does the system actually achieve genuine emotional understanding, or is it pattern matching?

- Iteration 2: Complex Emotions (melancholy, sadness, fear):

- Melancholy vs sadness: The system’s ability to distinguish between closely related emotions suggests that it understands the difference, but these distinctions might reflect training bias rather than understanding.

- Fear: The system’s ability to process negative emotions showcases its capacity to handle a wide spectrum of emotions.

- Methodological Concerns:

- Human Judgement as Validation: Extensive reliance on human judgement to validate the AI’s emotional understanding poses challenges:

- Subjective Bias: Human emotional interpretation varies significantly across individuals.

- Temporal Inconsistency: Human judgements may change with time.

Key Limitations: The need for human correction and the reliance on human judgement indicate that the system needs cross-verification from parameters beyond those included in the experiment. Key aspects to consider include EEG, biosignals, cross-cultural modeling, and VR feedback, which could give the system a more reliable framework.
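One way to make the subjective-bias concern above measurable is to check how far two human raters agree on the emotion label of each generated image, corrected for chance agreement. The sketch below computes Cohen's kappa for that purpose. It was not part of the experiment; the function name and the example labels are made up for illustration.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Chance-corrected agreement between two raters over the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty set of items")
    n = len(rater_a)
    # Fraction of items on which the two raters gave the same label.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from each rater's label frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (observed - expected) / (1 - expected)


# Made-up labels for four generated images, for illustration only.
rater_a = ["melancholy", "melancholy", "sadness", "sadness"]
rater_b = ["melancholy", "sadness", "sadness", "sadness"]
kappa = cohens_kappa(rater_a, rater_b)
```

A kappa near 1 indicates strong agreement and a value near 0 indicates agreement no better than chance. Low values on closely related labels such as melancholy and sadness would suggest that the human reference itself is unstable.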

11. Critical Reflections

Vision–language models can analyze an image, generate descriptive prompts, and even propose adjustments to evoke a desired emotion. In our trials, however, we observed systematic biases in how the LLMs translate emotion into architectural revisions: most suggestions revolve around tweaking color palettes or making minor spatial alterations. This highlights that, despite advances, truly novel or nuanced designs still require human judgment and curation.

At the same time, emotion itself is deeply subjective: two people may respond very differently to the same space. Recent work on coupling LLMs with non-invasive biosensors promises to capture an individual’s unique physiological reactions and feed them back into the generation loop, potentially tailoring designs far more precisely. But it also opens a profound ethical dilemma: if we can decode neural signals to create images straight from our thoughts, how do we safeguard mental privacy? Could this technology meaningfully enhance our creativity and well-being, or does it risk exposing the most intimate corners of our minds?

12. Conclusion

Although current systems cannot fully replicate human emotional understanding, they demonstrate significant progress in recognizing, interpreting, and responding to emotional states. As we continue to develop emotional AI, we must remain mindful of both its potential and its limitations. These systems can enhance human emotional experience, but they cannot replace the irreducible subjectivity of human feeling. They can simulate empathy, but they cannot feel genuine care. They can process emotional data, but they cannot experience the full richness of emotional life.

Perhaps the most important insight from this critical analysis is that the value of emotional AI lies not in its ability to replicate human emotion, but in its capacity to augment human emotional intelligence and create new possibilities for emotional expression and understanding. As we continue to explore the intersection of artificial intelligence and human emotion, we must proceed with wisdom, empathy, and deep respect for the complexity and beauty of human emotional life. The future of emotional AI lies not in the mechanization of feeling, but in the creation of technologies that honor, support, and celebrate the full spectrum of human emotional experience.

The heart of the machine may never beat with genuine feeling, but it can learn to dance in rhythm with human emotion, creating new forms of emotional experience that enrich rather than replace the profound mystery of human feeling.

References: