AI in AEC is often pitched as a design generator or a shortcut to faster output. We argued for a more grounded definition: AI’s purpose is decision support, helping teams see risk earlier and choose better options while humans remain accountable.

AEC is a chain of high-stakes decisions made under pressure: feasibility, coordination, procurement, construction risk, and long-term performance. In that context, AI is most valuable when it turns messy, fragmented information into structured signals (detecting inconsistencies, summarizing changes, prioritizing issues, and flagging risk) so teams reduce rework and improve reliability. This is what drives the “agent shift”: AI becomes an agentic layer that can plan steps, call tools, run checks, generate reports, and escalate decisions to humans, because the real time sink is often the “glue work” between tasks rather than any single design task.
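
The agentic pattern described above can be sketched in a few lines of Python. This is a minimal illustration under simplifying assumptions: the “plan” is a fixed, ordered list of check functions (real agents often choose tools dynamically, for example with a language model); the two checks, the 850 mm door width, and the 1 to 3 severity scale are hypothetical placeholders, not code requirements; and “escalation” simply means collecting findings for a person to decide on.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Finding:
    check: str
    message: str
    severity: int  # hypothetical scale: 1 = info, 2 = warning, 3 = critical


@dataclass
class Report:
    findings: list[Finding] = field(default_factory=list)
    escalated: list[Finding] = field(default_factory=list)  # a human must decide on these


# Hypothetical checks standing in for real tool calls (model checker, schedule API, ...).
def check_door_widths(model: dict) -> list[Finding]:
    # 850 mm is an illustrative placeholder, not a regulatory value.
    return [
        Finding("door_width", f"Door {d['id']} is {d['width_mm']} mm wide", 3)
        for d in model.get("doors", [])
        if d["width_mm"] < 850
    ]


def check_fire_ratings(model: dict) -> list[Finding]:
    return [
        Finding("fire_rating", f"Wall {w['id']} has no fire rating", 2)
        for w in model.get("walls", [])
        if w.get("fire_rating") is None
    ]


Check = Callable[[dict], list[Finding]]


def run_agent(model: dict, plan: list[Check], escalate_at: int = 3) -> Report:
    """Execute each planned check, collect findings, and escalate severe ones."""
    report = Report()
    for step in plan:
        for finding in step(model):
            report.findings.append(finding)
            if finding.severity >= escalate_at:
                report.escalated.append(finding)
    return report


model = {
    "doors": [{"id": "D1", "width_mm": 800}, {"id": "D2", "width_mm": 900}],
    "walls": [{"id": "W1", "fire_rating": None}, {"id": "W2", "fire_rating": "EI60"}],
}
report = run_agent(model, [check_door_widths, check_fire_ratings])
for f in report.escalated:
    print(f"ESCALATE: {f.message}")  # prints "ESCALATE: Door D1 is 800 mm wide"
```

The point of the structure is that the agent never resolves a critical finding itself: it does the glue work of running checks and assembling the report, and hands the decision to a person.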

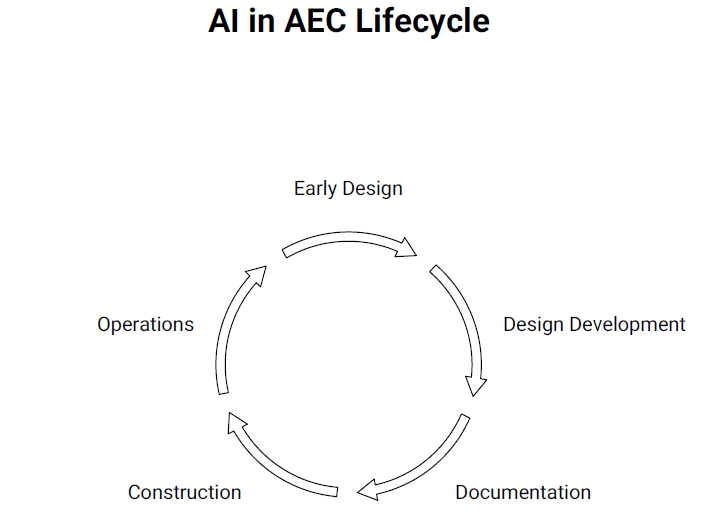

Where Does AI Add Value?

Early Design: Stress-test options against constraints such as daylight targets, spans, circulation logic, and budget envelopes.

Coordination/Docs: Detect inconsistencies, cluster clashes, and summarize “what changed” across model versions.

Construction: Transform site data into risk signals for schedule, quality, and safety checks.

Operations: Use digital twins to learn from performance and feed constraints back into design.
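
The “what changed” summary from the coordination item above can be sketched as a diff between two model snapshots. This minimal Python sketch assumes each version has been exported as a dictionary keyed by a stable element ID (such as an IFC GlobalId) with flat property values; the function names and sample data are hypothetical, and it does not attempt clash clustering or geometric comparison.

```python
from typing import Any

Snapshot = dict[str, dict[str, Any]]  # element ID -> {property: value}


def diff_versions(old: Snapshot, new: Snapshot) -> dict[str, list]:
    """Classify elements as added, removed, or modified between two versions."""
    added = sorted(new.keys() - old.keys())
    removed = sorted(old.keys() - new.keys())
    modified = []
    for eid in sorted(old.keys() & new.keys()):
        before, after = old[eid], new[eid]
        # Record only the properties whose values differ between versions.
        changes = {
            prop: (before.get(prop), after.get(prop))
            for prop in sorted(before.keys() | after.keys())
            if before.get(prop) != after.get(prop)
        }
        if changes:
            modified.append((eid, changes))
    return {"added": added, "removed": removed, "modified": modified}


def summarize(diff: dict[str, list]) -> str:
    """Render a short, human-readable change summary."""
    lines = [
        f"{len(diff['added'])} added, {len(diff['removed'])} removed, "
        f"{len(diff['modified'])} modified"
    ]
    lines += [f"  added: {eid}" for eid in diff["added"]]
    lines += [f"  removed: {eid}" for eid in diff["removed"]]
    for eid, changes in diff["modified"]:
        for prop, (was, now) in changes.items():
            lines.append(f"  {eid}.{prop}: {was!r} -> {now!r}")
    return "\n".join(lines)


v1 = {"W-01": {"type": "Wall", "height_mm": 3000}, "D-07": {"type": "Door", "width_mm": 900}}
v2 = {"W-01": {"type": "Wall", "height_mm": 3200}, "C-12": {"type": "Column", "size_mm": 400}}
print(summarize(diff_versions(v1, v2)))
```

Keying on stable IDs is the design choice that matters here: if elements are re-created between versions, every change shows up as an unrelated add and remove, which is one reason data structure deserves the same care as design.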

What It’s Not

An auteur: replacing intent with “the model’s taste.”

A novelty engine: persuasive outputs without verification or traceability.

Speed at any cost: faster delivery that increases downstream errors and liability.

Trust & Governance

In AEC, the stakes are real: if an AI output is wrong and steers the team in the wrong direction, responsibility still sits with people and organizations, not with the tool.

That’s why AI only becomes useful when it’s paired with validation and auditability. A practical way to think about this is a simple risk cycle:

Govern: decide who owns the system and who approves its outputs.

Map: identify where AI sits in the workflow and what can go wrong.

Measure: track how it performs and where it fails.

Manage: contain risk with review gates, confidence thresholds, and safe fallbacks.
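
The Manage step (review gates, confidence thresholds, and safe fallbacks) can be sketched as a small routing function that also writes an audit trail. This is a hypothetical Python sketch, not a prescribed design: it assumes each AI suggestion carries a calibrated confidence score in [0, 1], and the 0.8 threshold, the field names, and the route labels are illustrative. Note that nothing is auto-approved: high-confidence items still go to a named approver, and low-confidence items fall back to manual review.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class Suggestion:
    item_id: str
    text: str
    confidence: float  # assumed to be a calibrated score in [0, 1]


@dataclass
class AuditEntry:
    item_id: str
    route: str
    reason: str
    timestamp: str


def review_gate(
    suggestions: list[Suggestion],
    threshold: float = 0.8,  # illustrative value; set per use case from "Measure" results
    audit_log: Optional[list[AuditEntry]] = None,
) -> tuple[list[Suggestion], list[Suggestion], list[AuditEntry]]:
    """Route AI suggestions to human approval or manual fallback, logging every decision."""
    log = [] if audit_log is None else audit_log
    approval_queue: list[Suggestion] = []  # a named approver still signs off
    manual_review: list[Suggestion] = []  # safe fallback: a person redoes the check
    for s in suggestions:
        if s.confidence >= threshold:
            approval_queue.append(s)
            route = "approval_queue"
            reason = f"confidence {s.confidence:.2f} >= {threshold:.2f}"
        else:
            manual_review.append(s)
            route = "manual_review"
            reason = f"confidence {s.confidence:.2f} < {threshold:.2f}"
        log.append(AuditEntry(s.item_id, route, reason, datetime.now(timezone.utc).isoformat()))
    return approval_queue, manual_review, log


suggestions = [
    Suggestion("CLASH-101", "Duct conflicts with beam at grid C4; reroute below", 0.91),
    Suggestion("CLASH-102", "Pipe clash is likely a modelling duplicate; ignore", 0.42),
]
queue, fallback, log = review_gate(suggestions)
for entry in log:
    print(entry.item_id, entry.route, entry.reason)
```

The audit log is what makes the gate reviewable after the fact: for every item, it records which route was taken, why, and when.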

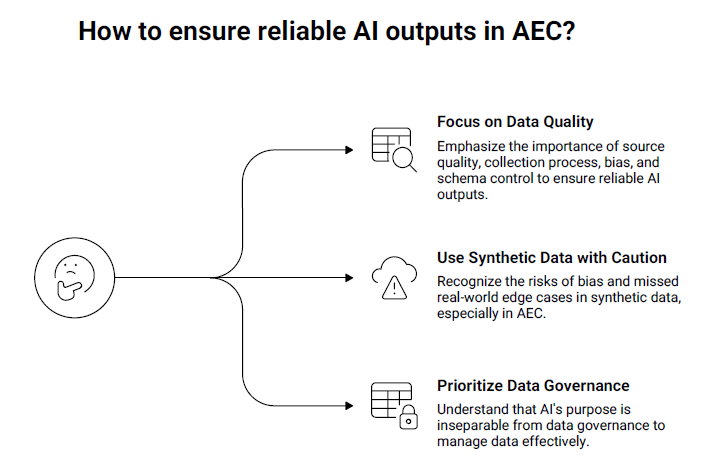

This is also where data becomes non-negotiable. Decision support is only as strong as the quality and coverage of the data behind it. Synthetic data can help fill gaps, but it can also introduce bias or miss real-world edge cases, so it needs to be checked carefully against real data.
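
As a first pass, “checking against reality” for a single numeric feature can be sketched by comparing synthetic values with a sample of real measurements. This minimal Python sketch assumes such a real sample exists; the function name `compare_feature` and the floor-to-floor height numbers are hypothetical. These univariate checks will not catch unrealistic combinations of features, which need multivariate checks or expert review.

```python
from statistics import mean, pstdev


def compare_feature(real: list[float], synthetic: list[float]) -> dict[str, float]:
    """Simple univariate checks of synthetic values against observed real values."""
    lo, hi = min(real), max(real)
    # Share of synthetic values outside anything observed (possible generator artefacts).
    outside = sum(1 for x in synthetic if x < lo or x > hi) / len(synthetic)
    # Fraction of the observed real range that the synthetic data spans;
    # a low value suggests real-world extremes (edge cases) are missing.
    overlap = min(max(synthetic), hi) - max(min(synthetic), lo)
    covered = max(overlap, 0.0) / (hi - lo) if hi > lo else 1.0
    real_sd = pstdev(real)
    return {
        "mean_shift": mean(synthetic) - mean(real),
        "std_ratio": pstdev(synthetic) / real_sd if real_sd else float("nan"),
        "share_outside_real_range": outside,
        "real_range_covered": covered,
    }


# Hypothetical example: measured vs synthetic floor-to-floor heights in metres.
real_heights = [3.0, 3.2, 3.4, 3.6, 3.8, 4.0]
synthetic_heights = [3.3, 3.4, 3.5, 3.6, 3.7, 5.0]
report = compare_feature(real_heights, synthetic_heights)
for name, value in report.items():
    print(f"{name}: {value:.2f}")
```

In this example, one synthetic value (5.0 m) lies outside anything measured, and the synthetic data never goes below 3.3 m, so the low end of the real range is not represented at all.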

For those working in Europe, the regulatory mindset (notably the EU AI Act) reinforces the same point: AI isn’t just “a tool you use” but a system classified by risk level, with a chain of responsibility across everyone involved.

Takeaways

Start with decisions, not deliverables.

Treat data structure as design work.

If it can’t be audited, don’t ship it.