Building Together, One Word at a Time

Aim

The aim of this project is to enhance human-robot collaboration by developing a user-friendly system that creates an inclusive environment. Text-to-speech (TTS) and speech-to-text (STT) systems let humans and robots communicate in an intuitive and engaging way.

Relevance

One major issue we observed was that the human participant did not fully understand the instructions and was unsure what actions to take. This was not the only such case we encountered. Collaborating with robots can be intimidating for most people, which tends to hold back the development of new workflows and technologies. While robots can be useful in many disciplines, becoming proficient with them requires a considerable amount of training.

To address these challenges, we developed a program that assists the user more effectively. It gives the human participant clear, step-by-step instructions and lets the user give commands to the robot, such as continuing the task, stopping the process, or asking for help when needed. This improved the collaboration between the human and the robot, making task execution smoother and more efficient.

Workflow Overview

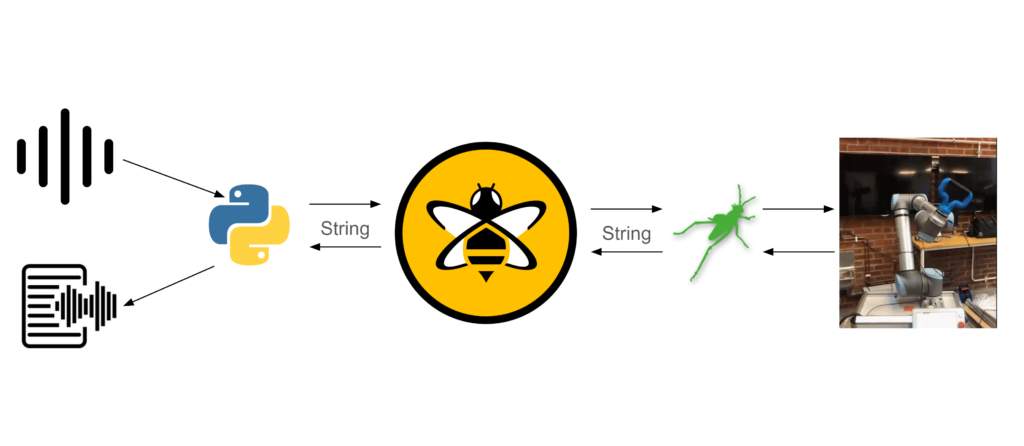

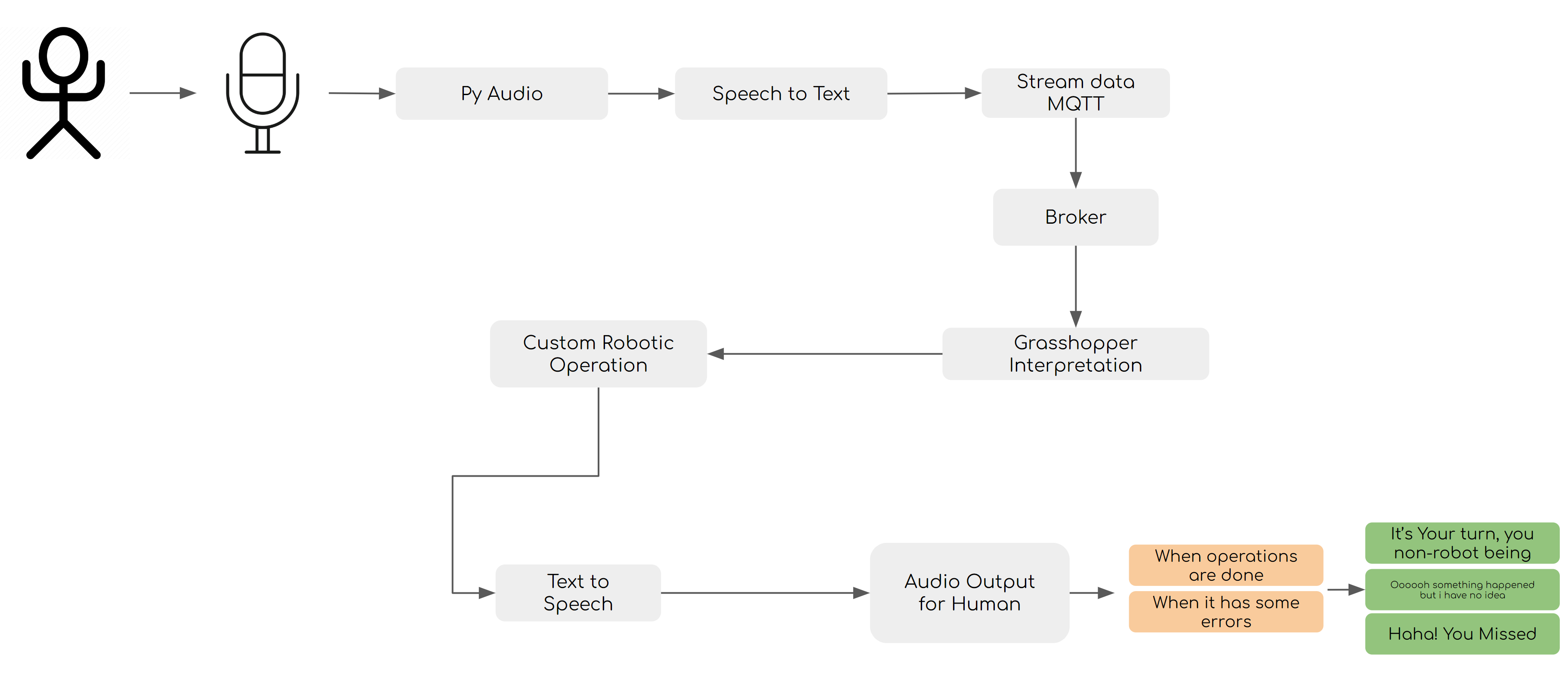

This system facilitates interaction between a user and a robot by integrating speech recognition, text-to-speech (TTS), and MQTT communication between them. It consists of three main components: audio input from a microphone, processing of the spoken commands, and publishing the resulting messages to an MQTT broker.

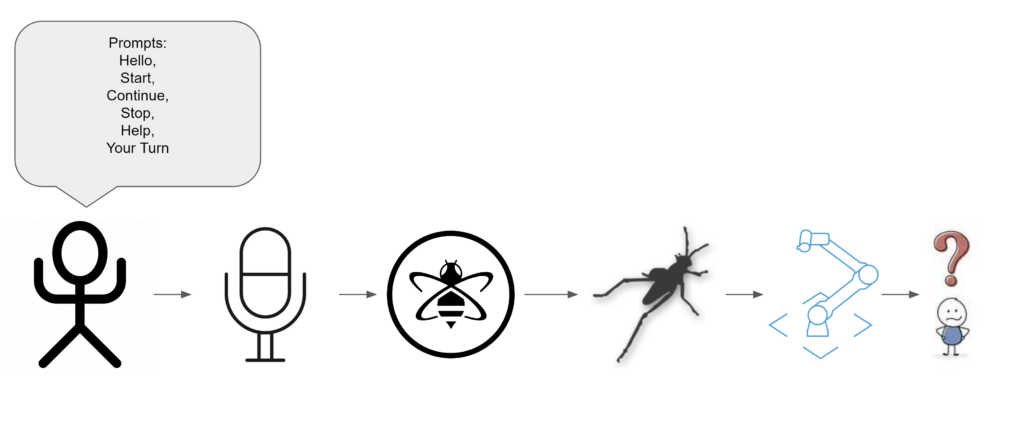

The diagram above illustrates how a user controls a robotic operation with voice commands. A microphone records the user’s voice, PyAudio captures the audio input, and a speech-to-text step converts it into text.
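PyAudio only delivers raw audio, so something has to decide where a spoken command starts and ends before the audio reaches speech-to-text. The source does not say how this was done; the sketch below swaps in a simple energy-based check as an assumption. It expects 16-bit mono PCM chunks (what a PyAudio stream opened with `format=pyaudio.paInt16` and `channels=1` returns from `stream.read()`), and the threshold and silence length are hypothetical values to tune.

```python
import math
from array import array
from typing import Iterable

# Hypothetical threshold; depends on the microphone, gain, and room noise.
SPEECH_RMS_THRESHOLD = 500.0


def chunk_rms(chunk: bytes) -> float:
    """Root-mean-square amplitude of 16-bit native-endian PCM samples."""
    samples = array("h")
    samples.frombytes(chunk)
    if len(samples) == 0:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def is_speech(chunk: bytes, threshold: float = SPEECH_RMS_THRESHOLD) -> bool:
    """Crude voice-activity check: a chunk counts as speech if it is loud enough."""
    return chunk_rms(chunk) >= threshold


def collect_utterance(chunks: Iterable[bytes], max_silent_chunks: int = 3) -> bytes:
    """Join chunks from the first loud one until `max_silent_chunks` quiet chunks
    in a row, giving roughly one spoken command's worth of audio."""
    utterance = bytearray()
    started = False
    silent_run = 0
    for chunk in chunks:
        if is_speech(chunk):
            started = True
            silent_run = 0
            utterance.extend(chunk)
        elif started:
            # Keep trailing quiet audio so the end of the word is not clipped.
            silent_run += 1
            utterance.extend(chunk)
            if silent_run >= max_silent_chunks:
                break
    return bytes(utterance)
```

In a live setup, `chunks` could be a generator that calls `stream.read(1024)` in a loop, and the returned bytes would go to whichever speech-to-text engine is used.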

The text is then published over MQTT, a lightweight publish/subscribe messaging protocol, to a broker, which acts as an intermediary and forwards it to the subscribed destination. In Grasshopper, a visual programming language, the received text is interpreted and triggers a custom robotic operation based on the command.
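The broker's forwarding role can be illustrated without a network. The sketch below is a toy in-memory stand-in, not the MQTT protocol itself and not the client library this project used (a real setup would typically pair a client such as paho-mqtt with a broker such as Mosquitto). It does follow MQTT's topic-filter rules, where `+` matches one topic level and `#` matches any remaining levels, so routing behaves as it would on a real broker. The topic name `robot/commands` is hypothetical.

```python
from typing import Callable, List, Tuple

Callback = Callable[[str, str], None]


def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if `topic` matches an MQTT topic filter.

    '+' matches exactly one level, '#' (last level only) matches the parent
    level and any number of child levels, and first-level wildcards do not
    match topics starting with '$' (broker-internal topics).
    """
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    if topic.startswith("$") and filter_levels[0] in ("+", "#"):
        return False
    for i, level in enumerate(filter_levels):
        if level == "#":
            return True
        if i >= len(topic_levels):
            return False
        if level != "+" and level != topic_levels[i]:
            return False
    return len(filter_levels) == len(topic_levels)


class InMemoryBroker:
    """Toy broker: forwards each published message to every matching subscriber.
    No networking, QoS, retained messages, or sessions."""

    def __init__(self) -> None:
        self._subscriptions: List[Tuple[str, Callback]] = []

    def subscribe(self, topic_filter: str, callback: Callback) -> None:
        self._subscriptions.append((topic_filter, callback))

    def publish(self, topic: str, payload: str) -> int:
        """Deliver `payload` to matching subscribers; return how many received it."""
        delivered = 0
        for topic_filter, callback in self._subscriptions:
            if topic_matches(topic_filter, topic):
                callback(topic, payload)
                delivered += 1
        return delivered


if __name__ == "__main__":
    broker = InMemoryBroker()
    # Hypothetical: the Grasshopper side listens for recognized commands.
    broker.subscribe("robot/commands", lambda topic, msg: print(f"{topic}: {msg}"))
    broker.publish("robot/commands", "YOUR TURN")  # prints "robot/commands: YOUR TURN"
```

Decoupling through topics is what lets the speech program and Grasshopper run independently: neither needs to know the other's address, only the agreed topic name.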

The robot executes the task and provides feedback to the user. This feedback is converted from text to speech and played back as audio, and the response varies with the situation. If there are errors or issues, the robot may respond with “What’s wrong? Do you need help, you non-robotic being?” or “Need a hand? Unfortunately, I’m a robotic arm and not a hand.”
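The feedback step amounts to mapping a robot status to a sentence and handing it to a TTS engine. In this sketch the status names, the `FEEDBACK_PHRASES` table, and the placeholder phrases are hypothetical; only the two error lines come from the text above. The `speak` callback defaults to `print`; a TTS library such as pyttsx3 (`engine.say(text)` followed by `engine.runAndWait()`) could be wrapped in it instead.

```python
import random
from typing import Callable, Dict, List, Optional

FEEDBACK_PHRASES: Dict[str, List[str]] = {
    # Placeholder phrase (hypothetical).
    "task_done": ["Done. Your turn."],
    # The two error responses quoted in the text.
    "error": [
        "What's wrong? Do you need help, you non-robotic being?",
        "Need a hand? Unfortunately, I'm a robotic arm and not a hand.",
    ],
}
FALLBACK_PHRASE = "Sorry, I have nothing to report."


def feedback_text(status: str, rng: Optional[random.Random] = None) -> str:
    """Pick a response for `status`, varying between phrases when several exist."""
    phrases = FEEDBACK_PHRASES.get(status)
    if not phrases:
        return FALLBACK_PHRASE
    chooser = rng if rng is not None else random
    return chooser.choice(phrases)


def give_feedback(status: str, speak: Callable[[str], None] = print) -> str:
    """Turn a robot status into text, pass it to the TTS callback, and return it."""
    text = feedback_text(status)
    speak(text)
    return text
```

Picking randomly among several phrases for the same status keeps repeated errors from sounding mechanical, which fits the playful tone of the quoted responses.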

Natural speech control and spoken feedback can make the interaction intuitive and efficient.

Behaviors

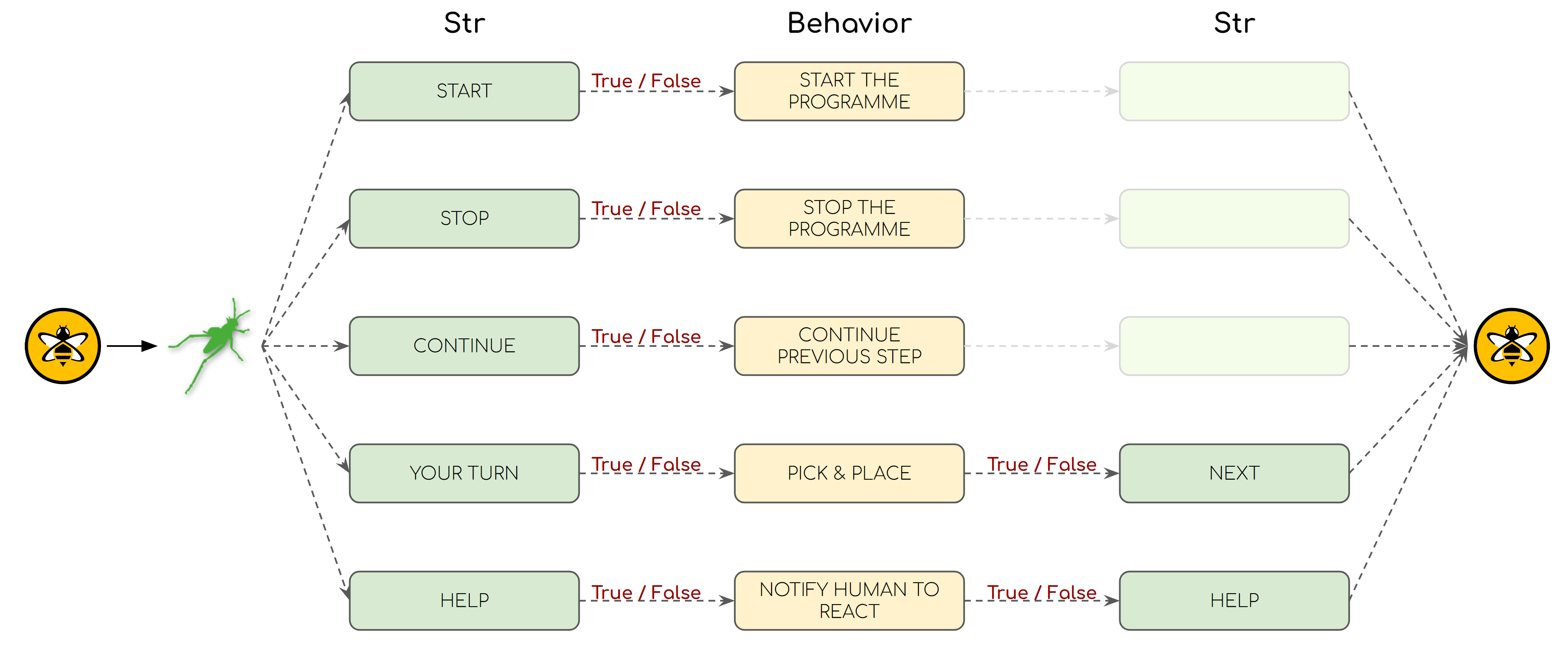

The system receives commands from the user as strings (Str): “START,” “STOP,” “CONTINUE,” “YOUR TURN,” and “HELP.” The robot interprets each command in a specific way:

- When the user sends “START,” the robot checks a condition (True/False) and starts the program if it is true.

- When the robot receives “STOP,” it checks another condition and terminates the program if that condition is true.

- When the user sends “CONTINUE,” the robot continues the previous step if the condition is met.

- “YOUR TURN” initiates a pick-and-place operation; the robot proceeds to the next step if the condition is true.

- When the user requests “HELP,” the robot checks the condition and, if it is true, alerts a human to take action.
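Speech-to-text output rarely matches a command string exactly, so recognized text has to be reduced to one of the five commands before it is published. The source does not say how matching was done; the sketch below assumes a simple keyword approach. It normalizes the transcript and looks for whole-word matches, checking `STOP` and `HELP` first (a safety-minded assumption) so that they win when a sentence contains several commands.

```python
import re
from typing import Optional

# Checked in priority order: STOP and HELP first, so "stop, don't continue"
# resolves to STOP rather than CONTINUE.
COMMANDS = ("STOP", "HELP", "YOUR TURN", "CONTINUE", "START")


def normalize(transcript: str) -> str:
    """Uppercase, replace anything but A-Z with spaces, collapse whitespace."""
    return " ".join(re.sub(r"[^A-Z]", " ", transcript.upper()).split())


def parse_command(transcript: str) -> Optional[str]:
    """Map a free-form STT transcript to one command string, or None if absent."""
    text = normalize(transcript)
    for command in COMMANDS:
        # \b anchors give whole-word matches, so "HELPFUL" does not trigger HELP.
        if re.search(rf"\b{command}\b", text):
            return command
    return None
```

Returning `None` for unrecognized speech lets the caller ask the user to repeat instead of sending an arbitrary string to the robot.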

Together, these commands and their corresponding behaviors form an interaction loop that lets the robot perform tasks and respond appropriately to each command.
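The command, condition, and behavior pattern in the list above can be modelled as a table of guarded actions processed in a loop. The source does not specify the conditions, so the guards here (for example, `STOP` only applies while the program is running) and the `RobotState` fields are hypothetical; each action only records what the robot would do.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Iterable, List, Optional, Tuple


@dataclass
class RobotState:
    """Hypothetical robot state that the conditions inspect and the actions update."""
    running: bool = False
    step: int = 0
    log: List[str] = field(default_factory=list)


def _start(s: RobotState) -> None:
    s.running = True
    s.log.append("program started")


def _stop(s: RobotState) -> None:
    s.running = False
    s.log.append("program terminated")


def _continue(s: RobotState) -> None:
    s.log.append(f"continuing from step {s.step}")


def _your_turn(s: RobotState) -> None:
    s.log.append(f"pick-and-place for step {s.step}")
    s.step += 1  # proceed to the next step


def _help(s: RobotState) -> None:
    s.log.append("alerting a human")


# command -> (condition, behavior); the conditions are assumptions.
BEHAVIORS: Dict[str, Tuple[Callable[[RobotState], bool], Callable[[RobotState], None]]] = {
    "START": (lambda s: not s.running, _start),
    "STOP": (lambda s: s.running, _stop),
    "CONTINUE": (lambda s: s.running, _continue),
    "YOUR TURN": (lambda s: s.running, _your_turn),
    "HELP": (lambda s: True, _help),
}


def run_interaction(commands: Iterable[str], state: Optional[RobotState] = None) -> RobotState:
    """Process commands in order: check each command's condition, then act."""
    if state is None:
        state = RobotState()
    for command in commands:
        behavior = BEHAVIORS.get(command)
        if behavior is None:
            state.log.append(f"ignored unknown command {command!r}")
            continue
        condition, action = behavior
        if condition(state):
            action(state)
        else:
            state.log.append(f"condition false for {command}")
    return state
```

Each string passed to `run_interaction` is assumed to be an already-recognized command. Keeping the conditions in a table makes it easy to add a new behavior without touching the loop.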

Conclusions

It was exciting to see how intuitive it could be to control the robot with our voices, and how this approach can help create an inclusive environment. Although the overall system worked, it still needs to be tested with a large number of users, both to improve its speech recognition and to identify new features, such as pointing to specific blocks to move when the user feels lost. The workflow could also be implemented and evaluated in other design contexts.