Can we find a systematic approach for extracting the shape of cities?

City.Style.GAN is a small research project on generative networks capable of generating new building massings that follow the urban form of a city.

Methodology:

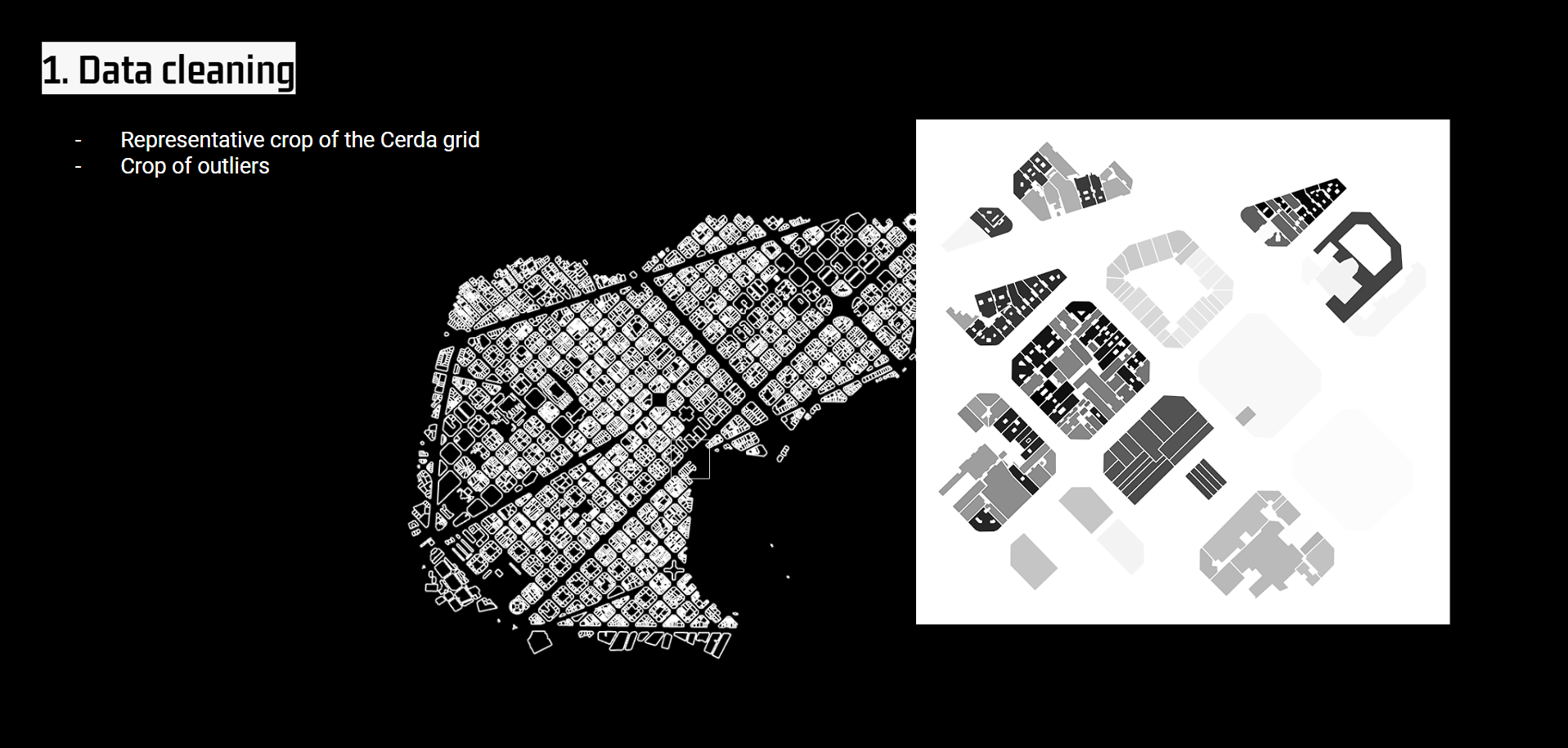

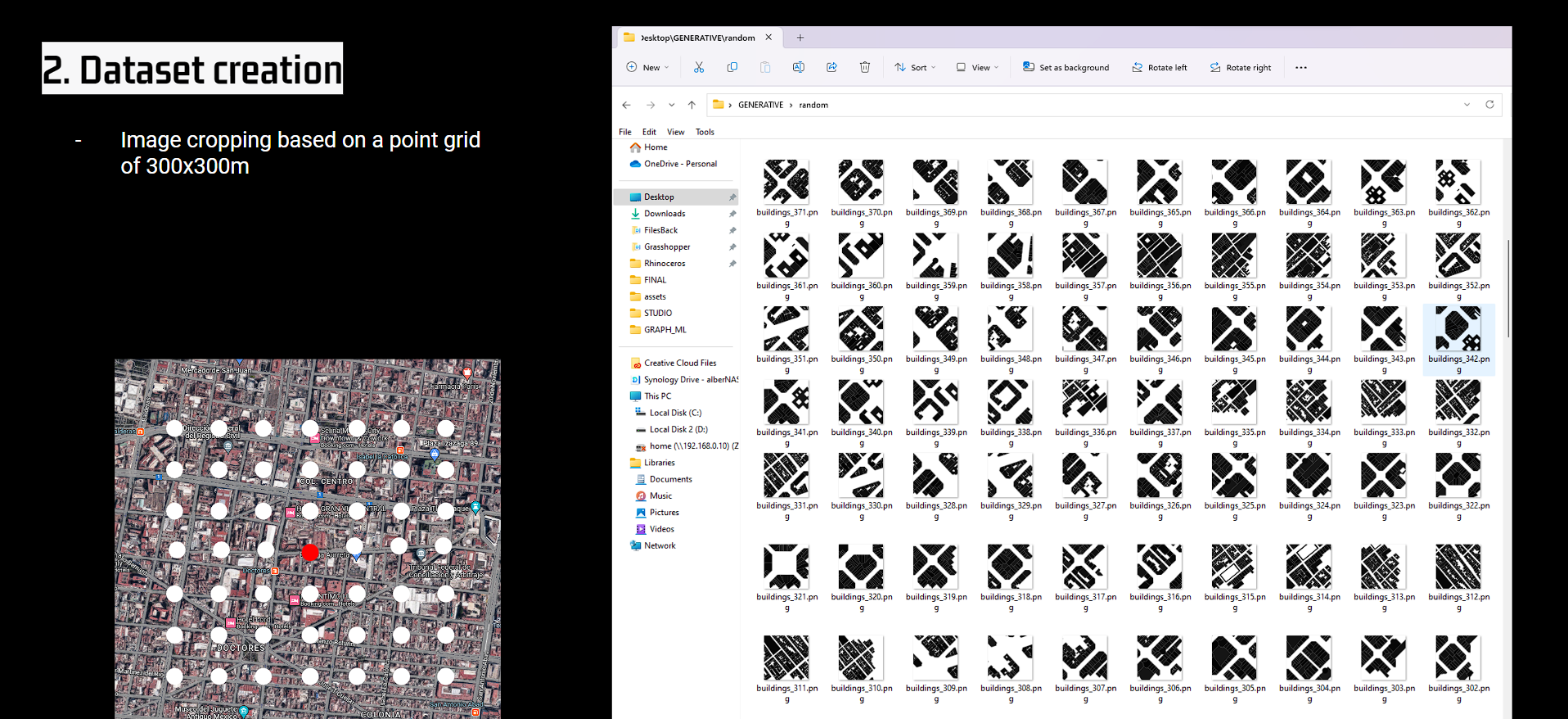

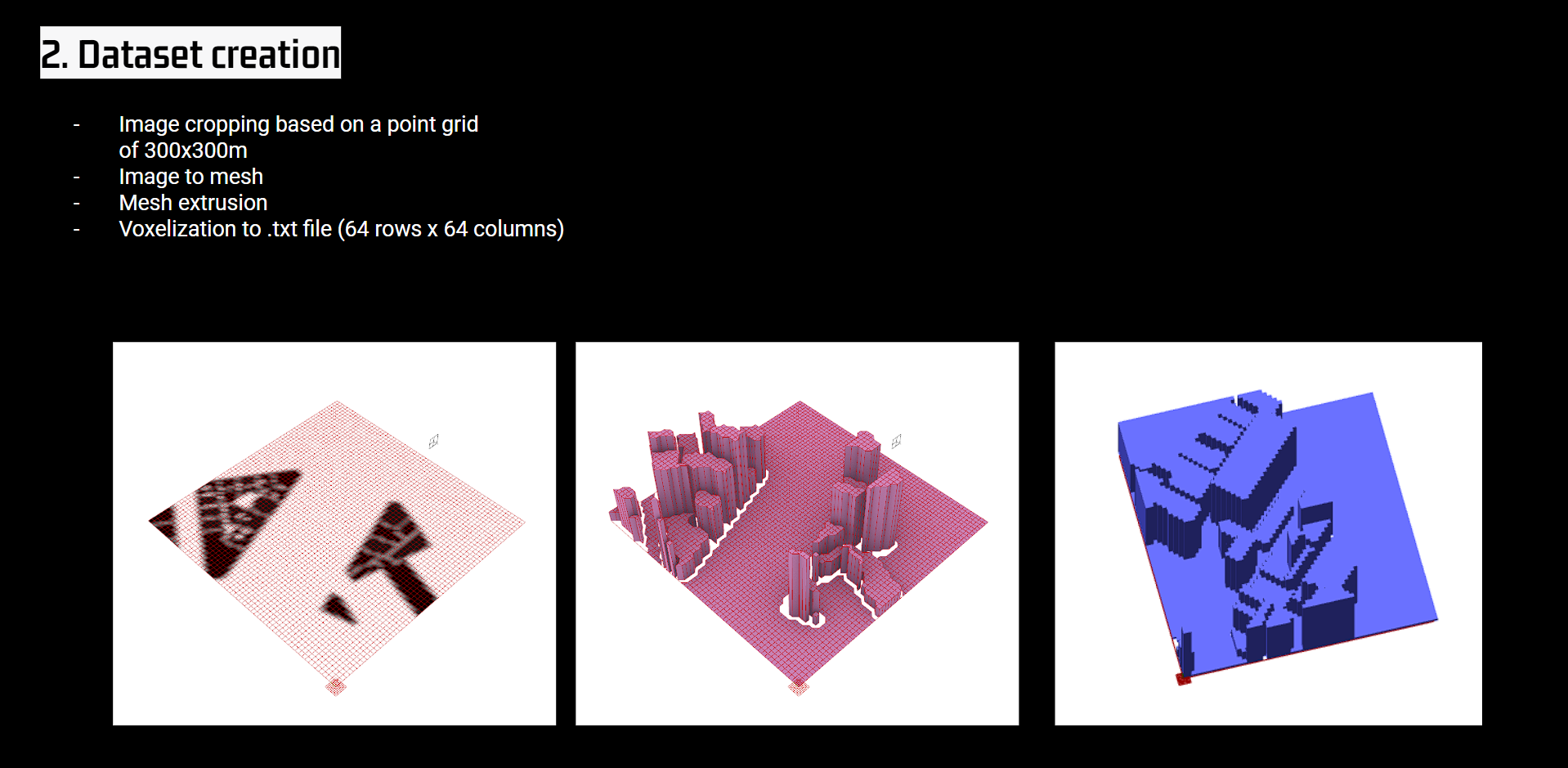

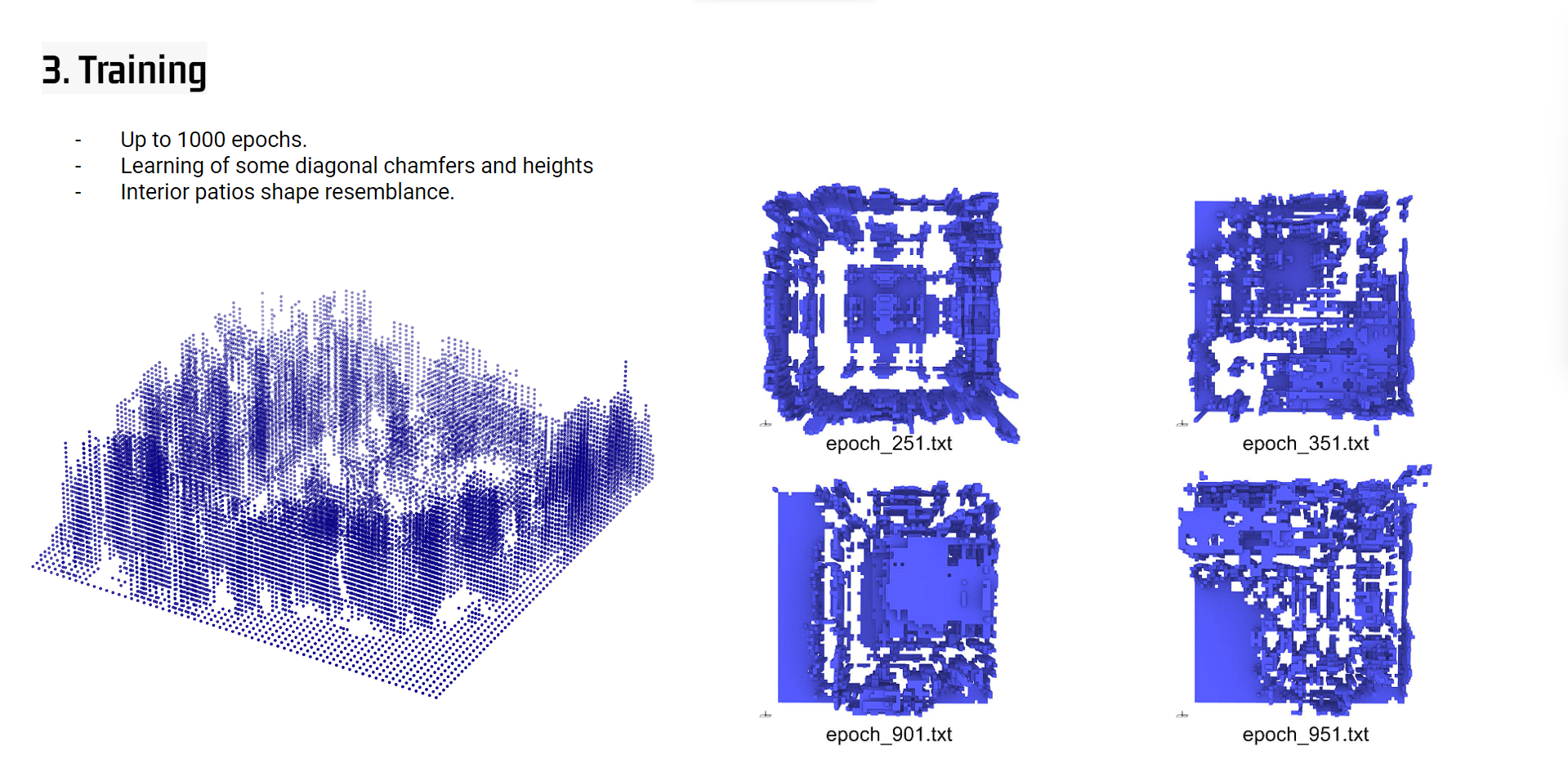

As a first approximation to urban generation, large portions of three urban tissues are extracted with OSMnx and brought into Grasshopper, where each city is voxelized and then used to train a 3D Generative Adversarial Network.

3DGAN

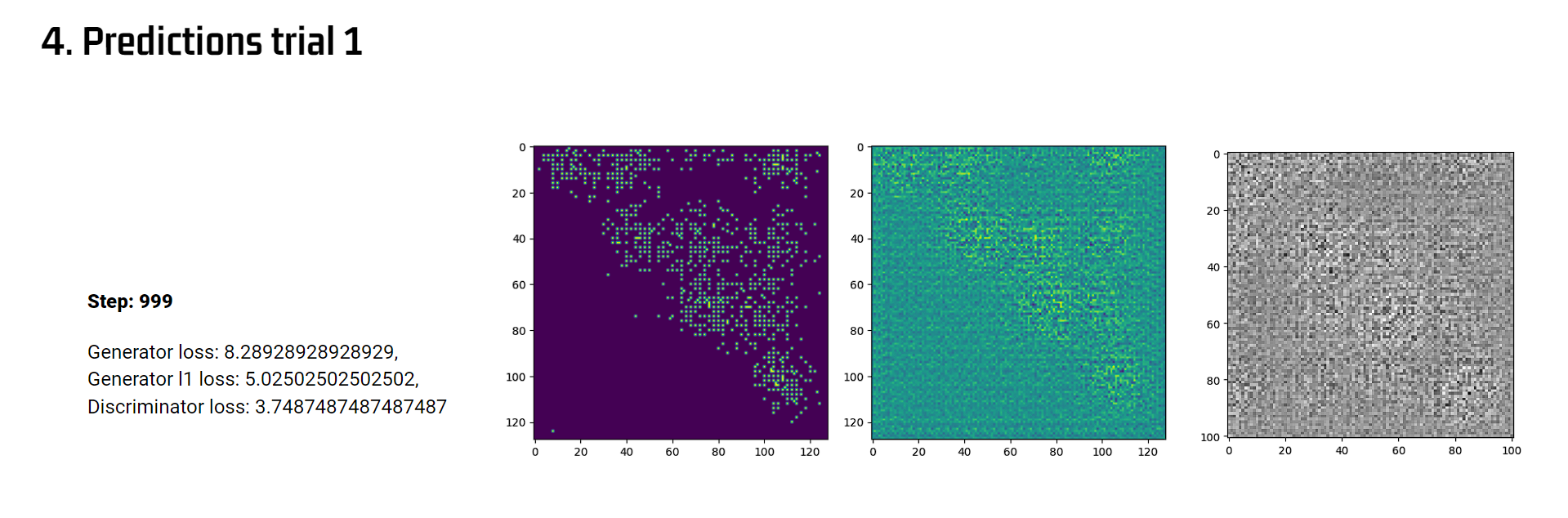

First approach: heightfield image to voxel generation.
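To make the heightfield-to-voxel idea concrete, here is a minimal sketch in Python with NumPy. It is not the Grasshopper definition used in the project. The function names, the normalization of heights to the range 0 to 1, and the 64-level depth are assumptions made for illustration.

```python
import numpy as np


def heightfield_to_voxels(heightfield, depth=64):
    """Turn a 2D heightfield (values in [0, 1]) into a boolean voxel grid.

    A voxel at (row, col, z) is filled when z lies below the column height.
    Hypothetical helper, not part of the original workflow.
    """
    heightfield = np.asarray(heightfield, dtype=float)
    # Number of filled voxels per column, rounded to the nearest level.
    levels = np.rint(np.clip(heightfield, 0.0, 1.0) * depth).astype(int)
    z = np.arange(depth)
    # Broadcasting: (H, W, 1) compared with (depth,) gives (H, W, depth).
    return levels[:, :, None] > z


def voxels_to_heightfield(voxels):
    """Collapse a solid-column voxel grid back to normalized heights."""
    return voxels.sum(axis=2) / voxels.shape[2]


# Example: one 10 x 10 footprint at half the maximum height.
hf = np.zeros((64, 64))
hf[10:20, 10:20] = 0.5
vox = heightfield_to_voxels(hf)
print(vox.shape, int(vox.sum()))
```

A heightfield can only describe solid columns, so overhangs and courtyards covered at upper levels cannot be represented this way.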

Moving to a new model

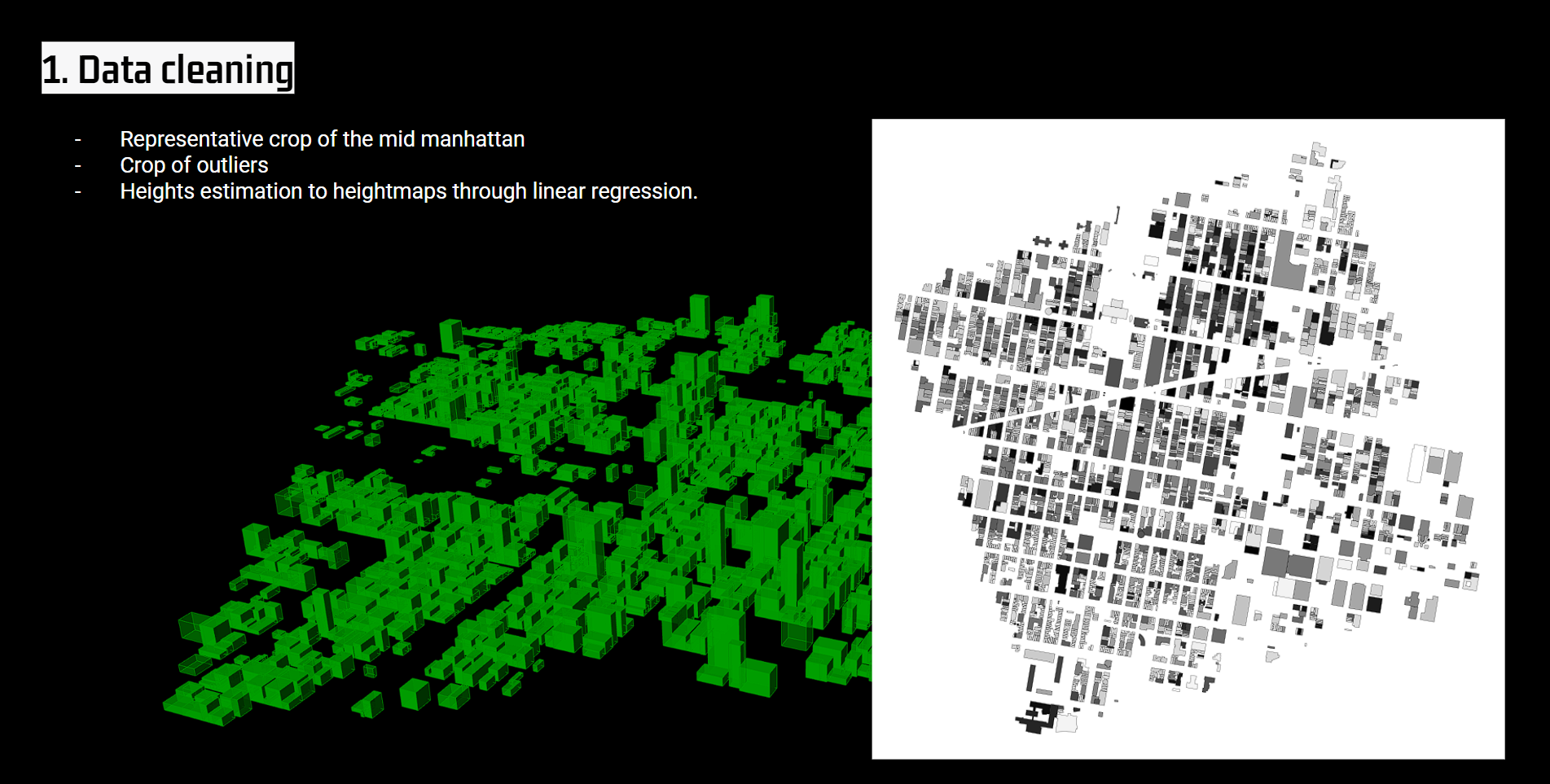

Later on, because voxel samples are limited to a maximum resolution of 64×64×64, the research is narrowed down to building shape generation at the block scale, that is, the generation of new buildings inside each city block from the 3D data of the city.
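A quick calculation illustrates why a fixed 64×64×64 grid favors the block scale. The extents below (1000 m for an urban tissue, 100 m for a single block) are hypothetical values chosen for illustration, not measurements from the project.

```python
def voxel_edge_m(extent_m, resolution=64):
    """Edge length in metres of one voxel when extent_m spans `resolution` voxels."""
    return extent_m / resolution


# Hypothetical extents, for illustration only.
for label, extent in [("urban tissue", 1000.0), ("single block", 100.0)]:
    edge = voxel_edge_m(extent)
    print(f"{label}: {edge:.2f} m per voxel, {64 ** 3:,} voxels in total")
```

Under these assumptions a voxel covers roughly 15.6 m at tissue scale, which is coarser than many building footprints, but about 1.6 m at block scale.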

cGAN

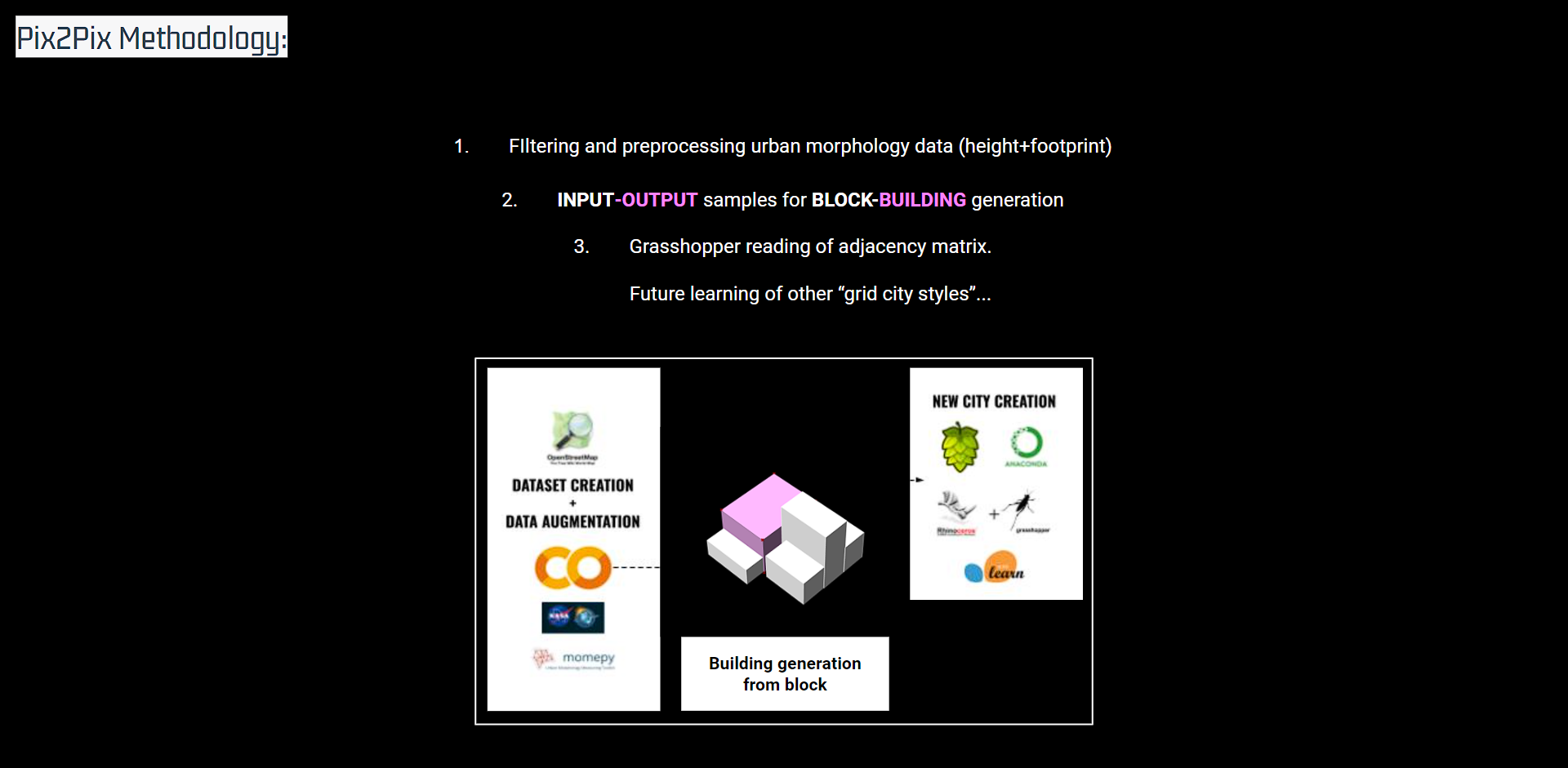

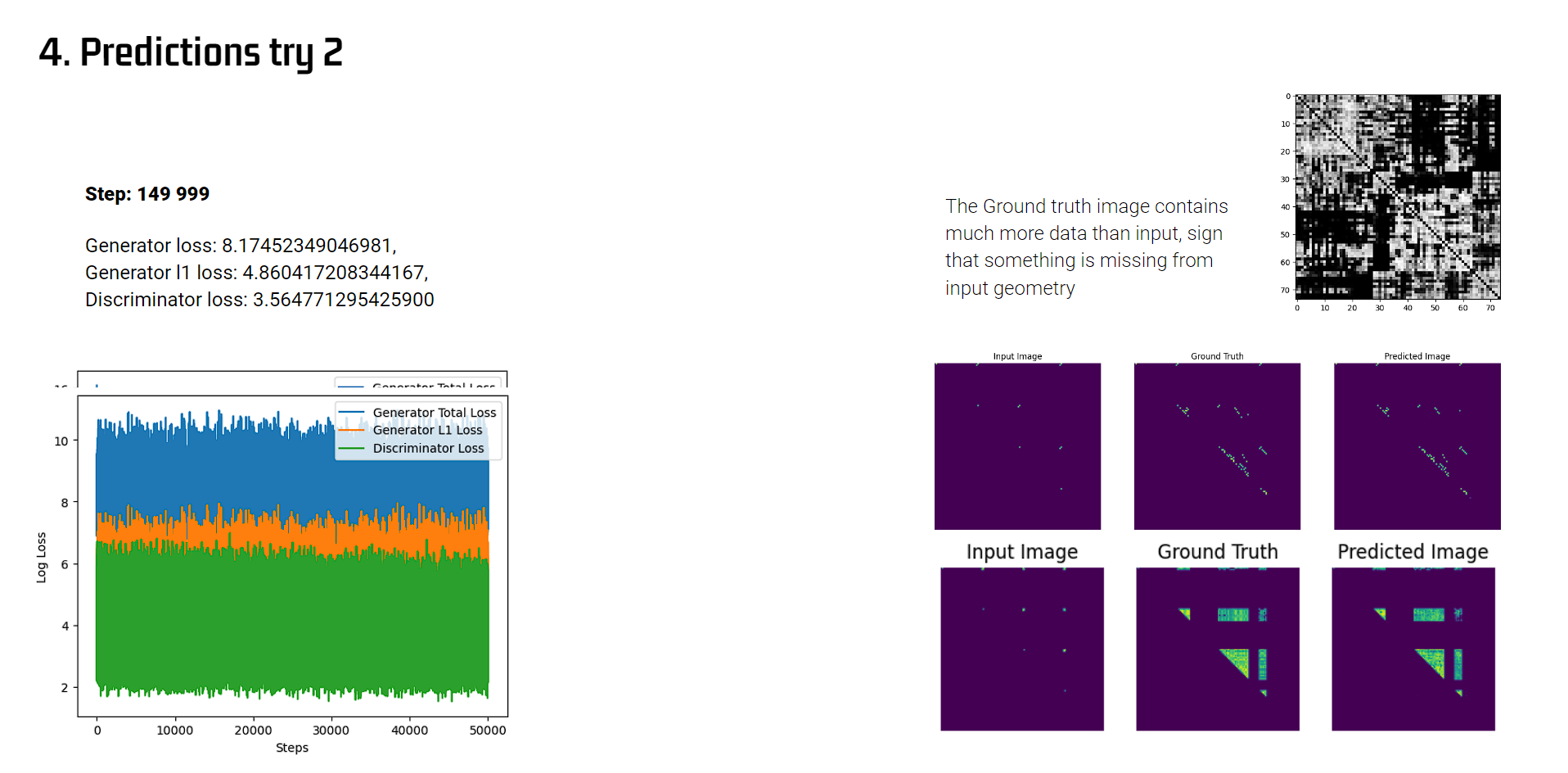

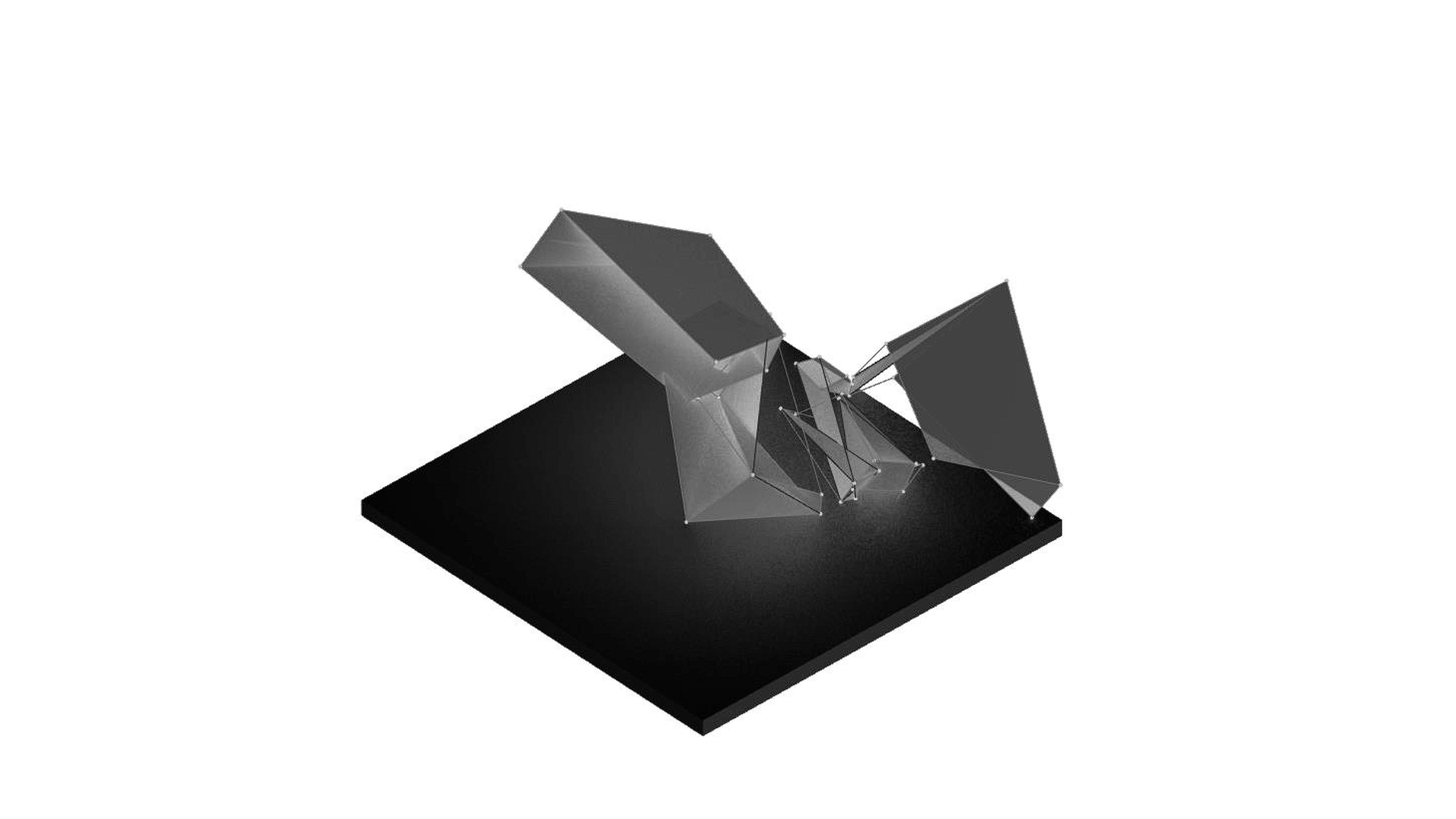

The selected cGAN follows the pix2pix architecture, in which two CNN models work against each other in an adversarial manner.

The generator produces synthetic images from random noise or another input representation; here the input is the rest of the buildings belonging to the block. The discriminator is trained to score the ground-truth image of the single building against the one produced by the generator, and both networks improve until the generated geometry is indistinguishable from the ground truth.
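The adversarial scoring described above can be sketched as two loss functions. This is a NumPy illustration of the objective from the pix2pix paper (binary cross-entropy plus an L1 term weighted by 100), not the training code used in this project. The function names and the assumption that the discriminator outputs probabilities are illustrative.

```python
import numpy as np


def bce(prob, target, eps=1e-7):
    """Mean binary cross-entropy between predicted probabilities and targets."""
    prob = np.clip(prob, eps, 1.0 - eps)
    losses = -(target * np.log(prob) + (1.0 - target) * np.log(1.0 - prob))
    return float(np.mean(losses))


def discriminator_loss(d_real, d_fake):
    """The discriminator should score real pairs as 1 and generated pairs as 0."""
    return bce(d_real, np.ones_like(d_real)) + bce(d_fake, np.zeros_like(d_fake))


def generator_loss(d_fake, generated, target, l1_weight=100.0):
    """The generator tries to fool the discriminator and stay close to the target."""
    adversarial = bce(d_fake, np.ones_like(d_fake))
    l1 = float(np.mean(np.abs(target - generated)))
    return adversarial + l1_weight * l1


# Toy example: an undecided discriminator and a generator output that is
# slightly off target (placeholder arrays, not project data).
d_scores = np.full((4, 4), 0.5)
target_img = np.zeros((128, 128))
generated_img = np.full((128, 128), 0.1)
print(discriminator_loss(d_scores, d_scores))
print(generator_loss(d_scores, generated_img, target_img))
```

The L1 term keeps the generated image close to the ground truth pixel by pixel, while the adversarial term pushes it toward outputs the discriminator accepts as real.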

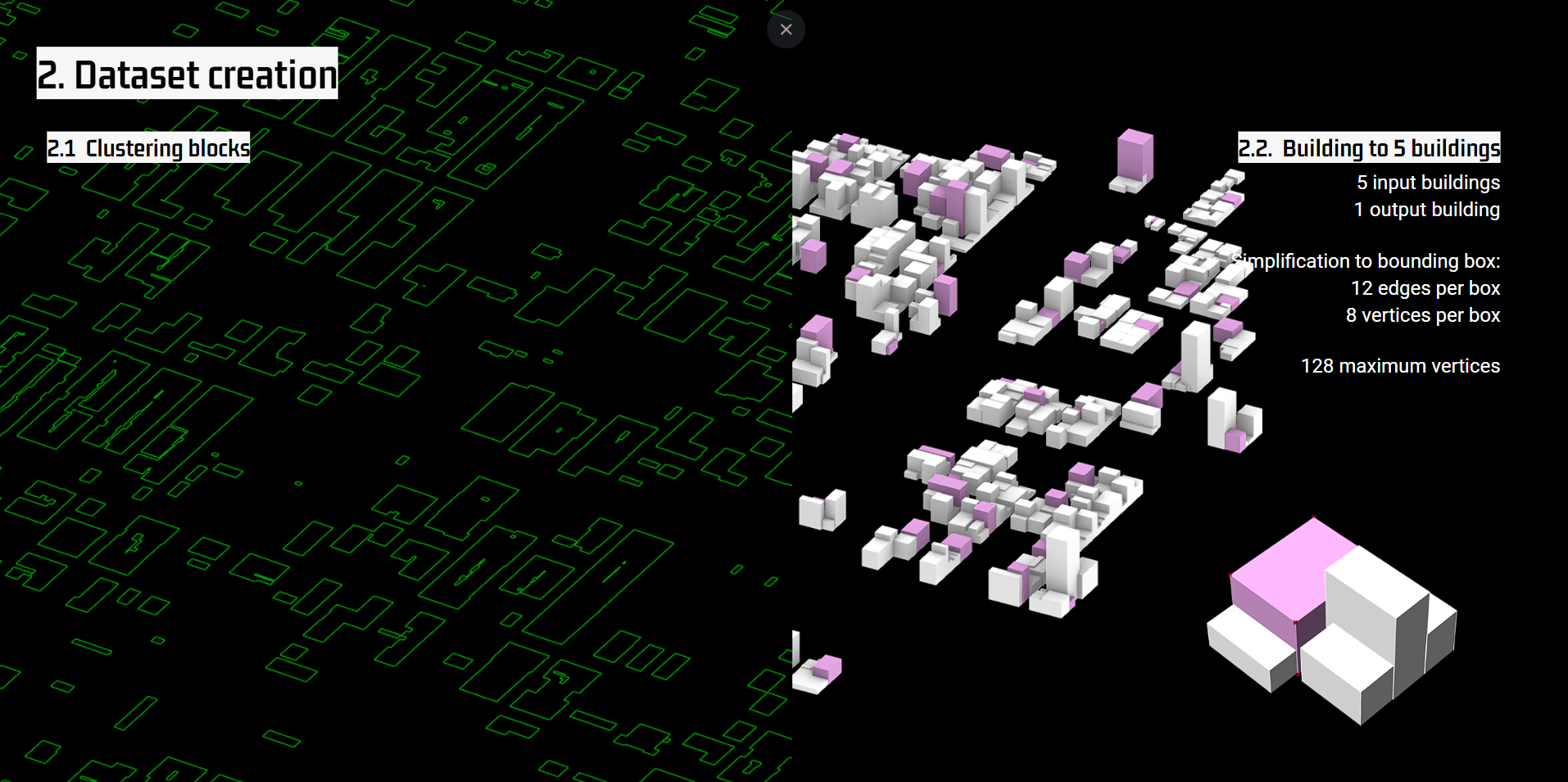

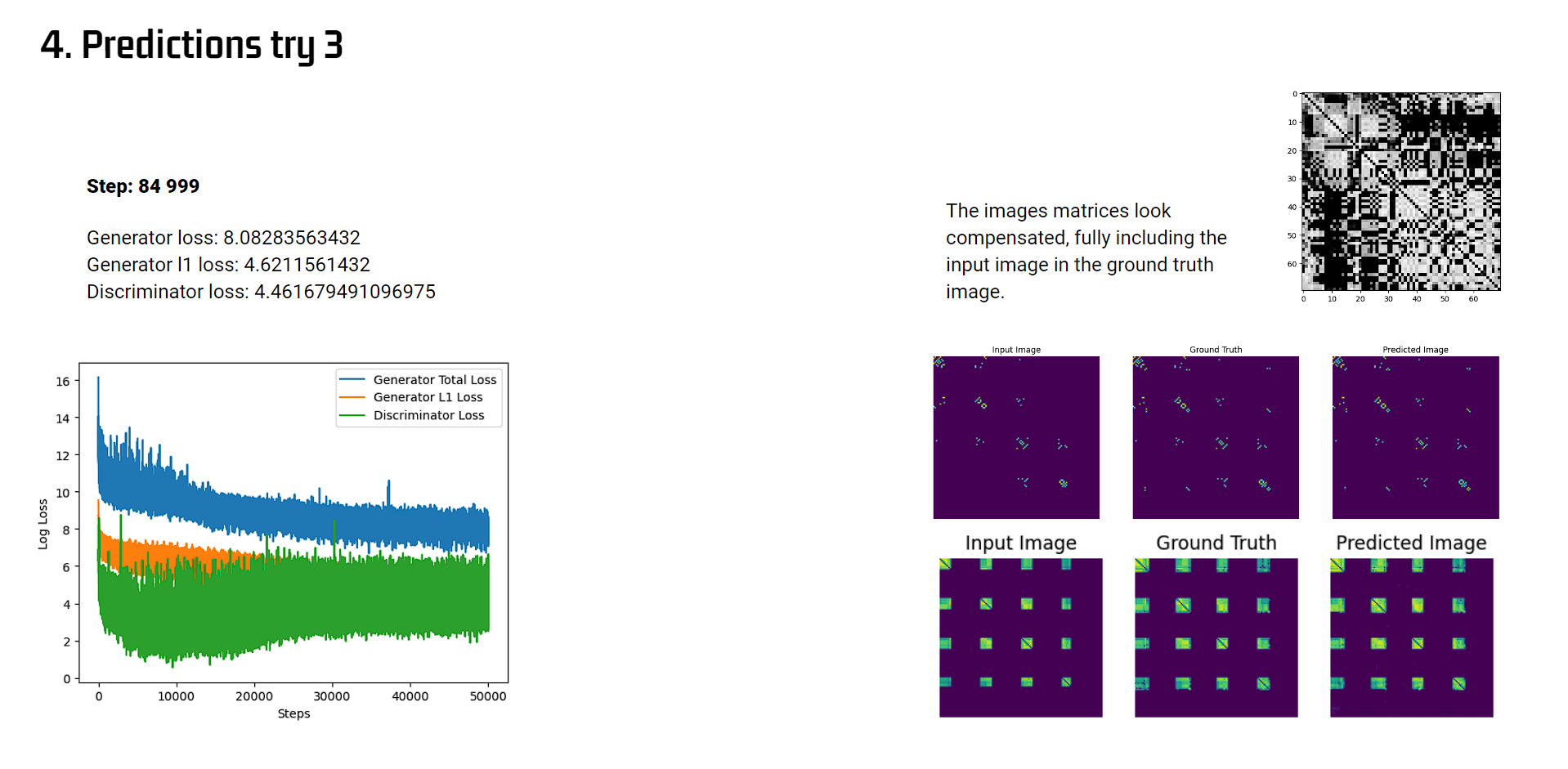

In the final training trial, a boolean representation of the city blocks was incorporated to reduce the number of vertices. The remaining buildings in each block were iterated upon (output geometries), and training was conducted for a total of 85,000 steps using complete valid matrix images.

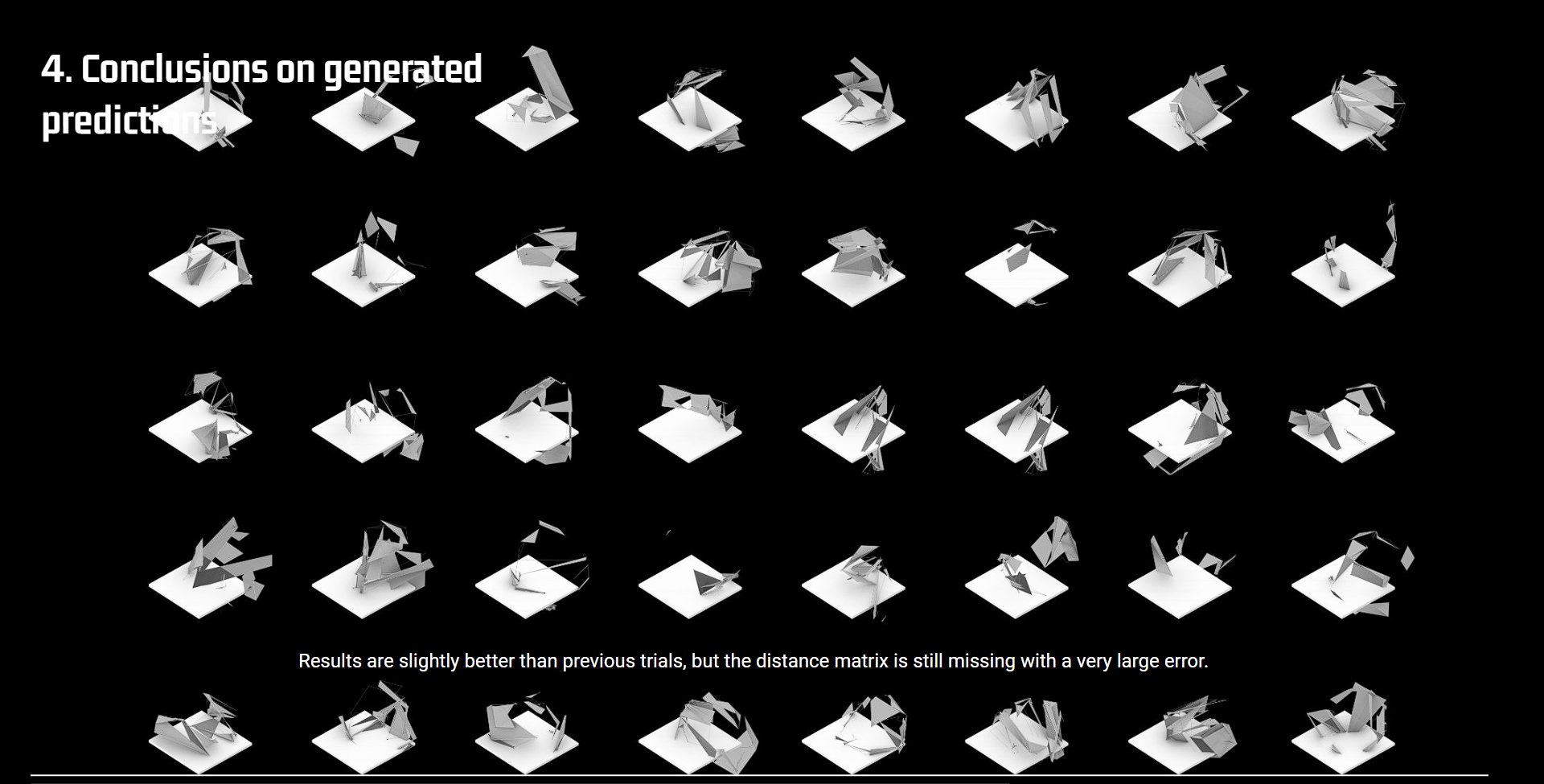

Although this dataset had more samples (900), approximately half of the reconstructions were invalid when the predicted distances were rebuilt into geometry in Kangaroo, as shown by the red samples. Better rules for filtering out these bad reconstructions, together with longer training, could have resulted in better distance prediction.

Conclusions

The resolution of the geometry proved to be a significant challenge for the datasets, as many outlier geometries needed to be cleaned from the downloaded city footprints.

Relying solely on real city geometry also limited further progress in fine-tuning the model, so creating a custom dataset would likely have yielded better results. A more balanced distribution between input and output geometries would have reduced the number of discarded samples and improved the block clustering of vertices, making them more compact and better suited to the 128×128 image subdivision of pixel information.