Aerial Reforestation Using Autonomous Drones

Context

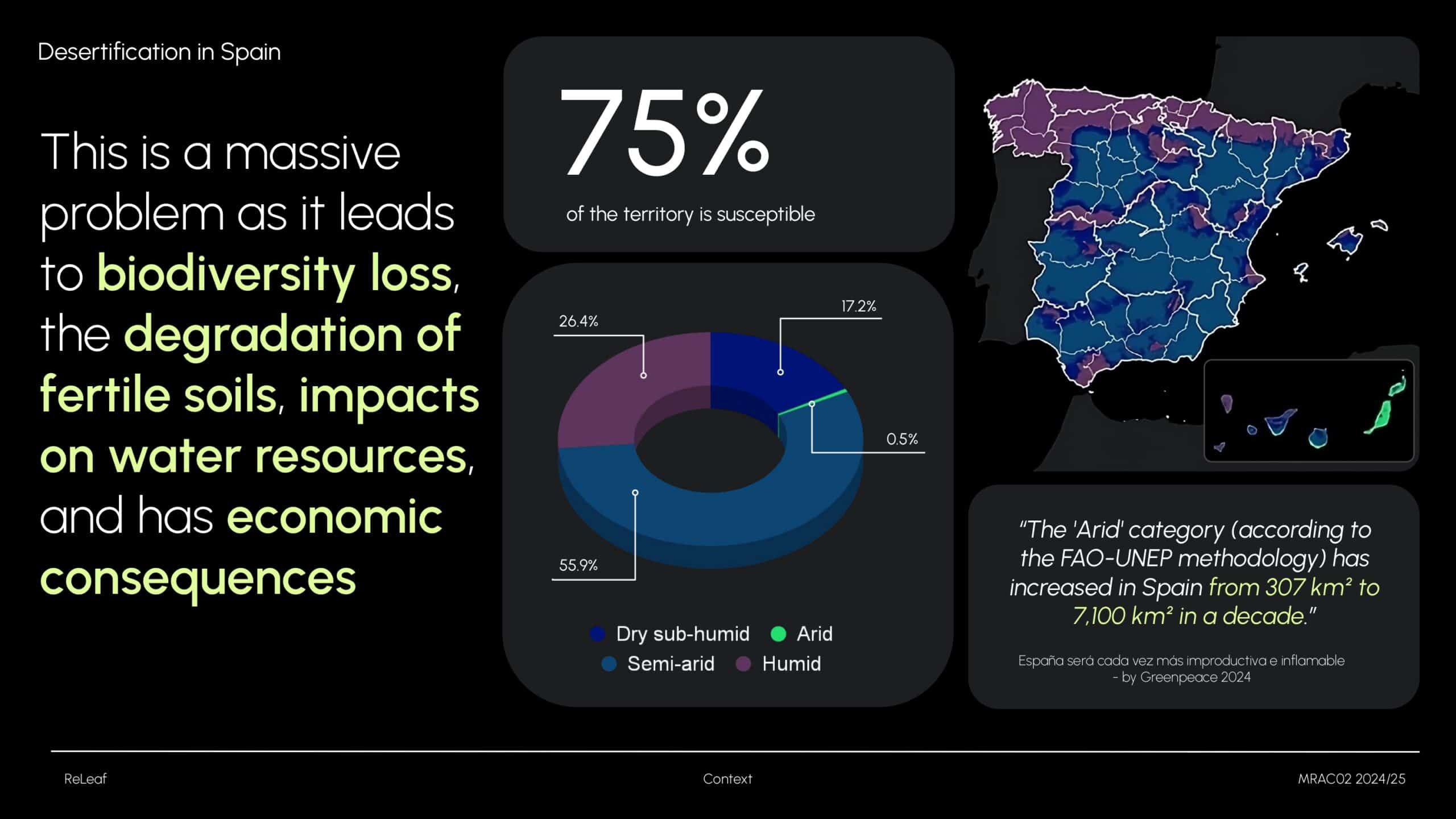

Desertification is a major environmental challenge in Spain, driven by both natural and human factors. Over 74% of the country’s land is at risk, especially in regions like Andalusia, Murcia, Valencia, and Castilla-La Mancha. Deforestation and wildfires intensify the problem: they leave the soil exposed and highly vulnerable to wind and water erosion.

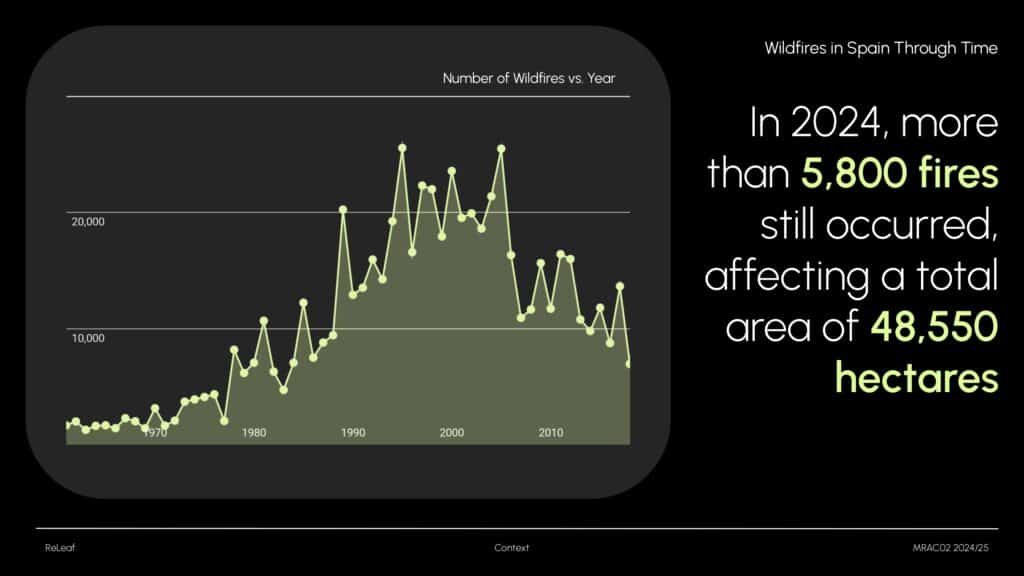

This graph shows the hectares of land burned in Spain from 1990 to 2023. While there was a peak between 1975 and 1995, both the number of fires and affected hectares have decreased since then. However, the problem persists: in 2024 alone, there were over 5,800 wildfires, impacting more than 48,550 hectares – an alarming figure.

These numbers highlight the urgent need for ongoing prevention and restoration. In response, multiple reforestation initiatives have been launched at the European level, supported by dedicated funding programs.

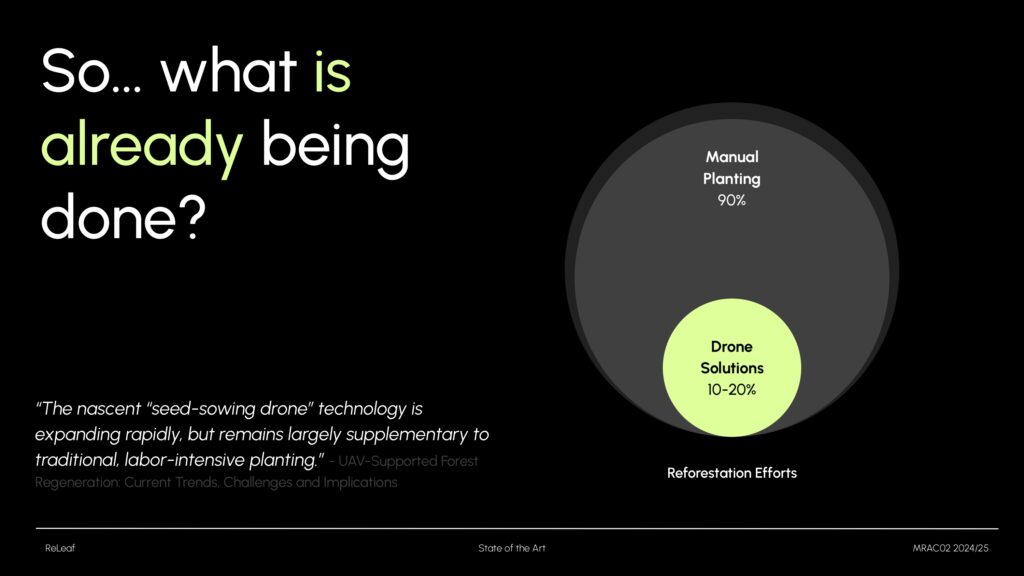

So, what actions are already being taken? The two most common techniques are hydroseeding and manual planting. Manual planting is used in nearly 90% of cases, but it has significant limitations. As a result, we’re now seeing an increase in hybrid solutions – where manual planting is complemented by drone technology. I’ll now show you two examples.

State of the Art

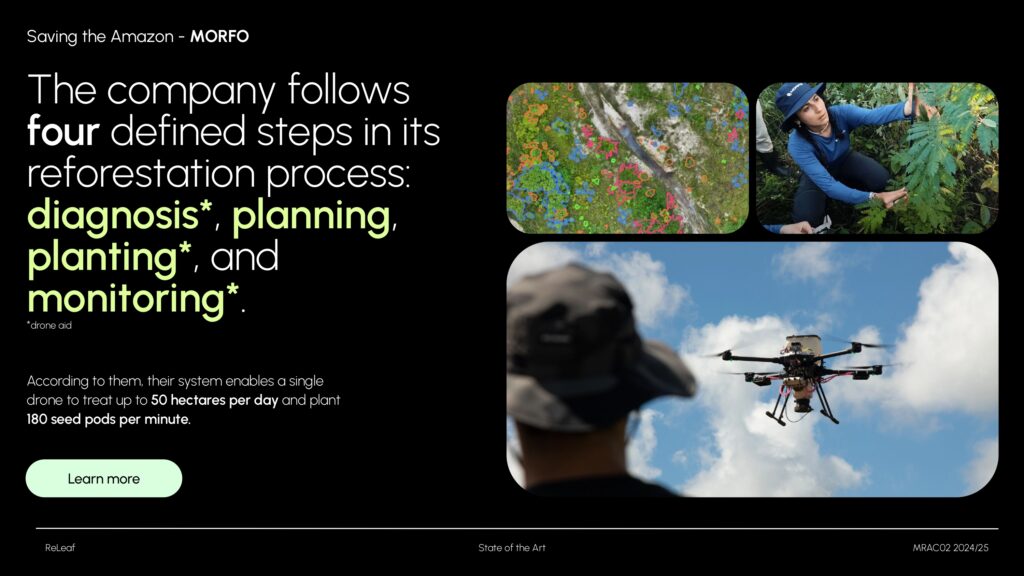

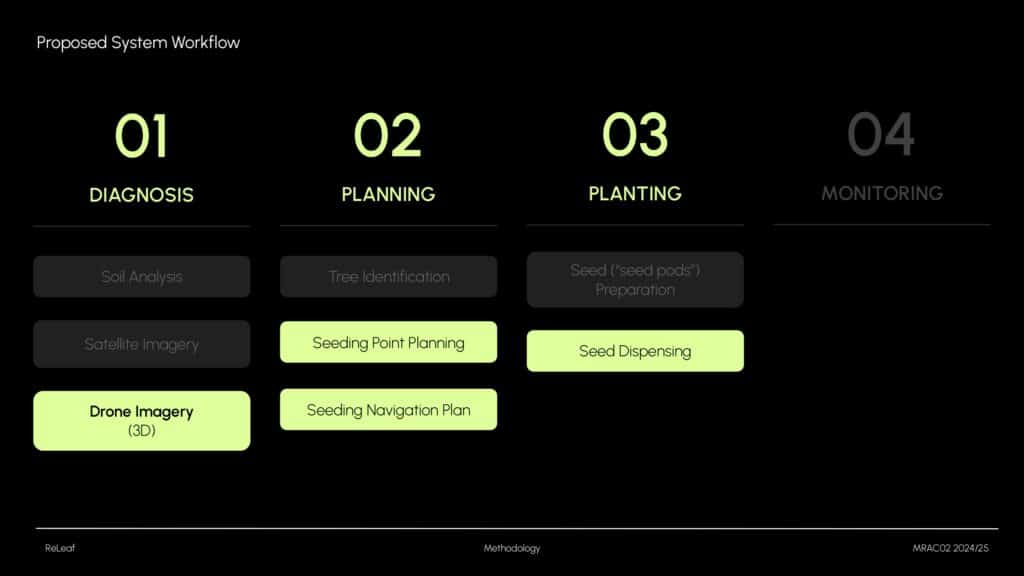

Morfo is a Brazilian company focused on reforesting the Amazon. Their reforestation process follows four steps: diagnosis, planning, planting, and monitoring. Drones are used in three of these stages – for terrain analysis, seed deployment, and growth monitoring.

According to Morfo, a single drone can treat up to 50 hectares per day and plant up to 180 seed pods per minute.

The second example is Dronecoria, a project previously developed in collaboration with IAAC. It emphasizes open-source, low-cost solutions that are DIY-friendly. Their system includes a custom-built seed dispenser that releases pods on command – this inspired the design of my own dispenser.

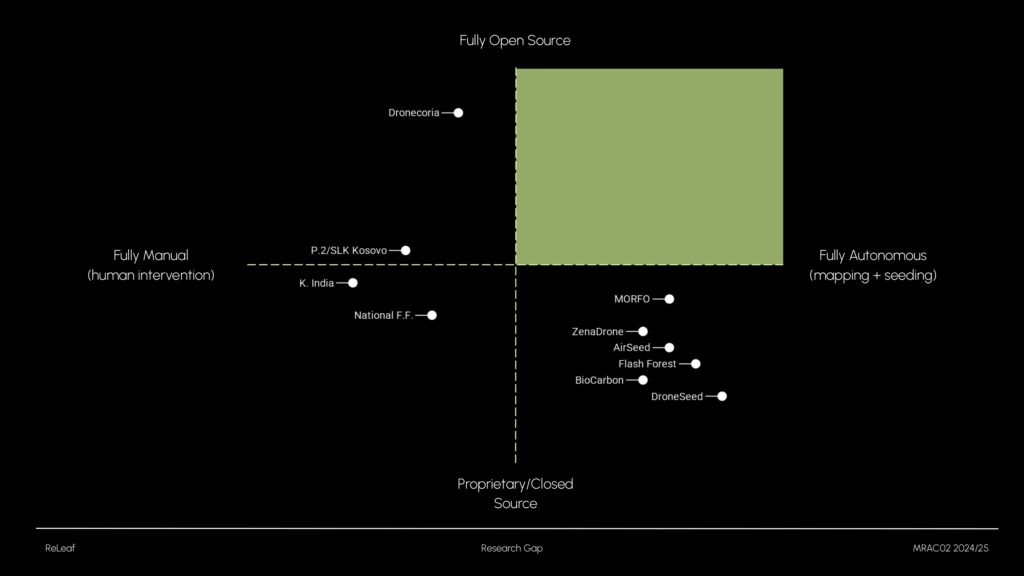

When analyzing these projects, I noticed a trend: autonomous drones tend to rely on proprietary systems, while open-source alternatives usually lack autonomy. That’s where my research comes in – developing an open-source, autonomous reforestation drone.

Project Aim

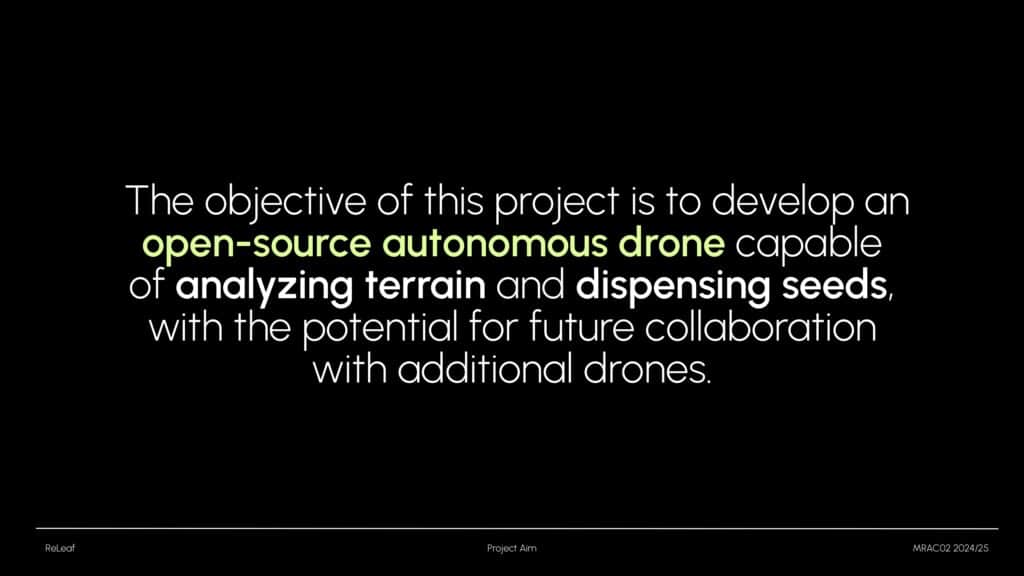

The goal of my project is to develop an open-source autonomous drone capable of scanning terrain and dispensing seeds. In the future, the system could scale up to work with multiple drones collaboratively – something critical for large-scale reforestation.

After analyzing Morfo’s four-step process, I realized building a full solution would be too ambitious for one project. So, I focused on:

- Drone-based data acquisition

- Seed point extraction and navigation

- Seed dispensing

These components cover most of the tasks typically performed by a reforestation drone.

Methodology

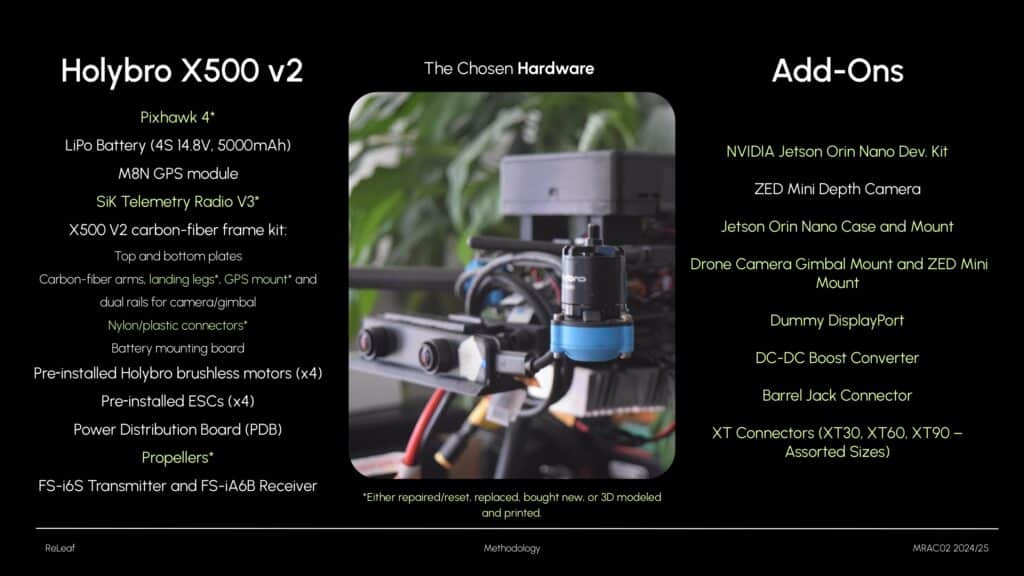

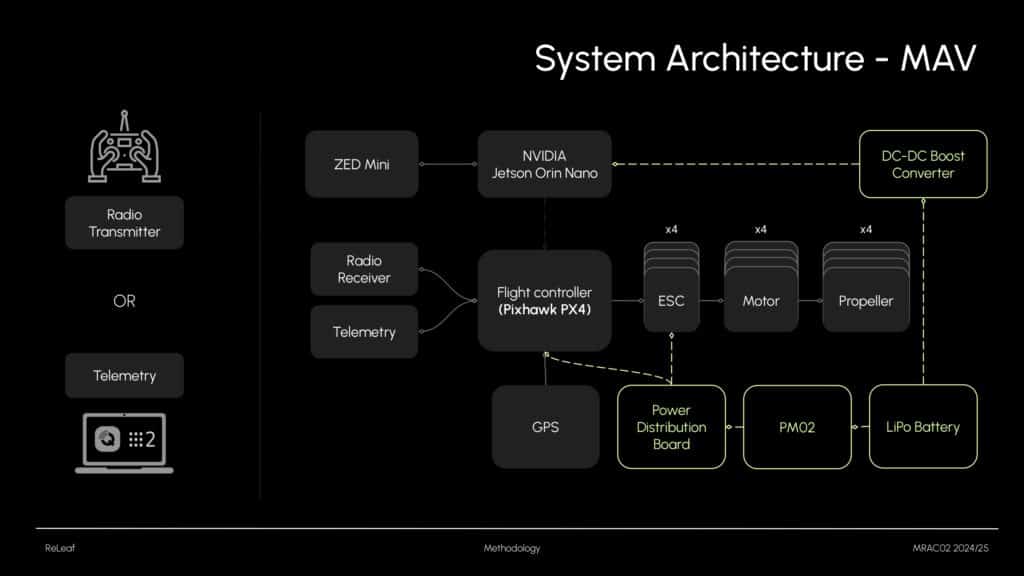

I used the Holybro X500 V2, equipped with a Pixhawk flight controller running PX4. It was available at IAAC and supports around 1 kg of payload. It’s an open-source drone with good documentation, which helped with assembly. However, repairs were more difficult than expected: I had to reset, reprint, and replace several parts, which took considerable time and energy.

The drone includes four motors and ESCs connected to the flight controller, plus a GPS module, radio receiver, telemetry module, and a LiPo battery. It can be flown with a remote controller or from ground station software such as QGroundControl, and it can also be commanded from ROS 2. Once everything was operational, I added a Jetson Orin Nano and a ZED Mini camera to enable SLAM.

Experimentation and Results

Once the hardware was working, I started testing. The experimental phase was divided into three parts:

- Data acquisition (terrain scanning)

- Data processing and simulation testing

- Seed dispensing

Data Acquisition

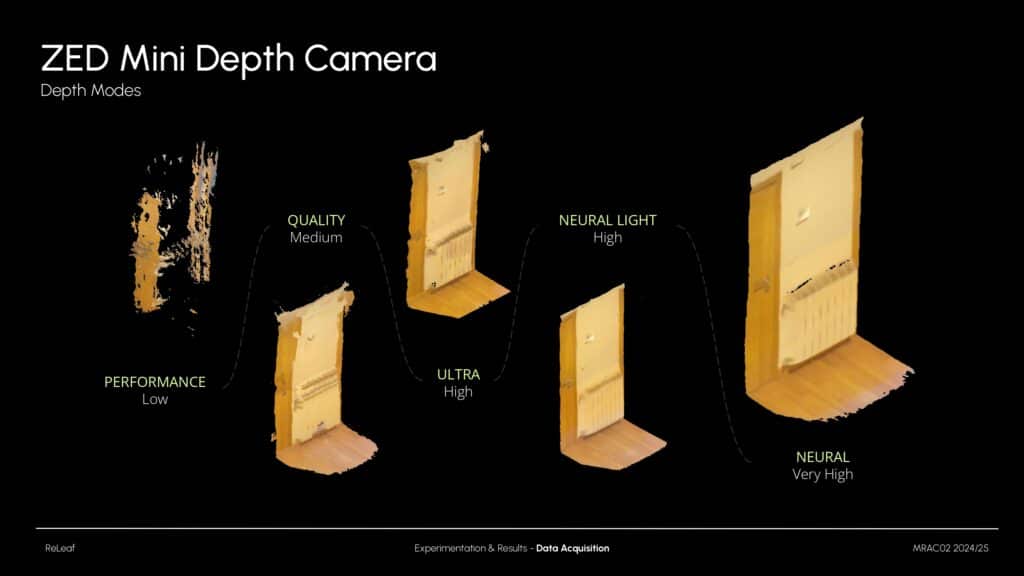

I optimized several ZED Mini parameters. One key setting was the depth mode. As shown in this slide, there’s a huge difference between ‘Performance’ mode and ‘Neural’ mode. Since my drone has limited onboard power, I used Neural Light, which strikes a good balance between speed and accuracy by using deep learning to enhance depth maps.

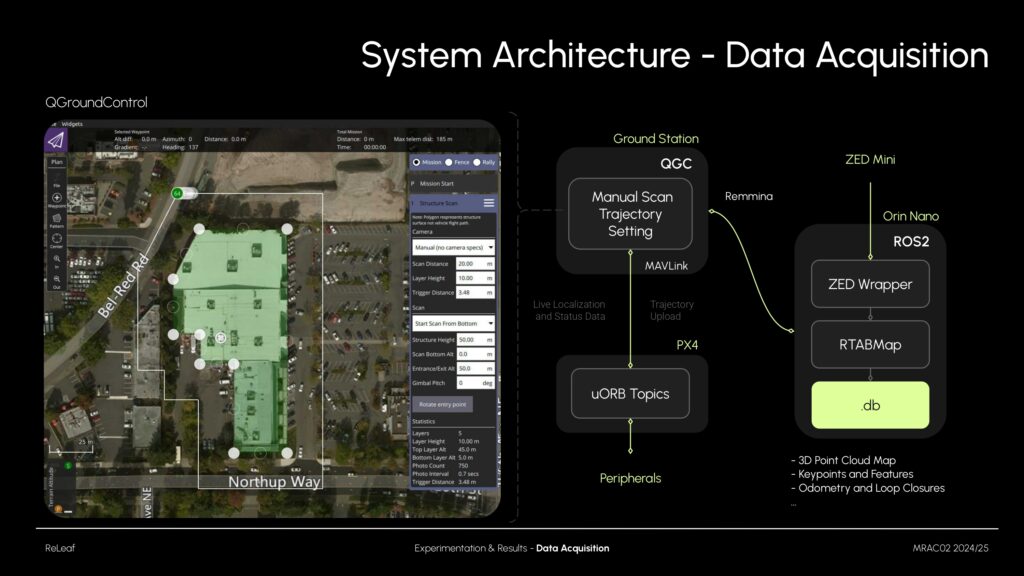

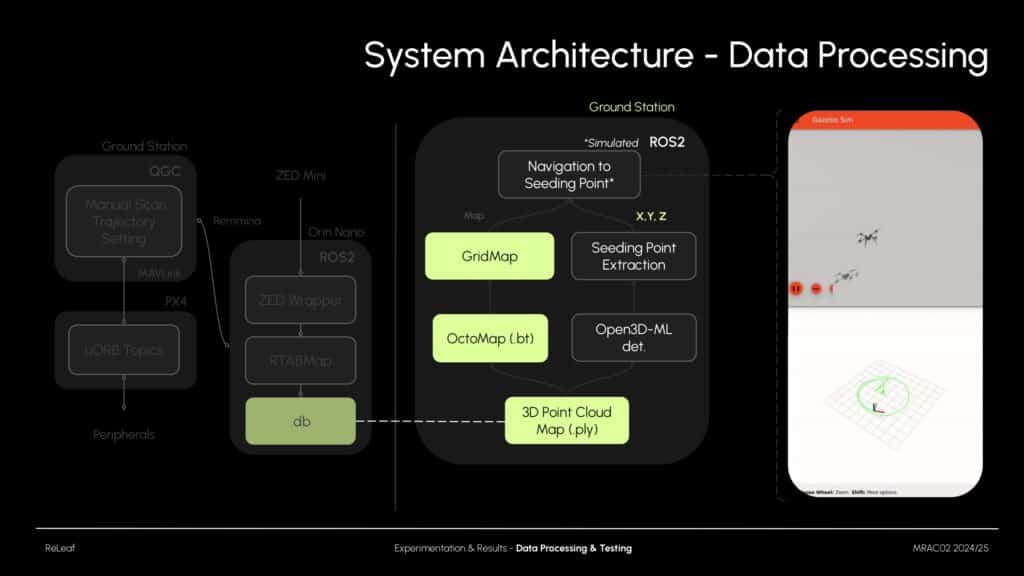

For SLAM, I used QGroundControl to control the drone via MAVLink. At the same time, I used remote desktop access to start the ZED ROS2 wrapper and RTAB-Map. This setup generated a detailed database, including point clouds, keypoints, odometry, and more.

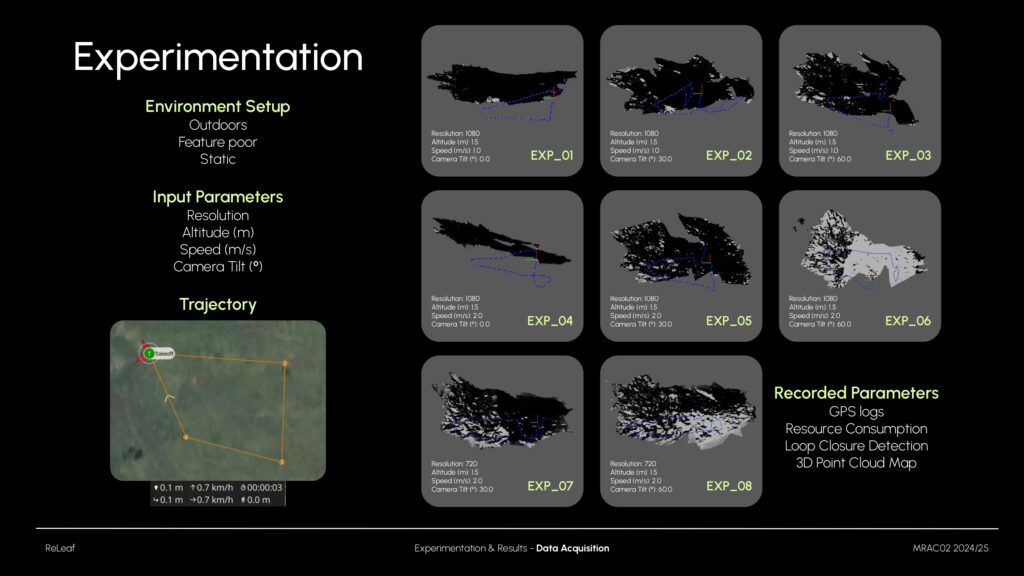

I tested how altitude, speed, resolution, and camera tilt affect mapping results. Resolution and speed had little impact (since I flew at low speeds). Camera tilt and altitude had stronger effects. At a 60° tilt, coverage improved but accuracy decreased. I also collected GPS logs, IMU odometry, loop closures, and resource usage. However, GPS accuracy varied, with deviations of 1–5 meters from planned paths.
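A deviation like this can be measured by comparing each logged GPS fix against the planned route. The snippet below is a minimal sketch under assumptions: the function names, the `(lat, lon)` tuple format, and the flat-earth (equirectangular) projection are illustrative choices and are not taken from the project repositories. The projection is only reasonable for short flights covering a few hundred meters.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, adequate for short distances


def to_local_xy(lat, lon, lat0, lon0):
    """Project (lat, lon) in degrees to meters east/north of (lat0, lon0)."""
    x = math.radians(lon - lon0) * EARTH_RADIUS_M * math.cos(math.radians(lat0))
    y = math.radians(lat - lat0) * EARTH_RADIUS_M
    return x, y


def point_segment_distance(p, a, b):
    """Shortest distance from point p to the segment a-b (all in meters)."""
    px, py = p
    ax, ay = a
    bx, by = b
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        return math.hypot(px - ax, py - ay)
    # Clamp the projection so the closest point stays on the segment.
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / seg_len_sq))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def path_deviations(gps_log, planned_path):
    """Distance in meters from each GPS fix to the nearest planned segment.

    gps_log and planned_path are lists of (lat, lon) in degrees;
    planned_path needs at least two waypoints.
    """
    lat0, lon0 = planned_path[0]
    path_xy = [to_local_xy(lat, lon, lat0, lon0) for lat, lon in planned_path]
    segments = list(zip(path_xy, path_xy[1:]))
    deviations = []
    for lat, lon in gps_log:
        p = to_local_xy(lat, lon, lat0, lon0)
        deviations.append(min(point_segment_distance(p, a, b) for a, b in segments))
    return deviations
```

Calling `max(path_deviations(log, route))` gives the worst-case offset for a flight, which is the kind of figure quoted above.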

These are the final results. The generated point cloud clearly captures terrain slope and features.

Post Processing and Testing

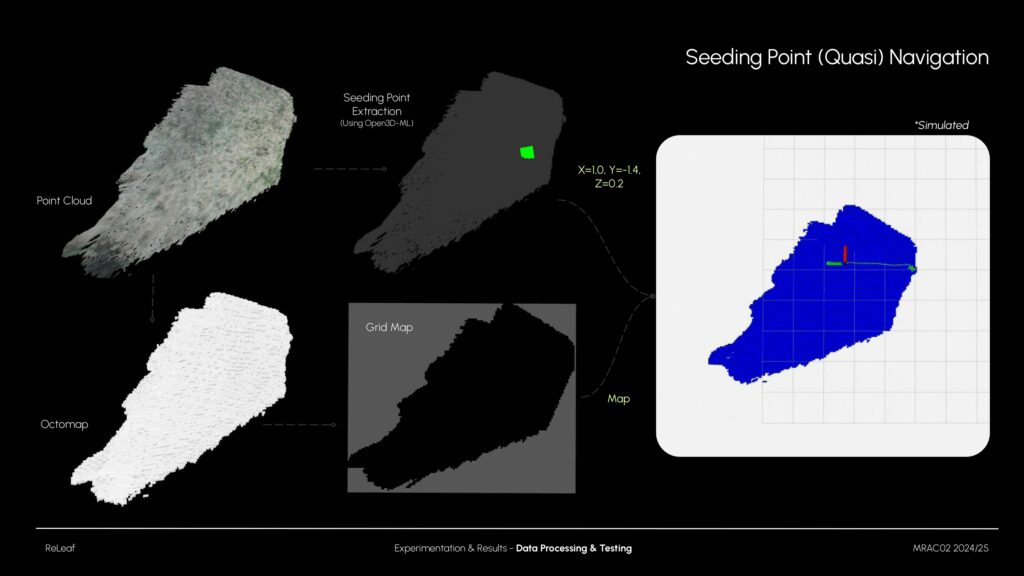

Once point clouds were generated, I worked on two post-processing tasks: extracting suitable seeding points and generating a grid map for navigation. To test this pipeline efficiently, I used a Holybro simulation environment.
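To illustrate the grid map step, here is a minimal sketch of turning a point cloud into a 2D occupancy grid. It is an assumption about how such a map could be built, not the method used in the repositories: a cell is marked occupied when the height spread of its points exceeds `max_step`. The function name, `cell_size`, and `max_step` are hypothetical.

```python
import math


def build_grid_map(points, cell_size=0.5, max_step=0.3):
    """Rasterize (x, y, z) points in meters into a 2D grid.

    Returns (grid, origin). grid[row][col] is 0 for free, 1 for occupied,
    and -1 for cells with no data. origin is the (x, y) of cell (0, 0).
    """
    points = list(points)
    min_x = min(p[0] for p in points)
    min_y = min(p[1] for p in points)
    max_x = max(p[0] for p in points)
    max_y = max(p[1] for p in points)
    cols = int((max_x - min_x) // cell_size) + 1
    rows = int((max_y - min_y) // cell_size) + 1

    # Track the lowest and highest point seen in every cell.
    z_min = [[math.inf] * cols for _ in range(rows)]
    z_max = [[-math.inf] * cols for _ in range(rows)]
    for x, y, z in points:
        c = int((x - min_x) // cell_size)
        r = int((y - min_y) // cell_size)
        z_min[r][c] = min(z_min[r][c], z)
        z_max[r][c] = max(z_max[r][c], z)

    grid = [[-1] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if z_min[r][c] == math.inf:
                continue  # no points fell in this cell
            # Assumed criterion: a large height spread means an obstacle.
            grid[r][c] = 1 if z_max[r][c] - z_min[r][c] > max_step else 0
    return grid, (min_x, min_y)
```

A smaller `cell_size` preserves more detail but leaves more empty cells when the cloud is sparse, so the value would need tuning against the actual scan density.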

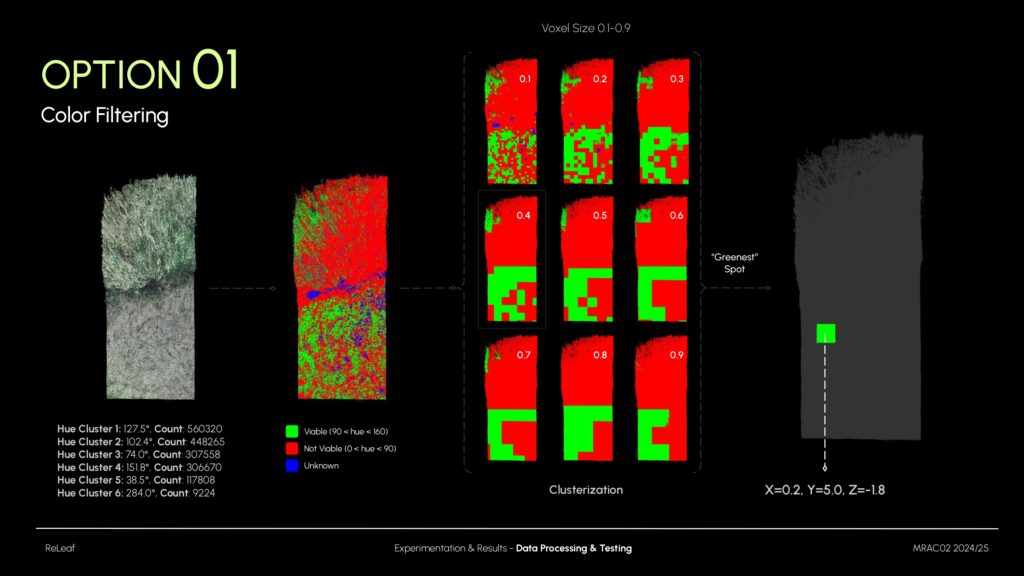

I used color filtering to eliminate tall grass regions, where seeds wouldn’t reach the soil. By adjusting hue values, I filtered out the tall grass (red) and retained the shorter vegetation (green). Then, I clustered the remaining sections and selected the “greenest” zone, calculating its (x, y, z) coordinates for drone navigation.
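The hue filter, clustering, and zone selection described above can be sketched in a few lines. This is an illustration under assumptions rather than the project code: it assumes each point carries an RGB color in the 0 to 1 range, uses a simple grid-based connected-components clustering, and scores "greenness" with the excess-green index `2g - r - b`. The hue band, saturation threshold, and all function names are hypothetical.

```python
import colorsys
import math
from collections import deque


def is_green(rgb, hue_range=(0.20, 0.45), min_saturation=0.25):
    """True if the color's hue falls in an assumed 'short vegetation' band."""
    h, s, _ = colorsys.rgb_to_hsv(*rgb)
    return hue_range[0] <= h <= hue_range[1] and s >= min_saturation


def cluster_by_cells(points, cell=0.5):
    """Group point indices whose XY grid cells touch (8-connectivity)."""
    cells = {}
    for i, (x, y, _) in enumerate(points):
        cells.setdefault((math.floor(x / cell), math.floor(y / cell)), []).append(i)
    seen, clusters = set(), []
    for start in cells:
        if start in seen:
            continue
        seen.add(start)
        queue, members = deque([start]), []
        while queue:
            cx, cy = queue.popleft()
            members.extend(cells[(cx, cy)])
            for dx in (-1, 0, 1):
                for dy in (-1, 0, 1):
                    n = (cx + dx, cy + dy)
                    if n in cells and n not in seen:
                        seen.add(n)
                        queue.append(n)
        clusters.append(members)
    return clusters


def select_seed_point(points, colors, cell=0.5):
    """Return the (x, y, z) centroid of the greenest cluster, or None."""
    kept = [i for i, c in enumerate(colors) if is_green(c)]
    if not kept:
        return None
    kept_pts = [points[i] for i in kept]

    def greenness(cluster):
        # Mean excess-green index (2g - r - b) over the cluster's points.
        total = sum(2 * colors[kept[j]][1] - colors[kept[j]][0] - colors[kept[j]][2]
                    for j in cluster)
        return total / len(cluster)

    best = max(cluster_by_cells(kept_pts, cell), key=greenness)
    n = len(best)
    return tuple(sum(kept_pts[j][k] for j in best) / n for k in range(3))
```

The returned centroid is in the point cloud's frame, so it would still need to be transformed into whatever frame the navigation stack expects.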

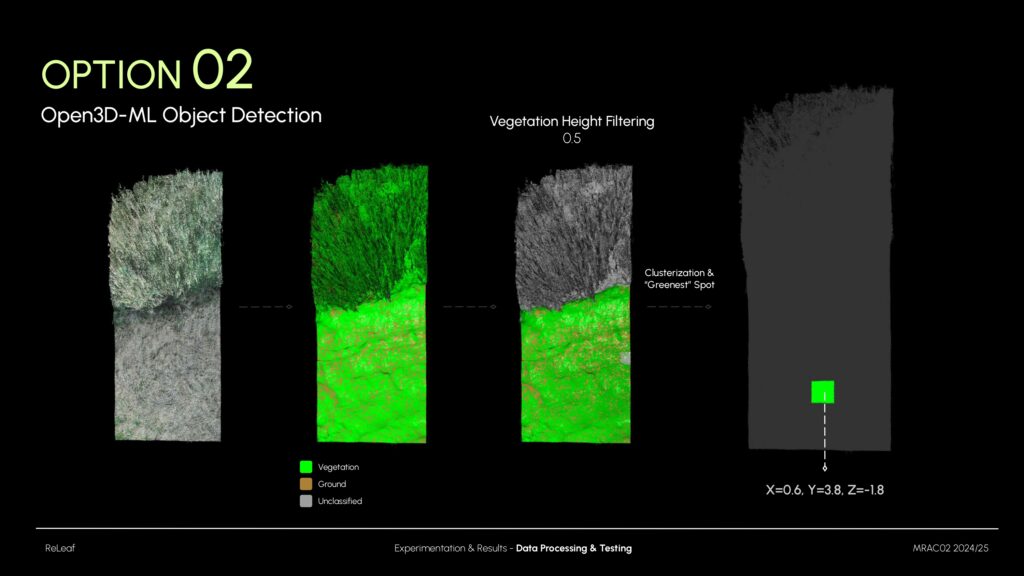

The second approach used Open3D-ML, an extension of Open3D for 3D machine learning tasks such as semantic segmentation and object detection, which can identify terrain elements like vegetation. It allowed me to define a maximum grass height, filtering out anything taller. As with the previous method, clustering was applied to find optimal seeding zones and determine navigation coordinates.
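The height threshold itself is simple once every point has a class label. The sketch below does not call Open3D-ML; it assumes the labels (`"ground"` and `"vegetation"`, both hypothetical names) have already been predicted by some model, and it keeps only the grid cells where vegetation stays below `max_grass_height`.

```python
import math


def suitable_cells(points, labels, max_grass_height=0.3, cell=1.0):
    """Return sorted (x, y, ground_z) cell centers where grass is short enough.

    points: list of (x, y, z) in meters; labels: one class string per point.
    The label names and the per-cell approach are illustrative assumptions.
    """
    ground = {}   # lowest ground point per cell
    veg_top = {}  # highest vegetation point per cell
    for (x, y, z), label in zip(points, labels):
        key = (math.floor(x / cell), math.floor(y / cell))
        if label == "ground":
            ground[key] = min(ground.get(key, math.inf), z)
        elif label == "vegetation":
            veg_top[key] = max(veg_top.get(key, -math.inf), z)

    result = []
    for key, ground_z in ground.items():
        # Cells with no vegetation at all count as height zero.
        top = veg_top.get(key, ground_z)
        if top - ground_z <= max_grass_height:
            center_x = (key[0] + 0.5) * cell
            center_y = (key[1] + 0.5) * cell
            result.append((center_x, center_y, ground_z))
    return sorted(result)
```

Cells without any ground-labeled point are skipped, since there is no reference height to measure the vegetation against.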

Here’s a quick summary of the full process: from raw point clouds to seeding point selection, grid map creation, and navigation testing in simulation.

Seed Dispensing

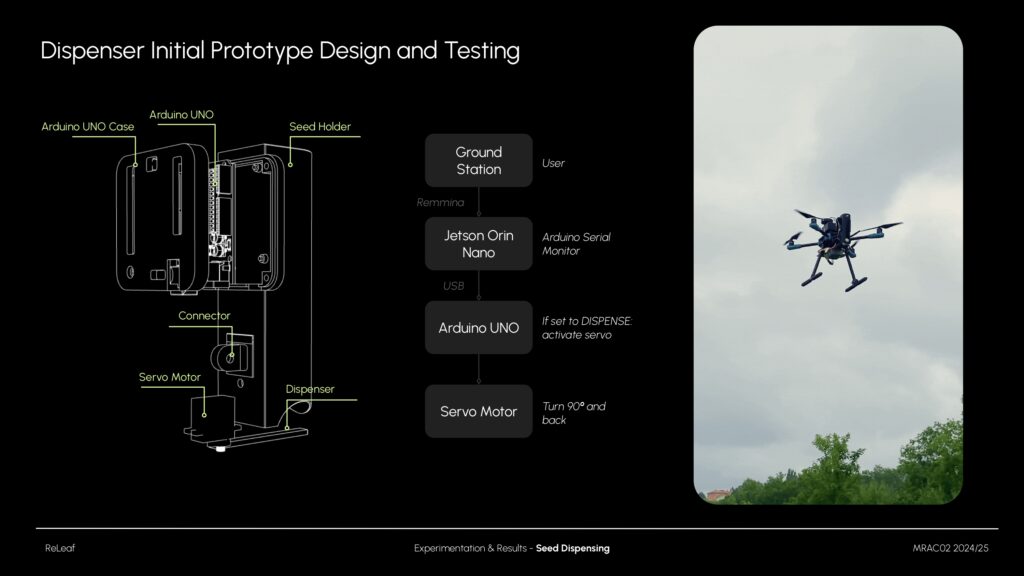

And finally, here’s the prototype seed dispenser. It holds up to five ping-pong-sized seed pods and deploys them on command. It’s a basic Arduino setup with a servo motor, currently operated through serial commands for testing.
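On the computer side, driving a dispenser like this over serial could look like the sketch below. The command bytes (`b"D\n"`), the class name, and the pod bookkeeping are hypothetical, since the actual Arduino protocol is not described here. The class accepts any object with a `write()` method, so it can be exercised without hardware.

```python
import io


class SeedDispenser:
    """Host-side helper that sends one drop command per seed pod."""

    CAPACITY = 5  # the prototype holds up to five pods

    def __init__(self, port, drop_command=b"D\n"):
        self.port = port                  # any object with a write(bytes) method
        self.drop_command = drop_command  # hypothetical command, not the real protocol
        self.remaining = self.CAPACITY

    def drop(self):
        """Release one pod. Returns False when the magazine is empty."""
        if self.remaining == 0:
            return False
        self.port.write(self.drop_command)
        self.remaining -= 1
        return True


# Dry run against an in-memory buffer instead of real hardware.
fake_port = io.BytesIO()
dispenser = SeedDispenser(fake_port)
results = [dispenser.drop() for _ in range(6)]
```

With real hardware, the buffer would be replaced by a pyserial port, for example `serial.Serial("/dev/ttyACM0", 9600)`, where the device path and baud rate are assumptions that depend on the setup.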

Conclusion

This is the current system setup. It’s not fully autonomous yet, but the core components – scanning, seeding logic, and deployment – are in place.

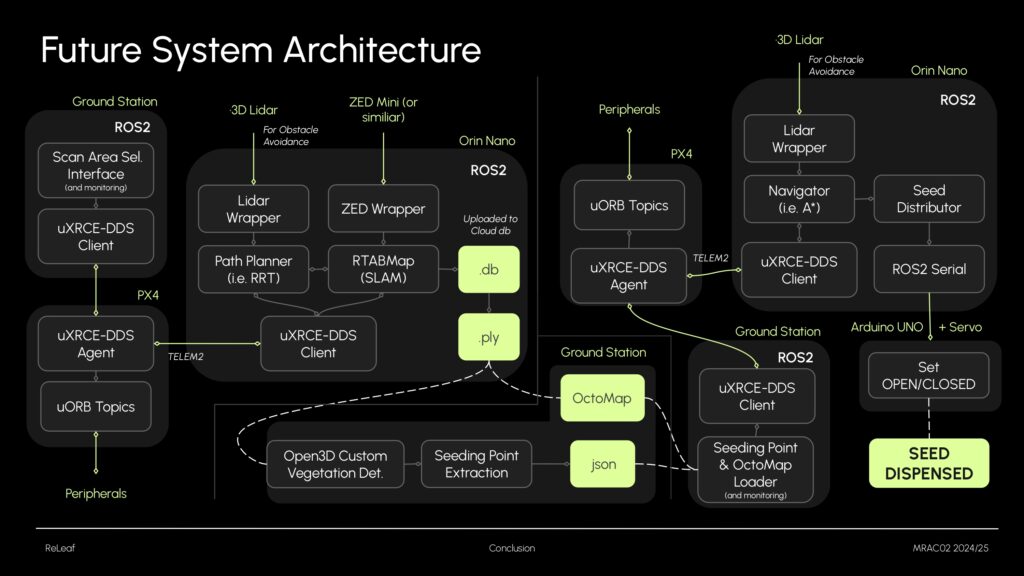

Here’s my vision for a future version:

- Hardware upgrades: a better GPS, an upgraded seed dispenser, and a LiDAR or similar sensor (for obstacle avoidance, but potentially replacing the ZED).

- Replace QGC/MAVROS with a native DDS/ROS 2-based control system for full compatibility

- Use an autonomous SLAM path planner (e.g., RRT) instead of predefined routes, with real-time obstacle avoidance.

- Train a custom 3D detection model (with the possibility of detecting tree species).

- Implement a full navigation algorithm such as A*, with real-time obstacle avoidance.

- Entire pipeline testing and validation.

GitHub Links

ZED RTAB-Map SLAM (with seed point selection tools): https://github.com/ainhoaarnaiz/zed_rtabmap_ros2

Holybro Simulation (with map creation): https://github.com/ainhoaarnaiz/holybro_drone_simulation_ros2