A Real-Time Audio-Visual Stone Symphony

Rock the Rock is an interactive audio-visual installation that identifies and tracks rocks in real time, generating dynamic sound and projection overlays. By leveraging computer vision and finite state machines, it transforms geological forms into a sensory experience.

Concept and Context

This project served as our introduction to Finite State Machines (FSMs) in the context of a camera-projection feedback setup. We explored this hardware scenario through an immersive installation that uses YOLO-based computer vision to track rocks and trigger visual and audio responses.

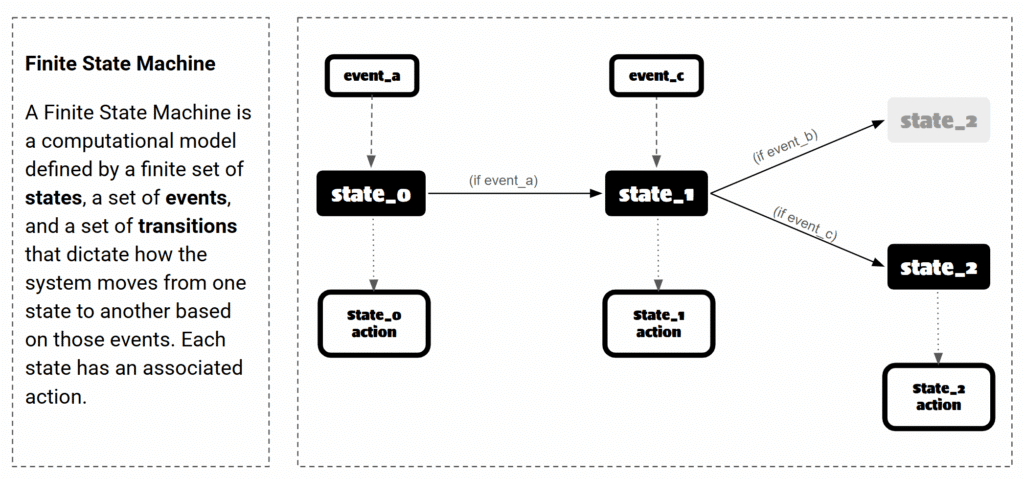

What Is a Finite State Machine?

A Finite State Machine is a computational model consisting of:

- A finite number of states

- Events that trigger transitions

- Actions associated with each state

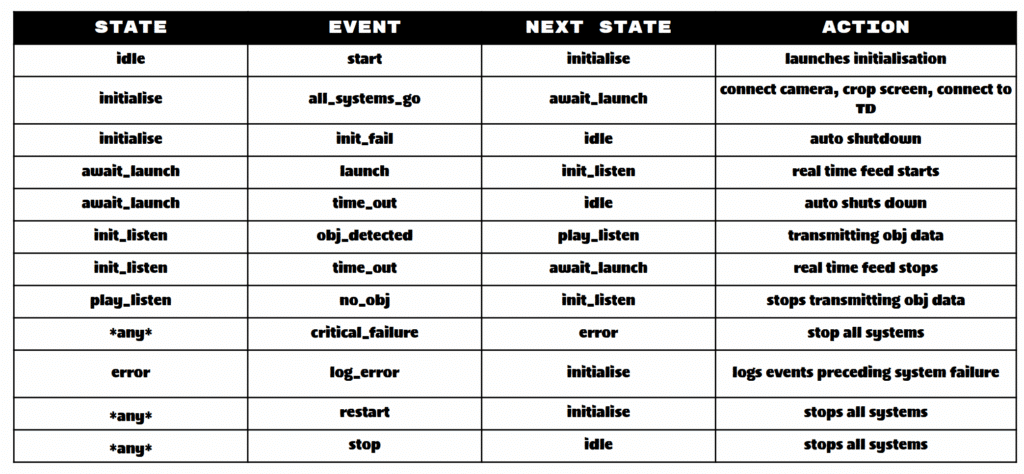

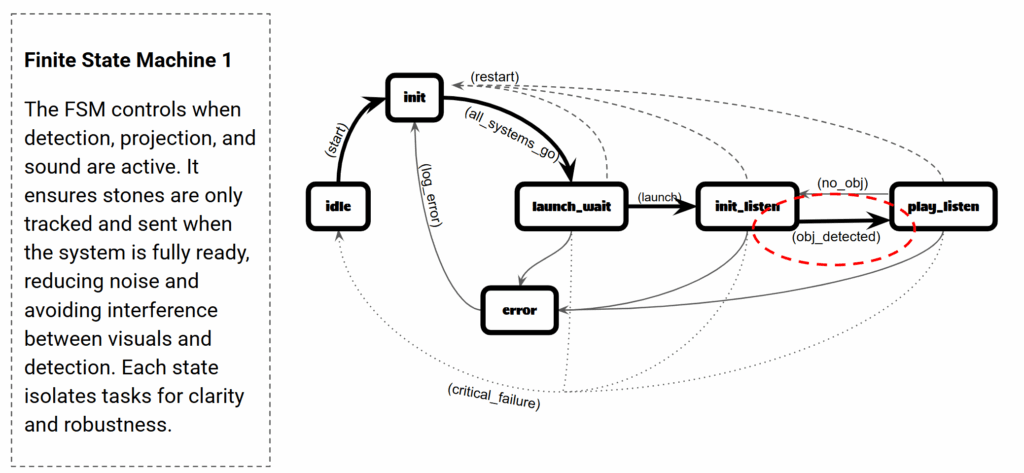

An FSM defines how a system transitions between states in response to inputs. In our case, the FSM coordinated the detection, audio, and projection systems so that they interacted smoothly and stayed in sync.
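To make the three ingredients listed above concrete (states, events that trigger transitions, and per-state actions), here is a minimal table-driven FSM sketch. The state and event names (`IDLE`, `DETECTING`, `RESPONDING`, `ROCK_FOUND`, and so on) are hypothetical illustrations, not the project's actual states.

```python
from enum import Enum, auto
from typing import Callable, Dict, Tuple


class State(Enum):
    IDLE = auto()
    DETECTING = auto()
    RESPONDING = auto()


class Event(Enum):
    START = auto()
    ROCK_FOUND = auto()
    ROCK_LOST = auto()
    STOP = auto()


# Transition table: (current state, event) -> next state.
# Any pair not listed here is ignored and leaves the state unchanged.
TRANSITIONS: Dict[Tuple[State, Event], State] = {
    (State.IDLE, Event.START): State.DETECTING,
    (State.DETECTING, Event.ROCK_FOUND): State.RESPONDING,
    (State.RESPONDING, Event.ROCK_LOST): State.DETECTING,
    (State.DETECTING, Event.STOP): State.IDLE,
    (State.RESPONDING, Event.STOP): State.IDLE,
}


class FSM:
    def __init__(self, actions: Dict[State, Callable[[], None]]) -> None:
        self.state = State.IDLE
        self.actions = actions  # entry action to run whenever a state is entered

    def handle(self, event: Event) -> State:
        """Apply one event: look up the transition, switch state, run its entry action."""
        next_state = TRANSITIONS.get((self.state, event))
        if next_state is not None:
            self.state = next_state
            action = self.actions.get(next_state)
            if action is not None:
                action()
        return self.state
```

Keeping the transitions in a single table makes every legal move visible in one place, which is the main practical benefit of structuring a system as an FSM.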

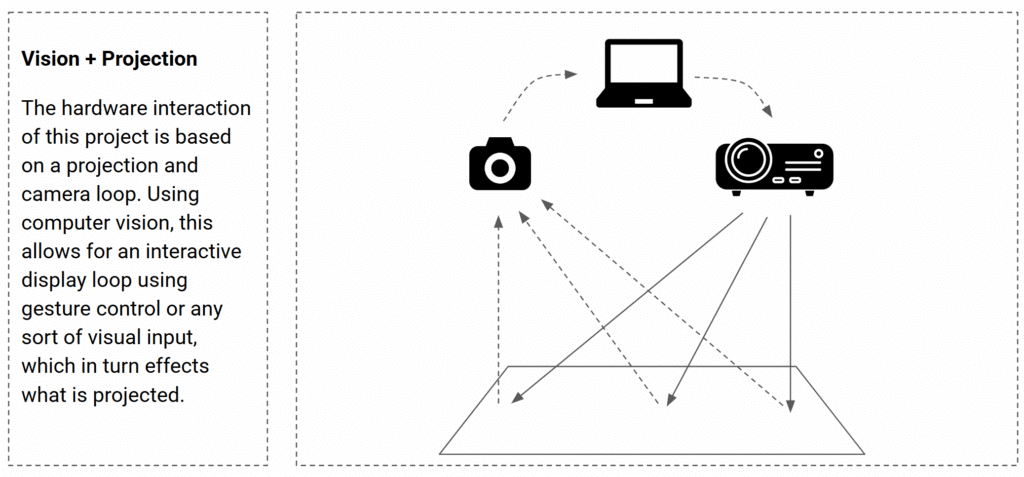

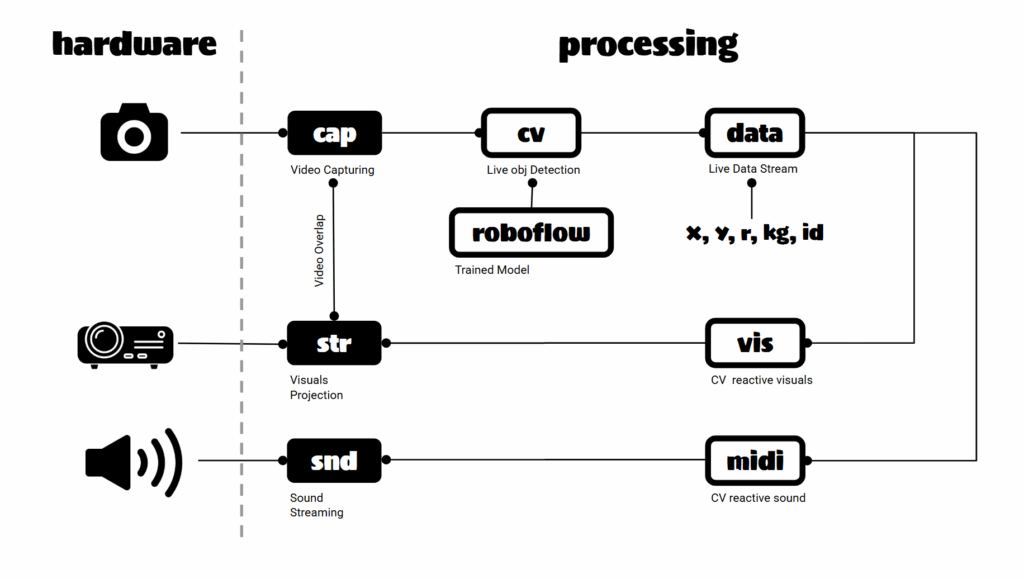

Vision and Projection Setup

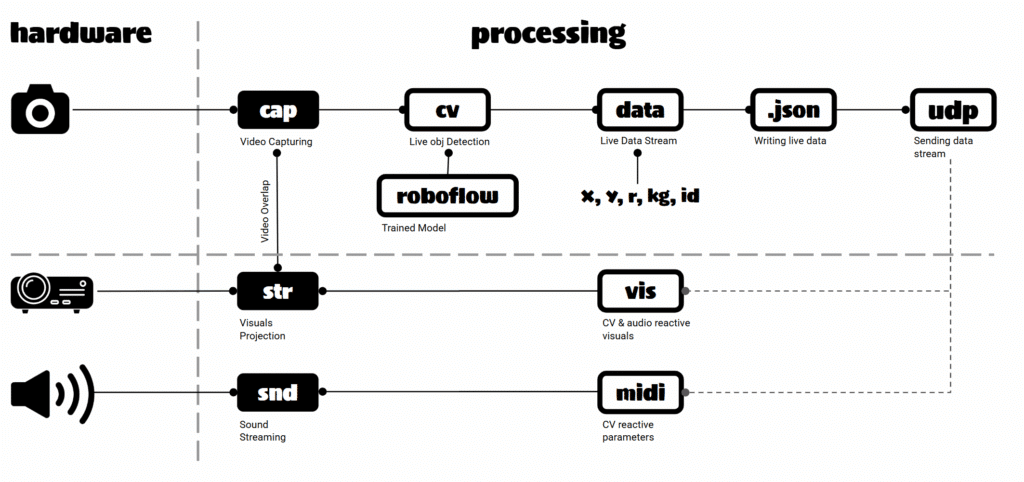

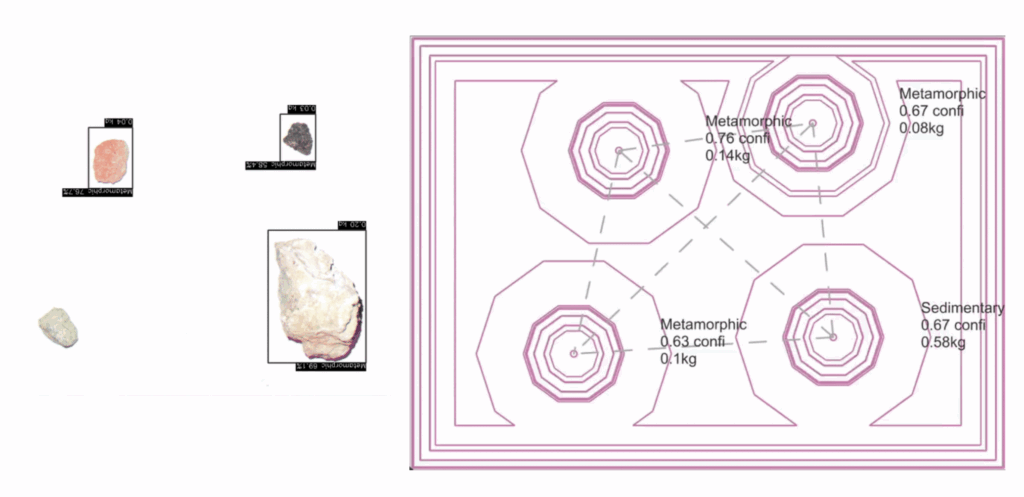

At the heart of the system is a feedback loop between camera-based detection and projector-based output. The camera captures the scene, the detection software locates the rocks, and this data is sent to a projection engine. The visual and auditory output is then mapped to the physical location of each rock, enabling a responsive and tactile interaction.
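The write-up does not say how camera pixels were aligned with projector pixels. One common approach for a flat surface is a planar homography fitted from four reference points seen by the camera and their known projector positions. The sketch below assumes that approach and solves the 8-unknown linear system with NumPy; OpenCV's `cv2.getPerspectiveTransform` computes the same matrix. The function names are hypothetical.

```python
import numpy as np


def fit_homography(src_pts, dst_pts):
    """Solve the 3x3 homography H (with H[2, 2] = 1) from exactly four point pairs.

    src_pts are camera pixels, dst_pts the matching projector pixels;
    no three points in either set may be collinear.
    """
    rows, rhs = [], []
    for (x, y), (u, v) in zip(src_pts, dst_pts):
        # u = (h11 x + h12 y + h13) / (h31 x + h32 y + 1), rearranged to be linear in h
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        rhs.append(u)
        # v = (h21 x + h22 y + h23) / (h31 x + h32 y + 1), rearranged likewise
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        rhs.append(v)
    h = np.linalg.solve(np.array(rows, dtype=float), np.array(rhs, dtype=float))
    return np.append(h, 1.0).reshape(3, 3)


def camera_to_projector(H, x, y):
    """Map one camera pixel into projector space, dividing out the homogeneous coordinate."""
    px, py, w = H @ np.array([x, y, 1.0])
    return px / w, py / w
```

Once `H` is fitted during a calibration step, each detected rock's centre can be passed through `camera_to_projector` so the overlay lands on the rock itself.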

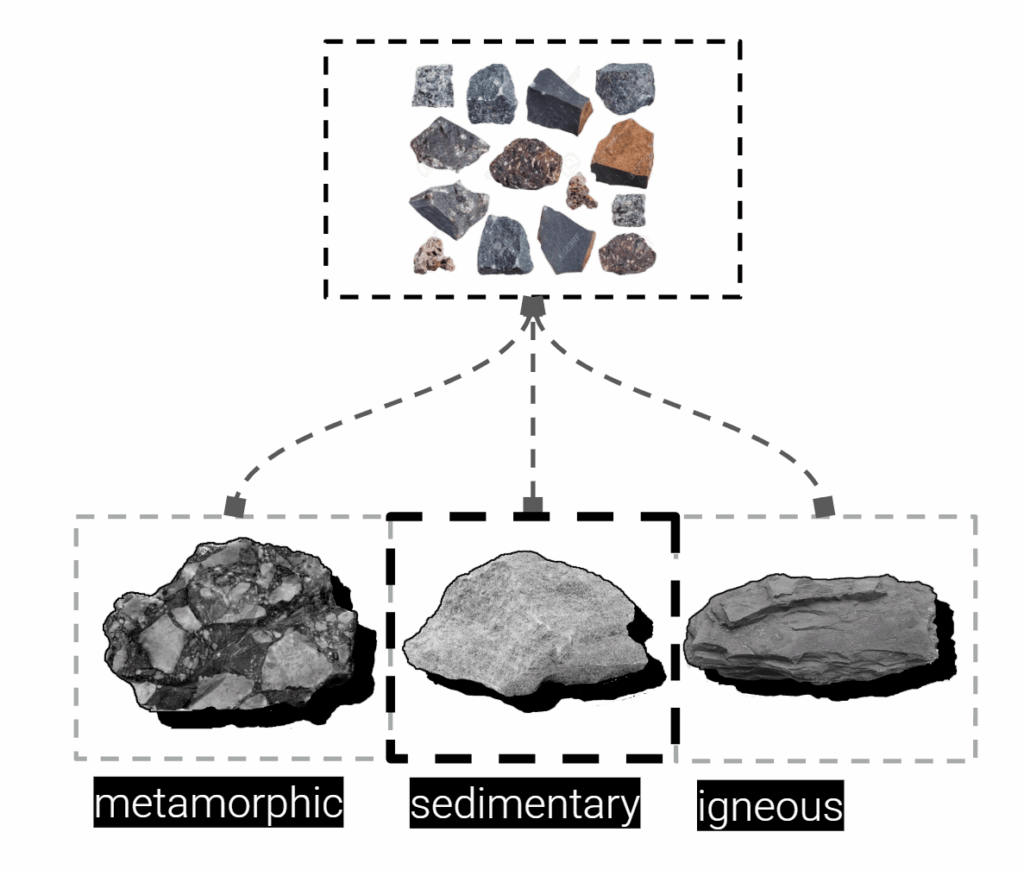

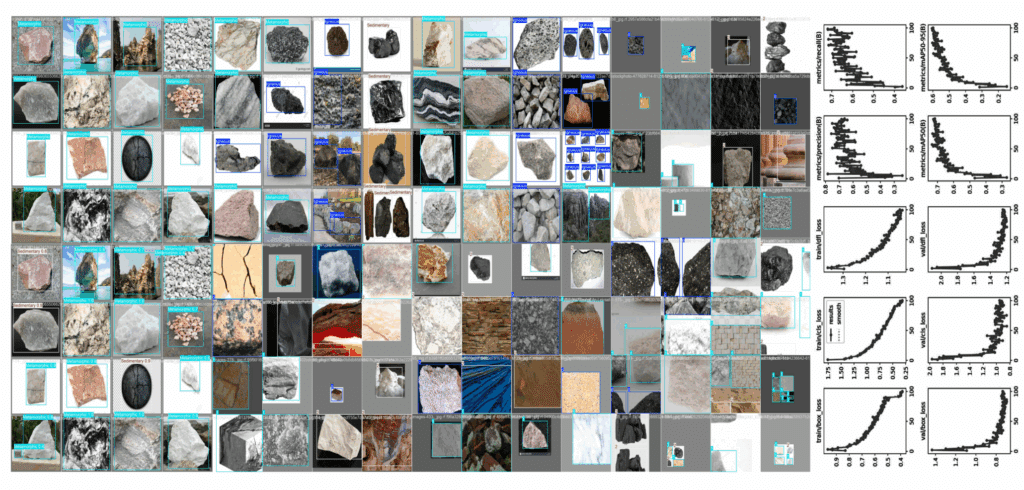

Rock Classification and Training

Using a pre-existing dataset on Roboflow, we trained a YOLO model to detect rocks and classify them into three categories:

- Igneous

- Metamorphic

- Sedimentary

These classifications served as core input types for the FSM, allowing for distinct behavior based on geological identity.
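One way to turn the three classes into distinct behavior is a lookup from class label to an output preset, with low-confidence or unknown detections filtered out before they reach the FSM. This is a sketch under assumptions: the label strings, the `Preset` fields, the sound names, the colours, and the 0.5 confidence threshold are all hypothetical, since the write-up does not specify them.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass(frozen=True)
class Preset:
    sound: str                    # hypothetical sample or instrument name
    color: Tuple[int, int, int]   # RGB colour of the projected overlay


# Hypothetical presets, one per geological class.
PRESETS: Dict[str, Preset] = {
    "igneous": Preset(sound="low_drone", color=(255, 80, 0)),
    "metamorphic": Preset(sound="glass_bells", color=(120, 0, 255)),
    "sedimentary": Preset(sound="soft_pads", color=(200, 170, 110)),
}


def preset_for(label: str, confidence: float, min_conf: float = 0.5) -> Optional[Preset]:
    """Return the preset for a detected rock type, or None for weak or unknown detections."""
    if confidence < min_conf:
        return None
    return PRESETS.get(label.strip().lower())
```

Filtering at this stage keeps flickering, low-confidence detections from triggering state changes downstream.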

System Iterations

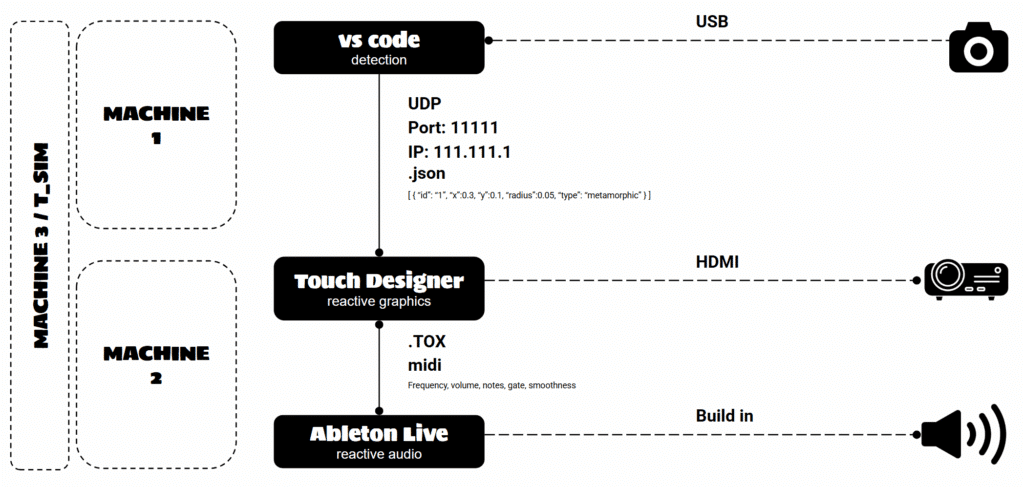

First System: Distributed Architecture

In our initial setup, the system was split across two machines:

- Device 1 (Python): Handled detection, FSM logic, and data packaging

- Device 2 (TouchDesigner + Ableton): Received data and generated visuals and sound

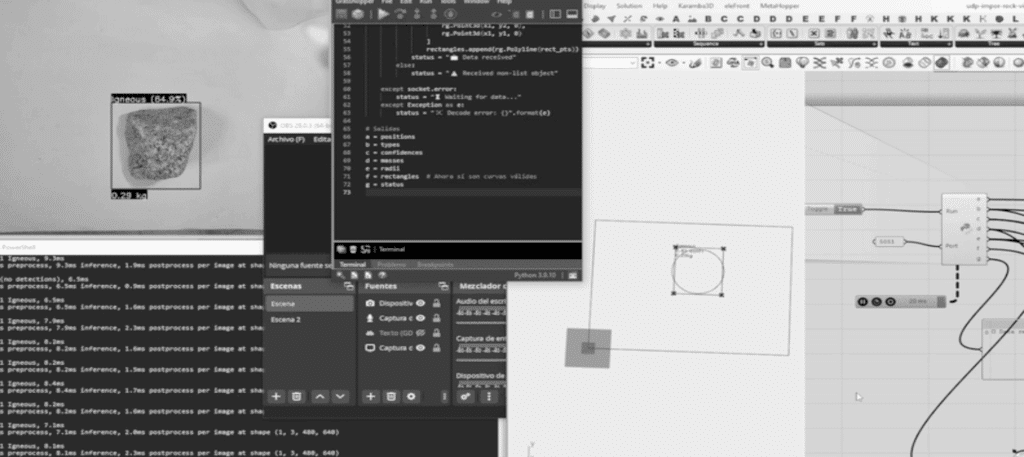

Data was captured via OpenCV and YOLO, converted into JSON (coordinates, area, rock type), and sent over a UDP socket to the second machine. Splitting the work this way kept the computationally heavy detection separate from rendering and audio, so neither machine had to carry both loads.
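The packaging-and-send step described above might look like the following sketch, using only the Python standard library. The JSON field names (`type`, `x`, `y`, `area`) and the `(rock_type, x1, y1, x2, y2)` input tuple are assumptions; the write-up only states that coordinates, area, and rock type were sent.

```python
import json
import socket
from typing import Iterable, Tuple

# One detection: (rock_type, x1, y1, x2, y2), box corners in camera pixels.
Detection = Tuple[str, float, float, float, float]


def make_packet(detections: Iterable[Detection]) -> bytes:
    """Serialise detections into a JSON payload with centre coordinates, area, and rock type."""
    rocks = [
        {
            "type": rock_type,
            "x": (x1 + x2) / 2,                    # box centre, camera pixels
            "y": (y1 + y2) / 2,
            "area": abs(x2 - x1) * abs(y2 - y1),   # box area, square pixels
        }
        for rock_type, x1, y1, x2, y2 in detections
    ]
    return json.dumps({"rocks": rocks}).encode("utf-8")


def send_detections(sock: socket.socket, detections: Iterable[Detection],
                    addr: Tuple[str, int]) -> None:
    """Send one frame's detections as a single UDP datagram (no delivery guarantee)."""
    sock.sendto(make_packet(detections), addr)
```

UDP suits this kind of stream because a lost frame is simply superseded by the next one, with no retransmission delay. On the receiving machine, TouchDesigner's UDP In DAT is one way to pick up these datagrams.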

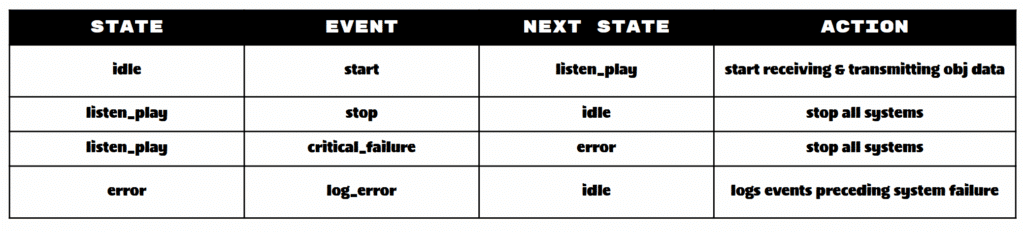

FSM 1: Modular but Complex

The first FSM defined distinct states for detection, projection, and sound playback. Each task was isolated in its own controlled state, reducing interference and making the flow of control clearer. However, the added states and transitions introduced more potential points of failure and made debugging more difficult.
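A modular FSM of this kind can be sketched as a fixed cycle in which each state runs exactly one task before handing over to the next, with an error state that halts the cycle. The stage names, the shared `frame` dictionary, and the reset behavior are hypothetical; the sketch only illustrates the isolation idea described above.

```python
from enum import Enum, auto
from typing import Callable, Dict, Optional


class Stage(Enum):
    DETECT = auto()
    PROJECT = auto()
    PLAY_SOUND = auto()
    ERROR = auto()


# Each task runs alone in its own state, then hands control to the next stage.
NEXT_STAGE = {
    Stage.DETECT: Stage.PROJECT,
    Stage.PROJECT: Stage.PLAY_SOUND,
    Stage.PLAY_SOUND: Stage.DETECT,
}


class PipelineFSM:
    def __init__(self, tasks: Dict[Stage, Callable[[dict], None]]) -> None:
        self.stage = Stage.DETECT
        self.tasks = tasks
        self.frame: dict = {}                      # data passed between stages in one cycle
        self.last_error: Optional[Exception] = None

    def step(self) -> Stage:
        """Run the current stage's task once, then transition."""
        if self.stage is Stage.ERROR:
            return self.stage                      # stay halted until reset() is called
        if self.stage is Stage.DETECT:
            self.frame = {}                        # start each cycle with fresh data
        try:
            self.tasks[self.stage](self.frame)
            self.stage = NEXT_STAGE[self.stage]
        except Exception as exc:                   # any failing task moves the machine to ERROR
            self.last_error = exc
            self.stage = Stage.ERROR
        return self.stage

    def reset(self) -> None:
        self.stage, self.frame, self.last_error = Stage.DETECT, {}, None
```

The isolation is visible here: a failing projection task can never run concurrently with sound playback. The cost is also visible: every stage adds a transition and a failure path that must be tested.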

Second System: Centralized Architecture

In the refined version, we consolidated all logic and output onto a single machine, using Grasshopper as the central platform:

- A Python script captured YOLO vision data and sent it via UDP

- Grasshopper received, interpreted, and used this data to drive both audio and visual output natively
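On the receiving side, a script running inside Grasshopper (for example, one re-triggered by a timer) only needs the most recent detection frame, not every queued packet. The sketch below drains the socket and keeps just the newest JSON message. It assumes a Python 3 runtime such as the CPython script component in Rhino 8; the older IronPython-based GhPython component would need adjustments. The function names are hypothetical.

```python
import json
import select
import socket
from typing import Optional


def open_receiver(port: int, host: str = "127.0.0.1") -> socket.socket:
    """Bind a non-blocking UDP socket on the local machine."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind((host, port))
    sock.setblocking(False)
    return sock


def latest_message(sock: socket.socket, wait: float = 0.0) -> Optional[dict]:
    """Drain every queued datagram and return only the newest decoded JSON message."""
    ready, _, _ = select.select([sock], [], [], wait)  # wait up to `wait` seconds for data
    if not ready:
        return None
    latest = None
    while True:
        try:
            data, _ = sock.recvfrom(65535)
        except BlockingIOError:                         # queue is empty
            break
        latest = data
    return json.loads(latest) if latest is not None else None
```

Discarding stale packets keeps the output locked to the current camera frame even when the solver runs slower than the detector.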

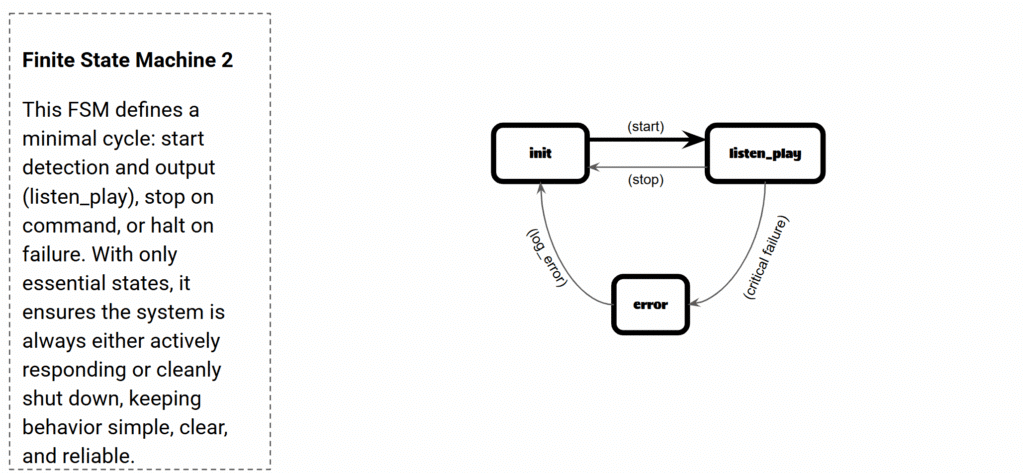

FSM 2: Lean and Reliable

This FSM had a minimal cycle:

- Start detection and output

- Stop on command or error

Fewer states meant fewer transitions and less complexity, which made the system faster to build and more reliable within our short development cycles.
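The two-state cycle above can be sketched in a few lines: a `RUNNING` state that performs one detection-and-output tick per step, and a `STOPPED` state reached either by command or by any error raised during a tick. The `tick` callback and the `stop_reason` strings are hypothetical placeholders for the real detection and output work.

```python
from enum import Enum, auto
from typing import Callable, Optional


class RunState(Enum):
    RUNNING = auto()
    STOPPED = auto()


class MinimalFSM:
    def __init__(self, tick: Callable[[], None]) -> None:
        self.state = RunState.STOPPED
        self.tick = tick                       # one round of detection and output
        self.stop_reason: Optional[str] = None

    def start(self) -> None:
        self.state, self.stop_reason = RunState.RUNNING, None

    def stop(self, reason: str = "command") -> None:
        self.state, self.stop_reason = RunState.STOPPED, reason

    def step(self) -> RunState:
        """Run one tick while RUNNING; any exception stops the machine."""
        if self.state is RunState.RUNNING:
            try:
                self.tick()
            except Exception as exc:
                self.stop(f"error: {exc}")
        return self.state
```

With only two states there are just three transitions to reason about (start, stop on command, stop on error), which is what made this version quick to debug.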

Reflection

While the second system performed more reliably, this reflected our time constraints more than any inherent superiority of the centralized design. The first system, though more complex, offered greater modularity, performance scalability, and the potential for distributed computing across space and time.

With more development time, the distributed approach could evolve into a powerful framework for high-resolution, real-time data interpretation and feedback, especially for installations requiring multiple sensory outputs or remote access.

Closing Thoughts

Rock the Rock demonstrates the expressive power of structured systems like FSMs when paired with real-time data inputs and multi-sensory outputs. Whether centralized for rapid prototyping or distributed for complex installations, these systems open a path for responsive, computational design grounded in the physical world.