Introduction

From the moment a baby grasps a finger, human learning is messy, sensory-rich, and rooted in lived experience. Neurons re-wire through curiosity, emotion, and social feedback, slowly weaving meaning from sparse data.

By contrast, modern AI models absorb terabytes of text and images in silence, tuning billions of weights to predict what comes next. This statistical prowess lets them compose essays, draft code, and paint photorealistic scenes—yet it also makes them prone to “hallucinations,” confidently inventing facts, citations, or entire objects that never existed.

In this post we’ll explore how these two learning systems diverge: plastic brains vs. gradient-descent machines, embodied memory vs. pattern matching, and reflective doubt vs. synthetic certainty. We’ll then unpack real-world hallucination failures, explain why they happen, and outline practical guardrails for anyone relying on generative AI.

Overview

Ever catch yourself swearing you saw something that wasn’t there? Your brain’s a prediction engine, and so is the AI powering your chatbot, though the two work very differently. Below, we’ll break down how humans and AI learn, spotlight their quirks, and show why AI sometimes “hallucinates,” spitting out convincing but false info. You’ll see real-world AI mistakes, from fake citations to botched medical advice, and a quick case study in which a model could define a phrase in words but not depict it in an image. By the end, you’ll see why these errors happen and how we can catch them.

How Humans Learn

Your brain’s a guessing machine. One influential theory of how it works is predictive coding: the brain makes educated guesses based on past experience (priors), compares them with incoming sensory signals, and corrects its guesses based on the mismatch (prediction error). Saw a shadow move? Your brain might scream “cat!” before checking whether it’s just a curtain swaying in the breeze. Memory works similarly, blending old and new information, which is why memories are sometimes fuzzy.

“Your brain bets on what’s likely, not what’s certain.”
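The “bet on what’s likely” idea is often modeled with Bayes’ rule: a prior belief is multiplied by how well each explanation fits the new evidence, then renormalized. Real brains are not known to run anything this tidy, so treat the following Python as a minimal sketch of the prior-plus-update idea; the hypotheses and probabilities are made up for illustration.

```python
def update_belief(prior, likelihood):
    """Apply Bayes' rule: posterior is proportional to prior x likelihood.

    `prior` maps each hypothesis to its current probability; `likelihood`
    maps each hypothesis to how well it explains the new evidence.
    """
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}
    total = sum(unnormalized.values())
    return {h: p / total for h, p in unnormalized.items()}


# Hypothetical numbers, chosen only for illustration.
prior = {"cat": 0.7, "breeze": 0.3}         # past experience: moving shadows are usually the cat
first_glance = {"cat": 0.5, "breeze": 0.5}  # a blur of motion fits both equally well
closer_look = {"cat": 0.1, "breeze": 0.9}   # the curtain is visibly swaying

belief = update_belief(prior, first_glance)
print(belief)  # the prior still dominates, so "cat" wins the first bet
belief = update_belief(belief, closer_look)
print(belief)  # the new evidence overturns the prior
```

When the evidence is ambiguous, the prior decides the answer; only evidence that clearly favors one explanation can override it. That is the same mechanism behind the illusions below.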

- Checker-Shadow Illusion: In this illusion, created by Edward Adelson, two squares on a partly shadowed checkerboard look like different shades of gray, though they’re identical. Your brain’s priors about lighting mislead you.

- Mandela Effect: Ever recall Nelson Mandela dying in prison in the 1980s? Many people do, but he was released in 1990 and died in 2013. Shared false memories like this can spread through suggestion and retelling.

How AIs Learn

AI language models, like GPT, learn by predicting words. They’re transformers: they slice text into tokens (think word chunks) and, during training, guess what comes next. This self-supervised process runs over billions of sentences, tuning the model to mimic patterns. This pretraining stage needs no human teacher, just text and math (human feedback is usually added later, during fine-tuning). But it’s not perfect.

- Data Gaps: If training data lacks niche topics, the AI stumbles. Ask about a rare disease, and it might guess wrong.

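- Temperature Sampling: When generating text, a randomness knob called temperature controls how adventurous each word choice is. Low settings stick to the likeliest words; high settings flatten the odds and spark wild guesses, making output creative but shaky.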
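To make the temperature knob concrete, here is a minimal Python sketch. It assumes the model has already assigned raw scores (logits) to a few candidate next tokens; the tokens and scores are invented for illustration, and real models score tens of thousands of candidate tokens at every step. Dividing the logits by the temperature before the softmax is the standard way temperature is applied.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw scores (logits) into probabilities, scaled by temperature.

    Temperatures below 1 sharpen the distribution toward the top token;
    temperatures above 1 flatten it, so unlikely tokens gain probability.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    top = max(scaled)  # subtract the max so math.exp never overflows
    exps = [math.exp(x - top) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(tokens, logits, temperature, rng=random):
    """Draw one token at random, weighted by the temperature-scaled probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


# Invented scores a model might give after "The capital of France is"
tokens = ["Paris", "Lyon", "Berlin", "banana"]
logits = [5.0, 2.0, 1.0, -1.0]

for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(f"T={t}:", {tok: round(p, 3) for tok, p in zip(tokens, probs)})

print(sample_next_token(tokens, logits, temperature=2.0))
```

At 0.2, nearly all the probability sits on “Paris”; at 2.0, the distribution flattens and even “banana” gets a few percent, so repeated sampling will occasionally pick it.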


Why AI Hallucinates

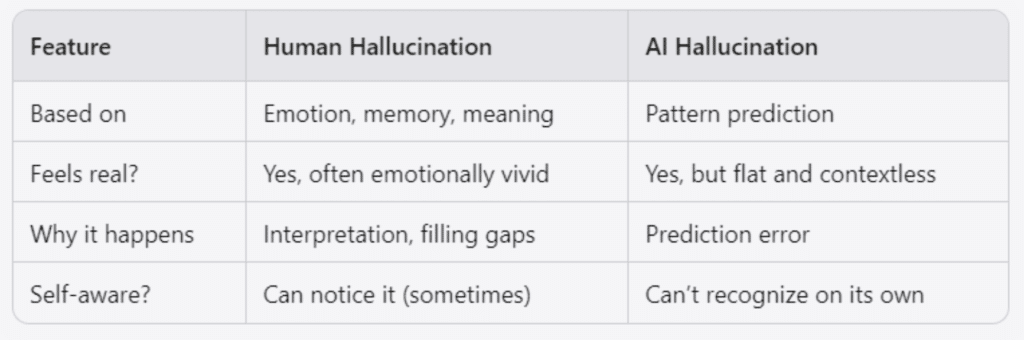

This section explains why hallucinations (fabricated or false outputs) happen in AI models like ChatGPT and image generators, and how they differ from human errors.

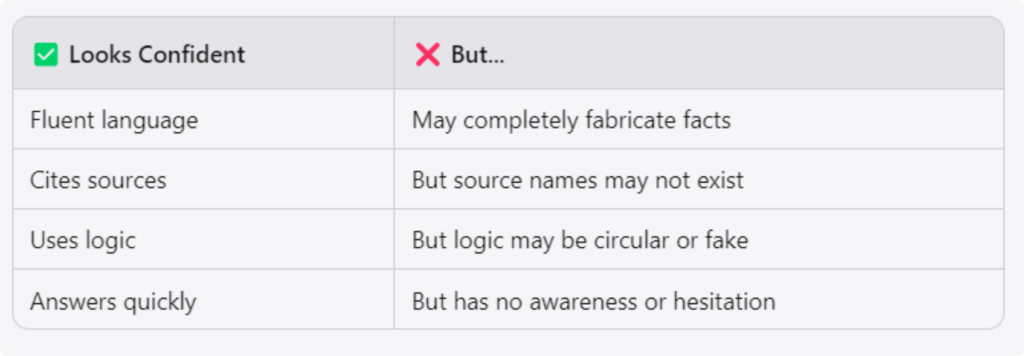

🔹 AI is a Prediction Machine, Not a Thinker

- AI doesn’t “know” facts; it predicts the most likely next word (or, for image models, the most plausible pixels) based on patterns.

- It doesn’t verify what it generates — it doesn’t know if it’s true or false.

- It tracks context only as statistical patterns and has no grasp of intent.

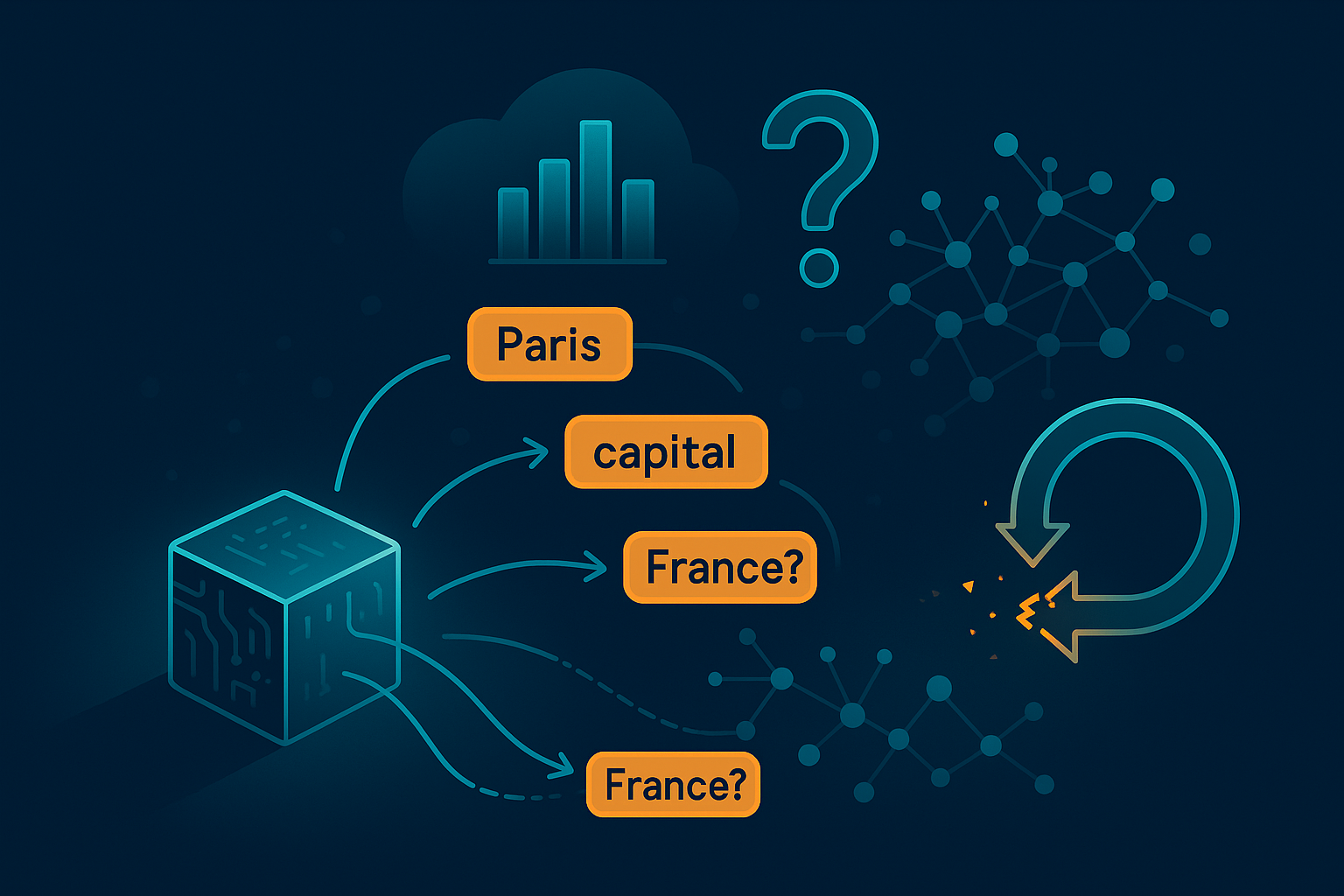

🔹 Trained on Correlations, Not Meaning

- AI learns from billions of examples — it sees what often appears together.

- It stores statistical relationships, not real-world concepts.

- For example: “Paris is the capital of…” → likely answer: France (pattern match, not knowledge).
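“Pattern match, not knowledge” can be shown with a toy next-word predictor that just counts which word followed each three-word context in a tiny, made-up corpus. This is a minimal Python sketch, not how a transformer works internally (transformers learn weights rather than storing raw counts), but it shares the key property: the output tracks frequency in the data, not truth.

```python
from collections import Counter, defaultdict


def train_next_word_counts(sentences, context_size=3):
    """Count which word follows each run of `context_size` words."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for i in range(len(words) - context_size):
            context = tuple(words[i:i + context_size])
            counts[context][words[i + context_size]] += 1
    return counts


def predict_next(counts, prompt, context_size=3):
    """Return the most frequent continuation, or None if the context was never seen."""
    context = tuple(prompt.lower().split()[-context_size:])
    if context not in counts:
        return None
    return counts[context].most_common(1)[0][0]


# Hypothetical corpus: the wrong claim about Australia appears more often
# than the right one, so pure pattern matching repeats the wrong one.
corpus = [
    "the capital of france is paris",
    "i read that the capital of france is paris",
    "the capital of australia is sydney",
    "many tourists think the capital of australia is sydney",
    "the capital of australia is canberra",
]

counts = train_next_word_counts(corpus)
print(predict_next(counts, "The capital of France is"))     # paris
print(predict_next(counts, "The capital of Australia is"))  # sydney (frequent, but wrong)
print(predict_next(counts, "The capital of Peru is"))       # None (never seen)
```

Unlike this toy, a real language model has no built-in “never seen this” signal: it always produces a probability distribution over next tokens, so a gap in the data tends to come out as a fluent guess rather than a blank.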

🔹 No Feedback Loop (Unless Programmed)

- A deployed model doesn’t learn from its mistakes on its own; its weights stay fixed until developers retrain or fine-tune it.

- It doesn’t feel uncertainty, confusion, or embarrassment like a human might.

- If it hallucinates, it doesn’t know it did.

When Humans Hallucinate

Humans hallucinate too, but for very different reasons than AI: memory, emotion, imagination, and meaning-making. Our errors are interpretive, not just predictive.

🔹 We Fill in the Gaps

- Human memory isn’t a hard drive — it’s reconstructive.

- We often misremember things or recall false details.

- We fill in missing information based on context, emotion, and expectation.

For example, the Mandela Effect: many people remember the children’s books as the “Berenstein Bears,” but the real title is “Berenstain Bears.”

🔹 Meaning > Accuracy

- Our brain prioritizes coherence, not correctness.

- We make sense of things even when they’re incomplete or ambiguous.

- We interpret based on emotion, social cues, and narrative.

🔹 Hallucinations Are Interpretive

- Dreams, déjà vu, optical illusions — all human-style hallucinations.

- They’re not just glitches — they reflect how we try to make sense of the world.

- We hallucinate to understand, not just to predict.

🔹 Emotions Shape Memory

- We remember emotionally charged events more vividly — but not necessarily more accurately.

- Memory is entangled with feeling, time, and place.

- What we recall is influenced by what mattered to us.

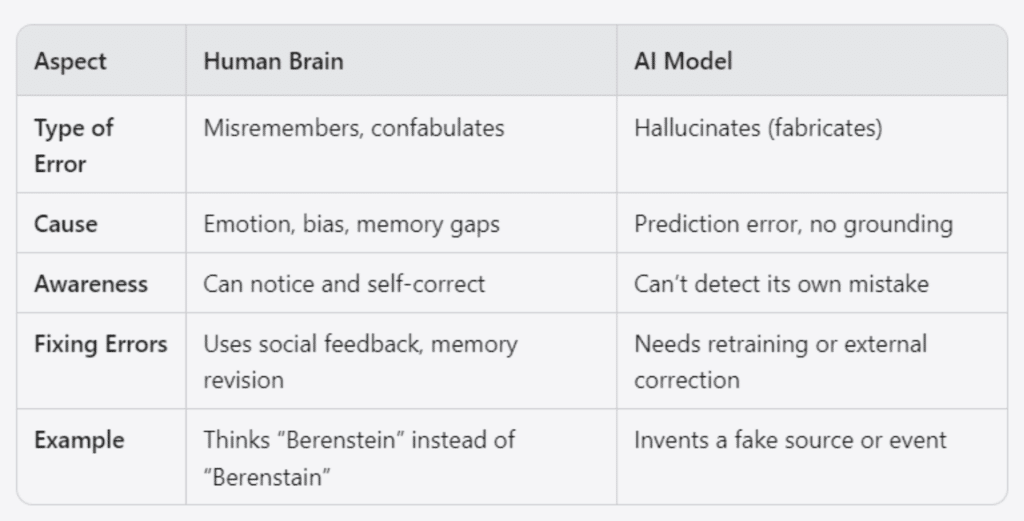

How Mistakes Differ: Humans vs. AI

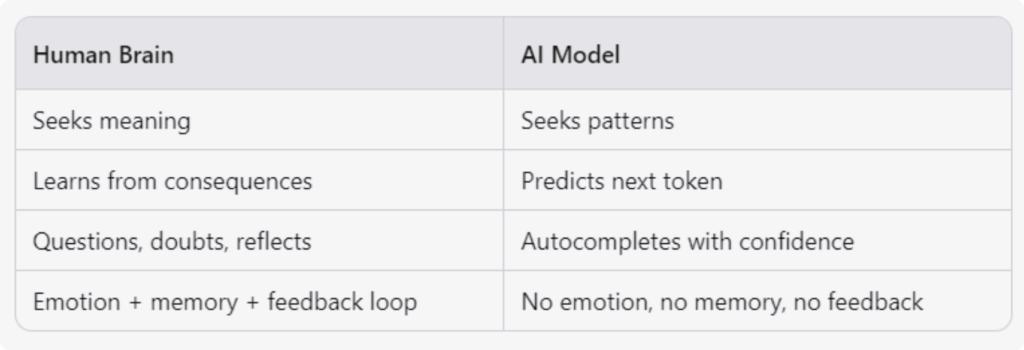

Both humans and AI make mistakes, but the nature, causes, and consequences of those mistakes are very different. Understanding those differences helps us design and trust AI more wisely.

🔹 Humans Make Meaningful Mistakes

- We misremember based on emotion, bias, or context.

- Mistakes often reveal how we interpret, not just what we forget.

- Social feedback helps us correct ourselves (e.g., we ask others, reflect, revise).

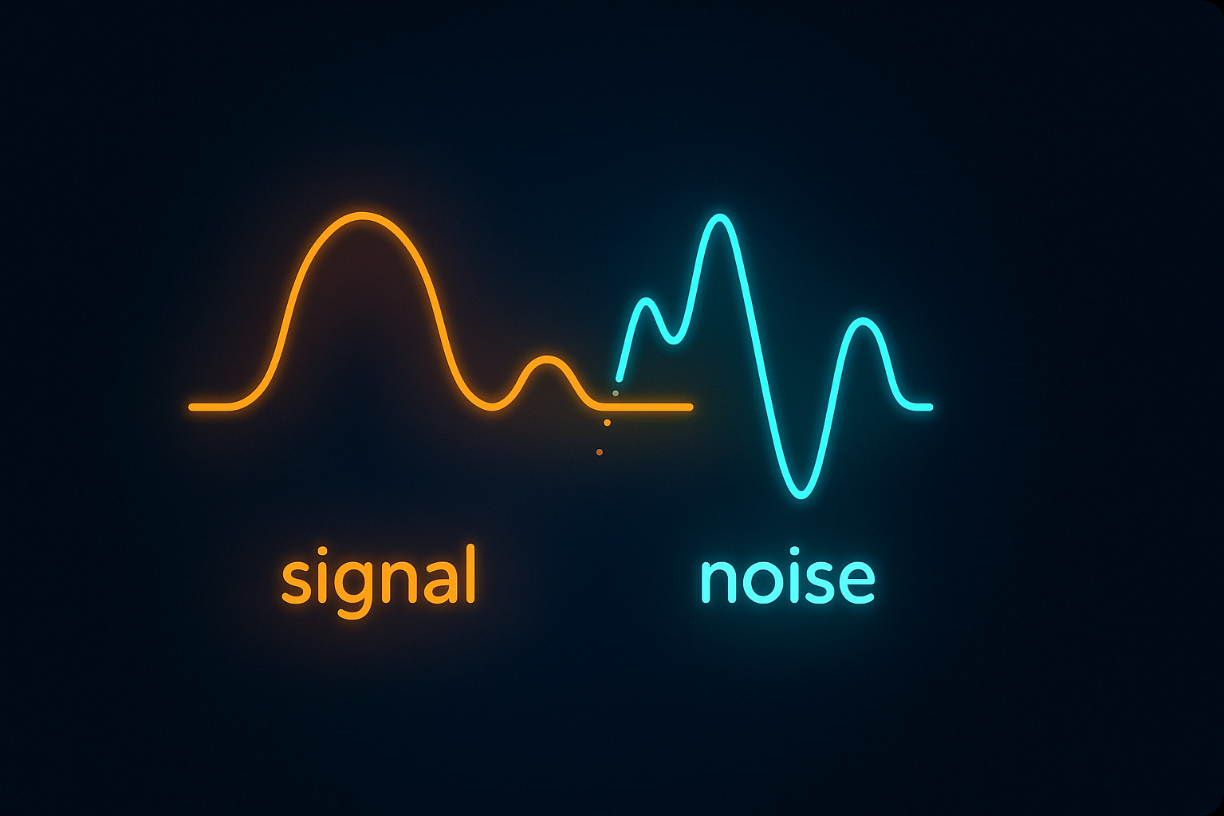

🔹 AI Makes Pattern-Based Errors

- AI “hallucinates” when it generates something plausible but false.

- It doesn’t know it’s wrong — there’s no awareness or understanding.

- Errors come from data bias, lack of grounding, or misalignment in training.

Key Insight:

Human mistakes are interpretive.

AI mistakes are predictive.

Brains, Bots, and the Truth

We’ve seen how humans and AI learn, make mistakes, and hallucinate differently. Now let’s connect that to real-world implications: trust, design, ethics, interpretation, and responsibility.

Key Takeaways

- Humans and AI both hallucinate, but for different reasons.
  - Human hallucination = emotional, interpretive, meaning-driven.
  - AI hallucination = statistical, pattern-driven, confidence without comprehension.

- AI doesn’t understand; it predicts.
  - It’s powerful, but blind. It doesn’t “know” what it’s saying.

- Human learning is rich, embodied, and social.
  - We learn through touch, emotion, failure, and feedback.

- We must design with awareness.
  - Knowing how AI gets things wrong helps us build better interfaces, fact-checking layers, and ethical boundaries.

- Responsibility is human.
  - AI can’t tell truth from fiction; we can, and should.

“If You Remember One Thing…”

“Humans seek meaning.

Machines seek patterns.

Knowing the difference is how we stay in control.”

Conclusion

Trust, But Verify

Red Flags: Signs of an AI Hallucination

🔹 How to Catch One

- Ask: “Can this be verified?”
  - Check the facts manually (dates, names, citations).

- Ask: “Where did this come from?”
  - Models can produce citations that look real but don’t exist. Unless the tool pulls sources from a search engine or database, treat every reference as unverified.

- Ask: “Would a person say this?”
  - Look for tone mismatches or missing nuance.

- Ask: “Is this too perfect?”
  - Hallucinations often sound polished but are factually empty.
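Part of the citation check can be automated. Below is a minimal Python sketch, assuming citations appear as DOIs and that you keep your own list of DOIs you have already verified; the function names are hypothetical, and the identifiers in the example are placeholders, not real papers. The regular expression is a common pattern for modern DOIs and can miss some older or unusual ones. The sketch only flags what to look up; it cannot confirm that a real source says what the model claims.

```python
import re

# A common pattern for modern DOIs: "10.", a 4-9 digit registrant code,
# a slash, then a suffix of letters, digits, and common punctuation.
DOI_PATTERN = re.compile(r"\b10\.\d{4,9}/[-._;()/:a-z0-9]+", re.IGNORECASE)


def _trim(doi):
    """Strip punctuation that belongs to the surrounding sentence, not the DOI."""
    doi = doi.rstrip(".,;")
    # Drop an unmatched closing parenthesis, e.g. from "(doi:10.x/y)".
    while doi.endswith(")") and doi.count(")") > doi.count("("):
        doi = doi[:-1].rstrip(".,;")
    return doi


def extract_dois(text):
    """Return every DOI-like string found in `text`, in order."""
    return [_trim(m) for m in DOI_PATTERN.findall(text)]


def flag_unverified(text, trusted_dois):
    """Return cited DOIs that are missing from your own trusted list.

    `trusted_dois` is a hypothetical set you maintain yourself (for example,
    exported from a reference manager). A missing DOI is not proof of a
    hallucination, just a signal to look it up by hand.
    """
    trusted = {d.lower() for d in trusted_dois}
    return [d for d in extract_dois(text) if d.lower() not in trusted]


# Placeholder identifiers for illustration; they do not point to real papers.
answer = (
    "See Smith et al. (doi:10.9999/placeholder.001) and "
    "Lee (https://doi.org/10.9999/placeholder.002)."
)
trusted = {"10.9999/placeholder.001"}
print(flag_unverified(answer, trusted))  # ['10.9999/placeholder.002']
```

Anything the function flags still needs a human to look it up; anything it passes only means you have seen that identifier before.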

🔹 What to Do When You Suspect One

- Cross-check with a search engine

- Ask the model to explain or rephrase

- Look for missing context

- Consult a human expert

AI Hallucination Test

Quick Test

- Does it sound too good to be true?

- Does the source exist?

- Is the logic sound?

- Can a human verify it?

Quick Case Study

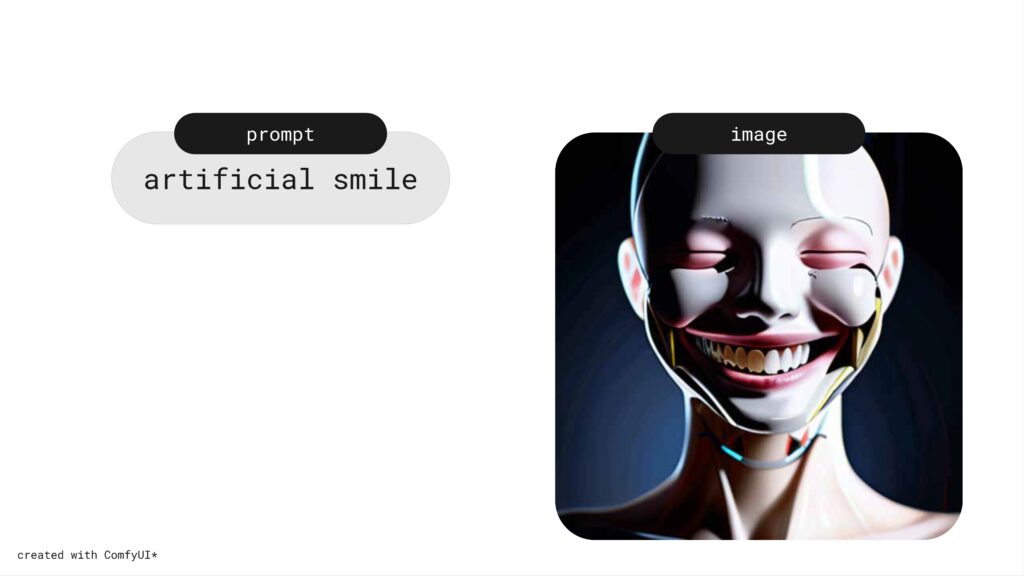

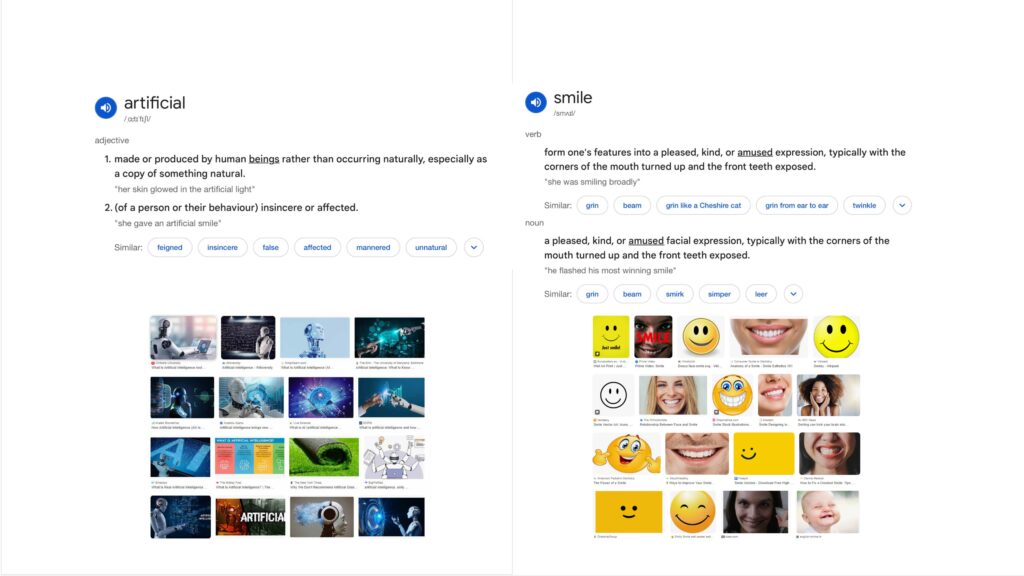

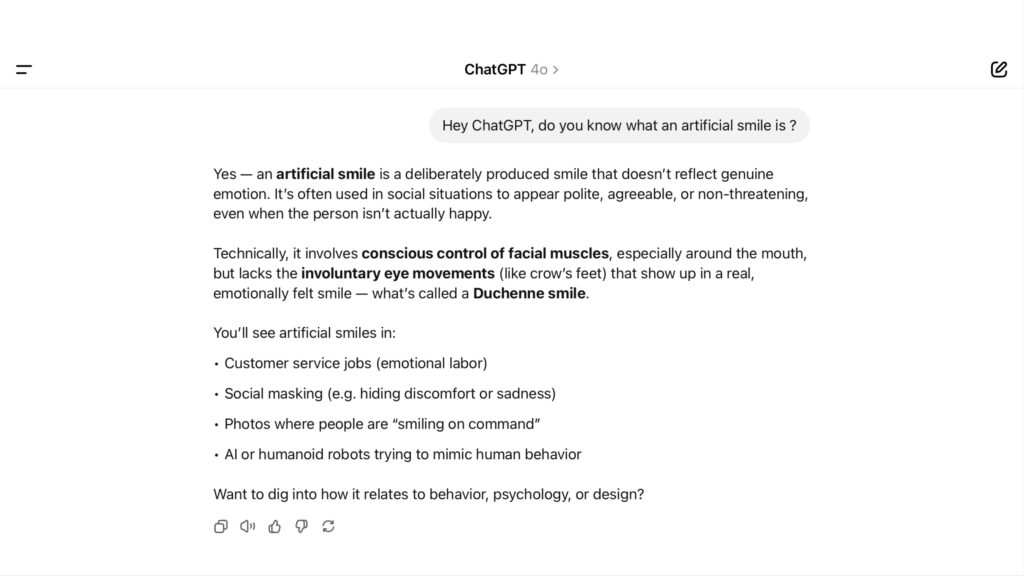

We conducted a quick case study to see how generative AI interprets and visualizes a culturally loaded phrase: “artificial smile.”

We asked both a text-based model (ChatGPT) and an image-generation model, run through ComfyUI (a node-based interface for diffusion models), to respond to the same phrase.

Verbally, the text model can define what an artificial smile means. It can explain the social context, emotional labor, and the difference between surface and genuine expression.

When generating an image, however, the image model doesn’t use that meaning. Instead, it breaks the phrase into its parts, “artificial” and “smile,” and draws on what each word is visually associated with.

The result is not a representation of the concept, but a combination of typical features: smooth skin, symmetrical teeth, synthetic texture.

The result is recognition, not understanding: the phrase is captured in language, but not in the image.