An exploratory design process that transforms spaces into atmospheric and phenomenological experiences through lighting design.

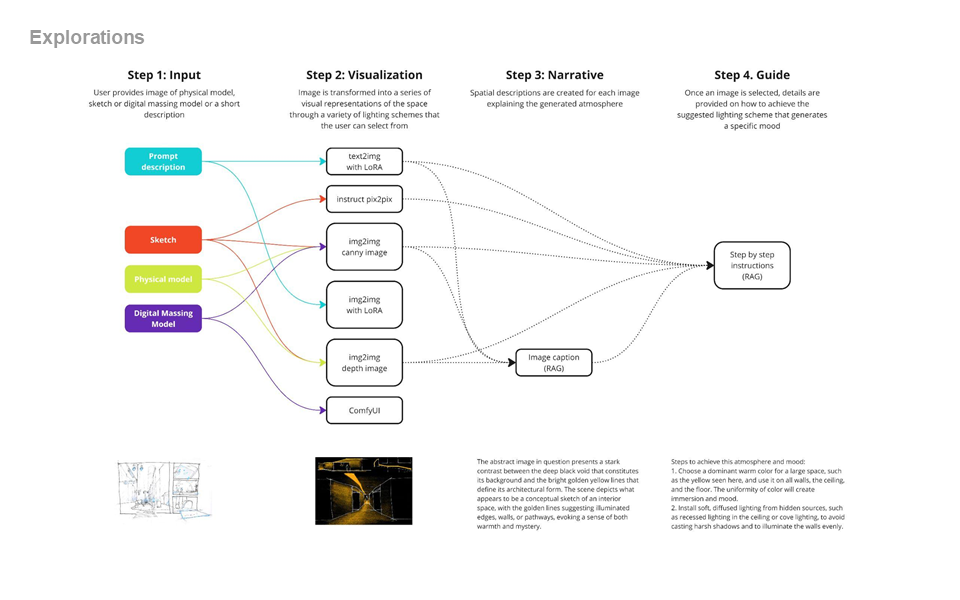

The primary objective of this project is to develop a robust generative AI workflow that integrates into the architectural design process and enhances the atmospheric and phenomenological qualities of spaces through lighting design. The workflow aims to provide users with a range of lighting schemes, each simulated using LoRA (Low-Rank Adaptation) and diffusion models trained to represent various lighting conditions. By transforming user-provided images into a series of visually distinct renderings, the methodology lets designers explore and select lighting arrangements that evoke specific moods and atmospheres. The project also seeks to offer implementation details that guide users in recreating the chosen lighting effects in real-world settings. Ultimately, it seeks to facilitate the use of architectural visualization and lighting design, fostering environments that resonate with occupants on an emotional and experiential level.

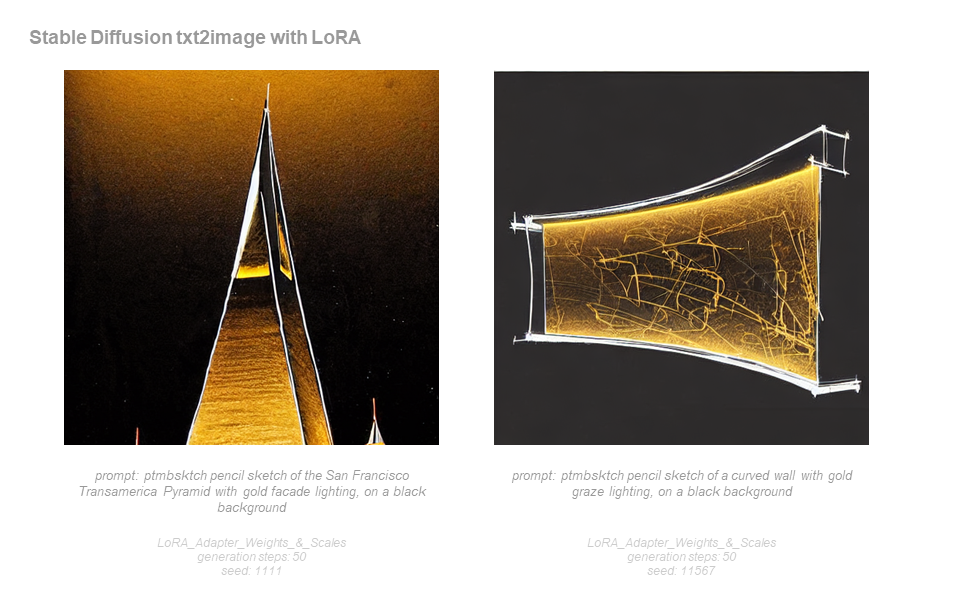

LoRA

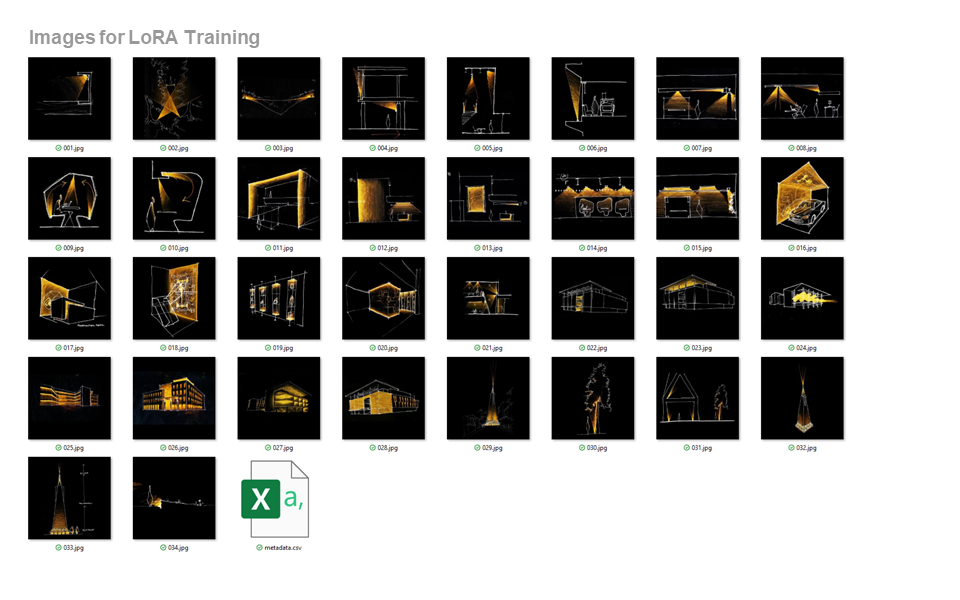

The LoRA was trained on 34 images (sketches created by Patrick MacBride) and their associated captions. Weights & Biases was used to visualize training and validation across the 1,500 training steps. In general, the training produced good results. For the trained style to carry over, the new txt2img prompts had to reuse the tokens from the training captions, such as “ptmbsketch pencil drawing”, “gold”, and “black background”.
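The point about reusing caption tokens can be illustrated with a small helper. This is a minimal sketch, not the notebook code used in the project: the function name `build_prompt` and the example subject are hypothetical, and only the three tokens come from the captions quoted above.

```python
# Trigger tokens quoted from the training captions described above.
TRIGGER_TOKENS = ["ptmbsketch pencil drawing", "gold", "black background"]


def build_prompt(subject, tokens=TRIGGER_TOKENS):
    """Return a txt2img prompt that contains every caption token.

    Tokens already present in the subject text are not repeated.
    (Hypothetical helper, for illustration only.)
    """
    parts = [subject.strip()]
    lowered = subject.lower()
    for token in tokens:
        if token.lower() not in lowered:
            parts.append(token)
    return ", ".join(parts)


if __name__ == "__main__":
    # Example subject is made up for illustration.
    print(build_prompt("light washing a concrete stair"))
```

Keeping the tokens in one list makes it harder to drop one by accident when prompts are edited by hand.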

Once the LoRA was created, we experimented with ControlNet through Automatic1111. We used img2img to overlay lighting sketches on top of architecture. The LoRA-generated image (created in a separate notebook) was the first input. The ControlNet image, shown first below, was processed using canny, depth, mlsd, and other controls. The resulting image is shown on the right below. The process maintained the essence of the LoRA sketch in color and in the placement of brightness while using the architectural image as the starting point.

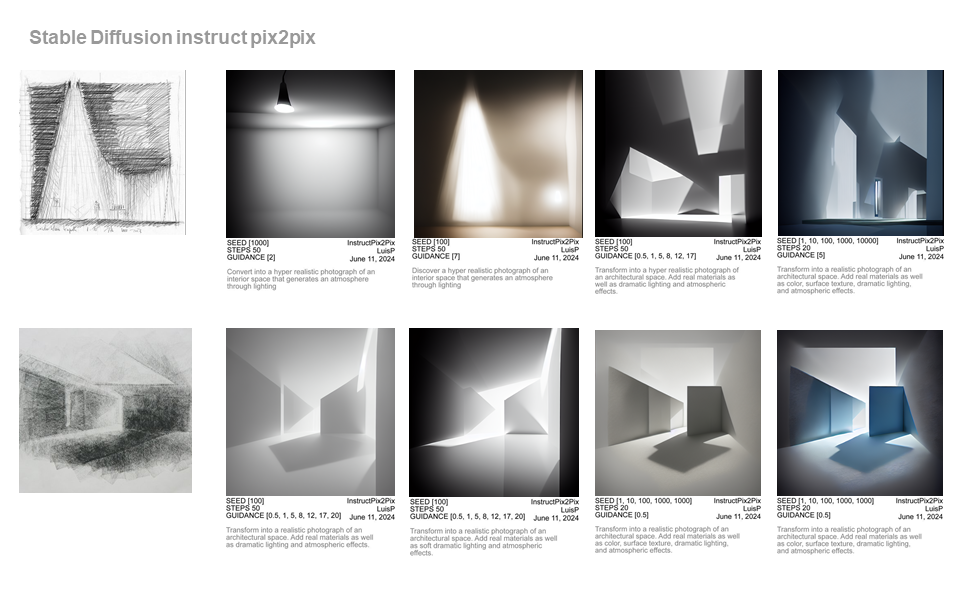
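For readers who want to script this step instead of using the Automatic1111 interface, the request could be assembled roughly as follows. This is a sketch under several assumptions: it targets the web UI's `/sdapi/v1/img2img` endpoint (available when the UI is started with `--api`), it assumes the sketch is the img2img input and the architectural image feeds ControlNet, and the ControlNet unit fields, preprocessor names, and model names depend on the installed extension version. The model strings are placeholders, not real checkpoint names, and the function names are hypothetical.

```python
import base64


def encode_image(raw_bytes):
    """Base64-encode image bytes, the format the web UI API expects."""
    return base64.b64encode(raw_bytes).decode("ascii")


def build_img2img_payload(sketch_image, architecture_image, prompt,
                          modules=("canny", "depth", "mlsd"),
                          denoising_strength=0.6, seed=-1):
    """Build a JSON-serializable img2img request with one ControlNet unit
    per preprocessor. Nothing is sent; the caller would POST the result."""
    control_b64 = encode_image(architecture_image)
    units = [
        {
            "enabled": True,
            "image": control_b64,   # assumed field name; check your extension version
            "module": module,       # preprocessor names vary by version
            "model": "<controlnet-model-for-%s>" % module,  # placeholder
            "weight": 1.0,
        }
        for module in modules
    ]
    return {
        "init_images": [encode_image(sketch_image)],
        "prompt": prompt,
        "denoising_strength": denoising_strength,  # illustrative value
        "seed": seed,                              # -1 requests a random seed
        "alwayson_scripts": {"controlnet": {"args": units}},
    }
```

The payload would be posted as JSON to the running web UI. The denoising strength shown is only a starting value, not the setting used in our experiments.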

Pix2Pix and img2img Exploration

In our exploration of visualizing light through generative AI, we sought to go beyond the preliminary LoRA experiments to uncover other techniques and outcomes. Our initial experiments used Instruct Pix2Pix through Stable Diffusion, a tool designed to transform images based on textual instructions and to add finer details to the generated images. The method proved interesting because our attempts to generate realistic images often resulted in abstract interpretations. At first, the process involved the straightforward addition of simple light fixtures. By experimenting with guidance scales and seed variations, however, we achieved more abstract spatial configurations. These outputs, based on Peter Zumthor’s sketches, highlighted a challenge: the model had trouble interpreting shaded regions within the drawings, which resulted in unexpectedly abstract representations of light.

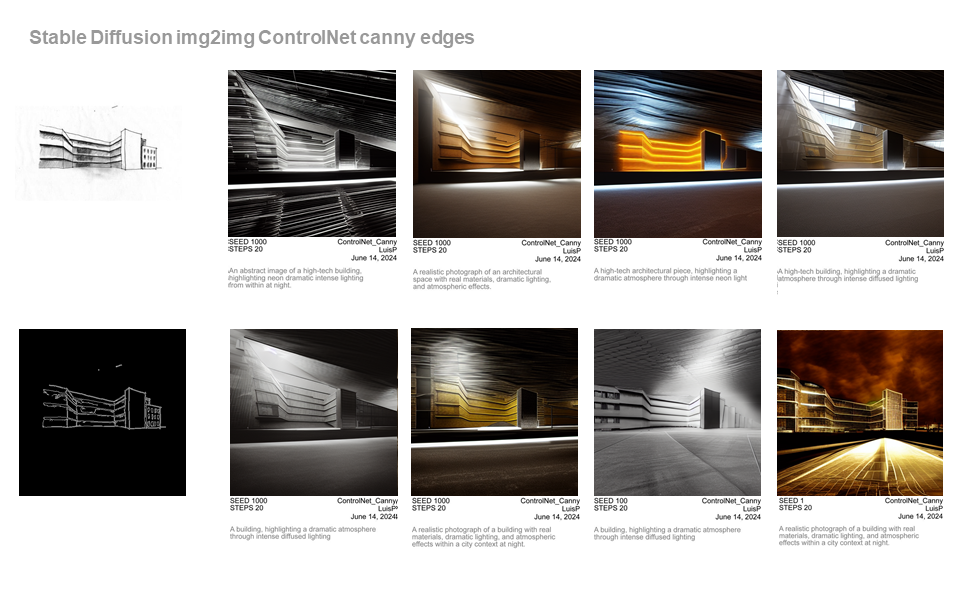

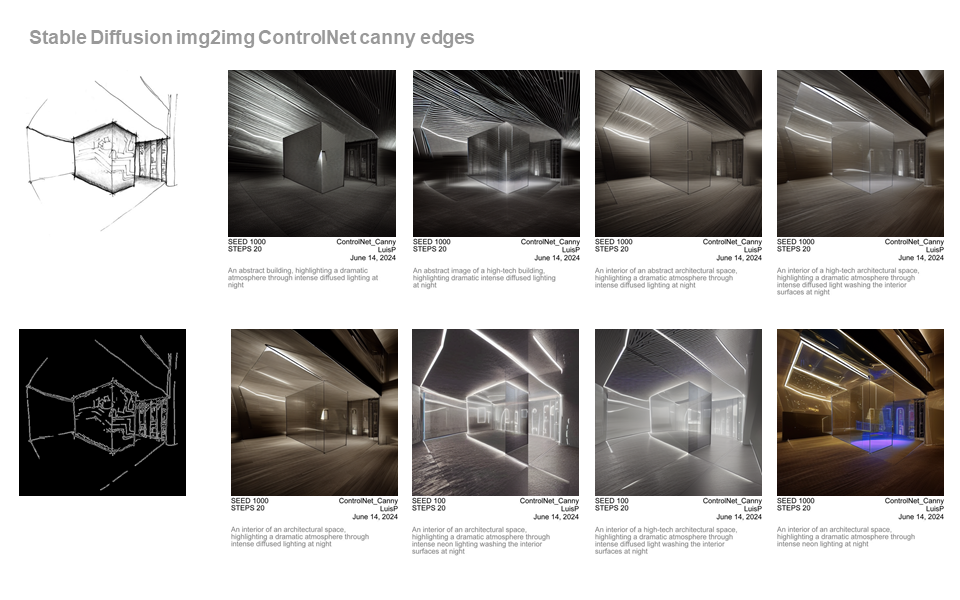

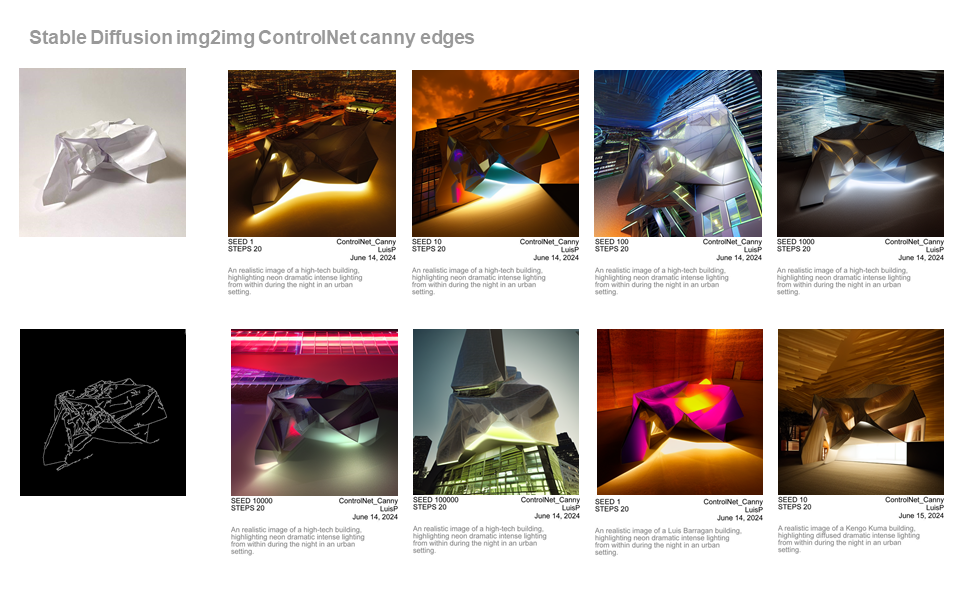
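The guidance-scale and seed experiments described above amount to a parameter sweep. The sketch below only enumerates the combinations and does not run a model. The key names `guidance_scale` and `image_guidance_scale` mirror the parameters of the Instruct Pix2Pix pipeline in the diffusers library, which is an assumption about tooling. The numeric values and the instruction text are illustrative, not the settings we used.

```python
from itertools import product


def sweep(instruction, text_scales, image_scales, seeds):
    """List every combination of text guidance, image guidance, and seed
    for one edit instruction, so that each output can be traced back to
    the settings that produced it."""
    return [
        {
            "prompt": instruction,
            "guidance_scale": g,         # how strongly to follow the text
            "image_guidance_scale": ig,  # how closely to stay to the input image
            "seed": s,
        }
        for g, ig, s in product(text_scales, image_scales, seeds)
    ]


if __name__ == "__main__":
    runs = sweep("add a pendant light", [5.0, 7.5], [1.0, 1.5], [1, 2, 3])
    print(len(runs), "configurations")
```

Recording the seed with each configuration makes it possible to reproduce an abstract result that turns out to be worth keeping.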

Subsequently, we employed ControlNet’s img2img with canny edges to further explore light schemes and styles for building exteriors. This technique resulted in more control over the creative process and manipulation of the original images. The initial outputs were abstract, yet as the approach was refined, the generated images began to resemble realistic architectural renderings. This method allowed for an exploration of various lighting schemes, offering a greater depth of detail and realism compared to our earlier attempts.

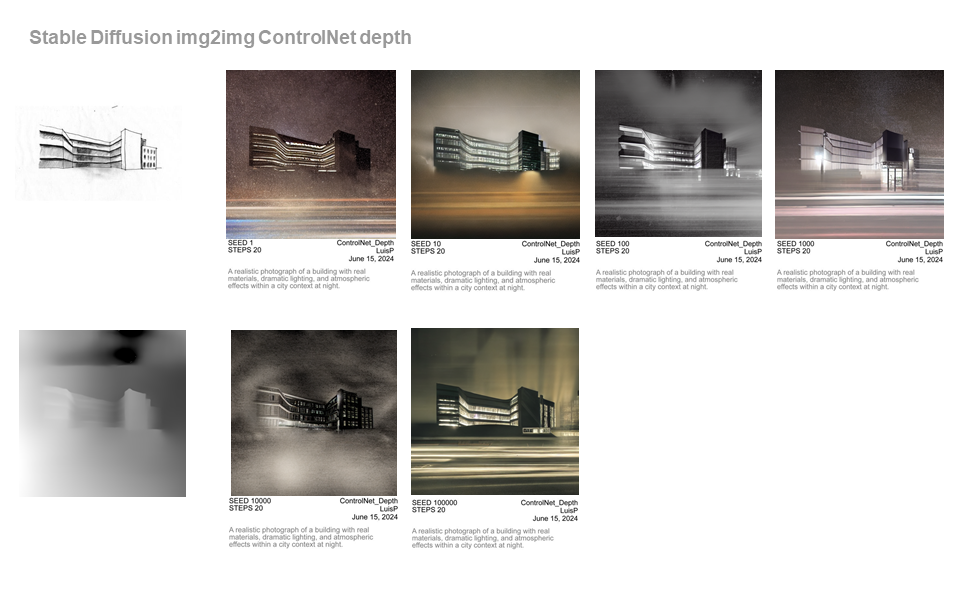

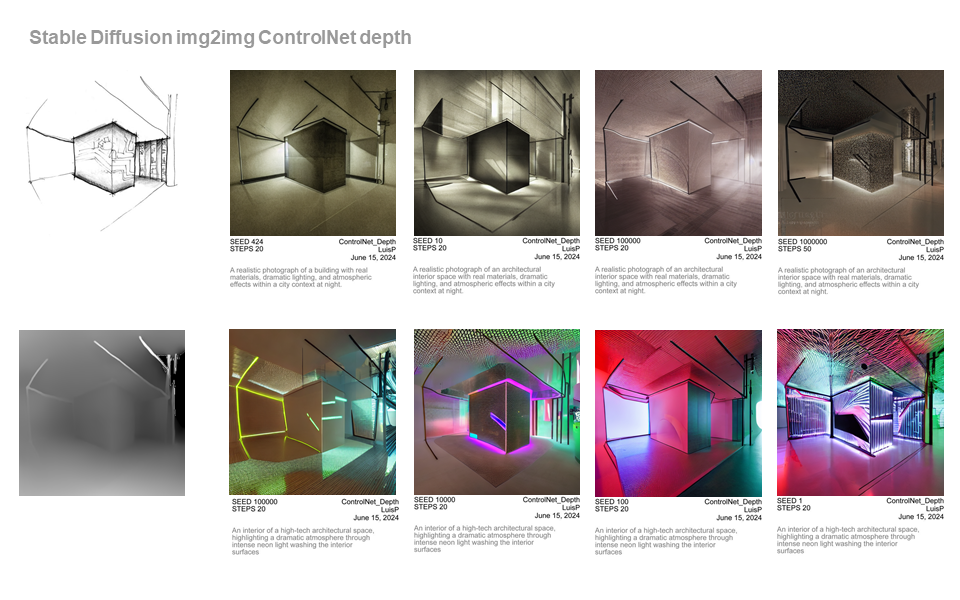

In parallel, we experimented with depth images through ControlNet’s img2img, keeping the prompts constant while varying the seeds. This approach consistently produced coherent building structures that added a sense of space. One notable characteristic was the frequent appearance of a blurry depth-of-field effect in the foreground, which, while consistent, added a unique atmospheric quality to the images. The interplay of context and atmospheric variations across these images was particularly compelling and shows the potential of generative AI to create diverse and evocative visual experiences. Through these explorations, we gained valuable insights into the capabilities and limitations of current open-source AI models in rendering complex light and atmospheric effects in architectural visualizations.

ComfyUI

We also experimented with real-time rendering using ComfyUI with ControlNet and img2img logic. In this way, designers can explore lighting methods and moods while working in 3D modeling software such as Rhino or Revit.

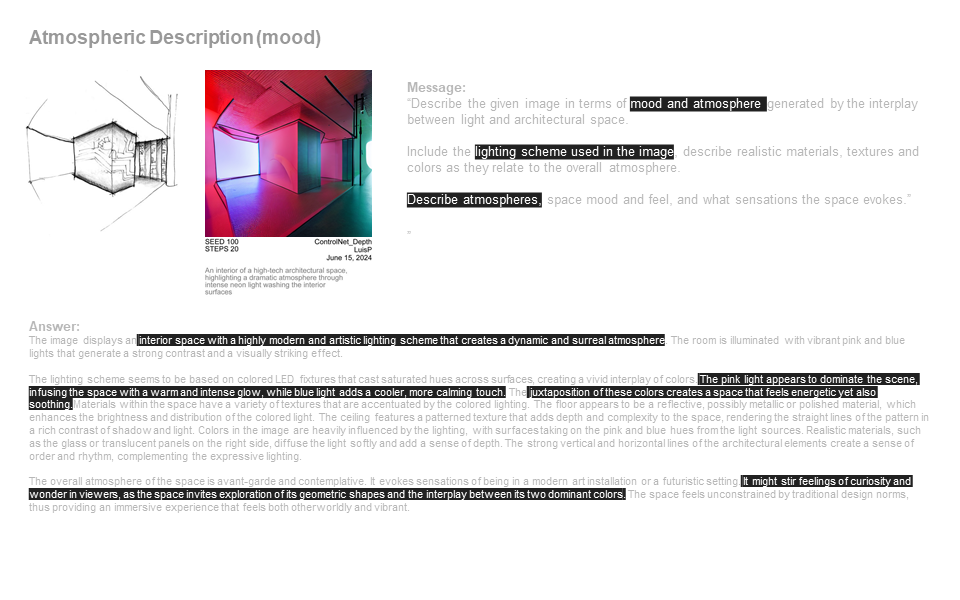

RAG and LLM

We applied retrieval-augmented generation (RAG) methods to large language models (LLMs). We created a knowledge pool consisting of open-source text about lighting and atmosphere in architecture, as well as technical documents relating to lighting design. We created vector embeddings from these resources and merged all the vectors into a single JSON file. Once this JSON file was deployed with the LLM, we used the model to describe provided images. Specifically, we asked it to provide atmospheric as well as technical step-by-step guidance on how to implement the lighting scheme in each image. In this way, the LLM is more robust and better able to answer the lighting-specific questions that typically arise in the architectural design process.
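The retrieval step can be sketched in a few lines. This is an illustrative stand-in, not our pipeline: `toy_embed` is a hashed bag-of-words vector used in place of a real embedding model, the JSON layout (`text` plus `embedding` per record) is an assumption, and all function names and sample passages are hypothetical. The retrieved passages are placed in the prompt ahead of the question sent to the LLM.

```python
import json
import math
import zlib

DIM = 256  # size of the toy embedding vector (arbitrary)


def toy_embed(text, dim=DIM):
    """Hashed bag-of-words vector; a stand-in for a real embedding model."""
    vec = [0.0] * dim
    for word in text.lower().split():
        vec[zlib.crc32(word.encode("utf-8")) % dim] += 1.0
    return vec


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def build_store(chunks):
    """Embed each text chunk and merge everything into one JSON string."""
    return json.dumps([{"text": c, "embedding": toy_embed(c)} for c in chunks])


def retrieve(store_json, question, k=2):
    """Return the k chunks whose embeddings are closest to the question."""
    records = json.loads(store_json)
    q = toy_embed(question)
    ranked = sorted(records, key=lambda r: cosine(q, r["embedding"]), reverse=True)
    return [r["text"] for r in ranked[:k]]


def build_rag_prompt(question, passages):
    """Place the retrieved passages ahead of the question for the LLM."""
    context = "\n".join("- " + p for p in passages)
    return "Context:\n" + context + "\n\nQuestion: " + question


if __name__ == "__main__":
    store = build_store([
        "warm grazing light along a textured stone wall",
        "acoustic panels reduce reverberation in lecture halls",
    ])
    question = "how to light a textured stone wall"
    print(build_rag_prompt(question, retrieve(store, question, k=1)))
```

With a real embedding model, only `toy_embed` would change. The JSON store, the similarity ranking, and the prompt assembly keep the same shape.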