Abstract

Generative design has become a prominent topic. Over the past few years, machine learning tools have emerged as invaluable aids in creating more precise and diverse 2D and 3D building models, fostering greater user engagement in the design process. A review of previous approaches shows that techniques such as diffusion models, generative adversarial networks (GANs), and graph neural networks (GNNs) all attempt to teach computers to understand the complexity of a building and reconstruct it.

This research continues this line of inquiry and develops another method that lets ML models understand, learn, and reconstruct the information in a building plan in order to build a generator. The trained model allows users to customize the generated results. In addition, it relies on the building's essential circulation lines (i.e., corridor spaces) to generate a reasonable building plan.

After verifying the feasibility of this methodology, this study proposes a practical approach for the subsequent generation of architectural 3D space.

Introduction

Generative design has garnered significant attention in recent years, captivating the design and architecture community with its potential to revolutionize the creative process. The emergence of machine learning tools has played a pivotal role in enhancing the precision and diversity of 2D and 3D building models, thus igniting greater enthusiasm and involvement from users in the design process. Reflecting on past approaches, it becomes evident that techniques like diffusion models, generative adversarial networks (GANs), graph neural networks (GNNs), and others have all aimed to equip computers with the capability to comprehend the intricacies of building structures and subsequently reconstruct them.

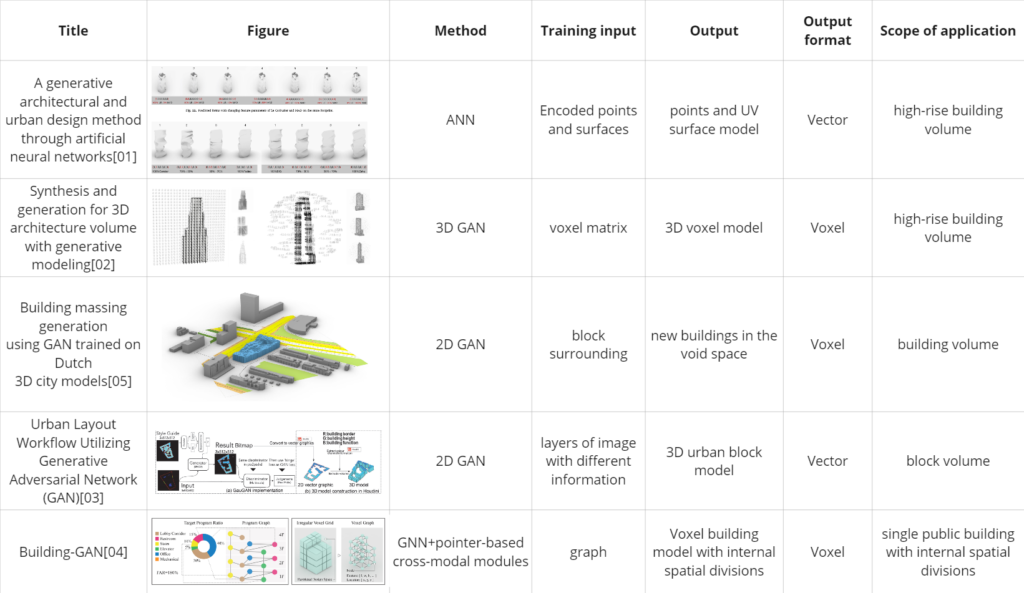

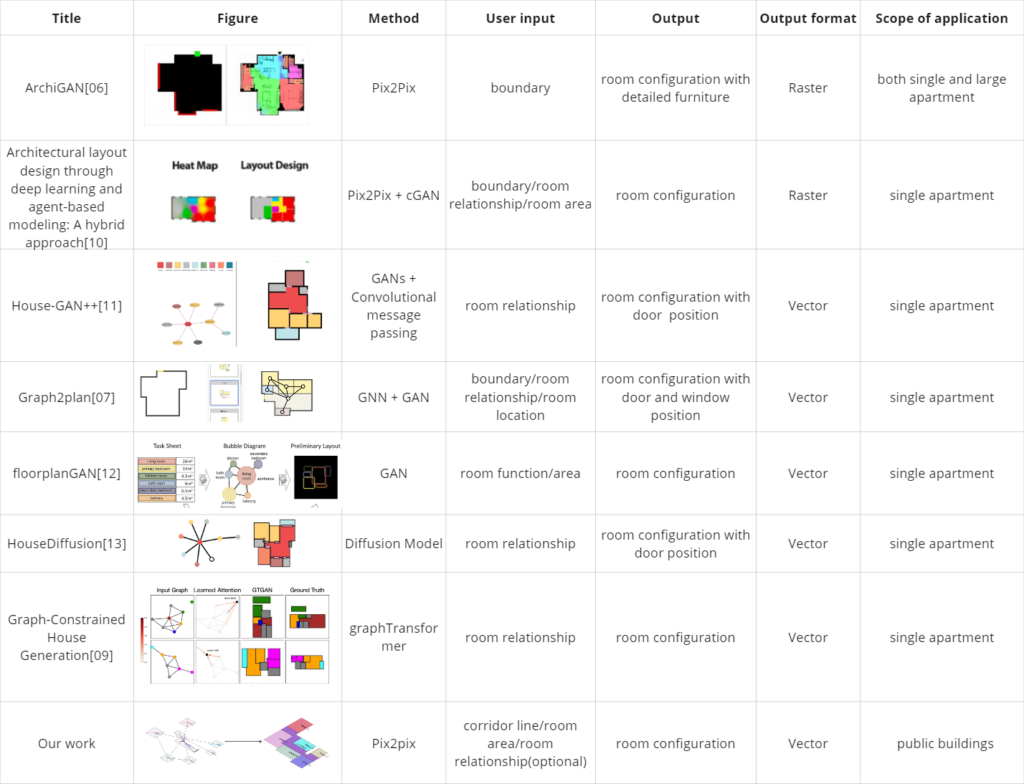

How can a computer understand the logic of a building and regenerate similar buildings? Previous research can be roughly divided into two areas: the generation of 3D building volumes and the generation of 2D building plans. The models behind these results are often based on GAN, 3DGAN, GNN, etc., and the results obtained have certain limitations.

Because of the internal complexity of 3D building space, most generative models that address the division of building space focus on generating 2D floor plans, since a building plan can often represent the general layout of a building. There are many ways to generate 2D plans: diffusion models, GANs, hybrid GNN-GAN methods, and others.

This research endeavor seeks to extend the discourse surrounding generative design by proposing an alternative approach that enables machine learning to not only grasp but also learn and reconstruct the nuanced information inherent in building plans, thereby empowering the development of a versatile generator. The training outcomes attained through this method will grant users the flexibility to tailor the generated designs to their specific requirements and preferences. Additionally, the methodology places a strong emphasis on ensuring the incorporation of essential circulation pathways within the building, such as corridors, in order to produce building plans that are both aesthetically pleasing and functionally viable.

Following the validation of the viability of this novel methodology, this study puts forward a practical solution for the subsequent generation of architectural 3D spaces. By bridging the gap between machine learning and architectural design, this research not only contributes to the evolution of generative design but also promises to reshape the way we conceive and construct buildings in the future.

Literature Review

Research Objectives

Most previous research starts from the relationships between architectural spaces and reconstructs a plan by letting the user specify basic information about each room, such as its function, its area, and its adjacency to other rooms. For single apartment plans, these conditions are enough to produce good results.

However, for other building types such as museums, shopping malls, and hospitals, traffic circulation is an important element that runs through the space. This study attempts to introduce an additional condition on top of these basic constraints, focusing on the close relationship between the building plan and its circulation space.

Compared with previous studies, this research addresses the following:

1, It attempts to find a more precise method to capture building features and learn from them (applicable to both 2D and 3D).

2, It goes beyond single apartments and explores the possibility of generating public buildings.

3, In addition to meeting the basic conditions for rational building generation, it introduces ‘circulation’, an important element of public buildings.

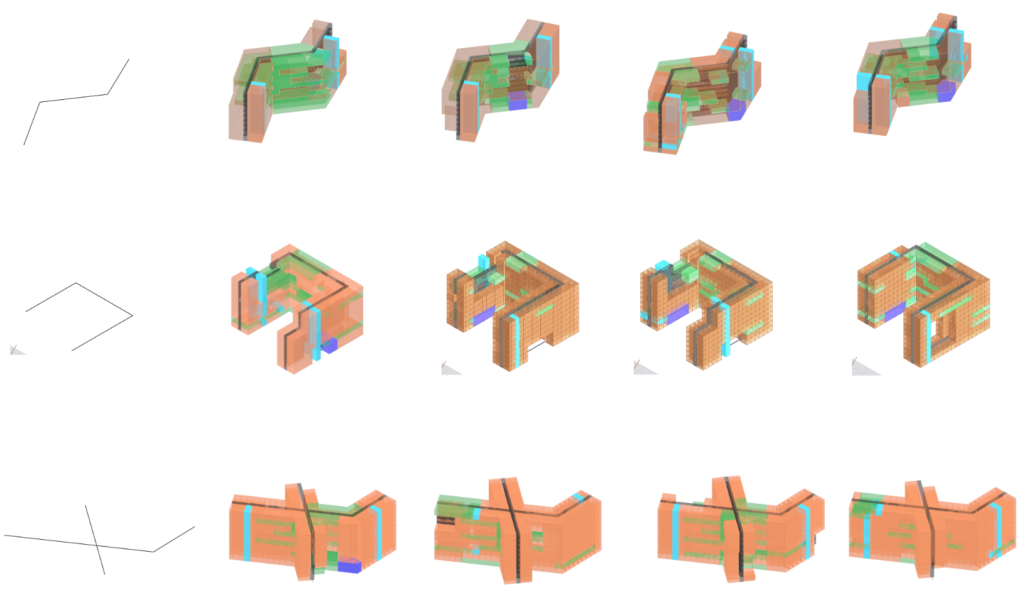

This example was generated in Grasshopper. Different colors in the picture represent different functions in public buildings. The gray part is the corridor space, which simulates generating a complete building by first specifying the corridor space. Inputting different corridor reference lines yields different random results. As mentioned above, the spatial layout of public buildings often depends on their traffic circulation. Most functional spaces need to be connected to corridors, so it is meaningful to study how corridors can guide the layout of a building.
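The corridor-first idea can be illustrated with a deliberately simplified sketch. It does not reproduce the Grasshopper definition used in this study; it assumes a straight corridor along the x-axis, rectangular rooms of one fixed depth, and rooms placed alternately on the two sides. The function `layout_along_corridor` and its parameters are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Rect:
    name: str
    x0: float
    y0: float
    x1: float
    y1: float

    @property
    def area(self):
        return (self.x1 - self.x0) * (self.y1 - self.y0)


def layout_along_corridor(corridor_length, corridor_width, depth, areas):
    """Hypothetical toy generator: attach rooms to both sides of a straight corridor.

    The corridor runs from x=0 to x=corridor_length, centred on y=0.
    Each room has the given depth and width area / depth, so its area is preserved.
    Rooms alternate between the north and south side of the corridor.
    """
    half = corridor_width / 2.0
    cursor = {"north": 0.0, "south": 0.0}  # next free x position on each side
    rooms = [Rect("corridor", 0.0, -half, corridor_length, half)]
    for i, area in enumerate(areas):
        side = "north" if i % 2 == 0 else "south"
        width = area / depth
        x0, x1 = cursor[side], cursor[side] + width
        if x1 > corridor_length:
            raise ValueError(f"room {i} does not fit on the {side} side")
        if side == "north":
            rooms.append(Rect(f"room{i}", x0, half, x1, half + depth))
        else:
            rooms.append(Rect(f"room{i}", x0, -half - depth, x1, -half))
        cursor[side] = x1
    return rooms
```

Every room in this toy layout touches the corridor, which mirrors the requirement that most functional spaces connect to a corridor; changing the corridor length or the order of the room areas gives a different layout.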

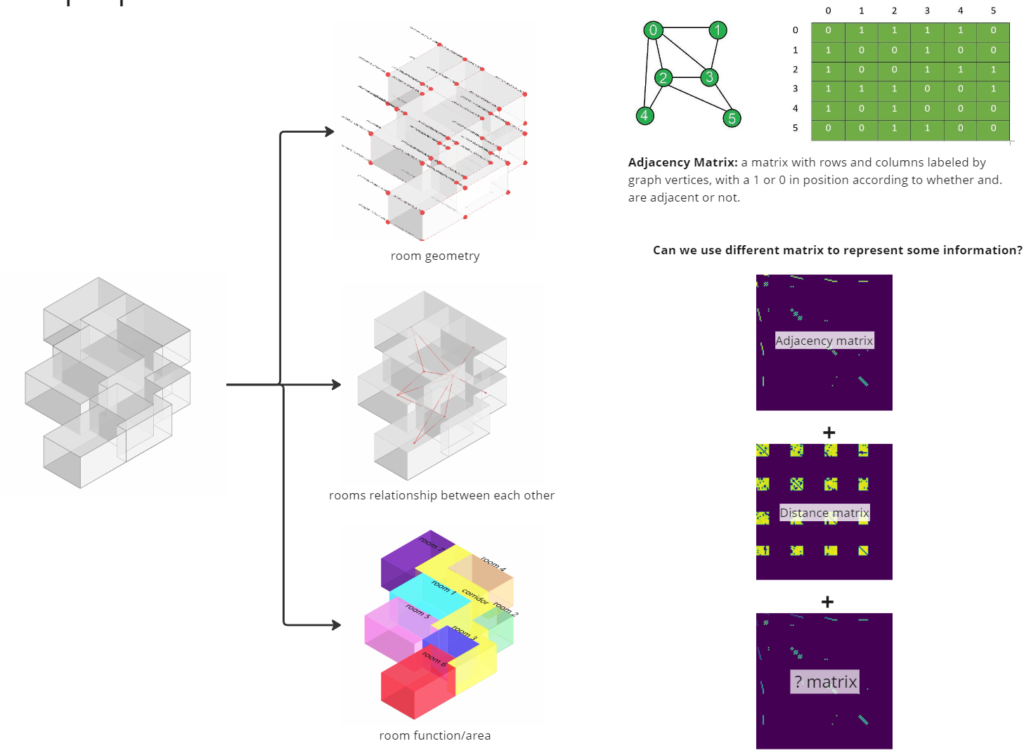

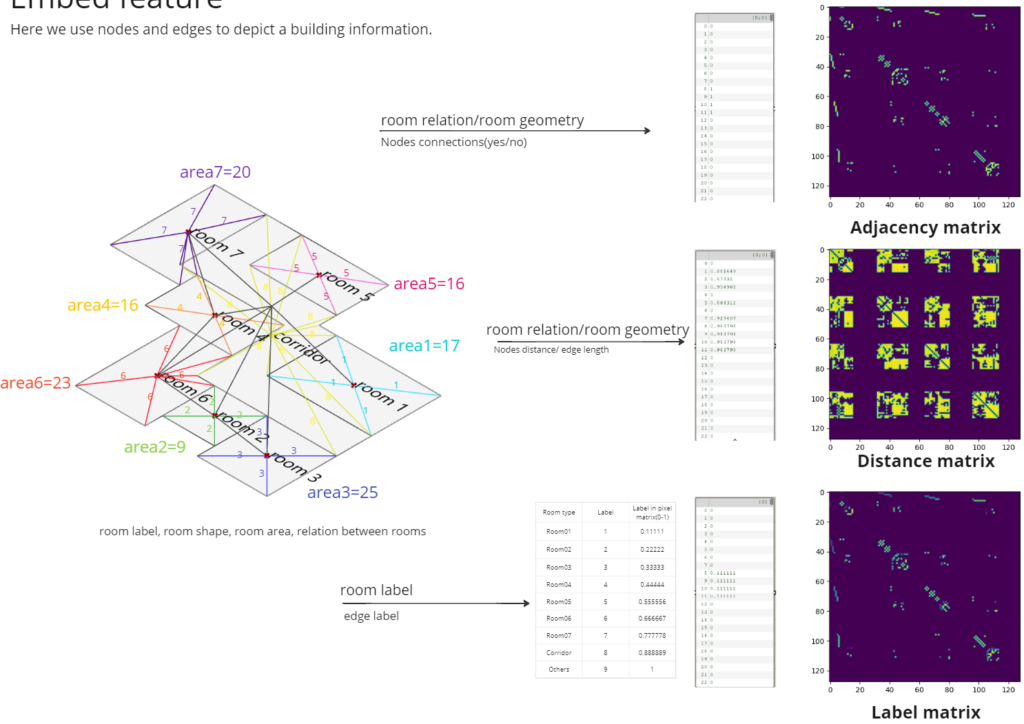

How to embed the information

For a 3D building, we usually need to consider several types of information:

- room function

- room area

- room geometry

- relationships between rooms

If the above information can be embedded and learned, then it is theoretically feasible to generate a new building based on the above features.
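As a sketch of how these four kinds of information might be held together before any encoding, the structure below stores function, geometry, and neighbours per room, derives the area from the geometry with the shoelace formula, and builds a symmetric room-adjacency matrix. The class and function names are hypothetical and not part of the original workflow.

```python
from dataclasses import dataclass, field
from typing import List, Set, Tuple

import numpy as np


@dataclass
class Room:
    name: str
    function: str                              # e.g. "gallery", "office"
    vertices: List[Tuple[float, float]]        # room geometry as a closed polygon
    neighbors: Set[str] = field(default_factory=set)  # names of related rooms

    @property
    def area(self):
        # Shoelace formula over consecutive vertex pairs (wrapping around)
        s = 0.0
        pts = self.vertices
        for (x0, y0), (x1, y1) in zip(pts, pts[1:] + pts[:1]):
            s += x0 * y1 - x1 * y0
        return abs(s) / 2.0


def room_adjacency(rooms):
    """Symmetric 0/1 matrix: entry (i, j) is 1 if rooms i and j are related."""
    index = {r.name: i for i, r in enumerate(rooms)}
    a = np.zeros((len(rooms), len(rooms)), dtype=np.uint8)
    for r in rooms:
        for n in r.neighbors:
            i, j = index[r.name], index[n]
            a[i, j] = a[j, i] = 1
    return a
```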

Methodology exploration

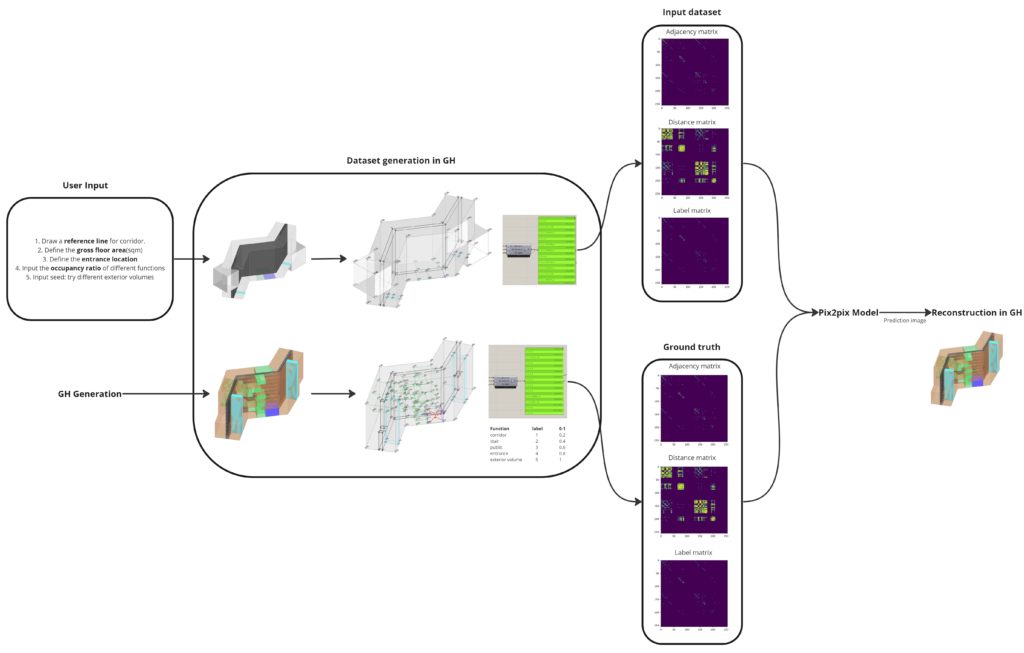

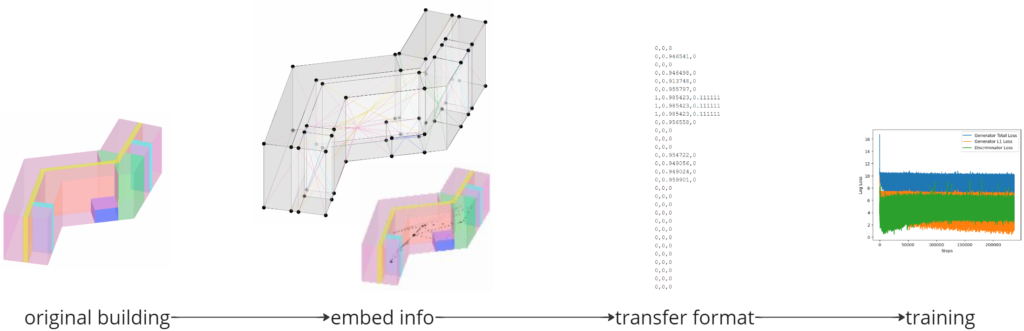

This research initially attempted to encode and generate 3D architectural space through ML. Following adjacency matrix theory, we extract the points and edges of each shape in 3D space and encode the connectivity between pairs of points, the length of each edge, the relative position of the points, etc., in the form of an adjacency matrix. These matrix images can then be read and learned by the pix2pix model.
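A minimal sketch of such an encoding is shown below, assuming one node per matrix row and column and three image channels: channel 0 for connectivity, channel 1 for edge length, and channel 2 for a room-label id. This channel assignment, the length normalisation, and the function name `encode_graph` are assumptions for illustration; the relative-position information mentioned above is omitted for brevity.

```python
import numpy as np


def encode_graph(points, edges, labels, size=512, max_length=100.0, n_labels=8):
    """Hypothetical encoding of a graph of points and edges as an RGB matrix image.

    points: (N, 2) or (N, 3) node coordinates
    edges:  list of (i, j) node-index pairs
    labels: room-label id (1..n_labels) for each edge; 0 means "no edge"
    """
    points = np.asarray(points, dtype=float)
    n = len(points)
    if n > size:
        # One row and one column per node, so the image side bounds the node count
        raise ValueError(f"{n} nodes do not fit in a {size}x{size} image")
    img = np.zeros((size, size, 3), dtype=np.uint8)
    for (i, j), label in zip(edges, labels):
        length = float(np.linalg.norm(points[i] - points[j]))
        # Map lengths to 1..255 so that 0 keeps meaning "no edge"
        length_px = int(round(1 + 254 * min(length, max_length) / max_length))
        label_px = int(round(255 * label / n_labels))
        for a, b in ((i, j), (j, i)):  # undirected edge: write (i, j) and (j, i)
            img[a, b] = (255, length_px, label_px)
    return img
```

Because every node needs its own row and column, the image side length directly limits how many feature points a building can have.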

Once we obtain the prediction results, we can return to Grasshopper (GH) to reconstruct the geometry and obtain the newly generated model.
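Before geometry can be rebuilt, a predicted image has to be turned back into an edge list. The sketch below assumes a hypothetical channel layout (0 connectivity, 1 edge length mapped to 1..255, 2 room label) and symmetrises the noisy prediction by averaging the (i, j) and (j, i) entries before thresholding. The function name, threshold, and normalisation constants are assumptions; the resulting edges and lengths are what a Grasshopper definition would then use to redraw lines.

```python
import numpy as np


def decode_graph(img, max_length=100.0, n_labels=8, threshold=128):
    """Recover (i, j, length, label) tuples with i < j from a predicted RGB matrix image."""
    m = img.astype(float)
    # Average each entry with its transpose so (i, j) and (j, i) agree
    sym = (m + m.transpose(1, 0, 2)) / 2.0
    edges = []
    n = sym.shape[0]
    for i in range(n):
        for j in range(i + 1, n):
            if sym[i, j, 0] >= threshold:  # connectivity channel decides if an edge exists
                length = float((sym[i, j, 1] - 1) / 254 * max_length)
                label = int(round(sym[i, j, 2] / 255 * n_labels))
                edges.append((i, j, length, label))
    return edges
```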

However, in actual practice, this attempt has some limitations.

Limitation

However, when we began building the dataset and training the model, we encountered several problems:

- Lack of real 3D building datasets.

- Because the adjacency matrix needs one row and one column per feature point, the image-size limit of the pix2pix model means it cannot handle large public buildings with more than 512 feature points.

Since the standard floor plan of a public building can generally represent the layout of the entire building, this research first starts with the generation of 2D floor plans, verifies the feasibility of the methodology, and then proposes a practical solution for the subsequent generation of architectural 3D space.

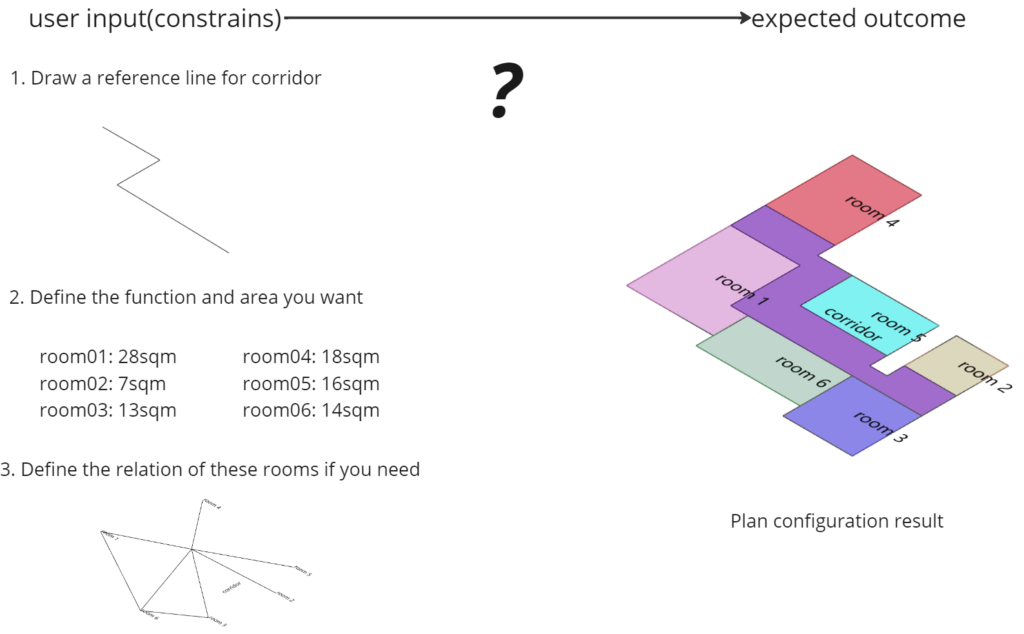

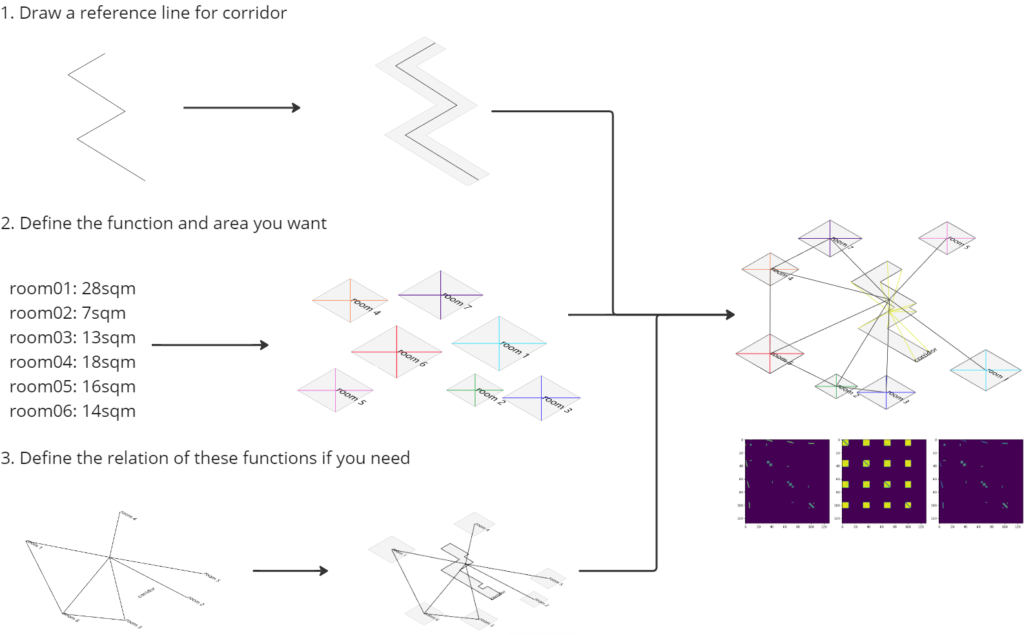

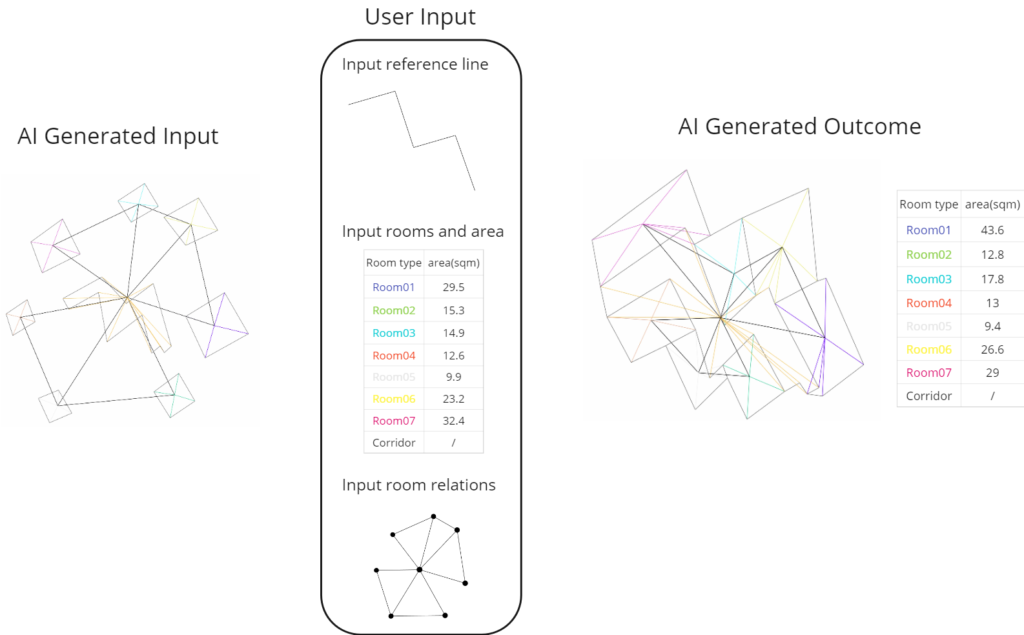

Ideal outcome

The purpose of this research is to develop an architectural plan generator that can interact with users. Users only need to input a few simple conditions as constraints, for example the corridor reference line, the number of rooms required and their corresponding areas, and the relationships between rooms. The expected result is that, through machine learning training, the generator can produce a reasonable floor plan layout from these limited conditions.

Methodology

Pix2Pix Model

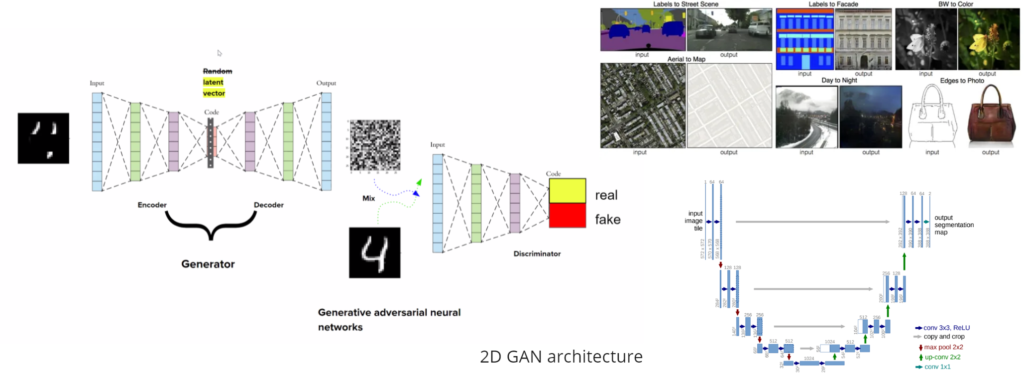

This study chose the Pix2Pix model for training because:

- It is a 2D conditional GAN trained on paired images (supervised learning), so it can learn adjacency matrix images.

- It trains on pairs of data with a clear input and output, which allows it to establish a connection between constraints and outcomes.
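For reference, the objective that Pix2Pix optimizes (Isola et al., 2017) combines a conditional GAN loss with an L1 reconstruction loss. In this study's setting, $x$ would be the constraint image, $y$ the paired floor-plan image, $z$ the generator's noise, and $\lambda$ a weighting factor (100 in the original paper's experiments). The L1 term compares each output directly with its paired ground truth, which is why the method requires paired, supervised data.

```latex
\begin{aligned}
\mathcal{L}_{cGAN}(G, D) &= \mathbb{E}_{x,y}\left[\log D(x, y)\right] + \mathbb{E}_{x,z}\left[\log\left(1 - D\left(x, G(x, z)\right)\right)\right] \\
\mathcal{L}_{L1}(G) &= \mathbb{E}_{x,y,z}\left[\lVert y - G(x, z) \rVert_1\right] \\
G^{*} &= \arg\min_{G} \max_{D} \; \mathcal{L}_{cGAN}(G, D) + \lambda \, \mathcal{L}_{L1}(G)
\end{aligned}
```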

Methodology Workflow

Dataset generation

Generating the Dataset in Grasshopper

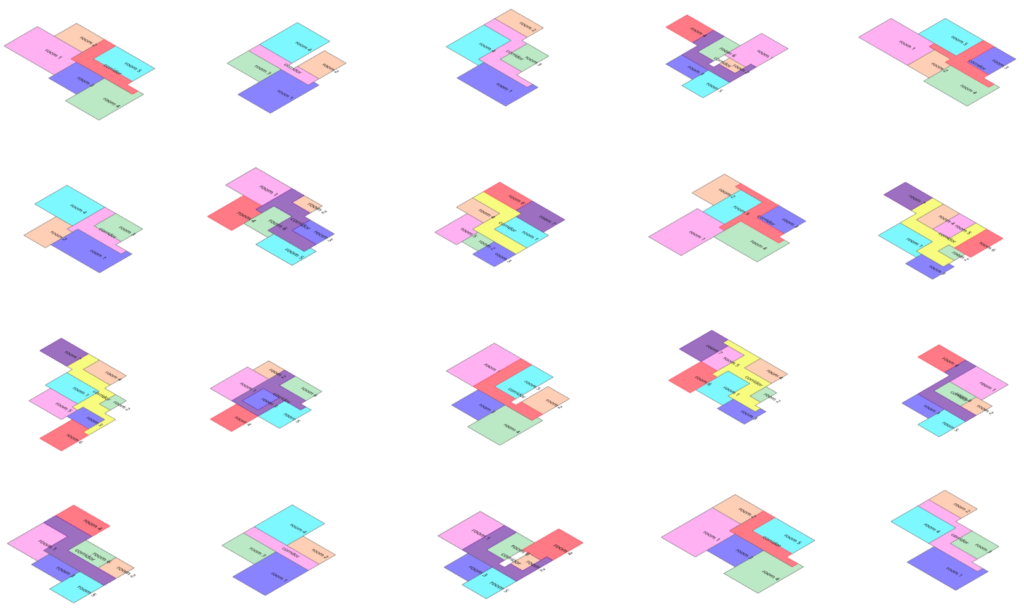

This study produced thousands of different floor plans with the Magnetizing plug-in in Grasshopper. They differ in the number of rooms, room areas, and relationships between rooms. These floor plans serve as the output dataset for ML training. Next, we discuss how to embed features and how to generate the input dataset required for training from these floor plans.
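The variation across the dataset (number of rooms, room areas, relationships) can be produced by sampling a room program for each plan. The sketch below is not the Magnetizing plug-in's interface; it only generates a hypothetical program that such a generator could consume. It starts from a random spanning tree so every room is reachable and then adds a few extra relationships. The function name, ranges, and probability are assumptions.

```python
import random


def sample_program(rng, functions, min_rooms=4, max_rooms=10,
                   area_range=(20.0, 120.0), p_extra=0.2):
    """Sample one hypothetical room program: rooms with function and area, plus links."""
    n = rng.randint(min_rooms, max_rooms)  # inclusive on both ends
    rooms = [
        {"name": f"room{i:02d}",
         "function": rng.choice(functions),
         "area": round(rng.uniform(*area_range), 1)}
        for i in range(n)
    ]
    # Random spanning tree: each room (in shuffled order) links to an earlier one
    order = list(range(n))
    rng.shuffle(order)
    links = set()
    for k in range(1, n):
        a, b = order[k], order[rng.randrange(k)]
        links.add((min(a, b), max(a, b)))
    # A few extra relationships on top of the tree
    for i in range(n):
        for j in range(i + 1, n):
            if rng.random() < p_extra:
                links.add((i, j))
    return rooms, sorted(links)
```

Passing a seeded `random.Random` makes each sampled program reproducible, which helps when the same plan has to be regenerated later.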

Embedding Features

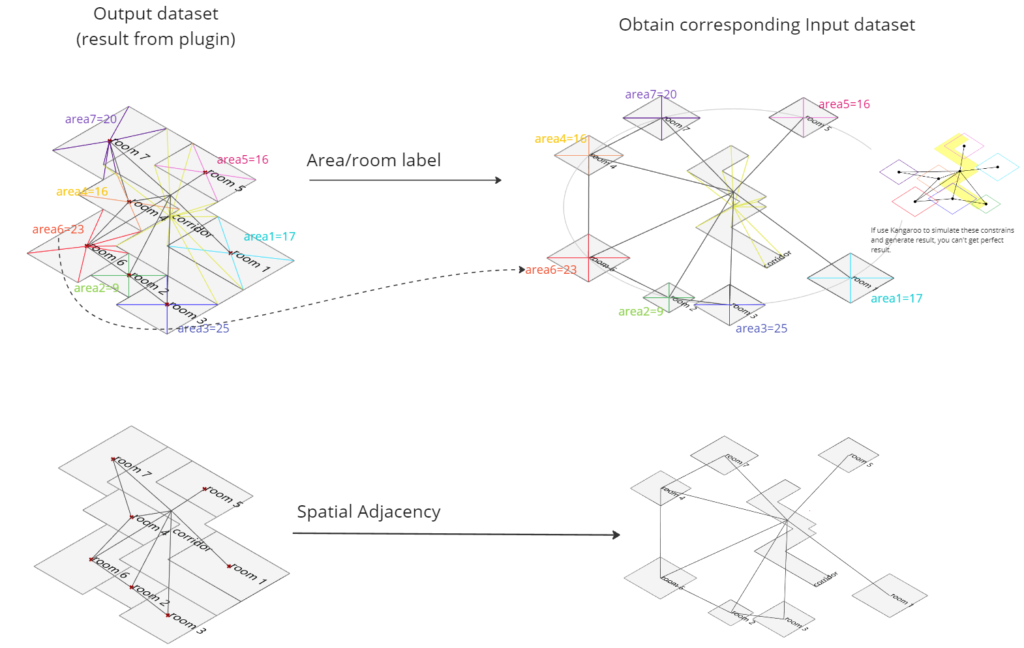

Obtaining the Input Dataset

Translating relationships between rooms: the scattered rooms in the input dataset are connected using the same connection method as in the output dataset.

The input dataset should carry the same information as the output dataset so that the two can be related. From the thousands of generated floor plans, we obtain the corridor shapes, room areas, and room relationships, and we re-express this information centered on the corridor: each room is transformed into a square of the same area, rooms are connected by the same lines to express their relationships, and the shape of the corridor is retained… From this, we obtain the shape of the input dataset.
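The equal-area abstraction is simple arithmetic: a room of area $A$ becomes a square of side $\sqrt{A}$. The sketch below assumes each square is centred on the room's centroid and that relationship lines run between centroids; both the placement rule and the function names are assumptions, since the text does not state where the squares are placed.

```python
import math


def area_square(center, area):
    """Corners (counter-clockwise) of an axis-aligned square with the given area."""
    cx, cy = center
    h = math.sqrt(area) / 2.0  # half of the side length sqrt(area)
    return [(cx - h, cy - h), (cx + h, cy - h), (cx + h, cy + h), (cx - h, cy + h)]


def abstract_rooms(rooms, links):
    """rooms: {name: (centroid, area)}; links: [(name_a, name_b)].

    Returns the equal-area squares and the centre-to-centre relationship lines.
    """
    squares = {name: area_square(c, a) for name, (c, a) in rooms.items()}
    lines = [(rooms[a][0], rooms[b][0]) for a, b in links]
    return squares, lines
```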

This gives us an input graph containing the constraints. When we use the room relationships to simulate attractive forces and solve them together with Kangaroo, the resulting floor plan is not perfect: rooms overlap, there are gaps between rooms, and the rooms can only remain square, so irregular shapes are not possible. This is why it makes sense to apply an ML methodology to this problem.
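The limitation of the attraction-based approach can be reproduced with a very simple spring model. This is a stand-in for illustration, not Kangaroo's actual solver: each related pair of squares is pulled toward a rest distance equal to half the sum of their side lengths, with no collision handling, and the squares can never change shape. With three mutually related rooms, the springs can be satisfied exactly while the squares still overlap. Function names and the step size are assumptions.

```python
import numpy as np


def relax(centers, links, rest_lengths, steps=200, k=0.1):
    """Gradient-descent spring relaxation of square centres (no collision handling)."""
    p = np.array(centers, dtype=float)
    for _ in range(steps):
        f = np.zeros_like(p)
        for (i, j), rest in zip(links, rest_lengths):
            d = p[j] - p[i]
            dist = np.linalg.norm(d)
            if dist == 0:
                continue
            corr = k * (dist - rest) * d / dist  # pull together if too far, push if too close
            f[i] += corr
            f[j] -= corr
        p += f
    return p


def square_overlap(c1, s1, c2, s2):
    """Overlap area of two axis-aligned squares given their centres and side lengths."""
    dx = min(c1[0] + s1 / 2, c2[0] + s2 / 2) - max(c1[0] - s1 / 2, c2[0] - s2 / 2)
    dy = min(c1[1] + s1 / 2, c2[1] + s2 / 2) - max(c1[1] - s1 / 2, c2[1] - s2 / 2)
    return max(dx, 0.0) * max(dy, 0.0)
```

For example, three related square rooms of side 4 settle into an equilateral triangle of side 4, which satisfies every relationship yet leaves the diagonal pairs overlapping.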

User Input representation

This part shows how to convert the user's input information into input geometry that we can work with. Once we have a geometric representation of these constraints, we use the same method described above to abstract the input into ML-readable images.
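A sketch of that conversion, under stated assumptions: the corridor reference line arrives as a polyline, each requested room arrives with a hypothetical placement point and an area, and each required relationship is a pair of room names. The function below turns these into one list of points and edges (corridor segments, equal-area square sides, and centre-to-centre relationship lines) that an adjacency-matrix encoding could consume. The input format and the function name are assumptions.

```python
def user_input_to_graph(corridor, rooms, links):
    """Hypothetical conversion of user constraints into nodes and edges.

    corridor: list of (x, y) vertices of the corridor reference polyline
    rooms:    list of (name, (cx, cy), area); each room becomes an equal-area square
    links:    list of (name_a, name_b) required relationships
    Returns (points, edges, kinds), where kinds tags each edge.
    """
    points, edges, kinds = [], [], []

    def add_point(p):
        points.append((float(p[0]), float(p[1])))
        return len(points) - 1

    # Corridor polyline: consecutive vertices joined by corridor edges
    ids = [add_point(p) for p in corridor]
    for a, b in zip(ids, ids[1:]):
        edges.append((a, b))
        kinds.append("corridor")

    centre_id = {}
    for name, (cx, cy), area in rooms:
        h = area ** 0.5 / 2
        corners = [add_point(q) for q in
                   ((cx - h, cy - h), (cx + h, cy - h), (cx + h, cy + h), (cx - h, cy + h))]
        for k in range(4):  # the four sides of the square, tagged with the room name
            edges.append((corners[k], corners[(k + 1) % 4]))
            kinds.append(name)
        centre_id[name] = add_point((cx, cy))

    # Relationship lines between room centres
    for a, b in links:
        edges.append((centre_id[a], centre_id[b]))
        kinds.append("relation")
    return points, edges, kinds
```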

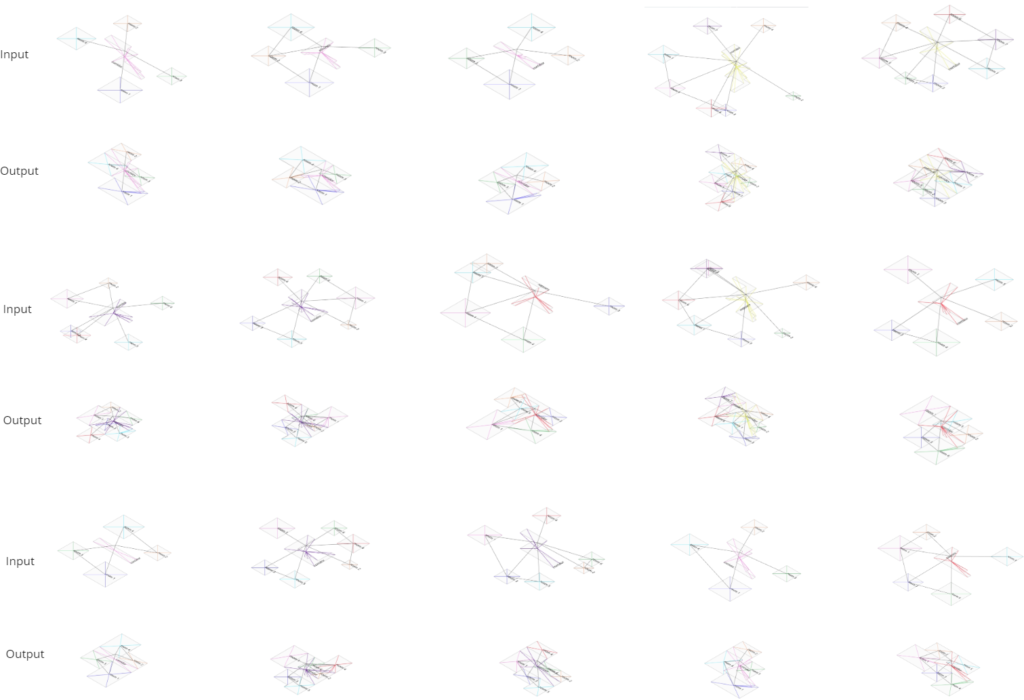

Dataset Variation

Training

Reconstruction Result

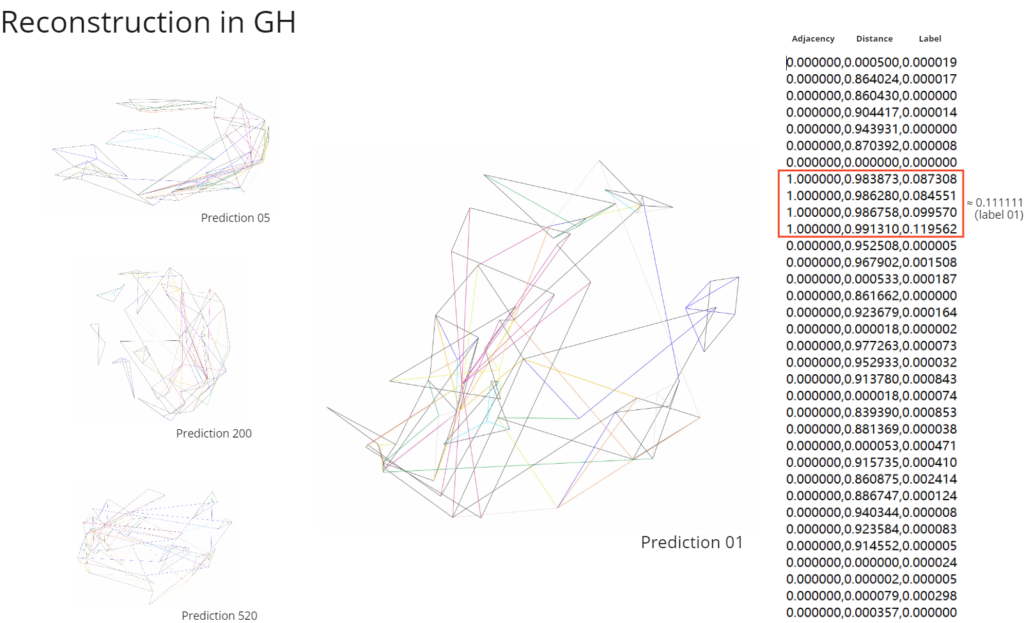

Most of the generated results are distorted.

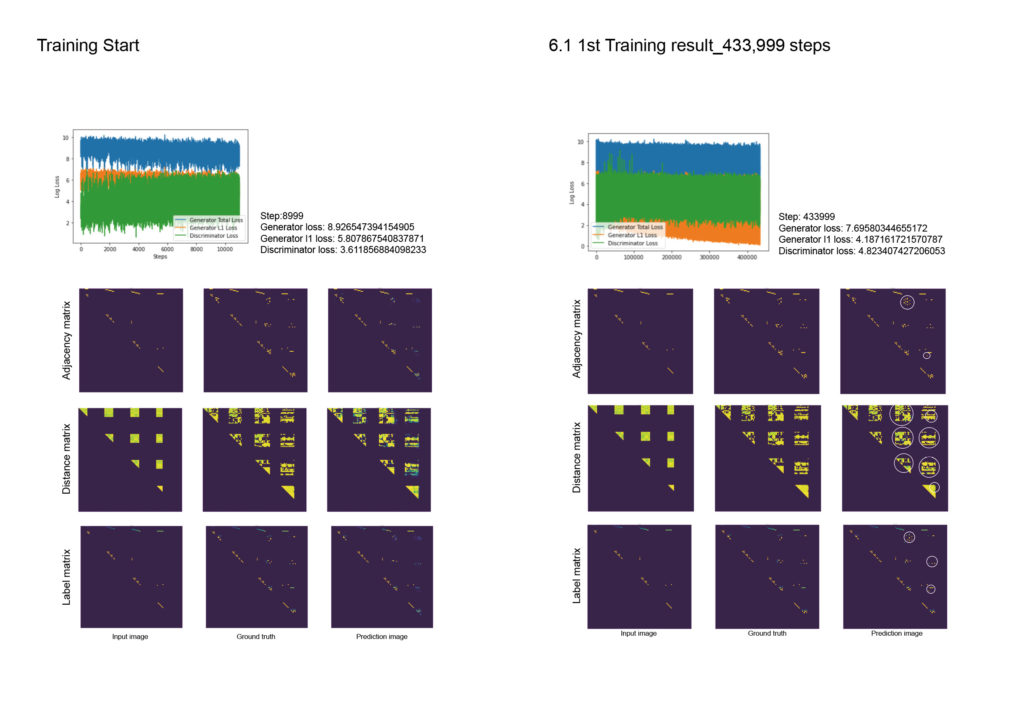

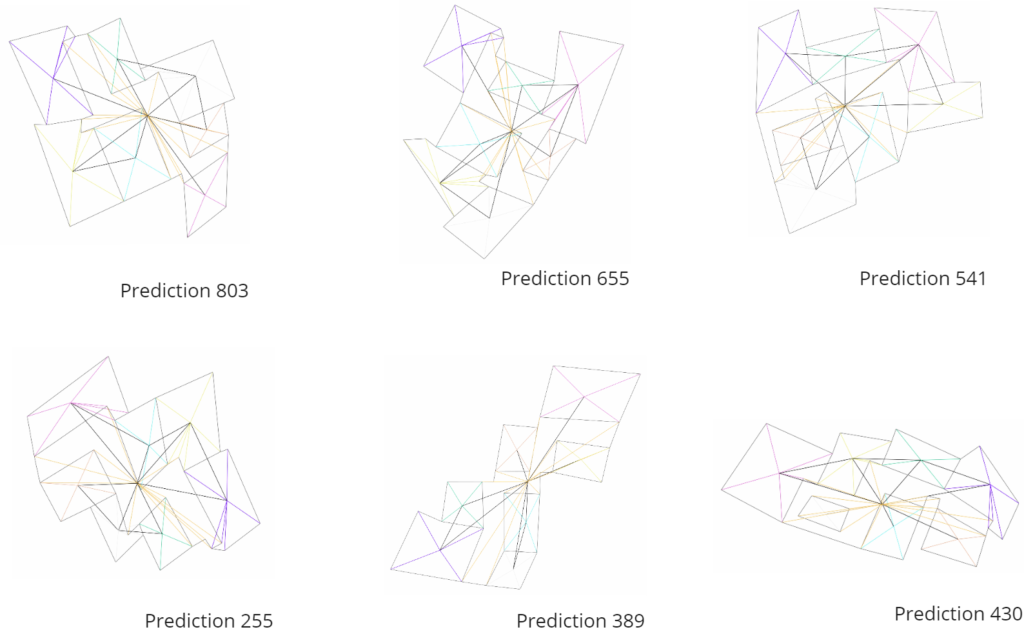

This part shows the reconstruction results in Grasshopper. After training for about 400,000 steps, we did not obtain good results.

Almost all of the reconstruction results show large distortions and do not form a continuous, flat floor plan, so they are clearly unusable. However, the results show that the contents of the adjacency matrix and the label matrix are predicted reasonably well to a certain extent: they follow the rule, present in the inputs, that entries appear in pairs. In GH, we can also recognize that edges of the same color are clustered together.
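If "appearing in pairs" is read as the symmetry of an undirected adjacency matrix (an edge between nodes i and j is stored at both (i, j) and (j, i)), this property can be measured directly on a predicted channel. The sketch below returns the mean absolute difference between a matrix and its transpose, scaled to 0..1; the function name and the scaling are assumptions.

```python
import numpy as np


def symmetry_error(channel):
    """Mean |M - M^T| of a 0..255 matrix channel, scaled to 0..1 (0 = perfectly paired)."""
    m = np.asarray(channel, dtype=float)
    return float(np.abs(m - m.T).mean() / 255.0)
```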

Prediction Result

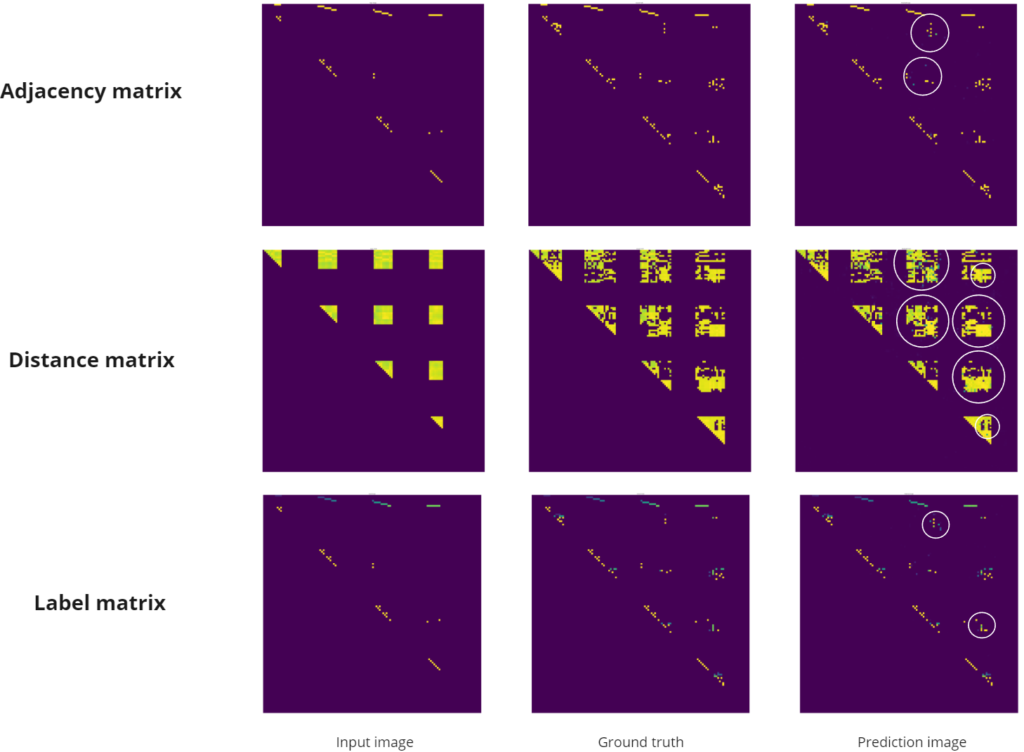

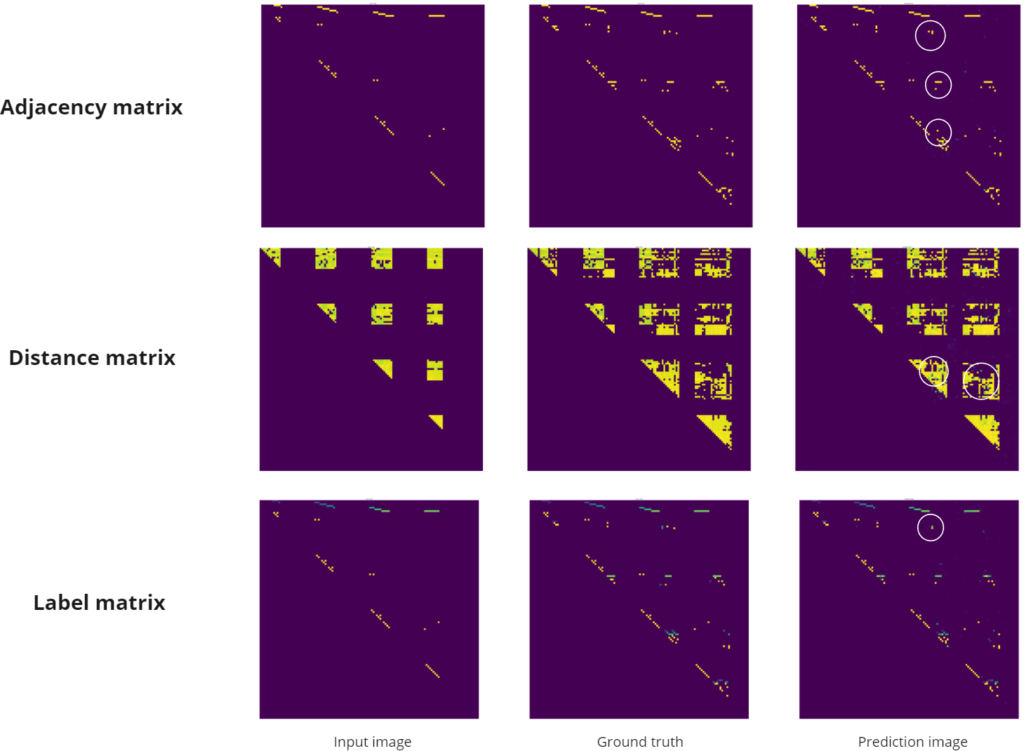

We therefore explored why such large distortions occur by plotting some predictions and comparing them with the ground truth.

In most results, the adjacency matrix and label matrix have few errors. In the distance matrix, however, some pixels are missing, and many pixels have colors that differ from the ground truth, which indicates errors in the predicted edge lengths. This is why every node appears to be connected in Grasshopper, yet a reasonable plan cannot be restored.
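The visual comparison can be complemented by a per-channel count. The sketch below assumes the prediction and ground truth are RGB images whose channels hold the adjacency, distance, and label matrices in that order (an assumed ordering), and reports for each channel the fraction of pixels that differ by more than a tolerance and the number of ground-truth pixels missing from the prediction. The tolerance value and names are assumptions.

```python
import numpy as np

CHANNELS = ("adjacency", "distance", "label")  # assumed channel order


def channel_errors(pred, truth, tol=8):
    """Per-channel comparison of a predicted and a ground-truth RGB matrix image."""
    pred = np.asarray(pred, dtype=int)
    truth = np.asarray(truth, dtype=int)
    report = {}
    for c, name in enumerate(CHANNELS):
        wrong = np.abs(pred[..., c] - truth[..., c]) > tol        # wrong colour/value
        missing = (truth[..., c] > 0) & (pred[..., c] == 0)       # pixel dropped entirely
        report[name] = {"wrong_fraction": float(wrong.mean()),
                        "missing": int(missing.sum())}
    return report
```

A result with near-zero errors in the adjacency and label channels but a high `wrong_fraction` in the distance channel would match the pattern described above.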

In the next step, we therefore more than doubled the number of training steps, hoping to obtain better results.

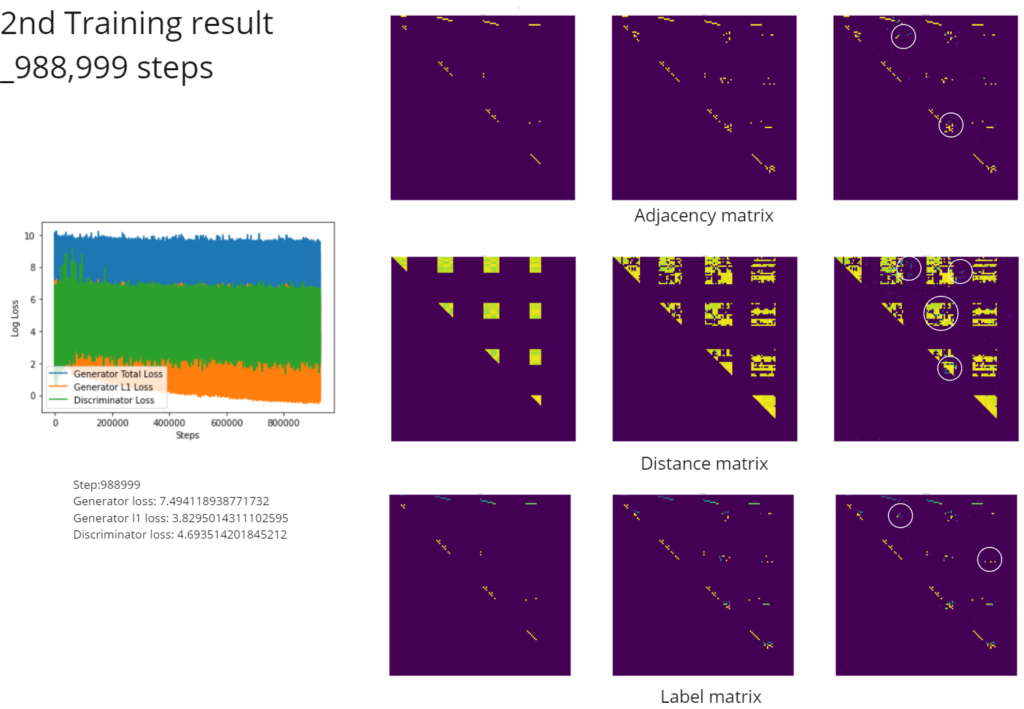

2nd Training Result: 988,999 Steps

In the second training run, we trained for approximately 988,999 steps. Compared with the start of training, the generator loss and the generator L1 loss continue to decrease while the discriminator loss continues to increase, which suggests that the generator is increasingly able to fool the discriminator and that training is moving in the right direction.

When we plot the prediction results again, we can see that the adjacency matrix and label matrix still have relatively small errors. The error in the distance matrix prediction results is also significantly reduced. Most of the pixels have the correct color, with only two obvious errors.

Reconstruction Result

When we reconstruct the geometry in Grasshopper, the predictions are clearly much improved. Although the result is still not a flat floor plan and the room shapes are still somewhat distorted, the logic of the layout is already discernible. Colored edges are distributed throughout the interior of each room without color confusion, and the connections between rooms are restored very well.

Next, we take one of the results as an example. As mentioned before, the prediction results of Pix2Pix are pairs of images: in addition to the image representing the floor plan, the other represents the prediction of the random input constraints. We restored the input shape in Grasshopper and decomposed the user's input conditions, including reference lines, room areas, and room relationships. We then checked whether these conditions still hold in the generated building plan. The comparison shows that, except for a large deviation in the area of Room01, the predictions of the other rooms, the corridors, and the room relationships are relatively accurate.
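The area check can be made quantitative with the shoelace formula. The sketch below computes each reconstructed room polygon's area and its relative deviation from the area the user requested; the dictionary format is an assumption, and the example values in the usage note are illustrative, not results from this study.

```python
def polygon_area(vertices):
    """Area of a simple polygon from its ordered vertices (shoelace formula)."""
    s = 0.0
    n = len(vertices)
    for k in range(n):
        x0, y0 = vertices[k]
        x1, y1 = vertices[(k + 1) % n]
        s += x0 * y1 - x1 * y0
    return abs(s) / 2.0


def area_deviation(predicted, targets):
    """predicted: {room: vertex list}; targets: {room: required area}.

    Returns the relative area error per room, e.g. 0.25 means 25 % off target.
    """
    return {name: abs(polygon_area(predicted[name]) - a) / a
            for name, a in targets.items()}
```

For instance, a reconstructed 5 x 5 square for a room that should cover 20 units of area yields a deviation of 0.25, which would flag that room the way Room01 stands out above.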

Conclusions

The method used in this study allows ML to capture the shape relationships of the building plan, such as the corner points of each room, its center point, and the edges needed to form its shape. In theory, most linear geometric shapes can be expressed by the nodes and edges on their boundaries. Therefore, compared with methods in some previous studies, a sufficiently trained model should be able to reconstruct complex spaces accurately.

The multiple channels of the Pix2Pix images allow us to learn several layers of information. In this study, we added a new channel that identifies room labels based on adjacency matrix theory and verified that it is feasible. This also suggests that more layers of information could be added to the learning target to generate more complex models.

Back to 3D

Whether for a 2D or a 3D shape, the basic principle is to find a way to describe the shape's geometric properties. As mentioned above, this methodology describes an architectural plan by extracting the points and edges of its geometric shapes and attaching different layers of information to the edges, so that ML can understand and learn the shape. In theory, the method is also suitable for reconstructing 3D space. Although this article does not pursue this further, it is worth noting that 3D generation may require the pix2pixHD model, which would allow up to 1024 feature nodes to describe a building. 3D space generation is, of course, more complex than 2D, and whether the encoding method needs to be modified requires further study.

Limitation

1, The pix2pixHD model can handle images of up to 1024 pixels per side, so this generator cannot produce overly large or complex 3D buildings with more than 1024 feature nodes.

2, This method requires relatively long model training. In the adjacency matrix, a small pixel error can cause a relatively large deviation, and learning an accurate distance matrix often takes longer.

Potential & Future work

1, The research above has shown that this method is feasible. Next, we can increase the training time, or find a dataset that better reflects real buildings and retrain the model, to improve the results.

2, The method is not limited to floor plans; how to apply it to the generation of 3D space requires further exploration.

3, The method allows more channels to be added for different types of information. Changing or adding constraints (e.g., sun hours, surroundings) would let users control and interact with the generator more intelligently.

References

- Hao Zheng, Philip F. Yuan, A generative architectural and urban design method through artificial neural networks. Building and Environment, Volume 205, 2021, 108178, ISSN 0360-1323, https://doi.org/10.1016/j.buildenv.2021.108178.

- Xinwei Zhuang, Yi Ju, Allen Yang, Luisa Caldas, Synthesis and generation for 3D architecture volume with generative modeling. International Journal of Architectural Computing, Volume 21, 2023, https://doi.org/10.1177/14780771231168233.

- Ximing Zhong, Pia Fricker, Fujia Yu, Chuheng Tan, Yuzhe Pan, A Discussion on an Urban Layout Workflow Utilizing Generative Adversarial Network (GAN): With a focus on automatized labeling and dataset acquisition. CumInCAD, 2022.

- Kai-Hung Chang, Chin-Yi Cheng, Jieliang Luo, Shingo Murata, Mehdi Nourbakhsh, Yoshito Tsuji, Building-GAN: Graph-Conditioned Architectural Volumetric Design Generation. IEEE, 2021.

- Ondřej Veselý, Building massing generation using GAN trained on Dutch 3D city models. MSc thesis, TU Delft, 2022.

- Stanislas Chaillou, ArchiGAN: Artificial Intelligence x Architecture, CumInCAD, 2020.

- RuiZhen Hu, Zeyu Huang, Yuhan Tang, Oliver Van Kaick, Hao Zhang, Hui Huang. Graph2Plan: Learning Floorplan Generation from Layout Graphs. ACM Transactions on Graphics. 2022.

- Nelson Nauata, Kai-Hung Chang, Chin-Yi Cheng, Greg Mori, and Yasutaka Furukawa, House-GAN: Relational Generative Adversarial Networks for Graph-constrained House Layout Generation. ECCV. 2020.

- Hao Tang, Zhenyu Zhang, Humphrey Shi, Bo Li, Ling Shao, Nicu Sebe, Radu Timofte, Luc Van Gool, Graph Transformer GANs for Graph-Constrained House Generation. IEEE, 2023.

- Morteza Rahbar, Mohammadjavad Mahdavinejad, Amir H.D. Markazi, Mohammadreza Bemanian, Architectural layout design through deep learning and agent-based modeling: A hybrid approach, Journal of Building Engineering, Volume 47, 2022.

- Nelson Nauata, Sepidehsadat Hosseini, Kai-Hung Chang, Hang Chu, Chin-Yi Cheng, Yasutaka Furukawa, House-GAN++: Generative Adversarial Layout Refinement Networks. arXiv:2103.02574, 2021.

- Ziniu Luo, Weixin Huang, FloorplanGAN: Vector residential floorplan adversarial generation, Automation in Construction, Volume 142, 2022.

- Mohammad Amin Shabani, Sepidehsadat Hosseini, Yasutaka Furukawa, HouseDiffusion: Vector Floorplan Generation via a Diffusion Model with Discrete and Continuous Denoising. IEEE/CVF, 2023.

- Phillip Isola, Jun-Yan Zhu, Tinghui Zhou, Alexei A. Efros, Image-to-Image Translation with Conditional Adversarial Networks. IEEE/CVF. 2017.

- Lei Si, Yoshiaki Yasumura, Reconstruction of a 3D Model from Single 2D Image by GAN. Lecture Notes in Computer Science (LNAI, volume 11248), 2018.

- Wenming Wu, Xiao-Ming Fu, Rui Tang, Yuhan Wang, Yu-Hao Qi, Ligang Liu, Data-driven Interior Plan Generation for Residential Buildings. ACM Transactions on Graphics, 2019.

- Zhiwen Fan, Lingjie Zhu, Honghua Li, Xiaohao Chen, Siyu Zhu, Ping Tan. FloorPlanCAD: A Large-Scale CAD Drawing Dataset for Panoptic Symbol Spotting. IEEE/CVF 2021.