Outline: Depth and Canny of an Object

by Jose Lazokafatty and Paul Suarez

Introduction

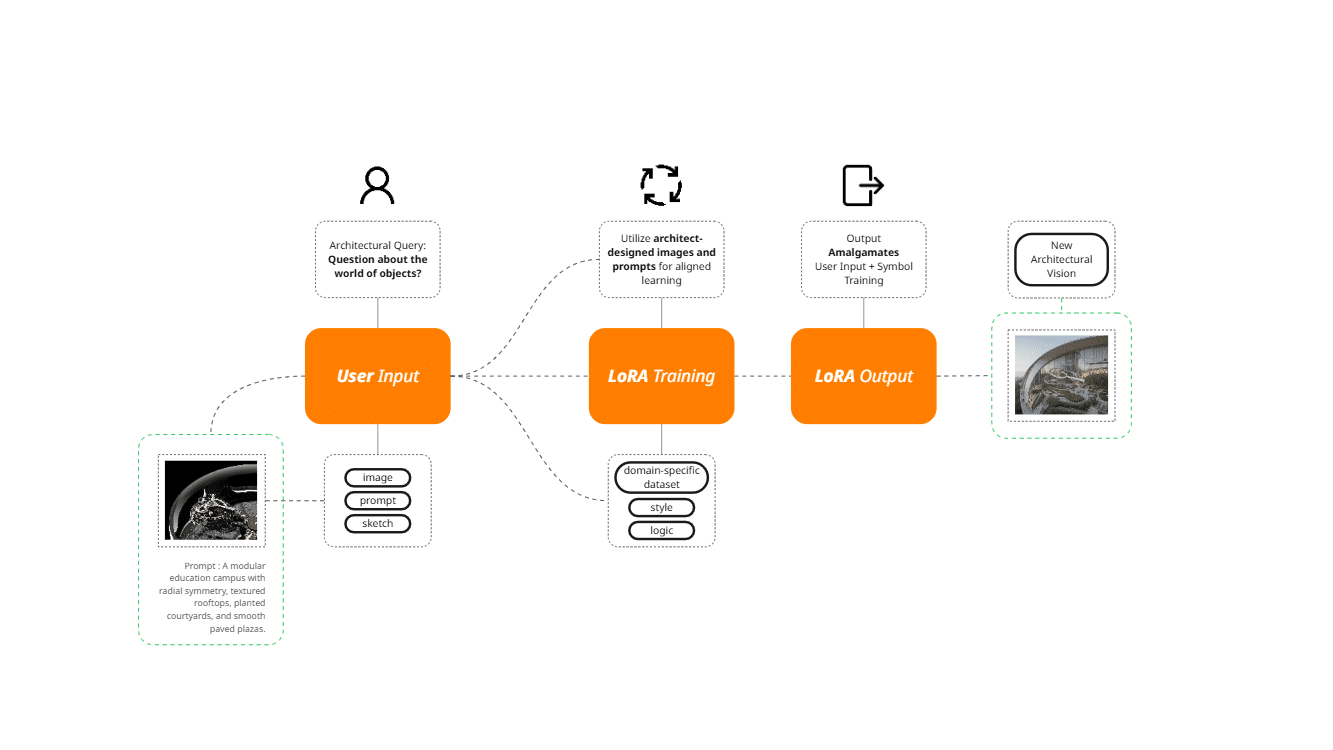

The Machine is a creative, AI-powered image generator that turns your sketches and prompts into richly detailed visions. Born from curiosity (how does a machine interpret the abstract outline of a doodle, or the depth of an image?), this project is as much art as it is engineering. Using two distinct AI “lenses” (edge-based Canny and spatial Depth), users can guide the machine’s visual interpretation in fascinating ways.

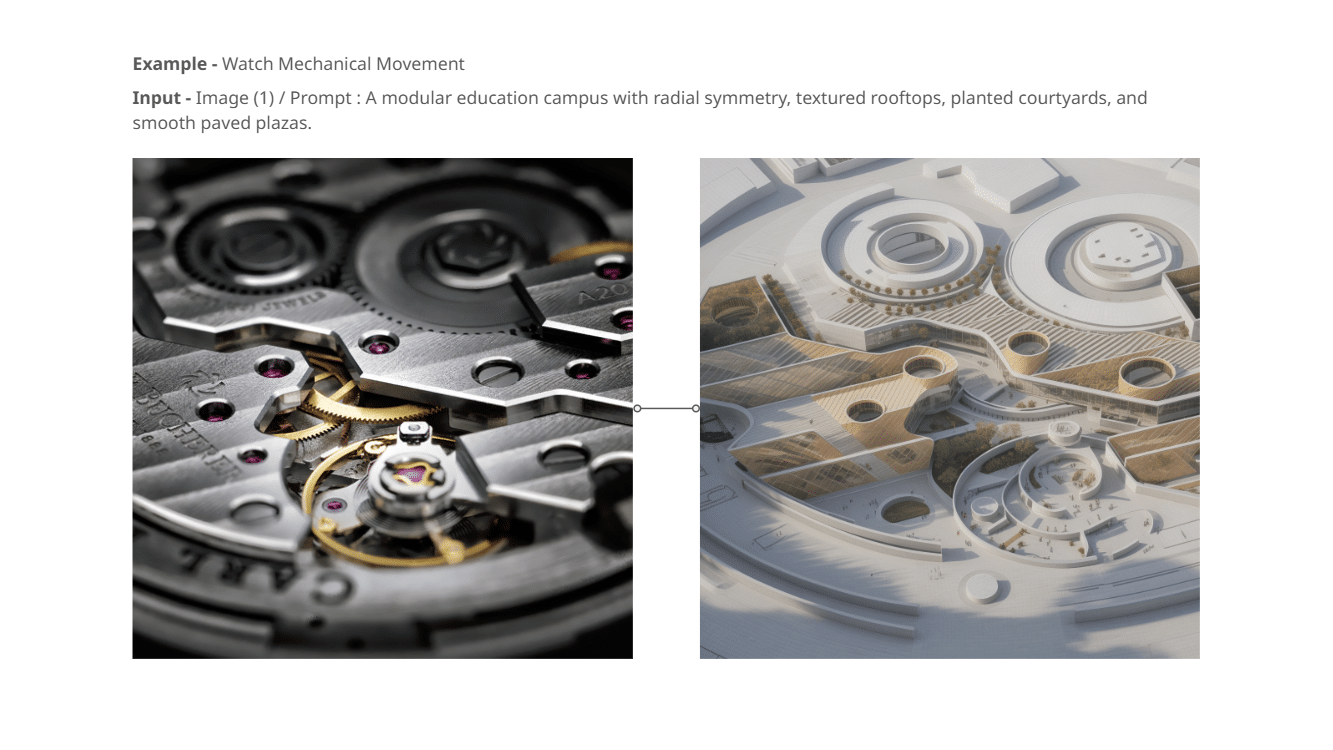

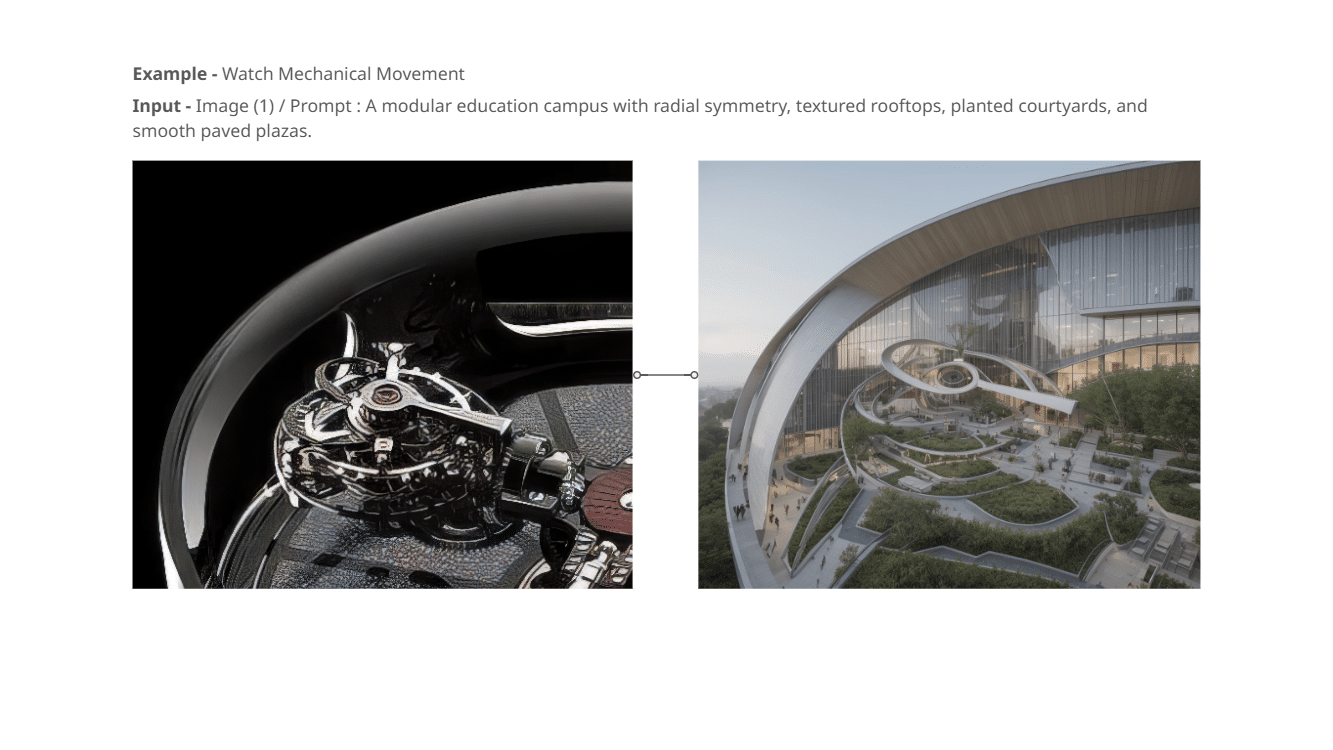

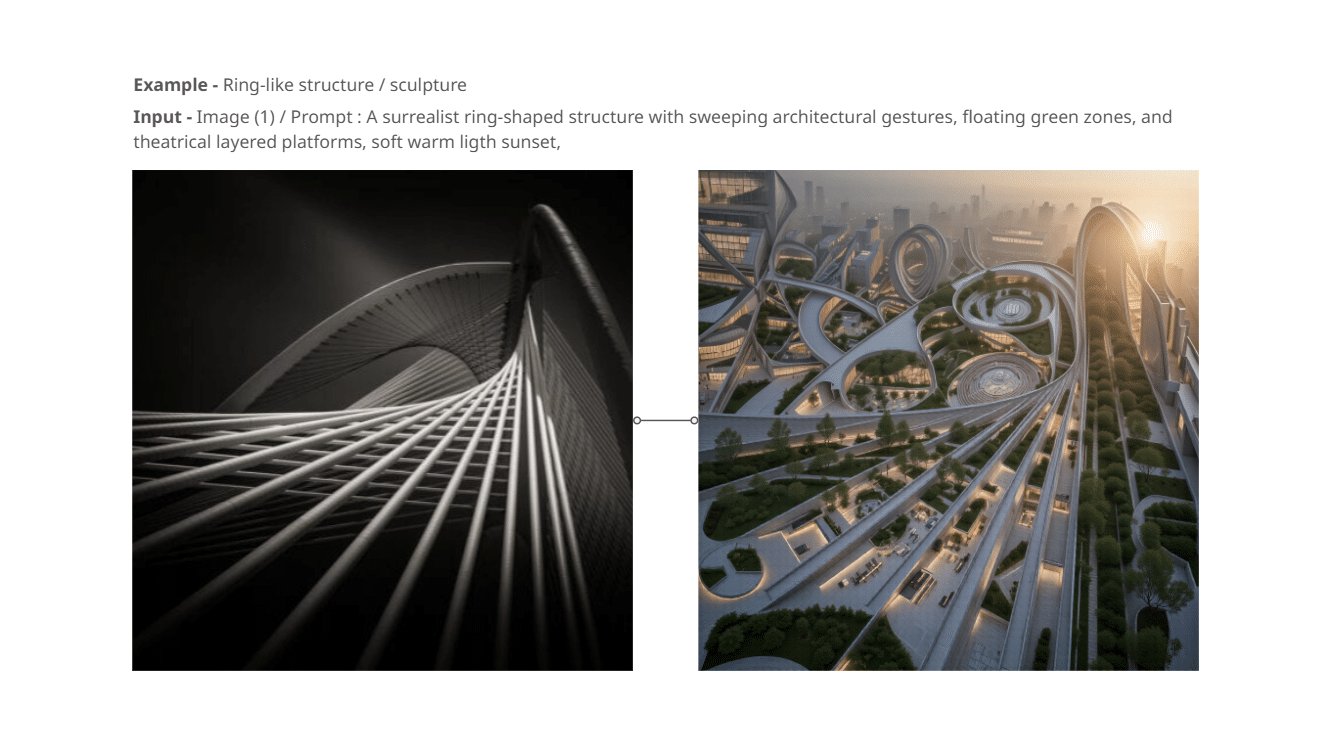

Welcome to Meaning by Machine, a tool that empowers architects to query “the world of objects” and transform those inspirations into architectural visions. What happens when you show the machine a mechanical watch and ask it to rethink those moving parts as built space? That’s the journey this project explores—where curiosity meets computation, sketch meets structure, and doodle becomes design.

Concept

At its core, Meaning by Machine operates like an architectural dialogue with AI. The user provides three things:

- A prompt (“modular education campus with radial symmetry…”),

- A sketch or image (outline or reference), and

- A choice between Canny (contour-focused) or Depth (volume-aware) control.

Then the system co-creates the piece. What really fuels this is a custom LoRA, trained on images of mechanical watch movements, that picks out elements with architectural potential (gears, rings, structural parts). The result: the system learns to repurpose mechanical complexity into built forms.

Workflow Diagram

Creative Vision

Technical Workflow

Built with diffusers + controlnet_aux + gradio + peft + Pillow.

LoRAs load dynamically via PEFT, with paths kept editable from the UI.
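One way an editable LoRA path could feed a selector is to rescan a folder on demand. The sketch below is a minimal, standard-library illustration; the folder name `loras`, the `.safetensors` filter, and the helper name `list_loras` are assumptions, not details taken from the project.

```python
from pathlib import Path


def list_loras(lora_dir="loras"):
    """Return LoRA file names found in lora_dir, sorted, after a "None" choice.

    Hypothetical helper: the folder name and the .safetensors filter are
    assumptions made for illustration.
    """
    folder = Path(lora_dir)
    if not folder.is_dir():
        # A missing folder simply means no adapters are available yet.
        return ["None"]
    names = sorted(p.name for p in folder.glob("*.safetensors") if p.is_file())
    return ["None"] + names
```

Keeping "None" as the first entry lets the user run the base model without any adapter attached.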

Local Models & Speed

The models are openly released by Black Forest Labs under a non-commercial license.

Models are pre-downloaded as local folders:

- FLUX.1-Canny-dev/

- FLUX.1-Depth-dev/
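Loading from pre-downloaded folders might look like the sketch below. The two folder names come from the text; the root directory `models`, the helper names, and the choice of `FluxControlPipeline` from diffusers are assumptions, since the project does not state which pipeline class it uses.

```python
from pathlib import Path

# Folder names come from the text above; the root directory is an assumption.
MODEL_DIRS = {"Canny": "FLUX.1-Canny-dev", "Depth": "FLUX.1-Depth-dev"}


def resolve_model_dir(mode, root="models"):
    """Map a control mode to its pre-downloaded folder, failing early if missing."""
    if mode not in MODEL_DIRS:
        raise ValueError(f"Unknown control mode: {mode!r}")
    path = Path(root) / MODEL_DIRS[mode]
    if not path.is_dir():
        raise FileNotFoundError(f"Expected a local model folder at {path}")
    return path


def load_pipeline(mode, root="models"):
    """Load the matching pipeline from disk (needs torch and diffusers installed)."""
    # Imported lazily so the path logic above works without the heavy dependencies.
    import torch
    from diffusers import FluxControlPipeline

    return FluxControlPipeline.from_pretrained(
        str(resolve_model_dir(mode, root)), torch_dtype=torch.bfloat16
    )
```

Checking the folder before loading gives a clear error message instead of an attempted download when a model is missing.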

The generate_image() function:

- Checks for sketch or image input,

- Preprocesses (Canny or Depth),

- Optionally loads the watch-to-architecture LoRA adapter,

- Generates and saves the image to outputs/YYYYMMDD_HHMMSS.png,

- Unloads the LoRA to avoid memory bleed.
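The steps of generate_image() can be sketched as follows. This is not the project's actual code: the pipeline and the preprocessors are passed in as arguments (an assumption made so the control flow can be read without a GPU). The `pipe` object is only expected to expose diffusers-style `load_lora_weights()`, `unload_lora_weights()`, and a call that returns `.images`.

```python
from datetime import datetime
from pathlib import Path


def timestamped_path(out_dir, now=None):
    """Return out_dir/YYYYMMDD_HHMMSS.png for the given (or current) time."""
    now = now or datetime.now()
    return Path(out_dir) / f"{now:%Y%m%d_%H%M%S}.png"


def generate_image(prompt, control_input, mode, pipe, preprocessors,
                   lora_path=None, out_dir="outputs", now=None):
    """Run one generation and return (image, saved_path).

    preprocessors maps "Canny"/"Depth" to a callable; pipe and preprocessors
    are injected here as assumptions, not the project's real signature.
    """
    # 1. Check for sketch or image input.
    if control_input is None:
        raise ValueError("Provide a sketch or an image first.")
    if mode not in preprocessors:
        raise ValueError(f"Unknown control mode: {mode!r}")

    # 2. Preprocess into an edge map or a depth map.
    control_image = preprocessors[mode](control_input)

    # 3. Optionally attach the LoRA adapter.
    if lora_path:
        pipe.load_lora_weights(lora_path)
    try:
        # 4. Generate and save under a timestamped name.
        image = pipe(prompt=prompt, control_image=control_image).images[0]
        path = timestamped_path(out_dir, now)
        path.parent.mkdir(parents=True, exist_ok=True)
        image.save(path)
    finally:
        # 5. Always unload, even if generation fails.
        if lora_path:
            pipe.unload_lora_weights()
    return image, path
```

Wrapping generation in try/finally means a failed run cannot leave the adapter attached for the next request.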

UI | Realizing the Vision

The Gradio interface mirrors our original mockups:

- Prompt text + CFG & seed sliders

- Control model switcher (Canny / Depth)

- LoRA Selector dropdown + Refresh button

- Sketch/upload panel

- Mini‑viewers for Canny and Depth preprocessing

- Generate button

- Output panels showing the generated image and its saved file path

The style is a dark UI with high‑contrast buttons and clean block layout.
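A layout along these lines could be assembled with Gradio Blocks. The sketch below is illustrative only: the slider ranges, labels, and the two callables passed in (`generate_fn`, `list_loras_fn`) are assumptions. It shows a single control preview rather than separate Canny and Depth viewers, and it does not reproduce the dark theme. Returning a component from the refresh handler follows the Gradio 4+ style.

```python
# Control modes listed in the text; everything else here is illustrative.
CONTROL_MODES = ["Canny", "Depth"]


def build_ui(generate_fn, list_loras_fn):
    """Assemble a Blocks layout from caller-supplied functions.

    generate_fn(prompt, cfg, seed, mode, lora, image) -> (preview, image, path)
    list_loras_fn() -> list of LoRA names
    """
    import gradio as gr  # imported lazily; requires gradio to be installed

    with gr.Blocks(title="Meaning by Machine") as demo:
        with gr.Row():
            with gr.Column():
                prompt = gr.Textbox(label="Prompt", lines=3)
                cfg = gr.Slider(minimum=1.0, maximum=40.0, value=10.0,
                                step=0.5, label="CFG")
                seed = gr.Slider(minimum=0, maximum=2**31 - 1, value=0,
                                 step=1, label="Seed")
                mode = gr.Radio(choices=CONTROL_MODES, value="Canny",
                                label="Control model")
                with gr.Row():
                    lora = gr.Dropdown(choices=list_loras_fn(), label="LoRA")
                    refresh = gr.Button("Refresh")
                sketch = gr.Image(type="pil", label="Sketch or upload")
                run = gr.Button("Generate", variant="primary")
            with gr.Column():
                preview = gr.Image(label="Control preview")
                result = gr.Image(label="Generated image")
                saved = gr.Textbox(label="Saved file path", interactive=False)

        # Rescan available LoRAs and push the new choices into the dropdown.
        refresh.click(lambda: gr.Dropdown(choices=list_loras_fn()), outputs=lora)
        run.click(generate_fn,
                  inputs=[prompt, cfg, seed, mode, lora, sketch],
                  outputs=[preview, result, saved])
    return demo
```

Calling `build_ui(my_generate, my_list_loras).launch()` would start the app locally, where both arguments are functions you provide.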

Sample Workflow in Action

- Upload an aerial sketch of a ring modular campus

- Select Depth control

- Load the “watch‑to‑architecture” LoRA

- Press Generate

- See the depth map preview, then the final architectural render

- A file path appears, letting you save or reuse the image output

LoRA Training: From Watch to Urban Form

We co‑created a LoRA that fuses mechanical watch aesthetics into architectural form (all images used to train the LoRA were captured for academic research and non-commercial purposes):

- Collected ~75 high‑res images of mechanical watch internal movements.

- Tagged them with prompts like “gear assembly”, “circular mechanism”, and “Mech Watch”.

- Outputs show the original sketches transformed into radial plazas, structural truss elements, and gear-like canopies, as you can see in the gallery.
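The tagging step could be prepared with a small script like the one below. Many LoRA training tools read a same-named `.txt` caption next to each image, but whether this project did so is not stated. The sidecar layout, the helper names, and the use of “Mech Watch” as a leading trigger phrase are all assumptions.

```python
from pathlib import Path

# Tag quoted in the text; treating it as a trigger phrase is an assumption.
TRIGGER = "Mech Watch"


def build_caption(tags, trigger=TRIGGER):
    """Join the trigger phrase and de-duplicated tags into one caption string."""
    seen, ordered = set(), []
    for tag in [trigger, *tags]:
        key = tag.strip().lower()
        if key and key not in seen:
            seen.add(key)
            ordered.append(tag.strip())
    return ", ".join(ordered)


def write_captions(image_dir, tags_by_file):
    """Write <image stem>.txt next to each listed image; return the written paths."""
    written = []
    for name, tags in tags_by_file.items():
        image_path = Path(image_dir) / name
        if not image_path.is_file():
            continue  # skip entries with no matching image
        caption_path = image_path.with_suffix(".txt")
        caption_path.write_text(build_caption(tags), encoding="utf-8")
        written.append(caption_path)
    return written
```

For example, `build_caption(["gear assembly", "circular mechanism"])` yields `"Mech Watch, gear assembly, circular mechanism"`.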

Conclusion

Meaning by Machine demonstrates how AI can decode object-space analogies, in this case translating mechanical movements into architectural gestures. The user supplies the prompt, the image or sketch, and the LoRA; the system responds with a design, without complex 3D software.