During the seminar, we explored the core concepts of ROS (Robot Operating System), its main tools, and the use of Python for interacting with robots. With this setup, we processed sensor data and controlled the UR10e robot, which served as our platform for the seminar. Through practical exercises, we gained hands-on experience writing Python-based ROS nodes and using packages from the wider ROS ecosystem for various robotic tasks. We also acquired foundational knowledge of MoveIt for motion and path planning.

We worked inside a Docker container, a self-contained, lightweight package that includes everything needed to run the software. This made the setup portable and easy to reproduce. We also used GitHub to manage our project data and to collaborate throughout the project.

Our goal in the course was to scan a milled cork block using the UR robot and an Intel RealSense D405 camera, and to compare the scan with the block's 3D digital model. To assess the accuracy of the robotic fabrication process, we used Open3D, an open-source library for processing 3D data, to compare the digital model with the scanned one. The comparison indicates how closely the milled piece matches its design.

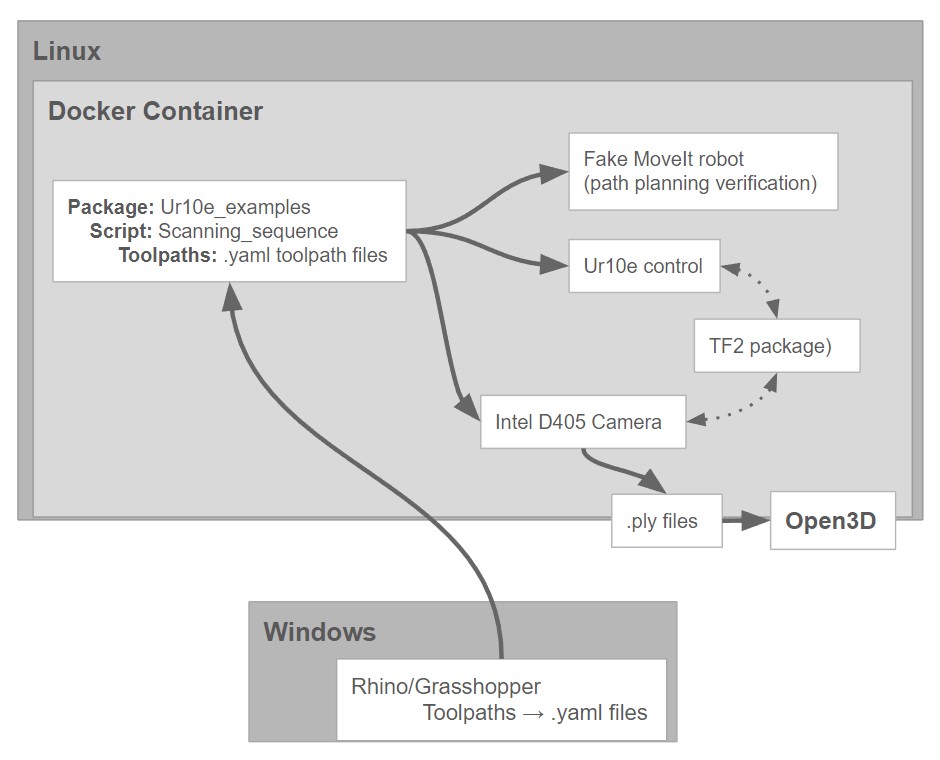

The following image gives an overview of our working environment and shows which tasks were carried out on Linux and which on Windows.

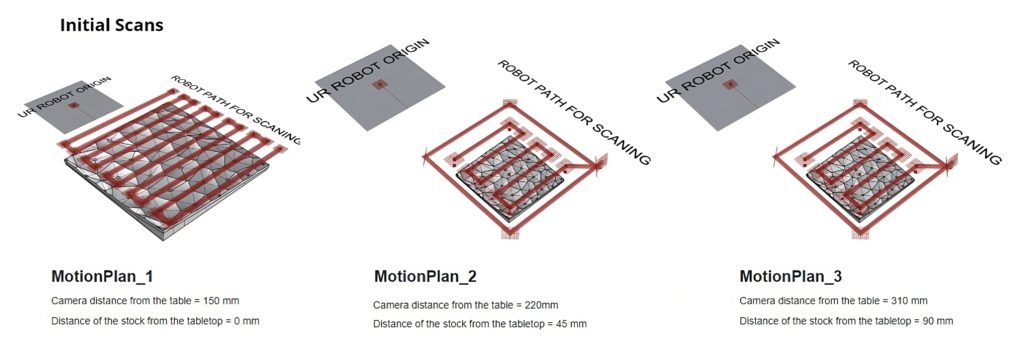

The robot moves along paths computed by a motion planner. Planners can be broadly classified into two categories: free-space planners and process planners. For our task, we mainly used a process planner, the Pilz industrial motion planner. It is suited to controlled environments in which the path is free of obstacles, and it kept the robot's speed smooth during the scanning process.
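To make the idea of a smooth scanning speed concrete: the Pilz planner's linear (LIN) command follows a trapezoidal velocity profile in Cartesian space. The following is a minimal, standalone sketch of such a profile in plain Python. It does not call MoveIt, and the function name `trapezoidal_profile` and the example limits are illustrative assumptions, not values from the seminar.

```python
import math


def trapezoidal_profile(distance, v_max, a_max, dt=0.01):
    """Sample (time, position, velocity) for a straight-line move.

    Hypothetical helper for illustration only: accelerate at a_max,
    cruise at v_max, then decelerate at a_max to a stop.
    """
    if distance <= 0 or v_max <= 0 or a_max <= 0 or dt <= 0:
        raise ValueError("distance, v_max, a_max and dt must be positive")

    # A short move never reaches v_max; the profile is then a triangle.
    v_peak = min(v_max, math.sqrt(distance * a_max))
    t_acc = v_peak / a_max
    d_acc = 0.5 * a_max * t_acc ** 2
    t_cruise = max(0.0, (distance - 2.0 * d_acc) / v_peak)
    t_total = 2.0 * t_acc + t_cruise

    samples = []
    n_steps = math.ceil(t_total / dt)
    for i in range(n_steps + 1):
        t = min(i * dt, t_total)  # clamp the final sample to the end time
        if t < t_acc:
            # Acceleration phase
            v = a_max * t
            s = 0.5 * a_max * t * t
        elif t < t_acc + t_cruise:
            # Constant-speed phase
            v = v_peak
            s = d_acc + v_peak * (t - t_acc)
        else:
            # Deceleration phase, computed backwards from the end point
            t_rem = t_total - t
            v = a_max * t_rem
            s = distance - 0.5 * a_max * t_rem * t_rem
        samples.append((t, s, v))
    return samples
```

For example, `trapezoidal_profile(0.5, 0.1, 0.5)` describes a 0.5 m pass that cruises at 0.1 m/s for most of its length, which helps keep the camera frames evenly spaced along the path.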

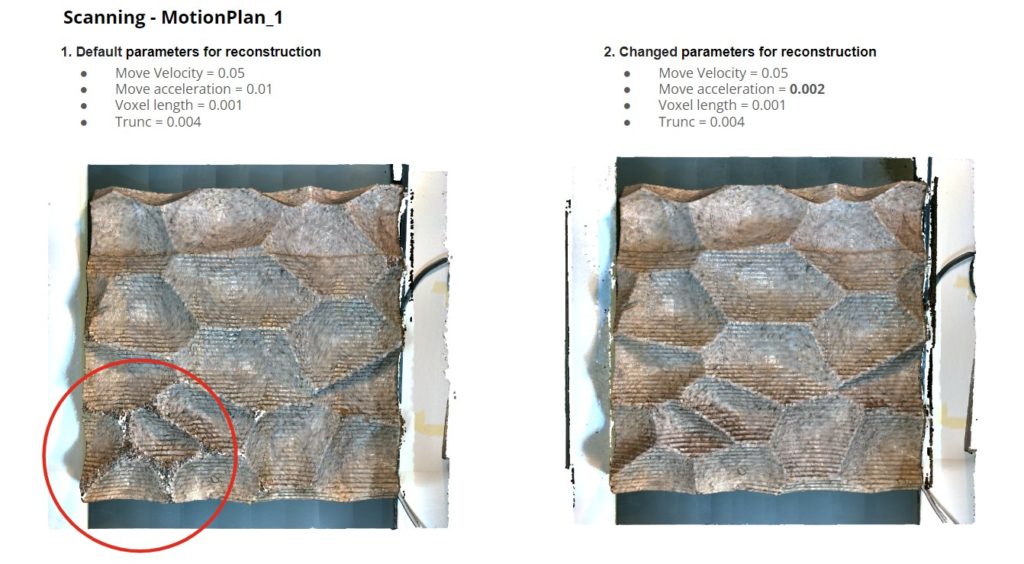

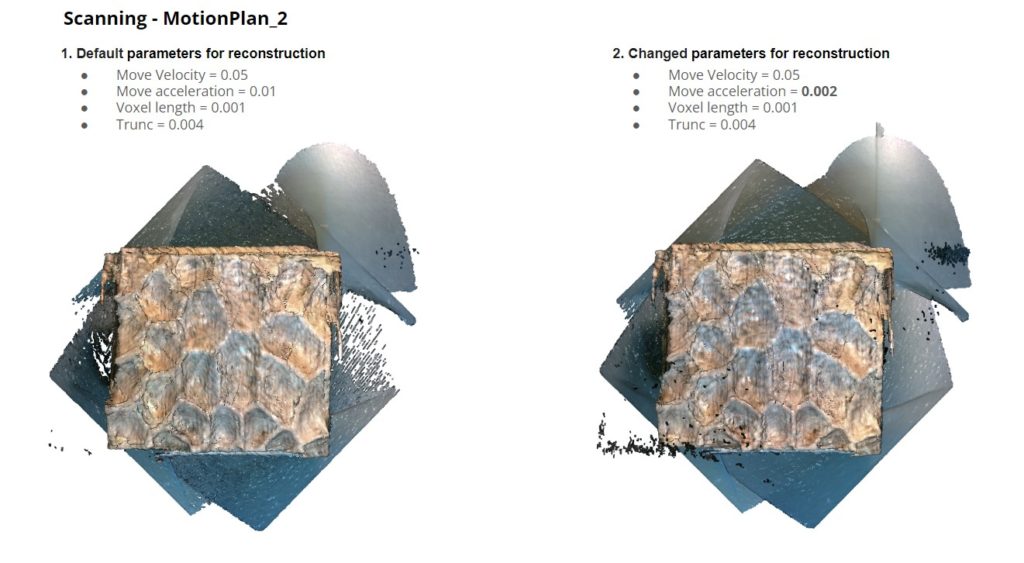

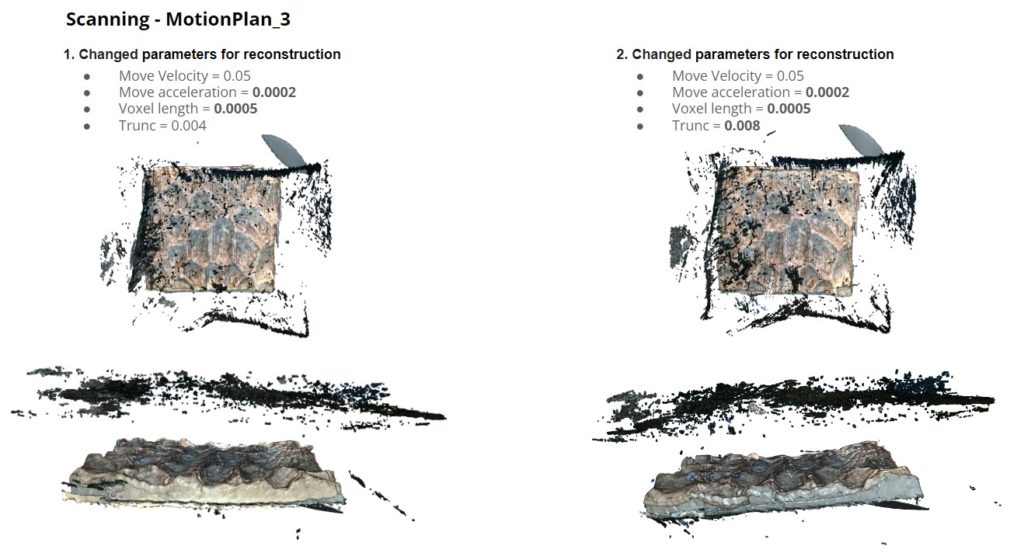

To improve the quality of our results, we devised three distinct tool paths for the robot to follow while recording and scanning the target piece.

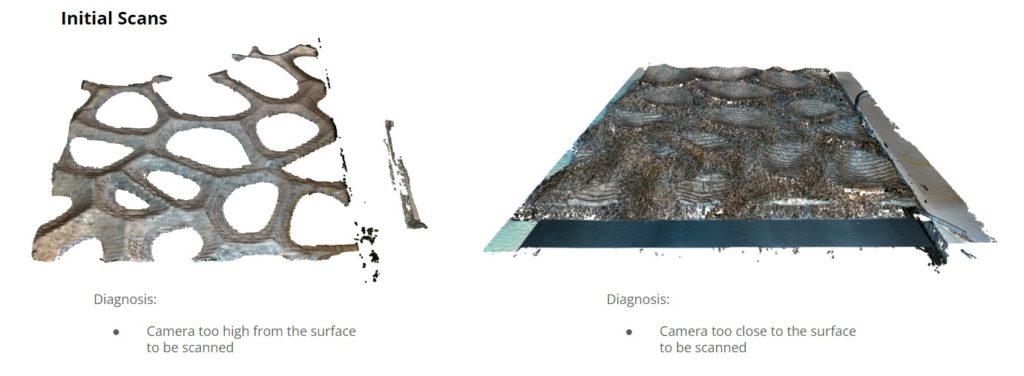
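As a generic illustration of how a scanning tool path can be described as a list of waypoints, the sketch below generates a zigzag (raster) pattern at a fixed height above the piece. It is not one of the three paths used in the seminar; the function name `zigzag_path` and all dimensions are hypothetical.

```python
def zigzag_path(x_min, x_max, y_min, y_max, height, n_passes):
    """Return (x, y, z) waypoints for a back-and-forth scan at constant height.

    Hypothetical helper for illustration only. Each pass runs along x;
    consecutive passes are offset in y and alternate direction.
    """
    if n_passes < 2:
        raise ValueError("n_passes must be at least 2")

    step = (y_max - y_min) / (n_passes - 1)
    waypoints = []
    for i in range(n_passes):
        y = y_min + i * step
        # Even passes go from x_min to x_max, odd passes come back.
        start_x, end_x = (x_min, x_max) if i % 2 == 0 else (x_max, x_min)
        waypoints.append((start_x, y, height))
        waypoints.append((end_x, y, height))
    return waypoints


# Example: three passes over a 0.2 m x 0.1 m area, 0.3 m above the table.
path = zigzag_path(0.0, 0.2, 0.0, 0.1, 0.3, 3)
```

Each consecutive pair of waypoints could then be handed to a linear motion command, so the camera keeps a constant distance from the piece along every pass.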

To obtain a high-quality point cloud, we ran several preliminary scans to find the best scanning distance for industrial reconstruction, the process we used to build the point cloud after scanning. By iteratively adjusting this distance, we were able to generate precise and reliable point clouds.

Throughout our experiments, we identified several parameters that significantly influenced the outcome of the scan: robot speed, distance from the piece, voxel length, and truncation value. By varying these parameters one at a time, we obtained a series of point clouds that illustrate their effect. The subsequent images show these results alongside the corresponding point cloud data.
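To illustrate why voxel length and truncation interact, the sketch below assumes that the voxel length and the truncation (or "trunk") value refer to the parameters of a truncated signed distance function (TSDF) volume, as commonly used in depth-camera reconstruction. It is a simplified one-dimensional model in plain Python, not the reconstruction code used in the seminar; the function names are hypothetical.

```python
def tsdf_along_ray(depth, voxel_length, sdf_trunc, ray_length):
    """Truncated signed distance at voxel centres along one camera ray.

    Hypothetical 1D illustration. Voxels further than sdf_trunc behind
    the measured surface are left unobserved (None).
    """
    n_voxels = int(ray_length / voxel_length) + 1
    values = []
    for i in range(n_voxels):
        x = i * voxel_length
        sdf = depth - x  # positive in front of the surface, negative behind
        if sdf < -sdf_trunc:
            values.append(None)
        else:
            values.append(min(1.0, sdf / sdf_trunc))
    return values


def surface_from_tsdf(values, voxel_length):
    """Locate the zero crossing by linear interpolation, or return None."""
    for i in range(len(values) - 1):
        a, b = values[i], values[i + 1]
        if a is None or b is None:
            continue
        if a > 0 >= b:
            frac = a / (a - b)
            return (i + frac) * voxel_length
    return None


# Fine voxels relative to the truncation band: the surface is recovered.
fine = surface_from_tsdf(tsdf_along_ray(0.303, 0.002, 0.01, 0.4), 0.002)

# Voxels coarser than the truncation band: no zero crossing is observed.
coarse = surface_from_tsdf(tsdf_along_ray(0.303, 0.02, 0.01, 0.4), 0.02)
```

In this toy model the surface is only found when at least one voxel on each side of it falls inside the truncation band, which is one reason the truncation value is usually chosen as a small multiple of the voxel length.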

After a series of trial-and-error iterations, we generated a clean mesh using Open3D. This allowed us to compare the resulting mesh with the original piece and to evaluate the accuracy and fidelity of the scanning process. With these adjustments, we obtained a visually satisfying mesh, which supports the effectiveness of our approach.
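One common way to quantify such a comparison is the distance from each scanned point to its nearest point on the reference model. The sketch below shows the idea in plain Python with a brute-force search; it is an assumption about how the comparison could be done, not the exact procedure used here, and the helper names are hypothetical. On real data, Open3D's `PointCloud.compute_point_cloud_distance` computes the same per-point distances far more efficiently.

```python
import math


def nearest_distances(scan_points, reference_points):
    """Distance from every scan point to its closest reference point."""
    return [
        min(math.dist(p, q) for q in reference_points)
        for p in scan_points
    ]


def deviation_summary(distances):
    """Mean, RMS and maximum of a list of deviations."""
    n = len(distances)
    return {
        "mean": sum(distances) / n,
        "rms": math.sqrt(sum(d * d for d in distances) / n),
        "max": max(distances),
    }


# Toy data: a flat 5 x 5 reference grid and a scan offset by 0.5 in z.
reference = [(float(x), float(y), 0.0) for x in range(5) for y in range(5)]
scan = [(x, y, z + 0.5) for x, y, z in reference]

summary = deviation_summary(nearest_distances(scan, reference))
```

With this toy data every scan point lies 0.5 units above the reference plane, so the mean, RMS, and maximum deviation are all 0.5.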