During this Hardware seminar, we learned about the different types of sensors used in robotics and the techniques used to process the data they collect. The aim of the seminar is to use sensors to understand the environment, so that the robot can then make decisions (automation) and carry out a specific task (actuation).

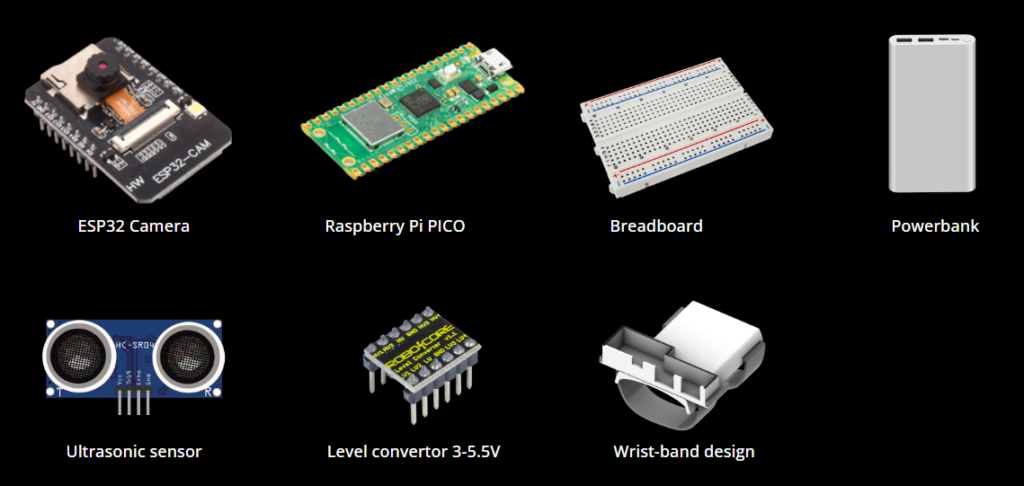

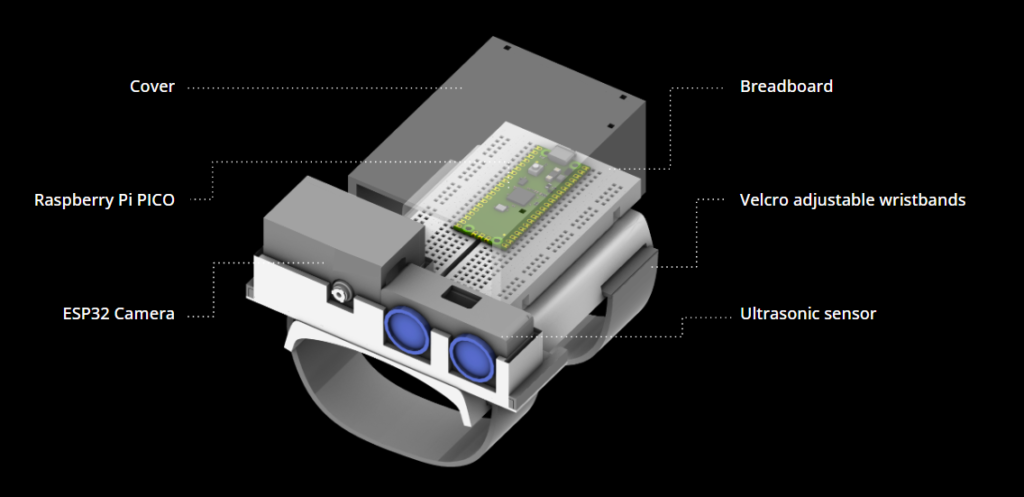

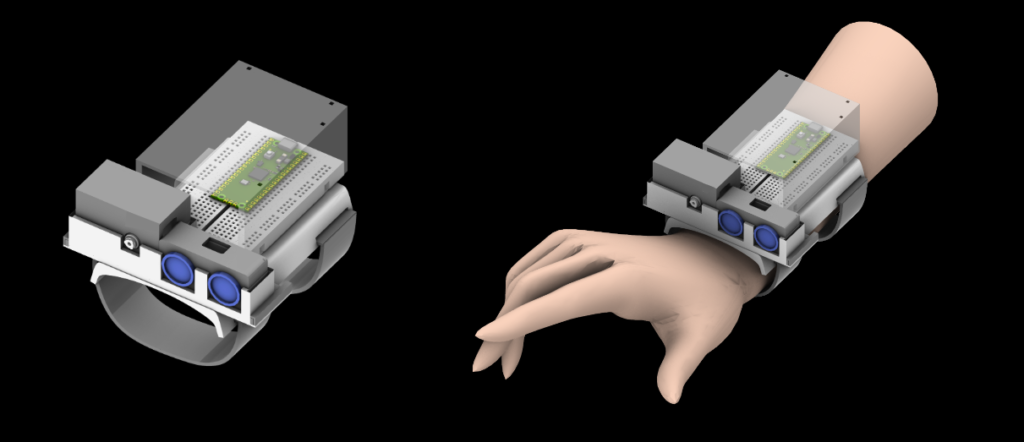

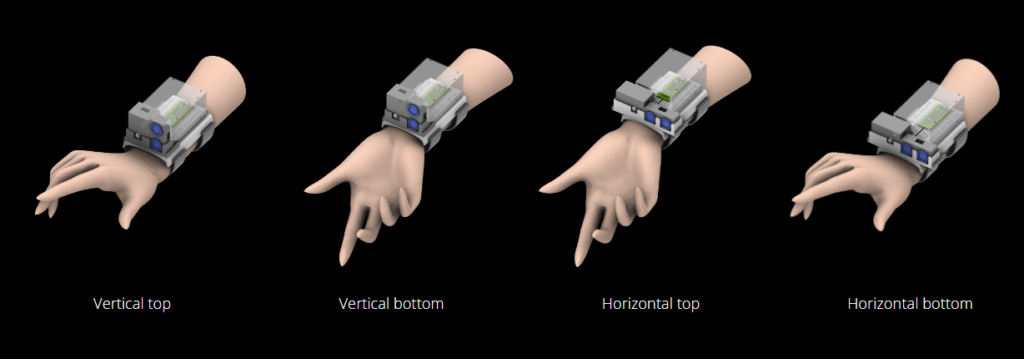

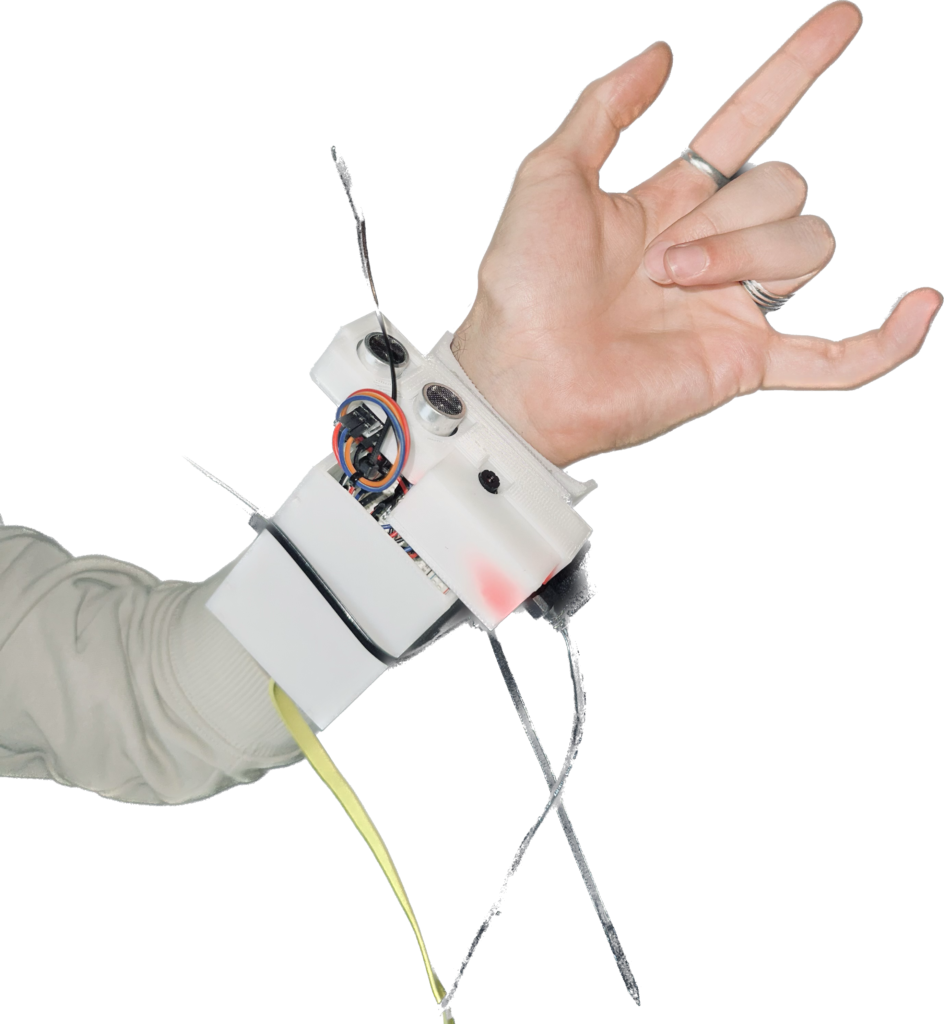

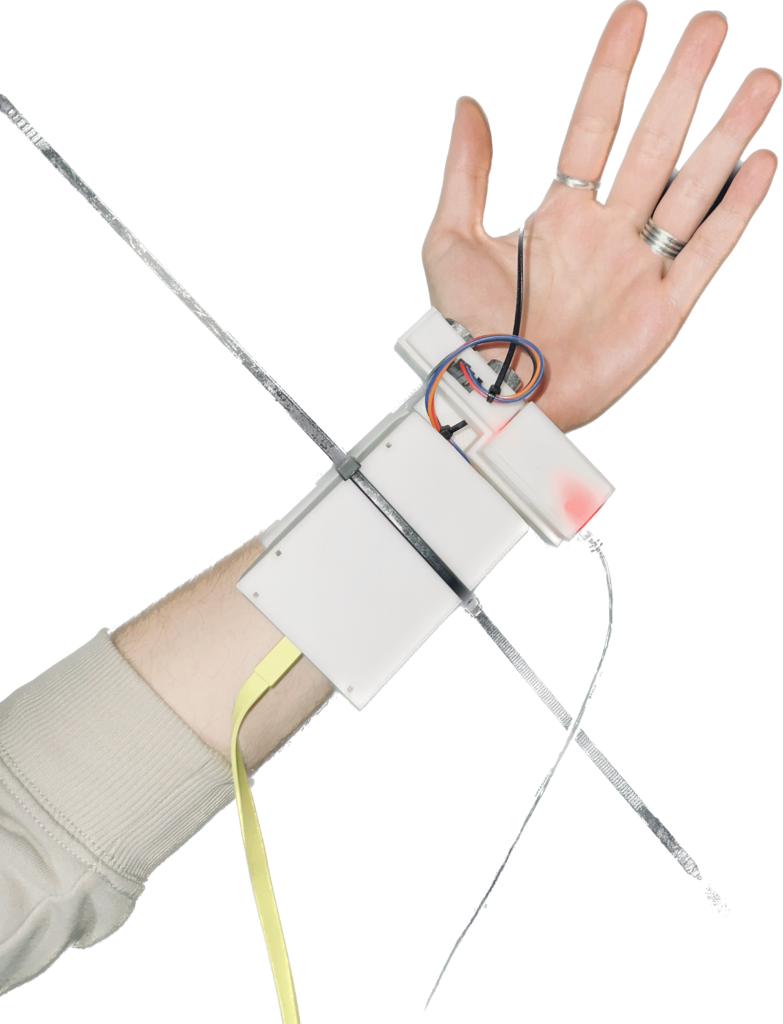

For the class project, we decided to fabricate a wearable device with an integrated camera and proximity sensor for blind grasping of objects and navigation. The following images show the materials used, the device design, its portability, and possible configurations.

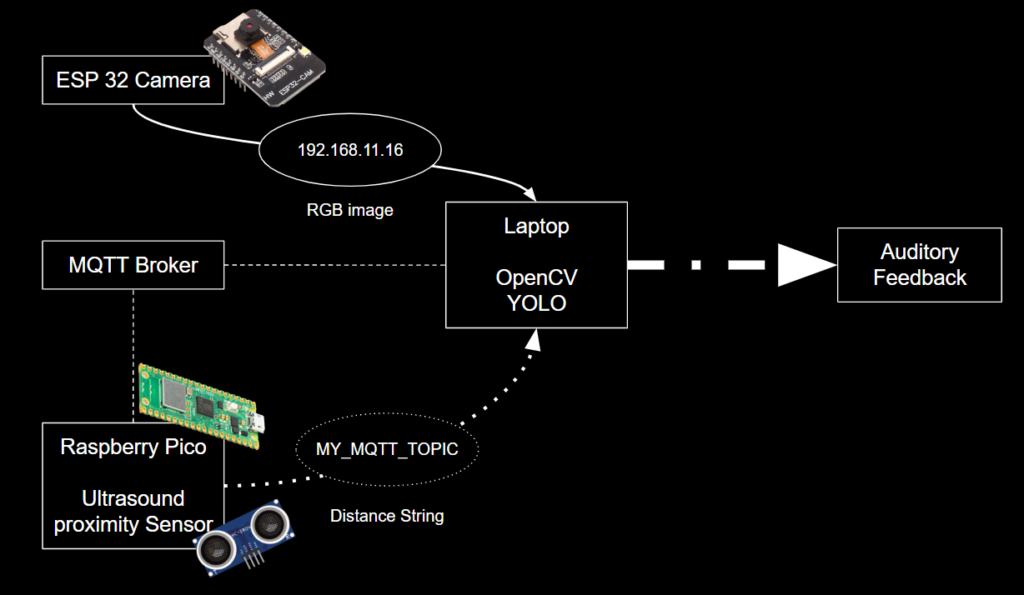

Logic

The logic of the project is explained in the image below. The ESP32 camera captures an image of the environment while the ultrasonic proximity sensor measures the distance to an object. Using the MQTT broker, the RGB image and the distance reading are sent to a laptop, where they are correlated and processed with OpenCV and YOLO to produce auditory feedback, which is the output of the project. The camera handles detection: when a bottle is in view, a spoken message announces that there is a bottle. The sensor handles distance: depending on how far away the bottle is, you hear a beep, and the closer you get to the bottle, the faster it beeps.
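The feedback rule described above can be sketched in a few lines of Python. This is only an illustration, not the code used in the project: the function names, the distance range, and the beep timings are all hypothetical, and the linear mapping from distance to beep rate is an assumption. The sketch takes the list of labels that an object detector such as YOLO would return and the distance reported by the proximity sensor, and decides what to say and how fast to beep.

```python
from typing import Iterable, Optional, Tuple

# Hypothetical tuning values; the project write-up does not state them.
MIN_DISTANCE_CM = 5.0      # at or below this distance, beep at the fastest rate
MAX_DISTANCE_CM = 100.0    # beyond this distance, stay silent
FASTEST_INTERVAL_S = 0.1   # pause between beeps when the object is very close
SLOWEST_INTERVAL_S = 1.0   # pause between beeps at the edge of the range


def beep_interval(distance_cm: float) -> Optional[float]:
    """Return the pause between beeps in seconds, or None if out of range.

    Assumes a linear mapping: the shorter the distance, the shorter the pause.
    """
    if distance_cm > MAX_DISTANCE_CM:
        return None
    clamped = max(distance_cm, MIN_DISTANCE_CM)
    fraction = (clamped - MIN_DISTANCE_CM) / (MAX_DISTANCE_CM - MIN_DISTANCE_CM)
    return FASTEST_INTERVAL_S + fraction * (SLOWEST_INTERVAL_S - FASTEST_INTERVAL_S)


def spoken_feedback(detected_labels: Iterable[str], target: str = "bottle") -> Optional[str]:
    """Return the phrase to speak if the target label was detected, else None."""
    if target in detected_labels:
        return f"There is a {target}"
    return None


def feedback(detected_labels: Iterable[str], distance_cm: float) -> Tuple[Optional[str], Optional[float]]:
    """Combine the camera result and the distance reading into one decision."""
    return spoken_feedback(detected_labels), beep_interval(distance_cm)


if __name__ == "__main__":
    # Example: the detector saw a person and a bottle, and the sensor reads 20 cm.
    phrase, pause = feedback(["person", "bottle"], 20.0)
    print(phrase, pause)
```

Keeping the decision logic in pure functions like these makes it easy to tune the thresholds without the hardware attached; the MQTT subscription, the detector, and the audio playback would call into them.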

Results

The link below leads to the demonstration video, which shows the wristband in action:

https://drive.google.com/file/d/10odAVjk9E1er_ArwqNg2xAfGRBMA9tZW/view?resourcekey