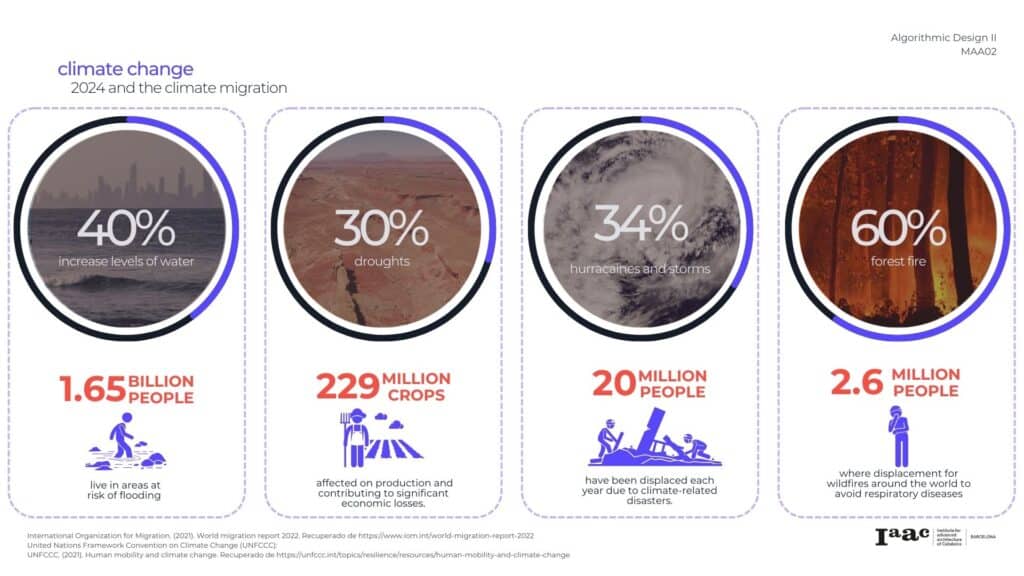

Climate change has become one of the primary drivers of forced displacement around the world, giving rise to the phenomenon known as climate migration.

Here are some statistics from the last year on the climate events forcing people to move to other cities and countries.

Some of the most significant examples of this displacement occurred in Canada, Bangladesh and the USA, where large numbers of people were displaced to other cities and countries.

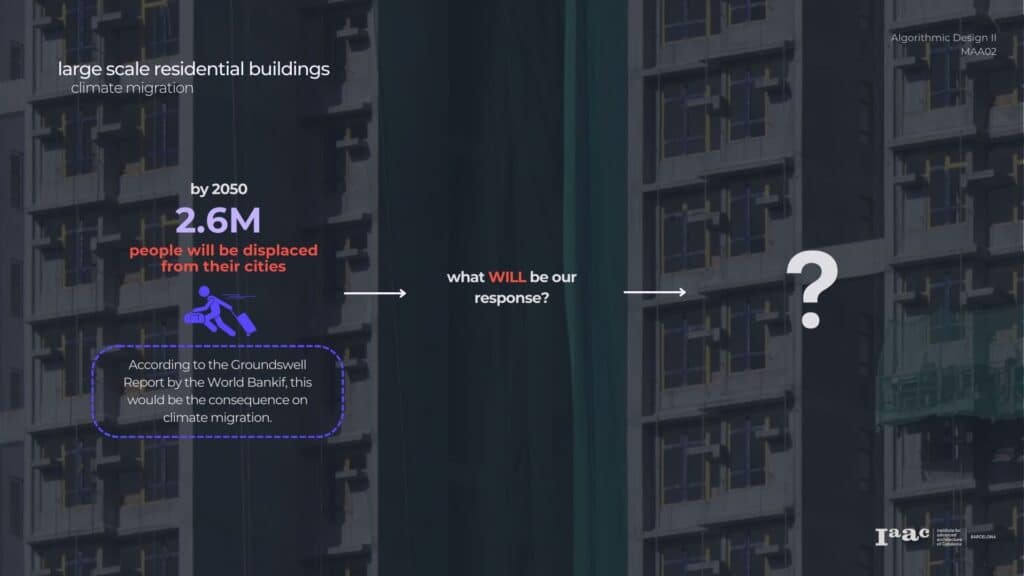

In 1960, uncontrolled population growth in Hong Kong created a high demand for housing. The fast and cheap solution was the mass construction of housing projects around the city, with no consideration for human aspects: only time and money counted, not even aesthetics.

Looking ahead, a report from the World Bank states that by 2050 more than 2.6 million people will be displaced from their cities due to climate change. This means a high demand for housing in new, safe areas. The question is: what will our response be?

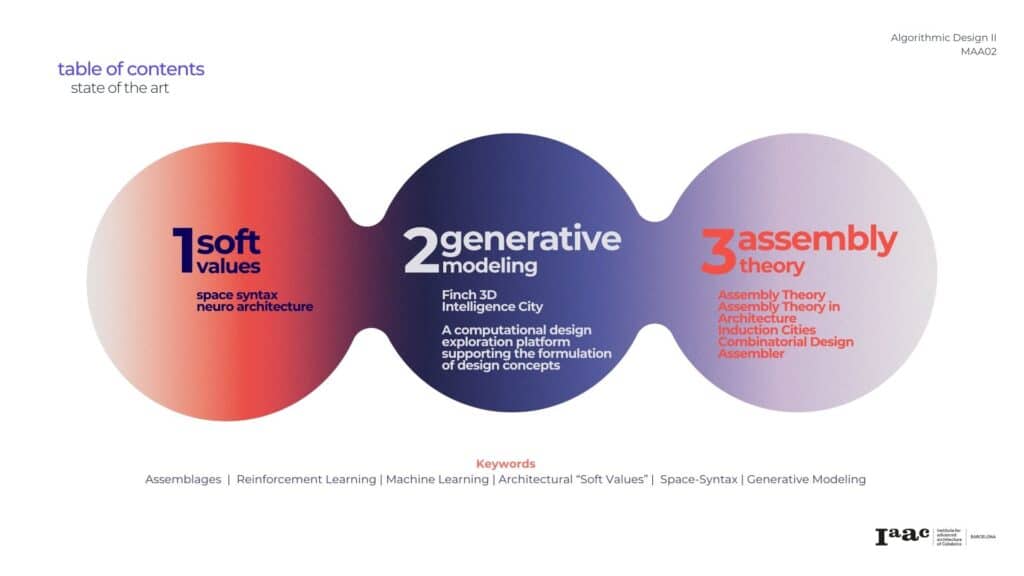

To start developing this project, we divided our state of the art into three key areas:

Soft Values, exploring space syntax and neuroarchitecture;

Generative Modeling, focusing on computational tools such as Finch 3D; and

Assemblage Theory, focusing on combinatorial design and induction cities.

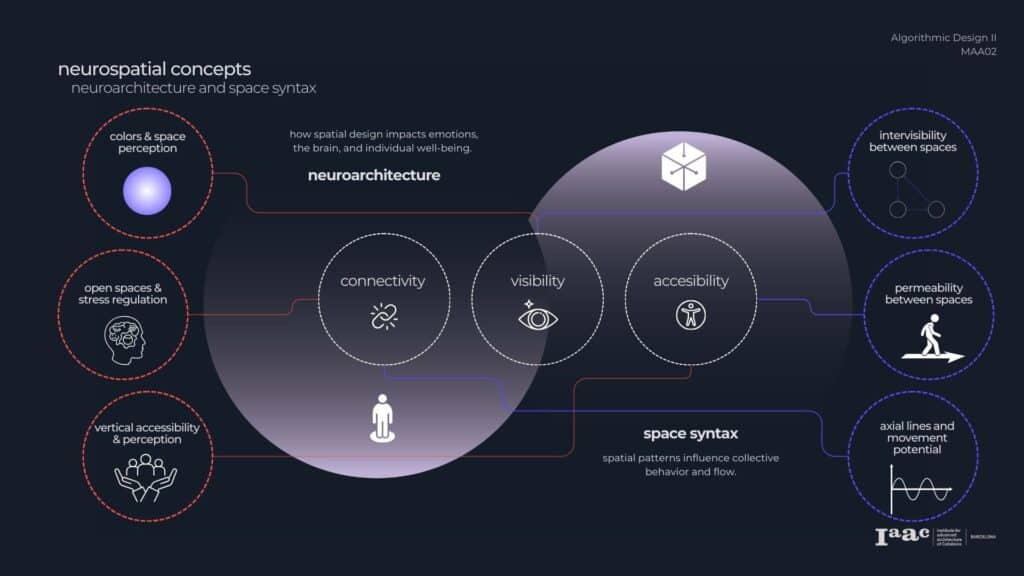

Here we see a comparative framework between neuroarchitecture and space syntax.

On the left, neuroarchitecture focuses on how spatial design affects emotions, the brain and individual well-being. On the right, space syntax analyzes spatial patterns to predict collective behavior and movement. The two fields evaluate spaces through different lenses but share common concepts such as connectivity, visibility and accessibility.

In the field of neuroarchitecture:

connectivity relates to how spaces interlink and create fluid movement; visibility ensures clear sightlines and intuitive navigation; and accessibility promotes inclusive design that caters to all individuals.

On the other hand, space syntax focuses on concepts such as the intervisibility between spaces, their permeability, and the movement potential of the individuals using them.

These two fields allow us to understand the relationship between spaces and individuals, and why it matters.
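To make the space syntax measures more concrete, here is a minimal Python sketch that treats spaces as nodes of an adjacency graph (the layout below is hypothetical). It computes connectivity (number of direct neighbours), mean depth by breadth-first search, and integration as the inverse of relative asymmetry, RA = 2(MD - 1)/(k - 2), where k is the number of spaces. It is a simplified version of the standard measures: it omits the D-value normalisation (Real Relative Asymmetry) that full space syntax tools apply.

```python
from collections import deque


def bfs_depths(graph, start):
    """Topological depth (number of steps) from start to every reachable space."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbour in graph[node]:
            if neighbour not in depths:
                depths[neighbour] = depths[node] + 1
                queue.append(neighbour)
    return depths


def space_syntax_measures(graph):
    """Connectivity, mean depth and integration (1 / RA) per space.

    Assumes a connected, undirected graph with at least three spaces.
    """
    k = len(graph)
    results = {}
    for node in graph:
        depths = bfs_depths(graph, node)
        mean_depth = sum(depths.values()) / (k - 1)  # the start space itself has depth 0
        ra = 2 * (mean_depth - 1) / (k - 2)           # relative asymmetry
        results[node] = {
            "connectivity": len(graph[node]),
            "mean_depth": mean_depth,
            "integration": 1 / ra if ra > 0 else float("inf"),
        }
    return results


# Hypothetical layout: a hall serving three rooms, one of which opens onto a terrace.
layout = {
    "hall": ["room_a", "room_b", "room_c"],
    "room_a": ["hall"],
    "room_b": ["hall"],
    "room_c": ["hall", "terrace"],
    "terrace": ["room_c"],
}
for space, measures in space_syntax_measures(layout).items():
    print(space, measures)
```

In this toy layout the hall has the shallowest mean depth and therefore the highest integration, while the terrace is the most segregated space.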

Moving on to generative modeling: a design approach that uses algorithms to create complex, data-driven forms and patterns, generally aimed at optimizing factors such as material use, cost and design time.

Among the most outstanding projects, we found companies such as Finch 3D, which optimizes floor layouts in real time using AI.

Similarly, Intelligent City focuses on mass timber to create sustainable and affordable urban buildings, using an automated system for prefabricated components.

Last but not least, we also found a relevant project conducted at Delft University of Technology: a computational design exploration platform that supports the formulation of design concepts through parametric modeling and performance analysis.

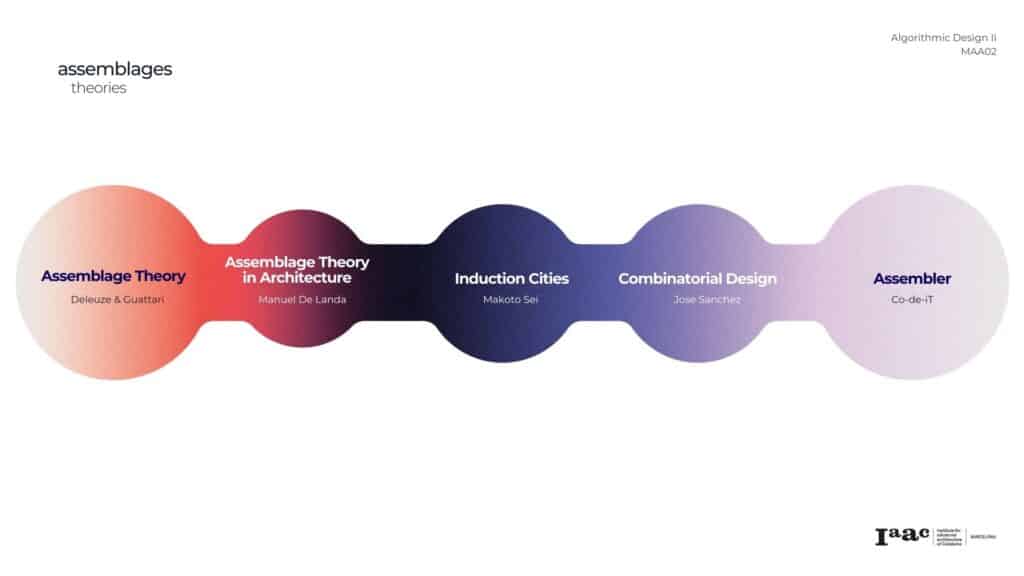

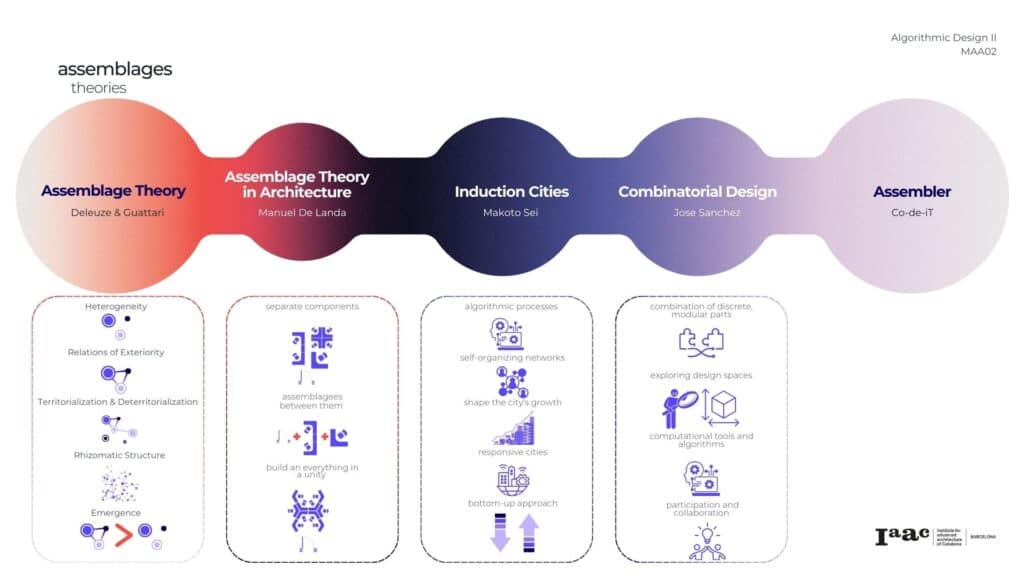

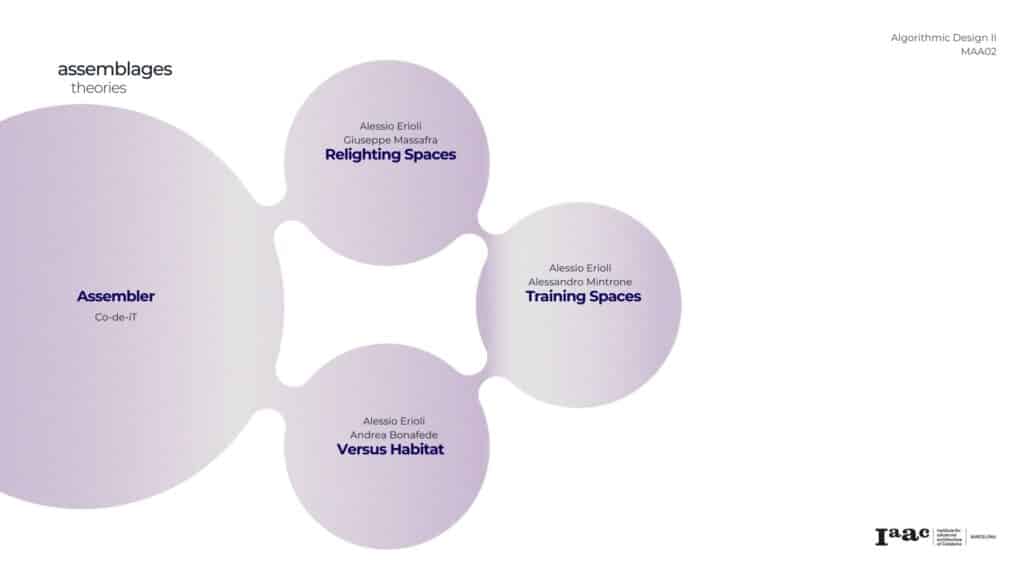

Continuing with the last part of our state of the art, we turn to assemblage theories developed by different philosophers and thinkers, which help us understand the impact of assemblages both as spaces and as part of our way of living.

Assemblage theory was developed by the French philosopher Gilles Deleuze and the psychoanalyst Félix Guattari in the 1980s. It emphasizes diversity in components (heterogeneity), their ability to interact without losing their identity (relations of exteriority), and their dynamic capacity to stabilize or change over time. Assemblages work in decentralized, flexible ways (rhizomatic structure), generating new properties through interactions (emergence).

The ideas of Deleuze & Guattari inspired Manuel DeLanda and later Kim Dovey to develop the Theory of Assemblage in Architecture. This theory looks at how different parts, like architectural elements, people, and materials, come together to create a whole. When these parts interact, they form new qualities that don’t exist in each part alone.

Makoto Sei Watanabe’s concept of Induction Cities explores urban systems that evolve dynamically through decentralized, emergent processes. It emphasizes adaptability: cities grow and reorganize based on changing interactions between components, fostering resilience and innovation.

José Sánchez’s Combinatorial Design focuses on creating systems where components are combined through rules to explore diverse design possibilities. It highlights adaptability, scalability, and emergent outcomes in architectural and urban design.
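As a toy illustration of rule-based combination (in the spirit of combinatorial design, not a reproduction of Sánchez’s own system), the Python sketch below uses a hypothetical catalogue of three part types and a hypothetical rule table stating which part may follow which in a linear chain. `itertools.product` enumerates every possible chain, and the rules filter them, showing how even a small rule set prunes the design space.

```python
from itertools import product

# Hypothetical catalogue of part types.
PARTS = ["A", "B", "C"]

# Hypothetical adjacency rules: a part may be followed by any part in its set.
ALLOWED_NEXT = {
    "A": {"B", "C"},
    "B": {"A"},
    "C": {"A", "C"},
}


def valid_chains(length):
    """Enumerate every chain of `length` parts that respects the adjacency rules."""
    chains = []
    for chain in product(PARTS, repeat=length):
        if all(b in ALLOWED_NEXT[a] for a, b in zip(chain, chain[1:])):
            chains.append(chain)
    return chains


for n in range(1, 5):
    # chain length, unconstrained combinations, combinations allowed by the rules
    print(n, len(PARTS) ** n, len(valid_chains(n)))
```

For four parts, the rules keep 16 of the 81 unconstrained combinations.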

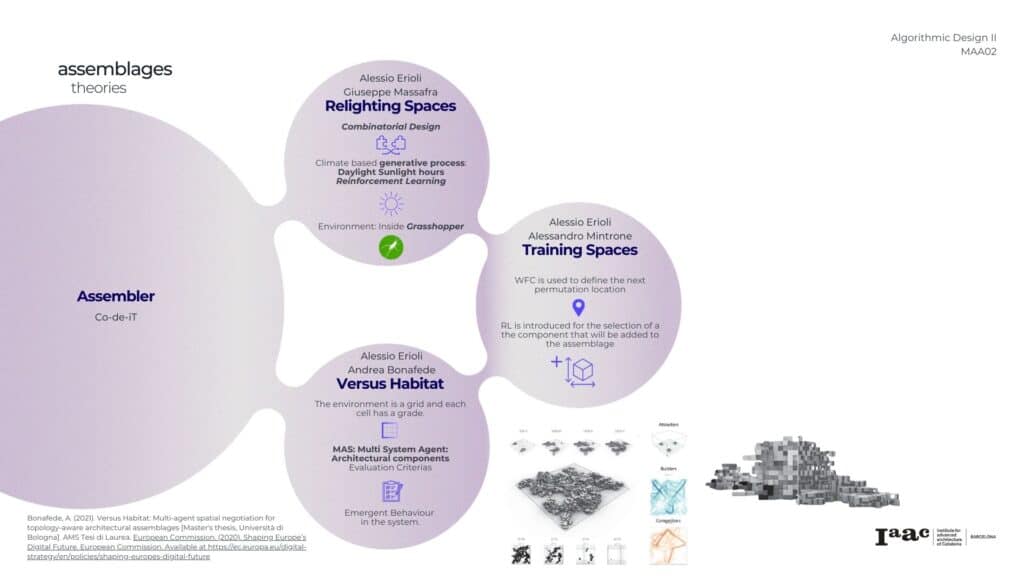


First, Giuseppe Massafra’s ‘ReLighting Spaces’ uses reinforcement learning to design adaptive spatial assemblies that optimize daylight access. He integrated a reinforcement learning model within the Rhino and Grasshopper environment, running the sun analysis in Ladybug.

Alessandro Mintrone’s ‘Training Spaces’ combines reinforcement learning and Wave Function Collapse to control the growth of assemblages. Wave Function Collapse defines where each component is placed, and the reinforcement learning algorithm selects a specific component from a defined catalogue. The rules driving his iterative process relate to density, spatial distribution, and structural, spatial and planar connectivity.

‘Versus Habitat’ is a project by Andrea Bonafede that explores multi-agent systems for generating large-scale architectural assemblages. It focuses on spatial negotiation among agents to create topology-aware designs, emphasizing decentralized and emergent processes in architecture.
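The placement half of this idea can be sketched with a simplified one-dimensional Wave Function Collapse in Python. The tiles and adjacency rules are hypothetical. Every cell starts with all options. The algorithm repeatedly picks the cell with the fewest remaining options (the lowest-entropy heuristic, here without tile weights), collapses it to a single tile, and propagates the adjacency constraints to its neighbours. Where Mintrone’s setup uses a reinforcement learning agent to choose the component, this sketch substitutes a seeded random choice.

```python
import random

# Hypothetical tiles with symmetric adjacency rules: tile -> tiles allowed beside it.
COMPATIBLE = {
    "solid": {"solid", "window", "open"},
    "window": {"solid"},
    "open": {"open", "solid"},
}


def propagate(cells, start):
    """Prune neighbour options that have no compatible tile, spreading out from start."""
    stack = [start]
    while stack:
        i = stack.pop()
        allowed = set().union(*(COMPATIBLE[t] for t in cells[i]))
        for j in (i - 1, i + 1):
            if 0 <= j < len(cells):
                reduced = cells[j] & allowed
                if not reduced:
                    raise RuntimeError(f"contradiction at cell {j}")
                if reduced != cells[j]:
                    cells[j] = reduced
                    stack.append(j)


def collapse(length, seed=0):
    """Simplified 1D Wave Function Collapse over a row of cells."""
    rng = random.Random(seed)
    cells = [set(COMPATIBLE) for _ in range(length)]  # every cell starts in superposition
    while any(len(c) > 1 for c in cells):
        # Lowest-entropy heuristic: the undecided cell with the fewest remaining options.
        i = min((k for k, c in enumerate(cells) if len(c) > 1), key=lambda k: len(cells[k]))
        # A seeded random choice stands in for the component an RL agent would select.
        cells[i] = {rng.choice(sorted(cells[i]))}
        propagate(cells, i)
    return [next(iter(c)) for c in cells]


print(collapse(8))
```

Because the rules are symmetric and the row is a simple chain, propagation keeps every remaining option extendable, so this toy never hits a contradiction. In 2D or 3D grids, real WFC implementations usually need backtracking or restarts.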

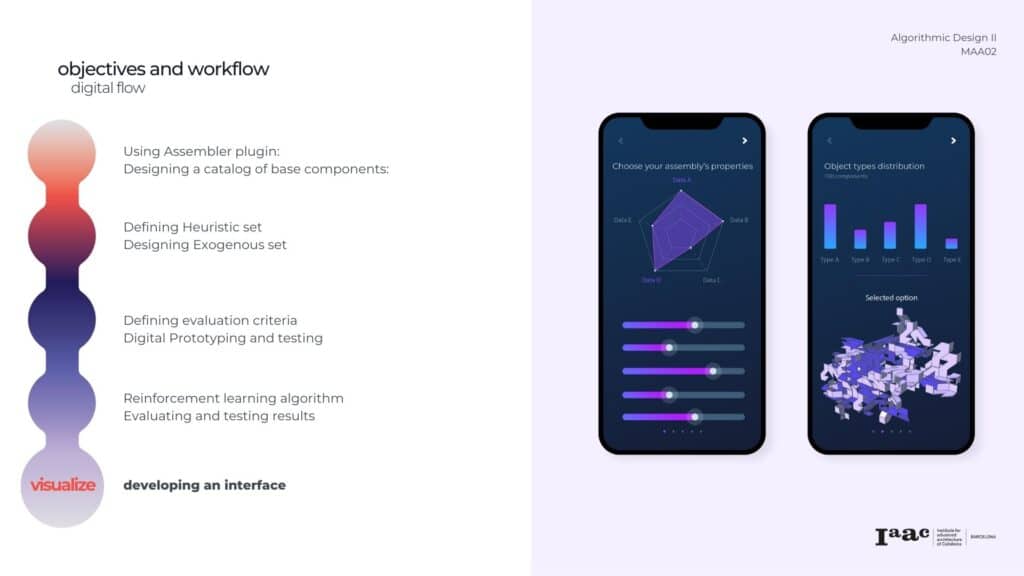

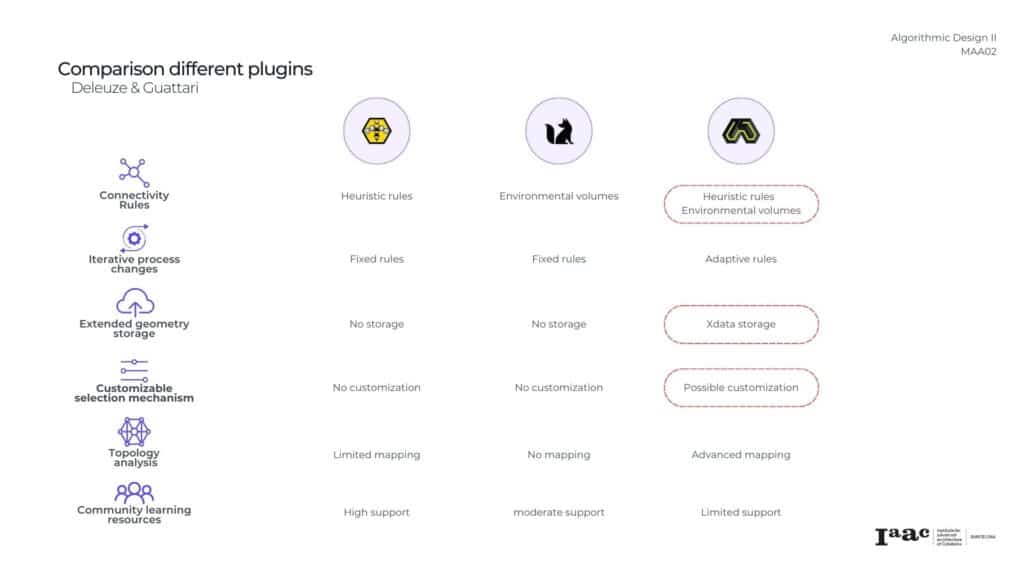

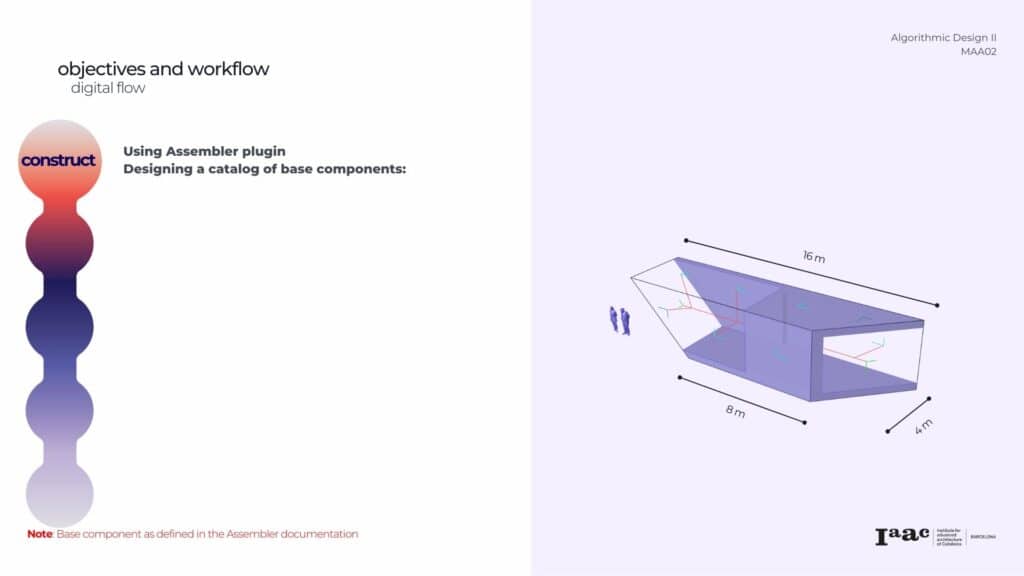

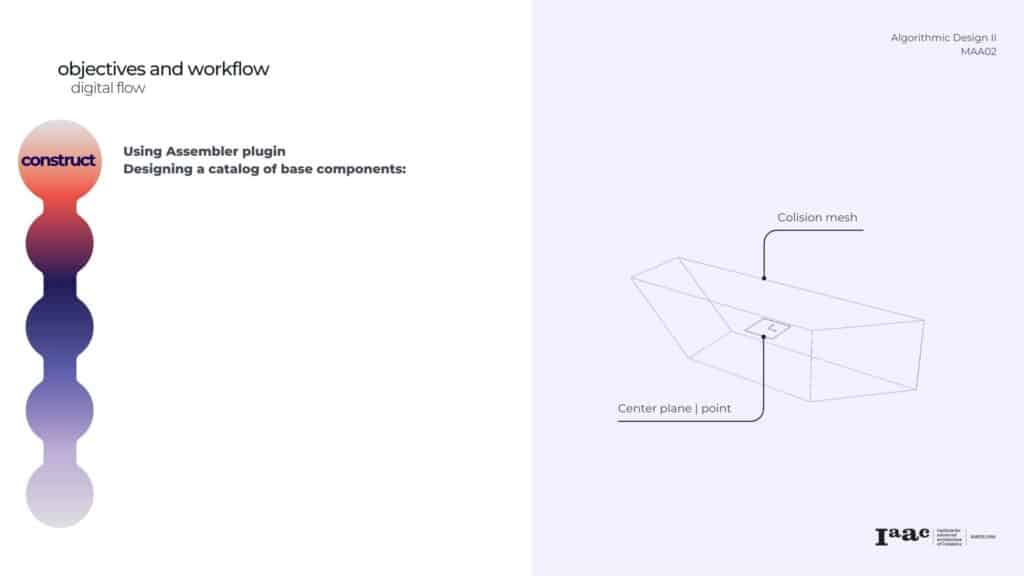

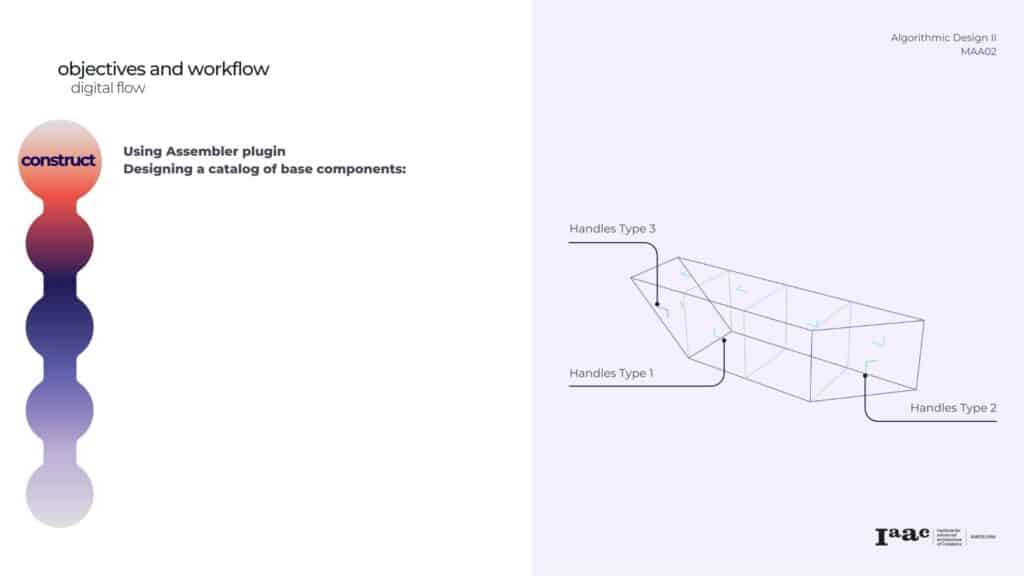

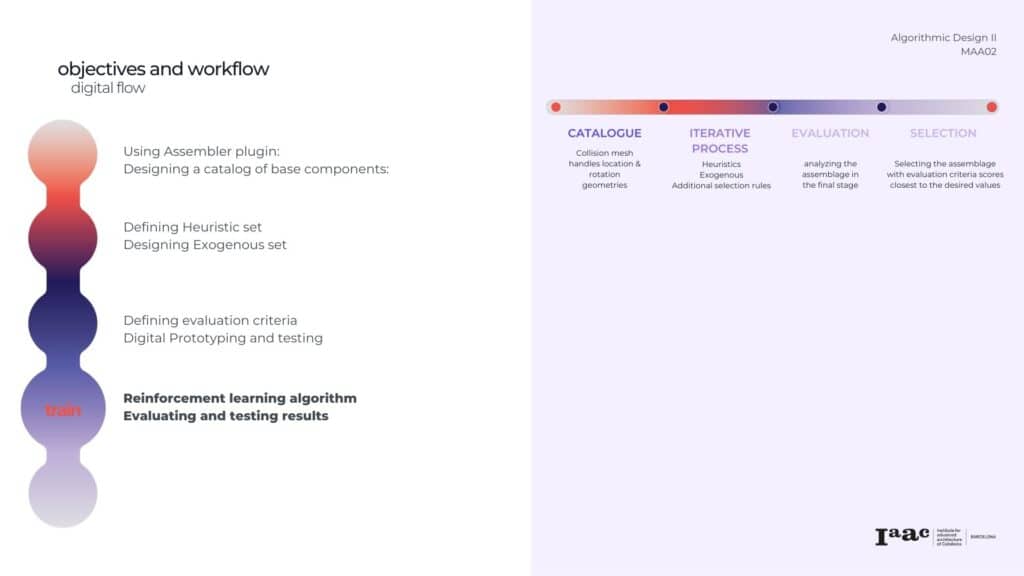

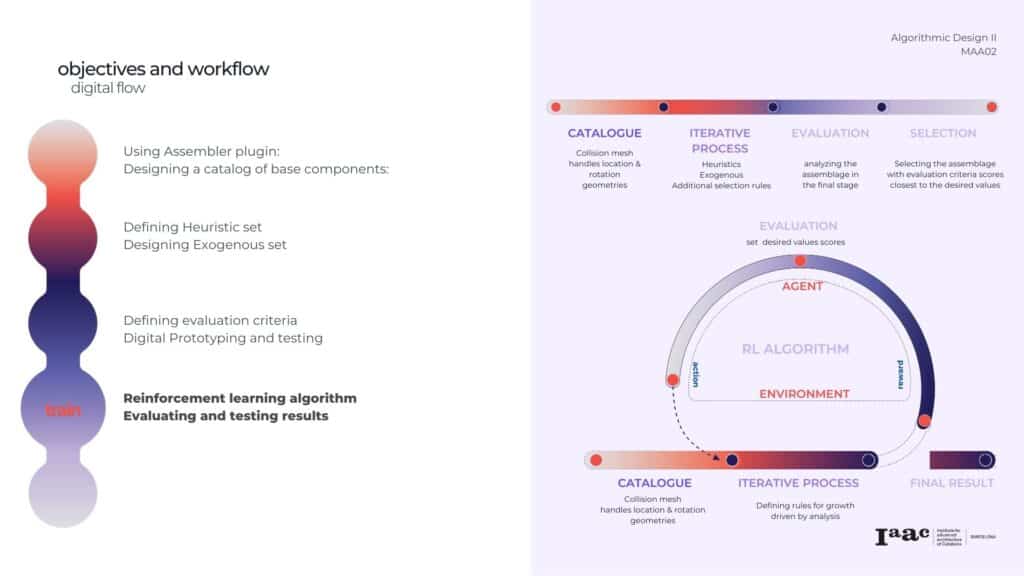

To establish our methodology, we compared several aggregation plugins and found Assembler to be the most suitable, as it reduces randomness and offers greater customization in permutation selection.

This term, we focused on developing our algorithmic flow for creating and evaluating assemblages. Our next step will focus on integrating an ML algorithm into the process and designing a user interface.

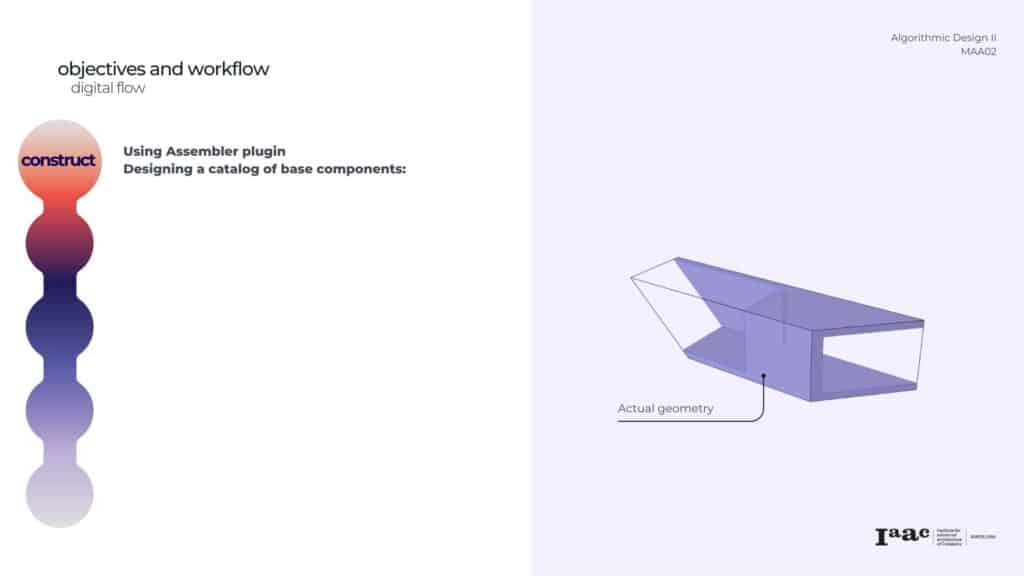

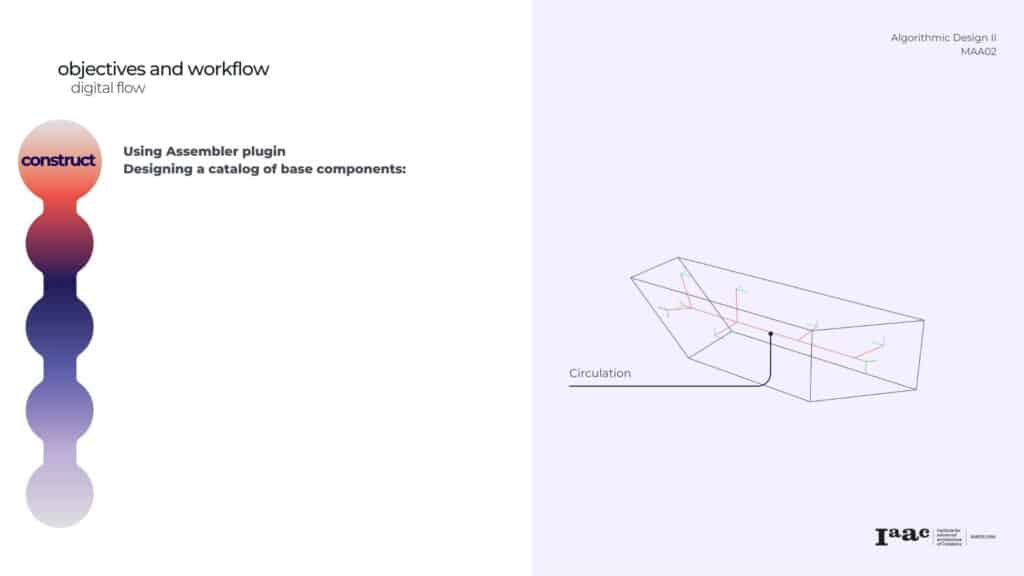

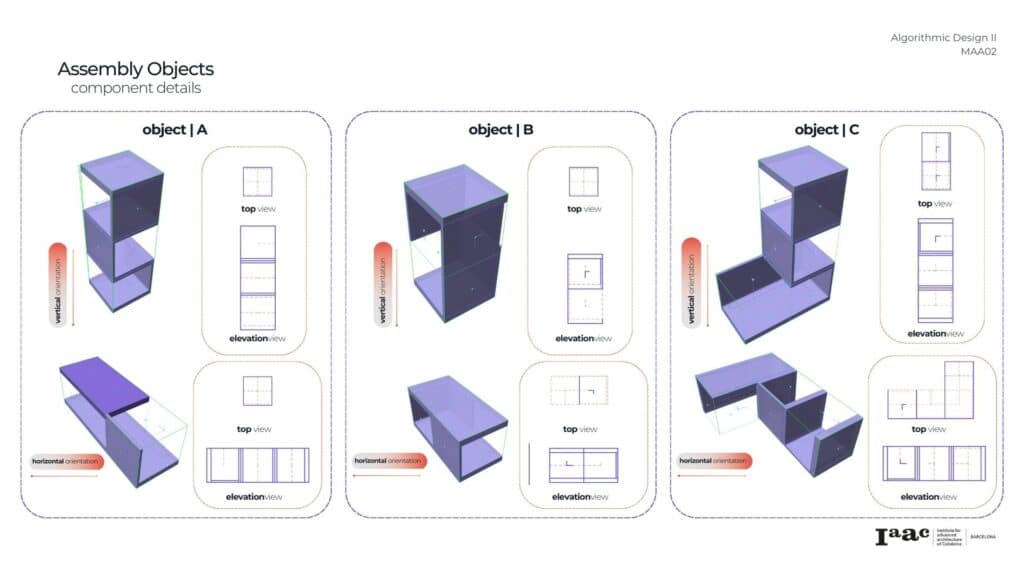

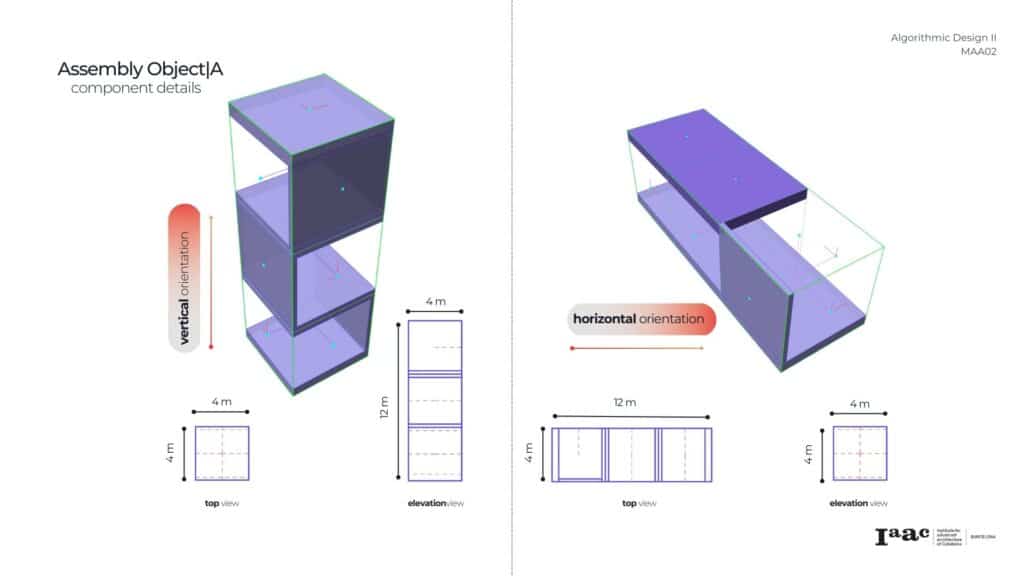

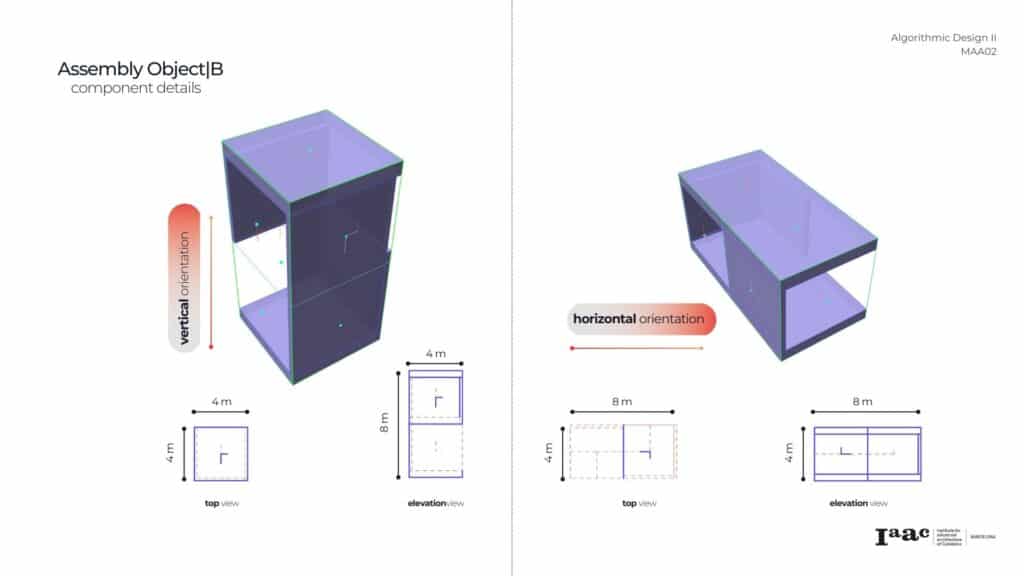

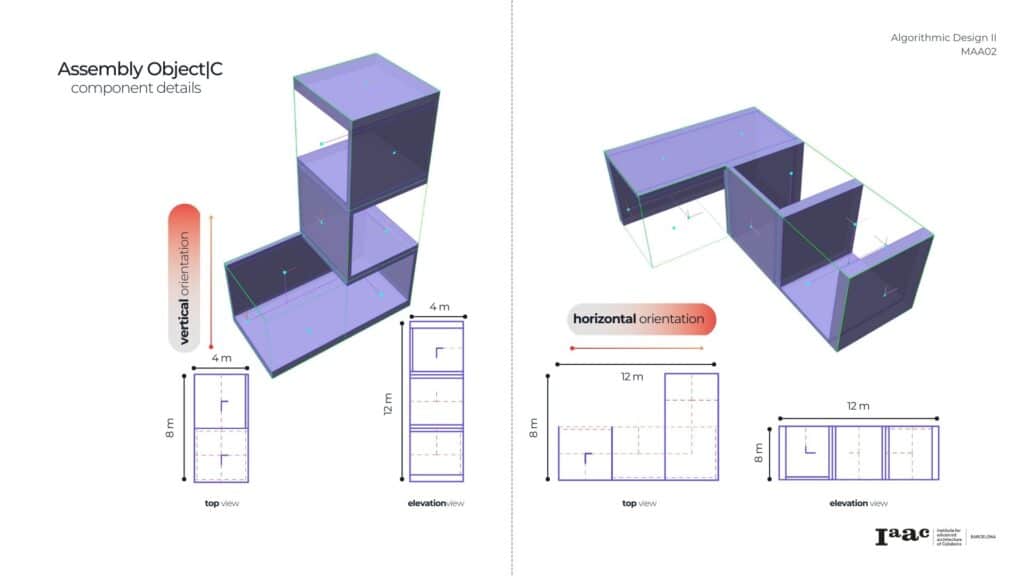

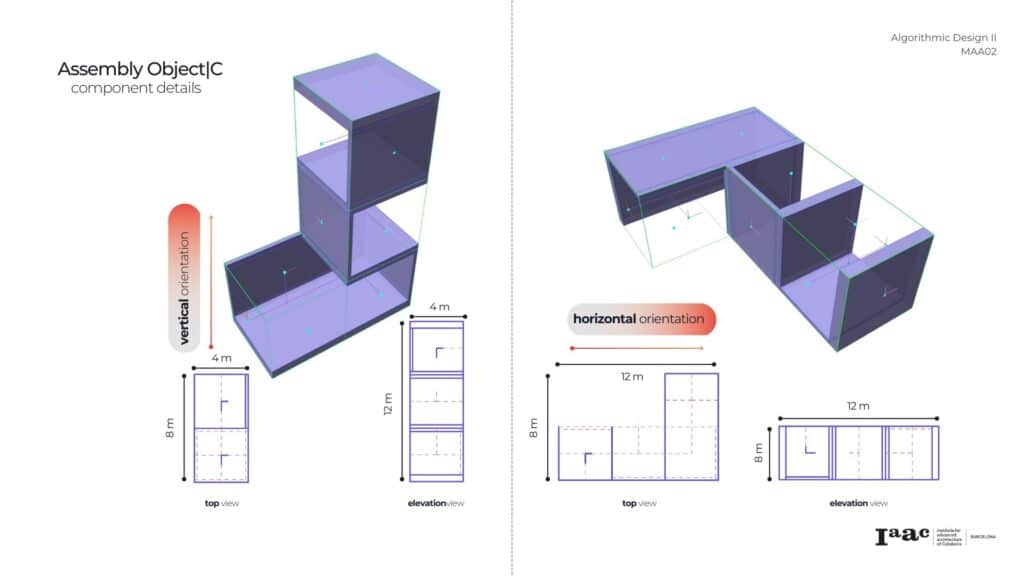

To simplify post-processing and analysis, we decided to work within a voxelised grid of 4 × 4 × 4 m cells. To test our digital pipeline, we generated three base objects, and for each one we defined a basic geometry of walls, floors and ceilings.

The geometry differs depending on the object’s orientation.

Before running the engine that creates the assemblage, we define all possible connections. Here is the heuristic set for combining object A and object B.
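A minimal Python sketch of the grid bookkeeping this implies. It maps world coordinates in metres to integer voxel indices on a 4 m grid, recovers voxel centres, and rotates an object’s occupied voxel offsets in 90° steps about Z, which is one simple way orientation could change the resulting geometry. The L-shaped `object_a` and the helper names are hypothetical.

```python
import math

VOXEL_SIZE = 4.0  # metres, matching the 4 x 4 x 4 m grid described above


def to_voxel(point, size=VOXEL_SIZE):
    """Map a world-space point (x, y, z) in metres to integer voxel indices."""
    return tuple(math.floor(c / size) for c in point)


def voxel_centre(index, size=VOXEL_SIZE):
    """World-space centre of the voxel with integer indices (i, j, k)."""
    return tuple((i + 0.5) * size for i in index)


def rotate_z(offset, quarter_turns):
    """Rotate an integer voxel offset by quarter_turns x 90 degrees about the Z axis."""
    i, j, k = offset
    for _ in range(quarter_turns % 4):
        i, j = -j, i
    return (i, j, k)


# Hypothetical L-shaped object described by the voxel offsets it occupies.
object_a = [(0, 0, 0), (1, 0, 0), (0, 1, 0)]
print(to_voxel((9.5, 3.2, -0.1)))          # (2, 0, -1)
print(voxel_centre((2, 0, -1)))            # (10.0, 2.0, -2.0)
print([rotate_z(o, 1) for o in object_a])  # [(0, 0, 0), (0, 1, 0), (-1, 0, 0)]
```

Working in integer indices keeps comparisons exact, which makes the later adjacency and collision checks cheap.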

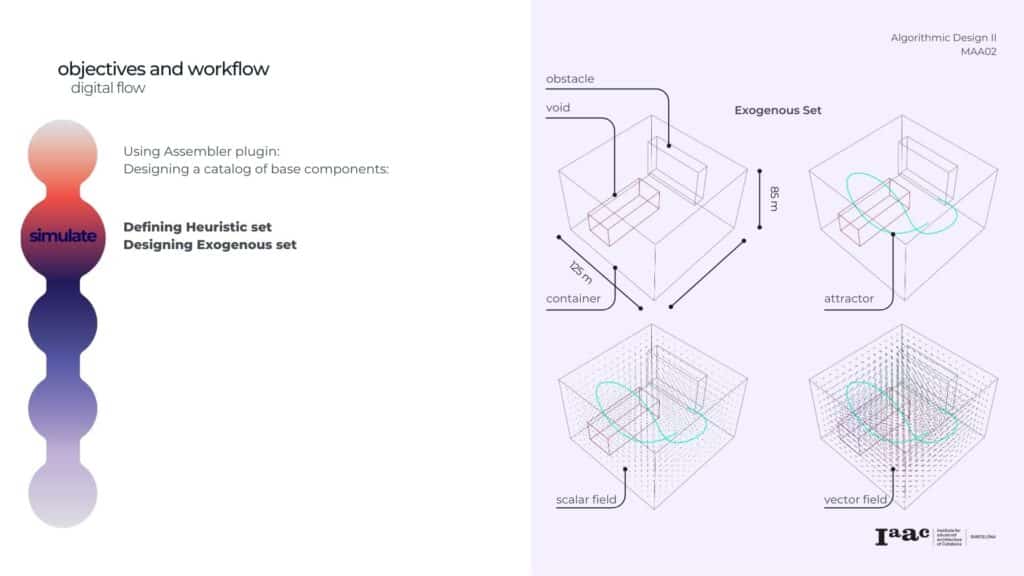
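How a heuristic set like this can drive placement is sketched below in Python. This is not Assembler’s internal logic. The connectors, their offsets and the rule list are hypothetical, and only translations are handled. Each rule pairs a connector on a placed object with a connector on a new object. The new object’s origin follows from requiring the two connecting faces to point at each other.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Connector:
    offset: tuple  # voxel offset of the connecting cell, relative to the object origin
    normal: tuple  # outward unit direction of the connecting face


# Hypothetical connectors for objects A and B (translation only, no rotations).
CONNECTORS = {
    "A": {"east": Connector((1, 0, 0), (1, 0, 0)), "top": Connector((0, 0, 0), (0, 0, 1))},
    "B": {"west": Connector((0, 0, 0), (-1, 0, 0)), "bottom": Connector((0, 0, 0), (0, 0, -1))},
}

# Hypothetical heuristic set: (source object, source connector, target object, target connector).
RULES = [("A", "east", "B", "west"), ("A", "top", "B", "bottom")]


def add(u, v):
    return tuple(a + b for a, b in zip(u, v))


def sub(u, v):
    return tuple(a - b for a, b in zip(u, v))


def placement(origin, rule):
    """Origin at which the target object must sit so its connector meets the source's."""
    src_obj, src_name, dst_obj, dst_name = rule
    src = CONNECTORS[src_obj][src_name]
    dst = CONNECTORS[dst_obj][dst_name]
    if add(src.normal, dst.normal) != (0, 0, 0):
        raise ValueError("connectors must face each other")
    target_cell = add(add(origin, src.offset), src.normal)  # cell just beyond the source face
    return sub(target_cell, dst.offset)


for rule in RULES:
    print(rule, placement((0, 0, 0), rule))
```

Keeping the rules as plain data makes it easy to add, remove or weight combinations without touching the placement logic.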

We also set up an environment to guide the growth, consisting of:

Container mesh

Obstacles and voids

Attractors – in this case a curve, a scalar field defined by the distance from the attractor, and a vector field

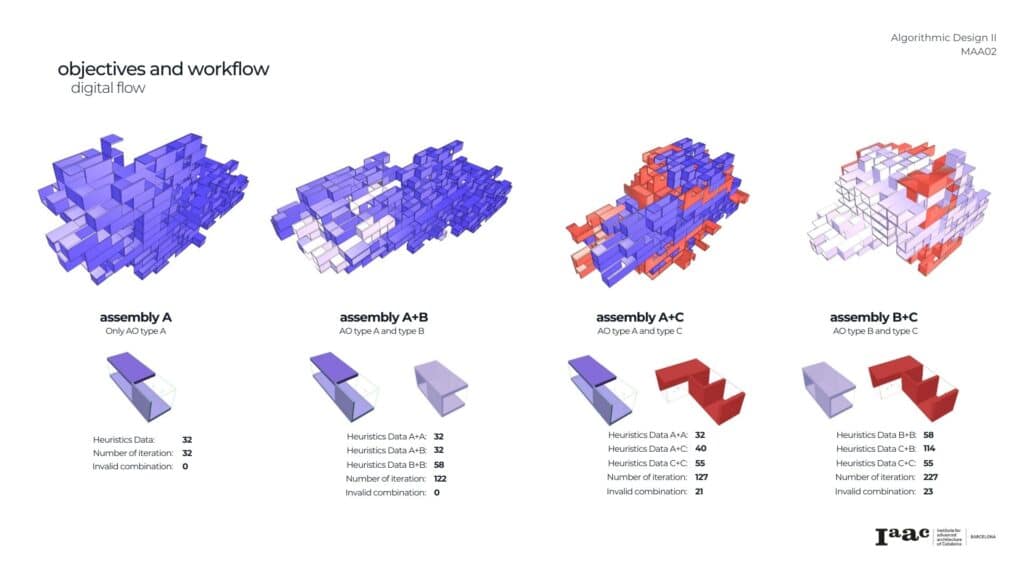

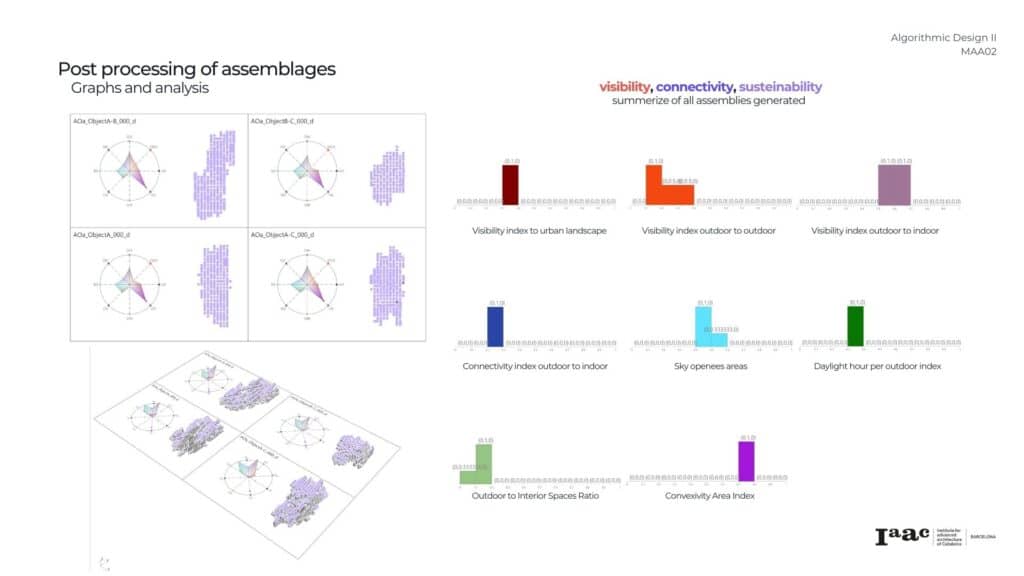
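A minimal Python sketch of how these environment inputs can score candidate voxels. The container mesh is approximated here by an axis-aligned box, and obstacles by a set of blocked voxel indices; both are simplifying assumptions. The attractor curve is a polyline. The scalar field decays linearly from 1 on the curve to 0 at a hypothetical `falloff` distance, and the vector field is the unit direction towards the nearest point on the curve.

```python
import math

VOXEL = 4.0  # metres


def centre(cell):
    return tuple((c + 0.5) * VOXEL for c in cell)


def closest_on_polyline(p, polyline):
    """Closest point to p on a polyline given as a list of 3D vertices."""
    best = None
    for a, b in zip(polyline, polyline[1:]):
        ab = [b[i] - a[i] for i in range(3)]
        denom = sum(c * c for c in ab)
        t = 0.0 if denom == 0 else sum((p[i] - a[i]) * ab[i] for i in range(3)) / denom
        t = max(0.0, min(1.0, t))  # clamp to the segment
        q = tuple(a[i] + t * ab[i] for i in range(3))
        if best is None or math.dist(p, q) < math.dist(p, best):
            best = q
    return best


def scalar_field(p, curve, falloff):
    """Attraction strength: 1 on the curve, decaying linearly to 0 at `falloff` metres."""
    return max(0.0, 1.0 - math.dist(p, closest_on_polyline(p, curve)) / falloff)


def vector_field(p, curve):
    """Unit vector from p towards the nearest point on the attractor curve."""
    q = closest_on_polyline(p, curve)
    d = math.dist(p, q)
    return (0.0, 0.0, 0.0) if d == 0 else tuple((q[i] - p[i]) / d for i in range(3))


def candidate_score(cell, box_min, box_max, obstacles, curve, falloff=20.0):
    """None if the cell is outside the container or blocked, otherwise its attraction."""
    p = centre(cell)
    if cell in obstacles or not all(box_min[i] <= p[i] <= box_max[i] for i in range(3)):
        return None
    return scalar_field(p, curve, falloff)


curve = [(0.0, 0.0, 2.0), (40.0, 0.0, 2.0)]
box_min, box_max = (0.0, -20.0, 0.0), (40.0, 20.0, 40.0)
obstacles = {(3, 1, 0)}
print(candidate_score((2, 1, 0), box_min, box_max, obstacles, curve))  # about 0.7
print(candidate_score((3, 1, 0), box_min, box_max, obstacles, curve))  # None (obstacle)
print(vector_field(centre((2, 1, 0)), curve))                           # (0.0, -1.0, 0.0)
```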

To test our workflow, we ran four assemblages of 300 iterations each, combining different components.

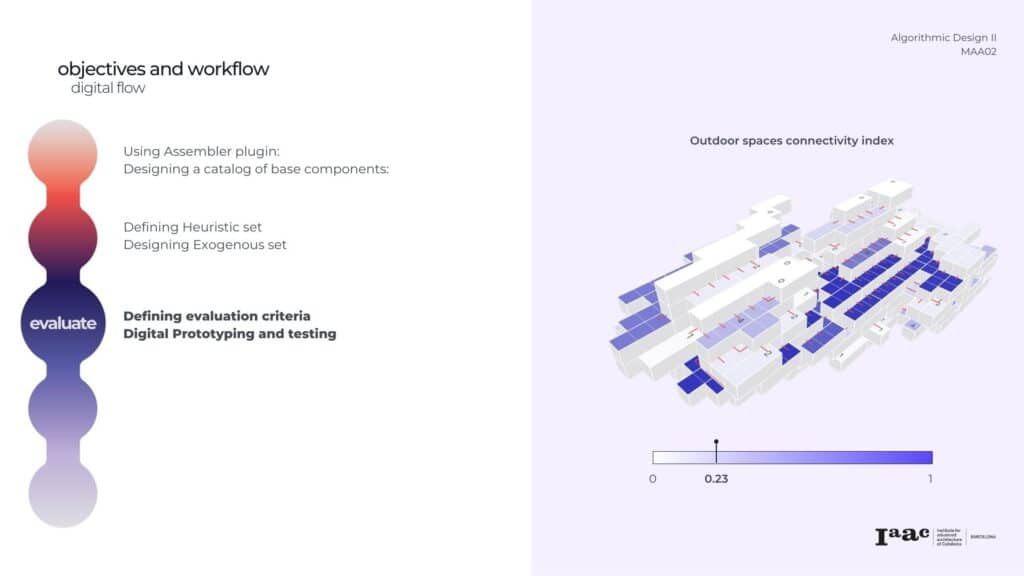

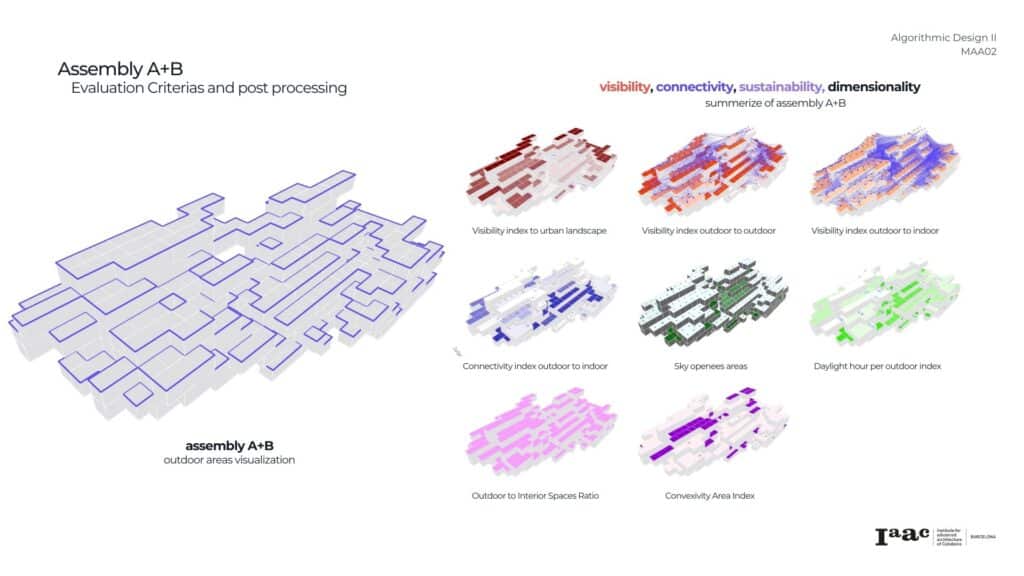

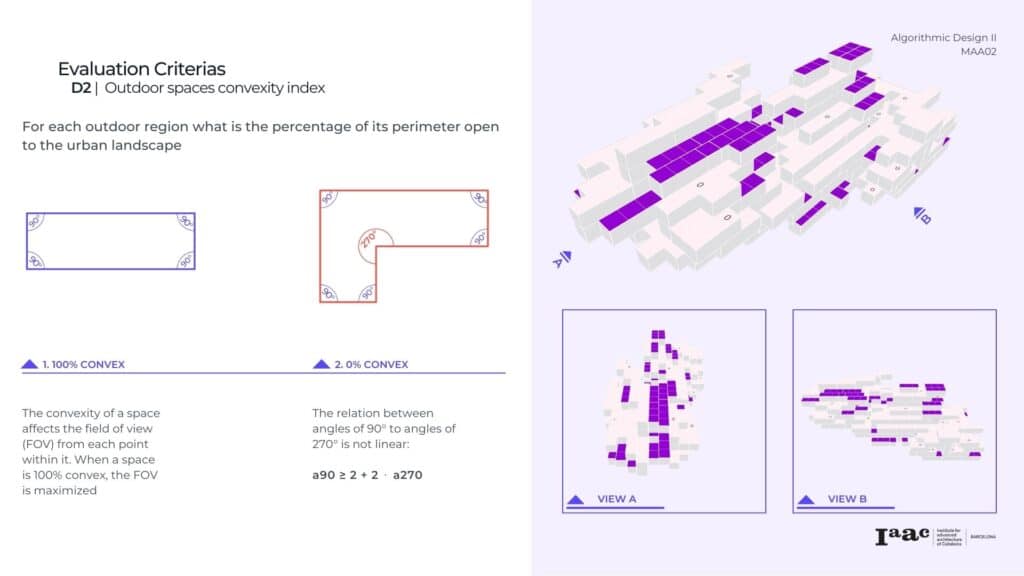
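The structure of such a test run can be sketched as a simplified voxel aggregation loop in Python. This is not Assembler’s algorithm. Each component is reduced to a single voxel, the container is a hypothetical 10 × 10 × 6 box of voxel indices, and the attractor is a hypothetical point. At every iteration, the free cell adjacent to the assemblage that lies closest to the attractor is added. A small seeded noise term makes each of the four runs different.

```python
import math
import random

NEIGHBOURS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def grow(iterations, seed, bounds=(10, 10, 6), attractor=(9, 9, 0)):
    """Greedy voxel aggregation towards an attractor, starting from the origin cell."""
    rng = random.Random(seed)
    occupied = {(0, 0, 0)}
    for _ in range(iterations):
        frontier = {
            (x + dx, y + dy, z + dz)
            for (x, y, z) in occupied
            for (dx, dy, dz) in NEIGHBOURS
        }
        candidates = sorted(
            c for c in frontier - occupied
            if all(0 <= c[i] < bounds[i] for i in range(3))
        )
        if not candidates:
            break  # the container is full
        # Closer to the attractor scores higher; the noise varies the result per seed.
        best = max(candidates, key=lambda c: -math.dist(c, attractor) + rng.uniform(0.0, 0.5))
        occupied.add(best)
    return occupied


runs = [grow(300, seed) for seed in range(4)]  # four assemblages, 300 iterations each
print([len(r) for r in runs])                  # [301, 301, 301, 301]
```

Every run keeps the assemblage face-connected by construction, because candidates are drawn only from cells touching already placed voxels.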

Our main work focused on defining the evaluation criteria and determining which analyses we want to generate.

As Rem Koolhaas said, “Architecture is not about the building itself, but what happens in the space it creates. It is the activities, the encounters, and the social relations that make space come alive.”

Recognizing the potential in the in-between spaces formed by the assemblages, we have chosen to focus our attention primarily on these areas for now.

A few things to consider for the evaluation process: a computationally lightweight flow, scalability for assessing different configurations, and normalized data.
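A lightweight way to meet these requirements is sketched below in Python. It assumes a hypothetical definition of an in-between space: an empty voxel that touches at least two occupied voxels through its faces. Counting neighbours is linear in the number of occupied voxels, so it scales to many configurations. Min-max normalisation then puts a metric from different configurations on a common 0 to 1 scale.

```python
NEIGHBOURS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def in_between_cells(occupied, min_neighbours=2):
    """Empty cells touching at least `min_neighbours` occupied cells (hypothetical definition)."""
    counts = {}
    for (x, y, z) in occupied:
        for dx, dy, dz in NEIGHBOURS:
            cell = (x + dx, y + dy, z + dz)
            if cell not in occupied:
                counts[cell] = counts.get(cell, 0) + 1
    return {cell for cell, n in counts.items() if n >= min_neighbours}


def min_max_normalise(values):
    """Rescale values to [0, 1]; a constant list maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


print(in_between_cells({(0, 0, 0), (2, 0, 0)}))  # {(1, 0, 0)}
print(min_max_normalise([3, 5, 9]))
```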

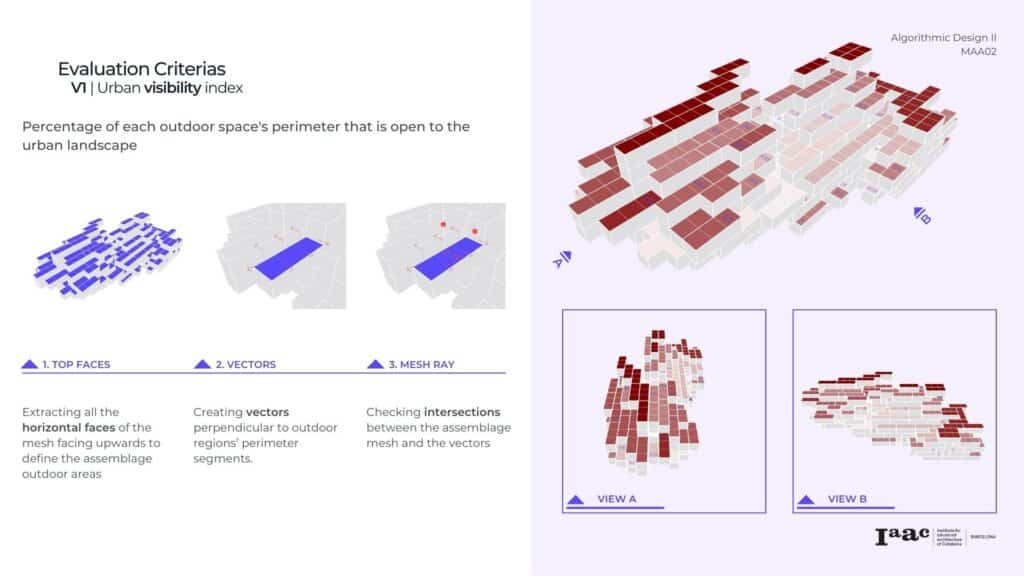

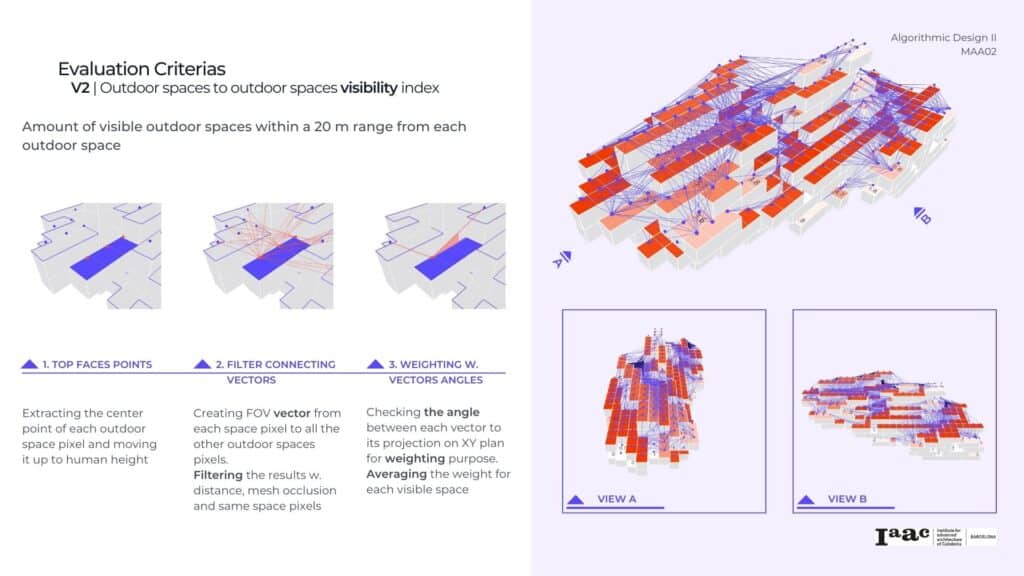

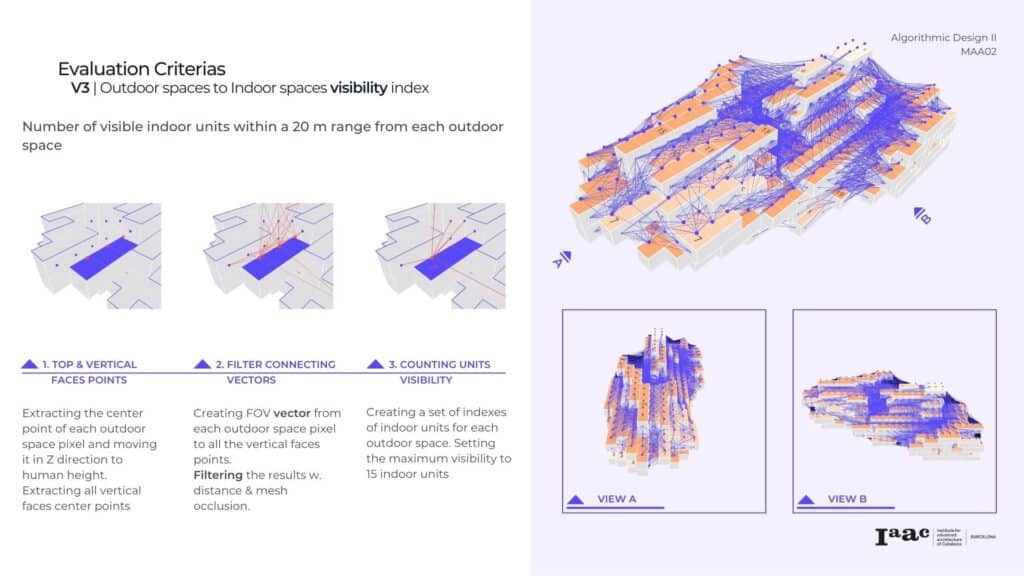

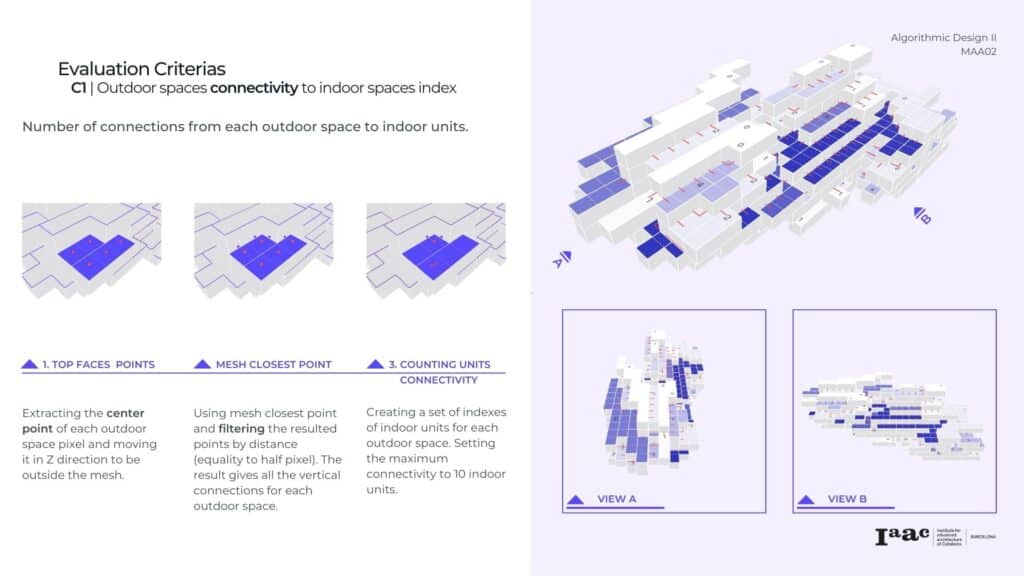

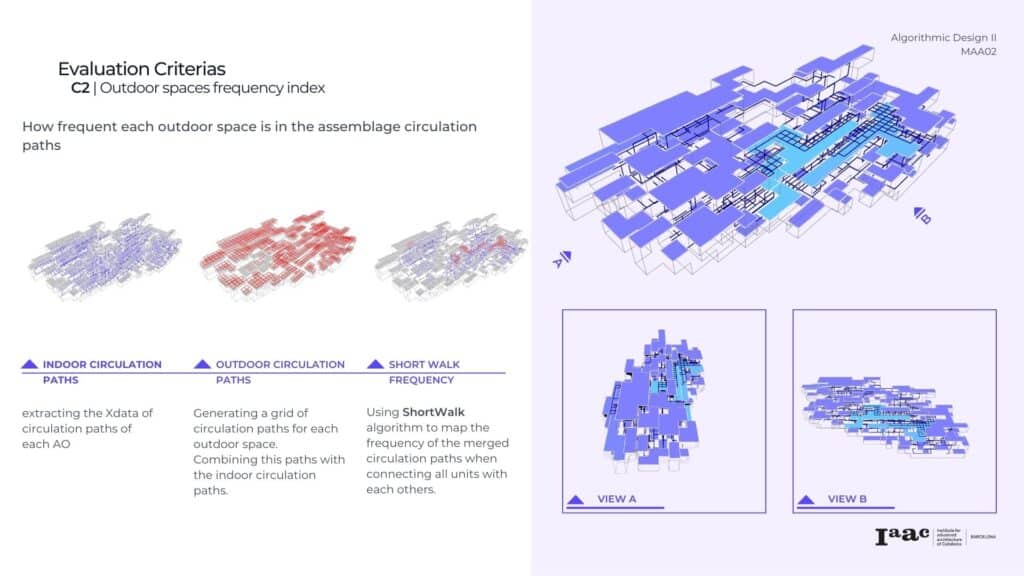

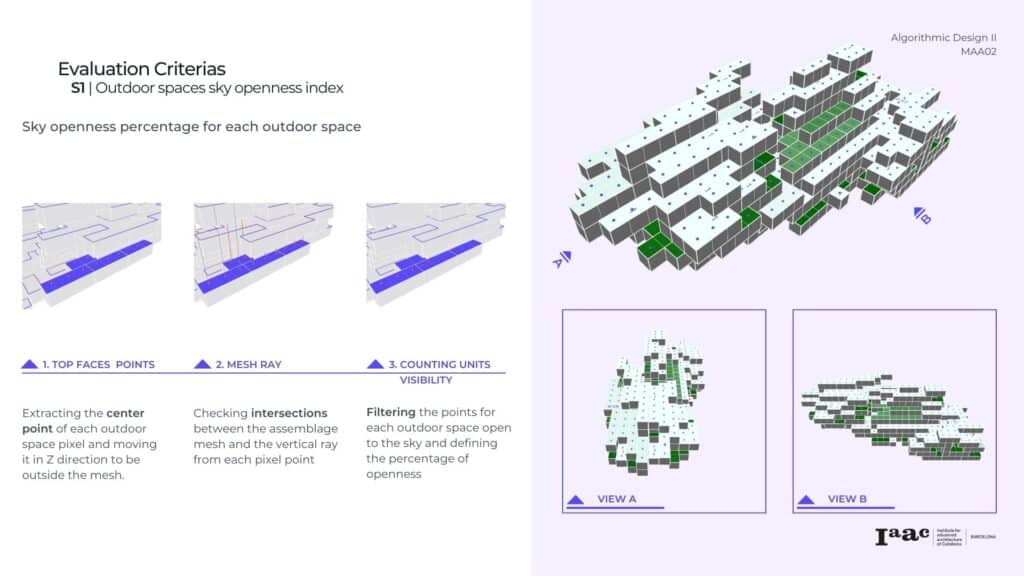

We generated nine evaluation indexes and classified them into four categories:

Visibility

Connectivity

Sustainability

Dimensionality

The process we just presented is linear. To reach a specific set of index values, a large number of assemblages must be generated and the best candidate picked.

By introducing a reinforcement learning (RL) model into the iterative process, we can define the target scores we aim for and train the model to reach them.

Unlike many other deep learning approaches, RL is not based on a preexisting dataset. Instead, it creates a feedback loop in which the algorithm learns its behavior from the experience an agent accumulates while interacting with an environment, trying to maximize the rewards it receives after its actions.
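The generate-and-select loop can be sketched in Python as follows. The `evaluate` function is a hypothetical stand-in for the real pipeline: it returns seeded random scores for the four categories. The target profile is also hypothetical. The best candidate is the one whose scores lie closest (Euclidean distance) to the target, and the cost of the approach grows with the number of assemblages that must be generated.

```python
import math
import random

CATEGORIES = ("visibility", "connectivity", "sustainability", "dimensionality")


def evaluate(assemblage_seed):
    """Hypothetical stand-in for the evaluation pipeline: normalised category scores."""
    rng = random.Random(assemblage_seed)
    return {c: rng.random() for c in CATEGORIES}


def distance_to_target(scores, target):
    return math.sqrt(sum((scores[c] - target[c]) ** 2 for c in CATEGORIES))


def pick_best(n_candidates, target):
    """Generate-and-select: evaluate many assemblages, keep the one closest to the target."""
    candidates = [(seed, evaluate(seed)) for seed in range(n_candidates)]
    return min(candidates, key=lambda sc: distance_to_target(sc[1], target))


target = {"visibility": 0.8, "connectivity": 0.6, "sustainability": 0.7, "dimensionality": 0.5}
seed, scores = pick_best(200, target)
print(seed, round(distance_to_target(scores, target), 3))
```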
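To illustrate the feedback loop, here is a tabular Q-learning sketch in Python. Q-learning is one standard RL method, chosen here for brevity; it is not necessarily the model this project will adopt. The environment is hypothetical. Over six steps the agent chooses between a "closed" and an "open" component, where each open component adds one unit to a toy score. At the end of the episode, the reward is minus the distance between the final score and a desired score of 4. No dataset is involved: the agent learns only from the rewards its own actions produce.

```python
import random
from collections import defaultdict

N_STEPS = 6       # components placed per episode (hypothetical)
TARGET = 4        # desired score (hypothetical)
ACTIONS = (0, 1)  # 0 = "closed" component (+0), 1 = "open" component (+1)


def step(state, action):
    """Deterministic toy environment: returns (next_state, reward, done)."""
    t, score = state
    nxt = (t + 1, score + action)
    done = nxt[0] == N_STEPS
    reward = -abs(nxt[1] - TARGET) if done else 0.0
    return nxt, reward, done


def greedy(q, state):
    return max(ACTIONS, key=lambda a: q[(state, a)])


def train(episodes=2000, alpha=0.5, gamma=1.0, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = defaultdict(float)  # Q-values start at 0, which is optimistic because rewards are <= 0
    for _ in range(episodes):
        state, done = (0, 0), False
        while not done:
            # Epsilon-greedy: mostly exploit current estimates, sometimes explore.
            action = rng.choice(ACTIONS) if rng.random() < epsilon else greedy(q, state)
            nxt, reward, done = step(state, action)
            td_target = reward if done else reward + gamma * max(q[(nxt, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (td_target - q[(state, action)])
            state = nxt
    return q


def rollout(q):
    """Follow the learned greedy policy and return the final score."""
    state, done = (0, 0), False
    while not done:
        state, _, done = step(state, greedy(q, state))
    return state[1]


q = train()
print(rollout(q))  # final score reached by the greedy policy
```

In a real assemblage setting, the state would need to encode the growing geometry and the reward would come from the evaluation indexes. That is why deep RL, rather than a lookup table, is usually used at that scale.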

Our final goal is to develop an interface that makes the entire workflow and its data representation accessible and user-friendly.