A sustainability CoPilot

Gaia is an intelligent design assistant, a copilot that supports architects in navigating the early stages of design with real-time environmental feedback. Named after the ancient Greek personification of the Earth, Gaia reflects both the ecological focus of the tool and its role as a guiding presence in the creative process.

The term copilot emphasizes collaboration: derived from “co-” (together) and “pilot” (to steer), it captures Gaia’s purpose, which is to steer alongside the designer, not in place of them. With Gaia, sustainability is no longer a post-design concern; it is part of the journey from the very first sketch.

Gaia: the personification of Earth in Greek mythology.

Copilot: the term ‘copilot’ combines ‘co-’, meaning together or jointly, with ‘pilot’, which originates from the Latin ‘pīlōta’, meaning the person who steers a ship.

The Problem

In architecture, the early design stage holds the greatest potential for shaping a building’s environmental impact. Yet paradoxically, this is also when data is most limited, feedback is slow, and decisions are made under the most uncertainty. As a result, critical choices, like building form, material systems, and envelope strategies, are often based on intuition or precedent rather than measurable performance.

While simulation tools exist, they tend to be fragmented, technically complex, or introduced too late in the process to meaningfully influence design. Environmental consultants are often brought in after schematic design, when many key decisions are already locked in. This delay reduces the opportunity to lower carbon, improve efficiency, or explore alternatives with better long-term outcomes.

Architects are asked to design with sustainability in mind, yet they often lack the tools to test, iterate, and understand the implications of their ideas in real time. What’s missing is a way to bring performance feedback into the design process at the moment when it’s most useful: as decisions are being made.

This is the gap gAIa is designed to fill.

Deadlines

When we start working on architectural projects, one of the biggest problems is the limited time available for actual design tasks. Deadlines pile up within very short periods, and we often find ourselves immersed in a multitasking frenzy.

Early Design Stages

The early design stage has the greatest influence on sustainability outcomes, yet it is also when data is most uncertain or unavailable (Kanyilmaz et al., 2023). This is an opportunity to close the knowledge gap early and to reduce the cost and effort of changes in the later stages of typical design frameworks.

What if we could pack all of this under the same hood?

The Solution: Let’s Meet gAIa

no Grasshopper, no coding, no headache

Chat Tab

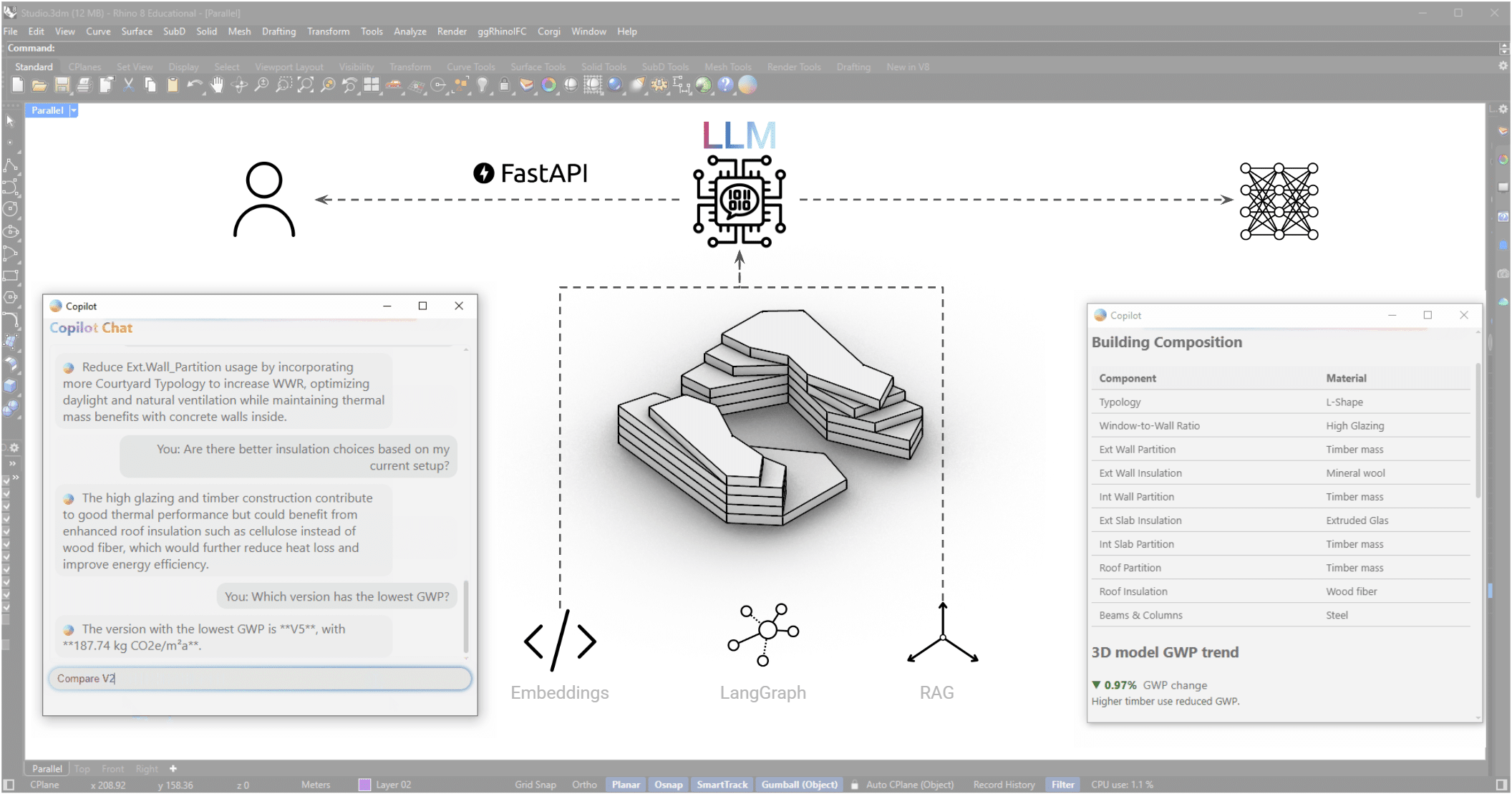

The Chat Tab in Gaia serves as the primary interface between the designer and the system’s underlying intelligence. Powered by a Large Language Model (LLM), it allows users to communicate with Gaia in plain language, with no scripting or technical commands required.

Users can:

- Ask direct questions (e.g., “What is the embodied carbon of this version?”)

- Request changes (e.g., “Switch roof insulation to cork”)

- Seek suggestions (e.g., “How can I reduce operational energy without changing geometry?”)

- Compare design options (e.g., “Which version has lower GWP?”)

Ask Anything….

Data Tab

The Data Tab displays live information to the user:

- Action buttons let the user save iterations, clear them, export a report, or open the web app.

- The material composition of the current iteration shows the material layers used in the design.

- Visual and text-based explanations of GWP trends keep the user aware of GWP performance at each design step.

- Plots for each saved iteration present further KPIs, such as GWP and operational carbon, so that iterations can be compared in real time.

- Finally, a visual gallery sits at the end of the Data Tab.

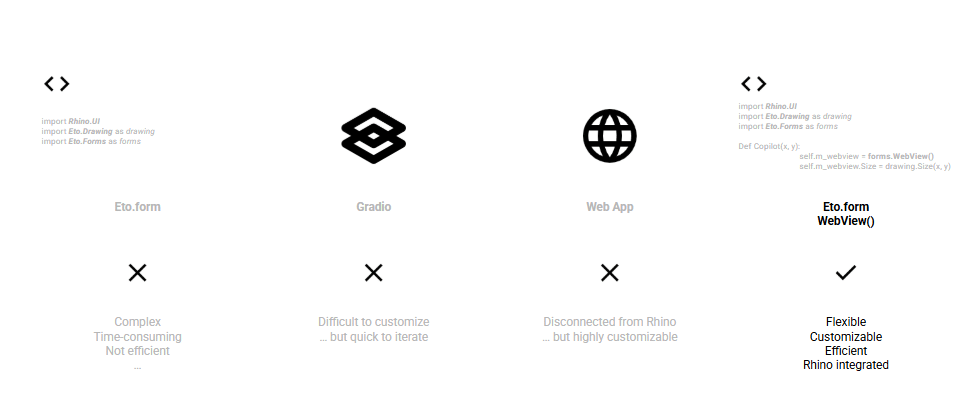

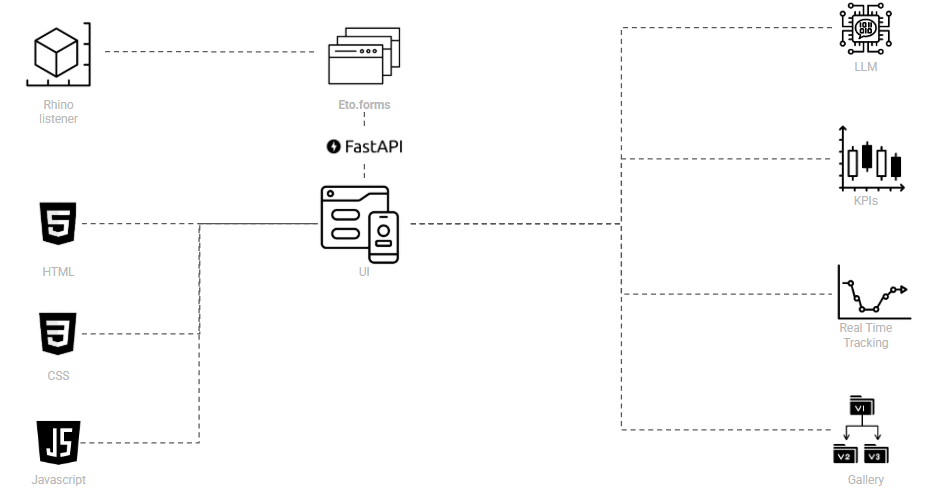

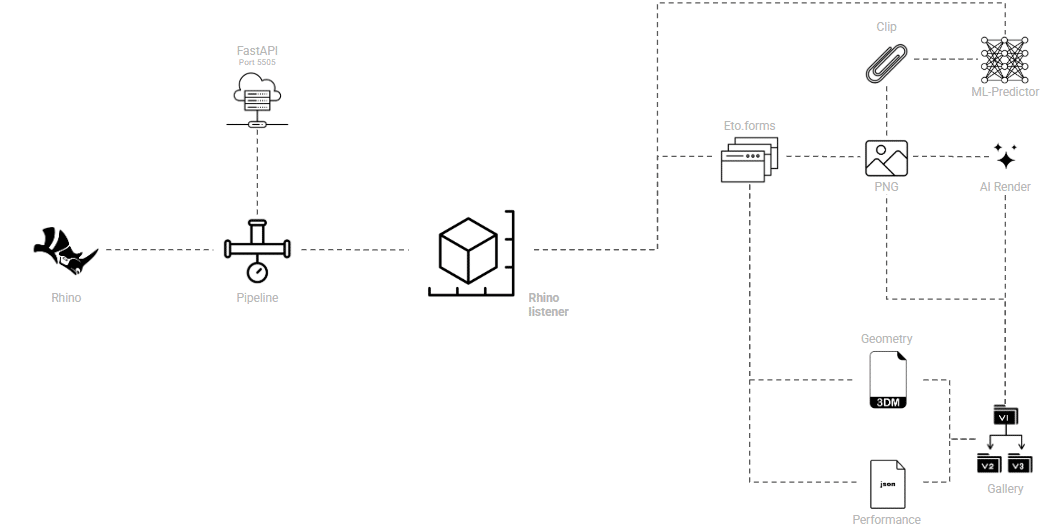

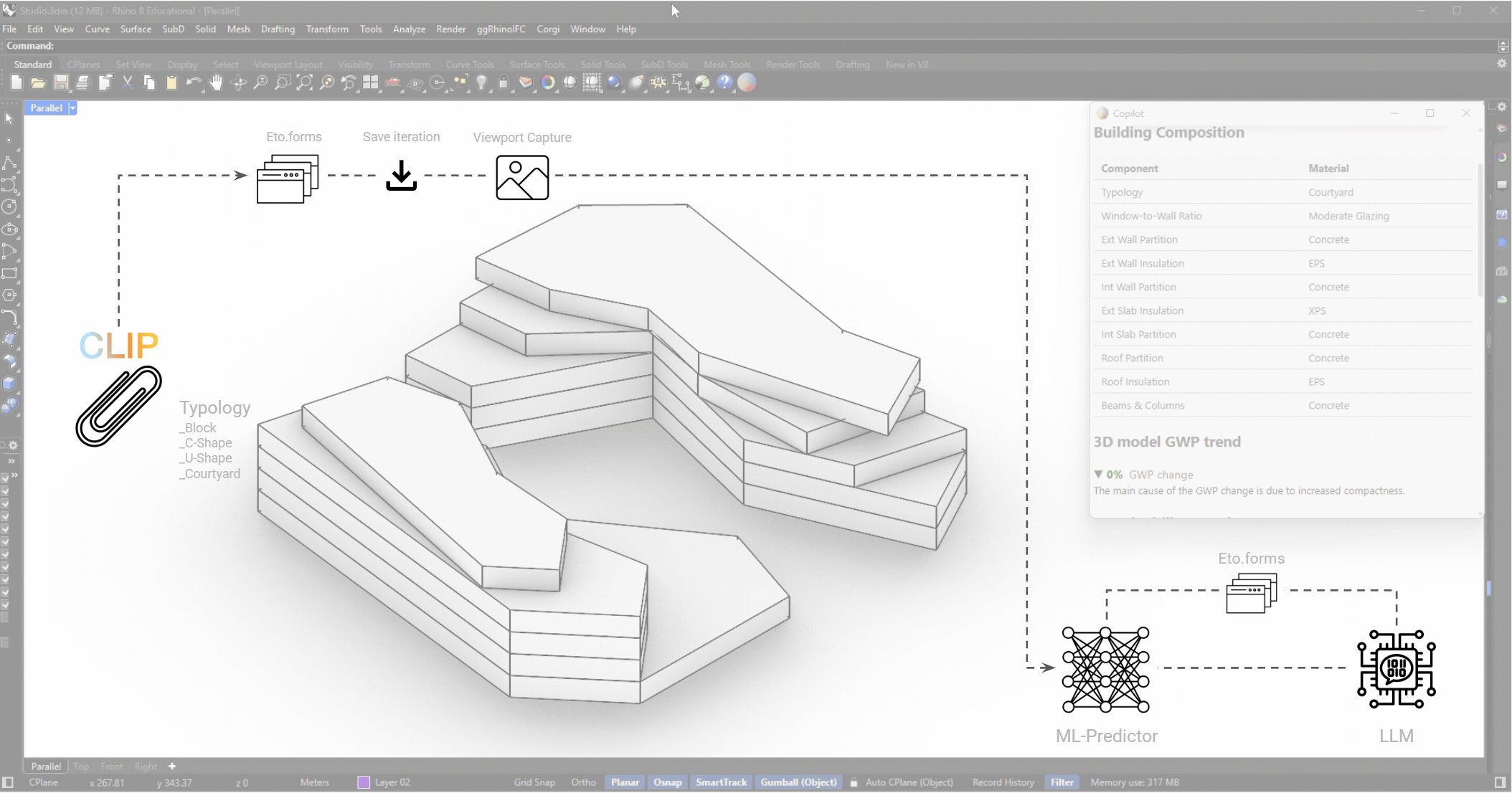

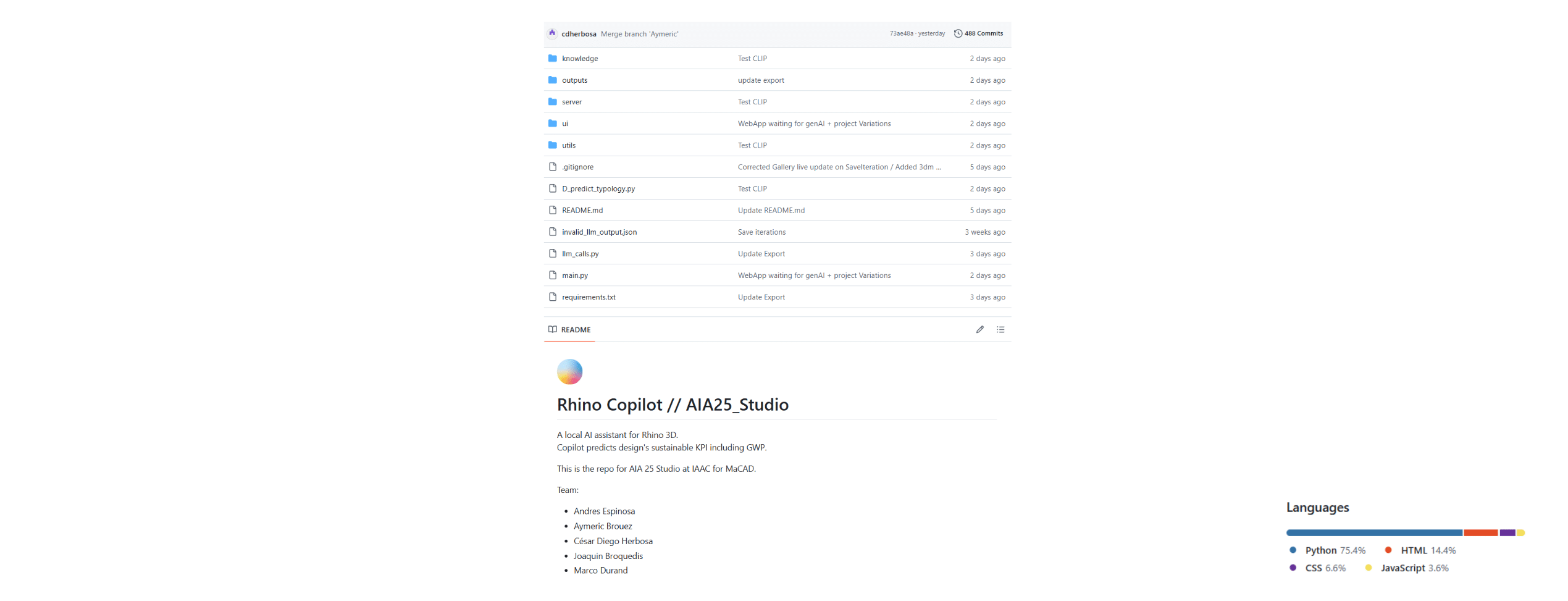

To enhance the user experience, the UI is integrated directly into Rhino. It combines Eto.Forms with web technologies to ensure visual consistency and flexibility.

In the backend, the Rhino listener module communicates with the UI through Eto.Forms, while HTML, CSS, and JavaScript shape the front end. The result is an integrated LLM chat, KPIs displayed in plots with real-time tracking, and a gallery of saved images and renders.

The interface is the basis for every interaction with gAIa, which makes it central to the user experience and to the success of the copilot. During our iterative development of the UI we tried several approaches: Gradio, a full Eto.Forms UI, and a full web UI. Ultimately, we combined the power of Eto.Forms, natively integrated in Rhinoceros 3D, with the flexibility of its WebView control. This makes the UI more powerful, flexible, adaptable, and customizable than previous iterations.
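One way to picture the bridge between the Rhino listener and the web-based part of the UI is as a small JSON message that the Python side serializes and hands to the page. The sketch below is an illustration under assumptions: the function name `build_ui_update`, the JavaScript hook `window.gaiaUpdate`, and the payload fields are hypothetical and are not taken from the gAIa codebase.

```python
import json


def build_ui_update(kind, payload):
    """Return a JavaScript snippet that passes one JSON message to the page.

    `window.gaiaUpdate` is a hypothetical hook that the HTML/JS side would
    define; it is not part of Eto.Forms or of gAIa's published code.
    """
    message = {"kind": kind, "payload": payload}
    # json.dumps escapes non-ASCII by default, so the output is safe to
    # embed as a JavaScript object literal.
    return "window.gaiaUpdate({});".format(json.dumps(message, sort_keys=True))


# Hypothetical KPI update for the third saved iteration.
script = build_ui_update("kpi", {"gwp_kgco2e_m2": 412.5, "iteration": 3})
print(script)
```

Inside Rhino, a string like this could be passed to the web view's script-execution call; that wiring is left out so the snippet runs outside Rhino.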

The Core

gAIa can assess the sustainability aspects of design decisions in real time. For example, a GWP trend makes users aware of the impact of their choices at every step.
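The GWP trend can be thought of as a step-by-step comparison of each design state with the previous one. The following is a minimal sketch of that idea; the function name `gwp_trend` and the sample values are assumptions for illustration, not gAIa's actual implementation or data.

```python
def gwp_trend(history):
    """Describe how GWP changed at each design step relative to the previous one."""
    notes = []
    for step in range(1, len(history)):
        prev, curr = history[step - 1], history[step]
        change_pct = (curr - prev) / prev * 100.0
        if change_pct < 0:
            direction = "down"
        elif change_pct > 0:
            direction = "up"
        else:
            direction = "flat"
        notes.append((step, direction, round(change_pct, 1)))
    return notes


# Hypothetical GWP values (kgCO2e/m2) for four consecutive design steps.
history = [500.0, 450.0, 450.0, 495.0]
for step, direction, pct in gwp_trend(history):
    print("step {}: {} ({:+.1f}%)".format(step, direction, pct))
```

A text explanation in the UI could be generated from these tuples, while the same numbers feed the plot.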

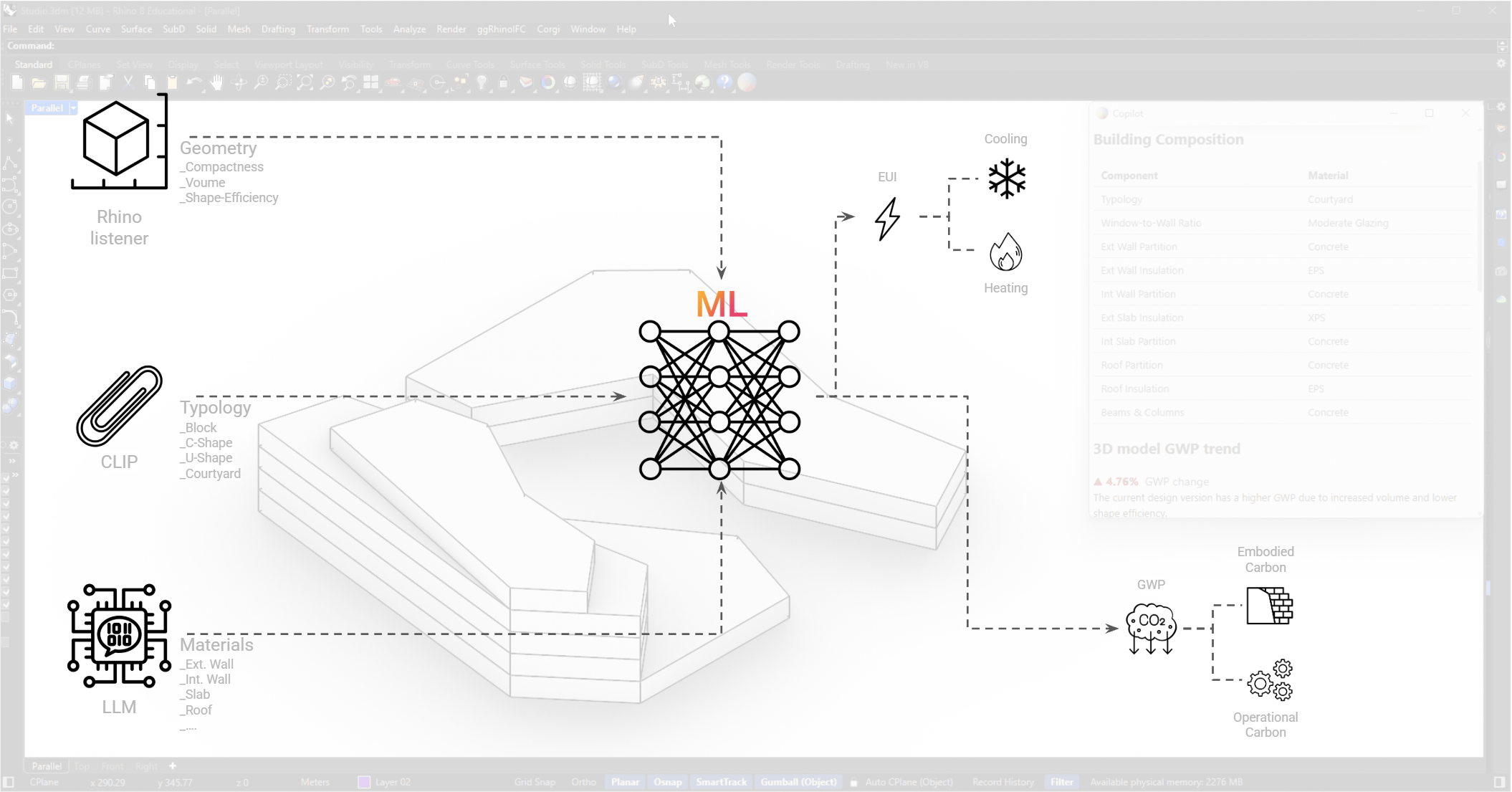

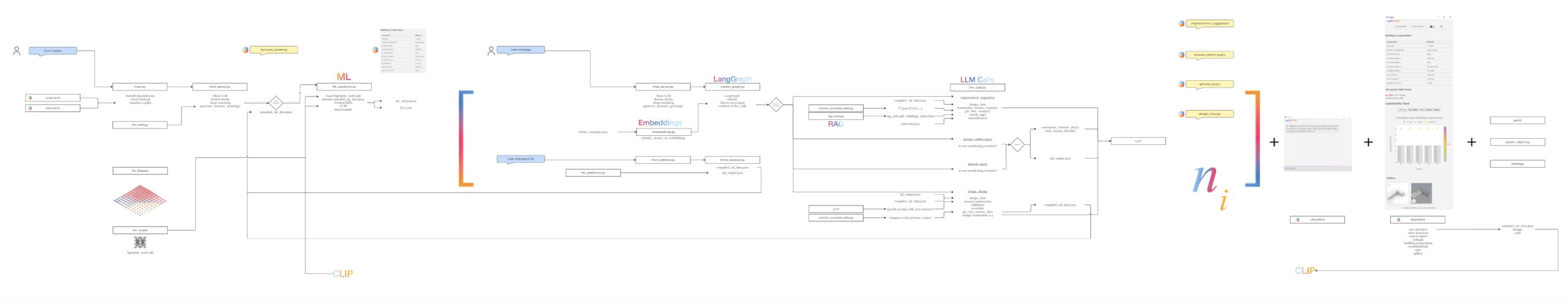

The ML

To spare the designer from running complex building simulations, a machine learning model was trained.

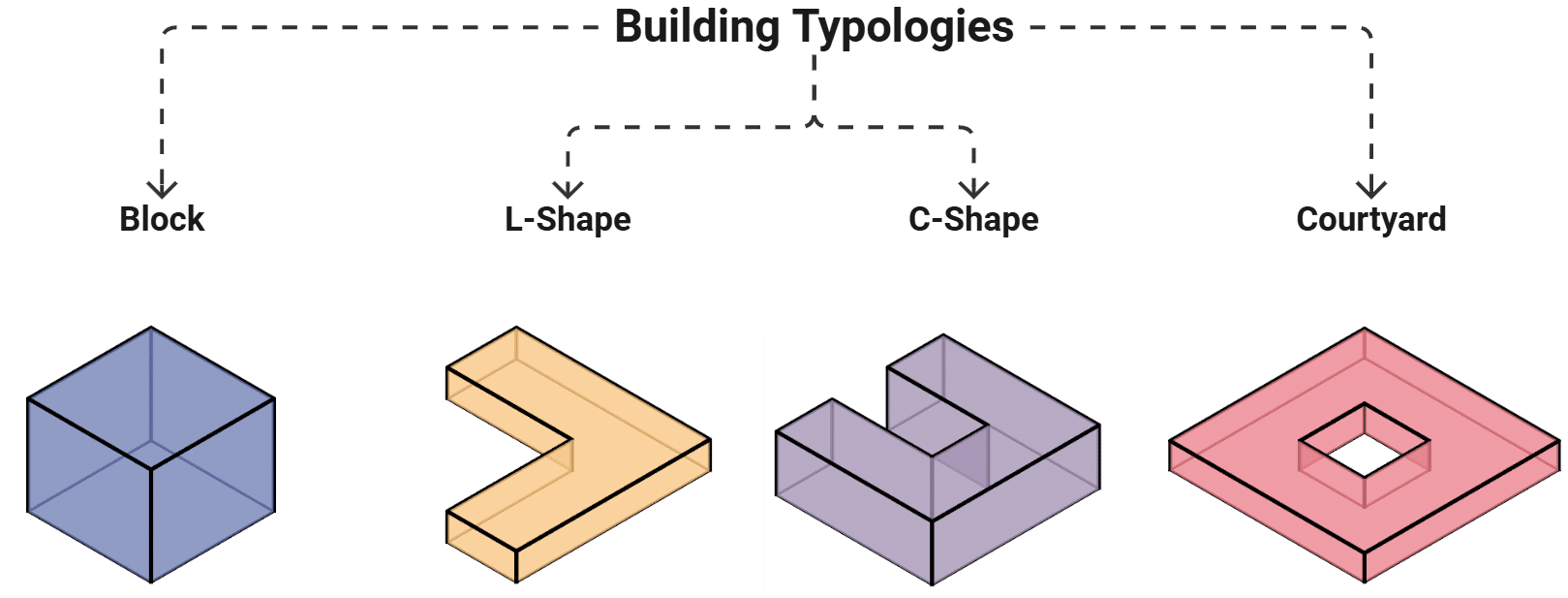

This model receives geometric information from Rhino, typology data decoded via image-to-image comparison with CLIP, and user-driven material inputs through the LLM integrated into the Chat Tab.

This approach allows the prediction of energy-related metrics (EUI, cooling demand, heating demand) and carbon-related metrics (embodied, operational).
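To make the three input sources concrete, the sketch below flattens geometry, typology, and material inputs into a single feature vector of the kind a predictive model could consume. The field names, the choice of U-values as material descriptors, and the typology list are assumptions for illustration; the actual features used by gAIa are not described in this post.

```python
from dataclasses import dataclass

# Labels mentioned in this post; the real label set may differ.
TYPOLOGIES = ["block", "courtyard", "L-shape"]


@dataclass
class DesignState:
    floor_area_m2: float  # from the Rhino geometry
    height_m: float       # from the Rhino geometry
    typology: str         # label decoded by CLIP
    wall_u_value: float   # material choice made through the chat
    roof_u_value: float   # material choice made through the chat


def to_feature_vector(state):
    """Flatten geometry, typology (one-hot) and material inputs into one list."""
    one_hot = [1.0 if state.typology == t else 0.0 for t in TYPOLOGIES]
    geometry = [state.floor_area_m2, state.height_m]
    materials = [state.wall_u_value, state.roof_u_value]
    return geometry + one_hot + materials


# Hypothetical design state.
state = DesignState(1200.0, 18.0, "courtyard", 0.25, 0.18)
vector = to_feature_vector(state)
print(vector)
```

A trained regressor would take a vector like this and return the energy and carbon metrics; the model itself is omitted here.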

The Dataset

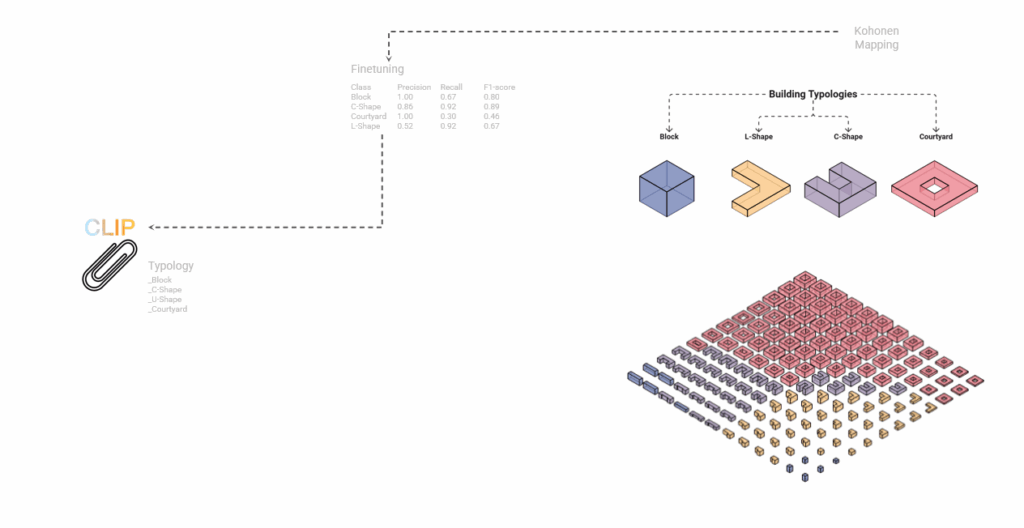

Kohonen Mapping for Geometry Set

Energy Simulations + LCA for Performance

The dataset used to train the model is derived from a set of geometries produced via Kohonen mapping, capturing the diversity of different typologies. Energy simulations are run on the entire set, varying the materiality and energy-related characteristics. This creates a dataset of almost 93,000 data points to train the ML model.
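For readers unfamiliar with Kohonen mapping, the sketch below trains a very small self-organizing map in plain Python. It only illustrates the general technique: the two-value geometry descriptors, the 3x3 grid, and the training settings are assumptions and do not reflect how the gAIa geometry set was actually generated.

```python
import math
import random


def best_matching_unit(weights, sample):
    """Return the grid coordinate whose weight vector is closest to the sample."""
    return min(
        weights,
        key=lambda node: sum((w - s) ** 2 for w, s in zip(weights[node], sample)),
    )


def train_som(samples, width, height, epochs=40, lr0=0.5, seed=0):
    """Train a small self-organizing map on feature vectors scaled to [0, 1]."""
    rng = random.Random(seed)
    dim = len(samples[0])
    weights = {(x, y): [rng.random() for _ in range(dim)]
               for x in range(width) for y in range(height)}
    sigma0 = max(width, height) / 2.0
    total_steps = epochs * len(samples)
    step = 0
    for _ in range(epochs):
        for sample in rng.sample(samples, len(samples)):  # shuffled pass
            decay = 1.0 - step / total_steps
            lr = lr0 * decay                    # learning rate shrinks over time
            sigma = max(sigma0 * decay, 0.5)    # neighbourhood radius shrinks too
            bx, by = best_matching_unit(weights, sample)
            for (x, y), w in weights.items():
                # Gaussian neighbourhood: nodes near the winner move more.
                dist_sq = (x - bx) ** 2 + (y - by) ** 2
                influence = math.exp(-dist_sq / (2.0 * sigma ** 2))
                for i in range(dim):
                    w[i] += lr * influence * (sample[i] - w[i])
            step += 1
    return weights


# Hypothetical normalized descriptors per geometry: (compactness, height ratio).
geometries = [[0.1, 0.2], [0.15, 0.25], [0.9, 0.8], [0.85, 0.9], [0.5, 0.5]]
som = train_som(geometries, width=3, height=3)
print({tuple(g): best_matching_unit(som, g) for g in geometries})
```

After training, each grid node holds a prototype vector, and nearby nodes hold similar prototypes, which is what makes the map useful for sampling a varied yet organized set of shapes.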

For more information about the training process visit: https://blog.iaac.net/carbonai/

The CLIP

CLIP (Contrastive Language–Image Pre-training) is a machine learning model developed to understand the relationship between images and natural language. In Gaia, CLIP is used to interpret visual representations of architectural designs, such as 3D viewports or typology diagrams, and connect them to descriptive labels like “courtyard,” “block,” or “L-shape.”

By embedding both images and text in a shared vector space, CLIP allows Gaia to classify design typologies, verify user prompts against visual outputs, and support more intuitive interactions. For example, when a user asks to “switch to a courtyard layout,” CLIP helps determine whether the current geometry aligns with that typology, or if a change is needed.
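The shared vector space makes typology classification a nearest-label lookup: embed the image, embed each label, and pick the label with the highest cosine similarity. The sketch below shows only that comparison step. The three-dimensional vectors are toy stand-ins for real CLIP embeddings, and the function names are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def classify_typology(image_vec, label_vecs):
    """Pick the label whose text embedding is most similar to the image embedding."""
    scores = {label: cosine(image_vec, vec) for label, vec in label_vecs.items()}
    best = max(scores, key=scores.get)
    return best, scores


# Toy 3-D vectors standing in for real CLIP embeddings, which are much larger.
label_vecs = {
    "courtyard": [0.9, 0.1, 0.2],
    "block": [0.1, 0.9, 0.1],
    "L-shape": [0.2, 0.2, 0.9],
}
viewport_vec = [0.8, 0.2, 0.3]  # hypothetical embedding of a viewport capture

label, scores = classify_typology(viewport_vec, label_vecs)
print(label)
```

The same scores can be used to check a prompt such as “switch to a courtyard layout”: if the requested label is already the best match, no geometry change is needed.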

This ability to connect what the designer sees with how they describe it makes CLIP a key component in enabling visual literacy within AI-driven design tools.

CLIP is triggered by the “Save Iteration” button at the top of the Data Tab. This captures a screenshot of the Rhino viewport and produces the CLIP typology prediction that is passed to the ML model (Cobanov, n.d.).

The LLM

We generated an embeddings database that represents query intents as vectors. When a user enters text, we first compare its vector representation with the database and then, based on that comparison, classify it using LangGraph. This allows fast interaction with the user through the chat, which is connected to the ML model so that modifications are displayed in real time.

Changes

The first method is responsible for responding to inputs related to changes in the model and subsequently providing feedback that clarifies the nature of these changes from a sustainable design perspective. This approach enables design decisions to be made with a clear understanding of their broader implications, going beyond mere alterations in materiality.

Suggestions

The second method analyzes the current design proposal and compares it, using RAG, with the best solutions sharing similar characteristics. This enables the system to provide guidance on potential improvements to the design. Through this approach, the LLM goes beyond the ideas proposed by the user, helping them arrive at a better-performing solution from a sustainable design perspective.
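The retrieval step of this method can be sketched as: find reference designs that resemble the current one but perform better, then hand them to the language model as context. The code below is an illustration under assumptions. The data layout, the Euclidean distance, the function names, and the reference entries are all hypothetical, and the call to the LLM is omitted.

```python
def retrieve_better_designs(current, library, k=2):
    """Return the k most similar library entries that have a lower GWP."""
    def distance(entry):
        pairs = zip(current["features"], entry["features"])
        return sum((a - b) ** 2 for a, b in pairs) ** 0.5

    candidates = [e for e in library if e["gwp"] < current["gwp"]]
    return sorted(candidates, key=distance)[:k]


def build_suggestion_prompt(current, retrieved):
    """Assemble the retrieved cases into context for the language model."""
    lines = [
        "Current design GWP: {} kgCO2e/m2.".format(current["gwp"]),
        "Similar designs with lower GWP:",
    ]
    for entry in retrieved:
        lines.append("- {} (GWP {}): {}".format(
            entry["name"], entry["gwp"], entry["strategy"]))
    lines.append("Suggest changes that move the current design toward these cases.")
    return "\n".join(lines)


# Hypothetical current design and reference library.
current = {"features": [0.5, 0.5], "gwp": 480}
library = [
    {"name": "ref-A", "features": [0.5, 0.6], "gwp": 400, "strategy": "cork roof insulation"},
    {"name": "ref-B", "features": [0.9, 0.1], "gwp": 350, "strategy": "timber structure"},
    {"name": "ref-C", "features": [0.5, 0.5], "gwp": 520, "strategy": "concrete frame"},
    {"name": "ref-D", "features": [0.3, 0.5], "gwp": 430, "strategy": "reduced glazing ratio"},
]

retrieved = retrieve_better_designs(current, library)
prompt = build_suggestion_prompt(current, retrieved)
print(prompt)
```

Filtering on performance before ranking by similarity keeps worse-performing look-alikes, such as `ref-C` here, out of the context.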

Versioning

This method is responsible for comparing different versions that the user has saved using the Save Iteration function. It enables a departure from a linear workflow, which often limits the ability to maintain a holistic view of the changes made throughout the design process.
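Because saved iterations are addressed by name rather than by order, any two of them can be compared directly. A minimal sketch of such a comparison follows; the storage layout, KPI keys, and the function name `compare_versions` are assumptions for illustration.

```python
def compare_versions(saved, name_a, name_b):
    """Report KPI differences and material changes between two saved iterations."""
    a, b = saved[name_a], saved[name_b]
    kpi_delta = {
        key: round(b["kpis"][key] - a["kpis"][key], 2)
        for key in a["kpis"] if key in b["kpis"]
    }
    layers = sorted(set(a["materials"]) | set(b["materials"]))
    material_changes = {
        layer: (a["materials"].get(layer), b["materials"].get(layer))
        for layer in layers
        if a["materials"].get(layer) != b["materials"].get(layer)
    }
    return {"kpi_delta": kpi_delta, "material_changes": material_changes}


# Hypothetical saved iterations; versions need not be adjacent to be compared.
saved = {
    "v1": {"kpis": {"gwp": 480.0, "eui": 95.0},
           "materials": {"roof_insulation": "mineral wool", "wall": "brick"}},
    "v3": {"kpis": {"gwp": 441.5, "eui": 92.0},
           "materials": {"roof_insulation": "cork", "wall": "brick"}},
}

diff = compare_versions(saved, "v1", "v3")
print(diff)
```

A summary like this could be passed to the LLM so that its answer to “Which version has lower GWP?” is grounded in the stored values.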

General Queries

General Queries serves as the fallback category for user inputs whose intent scores fall below 0.67. It is designed to provide answers to general or ambiguous questions, while still incorporating specific design-related information whenever possible. This ensures that users receive meaningful guidance even when their queries lack precise context or clear intent classification.
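The routing across the four categories can be sketched as a similarity lookup with a fallback. The example below uses plain Python rather than LangGraph, assumes the intent score is a cosine similarity, and uses toy three-dimensional vectors in place of real embeddings; only the 0.67 cut-off comes from the description above.

```python
import math

FALLBACK = "general_queries"
THRESHOLD = 0.67  # fallback cut-off stated in the text


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def route(query_vec, intent_vecs, threshold=THRESHOLD):
    """Return the best-matching intent, or the fallback if its score is too low."""
    scores = {name: cosine(query_vec, vec) for name, vec in intent_vecs.items()}
    best = max(scores, key=scores.get)
    if scores[best] < threshold:
        return FALLBACK, scores[best]
    return best, scores[best]


# Toy vectors standing in for embedded example queries of each category.
intent_vecs = {
    "changes": [1.0, 0.0, 0.0],
    "suggestions": [0.0, 1.0, 0.0],
    "versioning": [0.0, 0.0, 1.0],
}

clear_query = [0.9, 0.2, 0.1]      # hypothetical: "Switch roof insulation to cork"
ambiguous_query = [0.5, 0.5, 0.5]  # hypothetical: no dominant intent

print(route(clear_query, intent_vecs)[0])
print(route(ambiguous_query, intent_vecs)[0])
```

The first query scores well above the threshold for one intent and is routed there; the second scores about 0.58 against every intent and falls through to General Queries.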

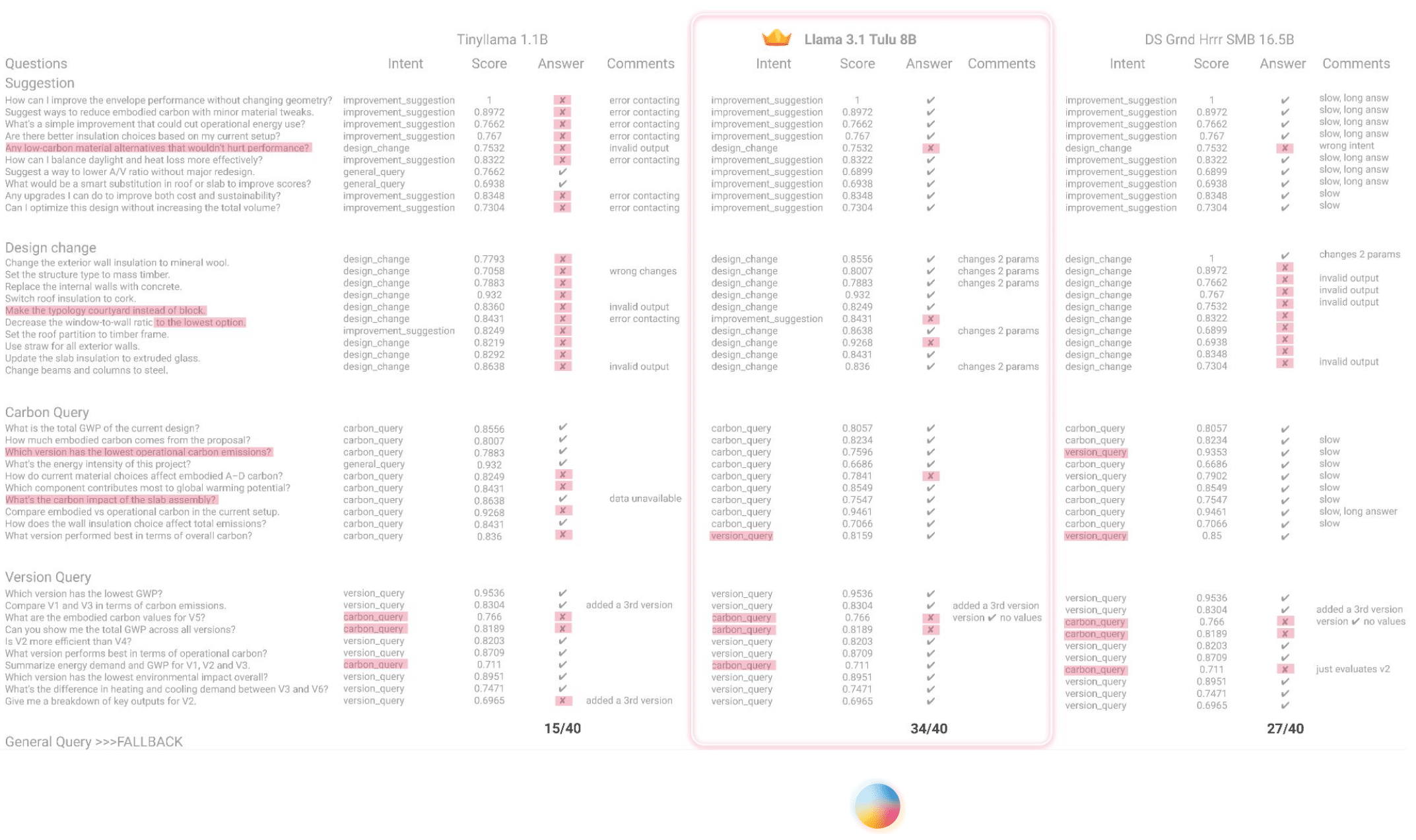

LLM Evaluation

The LLM approach was tested with several models. For our specific case, Llama Tulu proved the most robust.

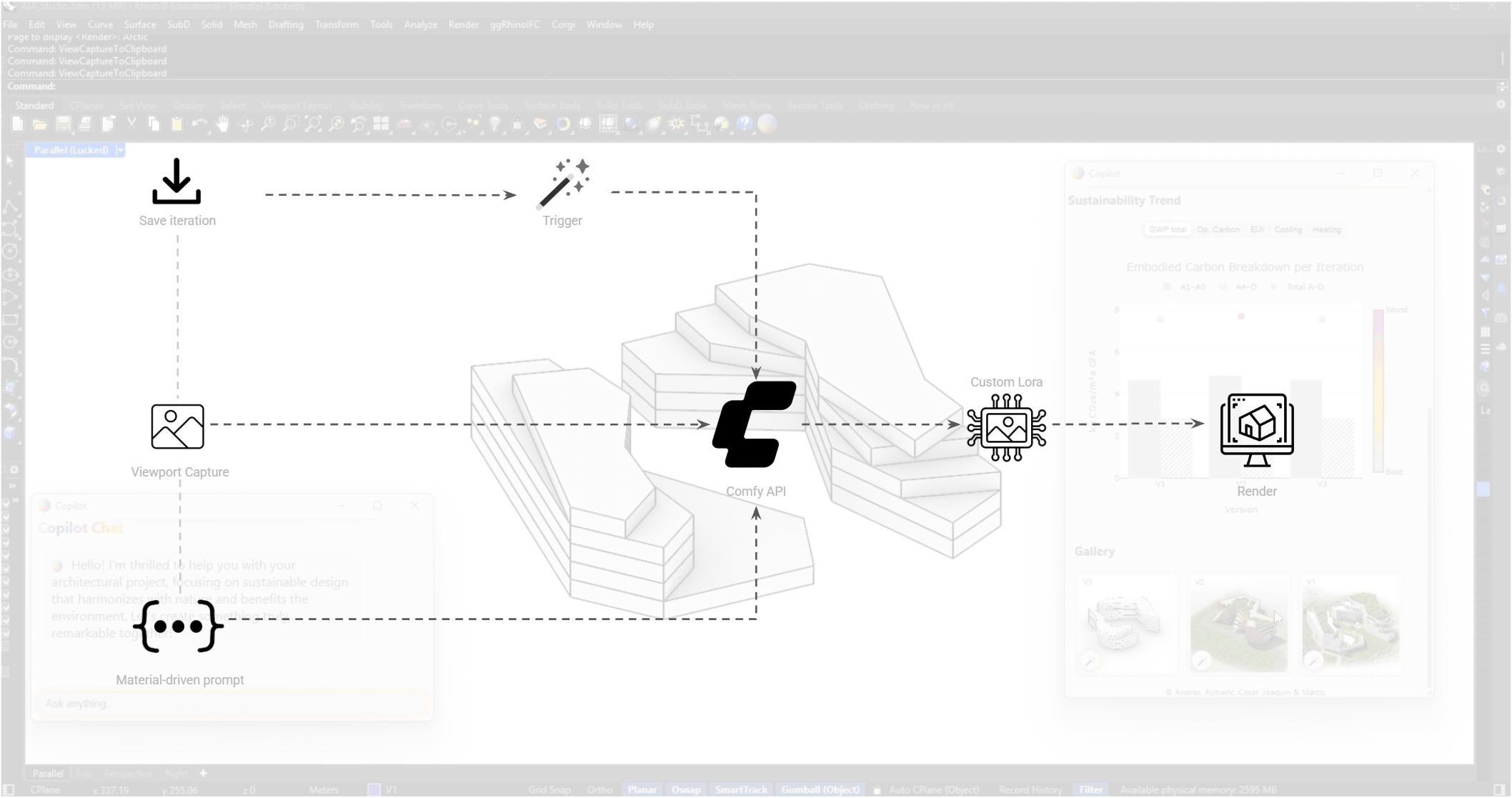

The AI-Rendering

Using ComfyAPI, each saved iteration is assigned an image as well as information about its materiality. An automatic prompt is therefore triggered every time the user clicks the “magic wand” button, and a render appears. This approach lets users integrate their own ComfyUI workflows into the generation process, so gAIa can be customized to specific rendering styles.
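The automatic prompt can be pictured as a string assembled from the metadata stored with each saved iteration. The sketch below covers only that assembly step; the function name, the prompt wording, and the metadata keys are assumptions, and sending the prompt to ComfyUI is left out.

```python
def build_render_prompt(typology, materials,
                        style="photorealistic architectural render"):
    """Turn a saved iteration's metadata into a text prompt for image generation."""
    parts = ["{} of a {} building".format(style, typology)]
    # Sort by element name so the same iteration always yields the same prompt.
    for element, material in sorted(materials.items()):
        parts.append("{} {}".format(material, element.replace("_", " ")))
    return ", ".join(parts)


# Hypothetical metadata of one saved iteration.
prompt = build_render_prompt(
    "courtyard", {"facade": "timber", "roof_insulation": "cork"}
)
print(prompt)
```

Exposing `style` as a parameter mirrors the idea that users can bring their own rendering style into the workflow.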

The Report

The Web App

The Product

The Architectural Diagram

The Repository

The Demo

The Next Steps

gAIa is currently a functional prototype, offering a foundation for AI-assisted sustainable design. However, its potential extends beyond its current scope. The next stages of development aim to enhance usability, expand coverage, and deepen integration across workflows:

- Rhino Plugin Deployment

Transitioning Gaia from a research interface to a fully integrated Rhino plugin will improve accessibility and allow for smoother adoption in practice.

- Support for Diverse Climate Contexts

Currently limited to one weather dataset, gAIa will expand to include multiple climate zones, enabling geographically responsive design strategies.

- Broader Typology and Geometry Recognition

Future iterations will support a wider range of building typologies and complex geometries, allowing Gaia to assist with more varied architectural programs.

- Expanded Material and Component Libraries

Integrating more comprehensive environmental datasets will improve the accuracy of embodied carbon estimates and allow for finer-grained material comparisons.

- Enhanced Retrieval-Augmented Generation (RAG)

By feeding Gaia more structured design documentation, codes, and certification criteria, the system will provide richer, more context-aware feedback to design queries.

- Collaborative and Cloud-Based Features

Long-term development will explore shared workspaces, remote rendering, and multi-user feedback for teams working across disciplines or locations.

- Performance Optimization and Offline Use

Efforts are underway to improve computational efficiency and reduce dependence on cloud APIs, making gAIa more portable and responsive in local environments.

References

- Kanyilmaz, A., Dang, V. H., & Kondratenko, A. (2023). How does conceptual design impact the cost and carbon footprint of structures? Structures, 58, 105102. https://doi.org/10.1016/j.istruc.2023.105102

- Cobanov. (n.d.). cobanov/clip-image-similarity: Simple image similarity calculator using the CLIP. GitHub. https://github.com/cobanov/clip-image-similarity