https://github.com/PaintDumpster/ai_for_robotic_fabrication.git

In the field of robotic fabrication, precision is everything! Yet there’s often a significant gap between a simulated toolpath and the real-world movement of a robotic arm. Subtle discrepancies can lead to printing errors, structural issues, or failed prototypes. We explored how AI can be used to minimize this gap.

This investigation sits at the intersection of robotics, data science, and machine learning, highlighting how even simple AI models can enhance the capabilities of industrial robotic systems within architectural workflows.

What?

We use machine learning models that learn from the discrepancies between simulated and real robotic movements, and use them to improve the accuracy of predicted joint configurations.

Why?

The more predictable the robot is, the higher the quality and reliability of robotic fabrication, and the lower the risk of collisions.

How?

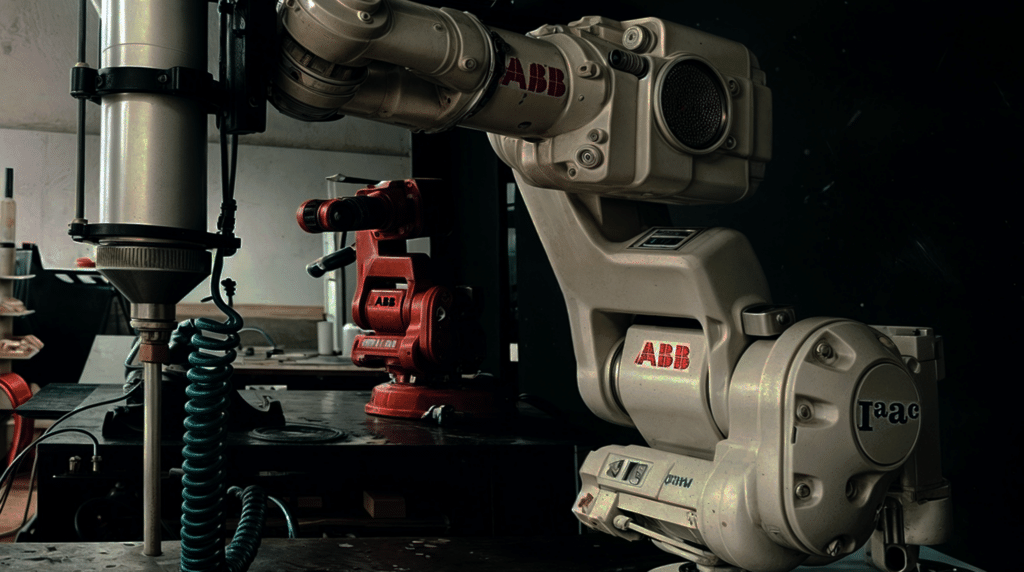

This project is a case study of a 3D clay printing experiment. We use supervised learning to help the robot align its real motions with its simulated paths, in effect letting it “learn” from its own errors. We train a regression model to predict the joint 6 configuration of an ABB robotic arm during printing, using the rotations of the remaining joints as features. The aim is to improve the robot’s motion planning, increase printing accuracy, and bring a layer of intelligent adaptation to the fabrication process. To do this, we collect and preprocess joint angle data from Rhino-modeled toolpaths and from the robot’s actual movements, then fit a polynomial regression that predicts joint values for given toolpath positions. This lets us analyze, anticipate, and eventually refine robotic motion.
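To make the idea of polynomial regression concrete, one possible form of the model is written out below. This is a sketch under assumptions: the degree (2) and the choice of joints 1 to 5 as inputs are illustrative, and the symbols $\theta_i$ (joint angles), $\beta$ (fitted coefficients), and $N$ (number of recorded samples) are introduced here, not taken from the project code.

```latex
% Assumed degree-2 polynomial model for joint 6, with joints 1..5 as features
\hat{\theta}_6 = \beta_0
  + \sum_{i=1}^{5} \beta_i \,\theta_i
  + \sum_{i=1}^{5} \sum_{j=i}^{5} \beta_{ij} \,\theta_i \theta_j

% Coefficients chosen by least squares over the N recorded samples
\min_{\beta} \; \sum_{k=1}^{N} \left( \theta_6^{(k)} - \hat{\theta}_6^{(k)} \right)^2
```

With five input joints, a degree-2 model of this form has 21 coefficients (1 constant, 5 linear, 15 quadratic), which is one reason small datasets can be fitted too closely.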

Block Diagram

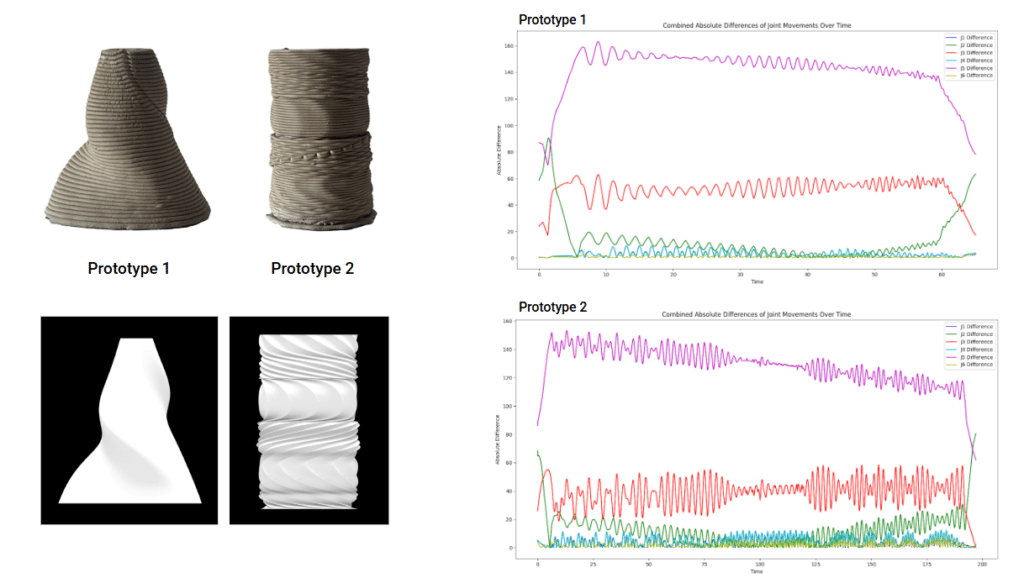

The initial workflow focused on analyzing the difference between simulated joint movements and real robotic motion during 3D clay printing.

1. The structure was first modeled in Grasshopper.

2. The digital model was then simulated in Grasshopper and exported to RobotStudio for robotic execution.

3. During physical printing, we recorded the real-time joint movements of the robotic arm.

4. Simultaneously, we extracted the simulated joint coordinates from the digital toolpath.

5. Finally, the two datasets were compared and analyzed to identify discrepancies and to inform future manual adjustments.
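The comparison in the last step can be sketched in a few lines of Python. This is a minimal illustration, assuming both datasets have already been brought to the same number of samples and that each row holds one angle per joint in degrees. The function name `joint_discrepancies` and the sample values are hypothetical and are not taken from the experiment.

```python
from statistics import mean


def joint_discrepancies(simulated, real):
    """Per-joint mean and max absolute difference (degrees) between two
    equally long lists of joint-angle rows."""
    if len(simulated) != len(real):
        raise ValueError("datasets must have the same number of samples")
    n_joints = len(simulated[0])
    report = []
    for j in range(n_joints):
        # Absolute difference for joint j at every sample
        diffs = [abs(r[j] - s[j]) for s, r in zip(simulated, real)]
        report.append(
            {"joint": j + 1, "mean_abs": mean(diffs), "max_abs": max(diffs)}
        )
    return report


# Placeholder values for illustration only, not measurements from the print.
simulated = [[0.0, 10.0, 20.0], [1.0, 11.0, 21.0]]
real = [[0.5, 10.0, 19.0], [1.5, 12.0, 21.0]]
report = joint_discrepancies(simulated, real)
for row in report:
    print(row)
```

A per-joint summary like this makes it easier to see which joints deviate most before deciding where a learned correction is worth the effort.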

Datasets

We trained our model on joint angle data collected from two sources:

- Rhino-modeled toolpaths (digital design phase)

- Post-printing movements (actual joint movements from the ABB robot)

By comparing these datasets, the model learns how the real robotic motion deviates from the simulated path, and begins to predict more accurate joint values based on previous behaviors.
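Comparing the two sources row by row only works if they have the same number of samples. The sketch below shows one way to resample a recording to a target length with linear interpolation. It assumes that both series cover the same time span, that samples are evenly spaced, and that angles do not wrap around ±180° within the recording. The helper name `resample_to_length` and the sample values are hypothetical.

```python
import numpy as np


def resample_to_length(angles, n_samples):
    """Linearly resample a (samples, joints) array to n_samples rows."""
    angles = np.asarray(angles, dtype=float)
    # Normalised time axes for the original and the resampled series
    old_t = np.linspace(0.0, 1.0, len(angles))
    new_t = np.linspace(0.0, 1.0, n_samples)
    # Interpolate each joint column independently
    columns = [
        np.interp(new_t, old_t, angles[:, j]) for j in range(angles.shape[1])
    ]
    return np.column_stack(columns)


# Placeholder recording with 3 samples and 2 joints, for illustration only.
recorded = [[0.0, 10.0], [2.0, 14.0], [4.0, 18.0]]
aligned = resample_to_length(recorded, 5)
print(aligned)
```

If timestamps are available for the real recording, interpolating against those instead of a normalised axis would be the more faithful choice.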

AI Implementation

To improve motion accuracy, we introduced an AI-based supervised learning model into the existing workflow. Using both simulated and real joint data as previously mentioned, the model was trained to predict more accurate movements, specifically for joint 6 (end effector). This step allowed the system to learn from past discrepancies and enhance the robot’s ability to perform more precise and consistent 3D printing.

Code Objectives

The supervised learning model was developed with the following key goals in mind:

- Data Processing: Import and preprocess joint angle data from both simulation and real-world robotic printing, ensuring the format is consistent for training.

- Model Training: Apply polynomial regression specifically to predict joint 6 (end effector) rotation, training the model to learn from discrepancies between simulated and real joint configurations.

- Performance Evaluation: Measure how closely the predicted joint values match real-world behavior, using error margins and curve comparisons to assess model accuracy.

- Visualization & Analysis: Generate graphs that allow interpretation of movement trends, anomalies, and the model’s prediction reliability over time.

- Code Structure & Clarity: Maintain clean, modular, and well-documented code to support updates, debugging, and future implementation across other joints or other models.
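The objectives above can be illustrated with a compact sketch of a polynomial regression pipeline. It is not the project's code. It assumes joints 1 to 5 as features and joint 6 as the target, uses degree 2, and runs on synthetic data generated only for this example. All function names are hypothetical. It relies on NumPy alone, so a library such as scikit-learn could equally be used.

```python
import numpy as np
from itertools import combinations_with_replacement


def polynomial_features(X, degree=2):
    """Expand the columns of X into all monomials up to `degree`,
    including a constant (bias) column."""
    X = np.asarray(X, dtype=float)
    n_samples, n_features = X.shape
    columns = [np.ones(n_samples)]
    for d in range(1, degree + 1):
        for idx in combinations_with_replacement(range(n_features), d):
            columns.append(np.prod(X[:, list(idx)], axis=1))
    return np.column_stack(columns)


def fit_polynomial_regression(X, y, degree=2):
    """Least-squares fit of the polynomial coefficients."""
    A = polynomial_features(X, degree)
    coef, *_ = np.linalg.lstsq(A, np.asarray(y, dtype=float), rcond=None)
    return coef


def predict(coef, X, degree=2):
    return polynomial_features(X, degree) @ coef


def rmse(y_true, y_pred):
    """Root-mean-square error in the same unit as the angles."""
    diff = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean(diff ** 2)))


# Synthetic stand-in data: 5 feature joints, target built from a known
# quadratic so the fit can be checked. Not data from the experiment.
rng = np.random.default_rng(0)
X = rng.uniform(-90.0, 90.0, size=(200, 5))
y = 0.5 * X[:, 0] - 0.01 * X[:, 1] * X[:, 2] + 3.0

# Hold out the last 50 rows to evaluate on data the model has not seen.
X_train, X_test = X[:150], X[150:]
y_train, y_test = y[:150], y[150:]

coef = fit_polynomial_regression(X_train, y_train, degree=2)
test_rmse = rmse(y_test, predict(coef, X_test, degree=2))
print(f"held-out RMSE: {test_rmse:.3e} degrees")
```

Evaluating on held-out rows, as done here, is what distinguishes a model that has learned the motion from one that only reproduces its training data.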

The Code

Interpretation of Results

The graphs below show two comparisons between the actual joint movement and the predicted output from our regression model. The first plot evaluates joint rotation behavior over time from the simulation and actual printing, while the second highlights how closely the predicted values match the real output.

In the second plot, the model follows the oscillating pattern of joint 6’s movement, but it mimics the dataset rather than learning the underlying behavior. The shape of the motion is replicated, yet amplitude and timing are inaccurate, which suggests that the model is overfitting to the visible pattern in the training data rather than capturing the motion dynamics.

This also reveals an important limitation: even though the regression model captures some periodic behavior, it struggles with accuracy and synchronization, especially when dealing with rapid or oscillatory joint motions like those in joint 6.

Finally, we substantially increased the number of rows in the datasets. Because training on small datasets tends to lead to overfitting, the larger dataset helped the model make better predictions, as shown in the diagram. The resulting plot shows a much more accurate prediction pattern, in which the overall motion of the robotic joint closely matches the real-world behavior. This suggests that the model learned the dynamic pattern of the movement. However, there is still a noticeable offset in the starting angle: the predicted movement begins around 140°, while the actual movement starts at 0°.
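A constant offset like this can be measured separately from the shape of the motion. The sketch below estimates it as the median difference between predicted and actual angles, wrapped to the range [-180°, 180°). It is a diagnostic under assumptions, not a step from the project: the function names are hypothetical, and the motion values are placeholders chosen so that the offset equals the roughly 140° described above.

```python
from statistics import median


def wrap_degrees(angle):
    """Map an angle in degrees to the interval [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def estimate_offset(predicted, actual):
    """Median wrapped difference between predicted and actual angles."""
    return median(wrap_degrees(p - a) for p, a in zip(predicted, actual))


# Placeholder series for illustration only.
actual = [0, 10, 20, 10, 0]
predicted = [140, 150, 160, 150, 140]

offset = estimate_offset(predicted, actual)
corrected = [p - offset for p in predicted]
print(offset, corrected)
```

Subtracting the estimated offset is useful for checking how much of the error is a fixed shift, but it does not explain why the model produces the shift in the first place.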

Although this offset might not drastically affect joint 6 (which often controls end-effector rotation), the same difference in other joints, such as joint 2, could result in a significant positional error during fabrication. This highlights the importance of not only learning the shape of the motion but also accurately predicting the starting angle, especially for joints that control position rather than orientation.
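To give a sense of scale, the displacement caused by an angular error grows with the distance from the joint axis. The sketch below computes the straight-line (chord) displacement of a point at a given reach when a single joint is off by a given angle. It assumes the rest of the arm is rigid and correctly positioned. The 1000 mm reach is a hypothetical value, not a dimension of the robot used in the experiment.

```python
import math


def chord_error_mm(reach_mm, offset_deg):
    """Straight-line displacement of a point at reach_mm from a joint axis
    when that joint's angle is off by offset_deg."""
    return 2.0 * reach_mm * math.sin(math.radians(offset_deg) / 2.0)


# Hypothetical reach of 1000 mm from the joint axis to the nozzle.
for offset_deg in (0.1, 1.0, 5.0):
    error = chord_error_mm(1000.0, offset_deg)
    print(f"{offset_deg:>4}° offset -> {error:.2f} mm")
```

Under these assumptions, an error of only 1° at a 1000 mm reach already displaces the nozzle by about 17.45 mm, which is large relative to a typical printed layer.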

Conclusion

By integrating a regression model into the robotic printing workflow, we improved motion prediction and highlighted the potential of AI in enhancing fabrication precision. While results were promising, issues like initial offset show there’s still room for refinement, not just in movement patterns, but in understanding spatial intent. This opens up broader questions about how intelligent systems can learn, adapt, and eventually collaborate in architectural making.