Albert is a robotic hand designed to play rock-paper-scissors with a human partner, as a step toward more natural human-robot interaction.

Rock-paper-scissors is a universally understood game, known for its simplicity and its usefulness as a quick way to settle decisions. Our objective was to establish a shared language between robot and human by making the robot feel more human. We aimed for an intuitive experience that users could grasp immediately, using vision and other sensors to mediate the interaction.

We structured this three-week project around three goals:

- Initiate the game with a handshake or high five.

- Engage in multiple rounds against the robot until one participant emerges victorious.

- Keep the interaction wireless: the human player needs no equipment other than their own hand.

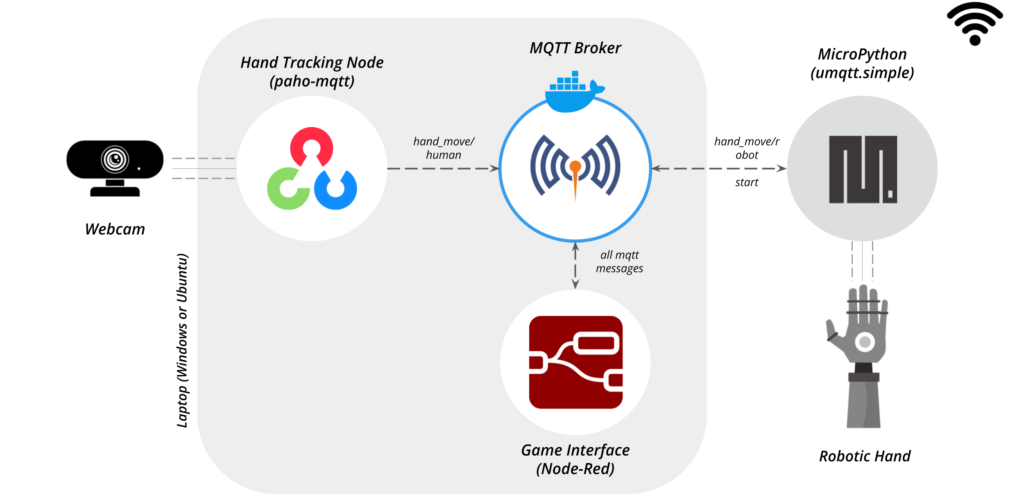

System Architecture

After researching similar projects, robotic hand designs, and implementation strategies, we settled on a system architecture that would meet these objectives.

The system uses a webcam to capture the user’s hand pose, which is processed by the computer depicted in the gray box above. This computer is the main processing unit and is separate from the hand, so it can be placed anywhere in the room. It runs the hand-tracking node, the MQTT broker, and a game interface that determines the winner and shows the user the score. The robotic hand is wireless and independent of the computer: it is controlled by a Raspberry Pi Pico W running MicroPython and communicates over WiFi through the MQTT broker.
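To make the message flow concrete, here is a minimal Python sketch of the publish/subscribe topology: no component talks to another directly, only through the broker. The MQTT broker is replaced by a hypothetical `InMemoryBus` class (exact topic matching only; no wildcards, QoS, or networking). The topic names are the ones used in this write-up, with the robot topic written as the game interface subscribes to it; the payload strings are assumptions.

```python
from collections import defaultdict

# Topic names as used in this write-up; payload formats are assumptions.
TOPIC_HUMAN = "hand_move/human"  # published by the hand-tracking node
TOPIC_ROBOT = "hand_move/robot"  # published by the Pico W on the hand
TOPIC_START = "/start"           # stop/reset sent by the game interface


class InMemoryBus:
    """Hypothetical stand-in for the MQTT broker (exact-match topics only)."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, callback):
        self._subscribers[topic].append(callback)

    def publish(self, topic, payload):
        # Deliver to every callback registered for exactly this topic.
        for callback in self._subscribers[topic]:
            callback(topic, payload)


if __name__ == "__main__":
    bus = InMemoryBus()
    log = []
    # Game interface: listens to both players' moves.
    bus.subscribe(TOPIC_HUMAN, lambda t, p: log.append(("game", t, p)))
    bus.subscribe(TOPIC_ROBOT, lambda t, p: log.append(("game", t, p)))
    # Robotic hand: listens for stop/reset commands.
    bus.subscribe(TOPIC_START, lambda t, p: log.append(("hand", t, p)))

    bus.publish(TOPIC_HUMAN, "rock")
    bus.publish(TOPIC_ROBOT, "paper")
    bus.publish(TOPIC_START, "stop")
    for entry in log:
        print(entry)
```

Because every component only knows topic names, the computer and the hand can sit anywhere on the same network, which is what lets the hand stay wireless.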

Hand Pose Detection

Google’s MediaPipe library provides real-time hand tracking that estimates the positions of 21 landmarks (key points) on the hand. On top of these landmarks, we wrote custom logic to recognize the rock, paper, and scissors gestures. For example, comparing landmark 20 (the pinky tip) with landmark 17 (the base of the pinky) tells us whether the pinky is closed. However, the implementation currently works reliably only when the hand faces the camera squarely and is held either vertically (finger openness judged from the y-coordinates) or horizontally (judged from the x-coordinates). Recognizing hands at other angles is left for future improvement.
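A minimal sketch of this rule-based approach for the vertical case is shown below. It assumes the 21 landmarks have already been extracted as normalized `(x, y)` pairs (with MediaPipe’s legacy `solutions.hands` API, for example `[(lm.x, lm.y) for lm in results.multi_hand_landmarks[0].landmark]`). In MediaPipe’s numbering, the base joints (MCP) of the index, middle, ring, and pinky fingers are 5, 9, 13, and 17, and their tips are 8, 12, 16, and 20. Image y grows downward, so with the fingers pointing up a finger counts as open when its tip is above its base. The thumb is ignored, and the names `open_fingers` and `classify_gesture` are hypothetical; this illustrates the idea rather than reproducing the project’s code.

```python
# MediaPipe hand landmark indices: (base joint / MCP, fingertip) per finger.
FINGERS = {
    "index": (5, 8),
    "middle": (9, 12),
    "ring": (13, 16),
    "pinky": (17, 20),
}


def open_fingers(landmarks):
    """Return the set of fingers whose tip is above its base (vertical hand).

    `landmarks` is a sequence of 21 (x, y) pairs in normalized image
    coordinates, where y increases downward.
    """
    if len(landmarks) != 21:
        raise ValueError("expected 21 hand landmarks")
    return {
        name
        for name, (base, tip) in FINGERS.items()
        if landmarks[tip][1] < landmarks[base][1]
    }


def classify_gesture(landmarks):
    """Map open fingers to 'rock', 'paper', 'scissors', or None if unclear."""
    opened = open_fingers(landmarks)
    if not opened:
        return "rock"
    if opened == set(FINGERS):
        return "paper"
    if opened == {"index", "middle"}:
        return "scissors"
    return None  # ambiguous pose: do not report a move
```

For a horizontally held hand the same comparison would use index `[0]` (the x-coordinate) instead, with the direction of the inequality depending on which way the fingers point.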

Once the human player’s hand pose is detected, it is published to the MQTT broker on the topic “hand_move/human”.
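The publishing step can be sketched as a small helper. This assumes the payload is simply the move name as a string and that `client` is a connected MQTT client exposing `publish(topic, payload)`, such as a paho-mqtt `Client`; the helper name `publish_human_move` is hypothetical.

```python
MOVES = ("rock", "paper", "scissors")
HUMAN_TOPIC = "hand_move/human"


def publish_human_move(client, move, topic=HUMAN_TOPIC):
    """Publish a recognized move; skip frames where no gesture was recognized.

    `client` is assumed to be a connected MQTT client exposing
    publish(topic, payload), such as a paho-mqtt Client.
    Returns True if a message was sent.
    """
    if move not in MOVES:
        return False  # None or unexpected label: nothing to report
    client.publish(topic, move)
    return True
```

Filtering out unrecognized frames here keeps ambiguous poses from ever reaching the game interface.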

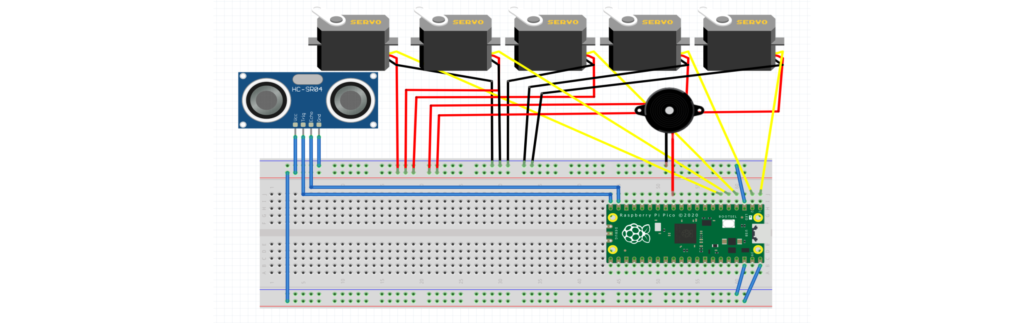

Hardware Development

After analyzing existing robotic hand builds, we chose servos to actuate the individual fingers. An ultrasonic sensor measures the distance to the player’s hand to detect a handshake or high five, and a beeper provides auditory feedback.
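The write-up does not name the ultrasonic sensor model, so the sketch below assumes a common HC-SR04-style trigger/echo sensor, whose echo pulse width is proportional to the round-trip time of the sound. On the Pico W the pulse width could be measured with MicroPython’s `machine.time_pulse_us(echo_pin, 1, timeout_us)`, which returns a negative value on timeout. The 10 cm threshold, the three-reading requirement, and the function names are hypothetical choices for detecting an approaching hand.

```python
SPEED_OF_SOUND_CM_PER_US = 0.0343  # about 343 m/s in air at 20 °C


def echo_to_cm(pulse_us):
    """Convert an echo pulse width (microseconds) to a distance in cm.

    The pulse covers the round trip, so the one-way distance is half.
    Negative values (timeouts from machine.time_pulse_us) yield None.
    """
    if pulse_us < 0:
        return None
    return pulse_us * SPEED_OF_SOUND_CM_PER_US / 2


def hand_detected(distances_cm, threshold_cm=10.0, needed=3):
    """True if the last `needed` readings are all valid and within range."""
    recent = distances_cm[-needed:]
    return len(recent) == needed and all(
        d is not None and d <= threshold_cm for d in recent
    )
```

Requiring several consecutive close readings, rather than a single one, is one way to avoid starting a game because of a single noisy echo.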

For the mechanical design, we reused the finger design from an open-source project, “Arduino Flex Sensor controlled Robot Hand”. Fishing line pulled by the servos closes each finger, and tensioned elastic cord opens it again; this proved an effective way to control finger movement.

Given the time constraints, we adopted this design largely as-is, making only slight modifications to accommodate the ultrasonic sensor.
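Driving the fingers comes down to converting a servo angle into a PWM duty cycle. The sketch below assumes one hobby servo per finger on a 50 Hz signal, a 500 to 2500 µs pulse range for 0 to 180 degrees, and that 180 degrees winds in the fishing line (finger closed) while 0 degrees lets the elastic reopen it; all of these values are assumptions to be calibrated on the real hand. On the Pico W each value would be passed to `machine.PWM.duty_u16()` after `pwm.freq(50)`.

```python
SERVO_PERIOD_US = 20000   # 50 Hz frame, typical for hobby servos
MIN_PULSE_US = 500        # assumed pulse width at 0 degrees
MAX_PULSE_US = 2500       # assumed pulse width at 180 degrees

OPEN_ANGLE = 0            # assumed: line slack, elastic holds finger open
CLOSED_ANGLE = 180        # assumed: line wound in, finger closed

FINGER_ORDER = ("thumb", "index", "middle", "ring", "pinky")
OPEN_FINGERS = {
    "rock": set(),
    "paper": set(FINGER_ORDER),
    "scissors": {"index", "middle"},
}


def angle_to_duty_u16(angle):
    """Map a servo angle (clamped to 0-180) to a 16-bit duty for duty_u16()."""
    angle = max(0, min(180, angle))
    pulse_us = MIN_PULSE_US + (MAX_PULSE_US - MIN_PULSE_US) * angle / 180
    return round(pulse_us / SERVO_PERIOD_US * 65535)


def finger_duties(move):
    """Return one duty_u16 value per finger (thumb to pinky) for a move."""
    opened = OPEN_FINGERS[move]
    return [
        angle_to_duty_u16(OPEN_ANGLE if f in opened else CLOSED_ANGLE)
        for f in FINGER_ORDER
    ]
```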

3D Printed Prototype Function

Robotic Hand State Diagram

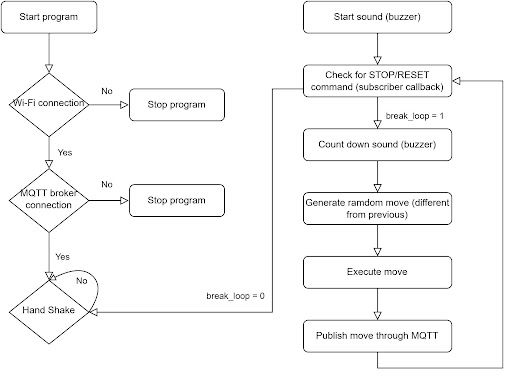

To make the hand autonomous, it connects to the WiFi network and to the MQTT broker on its own, using MicroPython’s network and umqtt.simple modules.
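A minimal sketch of this start-up step follows: join WiFi, then open the MQTT session, and raise an exception (which halts the program) if either fails. The WLAN interface and MQTT client are passed in so the logic can be tested without hardware; on the Pico W they would be `network.WLAN(network.STA_IF)` and `umqtt.simple.MQTTClient(client_id, broker_ip)`. The function name, timeout, and polling interval are hypothetical.

```python
import time


def connect_or_halt(wlan, mqtt_client, ssid, password, timeout_s=15, poll_s=0.5):
    """Join WiFi, then open the MQTT session; raise (halting the program) on failure.

    `wlan` is assumed to behave like network.WLAN(network.STA_IF) and
    `mqtt_client` like umqtt.simple.MQTTClient.
    """
    wlan.active(True)
    wlan.connect(ssid, password)
    waited = 0.0
    while not wlan.isconnected():
        if waited >= timeout_s:
            raise RuntimeError("WiFi not connected: check SSID and password")
        time.sleep(poll_s)
        waited += poll_s
    try:
        mqtt_client.connect()
    except Exception as exc:  # e.g. OSError (unreachable) or a refused connection
        raise RuntimeError("MQTT connect failed: check broker IP and port") from exc
```

Failing loudly at start-up matches the behavior described below: with wrong settings the hand never enters the waiting state.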

When the hand is powered on, the program first verifies the WiFi and MQTT broker settings (password, IP address, etc.); if they are incorrect, the program halts. Otherwise, the hand enters a waiting state until a handshake starts a game, at which point it plays a sound to alert the player. At the start of each round, the hand checks for a stop/reset command, sent either when the player presses the stop/reset button or when someone has reached three wins (see Game Interface below). If one has arrived, the hand returns to the waiting state; otherwise, the round proceeds. A countdown sound signals when to make a move; the hand then picks a move at random, performs it, and publishes it on the MQTT topic “hand_move/robot”.
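The state diagram above can be sketched as a two-state controller. This is an illustration under assumptions, not the project’s firmware: the hardware is wrapped in a hypothetical object exposing `handshake_detected()`, `stop_requested()`, `beep(name)`, and `perform(move)`, and `publish(topic, payload)` stands in for a bound `MQTTClient.publish`. The robot topic is written as the game interface subscribes to it.

```python
import random

MOVES = ("rock", "paper", "scissors")
ROBOT_TOPIC = "hand_move/robot"  # as subscribed to by the game interface


class HandController:
    """Hypothetical WAITING/PLAYING loop for the hand (hardware injected)."""

    WAITING = "waiting"
    PLAYING = "playing"

    def __init__(self, hardware, publish, rng=random):
        # `hardware` is an assumed wrapper exposing handshake_detected(),
        # stop_requested(), beep(name) and perform(move).
        # `publish(topic, payload)` could be a bound MQTTClient.publish.
        self.hw = hardware
        self.publish = publish
        self.rng = rng
        self.state = self.WAITING

    def step(self):
        """Run one pass of the main loop; return the move played, if any."""
        if self.state == self.WAITING:
            if self.hw.handshake_detected():
                self.hw.beep("game_start")  # alert the player
                self.state = self.PLAYING
            return None
        # Start of a round: a stop/reset (button or three wins) ends the game.
        if self.hw.stop_requested():
            self.state = self.WAITING
            return None
        self.hw.beep("countdown")  # signal when to throw
        move = self.rng.choice(MOVES)
        self.hw.perform(move)
        self.publish(ROBOT_TOPIC, move)
        return move
```

On the device, `step()` would run inside a `while True` loop, and the stop/reset flag could be set from an MQTT callback on “/start” (for example via umqtt.simple’s `set_callback()` and a periodic `check_msg()`).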

Game Interface

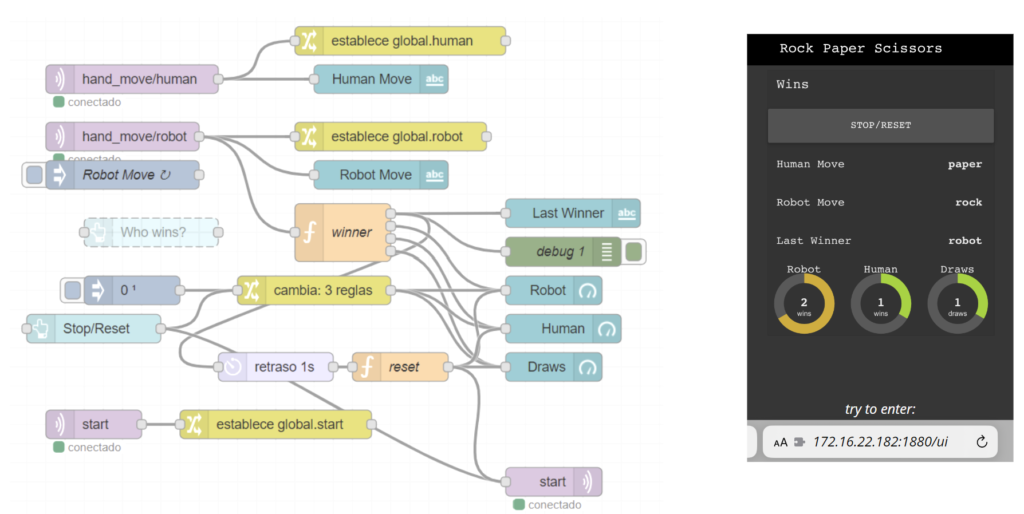

The interface was built with Node-RED, a flow-based programming tool built on Node.js. The flow subscribes to both the “hand_move/human” and “hand_move/robot” topics and determines the winner of each round. The first player to reach three wins takes the game; the game also ends after three draws. Once the game is decided, the flow automatically sends a stop/reset command to the robot on the “/start” MQTT topic, returning it to the waiting state until a player starts a new game. The interface also provides a stop/reset button so the player can halt the game at any time.
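The refereeing logic is easy to express outside Node-RED as well. The Python sketch below (Node-RED itself would use JavaScript function nodes) pairs one message from each topic into a round, keeps the score, and calls `send_reset` when the game ends; `send_reset` stands in for publishing the stop/reset message on “/start”. It assumes each side publishes exactly one move per round as a plain move name, and it reads “three wins (or three draws)” as the game also ending after three draws. The names `round_result` and `Referee` are hypothetical.

```python
BEATS = {"rock": "scissors", "paper": "rock", "scissors": "paper"}
WINS_TO_END = 3
TOPIC_TO_PLAYER = {"hand_move/human": "human", "hand_move/robot": "robot"}


def round_result(human, robot):
    """Return 'human', 'robot', or 'draw' for one round."""
    if human == robot:
        return "draw"
    return "human" if BEATS[human] == robot else "robot"


class Referee:
    """Pairs one move from each topic into a round and keeps the score."""

    def __init__(self, send_reset):
        self.send_reset = send_reset  # e.g. publish a stop/reset on "/start"
        self.pending = {}
        self.score = {"human": 0, "robot": 0, "draw": 0}

    def on_message(self, topic, move):
        player = TOPIC_TO_PLAYER.get(topic)
        if player is None:
            return None  # not a move topic
        self.pending[player] = move
        if len(self.pending) < 2:
            return None  # wait for the other player's move
        result = round_result(self.pending["human"], self.pending["robot"])
        self.pending = {}
        self.score[result] += 1
        if self.score[result] >= WINS_TO_END:
            self.send_reset()  # game over: the robot goes back to waiting
            self.score = {"human": 0, "robot": 0, "draw": 0}
        return result
```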

Game Time!

Once all components were implemented, we connected them to the same network and tested the complete system. Testing went smoothly: we identified and fixed a few minor bugs, and the system worked exceptionally well.

Future Opportunities

Looking ahead, there are many ways to improve the system’s robustness and reliability. We plan to integrate the camera directly into the robotic hand setup and to replace the current rule-based gesture recognition with deep learning models that we train ourselves, so that the user can play from any angle. We also aim to make the experience more interactive by adding a speaker, a screen, and a microphone. An “extreme mode” in which the robot always wins is also under consideration, among many other possibilities.