ABSTRACT

Recent developments in generative artificial intelligence, particularly diffusion models such as Stable Diffusion and DALL·E, have enabled the creation of visually compelling architectural façades from text-based prompts. While these outputs are expressive and stylistically diverse, they remain fundamentally unstructured, lacking spatial intelligence, semantic clarity, and geometric editability. This limits their integration into architectural design workflows, which require precision, adaptability, and the capacity for reuse.

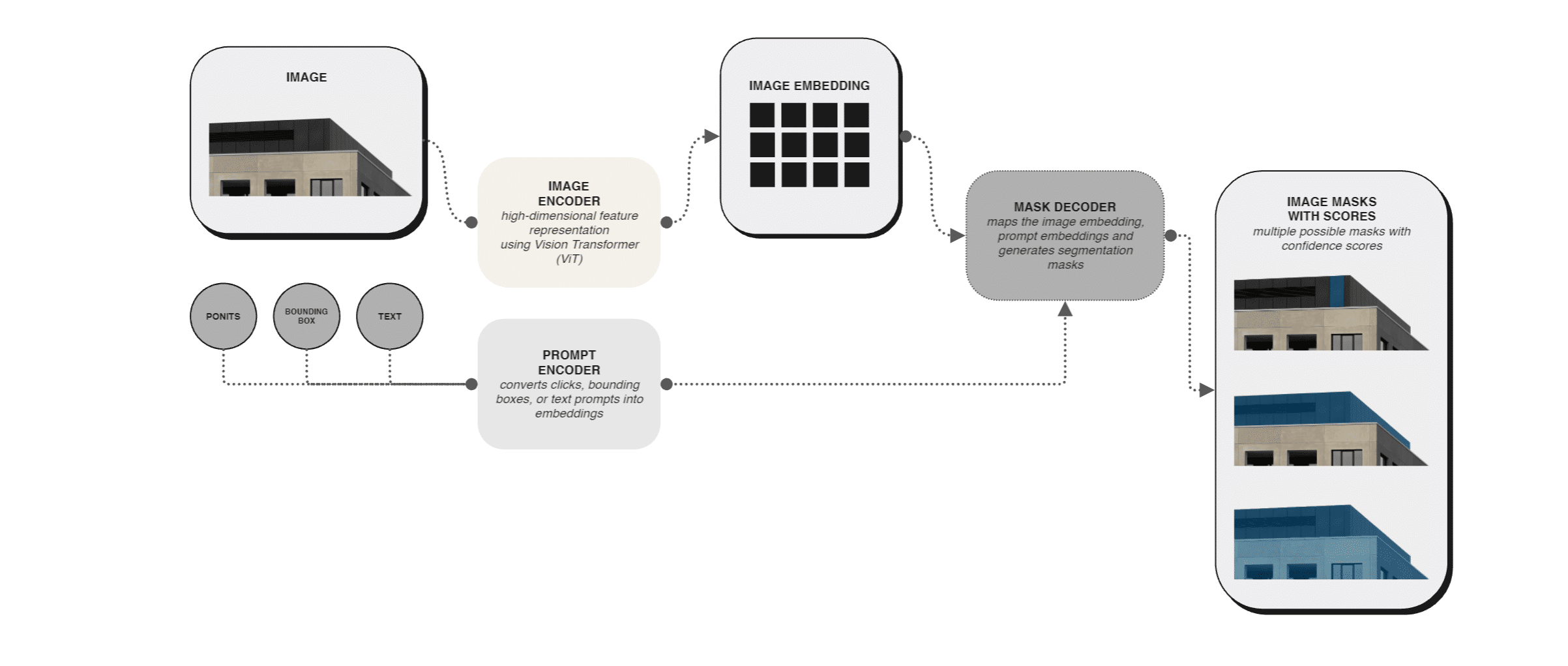

This research aims to bridge the expressive potential of generative AI with the precision of parametric design. It proposes a pipeline that interprets and segments AI-generated façades and reconstructs them as editable parametric geometry. The workflow integrates diffusion-based façade generation, segmentation using the Segment Anything Model (SAM) in combination with GroundingDINO, translation of scalable vector graphics (SVG) into structured data, and reconstruction in a BIM software environment.

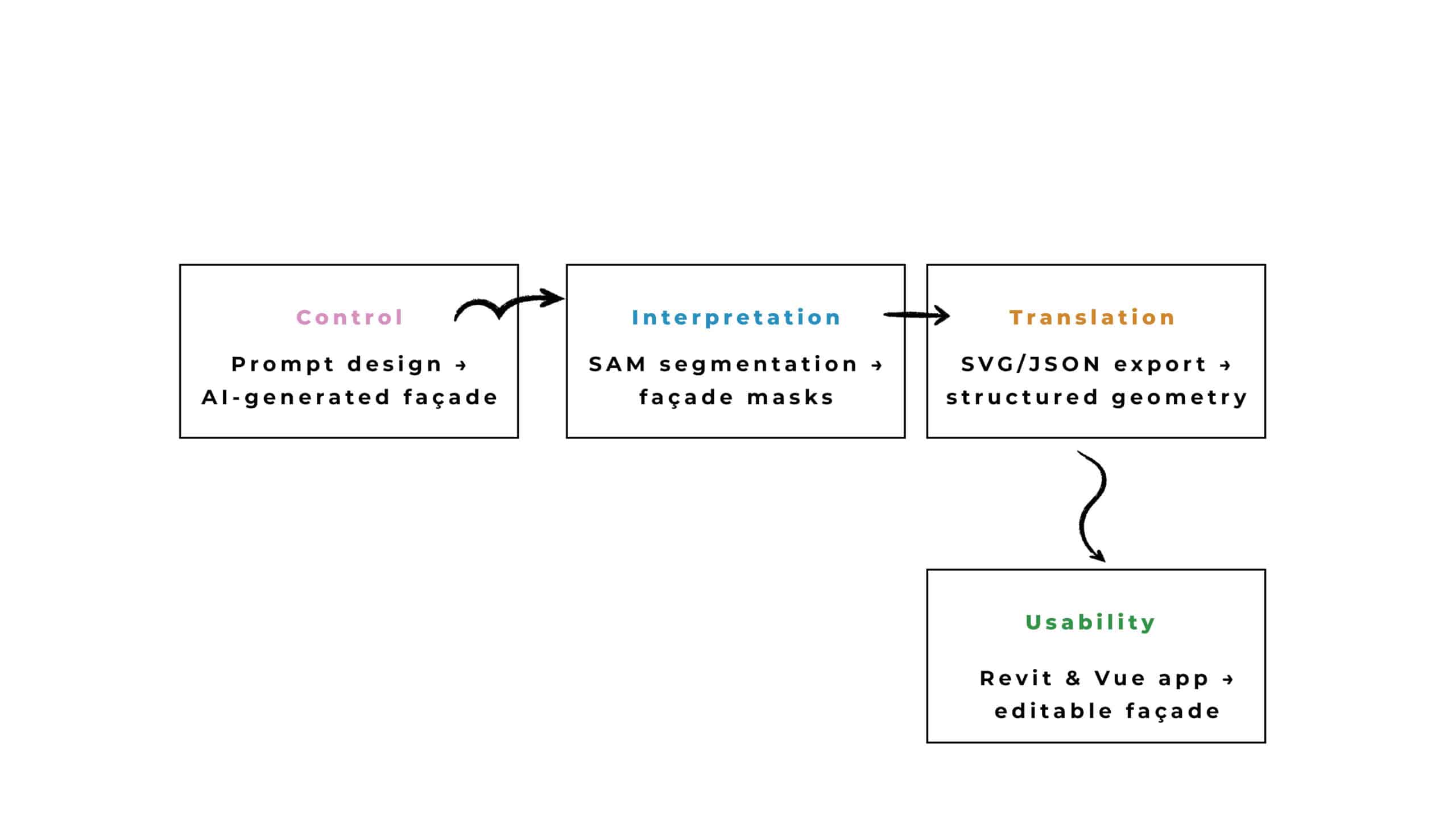

The study is structured around four research objectives:

- CONTROL – influence of prompt structure on façade legibility,

- INTERPRETATION – accuracy of segmentation in detecting architectural elements,

- TRANSLATION – transformation of AI-generated images into parametric geometry,

- USABILITY – adaptability of reconstructed façades in design workflows.

Findings indicate that while diffusion models can produce diverse outputs, segmentation remains inconsistent, requiring manual intervention. Nevertheless, the pipeline demonstrates the potential to convert expressive but static imagery into structured and reusable design components. The implications point toward the development of spatially intelligent AI systems that can meaningfully contribute to architectural practice by linking generative exploration with computational precision.

INTRODUCTION

PROBLEM STATEMENT

Despite the expressive capabilities of diffusion-based AI models, their outputs are confined to static images that lack spatial intelligence, semantic structure, and geometric editability. This absence of structured data prevents architects from directly using AI-generated façades in computational or BIM-based workflows, where precision and adaptability are essential. Consequently, architects are left to approximate designs manually, limiting efficiency and undermining the potential of generative AI to serve as a true design partner.

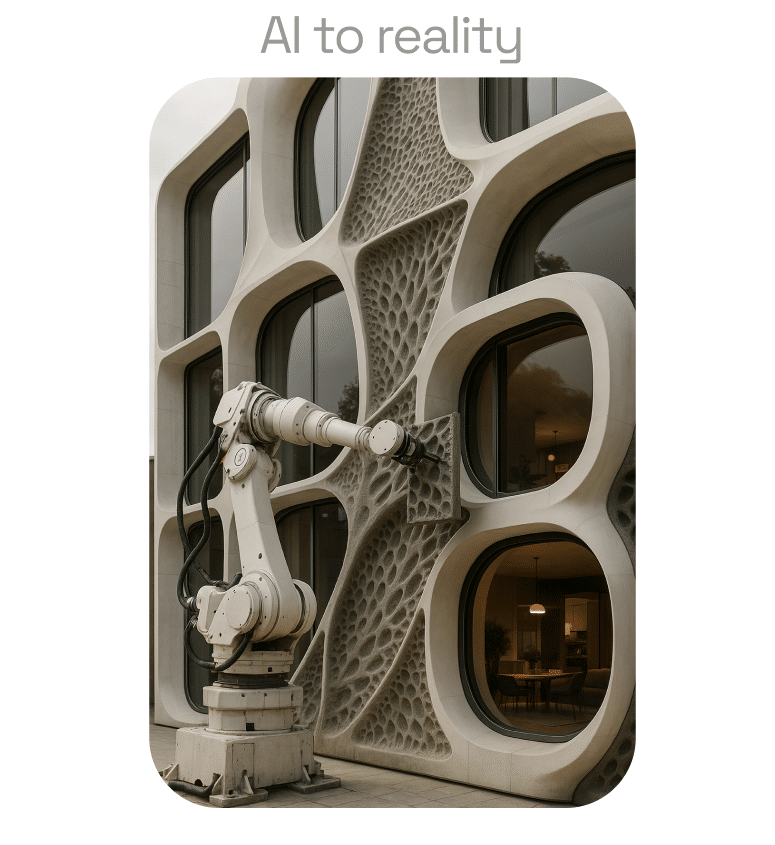

VISION

This research seeks to bridge the expressive capacity of generative AI with the precision of parametric and BIM design. The proposed pipeline interprets and segments AI-generated façades and reconstructs them as editable geometry that can be integrated into computational workflows. By connecting image-based generativity with parametric logic, the study aims to advance toward spatially intelligent AI systems capable of contributing directly to architectural design. The implementation in this research was tested in Revit to evaluate BIM interoperability.

HYPOTHESIS

The central hypothesis of this research is that generative AI outputs can be operationalized within architectural design workflows when coupled with segmentation and reconstruction methods. Through this integration, expressive but unstructured façade images may be transformed into structured, editable, and reusable design components. Such a pipeline would enable a hybrid practice in which AI-driven image generation is not an isolated creative tool but a catalyst for workflows that combine visual expressiveness with parametric precision and BIM.

This research is structured around these primary objectives:

- To investigate the influence of prompt structure on the legibility of AI-generated façades, determining how textual formulation affects architectural clarity and control in diffusion model outputs.

- To evaluate the accuracy of segmentation models, with particular focus on SAM, in identifying and classifying façade elements.

- To establish a workflow for transforming AI-generated façades into structured geometries through the reformulation of segmentation data into vector representations.

- To assess the usability of these reconstructed façades within parametric and BIM-based workflows, tested in Revit, examining their capacity for reuse, variation, and integration into new design scenarios.

STATE OF THE ART

2.1 GENERATIVE AI IN ARCHITECTURE

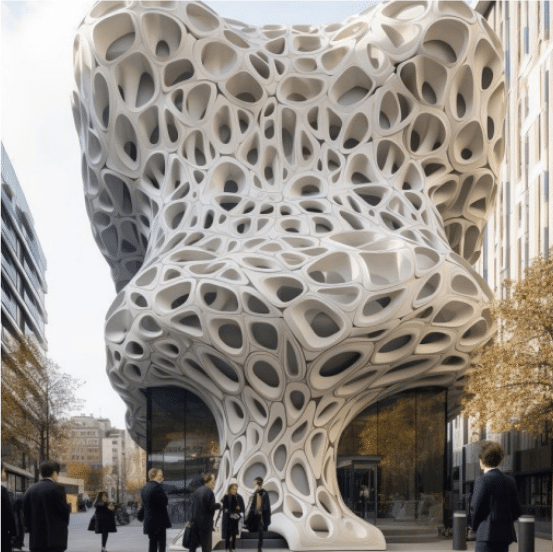

The emergence of diffusion-based generative models such as DALL·E, Stable Diffusion, and Stable Diffusion XL (SDXL) has significantly expanded the capacity to produce architectural imagery from text prompts. These systems enable the rapid generation of visually compelling façades that explore diverse stylistic and formal variations. Within architectural discourse, they have been applied to speculative design studies, visual storytelling, and conceptual explorations that highlight the expressive potential of machine learning in creative processes [1].

Despite this capacity for visual experimentation, the outputs of generative AI remain limited to raster images. They lack the spatial intelligence, semantic annotation, and geometric structure required for integration into parametric or construction-oriented workflows. Architectural elements such as windows, doors, and structural grids appear as stylistic textures rather than as defined components that can be manipulated or reused. As a result, the application of generative AI in architectural practice is constrained to image production, offering inspiration but not direct interoperability with computational design tools.

Recent research has begun to investigate ways of extending these models through fine-tuned datasets and custom latent representations, such as LoRA models trained on architectural typologies. These approaches improve stylistic consistency and domain-specific outputs but do not resolve the underlying limitation of geometry. Thus, while generative AI has introduced a new paradigm for architectural ideation, its current role is primarily aesthetic rather than operational, highlighting the necessity of hybrid workflows that connect visual generation with parametric logic [2].

Diffusion-based generative models like DALL·E and SDXL have expanded architectural image creation from text prompts, enabling rich visual exploration. However, their outputs are limited to raster images without geometric or semantic structure, making them unusable in parametric or construction workflows. Even with fine-tuning methods like LoRA, these models remain primarily aesthetic tools, highlighting the need for hybrid workflows that connect visual generation with spatial and computational design systems [2].

2.2 COMPUTER VISION AND SEGMENTATION

The current state of the art in computer vision and segmentation leverages advanced deep learning models to transform the analysis and documentation of architectural environments. In architecture, engineering, and construction (AEC), these methods facilitate the extraction of features such as windows, doors, façades, and urban patterns, enabling more structured, automated, and data-driven workflows [3].

Deep learning models—especially convolutional neural networks (CNNs) and their variants—have become foundational in architectural image segmentation.

- U-Net is widely used, originally in medical imaging, for its encoder-decoder structure and skip connections that recover fine details. It provides robust segmentation for architectural features when trained on sufficient domain-specific data [4].

- Mask R-CNN extends Faster R-CNN by generating pixel-wise masks for detected objects, excelling in instance segmentation tasks such as distinguishing different windows or façade components [4], [5].

- DeepLabV3+ integrates dilated convolutions and spatial pyramid pooling for multi-scale contextual information, empowering finer segmentation in complex urban scenes [5].

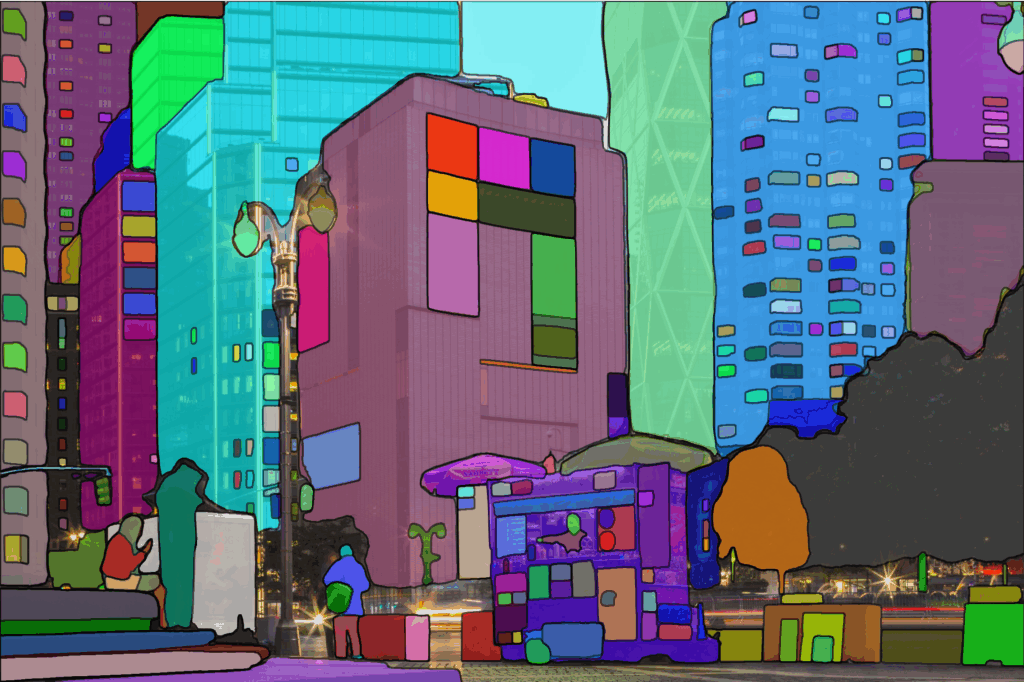

A major development was the introduction of the Segment Anything Model (SAM) in 2023, designed to provide zero-shot segmentation on a massive variety of images using the SA-1B Dataset, which contains over 1 billion masks from 11 million images. SAM can produce segmentation masks for arbitrary prompts—without retraining—making it especially attractive for architectural tasks where annotated data is often scarce. For instance, it can segment façade patterns, windows, and other architectural elements in real time and with minimal supervision. However, its performance is occasionally hampered by the lack of architectural-specific training, resulting in common errors such as confusing shadows or reflections with actual building features [6].

These models are used for automated mapping of urban environments, façade documentation, and the parametric reconstruction of buildings from imagery. U-Net and Mask R-CNN have shown success in urban rooftop extraction and detection of architectural elements, while SAM brings new flexibility to segmentation workflows [7]. Effective use in architectural contexts demands large, well-annotated datasets, which is still a bottleneck. SAM reduces this need but is susceptible to domain-irrelevant errors and requires post-processing for accurate architectural geometry generation [8].

Deep learning models have been employed in aerial image analysis for automatic detection of building rooftops [7]. Segmentation algorithms are used to extract windows, doors, and ornamentation from street-level architectural imagery for documentation or simulation [2]. Segmentation output (e.g., from SAM or Mask R-CNN) can be translated into structured formats like SVG masks for downstream parametric modeling in design software [9].

GroundingDINO stands out as a top choice for facade segmentation because of its strong zero-shot detection capability, ability to leverage both image and text inputs, and flexibility when combined with segmentation models like SAM [10].

Core advantages of using the model include:

- Zero-Shot Segmentation: GroundingDINO enables segmentation without retraining for new façade types, using descriptive text prompts (e.g., “windows,” “balconies”) to guide object detection, which accommodates the variability of architectural patterns in urban scenes [11].

- Open-Set Recognition: Its transformer-based dual-encoder architecture fuses image and language features, allowing the model to detect a wide range of objects whether previously seen or unseen. This is critical for façades, which often feature unique or irregular elements not present in training data [12].

- Text-Guided Segmentation: Compared to traditional models like Mask R-CNN, GroundingDINO can be precisely directed to segment specific architectural features with simple textual prompts, enhancing accuracy and usability for design documentation and analysis tasks [13].

GroundingDINO detects elements on the facade by pairing the image with relevant text prompts and outputs bounding boxes with associated labels. These detection results are refined into pixel-accurate segmentation masks when integrated with the Segment Anything Model (SAM), resulting in high-quality masks even for novel or rare architectural features [14].

GroundingDINO+SAM pipelines outperform classic models in zero-shot segmentation benchmarks, achieving strong results without extensive façade-specific annotations [15]. The method is highly scalable, making it suited to both batch processing of large urban datasets and detailed analysis of individual buildings [16]. GroundingDINO’s ability to combine language-guided detection with advanced segmentation makes it ideally suited for the challenges of facade segmentation in the architecture domain.

2.3 PARAMETRIC WORKFLOWS AND HYBRID APPROACH

Parametric design has become a methodological foundation in contemporary architecture, enabling designers to encode geometric relationships, functional rules, and contextual dependencies through algorithmic logic. Platforms like Grasshopper for Rhino and BIM environments such as Revit facilitate the creation of adaptive and editable geometries, supporting rapid design responses to changing requirements, environmental conditions, and site-specific constraints. This versatility is central to multi-objective optimization, allowing practitioners to balance competing goals in academic and professional contexts [17].

Recent studies highlight that parametric workflows enable dynamic adjustments for sustainability, microclimate control, and social scenario modeling. They show how parametric models are used to optimize spatial layouts for daylight, ventilation, and energy efficiency, as well as to integrate transformable façades and interior systems, resulting in more resilient architectural solutions. For example, the G.A.R.D.E.N. framework structures the parametric process into iterative steps: Generate, Analyze, Represent, Develop, Evaluate, and Normalize. This methodology provides designers with the tools to address complex optimization problems and assess spatial performance in real time [18].

Hybrid workflows connecting generative AI and parametric modeling are emerging, as demonstrated by their integration with BIM tools, which makes it possible to import image-generated concepts and develop them further within precise parametric frameworks. However, studies show that geometry extracted from AI-generated images often requires semantic annotation and manual refinement before full integration into design systems, highlighting ongoing challenges with interoperability.

Iconic projects like the Heydar Aliyev Center by Zaha Hadid Architects and the Al Bahar Towers in Abu Dhabi exemplify the use of parametric thinking for merging cultural narratives, dynamic geometries, and climate-responsive design. The study extends these principles to landscape architecture, showing how parametric methods can be linked with GIS data for large-scale urban modeling and adaptive planning [19].

In summary, contemporary parametric workflows, supported by ongoing research, are driving a transition from fixed forms to adaptable systems, offering designers the capacity to integrate data-driven automation and creative exploration within resilient, sustainable architectural practices. Based on this, we developed our draft concept.

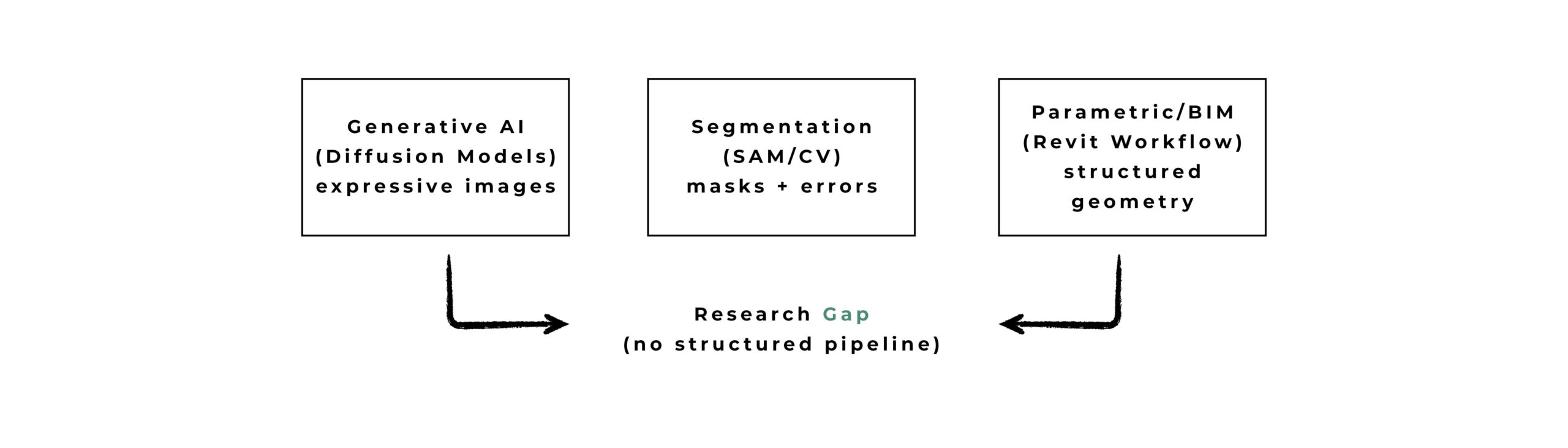

2.4 RESEARCH GAP AND CONTRIBUTION

Despite significant advances in generative AI, computer vision, and parametric design, architectural workflows remain fragmented. Diffusion-based models such as those examined during these studies [21], [22] generate visually expressive façades but lack embedded semantic structure and operable geometry, limiting their direct interoperability with parametric or BIM environments. Segmentation approaches, including large-scale models like SAM and specialized systems such as Segment Any Architectural Facades (SAAF) and NeSF-Net, demonstrate improved façade parsing by leveraging multimodal guidance and generative AI prompting, but still require refinement for accurate translation into structured formats compatible with design tools [23], [24], [25].

BIM platforms demand geometries with rich annotation, hierarchical organization, and explicit component definition—requirements that current AI outputs rarely satisfy out of the box. Research shows persistent interoperability challenges, including the need for manual cleanup and semantic classification of segmented features to comply with BIM standards and downstream construction workflows [26]. Recent reviews and experiments underscore how the absence of a unified pipeline impedes the adoption of AI-powered generative and segmentation tools in real architectural practice [27].

This study addresses the gap by developing and testing a pipeline that bridges generative AI image creation, advanced segmentation workflows, and parametric/BIM reconstruction. By investigating prompt design for façade generation, evaluating segmentation performance on urban imagery, and converting segmentation masks into editable geometries suitable for parametric modeling and BIM systems, the research demonstrates how unstructured outputs from AI can be systematically operationalized for design practice [24], [25], [28].

This contribution proposes an integrative solution, moving beyond inspirational raster outputs and isolated segmentation toward a streamlined, semi-automated method for generating, interpreting, and deploying façade geometries within computational design and construction contexts. The following chapter details the methodology, platform architecture, and prototyping—covering experiments in Google Colab, interface development, and BIM integration—that underpin this investigation.

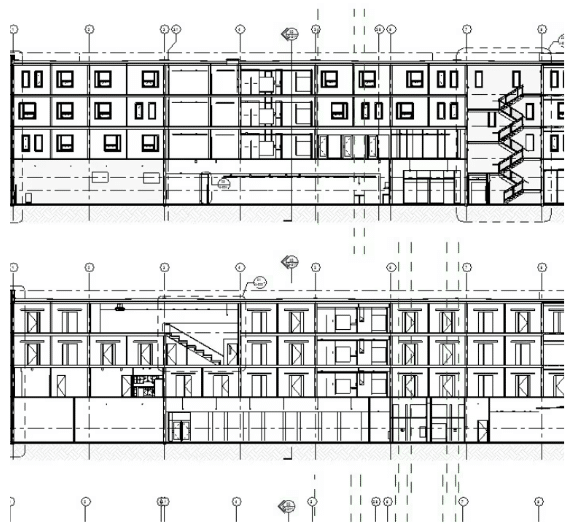


METHODOLOGY & PIPELINE

3.1 WORKFLOW OVERVIEW

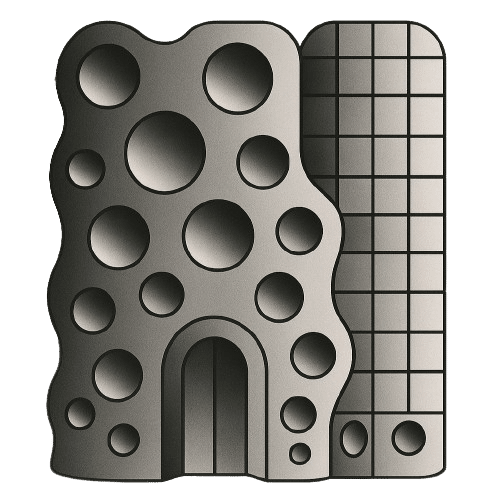

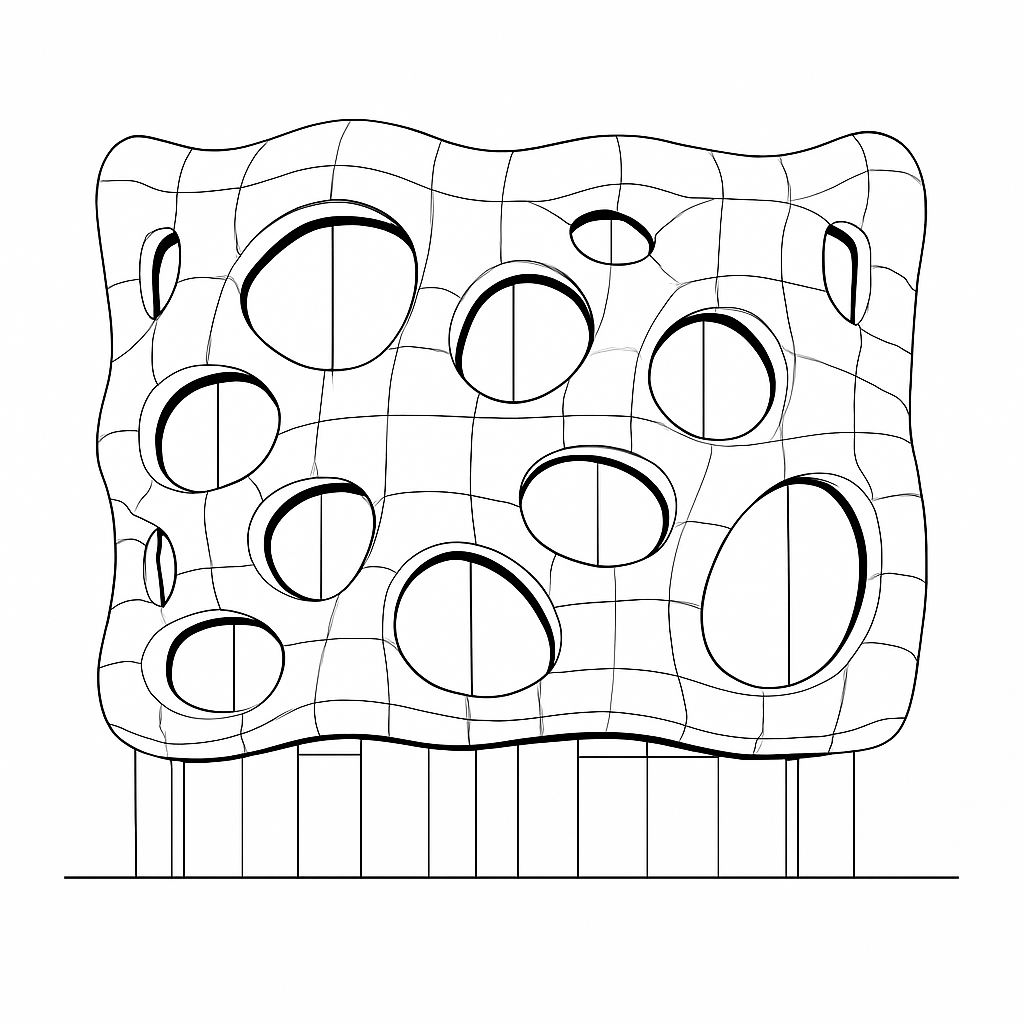

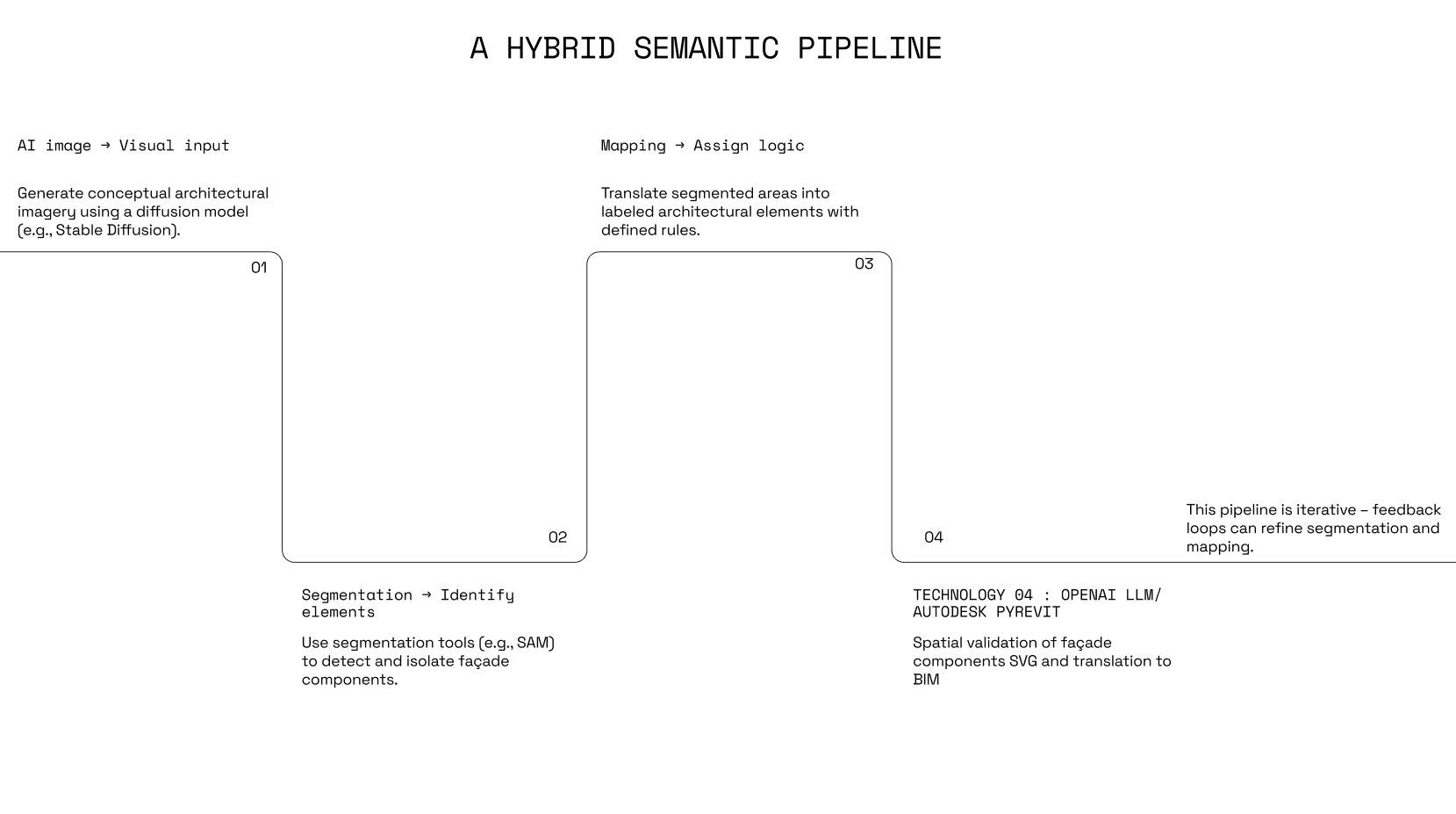

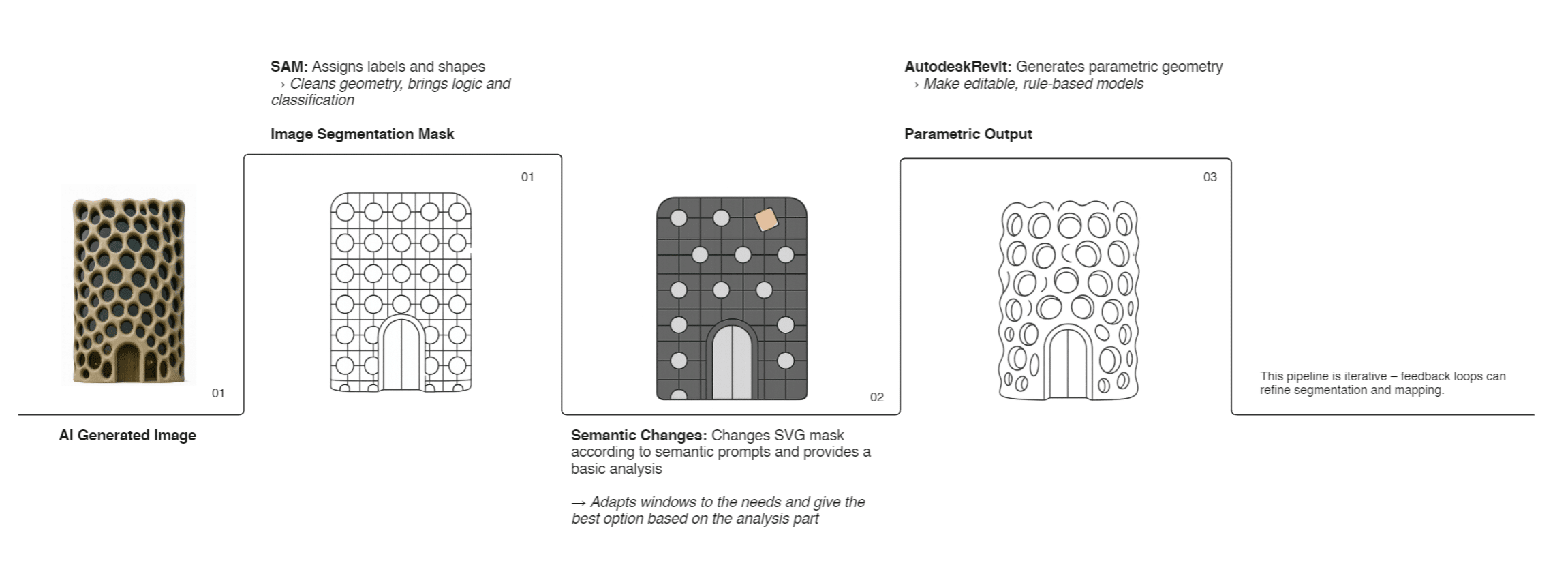

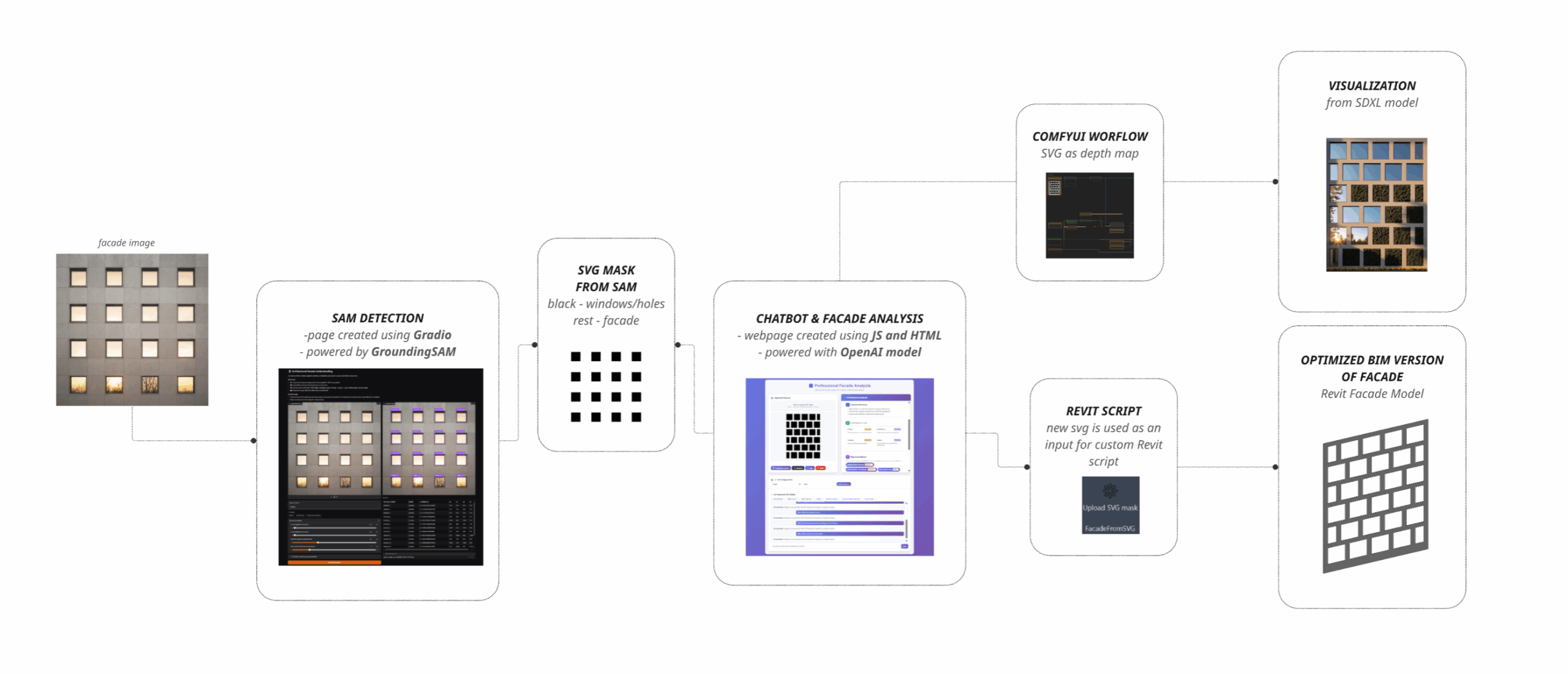

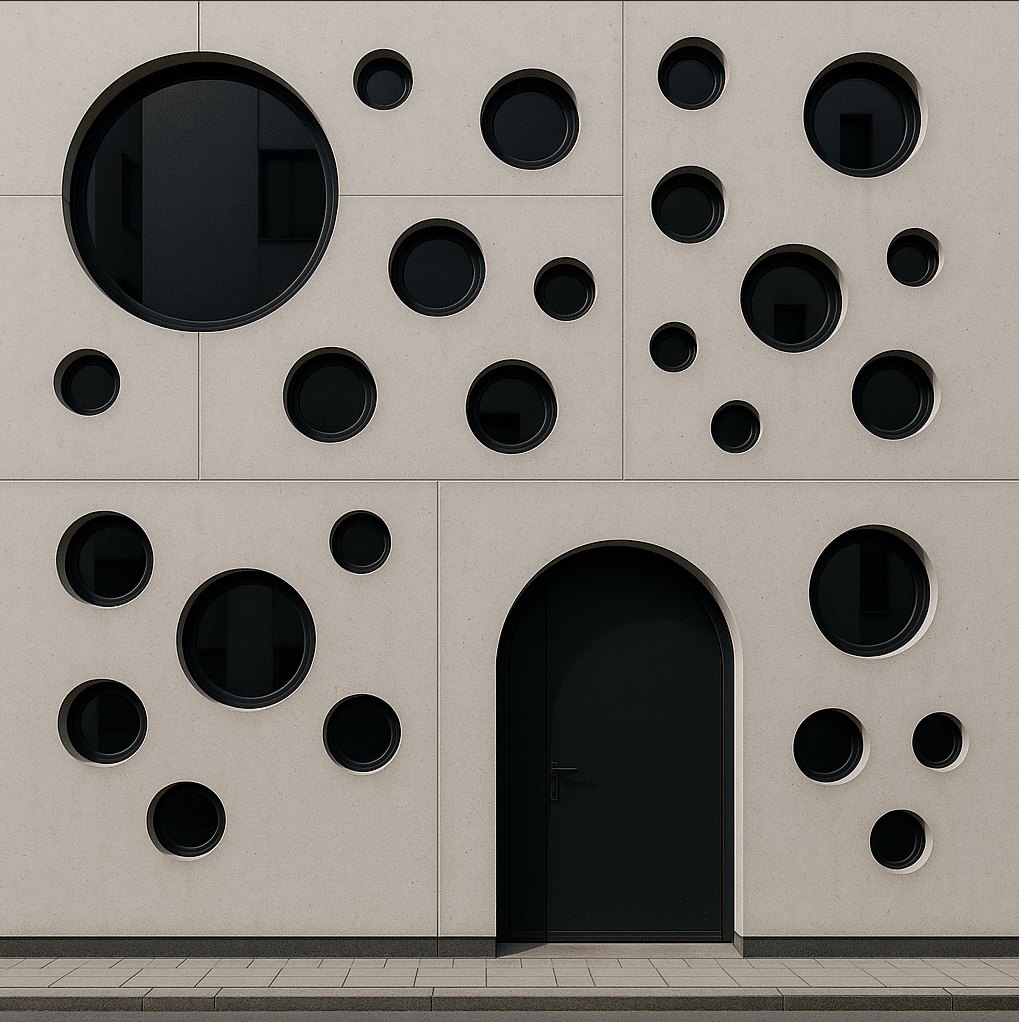

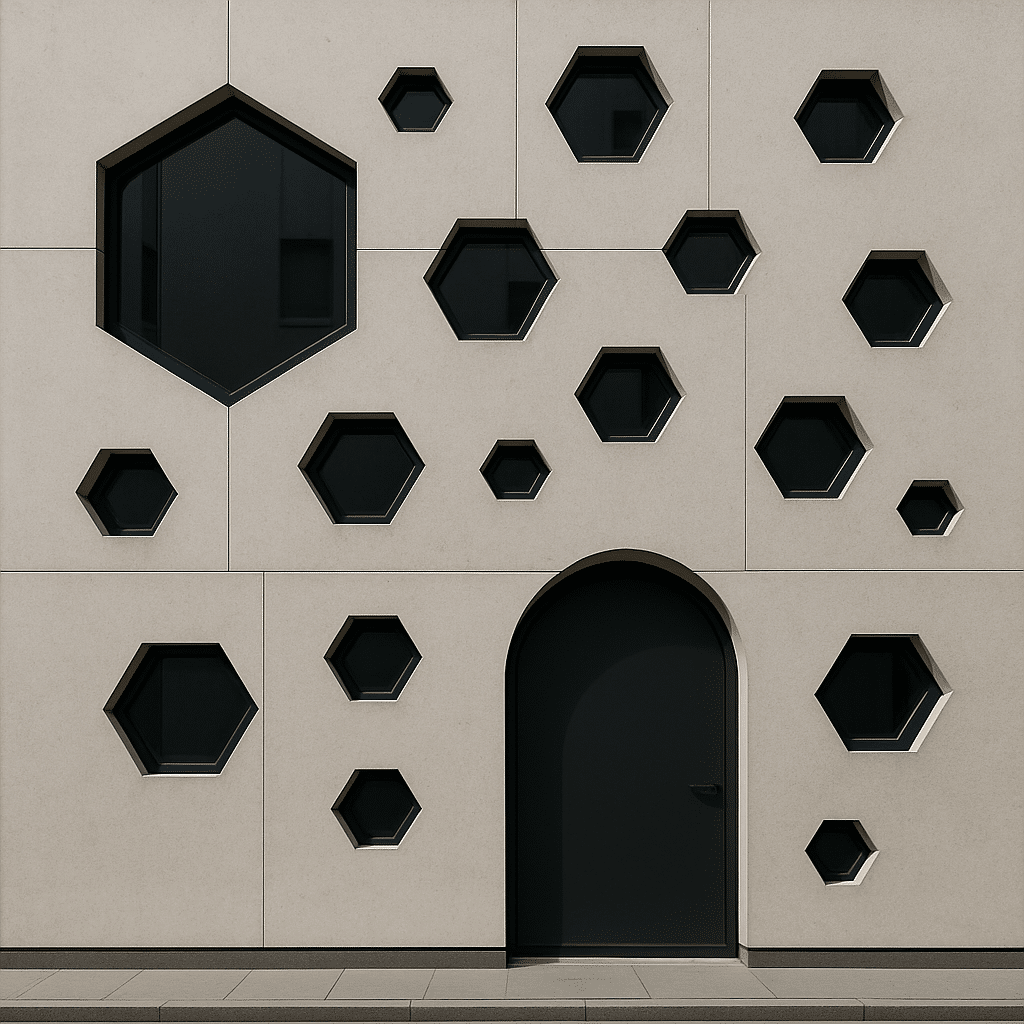

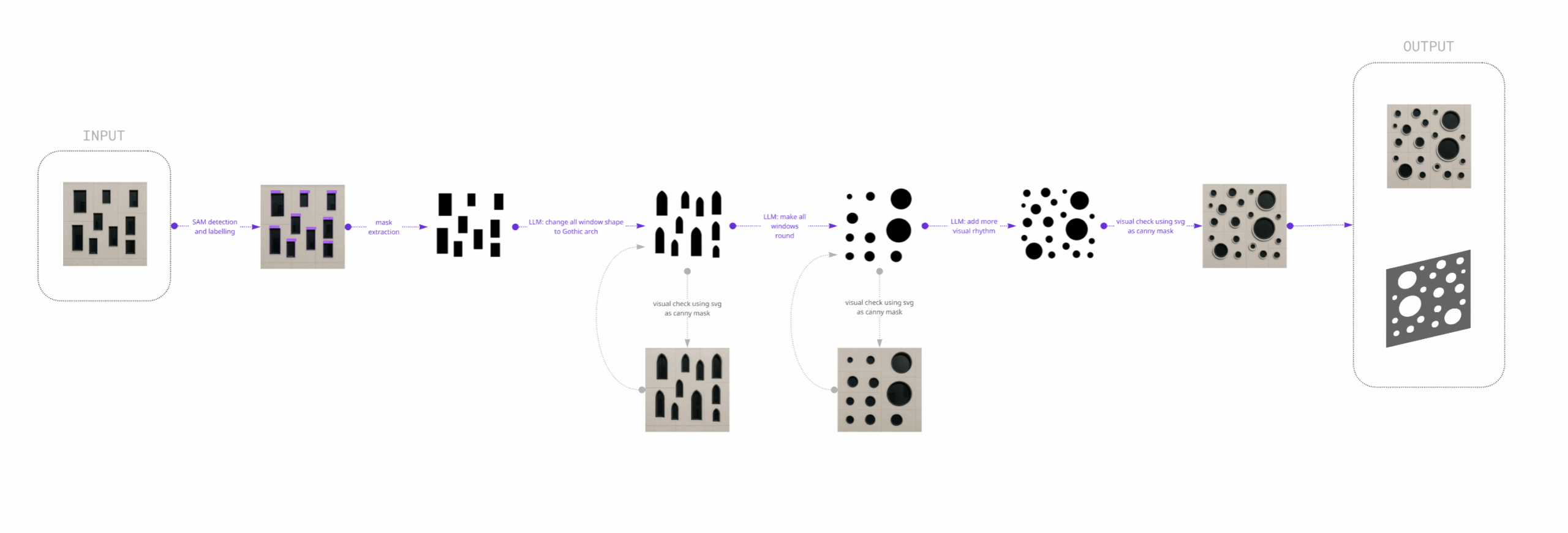

Our pipeline enables the transformation of AI-generated architectural concepts into fully parametric models. The process begins with a raw AI-generated image of a building façade, often featuring complex geometries such as circular openings. This image is processed using the Segment Anything Model (SAM), which detects and segments geometric features, assigning logical classifications and cleaning up the output for further use.

Next, the segmentation results—output as an SVG mask—are further refined through semantic adaptation. Guided by user prompts and analytical feedback, elements such as windows are modified to better satisfy functional and performance criteria. This cleaned, semantically enriched SVG is then imported into Autodesk Revit, where it becomes an editable, rule-based parametric model. The workflow is inherently iterative, supporting continuous feedback and refinement between segmentation and parametric mapping, and ensuring a seamless transition from creative AI outputs to practical, editable architectural models.

Technically, the pipeline utilizes SAM detection powered by GroundingSAM via a Gradio interface to identify façade elements—creating SVG masks where window openings are differentiated from structural surfaces. A chatbot interface, built using JavaScript, HTML, and OpenAI models, provides real-time analysis and interactive geometry editing based on analytical results and user instructions.

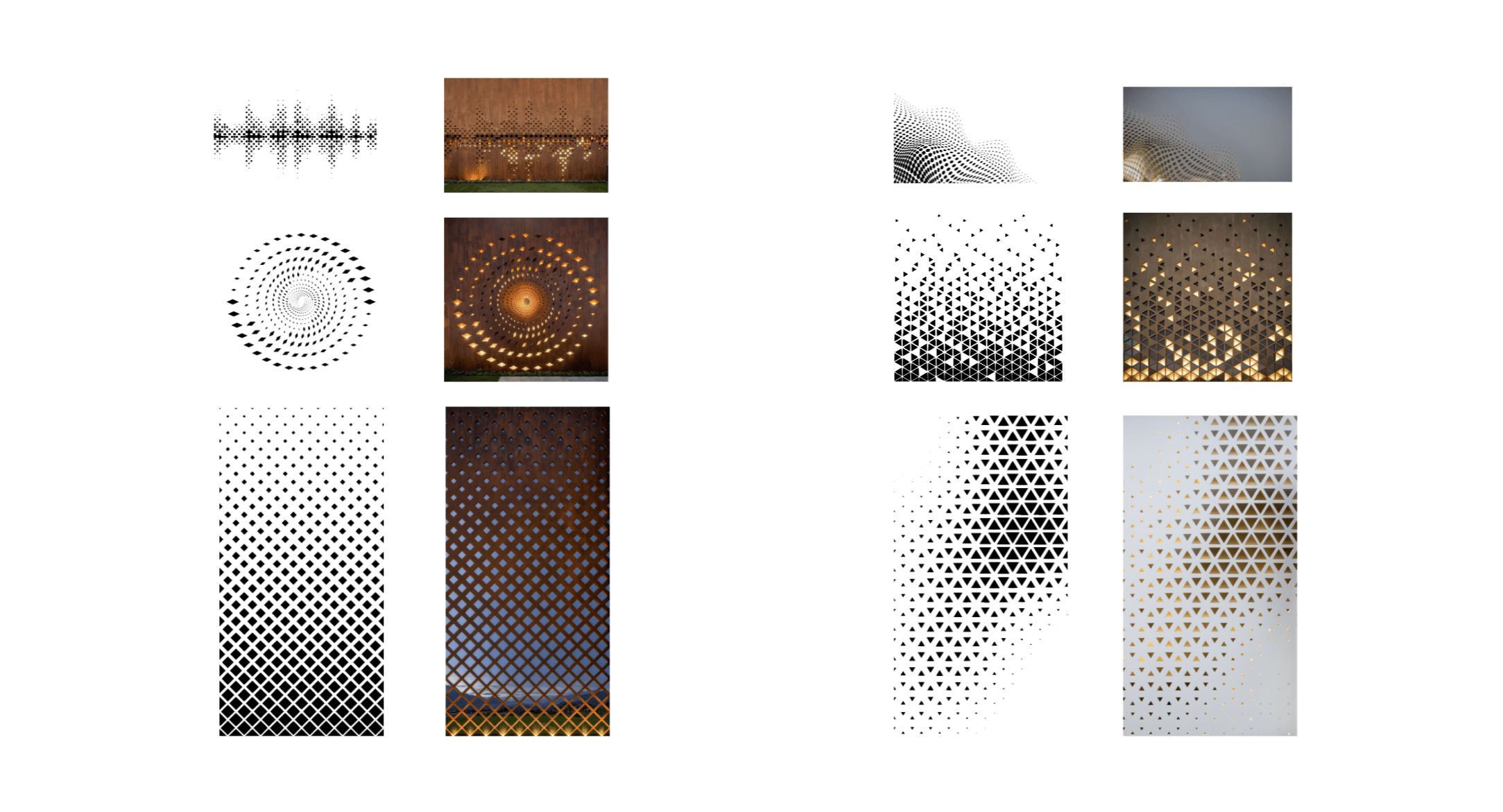

For visualization, the SVG can be uploaded into a ComfyUI workflow and used as a depth map to produce realistic renderings of the façade—complete with materials and lighting—by leveraging SDXL. The same SVG also serves as input for a custom Revit script, generating a parametric, BIM-compatible façade within the application. Here, professional tools further analyze and optimize the BIM model, ensuring the geometry remains editable and rule-driven.

As a result, a single image of a building façade can be automatically processed, segmented, analyzed, and converted into both a photorealistic visualization and a BIM-ready parametric model—effectively bridging generative AI, automated analysis, and computational design.

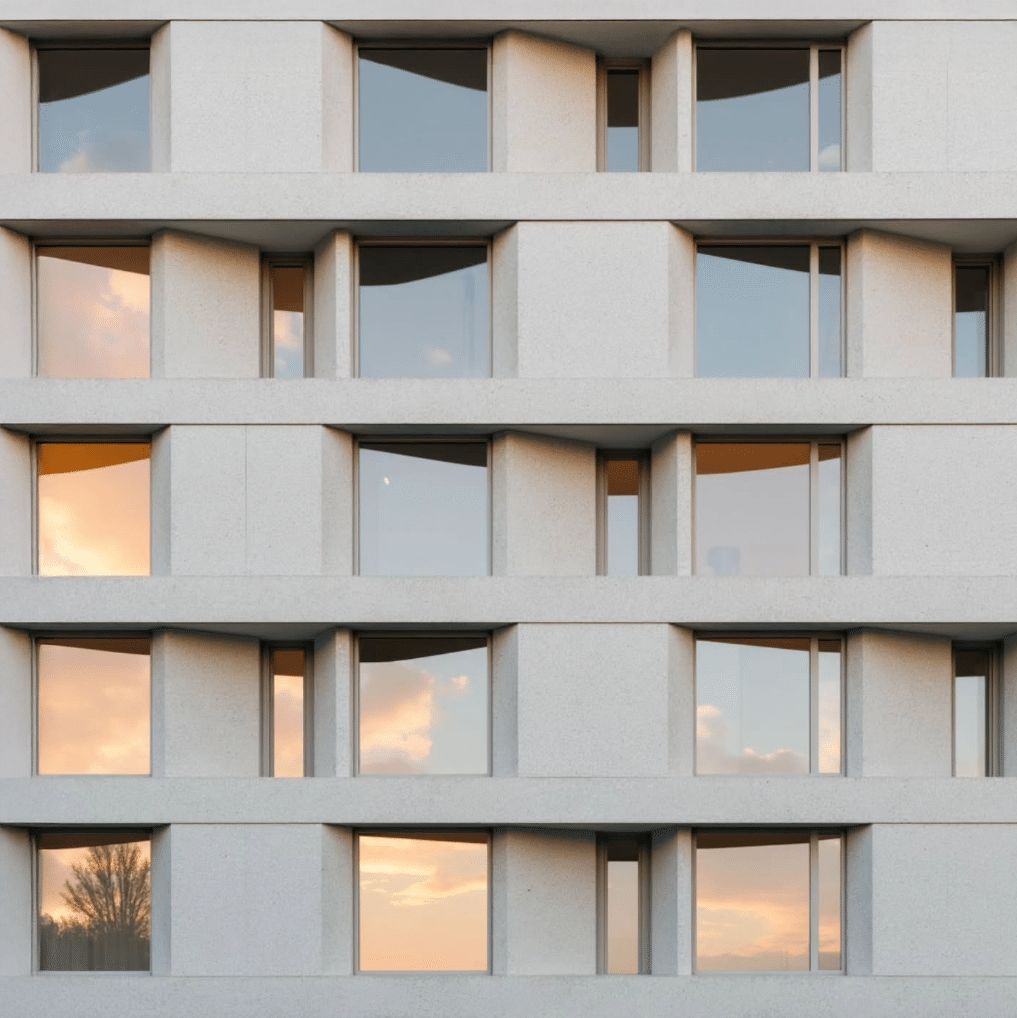

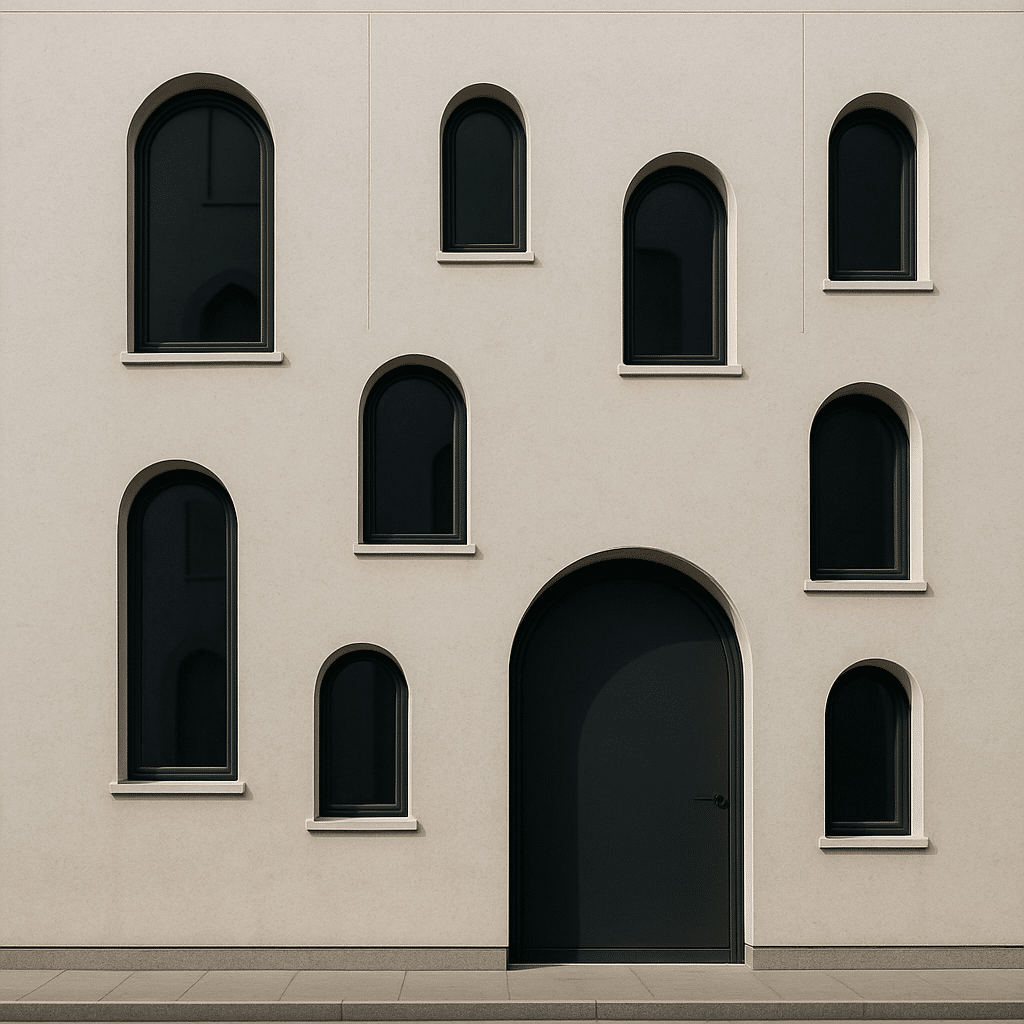

3.2 GENERATIVE AI EXPERIMENTS

The first stage of the methodology focuses on the generation of façade images using diffusion-based models. Recent advances in text-to-image synthesis, particularly Stable Diffusion XL (SDXL) and DALL·E, provide the capacity to produce architectural imagery directly from natural language prompts. Within this research, these models were accessed through Colab environments, enabling controlled experimentation with different prompt formulations.

Three categories of prompts were tested: grammar-based prompts, universal prompts, and free prompts. Grammar-based prompts employed structured, rule-like formulations intended to constrain the model toward architectural clarity. Universal prompts used more general descriptions, relying on the model’s internal biases to define the output. Free prompts emphasized stylistic freedom, prioritizing diversity of imagery over legibility. By comparing these approaches, the experiments examined how textual structure affects the architectural readability of the resulting façades.
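To illustrate what a grammar-based prompt could look like in practice, the following minimal Python sketch assembles a prompt from explicit slots in a fixed order. The slot names (`floors`, `bays`, `window_shape`, `material`) and the wording of the clauses are illustrative assumptions, not the prompts used in the experiments.

```python
from dataclasses import dataclass


@dataclass
class FacadeGrammar:
    """Hypothetical slots for a rule-like facade prompt (illustrative only)."""
    floors: int
    bays: int
    window_shape: str
    material: str


def build_prompt(g: FacadeGrammar) -> str:
    # A fixed clause order keeps every generated prompt structurally comparable.
    clauses = [
        "flat orthographic front elevation of a building facade",
        f"{g.floors} floors",
        f"{g.bays} window bays per floor",
        f"{g.window_shape} windows on a regular grid",
        f"{g.material} wall surface",
        "no perspective, no people, no vegetation",
    ]
    return ", ".join(clauses)


prompt = build_prompt(
    FacadeGrammar(floors=8, bays=6, window_shape="round", material="white concrete")
)
print(prompt)
```

Because only the slot values change between runs, differences in the generated façades can be attributed to specific parameters rather than to incidental changes in wording.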

Outputs from this stage consist of AI-generated façade images that vary in their degree of clarity, consistency, and architectural coherence. These results serve both as a dataset for subsequent segmentation testing and as evidence for assessing the role of language in shaping generative outcomes.

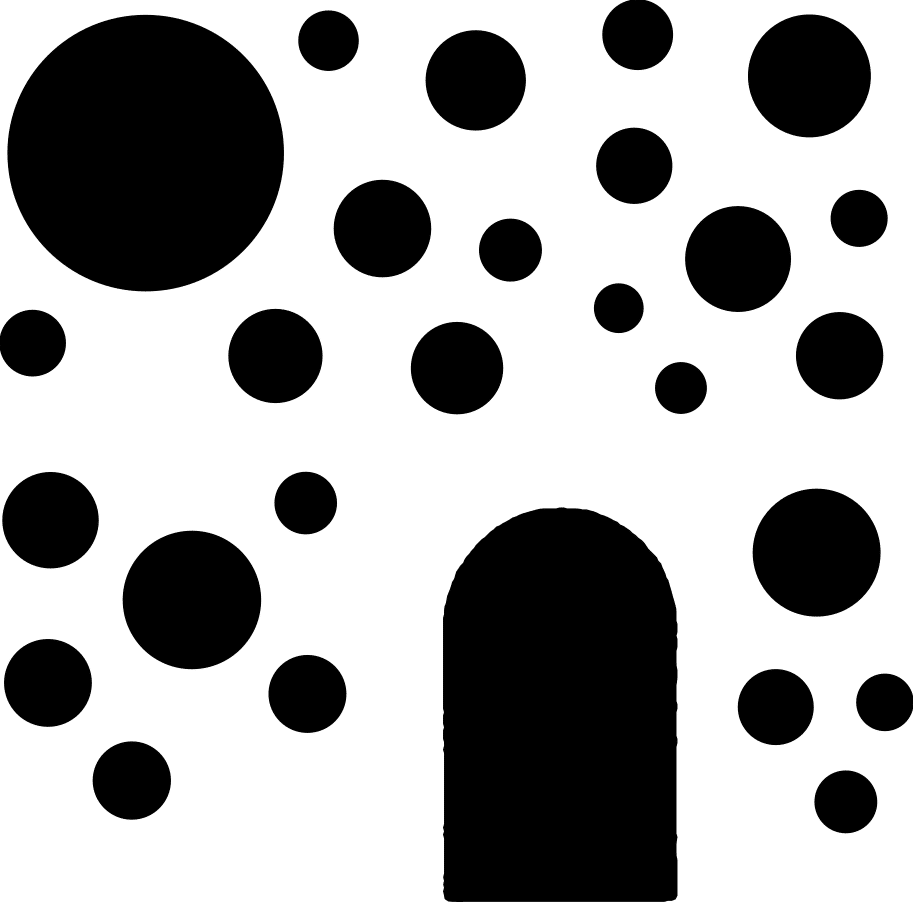

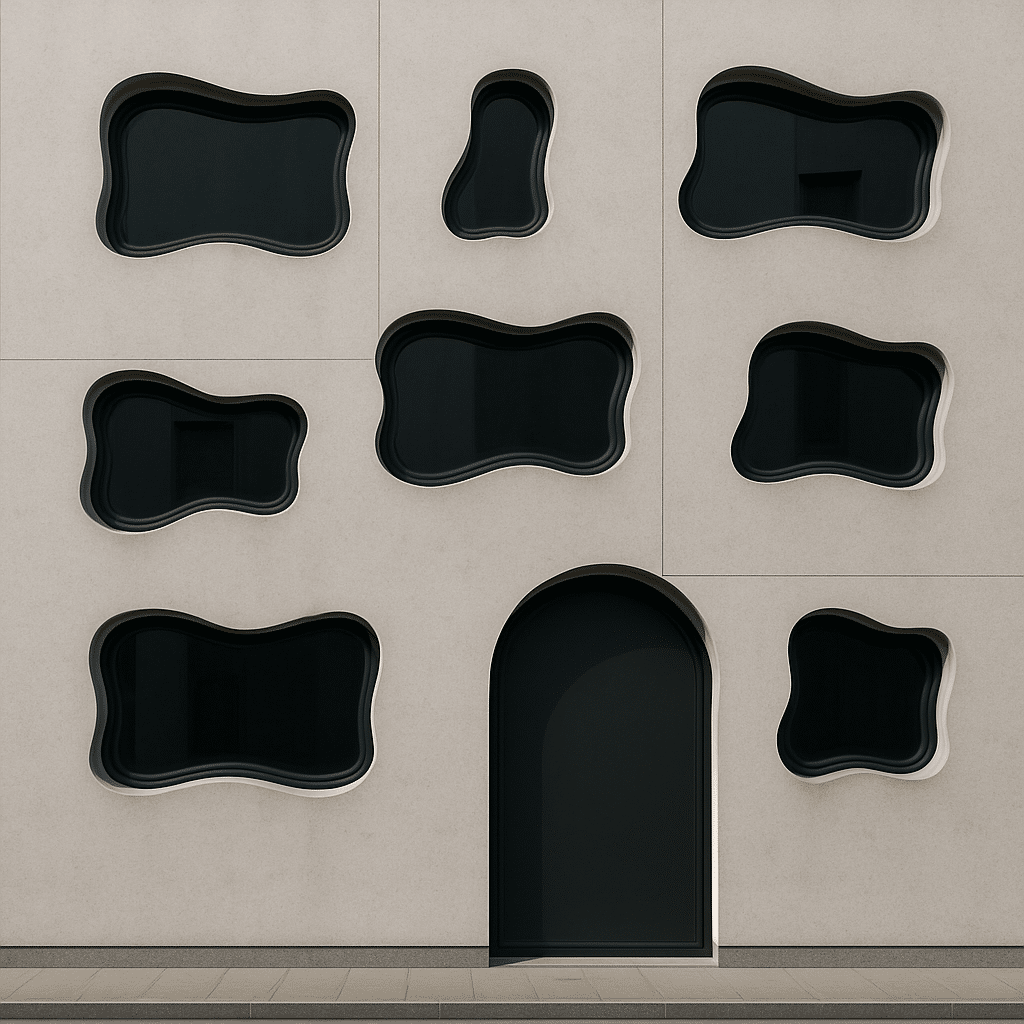

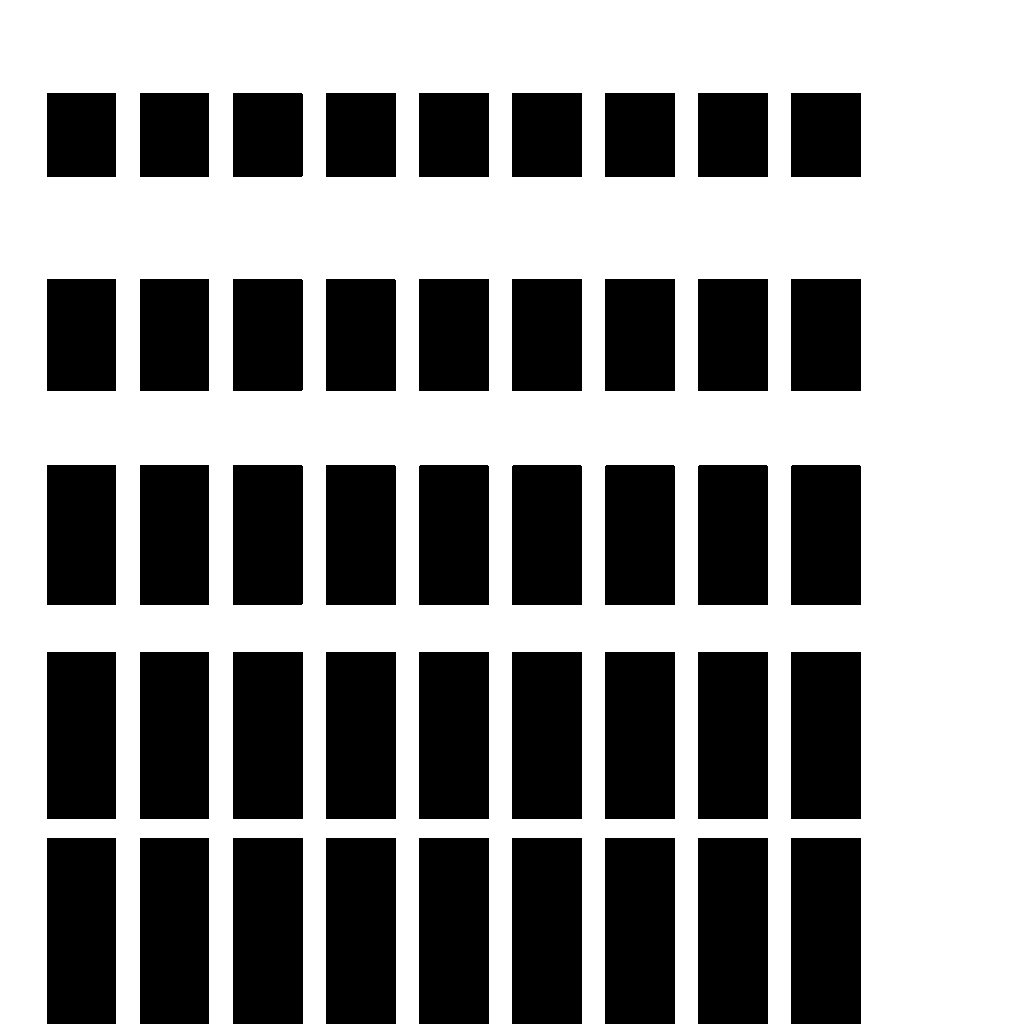

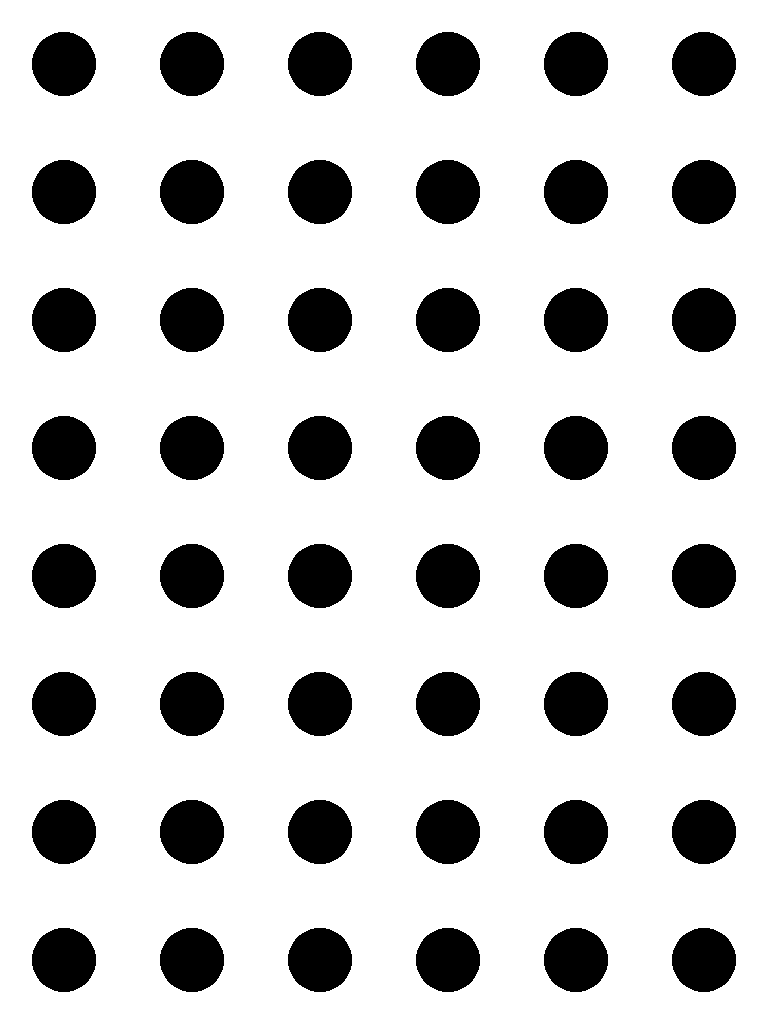

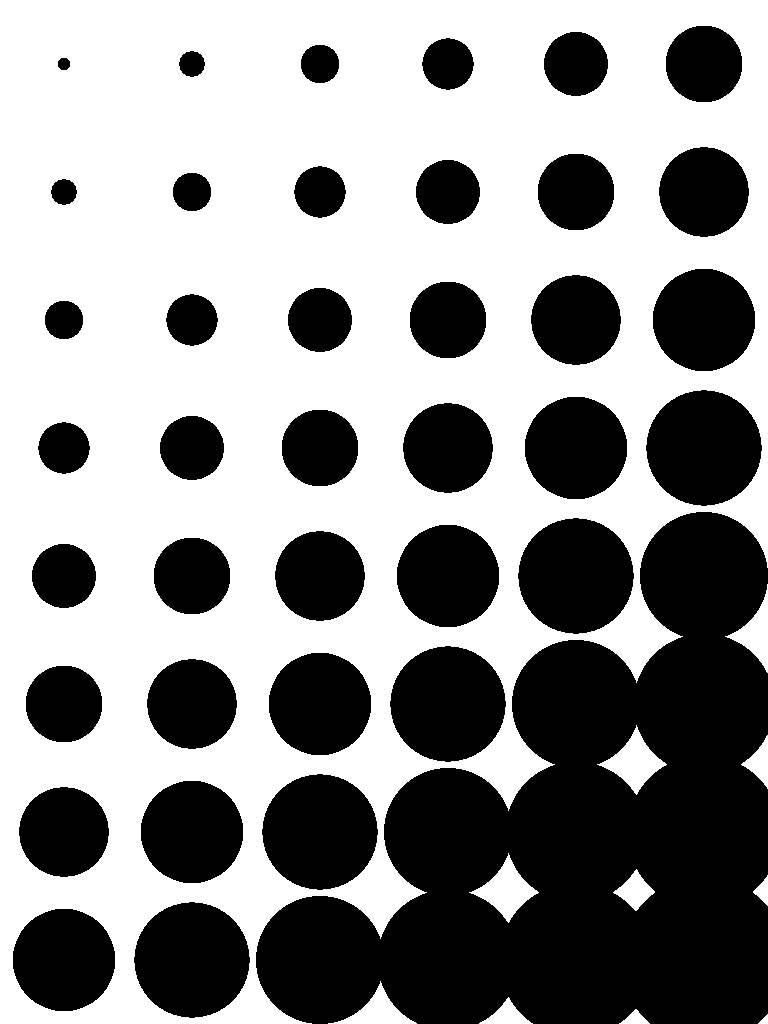

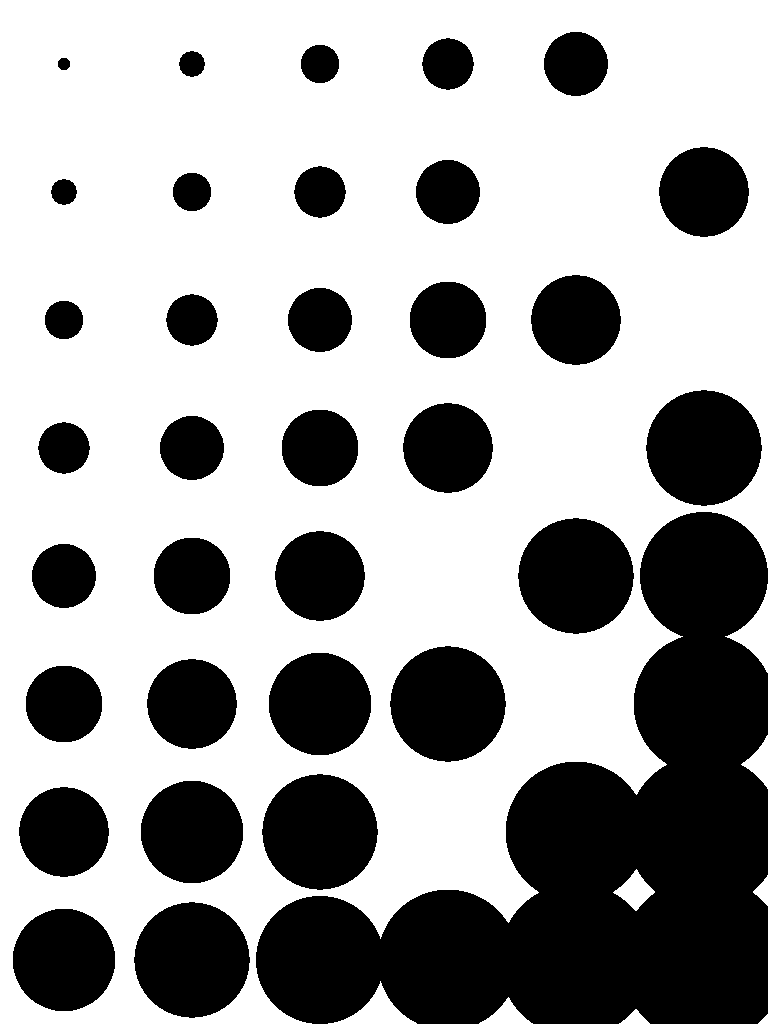

EXPERIMENTS WITH DIFFERENT FACADES

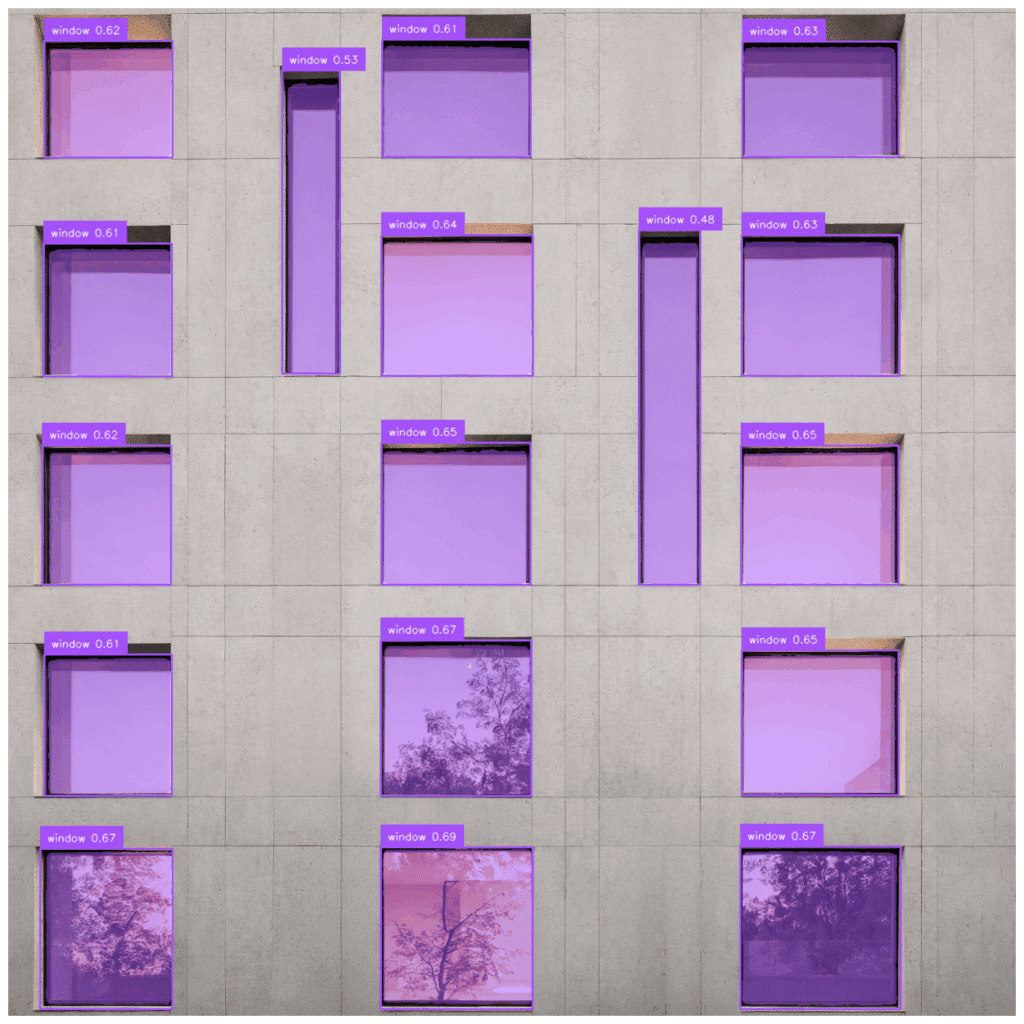

Input image

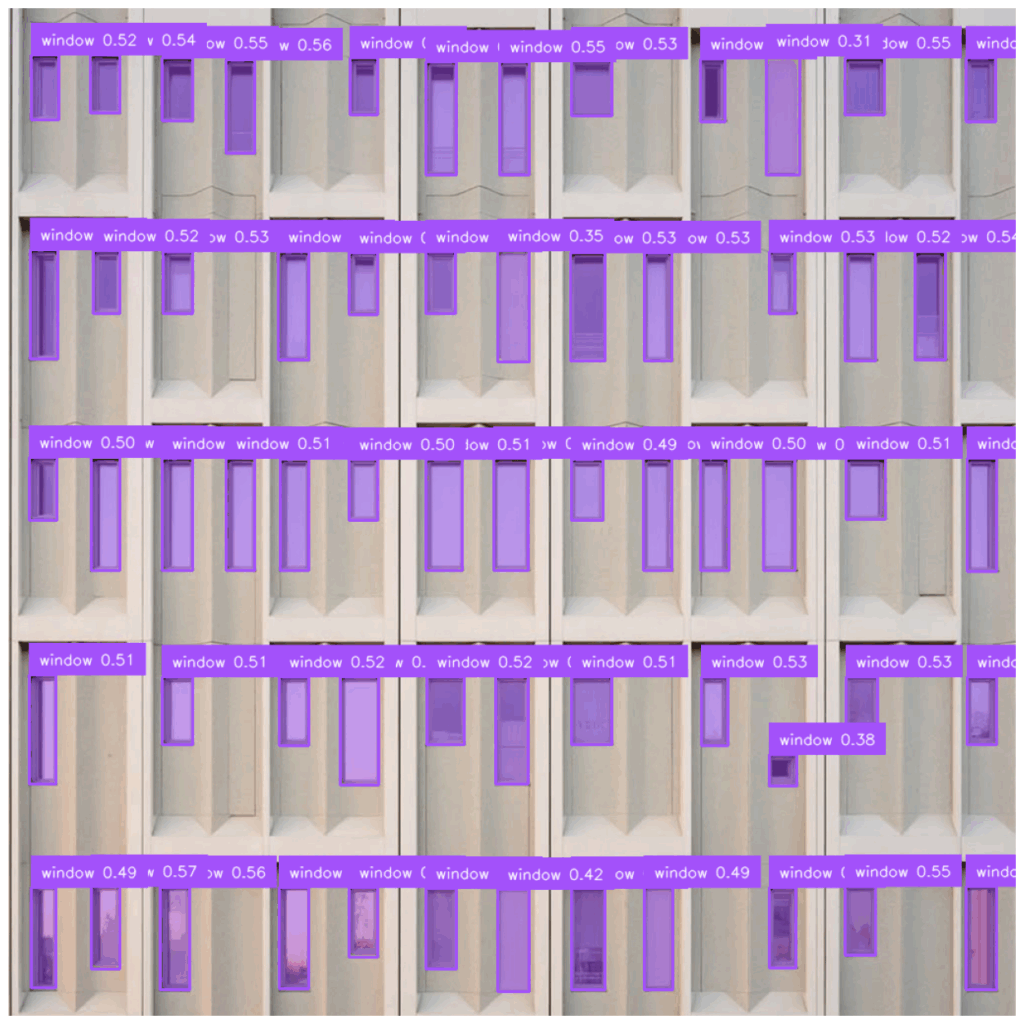

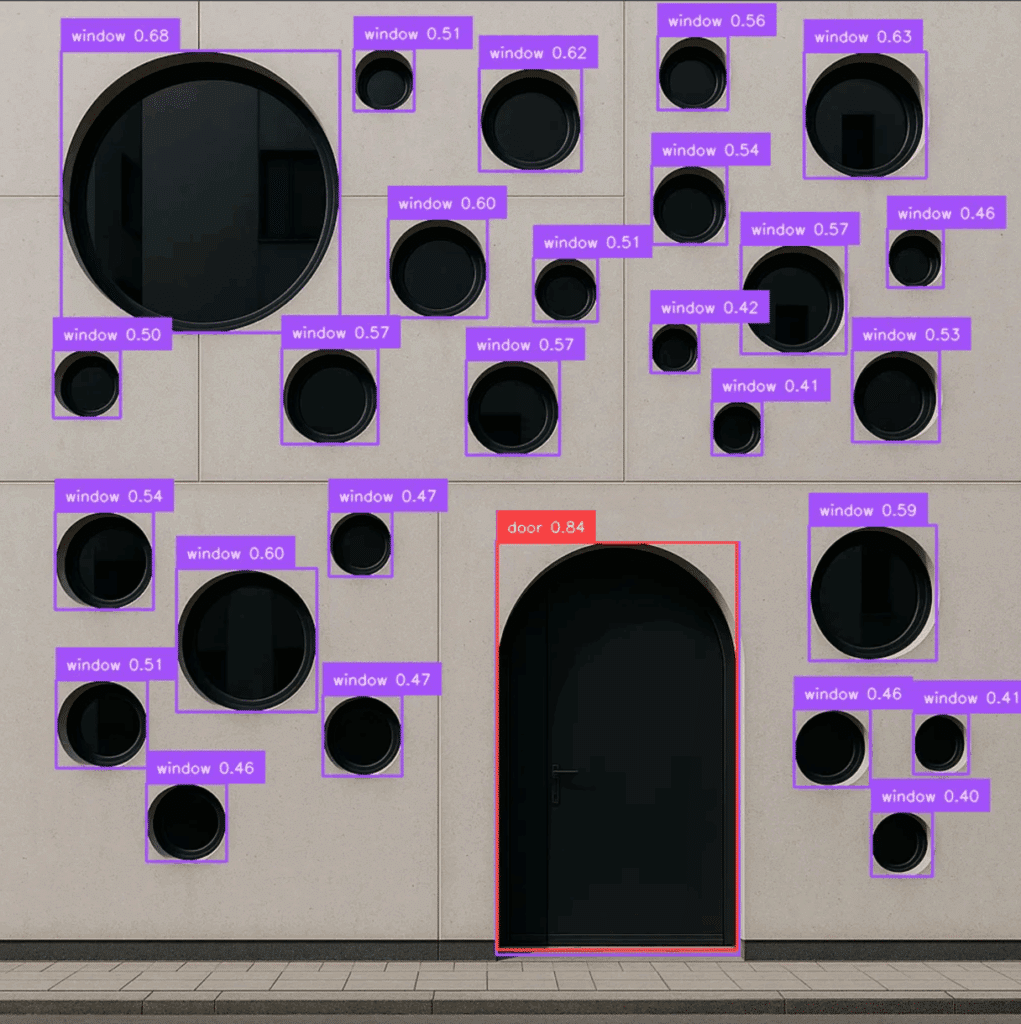

Detections

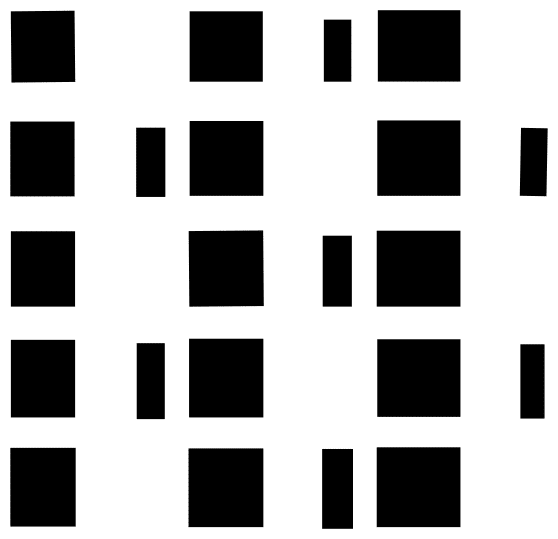

Cleared mask

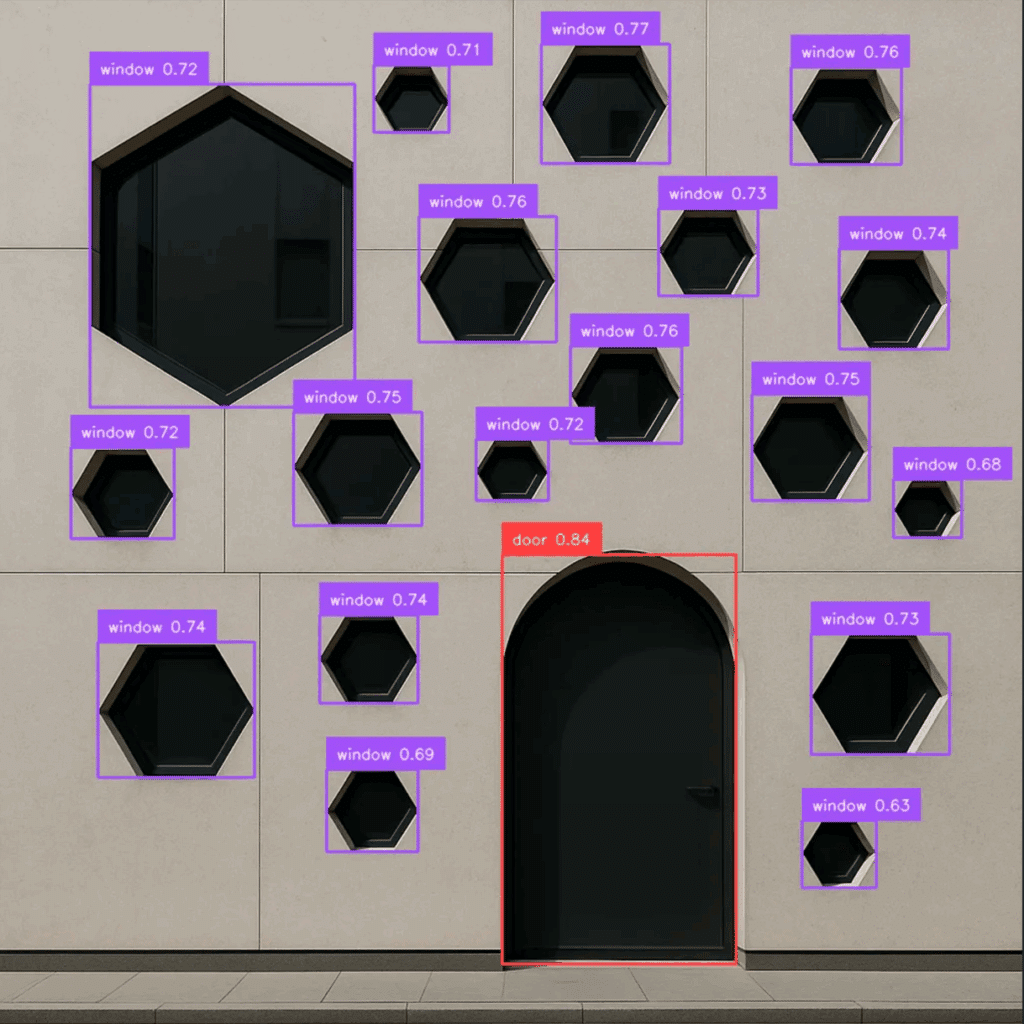

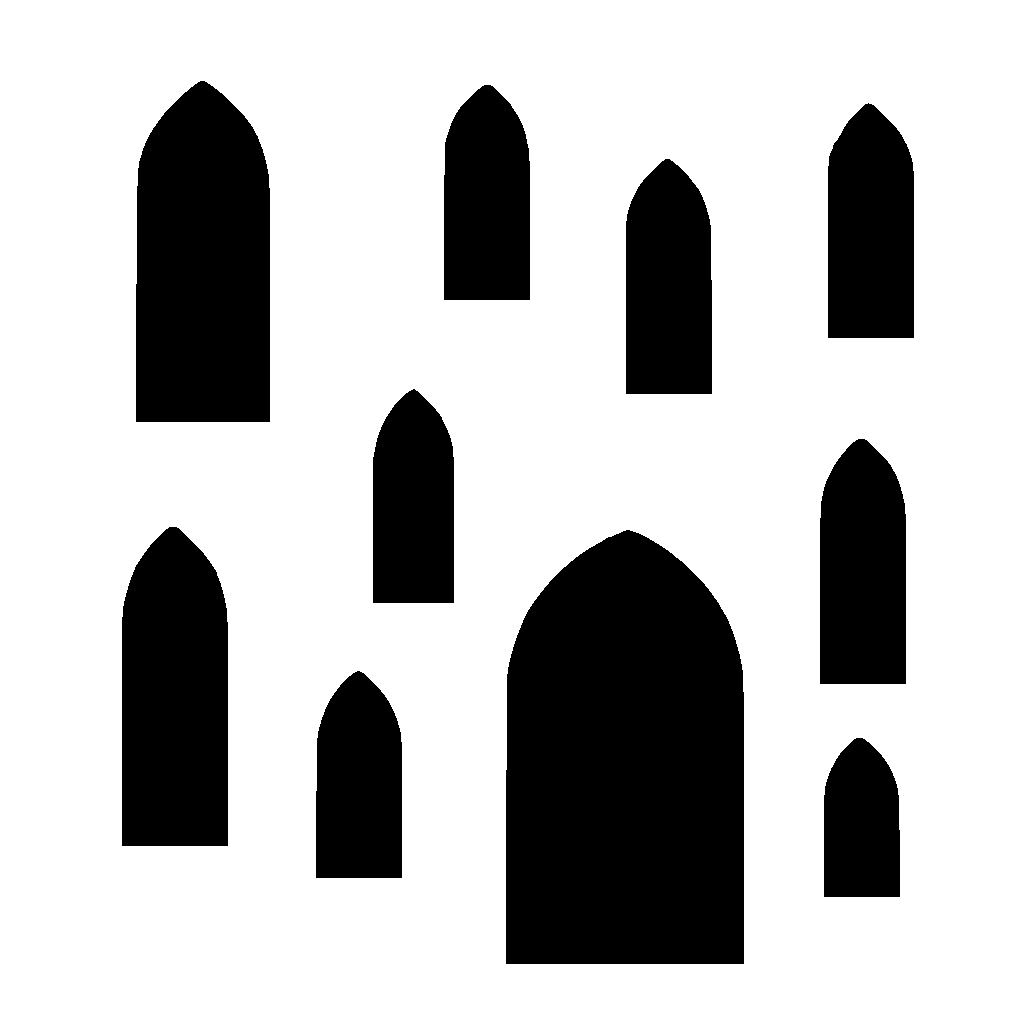

3.3 SEGMENTATION AND MASKING

In the second stage, AI-generated façades were processed with the Segment Anything Model (SAM) in Colab to produce pixel-level segmentation masks of components such as windows, arches, and surface divisions. While SAM effectively detected some architectural features, it also misclassified shadows, reflections, and ornamentation as structural elements, revealing limitations in applying general-purpose models to architectural imagery. Chosen for its domain generalization and ability to generate masks without retraining, SAM offered a practical yet imperfect tool for testing computer vision in design contexts.
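One possible way to reduce spurious regions of this kind is to discard connected mask regions below a minimum pixel area. The sketch below is not the cleanup used in this study. It assumes that shadow and reflection fragments tend to be smaller than real openings, and both the `min_area` threshold and the nested-list mask format are hypothetical.

```python
from collections import deque


def remove_small_regions(mask, min_area):
    """Return a copy of a binary mask (list of lists of 0/1) without
    4-connected foreground regions smaller than min_area pixels."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if mask[y][x] != 1 or seen[y][x]:
                continue
            # Breadth-first flood fill collects one connected region.
            region, queue = [], deque([(y, x)])
            seen[y][x] = True
            while queue:
                cy, cx = queue.popleft()
                region.append((cy, cx))
                for ny, nx in ((cy + 1, cx), (cy - 1, cx), (cy, cx + 1), (cy, cx - 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] == 1 and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            # Keep the region only if it is large enough.
            if len(region) >= min_area:
                for ry, rx in region:
                    out[ry][rx] = 1
    return out


mask = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 0, 1],
    [0, 0, 0, 0, 0],
]
cleaned = remove_small_regions(mask, min_area=3)
```

In this toy mask the 2×2 block survives while the isolated pixel is dropped. A size filter alone cannot separate large shadows from real openings, so manual review would still be needed.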

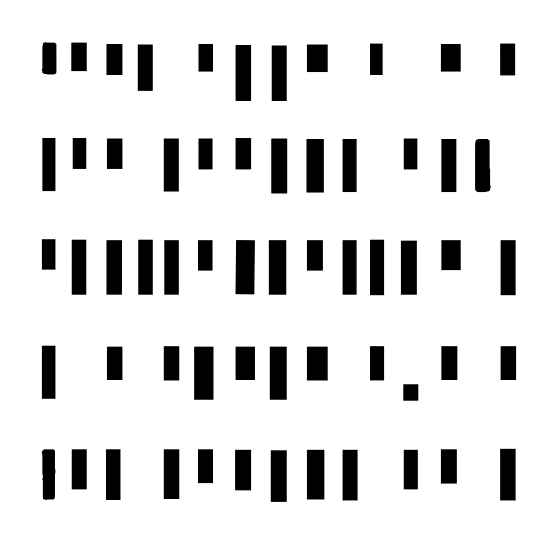

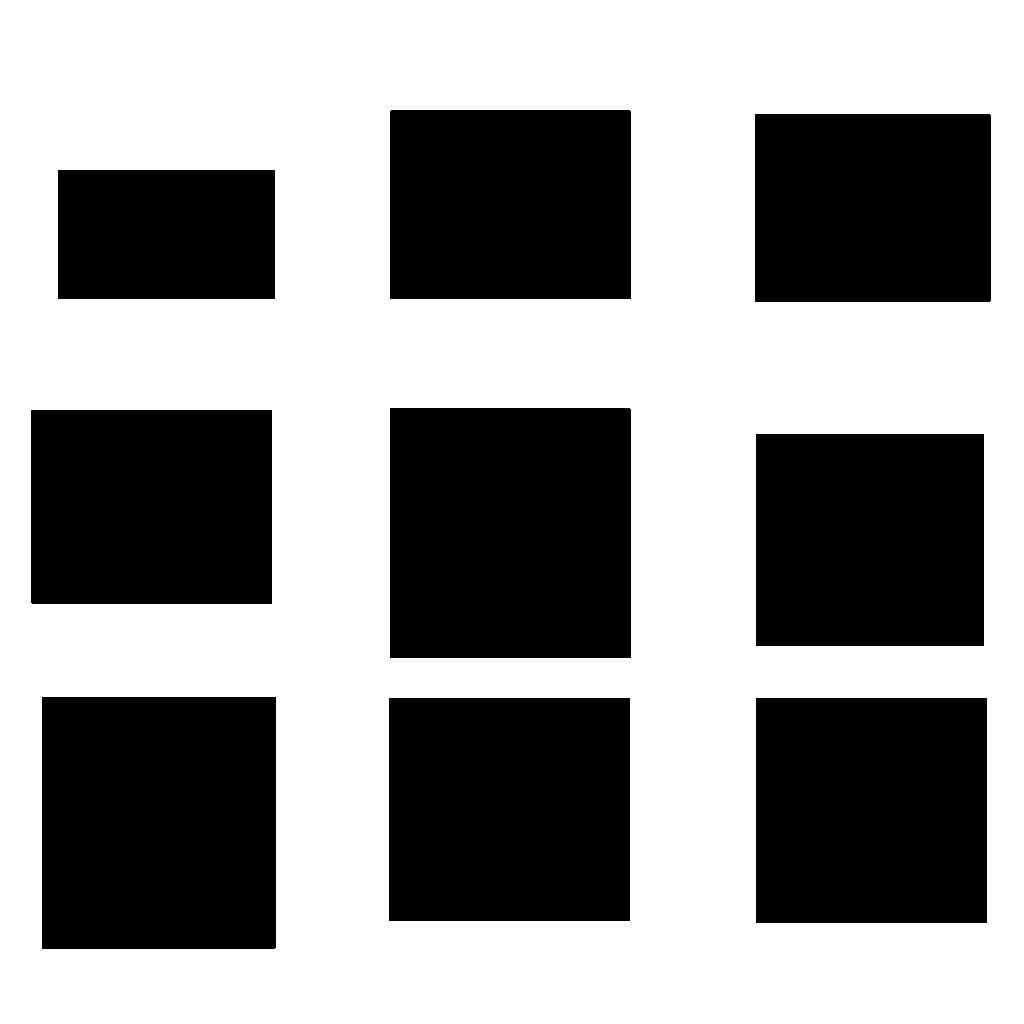

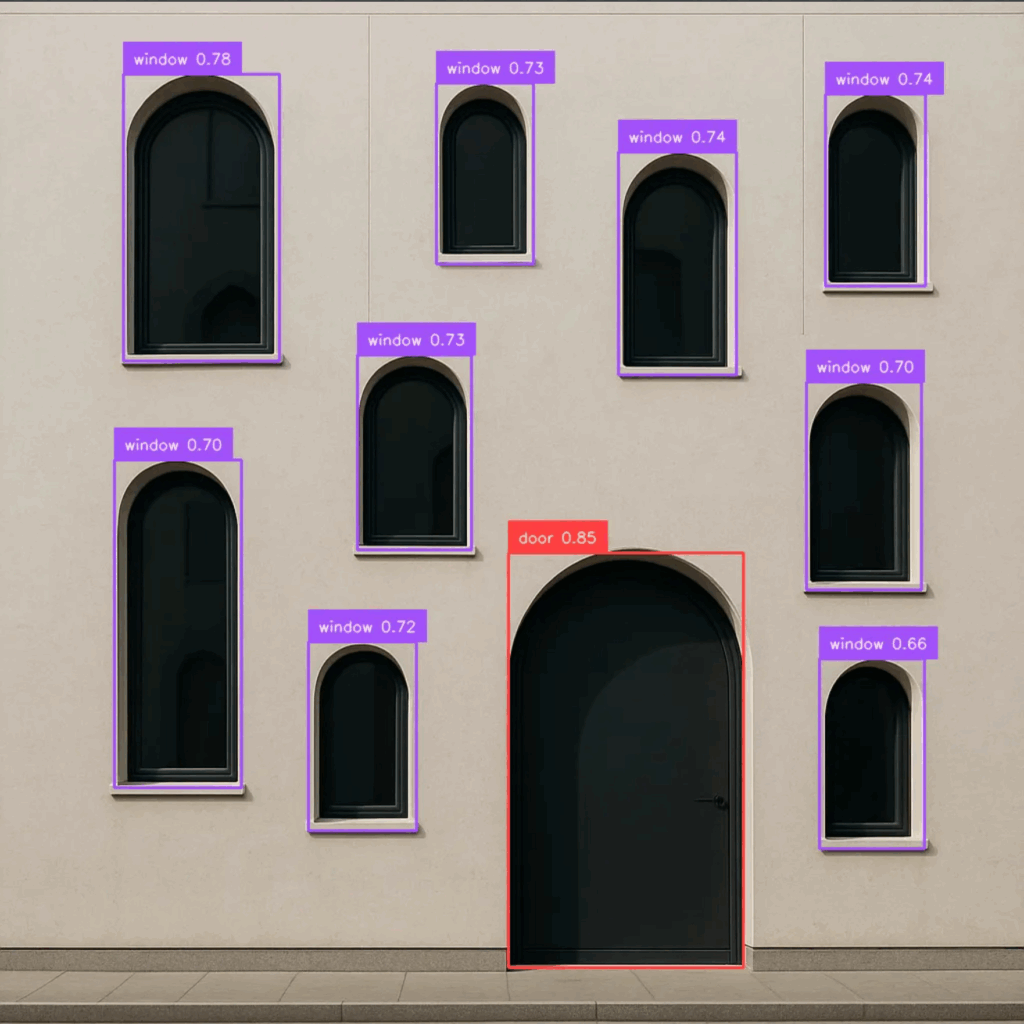

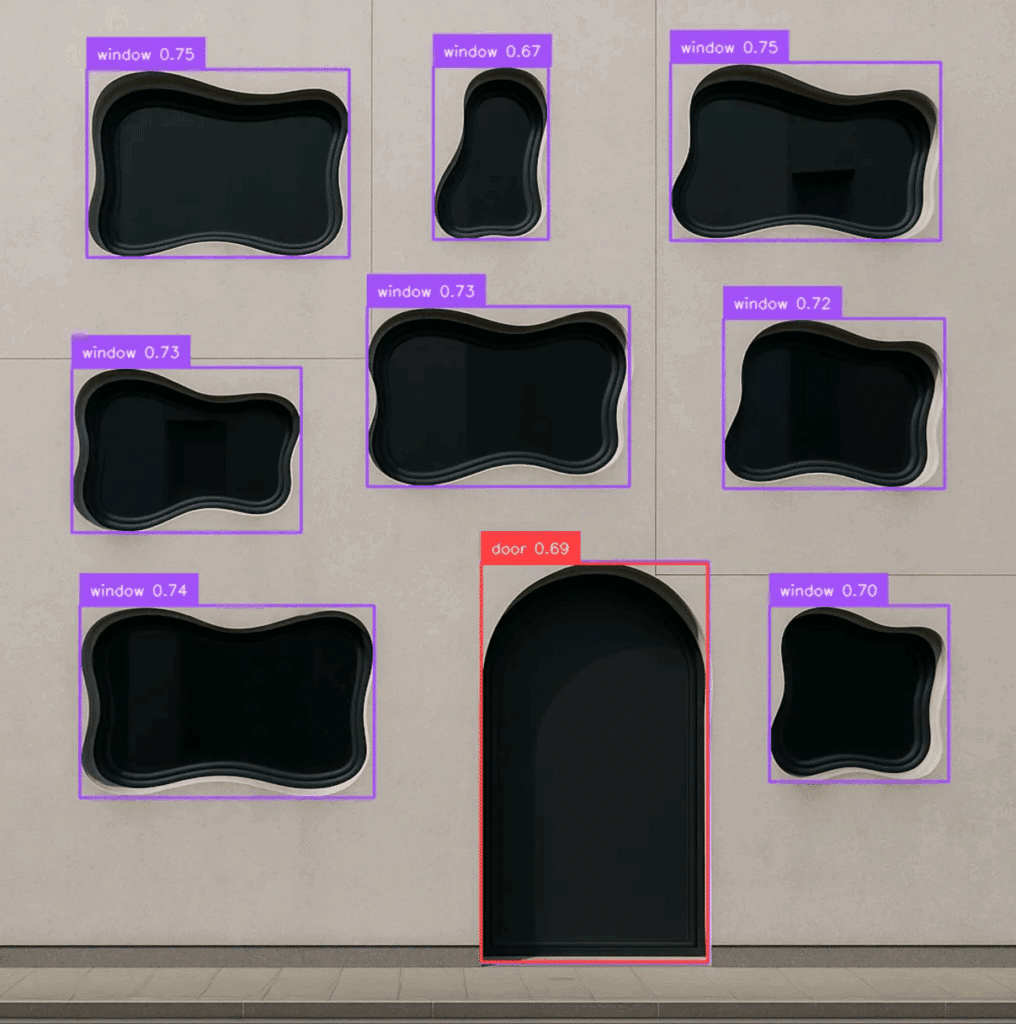

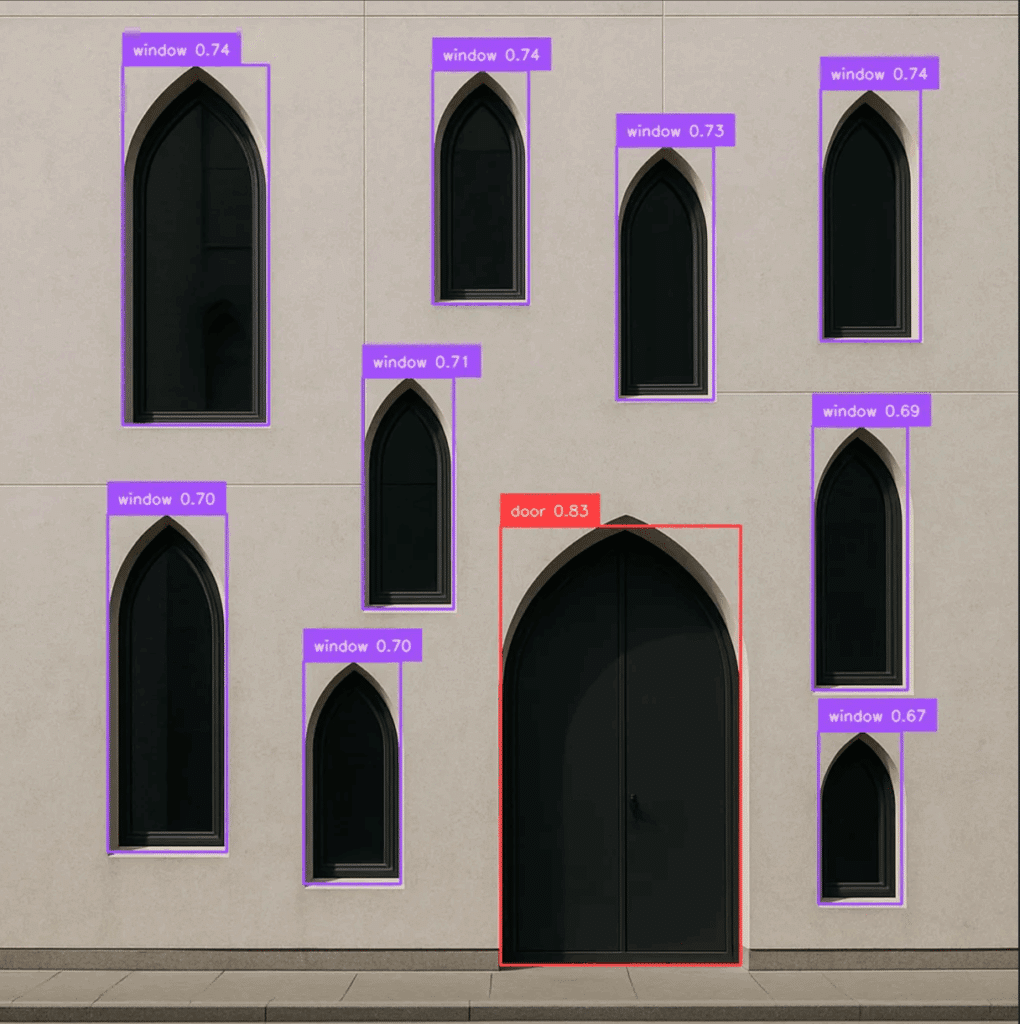

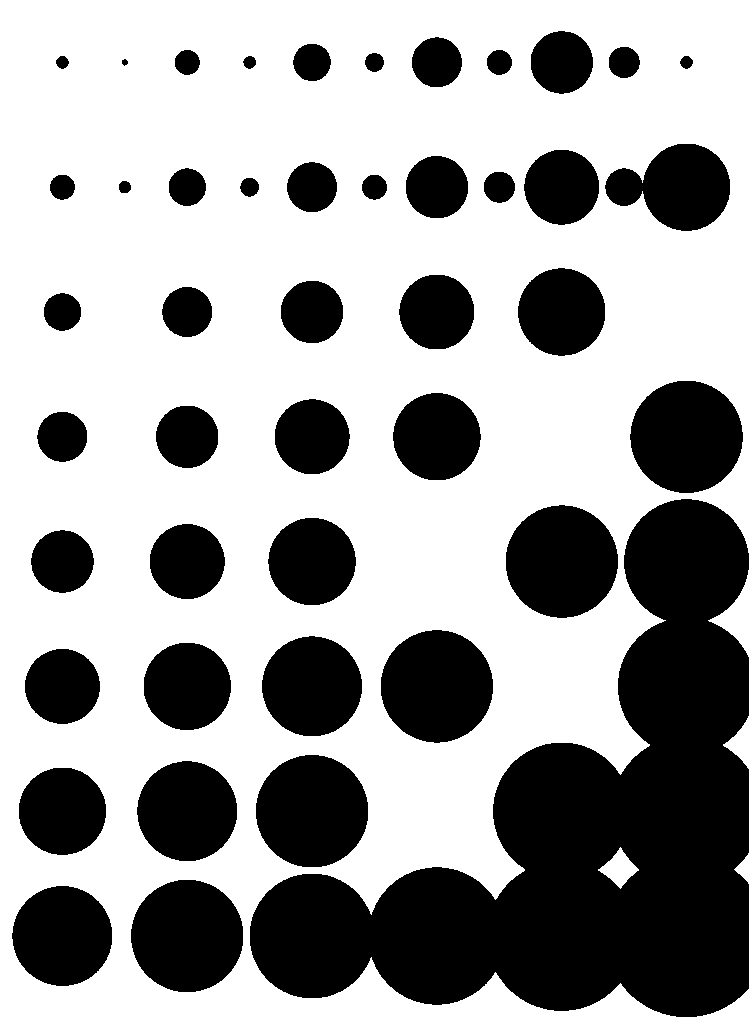

EXPERIMENTS WITH DIFFERENT WINDOW SHAPES

Input image

Detections

Cleared mask

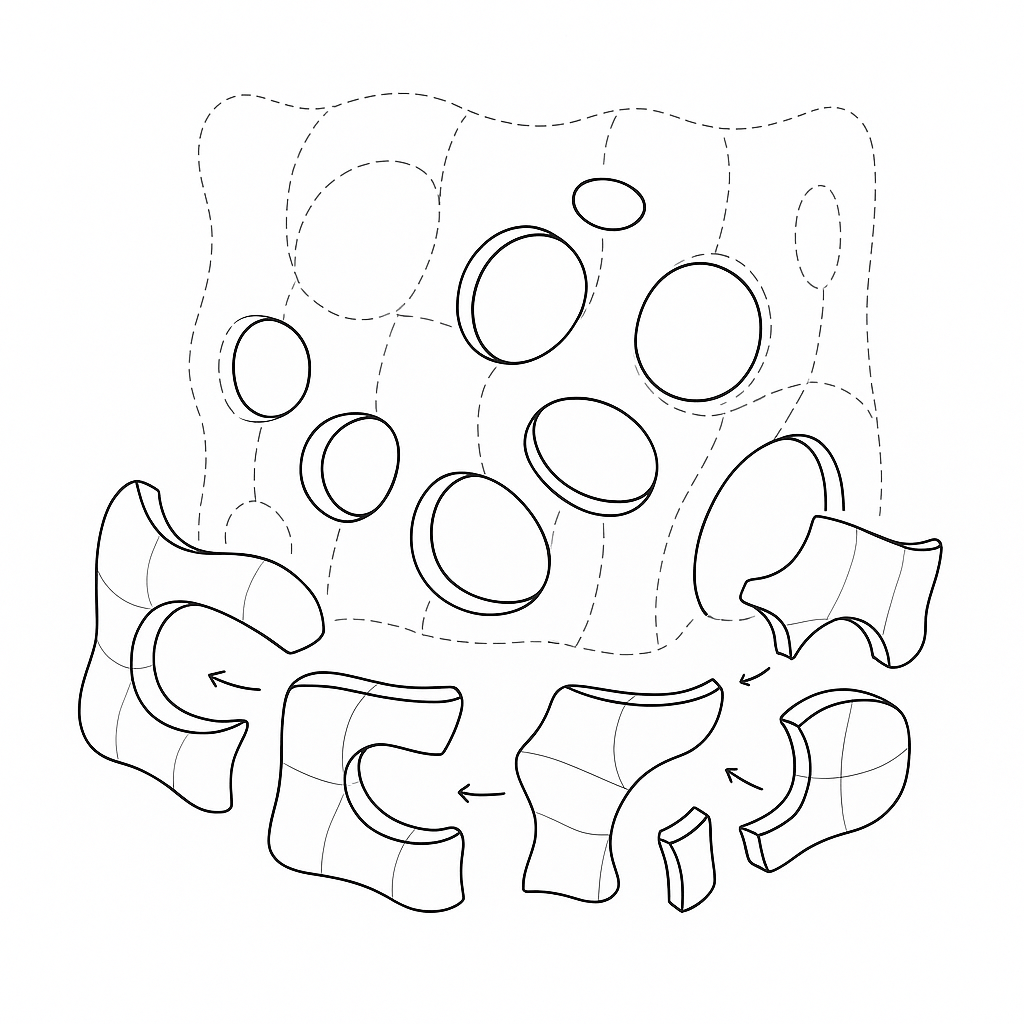

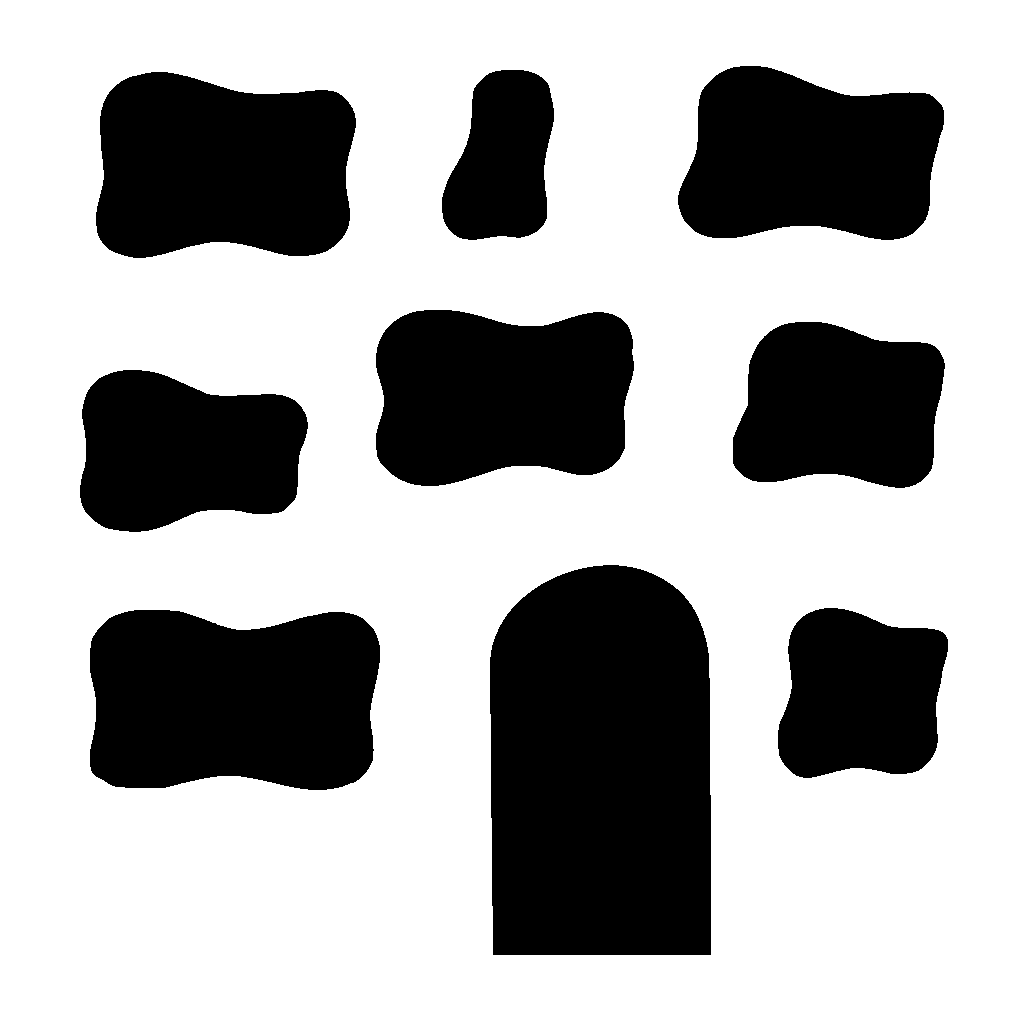

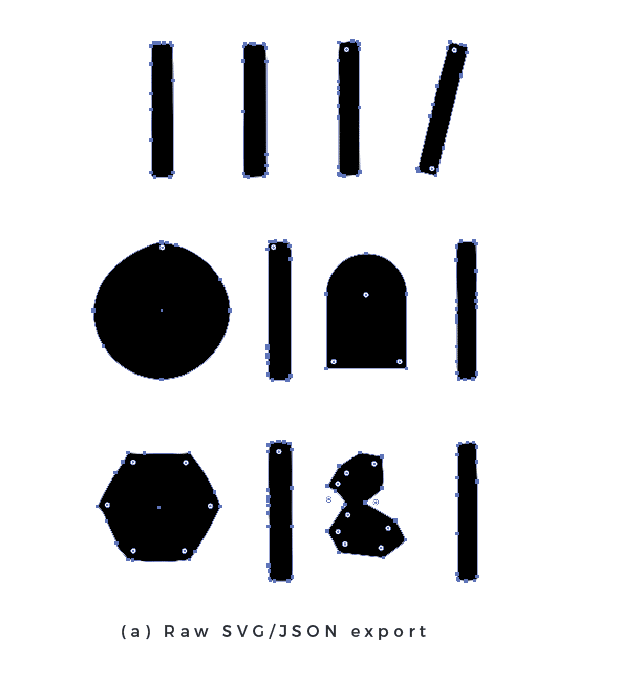

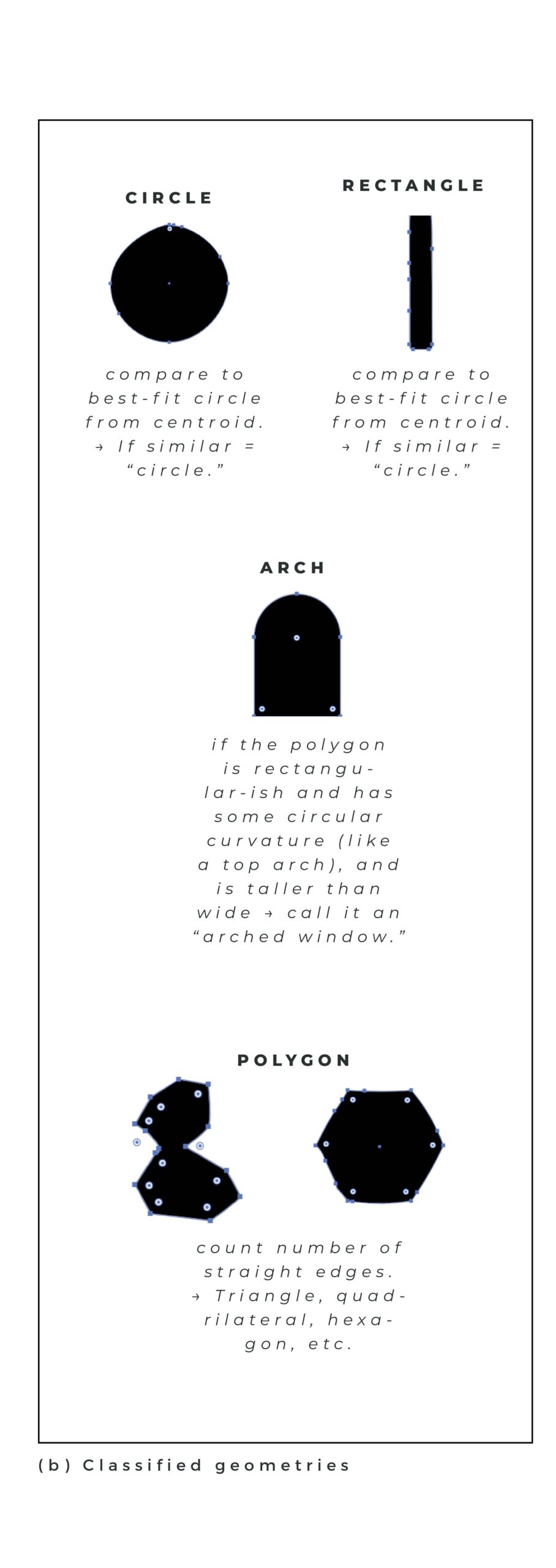

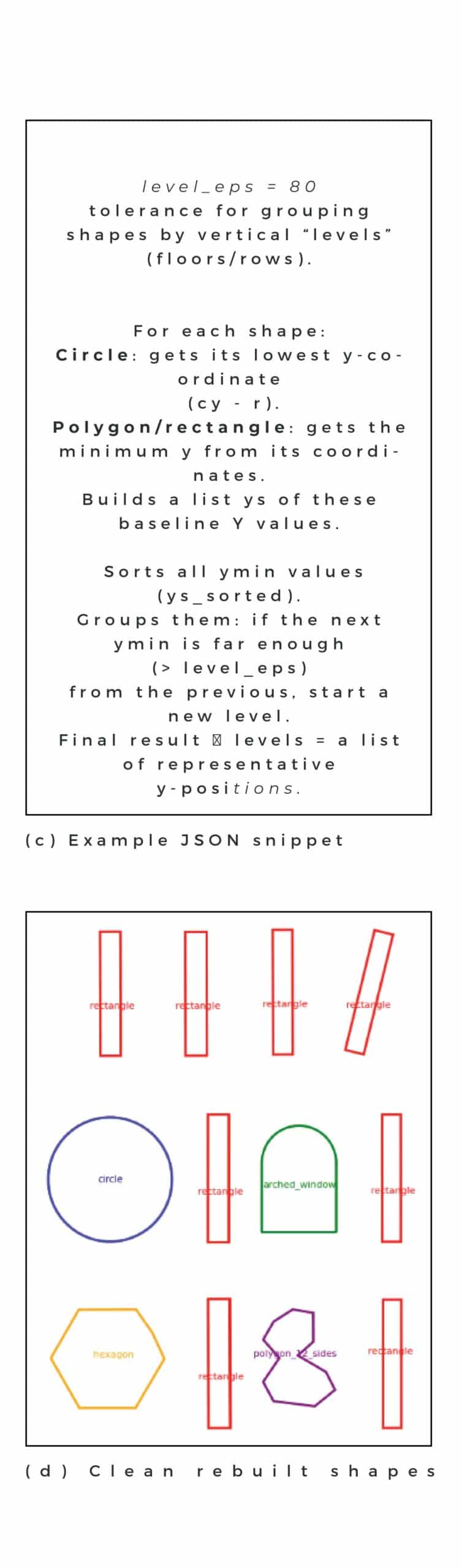

3.4 POSSIBLE OPTIMIZATION FOR COMPLEX SHAPES: CONVERTING SVG TO JSON

To avoid the long processing time of complex SVGs in Revit (around 5–15 min), we first converted the SVG to a JSON file and wrote a second Revit script that creates the façade from the JSON almost instantly.

At this stage, we focused on reformulating the segmentation outputs into structured geometric data. The masks generated by SAM were exported as SVG and converted into JSON by a dedicated conversion routine running in Google Colab. This routine translates pixel-based information into geometric primitives such as polylines and polygons, creating a bridge between image interpretation and design software.

The exported data, however, contained a number of artifacts, including fragmented lines, overlapping shapes, and misclassified regions. Processing involved identifying and classifying these outputs, distinguishing between usable closed polylines (e.g., window or door outlines), open polylines, and irregular shapes. While imperfect, this stage demonstrates the potential for segmentation data to serve as a foundation for parametric and BIM-based reconstruction.
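The conversion described above can be sketched with the Python standard library. This is a minimal illustration, not the Colab routine used in the study: it handles only `<rect>`, `<polygon>`, and `<polyline>` elements, and the output schema (an `openings` list of point arrays) is a hypothetical stand-in for the format the Revit script expects.

```python
import json
import xml.etree.ElementTree as ET

SVG_NS = "{http://www.w3.org/2000/svg}"


def svg_to_shapes(svg_text):
    """Collect <rect>, <polygon> and <polyline> elements as point lists."""
    root = ET.fromstring(svg_text)
    shapes = []
    for el in root.iter():
        tag = el.tag.replace(SVG_NS, "")
        if tag == "rect":
            x, y = float(el.get("x", 0)), float(el.get("y", 0))
            w, h = float(el.get("width")), float(el.get("height"))
            pts = [[x, y], [x + w, y], [x + w, y + h], [x, y + h]]
            shapes.append({"closed": True, "points": pts})
        elif tag in ("polygon", "polyline"):
            nums = [float(v) for v in el.get("points", "").replace(",", " ").split()]
            pts = [[nums[i], nums[i + 1]] for i in range(0, len(nums) - 1, 2)]
            # A polygon is closed by definition; a polyline only if it returns to its start.
            closed = tag == "polygon" or (len(pts) > 2 and pts[0] == pts[-1])
            shapes.append({"closed": closed, "points": pts})
    return shapes


svg = """<svg xmlns="http://www.w3.org/2000/svg" width="100" height="60">
  <rect x="10" y="10" width="20" height="30"/>
  <polygon points="50,10 70,10 60,30"/>
  <polyline points="80,10 90,20 95,40"/>
</svg>"""

shapes = svg_to_shapes(svg)
usable = [s for s in shapes if s["closed"]]  # open polylines are set aside
print(json.dumps({"openings": usable}, indent=2))
```

Separating closed from open outlines at this step mirrors the classification described in the text: only closed shapes can be cut as openings, while open fragments need repair or manual review.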

DEVELOPMENT

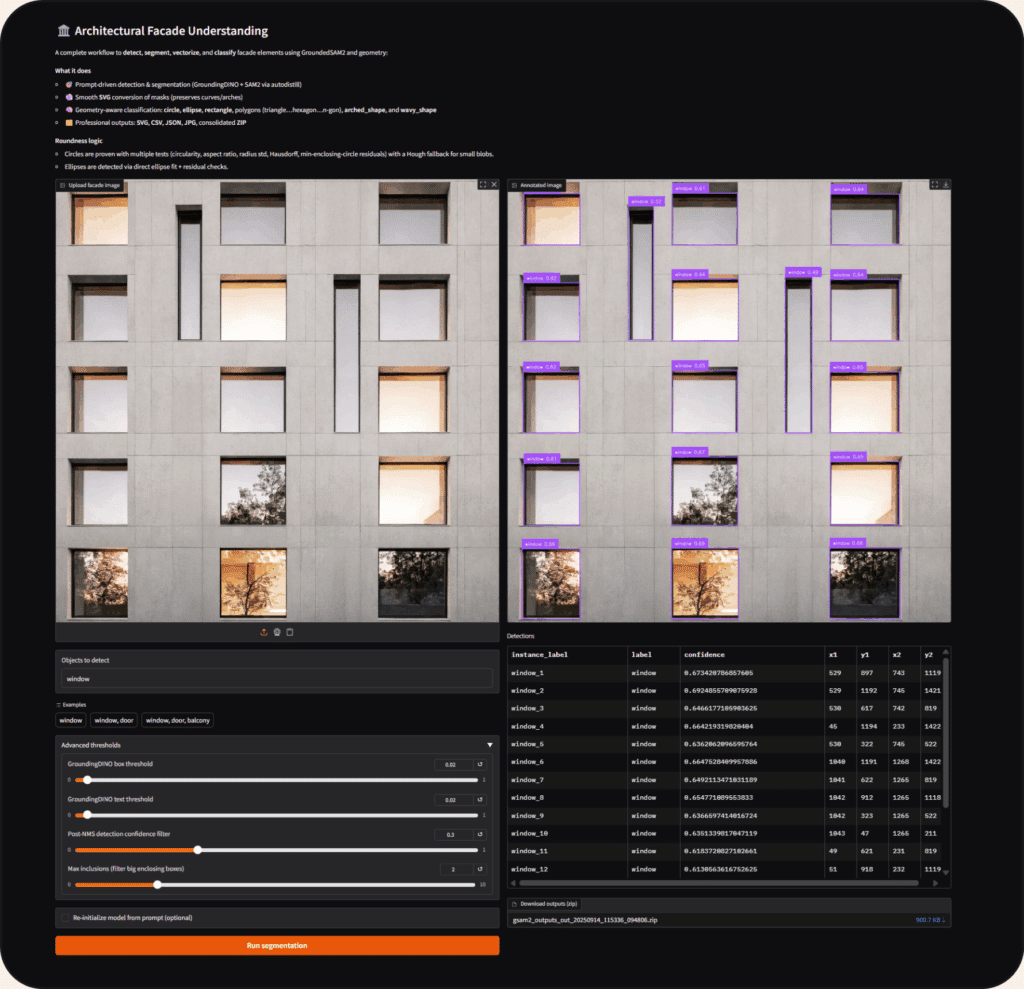

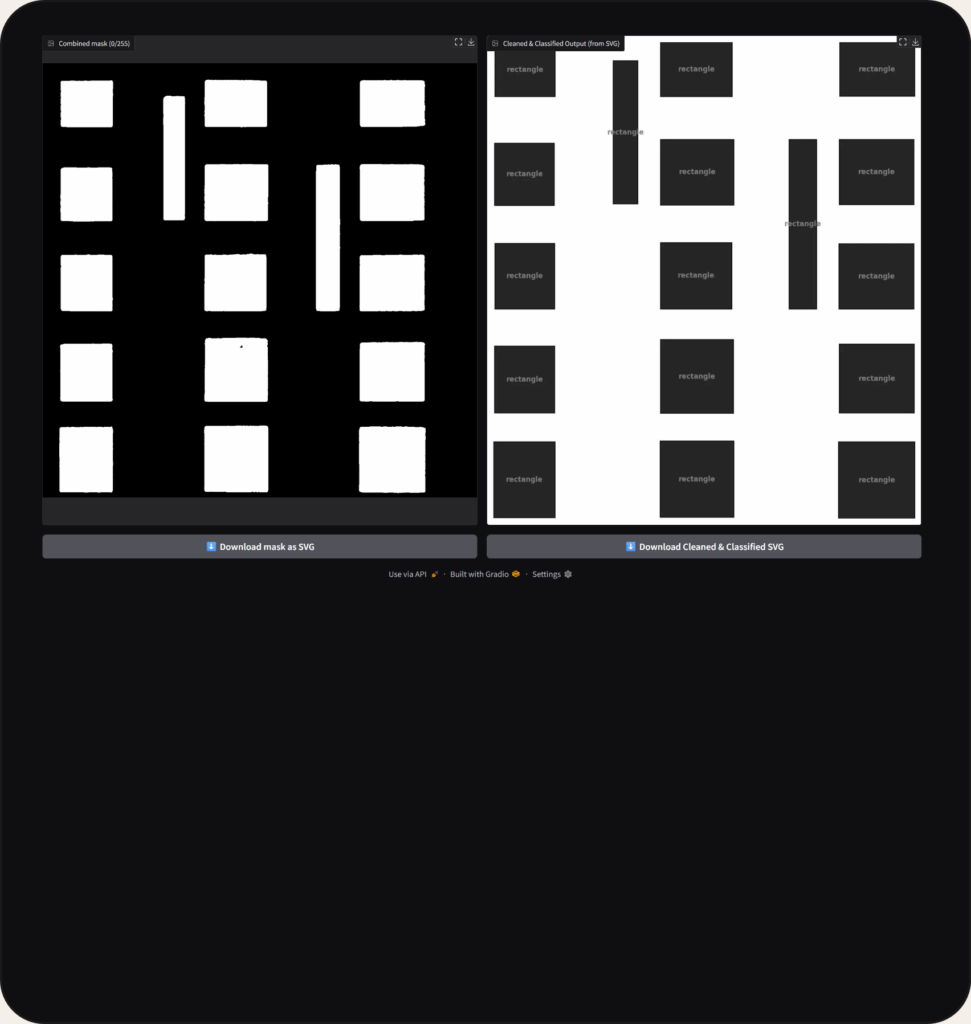

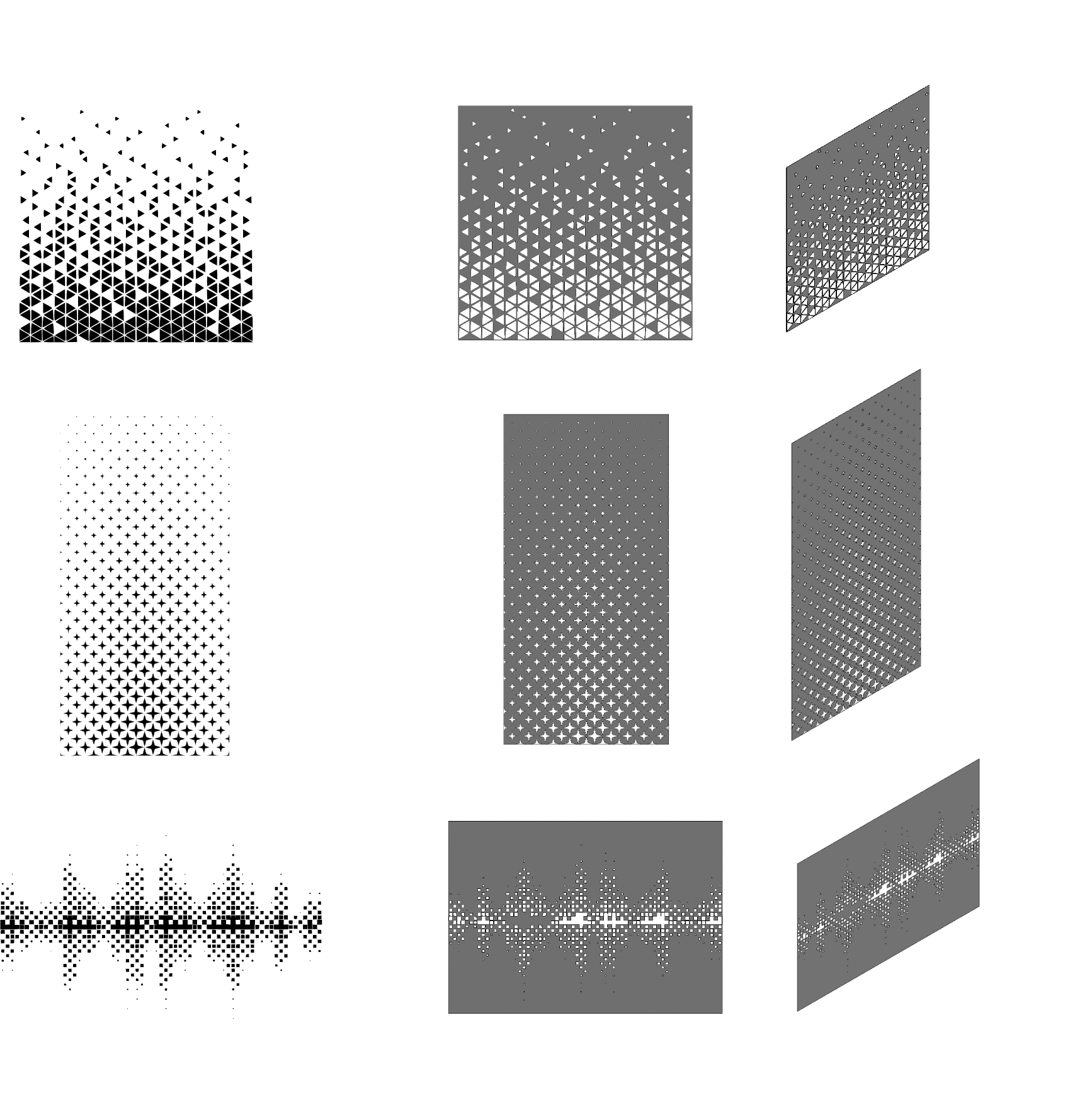

STAGE 1. GRADIO SAM APPLICATION

To simplify running segmentation, we built a Gradio app that takes an image as input and runs segmentation using GroundingDINO with the SAM2 model. After the ‘Run segmentation’ button is pressed, the segmentation results are presented for review, including an annotated image and a list of detected objects. Below, the raw mask is shown next to the processed version. Our geometry-aware classification identifies all shapes (rectangles, polygons, circles, ellipses, arcs, and curves) and converts the pixel-based mask into a clean vector graphic that can be downloaded as an SVG.
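A geometry-aware classification of this kind can be approximated with simple area ratios. The sketch below is an assumption about how such a classifier might work, not the algorithm implemented in the app. It compares the outline area with the area of its bounding box and covers only rectangles, circles, ellipses, and generic polygons; the tolerance `tol` and the minimum vertex count are hypothetical.

```python
import math


def classify_outline(points, tol=0.05):
    """Label a closed outline (list of (x, y)) as rectangle, circle, ellipse or polygon.

    Heuristic: compare the outline area with the area of its axis-aligned
    bounding box. A rectangle fills the box (ratio 1.0); an axis-aligned
    ellipse fills pi/4 of it (about 0.785).
    """
    n = len(points)
    # Shoelace formula for the polygon area.
    area = abs(sum(points[i][0] * points[(i + 1) % n][1]
                   - points[(i + 1) % n][0] * points[i][1] for i in range(n))) / 2
    xs, ys = [p[0] for p in points], [p[1] for p in points]
    w, h = max(xs) - min(xs), max(ys) - min(ys)
    if w == 0 or h == 0:
        return "polygon"
    fill = area / (w * h)
    if abs(fill - 1.0) < tol:
        return "rectangle"
    if n >= 12 and abs(fill - math.pi / 4) < tol:
        return "circle" if abs(w / h - 1.0) < tol else "ellipse"
    return "polygon"


circle = [(10 * math.cos(2 * math.pi * k / 64), 10 * math.sin(2 * math.pi * k / 64))
          for k in range(64)]
ellipse = [(2 * x, y) for x, y in circle]
rect = [(0, 0), (4, 0), (4, 2), (0, 2)]
triangle = [(0, 0), (4, 0), (2, 3)]

for name, outline in [("circle", circle), ("ellipse", ellipse),
                      ("rect", rect), ("triangle", triangle)]:
    print(name, "->", classify_outline(outline))
```

The heuristic only works for axis-aligned shapes. Rotated rectangles, arcs, and free-form curves would need additional tests, for example fitting a minimum-area rotated box first.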

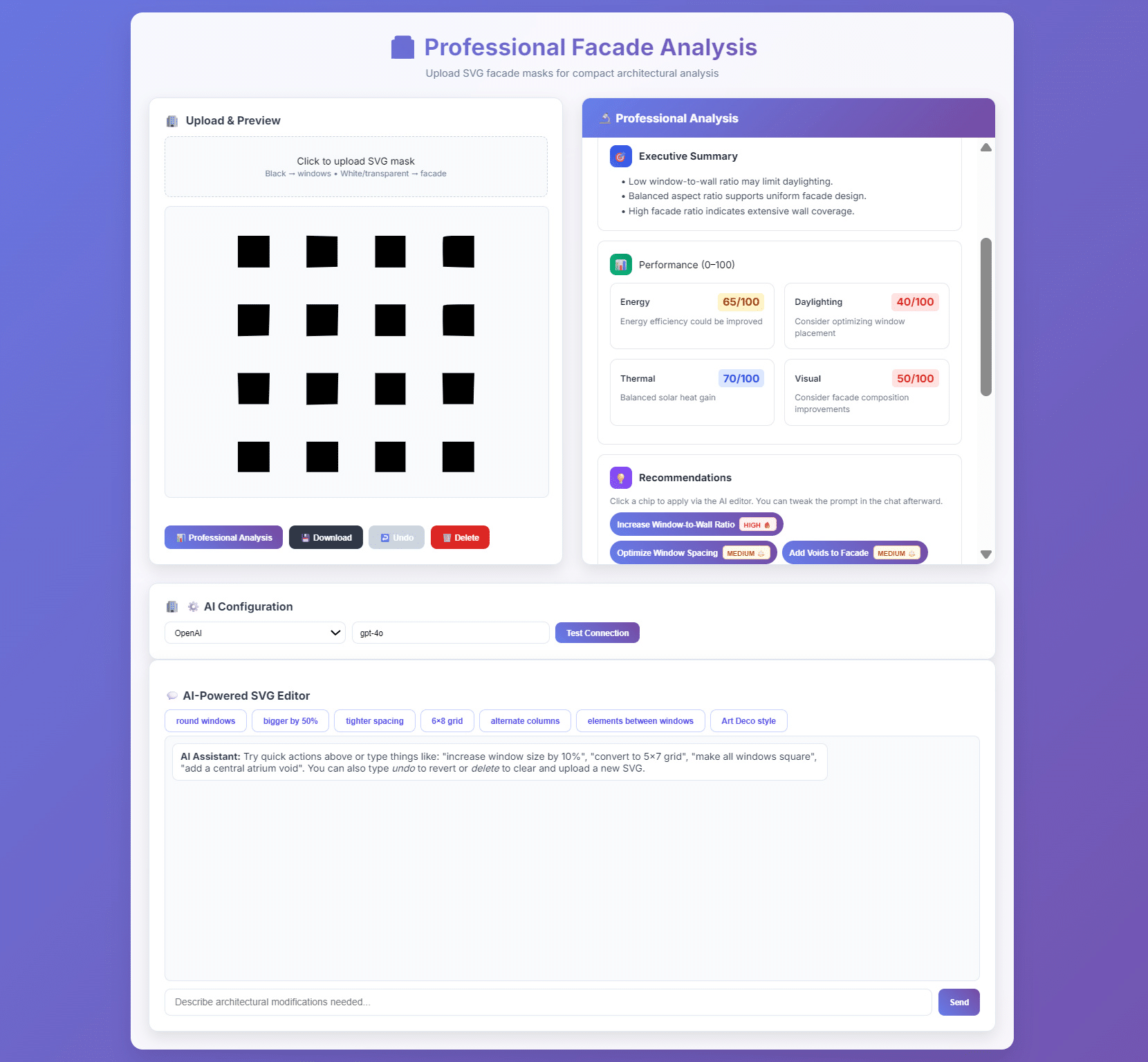

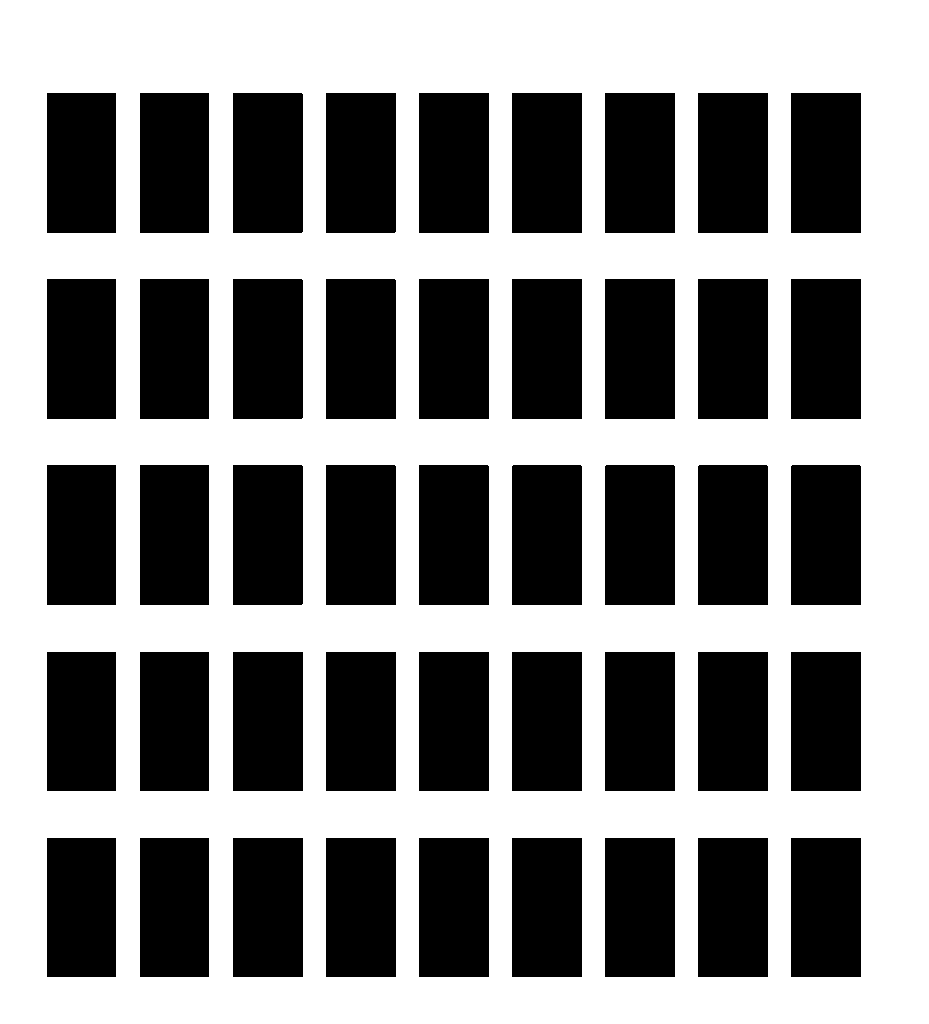

STAGE 2. LLM CHATBOT

Using our chatbot, you can iteratively enhance your façade design. After each change, run the GPT-powered Professional Analysis to quantify the impact on daylight, rhythm, and thermal performance, so you can see how each iteration changes the design through clear, measurable feedback. Below is an example of how the chatbot can significantly improve the design and its performance scores by implementing changes expressed semantically in the chat section.

Initial performance analysis for facade design based on window mask:

- Energy – 65/100

- Thermal – 70/100

- Daylighting – 40/100

- Visual – 50/100

Final performance analysis based on window mask:

- Energy – 65/100

- Thermal – 60/100 (10 points lower)

- Daylighting – 70/100 (30 points higher)

- Visual – 75/100 (25 points higher)

CHATBOT WORKFLOW EXAMPLES

Prompt: align windows into 6 columns and 8 rows

Prompt: add more visual rhythm

Prompt: increase window-to-wall ratio by 25%

Prompt: make it more energy-efficient: there might be more daylighting on upper floors

Prompt: align round windows into 6 columns and 8 rows

Prompt: create a diagonal gradient NW→SE where window area scales 0.2× to 1.2× smoothly

Prompt: randomly remove 10% of openings but never two adjacent

Prompt: add a perforation pattern to the upper facade panels
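As a rough sketch of the geometry behind a prompt such as "align windows into 6 columns and 8 rows", the code below lays out a regular grid of window rectangles and writes them as an SVG mask. It is an illustration only: in the application the edit is produced by the language model, and the function names, the `fill_ratio` parameter, and the façade dimensions here are assumptions.

```python
def grid_windows(facade_w, facade_h, cols, rows, fill_ratio=0.6):
    """Return (x, y, w, h) rectangles for a cols x rows grid of windows.

    Each window is centred in its grid cell and occupies fill_ratio of the
    cell in both directions.
    """
    cell_w, cell_h = facade_w / cols, facade_h / rows
    win_w, win_h = cell_w * fill_ratio, cell_h * fill_ratio
    rects = []
    for r in range(rows):
        for c in range(cols):
            x = c * cell_w + (cell_w - win_w) / 2
            y = r * cell_h + (cell_h - win_h) / 2
            rects.append((x, y, win_w, win_h))
    return rects


def to_svg(facade_w, facade_h, rects):
    # Assumed mask convention: black shapes are openings, white is wall.
    body = "".join(
        f'<rect x="{x:.1f}" y="{y:.1f}" width="{w:.1f}" height="{h:.1f}" fill="black"/>'
        for x, y, w, h in rects
    )
    return (f'<svg xmlns="http://www.w3.org/2000/svg" width="{facade_w}" height="{facade_h}">'
            f'<rect width="100%" height="100%" fill="white"/>{body}</svg>')


rects = grid_windows(600, 800, cols=6, rows=8)
svg = to_svg(600, 800, rects)
print(len(rects), "windows")
```

With this layout the window-to-wall ratio equals `fill_ratio` squared, so a request to change the ratio can be expressed as a change to a single parameter.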

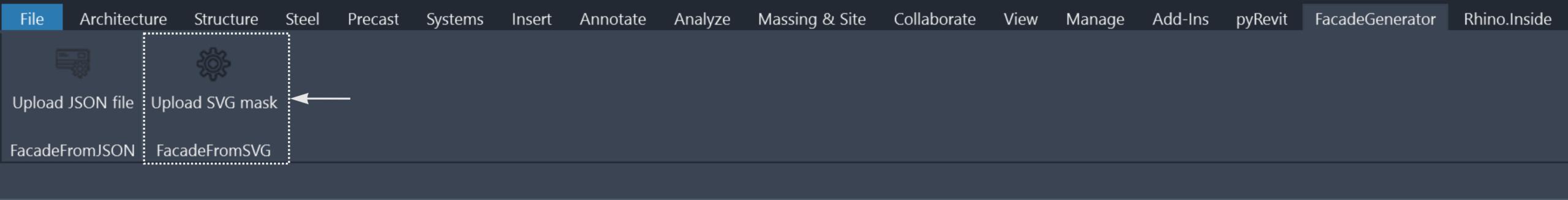

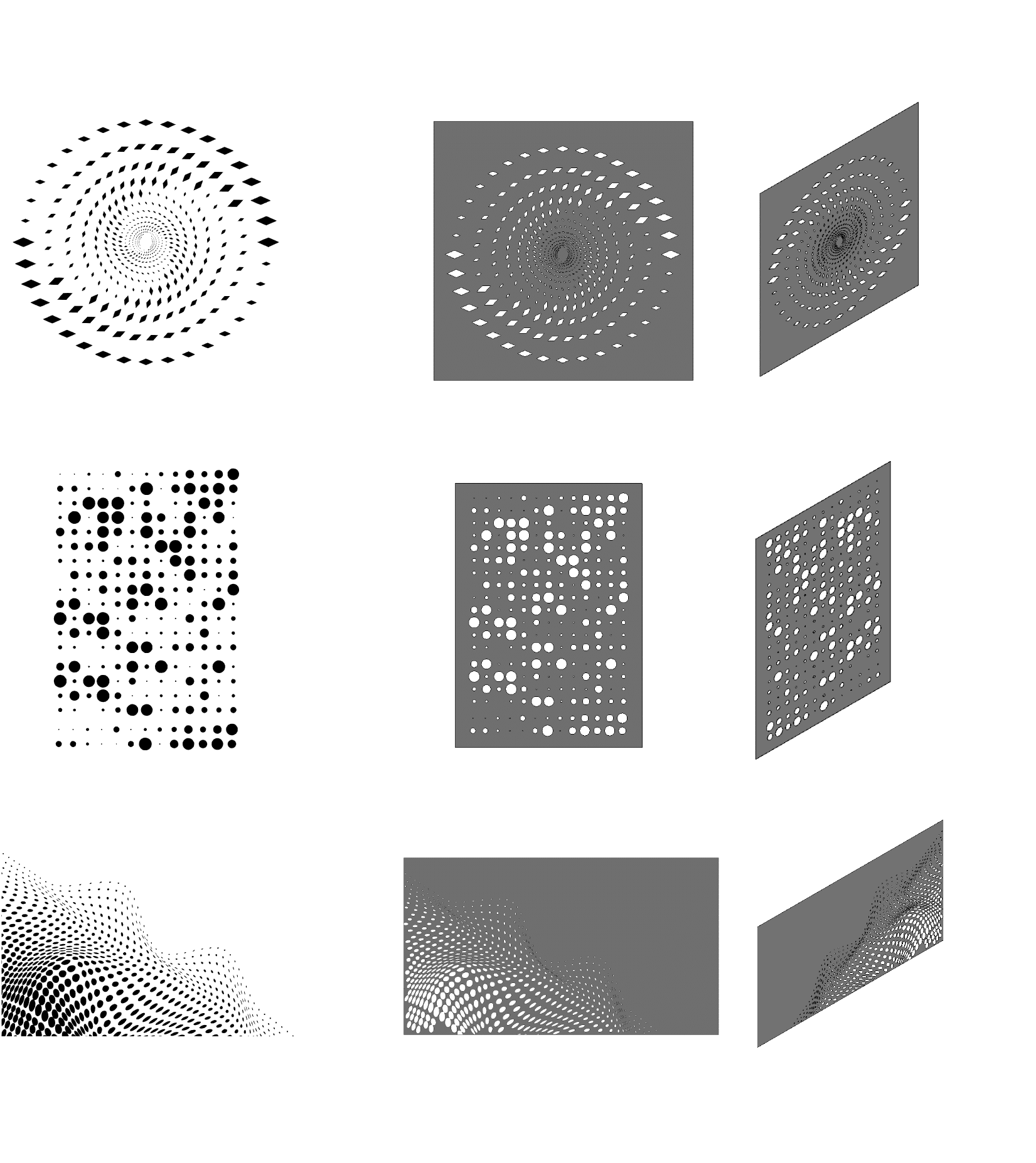

STAGE 3. REVIT CUSTOM SCRIPTS

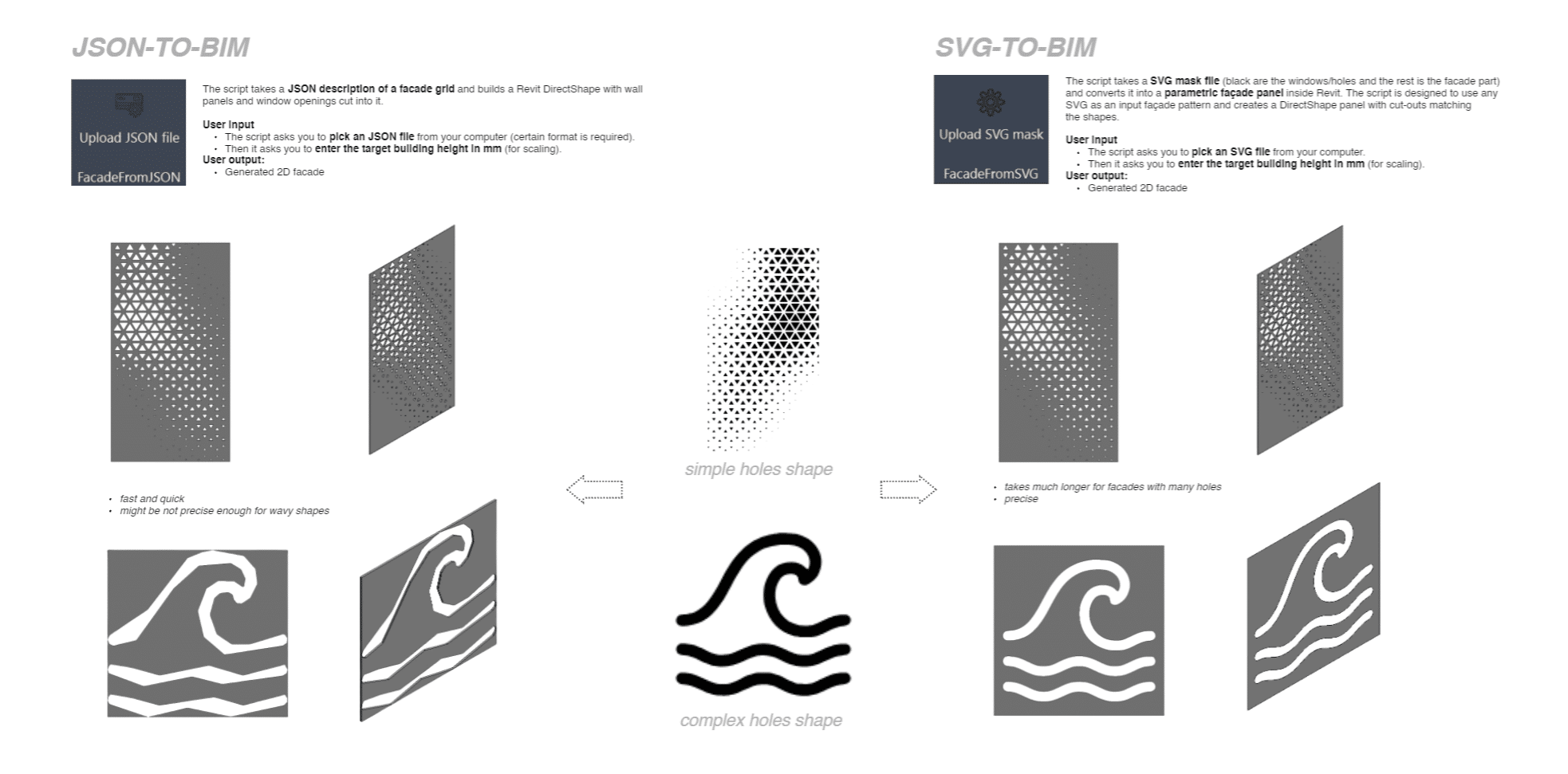

Initially, a custom Revit script was developed using pyRevit, titled FacadeFromSVG, which automates the creation of façade elements from SVG masks while assigning a fixed depth to each geometry. This tool seamlessly translates vector-based representations into parametric models, allowing for rapid prototyping and visualization within the Revit environment. Below are several examples demonstrating the capabilities of this script in efficiently generating complex façade designs from SVG inputs.

INPUT

OUTPUT

INPUT

OUTPUT

In cases where façade SVGs contained numerous openings, the creation process could become time-consuming, requiring approximately 5–15 minutes per example. In contrast, SVGs representing facades with fewer openings were generated almost instantaneously. To enhance workflow efficiency, we developed an auxiliary script, FacadeFromJSON, which reconstructs facades directly from a JSON input. The process begins by preprocessing the original SVG files using a dedicated Google Colab environment, converting them into JSON format suitable for input into the Revit-based FacadeFromJSON script. This combination of tools allowed for near-instantaneous reconstruction, resulting in substantial time savings across repeated experimental cycles.

While the FacadeFromSVG script accurately recreates façade shapes—including precise curves and complex outlines — the FacadeFromJSON approach simplifies all geometries into polygons. As a result, features such as curved or Bezier-shaped openings are approximated as straight-edged polygons. This trade-off enabled rapid iterations and testing, although it introduced minor losses in geometric fidelity for facades with curved details.
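The polygon approximation mentioned above can be illustrated by sampling a cubic Bézier curve at evenly spaced parameter values. This is a generic sketch of curve flattening, not the conversion code used for FacadeFromJSON; the `segments` parameter is an assumption that controls how closely the polygon follows the curve.

```python
def flatten_cubic_bezier(p0, p1, p2, p3, segments=8):
    """Approximate a cubic Bezier curve by segments + 1 points on the curve."""
    points = []
    for i in range(segments + 1):
        t = i / segments
        u = 1 - t
        # Bernstein form of the cubic Bezier polynomial.
        x = u**3 * p0[0] + 3 * u**2 * t * p1[0] + 3 * u * t**2 * p2[0] + t**3 * p3[0]
        y = u**3 * p0[1] + 3 * u**2 * t * p1[1] + 3 * u * t**2 * p2[1] + t**3 * p3[1]
        points.append((x, y))
    return points


# Arch-like curve from (0, 0) to (4, 0) with both control points at height 3.
coarse = flatten_cubic_bezier((0, 0), (0, 3), (4, 3), (4, 0), segments=4)
fine = flatten_cubic_bezier((0, 0), (0, 3), (4, 3), (4, 0), segments=32)
print(len(coarse), len(fine))
```

A low segment count keeps the JSON small and the Revit reconstruction fast, at the cost of visibly faceted arches; raising it recovers smoothness but increases the number of edges to build.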

STAGE 4. VISUALISATION

To evaluate the design and visual qualities of façades during earlier stages of SVG modification, we utilized the SVG masks as inputs for edge detection (Canny) or depth maps to drive visualization workflows. These inputs were fed into Stable Diffusion XL (SDXL) and Flux models running in Google Colab and ComfyUI environments, enabling the generation of high-quality and context-aware façade visualizations. This approach allowed us to rapidly preview architectural variations and assess compositional changes in near real-time. Below are representative results demonstrating the effectiveness of combining SVG-derived data with generative diffusion models for façade visualization.

FULL WORKFLOW EXAMPLE

This example traces the progression of a single AI-generated façade through all stages of the workflow. Beginning with segmentation in SAM, the façade is progressively modified using LLM-driven edits, verified through SVG representations, and reconstructed as a parametric model in Revit.

Workflow scheme:

- Input image

- SAM window/door/balcony detection

- Mask extraction with extracted shapes:

  - rectangle

  - arch

  - circle

  - ellipse

  - n-polygon

  - curved shape

- LLM loop

  - create SVG according to the prompt until confirmed

  - create visualisation:

    - if confirmed – move forward

    - if not confirmed – step back

- BIM model creation
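The confirm-or-step-back logic of the LLM loop can be sketched as a small control loop. This is a simplified illustration under stated assumptions: `apply_edit` stands in for the language-model call and `confirm` for the user's review of the SVG and its visualisation, and both are hypothetical callables rather than parts of the actual application.

```python
def edit_loop(svg, prompts, apply_edit, confirm, max_rounds=10):
    """Apply prompt-driven edits, keeping only the confirmed ones.

    apply_edit(svg, prompt) -> new svg   (stand-in for the LLM call)
    confirm(svg) -> bool                 (stand-in for the user's review)
    """
    history = [svg]
    for prompt in prompts[:max_rounds]:
        candidate = apply_edit(history[-1], prompt)
        if confirm(candidate):
            history.append(candidate)  # confirmed: move forward
        # not confirmed: step back, i.e. keep working from history[-1]
    return history[-1], history


# Toy stand-ins: the "edit" appends a comment, the "user" rejects perforations.
final, history = edit_loop(
    "<svg/>",
    ["align windows", "add perforation", "add rhythm"],
    apply_edit=lambda svg, p: svg + f"<!-- {p} -->",
    confirm=lambda svg: "perforation" not in svg,
)
print(final)
```

Keeping the full history of confirmed states is what makes an undo step cheap: the version passed on to BIM model creation is simply the last confirmed entry.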

DEMO

STAGE 1 – GRADIO DEMO

Given the substantial compute demands of the SAM application leveraging GroundingDINO and SAM2, we migrated execution to Google Colab backed by an A100 GPU.

In the app, the user uploads the façade image, sets the thresholds that control the segmentation process, and chooses which objects to detect: windows only; windows and doors; or windows, doors, and balconies.
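The effect of these controls can be pictured as a filter over the detector's raw output. The sketch below assumes a hypothetical detection record with `label`, `score`, and `box` fields and a single confidence threshold; the real app may expose different thresholds and data structures.

```python
def filter_detections(detections, wanted_labels, box_threshold=0.35):
    """Keep detections whose label is requested and whose score passes the threshold.

    detections: list of dicts {"label": str, "score": float, "box": (x0, y0, x1, y1)}
    """
    return [
        d for d in detections
        if d["label"] in wanted_labels and d["score"] >= box_threshold
    ]


detections = [
    {"label": "window", "score": 0.82, "box": (10, 10, 40, 60)},
    {"label": "window", "score": 0.21, "box": (50, 10, 80, 60)},   # too uncertain
    {"label": "door", "score": 0.77, "box": (30, 70, 50, 100)},
    {"label": "balcony", "score": 0.64, "box": (10, 5, 90, 15)},   # not requested
]
kept = filter_detections(detections, {"window", "door"}, box_threshold=0.35)
print([d["label"] for d in kept])
```

Lowering the threshold recovers faint openings but admits more false positives such as shadows, which is why the annotated preview is useful before the mask is exported.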

After the segmentation runs, the app displays the annotated image together with the list of detected objects, so the user can check that everything was detected and labelled correctly. Below it, the raw mask output is shown alongside the processed, classified mask. A geometry-aware classification algorithm is applied to this mask; it accurately identifies all shapes, including rectangles, polygons, circles, ellipses, arcs, and curves, and converts them into clean vector geometries. The final, cleared mask is available for download as an SVG file.

STAGE 2 – CHATBOT DEMO

The chatbot application features two main sections: the Professional Analysis panel (positioned on the right) and the chatbot interface (located below). The Professional Analysis panel provides an in-depth assessment of the uploaded SVG façade mask, calculating metrics such as aspect ratio, window-to-wall ratio, and overall façade proportions. Below this, performance scores are displayed for parameters including daylighting, thermal comfort, energy efficiency, and visual quality. The panel also generates tailored recommendations for façade improvements, offering actionable prompts—such as enhancing visual appeal or increasing daylight penetration—with detailed guidance.
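Two of the geometric metrics named above can be computed directly from the rectangles in a mask. The sketch below is a simplified assumption about such a calculation, not the panel's implementation: windows are treated as non-overlapping axis-aligned rectangles, and the window-to-wall ratio is taken as total window area divided by gross façade area. The second function shows one way to raise that ratio by a relative factor, as a prompt like "increase window-to-wall ratio by 25%" might require.

```python
def facade_metrics(facade_w, facade_h, windows):
    """windows: list of (x, y, w, h) rectangles in the same units as the facade."""
    window_area = sum(w * h for _, _, w, h in windows)
    return {
        "aspect_ratio": facade_w / facade_h,
        "window_to_wall_ratio": window_area / (facade_w * facade_h),
    }


def scale_windows(windows, area_factor):
    """Scale every window about its centre so its area changes by area_factor."""
    s = area_factor ** 0.5  # side lengths scale with the square root of the area
    return [(x + w * (1 - s) / 2, y + h * (1 - s) / 2, w * s, h * s)
            for x, y, w, h in windows]


windows = [(1, 1, 2, 2), (5, 1, 2, 2)]
before = facade_metrics(10, 5, windows)
after = facade_metrics(10, 5, scale_windows(windows, 1.25))
print(before, after)
```

Here the ratio rises from 0.16 to 0.20, a 25% relative increase. Whether "by 25%" means a relative change or 25 percentage points is ambiguous in natural language, so a chatbot would need to settle on one interpretation.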

In the chatbot section, users can interactively test their current model connection or switch between options like GPT-4, GPT-5, and others. Sample prompts are available, such as “make all windows round,” enabling rapid experimentation with façade designs. Once a text prompt is submitted, the SVG façade is modified in real time, with processing speed depending on the selected AI model. If the outcome is unsatisfactory, users can easily undo changes and retry using a different model, ensuring adaptable and iterative design revisions. Once the SVG modification process is complete, the finalized file is available for download, streamlining workflow and facilitating seamless integration with other design tools.

STAGE 3 – REVIT CUSTOM SCRIPT DEMO

The FacadeFromSVG script takes an SVG mask file (black shapes are the windows or holes; the rest is the façade surface) and converts it into a parametric façade panel inside Revit. The script is designed to accept any SVG mask as an input façade pattern and creates a BIM panel with cut-outs matching the shapes.

User input:

- The script asks you to pick an SVG file from your computer.

- Then it asks you to enter the target building height in mm (for scaling).

User output:

- Generated 2D facade with fixed depth.
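The scaling step implied by the target building height can be sketched as follows. This is an assumption about the mapping, not the script's actual code: the SVG is scaled uniformly so that its height matches the target height in millimetres, and the vertical axis is flipped because SVG coordinates grow downward. Since the Revit API stores lengths internally in feet, a further unit conversion is included.

```python
MM_PER_FOOT = 304.8


def svg_to_model_coords(points, svg_height, target_height_mm):
    """Map SVG points (y down, arbitrary units) to model points in mm (z up)."""
    scale = target_height_mm / svg_height
    # Flip the vertical axis: SVG y grows downward, building height grows upward.
    return [(x * scale, (svg_height - y) * scale) for x, y in points]


def mm_to_feet(value_mm):
    # Revit's internal length unit is the foot.
    return value_mm / MM_PER_FOOT


# An 800-unit-tall SVG scaled to a 24 m high facade.
pts = svg_to_model_coords([(100, 800), (100, 0)], svg_height=800, target_height_mm=24000)
print(pts, [mm_to_feet(z) for _, z in pts])
```

Using a single scale factor for both axes preserves the proportions of the openings; the façade width then follows from the SVG aspect ratio rather than being entered separately.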

Some complex SVGs with many holes took a long time to produce a BIM model, around 5–15 min. To optimize the process, we wrote a second script, FacadeFromJSON. The SVG is first converted to a JSON file in a specific format and then passed to this script, which creates the model almost instantly.

The FacadeFromJSON script takes a JSON description of a façade grid and builds a Revit shape with wall panels and window openings cut into it.

User input:

- The script asks you to pick a JSON file from your computer (a specific format is required).

- Then it asks you to enter the target building height in mm (for scaling).

User output:

- Generated 2D facade with fixed depth.

CONCLUSION

| Research questions | Control | Interpretation | Usability |
| --- | --- | --- | --- |
| Outcome | Structured prompts improved façade legibility | SAM detected grids and major elements | Prototype editing in Vue and BIM in Revit |
| Challenges | Open-ended prompts reduced clarity | Misclassification of textures and shadows | Limited automation, no semantic classification |

Synthesis of the research outcomes, mapping the four research questions (Control, Interpretation, Translation, and Usability) to the corresponding stages of the pipeline.

In this research, we explored integrating generative AI imagery with computational design through a pipeline of prompt control, segmentation, translation, and usability testing. We found that structured, rule-based prompts assembled in Python produce more coherent architectural façades, while general-purpose segmentation models like Segment Anything can identify key elements but struggle with the precision and clarity of their outputs. Vector translation of segmentation data enables geometry reconstruction, though it often requires manual cleanup. Finally, prototypes in Vue and Revit show that AI-generated façades can be transformed into editable components, highlighting both the potential and the current limitations of using AI in spatially aware architectural design workflows.

Despite some limitations and misclassification errors, we believe the pipeline can become useful and widely adopted if resources are found for its further development.

BIBLIOGRAPHY

1. C. Li, T. Zhang, X. Du, Y. Zhang, H. Xie (2024). Generative AI Models for Different Steps in Architectural Design: A Literature Review https://arxiv.org/abs/2404.01335

2. M. Chen, S. Mei, J. Fan, M. Wang (2024). Opportunities and challenges of diffusion models for generative AI https://academic.oup.com/nsr/article/11/12/nwae348/7810289

3. D. Liu, Z. Wang, A. Liang (2025). MiM-UNet: Building Image Segmentation. https://www.sciencedirect.com/science/article/pii/S1110016825002029

4. A. Chou, W. Li, E. Roman (2022). GI Tract Image Segmentation with U-Net and Mask R-CNN. Stanford University https://cs231n.stanford.edu/reports/2022/pdfs/164.pdf

5. R. Draelos (2020). Segmentation: U-Net, Mask R-CNN, and Medical Applications https://glassboxmedicine.com/2020/01/21/segmentation-u-net-mask-r-cnn-and-medical-applications/

6. A. Kirillov, E. Mintun, N. Ravi, H. Mao, C. Rolland, et al. (2023). Segment Anything. Meta AI Research, FAIR https://arxiv.org/pdf/2304.02643

7. I. Picon-Cabrera, P. Rodríguez-Gonzálvez, I. Toschi, F. Remondino, D. González-Aguilera (2021). Building Reconstruction and Urban Analysis of Historic Centres with Airborne Photogrammetry https://doi.org/10.3989/ic.79082

8. S. Minaee, Y. Boykov, F. Porikli, A. Plaza, N. Kehtarnavaz, D. Terzopoulos (2020). Image Segmentation Using Deep Learning: A Survey https://arxiv.org/abs/2001.05566

9. V7lab Blog (2023) https://www.v7labs.com/blog/segment-anything-model-sam

10. DeepDataSpace (2023). Grounding DINO https://deepdataspace.com/en/blog/2/

11. Encord Blog (2025). Grounding DINO + Segment Anything Model (SAM) vs Mask-RCNN: A comparison https://encord.com/blog/grounding-dino-sam-vs-mask-rcnn-comparison/

12. Labellerr Blog (2025). Enhanced Zero-shot Labels using DINO & Grounded Pre-training for Open-Set Object Annotations https://www.labellerr.com/blog/grounded-dino-combining-dino-with-grounded-pre-training-for-open-set-object-annotations/

13. Roboflow (2024). Zero-Shot Image Annotation with Grounding DINO and SAM https://blog.roboflow.com/enhance-image-annotation-with-grounding-dino-and-sam/

14. S. Mukherjee, J. Lang, O. Kwon, I. Zenyuk, V. Brogden, A. Weber, D. Ushizima (2025). Foundation Models for Zero-Shot Segmentation of Scientific Images without AI-Ready Data https://arxiv.org/html/2506.24039

15. F. Mumuni, A. Mumuni (2024). Segment Anything Model for automated image data annotation: empirical studies using text prompts from Grounding DINO https://arxiv.org/abs/2406.19057

16. N. Tarkhan, M. Klimenka, K. Fang, F. Duarte, C. Ratti, C. Reinhart (2025). Mapping facade materials utilizing zero-shot segmentation https://www.nature.com/articles/s41598-025-86307-1

17. Á. Semjén, J. Szép (2025). Integrating generative and parametric design with BIM: A literature review of challenges and research gaps in construction design https://www.sciencedirect.com/science/article/pii/S2666496825000512

18. F. Qiu, X. Chen, L. Ma (2025). The G.A.R.D.E.N framework for parametric design: a literature review https://doi.org/10.1080/17452007.2025.2504030

19. N. Xiao (2025). Research on parametric design method of landscape architecture based on ARCGIS https://www.spiedigitallibrary.org/conference-proceedings-of-spie/13642/136421Y/Research-on-parametric-design-method-of-landscape-architecture-based-on/10.1117/12.3066723.full

20. J. Leite Alves, R. Perez Palha, A. Teixeira de Almeida Filho (2025). Towards an integrative framework for BIM and artificial intelligence capabilities in smart architecture, engineering, construction, and operations projects https://www.sciencedirect.com/science/article/abs/pii/S0926580525002080

21. J. Ko, J. Ajibefuna, W. Yan (2023). Experiments on Generative AI-Powered Parametric Architectural Design Frameworks https://arxiv.org/pdf/2308.00227.pdf

22. Y. Ye, J. Hao, Y. Hou, Z. Wang, S. Xiao, Y. Luo, W. Zeng (2024). Generative AI for Visualization: State of the Art and Future Directions. Information Visualization https://arxiv.org/abs/2404.18144

23. M. Dai, W. Ward, G. Meyers, D. Densley Tingley, M. Mayfield (2021). Residential building facade segmentation in the urban environment https://www.sciencedirect.com/science/article/abs/pii/S0360132321003243

24. P. Li, J. Yin, J. Zhong, R. Luo, P. Zeng, M. Zhang (2025). Segment Any Architectural Facades (SAAF): An automatic segmentation model for building facades, walls and windows based on multimodal semantics guidance https://arxiv.org/abs/2506.09071

25. Y. Zhou, W. Jiang, B. Wang (2025). NeSF-Net: Building roof and facade segmentation based on neighborhood relationship awareness and scale-frequency modulation network for high-resolution remote sensing images https://www.sciencedirect.com/science/article/pii/S0924271625002126?via%3Dihub

26. S. Medghalchi, et al. (2024). Automated segmentation of large image datasets using generative adversarial networks in architecture https://arxiv.org/abs/2401.01147

27. S. Dimitrova (2023). Generating and Evaluating City Building Facades Using Artificial Intelligence https://dergipark.org.tr/en/download/article-file/3625385

28. T. Ma, X. Liu, C. Wang, S. Ge, L. Du, Q. Ren, A. Zhu (2025). Building reconstruction based on Segment Anything Model guided by structural feature https://doi.org/10.1117/12.3073632