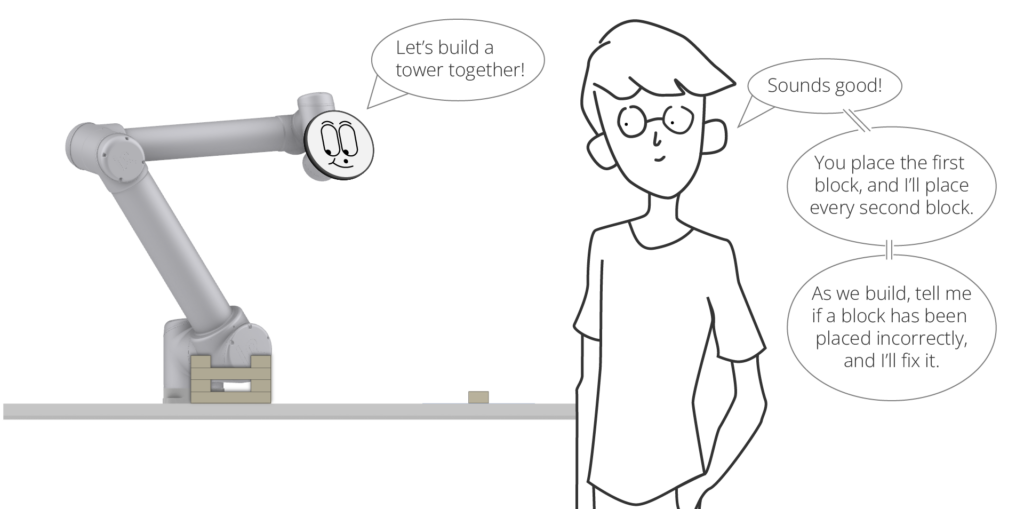

This project explores a framework for Human-Robot Collaboration (HRC) and behavioural fabrication, focusing on constructing a Jenga-like tower using small timber blocks.

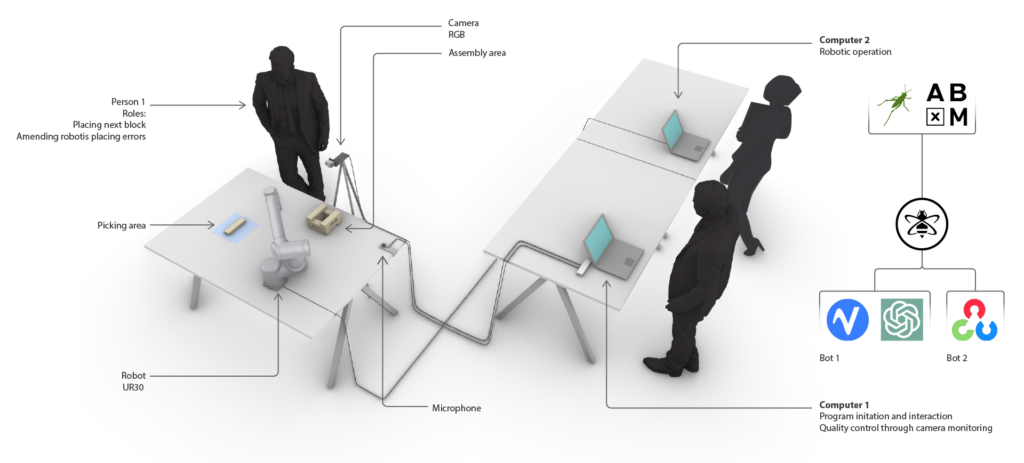

Using the Agent-Based Modeling system (ABxM) from the Institute for Computational Design and Construction (ICD), the project established a communication network that integrates a human participant, a computer vision system, and an interactive audio interface. This system provides voice assistance during the tower-building process, supporting collaboration through real-time dialogue and feedback.

This approach aims to ensure precision, which is crucial in the construction industry for reducing costs and maintenance. Because every step is verified, the likelihood of errors by either the robot or the human is reduced, ensuring quality and robustness in the construction process.

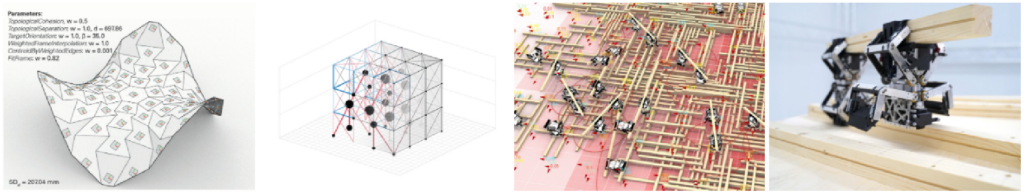

ABxM

The ABxM Framework is an open platform designed to experiment with agent-based (individual-based) systems. It aims to standardize modelling and simulation tools to enhance transparency and repeatability in research. It focuses on dynamic systems with locally interacting, autonomous, goal-oriented entities and supports both exploratory and goal-oriented simulations, such as optimization.

The framework comprises class libraries centred around the core agent library, ABxM.Core, which provides the core functionality for agent-based modelling, together with an interoperability library for Rhino 6 and later. ABxM.Core defines base classes for behaviours, agents, agent systems, and environments, as well as a Solver class. It provides implementations for various systems, including vector-based (“Boid”), point-based (“Cartesian”), matrix-based, mesh, and network systems, and its functionality can be extended through add-ons that use the core as their common infrastructure.
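To make the class structure above more concrete, the following Python sketch mirrors it with hypothetical classes: an agent carries behaviours, an agent system groups agents, and a solver steps all systems. It is not ABxM's API. The class names, the three-phase update (pre-execute, execute, post-execute) and the rule of averaging the moves proposed by each behaviour are assumptions chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Vec = Tuple[float, float, float]


class Behaviour:
    """Hypothetical base class: a behaviour proposes a move for its agent each step."""

    def execute(self, agent: "CartesianAgent") -> None:
        raise NotImplementedError


@dataclass
class SeekTarget(Behaviour):
    """Proposes a move covering a fraction `rate` of the distance to `target`."""
    target: Vec
    rate: float = 0.5

    def execute(self, agent: "CartesianAgent") -> None:
        move = tuple(self.rate * (t - p) for t, p in zip(self.target, agent.position))
        agent.moves.append(move)


@dataclass
class CartesianAgent:
    """Point-based agent: a position plus the behaviours acting on it."""
    position: Vec
    behaviours: List[Behaviour] = field(default_factory=list)
    moves: List[Vec] = field(default_factory=list)

    def pre_execute(self) -> None:
        self.moves.clear()

    def execute(self) -> None:
        for behaviour in self.behaviours:
            behaviour.execute(self)

    def post_execute(self) -> None:
        # Apply the average of all moves proposed during this step (an assumed rule).
        if self.moves:
            n = len(self.moves)
            self.position = tuple(
                p + sum(m[i] for m in self.moves) / n for i, p in enumerate(self.position)
            )


@dataclass
class AgentSystem:
    agents: List[CartesianAgent]


class Solver:
    """Runs one synchronous step: every agent finishes a phase before the next begins."""

    def __init__(self, systems: List[AgentSystem]) -> None:
        self.systems = systems

    def step(self) -> None:
        agents = [agent for system in self.systems for agent in system.agents]
        for phase in ("pre_execute", "execute", "post_execute"):
            for agent in agents:
                getattr(agent, phase)()


if __name__ == "__main__":
    robot = CartesianAgent((0.0, 0.0, 0.0), [SeekTarget((4.0, 0.0, 2.0))])
    Solver([AgentSystem([robot])]).step()
    print(robot.position)  # (2.0, 0.0, 1.0)
```

Separating the phases means every behaviour reads the same positions within a step, so the result does not depend on the order in which agents are visited.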

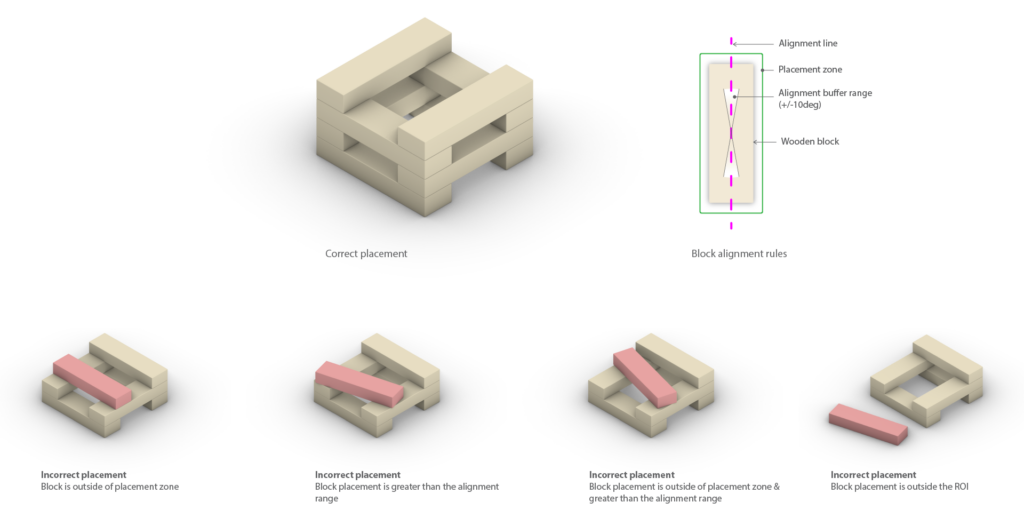

Placement rules

Creating a rule set for what constitutes a correct block placement directly informed the logic used to construct the various nodes in this project.

The rule set allows a margin of error, so that the system can cope with slight misalignments while still ensuring a stable tower.

Methodology

For this project, the goal was to create a Jenga tower that is as high and stable as possible through a Human-Robot collaborative process. To achieve this, the focus was on precision and improving communication between the robot and the human.

The task was divided between two computers:

Computer 1: Runs the MQTT broker (HiveMQ), which handles communication between the computers. It is also responsible for human interaction, using speech-to-text and text-to-speech libraries and a trained Generative AI to answer task-related questions.

Computer 2: Handles validation, using computer vision techniques in OpenCV to ensure each block is positioned correctly and aligned properly.

Execution Logic

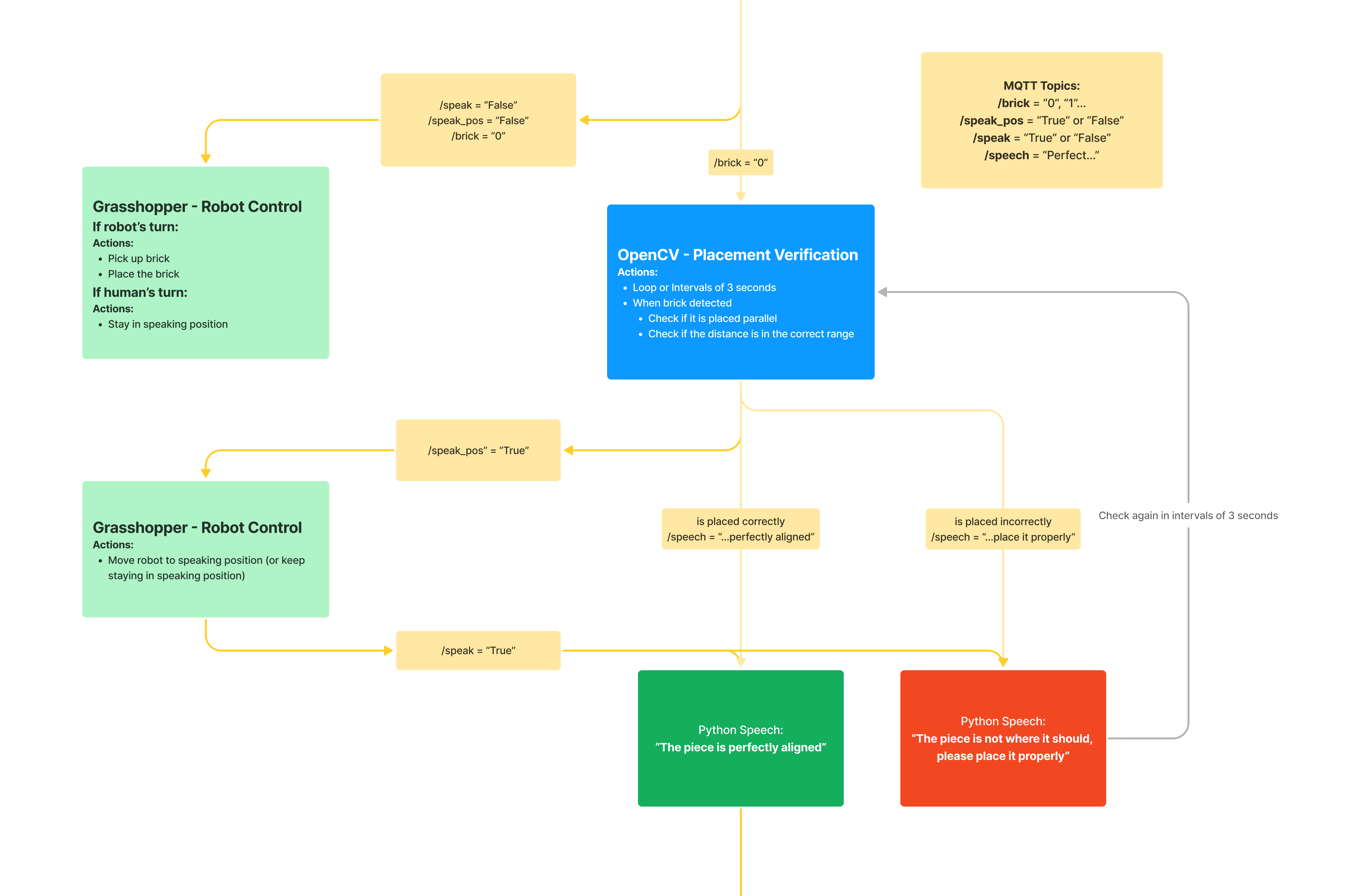

The runtime logic for this task uses MQTT topics to coordinate interactions between the robot and the human participant. It works as follows:

- The robot receives the brick number. If the number is even, it is the robot’s turn to pick up and place the brick. If the number is odd, the robot waits in the speaking position while the human picks and places the brick.

- Once the corresponding brick is placed, Computer 2 checks if the brick has been placed correctly. If so, an approval message is sent to the communication bot. If the brick is not in the correct position after a certain amount of time, a misalignment message is sent to the communication bot until the human corrects it.

- The communication bot only comments on whether the brick is correctly or incorrectly placed while the robot is in the speaking position, which makes the conversation feel more natural.

- Once the current brick is correctly placed, the next brick number is sent, and the process repeats.
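The turn-taking loop above can be sketched as follows. This is a minimal, self-contained illustration rather than the project's code: an in-memory publish/subscribe class stands in for the HiveMQ broker (a real deployment would use an MQTT client library such as paho-mqtt), and the topic names `jenga/brick_number` and `jenga/validation` as well as the payloads `approved` and `misaligned` are hypothetical.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List


class InMemoryBroker:
    """Stand-in for the MQTT broker: forwards each message to all subscribers of a topic."""

    def __init__(self) -> None:
        self.subscribers: DefaultDict[str, List[Callable[[str], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[str], None]) -> None:
        self.subscribers[topic].append(callback)

    def publish(self, topic: str, payload: str) -> None:
        for callback in list(self.subscribers[topic]):
            callback(payload)


def whose_turn(brick_number: int) -> str:
    """Even brick numbers are placed by the robot, odd ones by the human."""
    return "robot" if brick_number % 2 == 0 else "human"


class Coordinator:
    """Publishes the next brick number only after the current brick is approved."""

    def __init__(self, broker: InMemoryBroker, total_bricks: int) -> None:
        self.broker = broker
        self.total_bricks = total_bricks
        self.current = 0
        self.log: List[str] = []
        broker.subscribe("jenga/validation", self.on_validation)

    def start(self) -> None:
        self.broker.publish("jenga/brick_number", str(self.current))

    def on_validation(self, payload: str) -> None:
        if payload == "approved":
            self.log.append(f"brick {self.current} approved")
            self.current += 1
            if self.current < self.total_bricks:
                self.broker.publish("jenga/brick_number", str(self.current))
        else:
            # Misalignment: the bot keeps prompting and the brick number stays the same.
            self.log.append(f"brick {self.current} misaligned")


if __name__ == "__main__":
    broker = InMemoryBroker()
    broker.subscribe("jenga/brick_number", lambda p: print(f"brick {p}: {whose_turn(int(p))}'s turn"))
    coordinator = Coordinator(broker, total_bricks=3)
    coordinator.start()                               # brick 0: robot's turn
    broker.publish("jenga/validation", "approved")    # brick 1: human's turn
    broker.publish("jenga/validation", "misaligned")  # no new brick number
    broker.publish("jenga/validation", "approved")    # brick 2: robot's turn
```

Because the next brick number is only published on approval, a misaligned brick simply holds the loop in place until validation succeeds, which matches the repeat-until-correct behaviour described above.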

Interaction/Communication Node

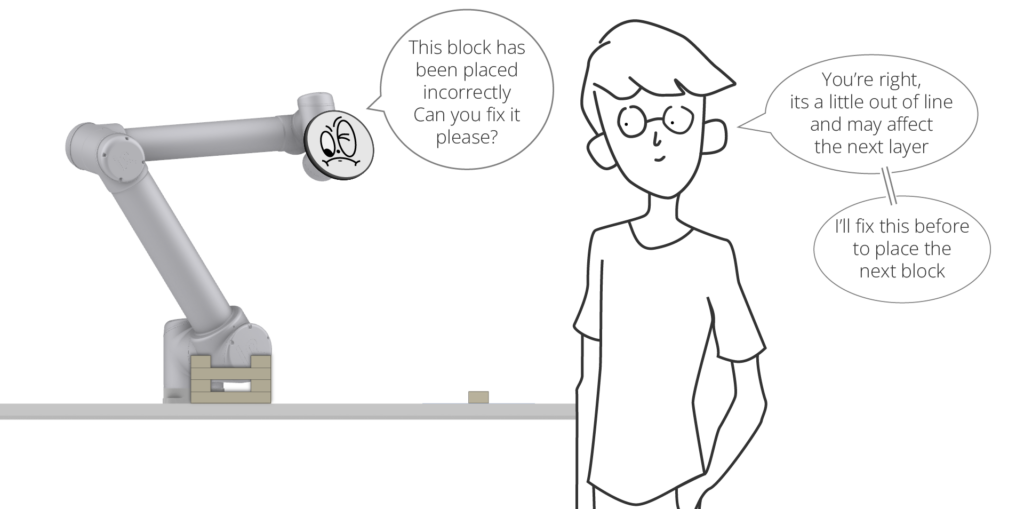

This node was created to enhance communication between the human and the robot while also exploring the possibility of the robot taking the lead and being able to respond to any task-related question.

A wake-up word, “Hey, Jarvis!” is implemented so the human can signal when the robot should listen. Jarvis then asks if the human is ready to collaborate on the task and waits for confirmation before proceeding.
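The wake-word gate can be sketched as a small state machine that operates on speech-to-text transcripts. This illustration rests on several assumptions. The speech-to-text and text-to-speech steps are left out. Matching is done on lower-cased text rather than with a dedicated wake-word detector. The class name, the states, the confirmation words and the reply phrasing are all hypothetical.

```python
import re
from typing import Optional

WAKE_WORD = "hey jarvis"
CONFIRMATIONS = {"yes", "ready", "sure", "ok"}  # hypothetical confirmation words


def normalise(transcript: str) -> str:
    """Lower-case and strip punctuation, so "Hey, Jarvis!" becomes "hey jarvis"."""
    return re.sub(r"[^a-z ]", "", transcript.lower())


class WakeWordGate:
    """Ignores speech until the wake word is heard, then waits for confirmation."""

    def __init__(self) -> None:
        self.state = "idle"

    def handle(self, transcript: str) -> Optional[str]:
        """Return the assistant's spoken reply, or None if nothing should be said."""
        text = normalise(transcript)
        if self.state == "idle":
            if WAKE_WORD in text:
                self.state = "awaiting_confirmation"
                return "Hello! Are you ready to build the tower together?"
            return None
        if self.state == "awaiting_confirmation":
            if CONFIRMATIONS & set(text.split()):
                self.state = "collaborating"
                return "Great, let's start."
            return "Tell me when you are ready."
        # In the "collaborating" state, questions would be passed on to the language model.
        return None
```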

To make the interaction more natural, the node is also connected to ChatGPT 3.5, trained to provide more realistic responses, enhancing the user’s experience.
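The text does not describe how the model was prompted. One common way to keep answers task-relevant is to send each question together with a system message that describes the task and its current state. The sketch below only builds such a message list, using the role/content format of OpenAI's Chat Completions API, and omits the API call itself. The persona text, the helper name `build_messages` and the state fields are assumptions.

```python
from typing import Dict, List, Optional


def build_messages(question: str, brick_number: int, placement_ok: Optional[bool]) -> List[Dict[str, str]]:
    """Wrap a user question with a system message describing the task and its current state."""
    placer = "robot" if brick_number % 2 == 0 else "human"
    status = {True: "correctly placed", False: "misaligned", None: "not yet checked"}[placement_ok]
    system = (
        "You are Jarvis, a voice assistant helping a human and a robot build a "
        "Jenga-like tower from small timber blocks. Only answer questions about this "
        "task, in one or two short sentences suitable for speech. "
        f"Current brick: {brick_number}, placed by the {placer}; status: {status}."
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]
```

Injecting the live brick state into each request lets the model answer "is my brick in the right place?" from the validation result instead of guessing.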

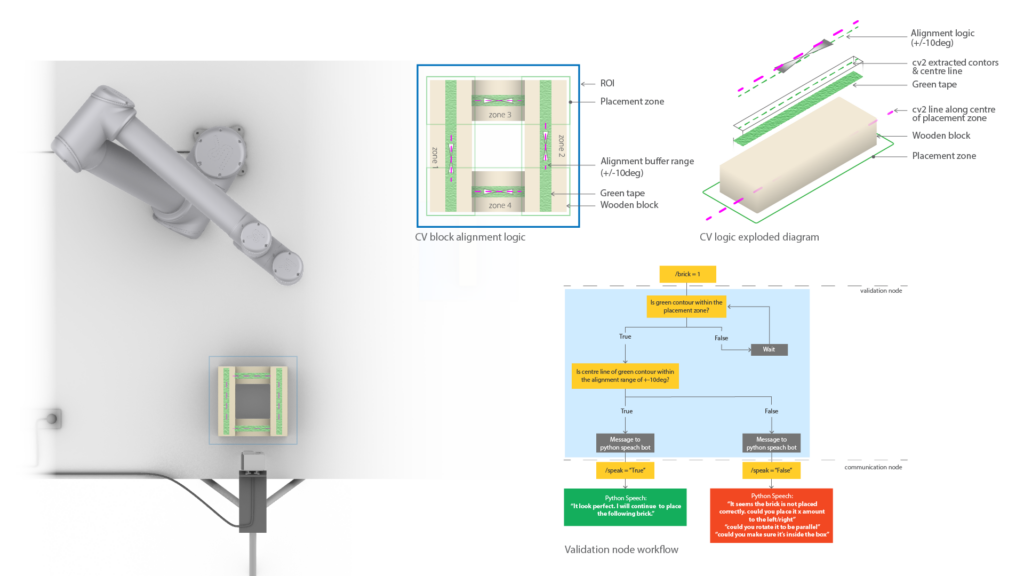

Validation Node

Using computer vision, a pixel-based square bounding box (blue) was created to track the placement of each piece. This box was subdivided into four quadrants (orange), and on each turn one of them is designated as the target quadrant (green). The target quadrant is determined by the brick number sent through MQTT and rotates from turn to turn until the tower is complete.
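The quadrant logic can be sketched as below, assuming the bounding box is an axis-aligned pixel rectangle `(x, y, w, h)` with `y` growing downwards. The clockwise quadrant order and the mapping `brick_number % 4` from brick number to target quadrant are hypothetical. The text only states that the target is derived from the brick number and rotates each turn.

```python
from typing import Dict, NamedTuple


class Rect(NamedTuple):
    """Axis-aligned pixel rectangle; y grows downwards as in image coordinates."""
    x: int
    y: int
    w: int
    h: int

    def contains(self, px: float, py: float) -> bool:
        return self.x <= px < self.x + self.w and self.y <= py < self.y + self.h


# Clockwise order, so the target moves one quadrant per turn (an assumption).
QUADRANT_ORDER = ("top_left", "top_right", "bottom_right", "bottom_left")


def quadrants(box: Rect) -> Dict[str, Rect]:
    """Split the bounding box into four quadrants (odd sizes go to the right/bottom halves)."""
    hw, hh = box.w // 2, box.h // 2
    return {
        "top_left": Rect(box.x, box.y, hw, hh),
        "top_right": Rect(box.x + hw, box.y, box.w - hw, hh),
        "bottom_right": Rect(box.x + hw, box.y + hh, box.w - hw, box.h - hh),
        "bottom_left": Rect(box.x, box.y + hh, hw, box.h - hh),
    }


def target_quadrant(box: Rect, brick_number: int) -> Rect:
    """Hypothetical mapping from the MQTT brick number to the active quadrant."""
    return quadrants(box)[QUADRANT_ORDER[brick_number % len(QUADRANT_ORDER)]]
```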

To facilitate tracking, each piece was marked with green tape along its middle line. If a detected line falls below a certain pixel threshold, it is disregarded: this indicates that the piece belongs to a previous round, and ignoring it prevents false positives.
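A minimal sketch of the two filtering steps follows. It assumes the tape is found by an HSV colour test. In OpenCV this would typically be `cv2.cvtColor` plus `cv2.inRange` over the whole image; here a per-pixel test with Python's `colorsys` keeps the example dependency-free. It also assumes line segments arrive as `(x1, y1, x2, y2)` tuples, for example from `cv2.HoughLinesP`. Reading "below a pixel threshold" as "lower in the image than row `y_threshold`" is an interpretation, and the hue, saturation and value limits are placeholder values.

```python
import colorsys
from typing import List, Tuple

Segment = Tuple[int, int, int, int]  # (x1, y1, x2, y2) in pixels


def is_tape_green(r: int, g: int, b: int) -> bool:
    """Placeholder HSV test for green tape; limits would need tuning to the lighting."""
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)  # all in [0, 1]
    return 0.22 <= h <= 0.45 and s >= 0.4 and v >= 0.2


def current_round_segments(segments: List[Segment], y_threshold: int) -> List[Segment]:
    """Drop tape lines whose midpoint lies below row y_threshold (i.e. lower in the image).

    Image rows grow downwards, so those lines belong to earlier layers of the tower
    and would otherwise be counted again as false positives.
    """
    return [s for s in segments if (s[1] + s[3]) / 2 <= y_threshold]
```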

Pieces must be placed within the targeted quadrant and properly aligned. A buffer range was established around the middle line to ensure correct alignment and orientation. If the piece falls within this range, it is considered aligned; otherwise, adjustments are needed.
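The alignment rule can be expressed as three checks on the detected tape segment. First, its midpoint lies inside the target quadrant. Second, it stays within a buffer band around the quadrant's middle line. Third, its orientation deviates little from the expected direction. The sketch assumes the expected line is horizontal in the image and that the tolerances are given in pixels and degrees. `buffer_px`, `max_angle_deg` and their defaults are hypothetical, not the project's values.

```python
import math
from typing import Tuple

Segment = Tuple[float, float, float, float]   # (x1, y1, x2, y2) in pixels
Quadrant = Tuple[float, float, float, float]  # (x, y, w, h) in pixels


def is_aligned(seg: Segment, quad: Quadrant, buffer_px: float = 8.0, max_angle_deg: float = 5.0) -> bool:
    """True if the tape segment sits in the quadrant, near its middle line, roughly horizontal."""
    x1, y1, x2, y2 = seg
    qx, qy, qw, qh = quad
    mx, my = (x1 + x2) / 2, (y1 + y2) / 2
    # 1. The midpoint of the tape line must fall inside the target quadrant.
    if not (qx <= mx <= qx + qw and qy <= my <= qy + qh):
        return False
    # 2. It must lie within the buffer band around the quadrant's horizontal middle line.
    if abs(my - (qy + qh / 2)) > buffer_px:
        return False
    # 3. Its deviation from horizontal (folded into [0, 90] degrees) must be small.
    angle = abs(math.degrees(math.atan2(y2 - y1, x2 - x1))) % 180
    deviation = min(angle, 180 - angle)
    return deviation <= max_angle_deg
```

Folding the angle makes the check independent of which end of the tape the line detector reports first.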

Execution Node

The robot was modelled in ABxM as a point-based (Cartesian) agent and controlled to pick and place the pieces. This configuration allows precise movement and positioning, so that each piece is accurately placed in the Jenga-like tower.

Additionally, the robot was programmed to exhibit speaking behaviour. When it has something to communicate or when it is the human’s turn, the robot moves to a designated speaking position.
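The pick-and-place sequence and the speaking behaviour can be sketched as a plan of waypoints for one brick. The coordinates, the approach height and the action names are hypothetical. In the project these motions would be produced by ABxM behaviours rather than by a hand-written list.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]  # x, y, z in millimetres

SPEAKING_POSITION: Point = (0.0, -400.0, 600.0)  # hypothetical pose facing the human
APPROACH_HEIGHT = 80.0  # hypothetical clearance above a brick, in mm


def above(p: Point, dz: float = APPROACH_HEIGHT) -> Point:
    return (p[0], p[1], p[2] + dz)


def plan_turn(brick_number: int, pick: Point, place: Point, has_message: bool) -> List[Tuple[str, Point]]:
    """Sequence of (action, target point) for one brick."""
    robot_turn = brick_number % 2 == 0
    steps: List[Tuple[str, Point]] = []
    if robot_turn:
        # Approach from above, grip, lift, travel, lower, release, retract.
        steps += [
            ("move", above(pick)), ("move", pick), ("grip", pick), ("move", above(pick)),
            ("move", above(place)), ("move", place), ("release", place), ("move", above(place)),
        ]
    if has_message or not robot_turn:
        # Speaking behaviour: face the human when there is something to say
        # or while the human is placing the brick.
        steps.append(("speak", SPEAKING_POSITION))
    return steps
```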

Results

Although its responses came from a Generative AI, the communication bot spoke with a strong German accent because the developer's computer was set to German. This issue is planned to be addressed in a future iteration, although the unexpected accent added some humour and entertainment to the interaction.

The validation system worked smoothly overall. Initial false positives due to shadows were quickly resolved by improving the lighting conditions. However, building a very tall tower was challenging because the camera was positioned at an angle rather than a top-down view. This perspective issue caused bricks to appear outside the designated quadrants even when correctly placed.

There were also difficulties synchronising the robot's move to the speaking position with the rest of the system. These were resolved by adding delays where needed, which ensured smooth transitions and accurate communication during the task.

Further Research

The following changes are identified as promising areas for improving the methodology:

- Constraining the LLM for Task-Specific Understanding: Enhancing the language model’s comprehension of each specific task to ensure more accurate and relevant interactions.

- Adaptive CV for Dynamic Placement Zones: The computer vision system could be improved to include an adaptive placement zone that accommodates perspective changes as the tower rises. Further testing with depth sensors could be beneficial for more accurate placement.

- Deeper Integration with ABxM Library: Gaining a better understanding of the ABxM library to refine control over robotic motions and implement additional behaviours for more efficient and precise operations.
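The adaptive placement zone proposed above could, for example, build on a planar homography that maps the oblique camera view of the tower's top layer onto a top-down square, where the quadrant and alignment checks no longer suffer from perspective. This is a swapped-in technique, not something the project implemented. OpenCV offers it as `cv2.getPerspectiveTransform`; the sketch below solves the same eight-unknown linear system in plain Python. The four reference points (for instance the bounding-box corners measured on the current top layer) are assumptions. Because a homography only rectifies a single plane, it would have to be re-estimated as the tower grows, which is where depth sensing could help.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def solve_linear(a: List[List[float]], b: List[float]) -> List[float]:
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def homography(src: Sequence[Point], dst: Sequence[Point]) -> List[List[float]]:
    """3x3 matrix H (with h33 = 1) mapping four image points onto four top-down points."""
    a: List[List[float]] = []
    b: List[float] = []
    for (x, y), (u, v) in zip(src, dst):
        # u * (h31 x + h32 y + 1) = h11 x + h12 y + h13, and likewise for v.
        a.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y])
        b.append(u)
        a.append([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b) + [1.0]
    return [h[0:3], h[3:6], h[6:9]]


def transform(h: List[List[float]], p: Point) -> Point:
    """Map an image point into the top-down frame (divide by the projective weight)."""
    x, y = p
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)
```

A detected tape midpoint can then be passed through `transform` and tested against fixed quadrants of the rectified square, so the zones no longer drift with the viewing angle.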

In addition, a user study is planned to test our hypotheses and refine the user experience. This will help reduce any biases we, as the system's creators, may have and ensure a more objective evaluation.

Considering Human-Centric HRC through the lens of Feminist Technoscience

MRAC workshop 3.2 in partnership with the University of Stuttgart

Faculty: Gili Ron (ICD), Lasath Siriwardena (ICD), Samuel Leder (ICD), Nestor Beguin (IAAC)