Intro

Designers know the pain: a perfect image—ruined by one wrong material. A distracting object. A missing texture. An awkward composition. You could retouch it manually, but how long will that take? And will it ever really blend? What if you could just circle the problem area and ask for something better? Something that fits your vision, your style? With the right model—and the right interface—maybe image editing doesn’t have to feel like damage control. Maybe it can feel like storytelling. So what would you change first?

The challenge

Our goal for this seminar was to explore how generative models can become design instruments, not just image generators.

The result is a working web app that lets users experiment with different architectural materialities using AI.

——————————————————————————————————————————————————————————————————————————-

Training Sets

The initial goal was to build an app where users could design by blending two distinct architectural languages: inflatable, translucent structures and sculptural rammed-earth proposals.

We started by building two datasets, ‘NFLTD’ and ‘RMMD’. Each name also serves as the trigger word that applies the corresponding style in a prompt. We carefully collected 60–70 images for each, focusing on clarity, framing, and consistency. For every image we also wrote a small text file containing its caption, so each dataset consists of image–caption pairs. These captions were later used to train the LoRAs.
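A dataset built from images plus matching caption files can be checked with a small script before training. This is a sketch, assuming a flat folder per style with a `.txt` caption next to each image under the same file name (a convention many LoRA trainers accept). `collect_pairs` is a hypothetical helper; it fails on a missing caption and prepends the trigger word to any caption that lacks it.

```python
from pathlib import Path

# Assumed layout: "villa_01.jpg" sits next to "villa_01.txt" in the same folder.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".webp"}


def collect_pairs(dataset_dir: str, trigger_word: str) -> list[tuple[Path, str]]:
    """Return (image_path, caption) pairs for every image in dataset_dir."""
    pairs = []
    for image_path in sorted(Path(dataset_dir).iterdir()):
        if image_path.suffix.lower() not in IMAGE_EXTENSIONS:
            continue  # skip caption files and anything that is not an image
        caption_path = image_path.with_suffix(".txt")
        if not caption_path.exists():
            raise FileNotFoundError(f"missing caption for {image_path.name}")
        caption = caption_path.read_text(encoding="utf-8").strip()
        if trigger_word not in caption:
            # Prepend the trigger word in the returned caption (the file itself is not rewritten).
            caption = f"{trigger_word}, {caption}"
        pairs.append((image_path, caption))
    return pairs
```

Calling `collect_pairs("datasets/NFLTD", "NFLTD")` on such a folder (the path is illustrative) would list every pair and surface missing captions early, before a Colab session is spent on training.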

LoRA Training

The next step was to load all of this data into Google Colab, using one notebook per style. We trained our LoRAs on top of a base Flux model, which offered a reasonable compute time and good results.
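For readers new to LoRA (Low-Rank Adaptation): instead of retraining the full Flux weights, a LoRA learns two small matrices per adapted layer, and their product, scaled by alpha / rank, is added to the frozen base weights. The framework-free sketch below shows that update on a toy 3x3 matrix; the sizes, values, and helper names are illustrative assumptions, and real trainers apply the same idea across many layers of the model.

```python
# Framework-free illustration of a LoRA update on a single weight matrix.
# Matrix sizes and values are toy assumptions; real Flux layers are far larger.


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def lora_delta(up, down, alpha, rank):
    """Low-rank update (alpha / rank) * up @ down; up is d_out x r, down is r x d_in."""
    scale = alpha / rank
    return [[scale * v for v in row] for row in matmul(up, down)]


def apply_lora(base, delta, strength=1.0):
    """Return base + strength * delta as a new matrix; the frozen base is left untouched."""
    return [
        [w + strength * d for w, d in zip(base_row, delta_row)]
        for base_row, delta_row in zip(base, delta)
    ]


# A 3x3 frozen weight adapted with a rank-1 LoRA: 6 trainable numbers instead of 9.
W = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
up = [[1.0], [2.0], [0.0]]  # d_out x r, with r = 1
down = [[0.5, 0.0, 0.5]]  # r x d_in
delta = lora_delta(up, down, alpha=1.0, rank=1)
W_adapted = apply_lora(W, delta)
print(W_adapted)  # [[1.5, 0.0, 0.5], [1.0, 1.0, 1.0], [0.0, 0.0, 1.0]]
```

Because only `up` and `down` are trained, the adapter stays small and can be switched on, off, or scaled at generation time without touching the base model.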

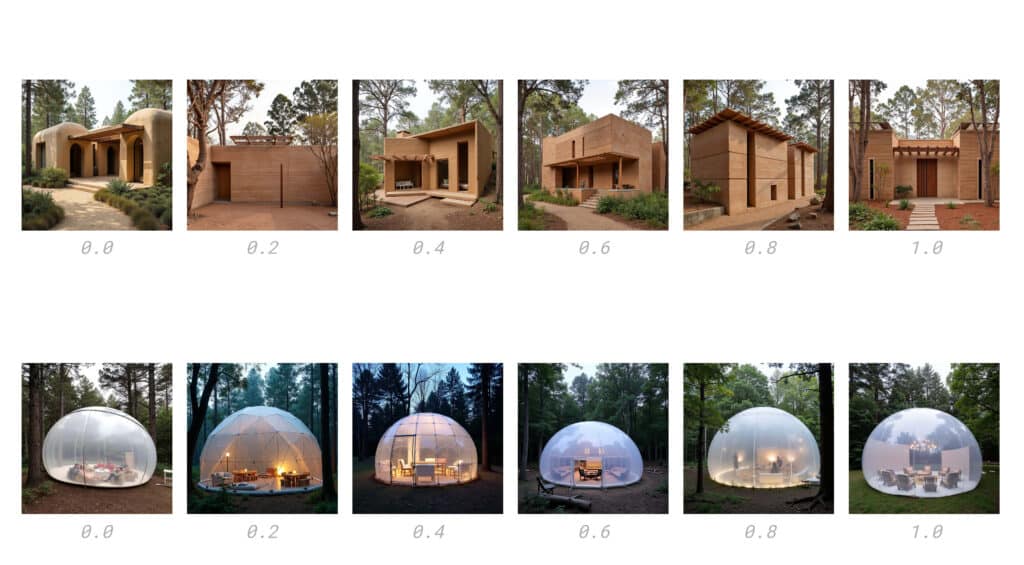

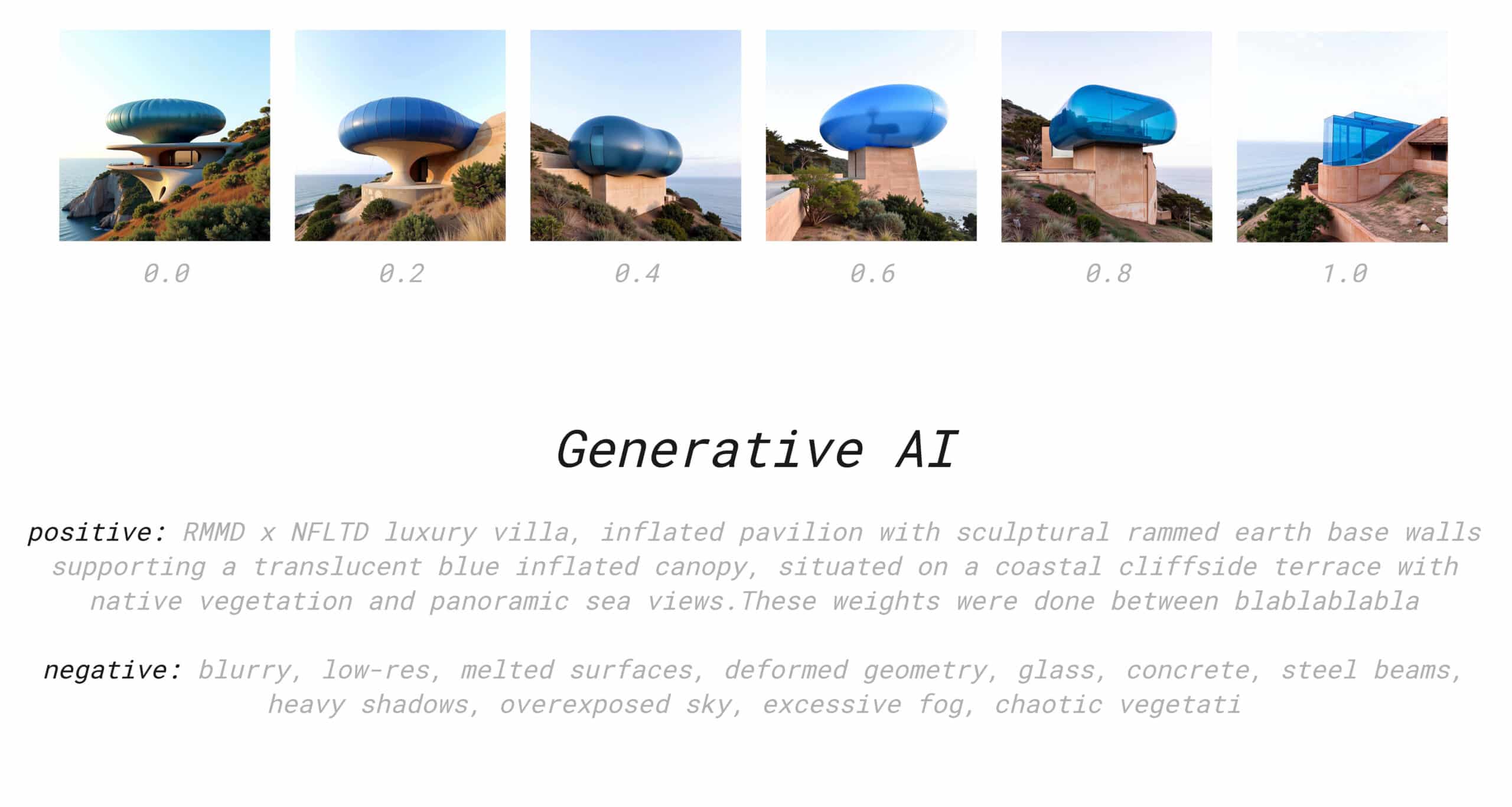

Here you can see how each model responds to interpolation: as the LoRA strength goes from 0 to 1, each architectural identity becomes more pronounced.
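Assuming the 0-to-1 interpolation in these figures corresponds to the LoRA strength, each step scales the learned update before adding it to the base weights: W_eff = W + s · ΔW. The toy sweep below, over a few flattened weights, shows that linear scaling. `strength_sweep` and the numbers are hypothetical, and many tools also accept strengths above 1.

```python
# Sketch of a LoRA strength sweep: W_eff = W + s * delta for evenly spaced s in [0, 1].


def effective_weights(base, delta, s):
    """Blend the frozen weights with the LoRA update at strength s (0 = base model only)."""
    return [w + s * d for w, d in zip(base, delta)]


def strength_sweep(base, delta, steps=5):
    """Return (s, weights) pairs for `steps` evenly spaced strengths from 0 to 1."""
    strengths = [i / (steps - 1) for i in range(steps)]
    return [(s, effective_weights(base, delta, s)) for s in strengths]


# Toy flattened weights: the style's influence grows linearly with s.
base = [0.2, -0.4, 1.0]
delta = [0.8, 0.4, -1.0]
for s, weights in strength_sweep(base, delta):
    print(f"s={s:.2f}", [round(w, 2) for w in weights])
```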

Workflows

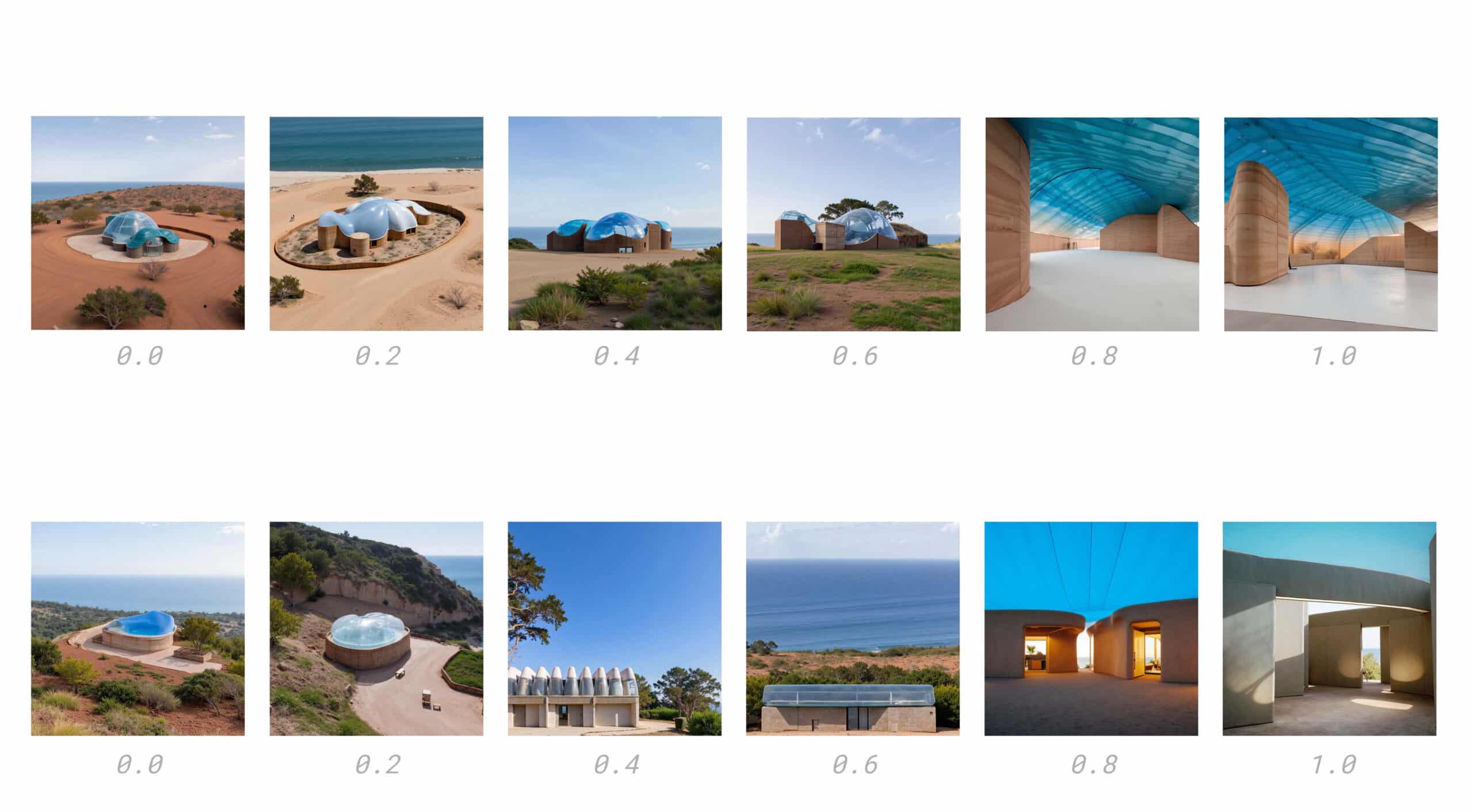

Once training was complete, we started building generation workflows in ComfyUI, which gave us a visual, modular way to test combinations and prompt structures. We ran a series of tests exploring transitions and mixed outputs, trying to understand how controllable and expressive the models really were.
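One systematic way to run tests like these is a fixed grid of prompts and LoRA strengths rendered with the same seed, so that differences between images come from the parameters rather than from the noise. The sketch below only enumerates such a grid. The second prompt, the strength values, the seed, and `build_test_grid` are illustrative assumptions, and each configuration would still need to be queued in ComfyUI.

```python
from itertools import product

# Hypothetical test matrix: every prompt is paired with every strength for each LoRA,
# and a fixed seed keeps runs comparable.
PROMPTS = ["a house on the beach", "a pavilion in a forest"]
STRENGTHS = [0.0, 0.5, 1.0]
SEED = 42


def build_test_grid(prompts, nfltd_strengths, rmmd_strengths, seed):
    """Enumerate one run configuration per (prompt, NFLTD strength, RMMD strength) combination."""
    return [
        {"prompt": p, "nfltd": a, "rmmd": b, "seed": seed}
        for p, a, b in product(prompts, nfltd_strengths, rmmd_strengths)
    ]


grid = build_test_grid(PROMPTS, STRENGTHS, STRENGTHS, SEED)
print(len(grid), "runs")  # 2 prompts x 3 x 3 strengths = 18 runs
```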

Mixing LoRAs

We then developed prompt and weight mixing strategies to blend the two LoRAs together. This enabled hybrid outputs — like inflatable villas rooted in earth materials, placed on real terrains.
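One possible weight-mixing strategy, offered here as an assumption rather than the exact setup used, is to chain two LoRA loaders and drive both strengths from a single blend value t (NFLTD at t, RMMD at 1 - t), with both trigger words in the prompt. The fragment below builds that part of a graph as a Python dict in ComfyUI's API workflow format, using the built-in `LoraLoader` and `CLIPTextEncode` nodes. Node `"1"` is a placeholder for whichever loader supplies MODEL and CLIP, and the `.safetensors` file names are hypothetical.

```python
import json

# Partial ComfyUI API-format graph. Inputs that reference other nodes use
# [node_id, output_index]; LoraLoader outputs MODEL at index 0 and CLIP at index 1.


def lora_blend_nodes(t, subject="a house on the beach"):
    """Chain NFLTD (strength t) and RMMD (strength 1 - t), then encode a prompt with both triggers."""
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must be between 0 and 1")
    return {
        "10": {
            "class_type": "LoraLoader",
            "inputs": {
                "model": ["1", 0], "clip": ["1", 1],  # placeholder upstream loader
                "lora_name": "NFLTD.safetensors",
                "strength_model": t, "strength_clip": t,
            },
        },
        "11": {
            "class_type": "LoraLoader",
            "inputs": {
                # Stack the second LoRA on the outputs of the first.
                "model": ["10", 0], "clip": ["10", 1],
                "lora_name": "RMMD.safetensors",
                "strength_model": 1.0 - t, "strength_clip": 1.0 - t,
            },
        },
        "12": {
            "class_type": "CLIPTextEncode",
            "inputs": {"clip": ["11", 1], "text": f"NFLTD RMMD, {subject}"},
        },
    }


print(json.dumps(lora_blend_nodes(0.3), indent=2))
```

Tying both strengths to one value keeps the interface to a single slider; independent sliders per LoRA are an equally valid design if the two styles should be able to reinforce each other.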

First Tests

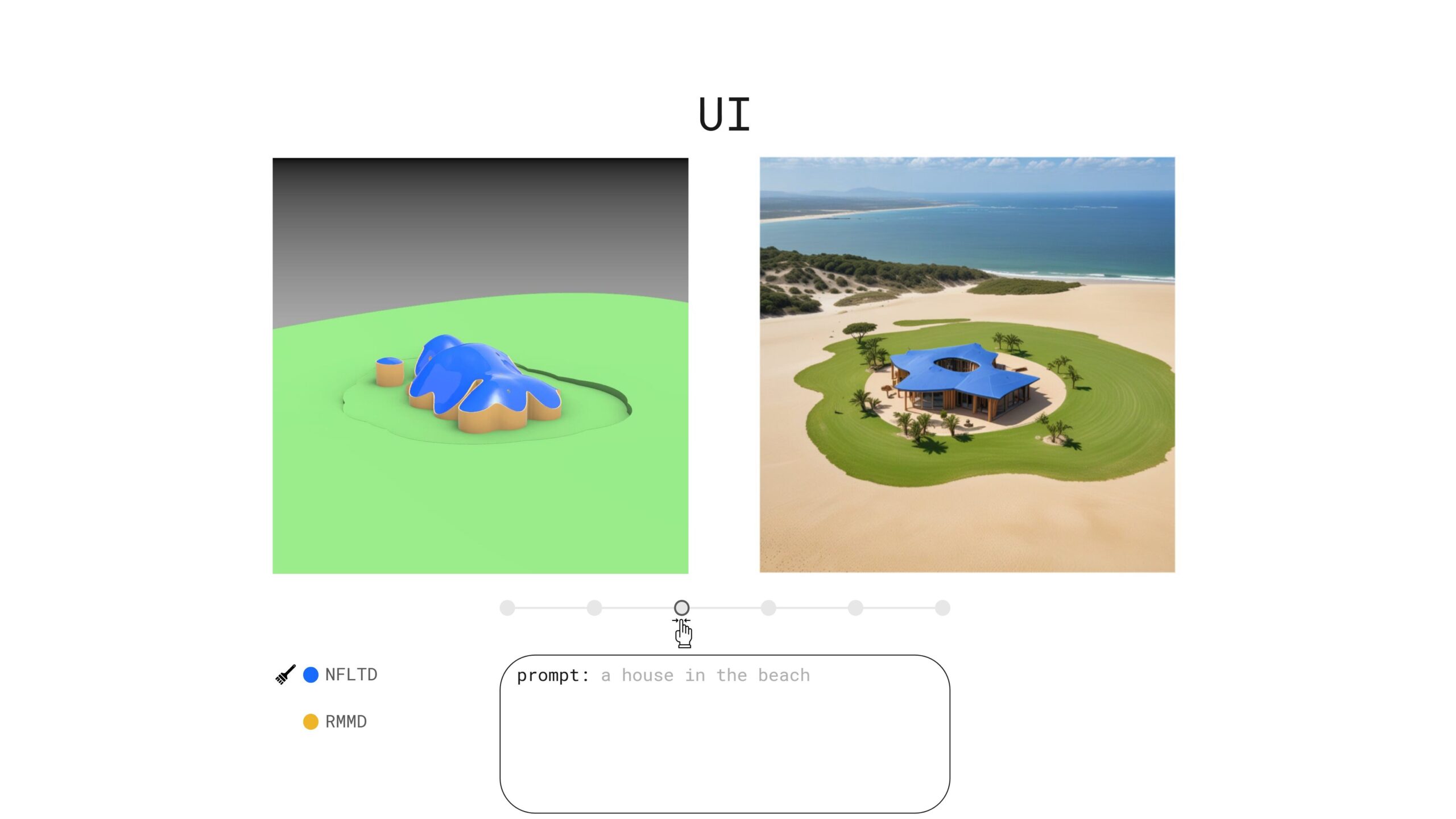

Here we started to shape the idea of interaction: what if the user could ‘paint’ with these weights and not just choose one or the other?
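To make "painting with weights" concrete, one option is a canvas that stores a blend value per cell (0.0 = fully RMMD, 1.0 = fully NFLTD), which brush strokes push toward a target. The sketch below is illustrative only: the grid size, the linear brush falloff, and the helper names are assumptions, and how such a map would feed into generation (for example, as per-LoRA masks) is left open.

```python
# Hypothetical weight canvas: one blend value per cell, 0.0 = RMMD, 1.0 = NFLTD.


def new_weight_map(width, height, value=0.5):
    """Create a canvas filled with a neutral blend value."""
    return [[value] * width for _ in range(height)]


def paint(weight_map, cx, cy, radius, target, opacity=1.0):
    """Blend cells within `radius` of (cx, cy) toward `target`, fading linearly to the rim."""
    if radius <= 0:
        raise ValueError("radius must be positive")
    for y, row in enumerate(weight_map):
        for x, current in enumerate(row):
            dist = ((x - cx) ** 2 + (y - cy) ** 2) ** 0.5
            if dist > radius:
                continue
            falloff = 1.0 - dist / radius  # 1 at the brush centre, 0 at the rim
            row[x] = current + opacity * falloff * (target - current)
    return weight_map


canvas = new_weight_map(8, 8)
paint(canvas, cx=3, cy=3, radius=2.5, target=1.0)  # push the centre toward NFLTD
print(canvas[3][3], canvas[0][0])  # 1.0 0.5
```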

Prototype

On the left is our 3D mockup, a stylized preview of the generation zone. On the right is a sample output for the prompt ‘a house on the beach’, generated by blending both LoRAs.

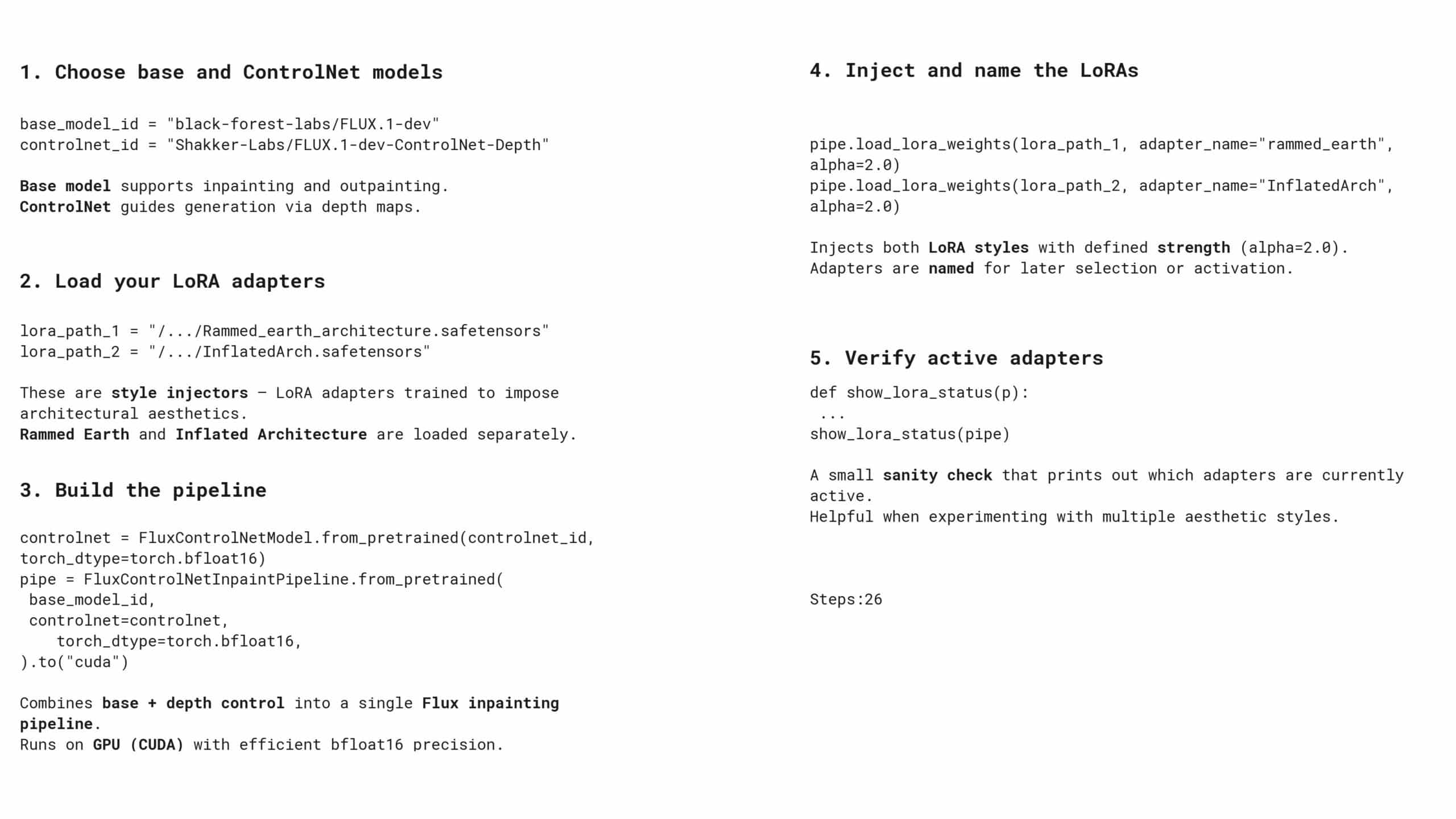

FLUX + CONTROLNET INPAINT SETUP

APP

RESULTS

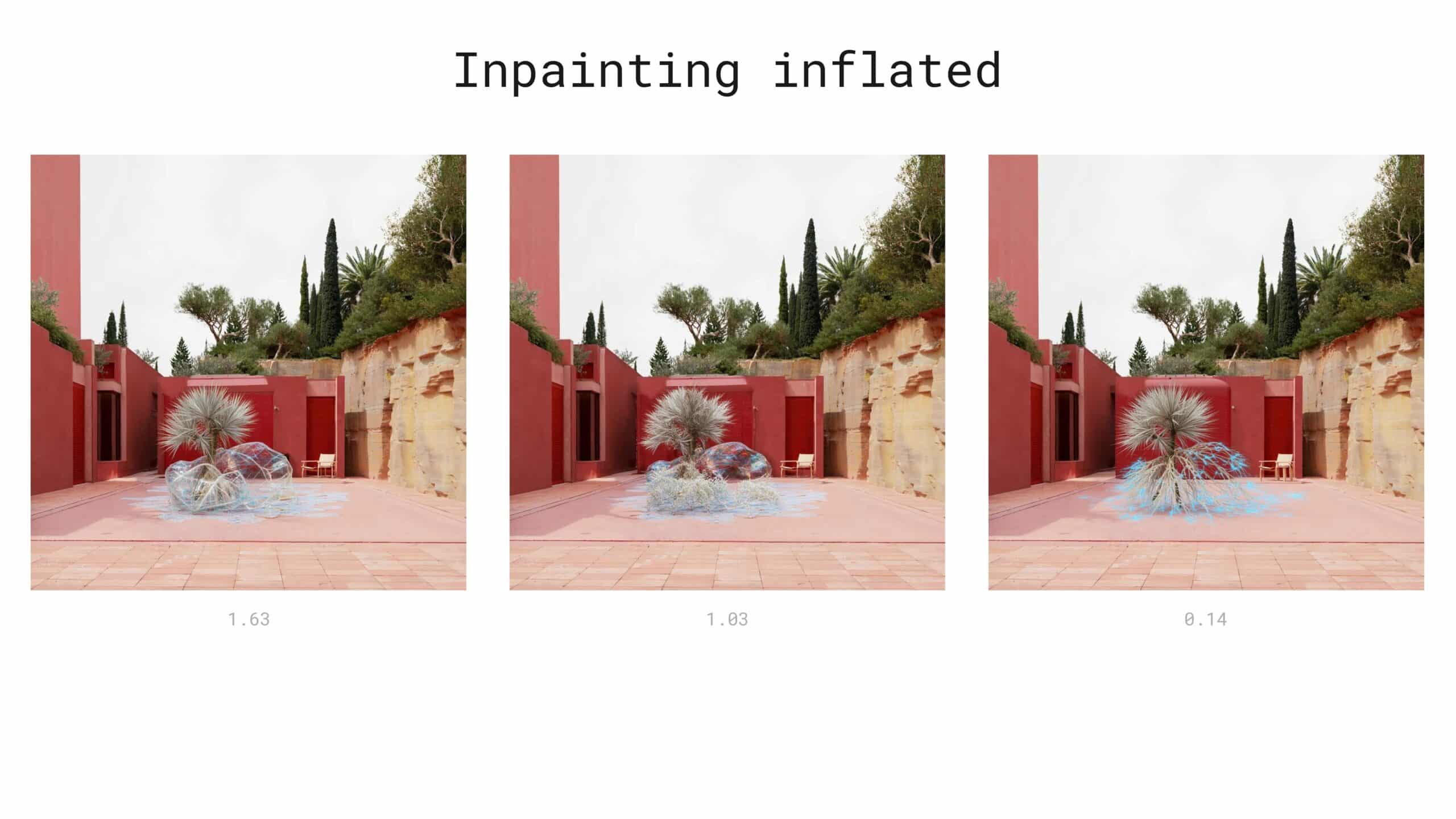

We can also achieve striking results when aiming for more sculptural or unexpected outcomes. In this example, we apply our inflatable LoRA (NFLTD), designed specifically to explore soft, inflated forms with expressive geometries.

We can clearly see how our LoRA enhances the realism of the wall’s texture, capturing subtle material qualities such as layering and depth. Adjusting the strength parameter controls how dominant the LoRA becomes, from a light stylistic touch to a fully transformed surface.
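Assuming results like the wall come from the inpainting setup, where only a selected area is regenerated, a common final step is a per-pixel alpha composite that keeps the original image outside the mask. The sketch below is a generic illustration on grayscale values in [0, 1], not necessarily how the app's Flux + ControlNet inpainting pipeline blends its output; `composite` is a hypothetical helper.

```python
# Generic per-pixel alpha composite: images and mask are same-size grids of floats in [0, 1].


def composite(original, generated, mask):
    """Keep original pixels where mask is 0, generated pixels where mask is 1, blend in between."""
    return [
        [m * g + (1.0 - m) * o for o, g, m in zip(orow, grow, mrow)]
        for orow, grow, mrow in zip(original, generated, mask)
    ]


original = [[0.2, 0.2], [0.2, 0.2]]
generated = [[0.9, 0.9], [0.9, 0.9]]
mask = [[1.0, 0.5], [0.0, 0.0]]  # only the top row (e.g. the wall) is edited
result = composite(original, generated, mask)
print(result)
```

A soft mask edge (values between 0 and 1) helps the new material blend into its surroundings instead of ending at a hard seam.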

CONCLUSION

This seminar was more than a technical exploration—it was a rethinking of how designers interact with their tools. By combining generative AI with material sensibilities, we moved beyond static image creation into an interactive, interpretive design space. But this is just the beginning. While our models show promise, challenges remain: improving prompt responsiveness, expanding dataset diversity, and refining the UI to better reflect the designer’s intent in real time.

Looking ahead, we’re excited about giving users even more agency—imagine dynamic brush-based blending, real-time material previews, or even embedding this tool within traditional 3D software. What we’ve built isn’t just a generative app; it’s a prototype for a new kind of design dialogue. One where AI doesn’t just generate images—but co-authors visions.

So, the question isn’t just “What would you change first?”—but “What story will you tell next?”