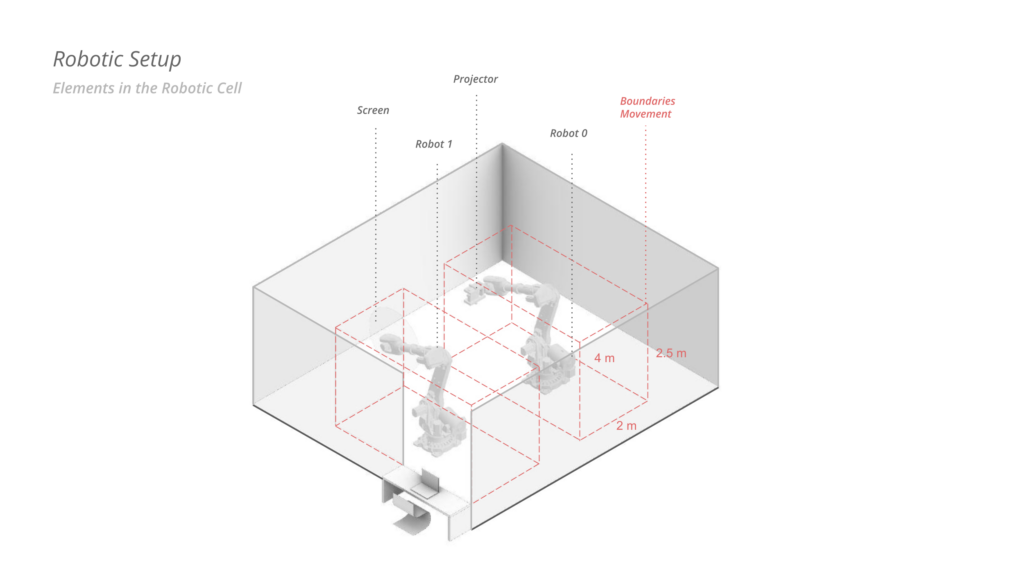

At the workshop led by Madeline Gannon, we had the chance to delve into robotic movement, prioritizing creative freedom over conventional targets like accuracy and repeatability. The workshop was designed as a breeding ground for creativity, facilitated by software components that allowed exploration without the constraints of a kinematic solver. Given the project’s one-week duration and certain safety limitations, we opted to use two giant ABB robots collaboratively. Our goal was to project from one robot onto another, fostering a dynamic interaction between them.

State of the Art

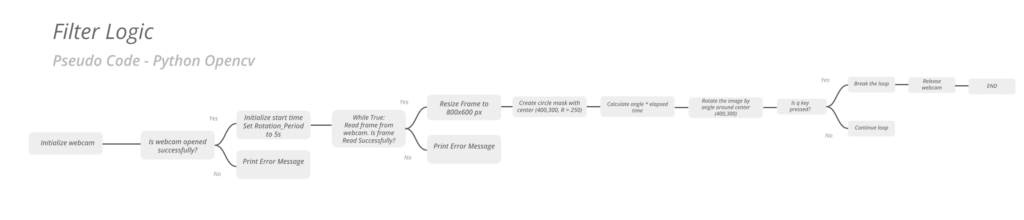

We envisioned a setup where the robots worked in tandem and human interaction was built directly into the project. Specifically, we chose to project images of audience members onto a circular projection surface, creating a lively and interactive experience. This was achieved using Rhino Grasshopper and OpenCV, which ran on separate laptops. The video feed from OpenCV was processed through OBS Studio to map it onto the circular projection surface. Meanwhile, another laptop executed a predefined, randomly generated path in Grasshopper and sent it to Madeline’s laptop, where she managed a kinematic solver that controlled the robots in real time.
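One way to read "predefined, randomly generated" is a path that is random in shape but fixed ahead of time, so it plays back identically on every run. The sketch below illustrates that idea in plain Python rather than Grasshopper. It is not the definition we used in the workshop: the function name `random_path`, the workspace bounds, the step limit, and the millimetre units are all assumptions made for illustration.

```python
import random
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def random_path(
    n_points: int,
    bounds: Sequence[Tuple[float, float]],
    max_step: float,
    seed: int,
) -> List[Point]:
    """Return a reproducible random walk of n_points inside an axis-aligned box.

    bounds   -- one (low, high) pair per axis, e.g. x, y, z in millimetres (assumed units)
    max_step -- largest change allowed per axis between consecutive points
    seed     -- fixing the seed makes the "random" path repeatable
    """
    rng = random.Random(seed)  # private generator, independent of global state

    # Start in the middle of the allowed workspace.
    path: List[Point] = [tuple((lo + hi) / 2 for lo, hi in bounds)]

    for _ in range(n_points - 1):
        prev = path[-1]
        # Nudge each coordinate by a bounded random amount, then clamp to the box.
        nxt = tuple(
            min(hi, max(lo, p + rng.uniform(-max_step, max_step)))
            for p, (lo, hi) in zip(prev, bounds)
        )
        path.append(nxt)

    return path


# Hypothetical workspace: a 1 m x 1 m x 0.5 m box, at most 50 mm of travel per step.
WORKSPACE = [(0.0, 1000.0), (0.0, 1000.0), (500.0, 1000.0)]
waypoints = random_path(n_points=20, bounds=WORKSPACE, max_step=50.0, seed=7)
```

Limiting the per-step change keeps consecutive targets close together, which matters when a large robot has to follow them, and clamping keeps every target inside a known safe volume.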

Model Making

Final Example

The project was an immensely enjoyable experience, and we left wishing for more time to refine our ideas. In future iterations, we would like to use human movement to manipulate the projector and screen more dynamically. We are also interested in varying the distance between the two robots and correcting for it digitally, and in adding visual effects, such as splashing water, that respond to audience movement.