Our project began with an engaging challenge: to digitally map the future home of the Institute for Advanced Architecture of Catalonia (IAAC). We used photogrammetry, a method that reconstructs a 3D model from a series of overlapping photographs, and captured the images with both drones and smartphones. This allowed us to build a detailed virtual representation of the IAAC building.

Scanning:

- New IAAC Campus Location

- Point Cloud Reconstruction (from our own photos)

- Drone Flythrough Reconstruction

Color Detection:

Once the building had been scanned and digitally reconstructed, we turned to analyzing the painted surfaces and extracting data from them. This step was essential for planning a possible repainting of those surfaces: the goal was a workflow covering everything from identifying damaged areas to estimating the total volume of paint required.

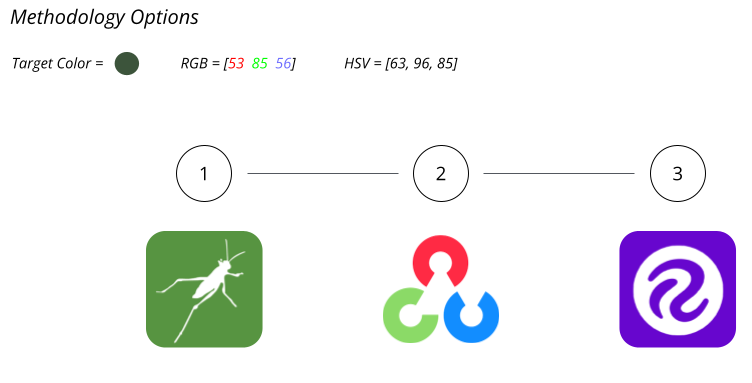

We experimented with the following methodologies:

- Grasshopper

- Computer Vision

- Deep Learning

Grasshopper:

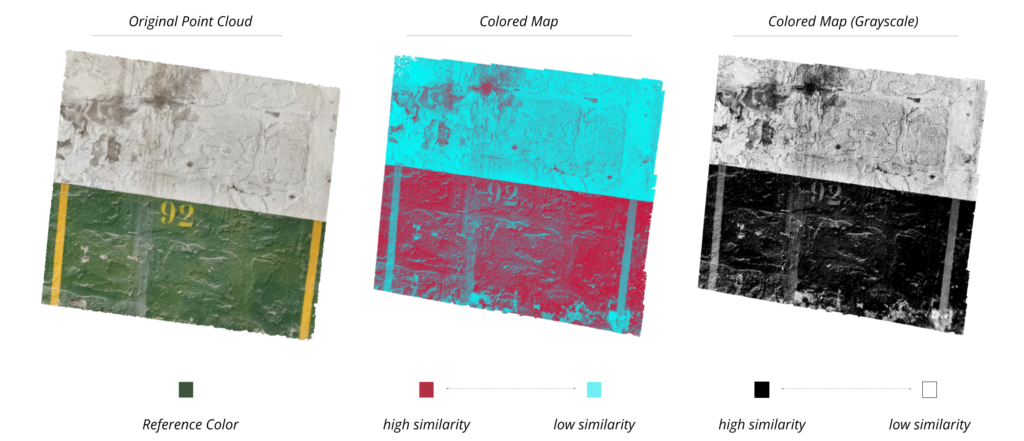

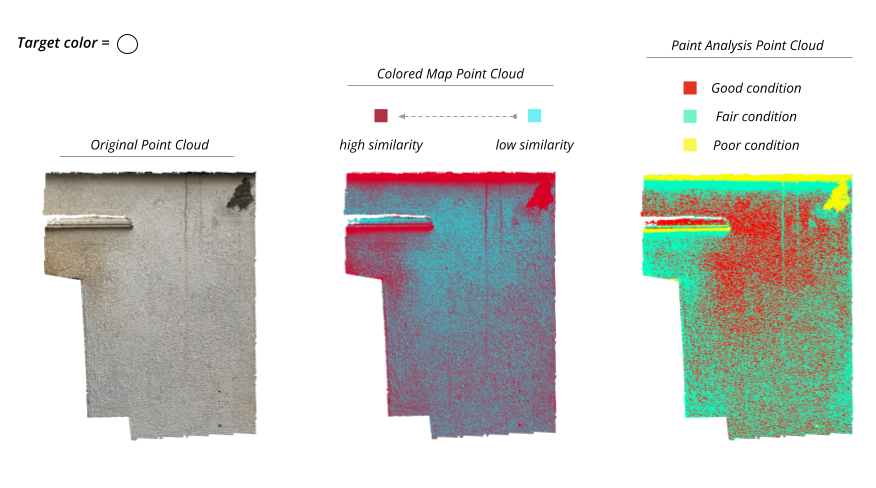

In Grasshopper, we created a color map that shows how close each point's color is to a target color. In this case, the reference color is the one we want to obtain, a dark green, and the images show how other colors and parts of the wall compare to it. We stored the results as vectors, which could later be used to generate toolpaths or to vary pressure, distance, or any other variable needed to paint the wall correctly.
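The Grasshopper definition itself is not reproduced here. As a rough illustration of the color-map idea, the sketch below maps a normalized distance (0 = matches the reference green, 1 = farthest color found on the wall) onto a piecewise-linear color ramp, similar in spirit to Grasshopper's Gradient component. The stop positions and colors are hypothetical choices, not the project's settings.

```python
from bisect import bisect_right

Color = tuple[float, float, float]

# Hypothetical color stops: (position in [0, 1], RGB color in [0, 1]).
# Position 0 = matches the reference green, 1 = farthest color found on the wall.
STOPS: list[tuple[float, Color]] = [
    (0.0, (0.0, 0.4, 0.2)),  # dark green: on target
    (0.5, (1.0, 1.0, 0.0)),  # yellow: halfway
    (1.0, (1.0, 0.0, 0.0)),  # red: farthest from target
]


def gradient(t: float, stops: list[tuple[float, Color]] = STOPS) -> Color:
    """Piecewise-linear color ramp through `stops` (sorted by position); t is clamped to [0, 1]."""
    t = min(max(t, 0.0), 1.0)
    positions = [p for p, _ in stops]
    i = bisect_right(positions, t)
    if i == 0:  # t lies before the first stop
        return stops[0][1]
    if i == len(stops):  # t lies at or after the last stop
        return stops[-1][1]
    (p0, c0), (p1, c1) = stops[i - 1], stops[i]
    u = (t - p0) / (p1 - p0)  # position of t within this segment, 0..1
    return tuple(a + (b - a) * u for a, b in zip(c0, c1))
```

For example, `gradient(0.25)` blends halfway between the green and yellow stops. Adding more stops gives finer visual bands without changing the function.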

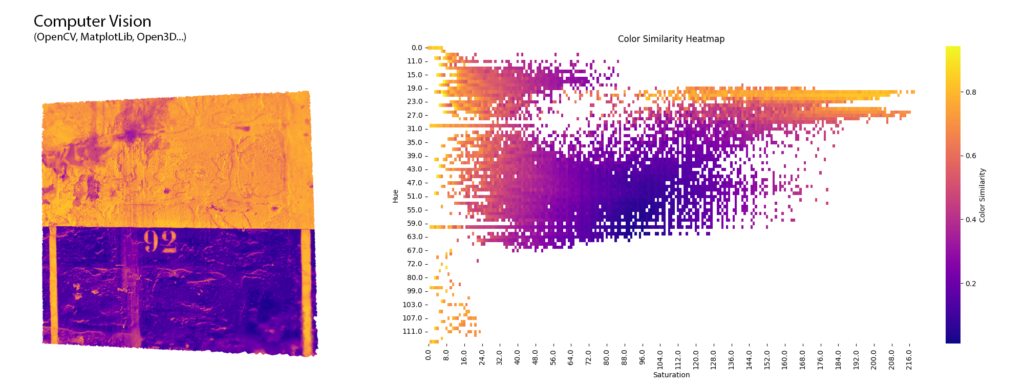

Computer Vision:

This Python workflow, built on libraries such as OpenCV, Open3D, and Matplotlib, reproduces the Grasshopper results on the scanned wall data. For each vertex, it computes the distance in HSV color space between the vertex color and the chosen reference green, then normalizes these distances. The same libraries also produce graphics and visualizations that give further insight into the data and into the process as a whole.
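The project's script is not shown, so the following is a minimal sketch of the distance-and-normalization step. It uses Python's standard `colorsys` module instead of OpenCV so that it runs without extra dependencies. It assumes RGB colors in [0, 1], treats hue as circular (a red at hue 0.0 and one at hue 0.98 are neighbors), and weights H, S, and V equally; the weights, the reference RGB value, and the function names are hypothetical.

```python
import colorsys
import math

Color = tuple[float, float, float]


def hsv_distance(rgb_a: Color, rgb_b: Color, weights: Color = (1.0, 1.0, 1.0)) -> float:
    """Weighted Euclidean distance between two RGB colors after converting them to HSV.
    Hue is circular, so the shorter way round the hue circle is used."""
    h1, s1, v1 = colorsys.rgb_to_hsv(*rgb_a)  # all components in [0, 1]
    h2, s2, v2 = colorsys.rgb_to_hsv(*rgb_b)
    dh = abs(h1 - h2)
    dh = 2.0 * min(dh, 1.0 - dh)  # circular hue difference, rescaled to [0, 1]
    ds, dv = abs(s1 - s2), abs(v1 - v2)
    wh, ws, wv = weights
    return math.sqrt(wh * dh ** 2 + ws * ds ** 2 + wv * dv ** 2)


def normalized_distances(colors: list[Color], reference: Color) -> list[float]:
    """Distance of every color to the reference, min-max normalized to [0, 1]."""
    d = [hsv_distance(c, reference) for c in colors]
    lo, hi = min(d), max(d)
    if hi == lo:  # all colors equally far: avoid dividing by zero
        return [0.0] * len(d)
    return [(x - lo) / (hi - lo) for x in d]


if __name__ == "__main__":
    reference_green = (0.0, 0.4, 0.2)  # hypothetical RGB value for the target dark green
    wall = [(0.0, 0.4, 0.2), (0.05, 0.45, 0.25), (0.8, 0.8, 0.75)]
    print(normalized_distances(wall, reference_green))
```

With Open3D, the per-point colors of a loaded point cloud are available as `numpy.asarray(pcd.colors)`, an N×3 array of floats in [0, 1], so each row can be passed to `hsv_distance`. If the conversion is done with OpenCV's `cv2.cvtColor` on 8-bit images instead, hue is stored in the range 0 to 179, so the wrap-around constant would need to change accordingly.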

Deep Learning (YOLO V8.0):

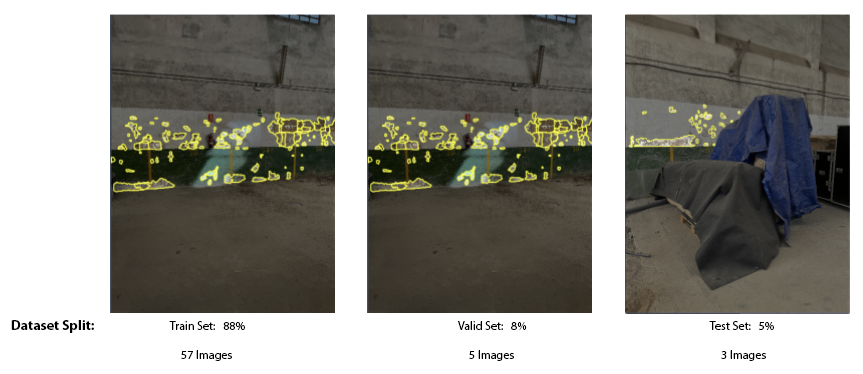

We used deep learning, specifically YOLO V8.0, to analyze a series of photos of the building and detect damaged areas such as holes in the walls. To train the model, we labeled 65 images by drawing bounding boxes around the holes, then split them into 57 images for training, 5 for validation, and 3 for testing.
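A reproducible version of the 57/5/3 split can be sketched as follows. The file names, the seed, and the directory layout in the YAML text are hypothetical; the YAML keys (`path`, `train`, `val`, `test`, `names`) follow, to our knowledge, the dataset format read by the Ultralytics YOLOv8 tooling.

```python
import random


def split_dataset(items, n_train, n_val, n_test, seed=0):
    """Shuffle a copy of `items` with a fixed seed and cut it into train/val/test lists."""
    if n_train + n_val + n_test != len(items):
        raise ValueError("split sizes must add up to the number of items")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed -> same split on every run
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


def dataset_yaml(root: str) -> str:
    """Dataset description in the Ultralytics YAML layout (single class: hole)."""
    return (
        f"path: {root}\n"
        "train: images/train\n"
        "val: images/val\n"
        "test: images/test\n"
        "names:\n"
        "  0: hole\n"
    )


if __name__ == "__main__":
    images = [f"wall_{i:03d}.jpg" for i in range(65)]  # hypothetical file names
    train, val, test = split_dataset(images, 57, 5, 3)
    print(len(train), len(val), len(test))  # 57 5 3
    print(dataset_yaml("datasets/iaac_holes"))  # hypothetical dataset root
```

Fixing the seed matters with so few images: with only 3 test images, a different random split can noticeably change the reported accuracy.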

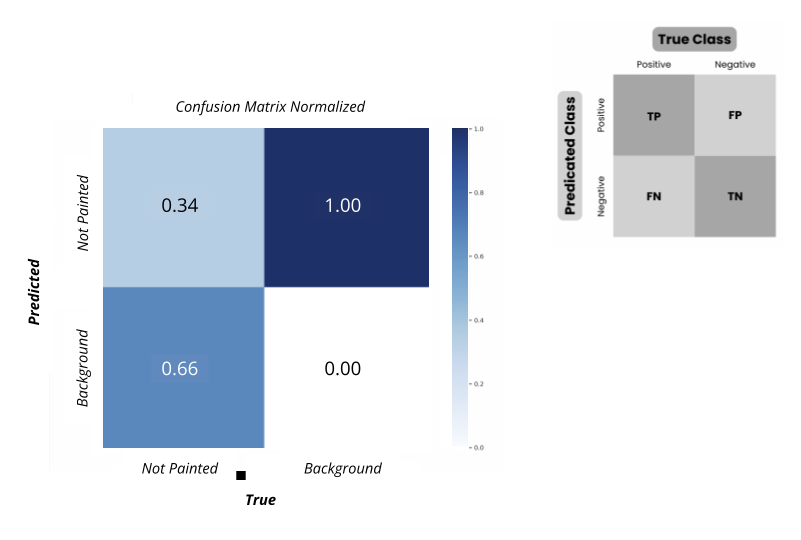

However, the results show a considerable number of false positives and false negatives, so detection accuracy is still suboptimal.
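To put numbers on false positives and false negatives, predicted boxes are usually matched to labeled boxes by intersection over union (IoU). The sketch below uses a simple greedy one-to-one matching at a hypothetical IoU threshold of 0.5, then computes precision (the share of detections that are real holes) and recall (the share of real holes that are found). It is a simplified illustration, not the exact matching procedure used by YOLOv8's validator.

```python
Box = tuple[float, float, float, float]  # (x1, y1, x2, y2), corner coordinates in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def count_matches(preds: list[Box], truths: list[Box], threshold: float = 0.5):
    """Greedy matching: each prediction (ideally sorted by confidence, highest first)
    claims the unmatched ground-truth box it overlaps most, if IoU >= threshold."""
    unmatched = list(truths)
    tp = 0
    for p in preds:
        best_idx, best_iou = -1, threshold
        for i, t in enumerate(unmatched):
            score = iou(p, t)
            if score >= best_iou:
                best_idx, best_iou = i, score
        if best_idx >= 0:
            unmatched.pop(best_idx)
            tp += 1
    fp = len(preds) - tp  # detections with no matching hole
    fn = len(unmatched)  # holes that no detection found
    return tp, fp, fn


def precision_recall(tp: int, fp: int, fn: int) -> tuple[float, float]:
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall
```

Ultralytics already reports precision and recall (along with mAP) when validating a model, so a sketch like this is mainly useful for inspecting individual photos, for example to see which test images contribute the false positives.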

To enhance the reliability of our detection system, we propose several strategies:

- Increase Dataset Size: Collecting more images of damaged areas would help the model generalize and detect holes reliably in a wider range of scenarios.

- Data Augmentation: Introducing data augmentation techniques, such as rotation, scaling, and flipping, can effectively increase the diversity of the dataset and improve the model’s robustness to variations in image appearance.

- Changing the Labeling Technique: Drawing bounding boxes that include some of the surrounding wall, rather than tightly enclosing only the hole, would give the model additional context and could improve detection accuracy.

- Optimize Training Parameters: Fine-tuning parameters such as the number of epochs during training can help optimize the model’s performance. Careful calibration of these parameters based on validation results can enhance the model’s ability to identify holes accurately.

- Explore Different Deep Learning Architectures: YOLO V8.0 is a powerful tool, but other architectures, for example models designed for color-based detection, may identify damaged areas more accurately and reliably.
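As an illustration of the data augmentation strategy, the sketch below transforms YOLO-format labels (`class x_center y_center width height`, all normalized to [0, 1]) for a horizontal flip, a vertical flip, and a 90° clockwise rotation, so that each transformed image keeps correct boxes. Flipping or rotating the image itself, scaling, and arbitrary-angle rotation are left out; the class and function names are hypothetical. The Ultralytics trainer can also apply flips, rotation, and scaling on the fly through training hyperparameters such as `fliplr`, `degrees`, and `scale`, which may be simpler than augmenting the dataset offline.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class YoloBox:
    label: int
    x: float  # box center, as a fraction of image width
    y: float  # box center, as a fraction of image height
    w: float  # box width, as a fraction of image width
    h: float  # box height, as a fraction of image height

    @classmethod
    def parse(cls, line: str) -> "YoloBox":
        """Parse one line of a YOLO label file."""
        label, x, y, w, h = line.split()
        return cls(int(label), float(x), float(y), float(w), float(h))

    def to_line(self) -> str:
        return f"{self.label} {self.x:.6f} {self.y:.6f} {self.w:.6f} {self.h:.6f}"


def flip_horizontal(b: YoloBox) -> YoloBox:
    """Mirror left-right: only the x center changes."""
    return YoloBox(b.label, 1.0 - b.x, b.y, b.w, b.h)


def flip_vertical(b: YoloBox) -> YoloBox:
    """Mirror top-bottom: only the y center changes."""
    return YoloBox(b.label, b.x, 1.0 - b.y, b.w, b.h)


def rotate_90_cw(b: YoloBox) -> YoloBox:
    """Rotate the image 90 degrees clockwise: width and height swap,
    and a point at (x, y) moves to (1 - y, x)."""
    return YoloBox(b.label, 1.0 - b.y, b.x, b.h, b.w)
```

Applied to each of the 57 training images, the three transforms alone would quadruple the number of labeled examples, although the new images are strongly correlated with the originals.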

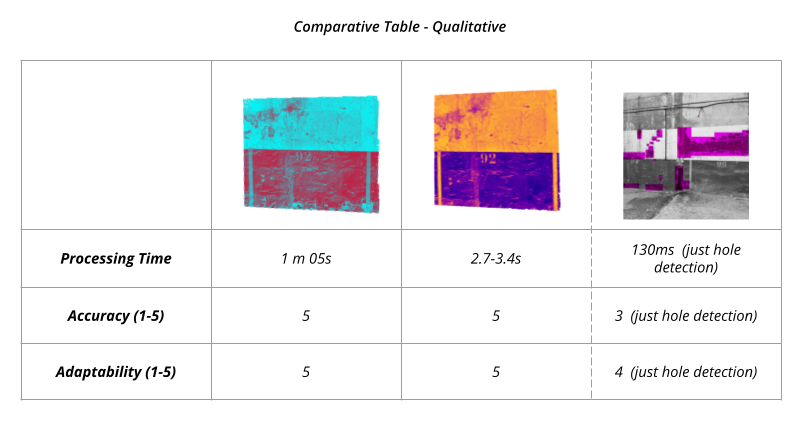

Methodology Comparison:

Methodology Comparison Conclusions:

- Computer vision in Python was the most efficient of the three approaches.

- Deep learning may not be necessary for this application; however, better results could be achieved with more time or by exploring alternative approaches.

- We chose Grasshopper as an easy way to visually analyze the point cloud, generate a paint analysis, and potentially generate a toolpath for the robot.

- With more time, the same could be done in Python, with the robot's motion then planned in ROS using MoveIt.

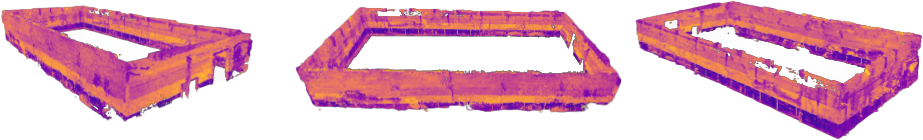

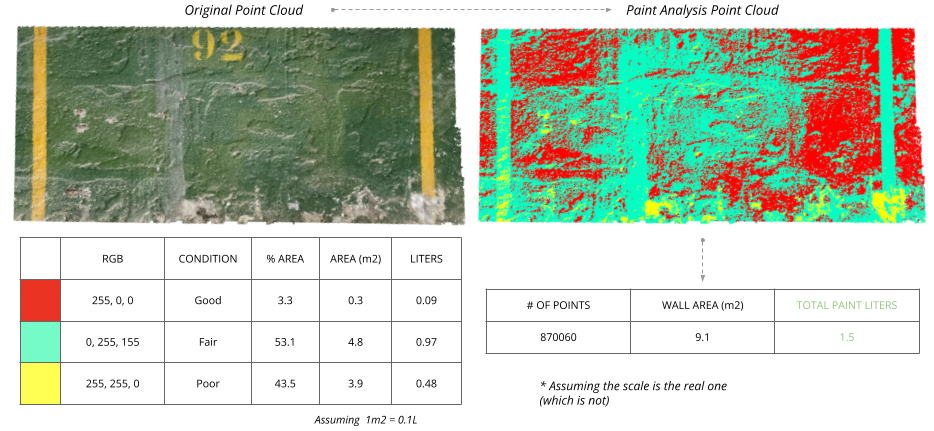

Paint Analysis:

To estimate the amount of paint needed to repaint the damaged areas identified through color detection, we grouped the points into three categories by condition: poor (yellow), fair (light turquoise), and good (red). Each category requires a different number of paint passes: three for poor, two for fair, and one for good. For each group, we multiplied its area by its number of passes to obtain the effective area to be painted. Assuming that 1 square meter requires 0.1 liters of paint, we converted each effective area into liters and summed the results to obtain the total. This is a rough estimate, and actual paint requirements may vary with factors such as paint brand and application method. Nevertheless, it provides a useful basis for estimating the cost of the intervention.
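The calculation above amounts to V = r × Σ (n_c × A_c), where A_c is the area of category c, n_c its number of passes, and r = 0.1 L/m². A minimal Python sketch follows. The pass counts and the 0.1 L/m² rate come from the text; the distance thresholds used to assign points to categories, and the assumption that every point covers an equal share of the wall, are hypothetical.

```python
from collections import Counter

LITERS_PER_M2 = 0.1  # rate assumed in the text, per pass
PASSES = {"poor": 3, "fair": 2, "good": 1}  # passes per condition, from the text


def classify(distance: float, fair_from: float = 1 / 3, poor_from: float = 2 / 3) -> str:
    """Map a normalized color distance (0 = target color) to a condition.
    The thresholds are hypothetical."""
    if distance >= poor_from:
        return "poor"
    if distance >= fair_from:
        return "fair"
    return "good"


def areas_by_category(distances: list[float], wall_area_m2: float) -> dict[str, float]:
    """Split the wall area across categories, assuming each point covers an equal share."""
    counts = Counter(classify(d) for d in distances)
    per_point = wall_area_m2 / len(distances)
    return {cat: counts[cat] * per_point for cat in PASSES}


def paint_liters(areas_m2: dict[str, float], liters_per_m2: float = LITERS_PER_M2) -> dict[str, float]:
    """Liters per category: area x number of passes x coverage rate."""
    return {cat: area * PASSES[cat] * liters_per_m2 for cat, area in areas_m2.items()}


if __name__ == "__main__":
    areas = {"poor": 10.0, "fair": 20.0, "good": 30.0}  # illustrative areas, not project data
    liters = paint_liters(areas)
    print(liters, round(sum(liters.values()), 2))  # the total is 10.0 L
```

Because the coverage rate enters as a single factor, the totals can be rescaled directly once the manufacturer's coverage figure for the chosen paint is known.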

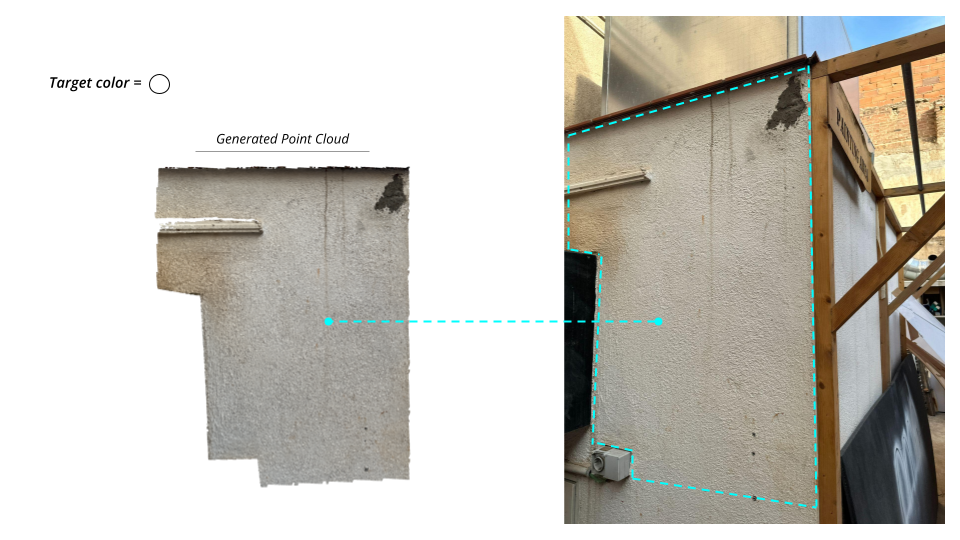

The following example applies the same methodology to another wall, illustrating that it is not tied to a single surface.

Potential Robotic Exploration:

This exploration also opened up the possibility of using autonomous systems for the application process, specifically for repainting deteriorating surfaces with minimal human intervention. By integrating robotic technology, we can envision robots programmed to identify, prepare, and repaint affected areas with greater efficiency and precision. Such an approach could change how maintenance and restoration projects are carried out, while reducing the risk and labor traditionally associated with these tasks. Through these robotic processes, we aim to streamline operations and achieve a higher standard of refurbishment, with potential savings in both time and cost.