Real-time autonomous object detection

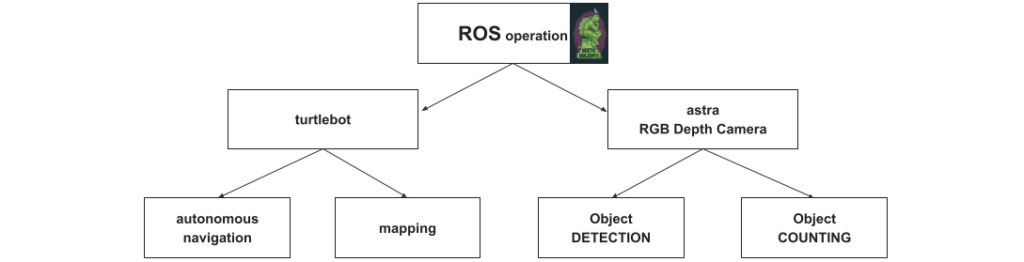

The “RTAOD” project from workshop 2.1 was developed to let a robot navigate and localize itself autonomously in a room while creating a map of the scanned area. At the same time, it collects data about the objects it detects and counts them.

Required tools

This project was realized with “ROS Noetic”, using the “YOLO” package for object detection and the “frontier exploration” package for autonomous movement.

To make navigation and data collection possible, we used a “Turtlebot” as the mobile platform, a “2D Lidar scanner” for environment sensing, which is used to localize the robot and compute a 2D map, and the “Astra depth camera” for vision. From the camera we used only the 2D RGB image to detect objects.

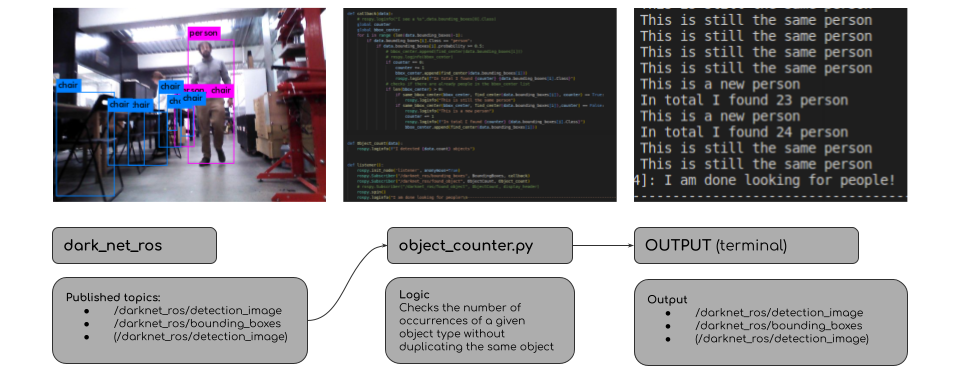

Darknet_ROS

Darknet_ROS is a ROS package that comes with a pre-trained YOLO model, which allows us to detect objects in a 2D RGB image.

We wrote a subscriber node to parse the information published by Darknet_ROS. This gives us the detected object class, the detection probability, and the coordinates of the detected object’s bounding box.

This data is then formatted and printed in a terminal window. The output tells the user how often a specific object class has occurred and whether a detection belongs to a new or an already detected object class.
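
The counting and formatting step can be sketched as follows. This is a minimal sketch, not our actual node: the `DetectionLog` class, the `Box` stand-in, the node name and the 0.5 probability threshold are hypothetical, and the topic name `/darknet_ros/bounding_boxes` is assumed to be the package default. Note that it counts every detection in every frame, so the same physical object is counted again each time it reappears.

```python
from collections import Counter, namedtuple

# Stand-in for one darknet_ros_msgs/BoundingBox, so the counting logic
# can be tried without a running ROS system (an assumption of this sketch).
Box = namedtuple("Box", ["Class", "probability", "xmin", "ymin", "xmax", "ymax"])


class DetectionLog:
    """Counts detections per class and formats one report line per box."""

    def __init__(self, min_probability=0.5):
        # Hypothetical threshold: detections below it are ignored.
        self.min_probability = min_probability
        self.counts = Counter()

    def update(self, boxes):
        """Process the boxes of one message and return the report lines."""
        lines = []
        for box in boxes:
            if box.probability < self.min_probability:
                continue
            # A class is "new" the first time it passes the threshold.
            status = "already detected" if self.counts[box.Class] else "new"
            self.counts[box.Class] += 1
            lines.append(
                "{} ({}): p={:.2f}, box=({}, {})-({}, {}), seen {}x".format(
                    box.Class, status, box.probability,
                    box.xmin, box.ymin, box.xmax, box.ymax,
                    self.counts[box.Class],
                )
            )
        return lines


def run_node():
    """Wire the log to ROS. Assumes the default darknet_ros topic name."""
    import rospy
    from darknet_ros_msgs.msg import BoundingBoxes

    log = DetectionLog()

    def callback(msg):
        # Print one line per accepted detection in this message.
        for line in log.update(msg.bounding_boxes):
            rospy.loginfo(line)

    rospy.init_node("detection_counter")
    rospy.Subscriber("/darknet_ros/bounding_boxes", BoundingBoxes, callback)
    rospy.spin()
```

On the robot, calling `run_node()` would start the subscriber. The counting logic itself can be tried offline by passing a list of `Box` tuples to `DetectionLog.update`.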

The following diagram explains the “Darknet workflow”.

Frontier exploration

Frontier exploration is the package that allows us to navigate the robot autonomously. “SLAM” stands for “simultaneous localization and mapping”, which means that the robot is localized live while it creates a map of a new, unknown space. This makes it possible to navigate the robot through an unknown space whose map is still being built. This is where “frontier exploration” comes into play: it detects where the scan has not yet collected data and plans a path to reach that area. In other words, it looks for open edges of the map and tries to close the scan.

The following demo shows its behavior.

The following shows the real-world application.
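
The idea of a frontier can be illustrated with a small grid example. This is a simplified sketch and not the algorithm used inside the package: it assumes the cell values of a ROS occupancy grid (-1 unknown, 0 free, 100 occupied), treats every free cell next to an unknown cell as a frontier, and picks the nearest one by straight-line distance, ignoring obstacles. The function names are hypothetical.

```python
UNKNOWN, FREE, OCCUPIED = -1, 0, 100


def find_frontiers(grid):
    """Return all free cells that touch at least one unknown cell."""
    rows, cols = len(grid), len(grid[0])
    frontiers = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != FREE:
                continue
            # 4-connected neighbourhood of the current cell.
            neighbours = ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1))
            if any(
                0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == UNKNOWN
                for nr, nc in neighbours
            ):
                frontiers.append((r, c))
    return frontiers


def nearest_frontier(grid, robot_cell):
    """Pick the frontier cell closest to the robot, or None if the map is closed."""
    frontiers = find_frontiers(grid)
    if not frontiers:
        return None
    return min(
        frontiers,
        key=lambda cell: (cell[0] - robot_cell[0]) ** 2 + (cell[1] - robot_cell[1]) ** 2,
    )


grid = [
    [FREE, FREE, UNKNOWN],
    [FREE, OCCUPIED, UNKNOWN],
    [FREE, FREE, FREE],
]
goal = nearest_frontier(grid, robot_cell=(2, 0))
```

A real planner would then send the chosen cell as a navigation goal and repeat the search as the map grows. Once no frontier is left, the scan is closed.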

Future steps

A future step for “RTAOD” would be to use the 3D data collected by the “Astra depth camera” to localize the detected objects in space. The autonomously navigating robot would then create a map of the space that includes the location and count of its objects. Another improvement would be to implement a “Kalman filter” for object tracking. With tracking, we could avoid counting moving objects twice, and we could even visualize the motion path of a specific object. This could open up many opportunities for companies.
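
To give an idea of the tracking step, here is a minimal sketch of a constant-velocity Kalman filter for one coordinate of an object, for example the x position of a bounding-box centre. It is not implemented in the project. The class name, the noise values and the gate size are hypothetical, and the process noise is simplified to act on the velocity only. A full tracker would run one such filter per axis and per object.

```python
class ConstantVelocityKalman1D:
    """Tracks position and velocity of one object along a single axis."""

    def __init__(self, position, measurement_var=0.25, process_var=0.01):
        self.p = position          # estimated position
        self.v = 0.0               # estimated velocity, unknown at start
        # Covariance: position known about as well as one measurement,
        # velocity very uncertain.
        self.P = [[measurement_var, 0.0], [0.0, 100.0]]
        self.r = measurement_var   # measurement noise variance
        self.q = process_var       # process noise variance on the velocity

    def predict(self, dt=1.0):
        """Move the state forward by dt and return the predicted position."""
        (p00, p01), (p10, p11) = self.P
        self.p += self.v * dt
        # P <- F P F^T + Q with F = [[1, dt], [0, 1]] and Q = diag(0, q)
        self.P = [
            [p00 + dt * (p10 + p01) + dt * dt * p11, p01 + dt * p11],
            [p10 + dt * p11, p11 + self.q],
        ]
        return self.p

    def update(self, z):
        """Correct the state with a measured position z."""
        (p00, p01), (p10, p11) = self.P
        innovation = z - self.p
        s = p00 + self.r               # innovation variance
        k0, k1 = p00 / s, p10 / s      # Kalman gain for position and velocity
        self.p += k0 * innovation
        self.v += k1 * innovation
        # P <- (I - K H) P with H = [1, 0]
        self.P = [
            [(1 - k0) * p00, (1 - k0) * p01],
            [p10 - k1 * p00, p11 - k1 * p01],
        ]

    def matches(self, z, gate=1.0):
        """True if measurement z is close enough to belong to this track."""
        return abs(z - self.p) <= gate


# An object moving one unit per time step is followed as a single track.
track = ConstantVelocityKalman1D(position=0.0)
for t in range(1, 20):
    track.predict(dt=1.0)
    if track.matches(float(t), gate=1.5):
        track.update(float(t))
```

A detection that matches the predicted position of an existing track is treated as the same object and not counted again. The sequence of estimated positions gives the motion path.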