Alternative ways of exploring the sounds that make up Gràcia

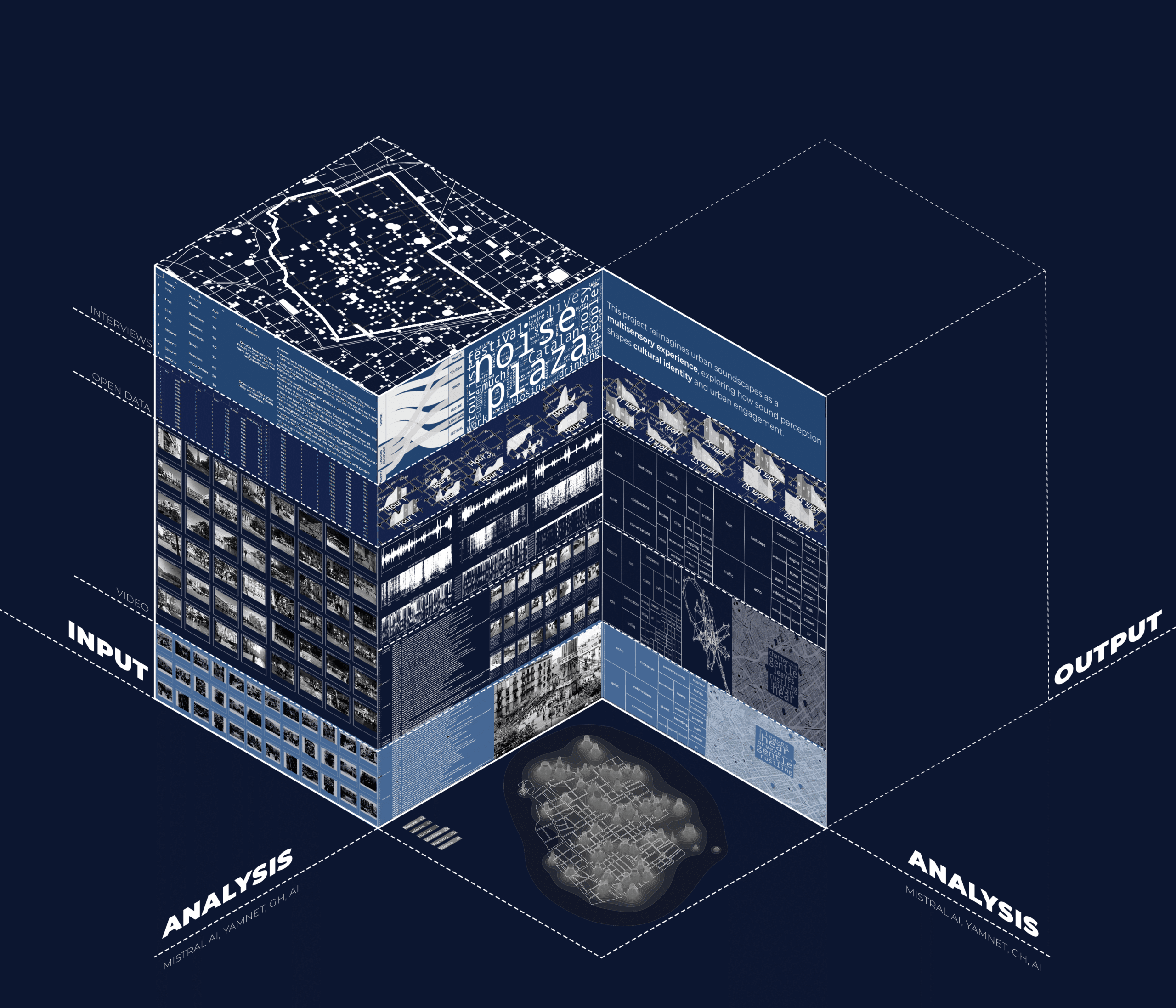

This project investigates alternative ways of engaging with the sounds of Gràcia and how they can empower communities by fostering a deeper connection to place, memory, and identity. Sound functions as both a sensory experience and a cultural artifact, carrying layers of emotion, history, and belonging.

Through a multimedia approach incorporating sound recordings, images, video, and text, we analyze how sonic environments shape and reflect cultural identities. This project aims to preserve, reinterpret, and engage with the auditory essence of Gràcia, offering new ways to listen to and interact with the spaces we inhabit.

Inclusive Design Approach

By exploring the unique soundscape of Gràcia, the project acknowledges that sound is not experienced in the same way by everyone. This recognition raises an important question: How can we design auditory experiences that are inclusive to individuals with varying hearing abilities?

Since sound can be a barrier or a bridge, the goal is to create shared experiences that empower both the hearing and the Deaf/hard-of-hearing communities. This means exploring alternative ways of engaging with sound, such as:

- Visualizing sound

- Incorporating multisensory experiences

- Fostering dialogue and exchange

By bridging the gap in experiences, the project moves beyond simply documenting sound to rethinking how it can be accessed, interpreted, and shared in a way that is equitable and inclusive. This ties back to the broader theme of sound as a force for connection, empowerment, and community-building.

Research Question(s)

How do human and non-human activities shape the urban soundscape of Gràcia? What if these sounds could be deconstructed and reinterpreted through other senses to empower individuals in their urban experience?

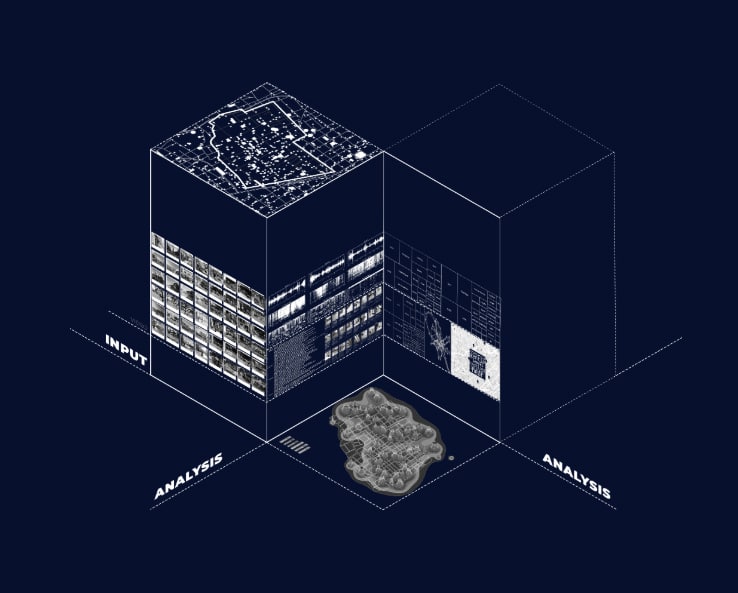

Data Collection and Analysis Methodology

Our methodology explores sound as an intangible yet powerful phenomenon, using non-traditional approaches to capture its experiential and subjective nature. By collecting diverse data—identifying sounds, their sources, and their audiences—we leverage AI to analyze the urban soundscape, uncovering insights that inform our proposal and its impact on the community.

Interviews: First-Hand Data Collection

Objective: To understand how residents experience their neighborhood and its soundscape.

Steps Taken:

- Asked general questions about the neighborhood – To identify any recurring concerns or notable aspects of their environment.

- Focused on urban sounds in follow-up questions – To explore how people perceive and interact with sound in their daily lives.

- Analyzed responses using word clouds and Sankey diagrams – To visually synthesize common themes.

This approach helped us map community perspectives on sound and identify key themes for further exploration.
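A word cloud is essentially a picture of word frequencies. The sketch below shows one way such counts could be computed from interview answers. The stop-word list and the sample responses are hypothetical placeholders, not the project's data.

```python
import re
from collections import Counter

# Hypothetical stop-word list; a real analysis would use a fuller one.
STOP_WORDS = {"the", "a", "an", "and", "of", "in", "is", "it", "to", "i", "at", "on", "from"}

def word_frequencies(responses):
    """Count how often each non-stop word appears across all responses."""
    counts = Counter()
    for text in responses:
        words = re.findall(r"[a-zà-ÿ']+", text.lower())
        counts.update(w for w in words if w not in STOP_WORDS)
    return counts

# Made-up example responses, not real interview data.
sample_responses = [
    "I love the bells of the church in the morning",
    "The traffic is loud, but the bells are nice",
    "Children playing in the plaza and the bells",
]
frequencies = word_frequencies(sample_responses)
print(frequencies.most_common(3))
```

The resulting counts can be passed to any word cloud or Sankey plotting tool. The counting step is the part that determines which themes stand out.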

Video Analysis: First-Hand Data Collection

Objective Part 1: To identify and categorize urban sounds in Gràcia by capturing real-time audio-visual data.

Steps Taken:

- Recorded videos while walking through Gràcia – To document the urban soundscape.

- Used Python scripts and the YAMNet audio classification model – To process and analyze the recorded audio, converting it into categorized data.

- Identified and classified sounds – To systematically recognize different types of urban sounds and their prevalence.

- Extracted frequent sound patterns – To compare between data sources.

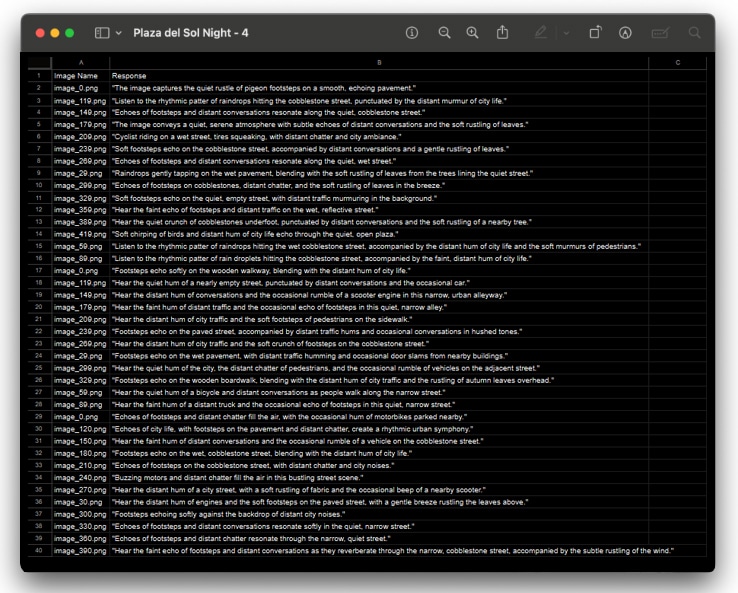
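YAMNet returns, for each short audio frame, a score for every AudioSet class. The sketch below shows how such per-frame scores could be turned into prevalence counts. It does not load the model: `frame_scores` and `class_names` are toy stand-ins for model output, and the `min_score` threshold is an assumed value, not one taken from the project scripts.

```python
from collections import Counter

def dominant_labels(frame_scores, class_names, min_score=0.3):
    """Return the top-scoring class name for each frame above a threshold."""
    labels = []
    for scores in frame_scores:
        best = max(range(len(scores)), key=lambda i: scores[i])
        if scores[best] >= min_score:
            labels.append(class_names[best])
    return labels

# Toy stand-in for model output: three classes, four frames.
class_names = ["Speech", "Vehicle", "Bird"]
frame_scores = [
    [0.8, 0.1, 0.1],
    [0.2, 0.7, 0.1],
    [0.6, 0.3, 0.1],
    [0.2, 0.25, 0.1],  # best score is below the threshold, so the frame is skipped
]
prevalence = Counter(dominant_labels(frame_scores, class_names))
print(prevalence.most_common())
```

Keeping only the dominant class per frame is a simplification. Urban recordings often contain overlapping sounds, so keeping the top few classes per frame may be closer to what is heard on the street.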

Objective Part 2: To explore AI’s capability in interpreting sound through image-based reasoning and compare results across different data techniques.

Steps Taken:

- Extracted images from previously recorded videos – To analyze how AI perceives sound through visual input.

- Applied Mistral AI and LLaMA vision reasoning models – To generate descriptions of the sounds based on the extracted images.

- Compared AI-generated sound categorizations with previous analysis – To identify patterns and differences in how sound is recognized across methods.

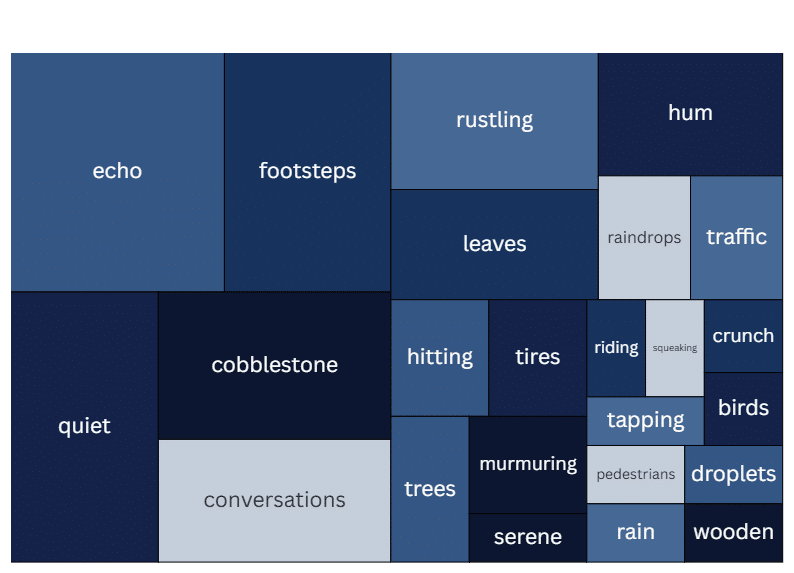

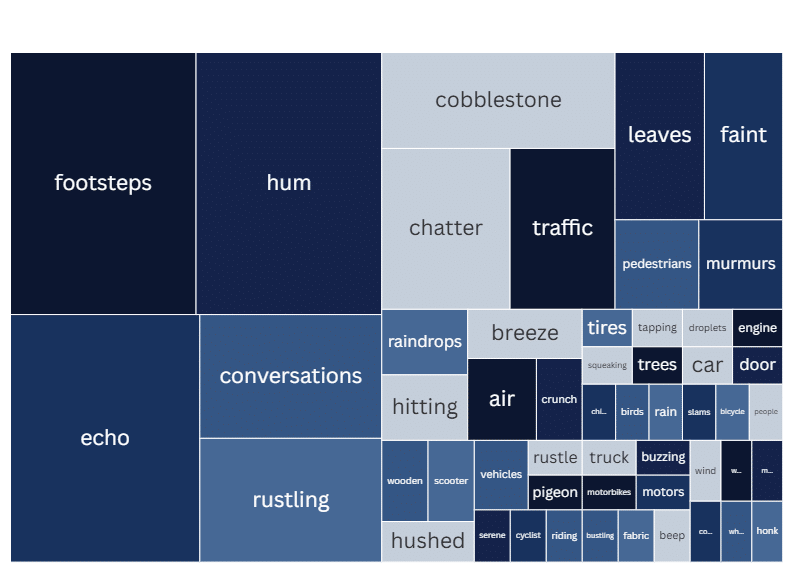

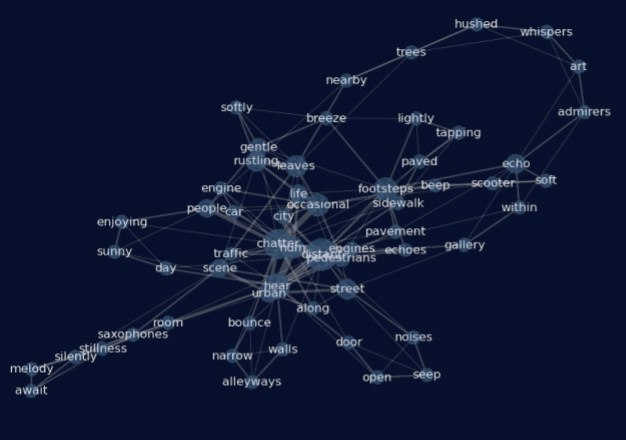

- Created word clouds to find associations – To explore which sounds appear together most frequently and how they relate to our other data points.

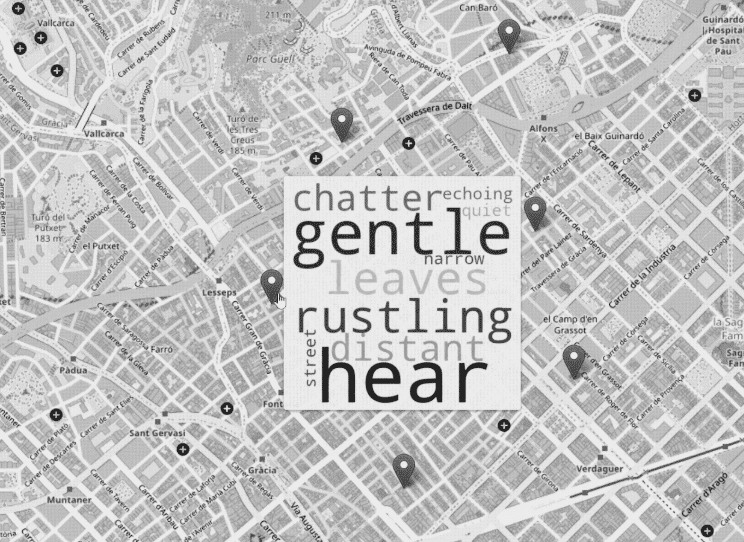

- Developed a sound mapping framework – To serve as a foundation for localizing and analyzing Gràcia’s urban soundscape in the next steps.
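One simple way to compare the sound labels produced by two methods is the Jaccard index, which measures how much two label sets overlap. The sketch below is an illustration under assumptions: the label lists are invented, and the project may have compared results differently.

```python
def jaccard(labels_a, labels_b):
    """Share of labels that two methods have in common (0 = none, 1 = identical)."""
    a, b = set(labels_a), set(labels_b)
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)

# Illustrative labels only; not results from the project.
audio_labels = ["speech", "traffic", "birds", "bells"]
vision_labels = ["speech", "traffic", "footsteps"]

overlap = jaccard(audio_labels, vision_labels)
only_audio = sorted(set(audio_labels) - set(vision_labels))
only_vision = sorted(set(vision_labels) - set(audio_labels))
print(round(overlap, 2), only_audio, only_vision)
```

The two difference lists are often more informative than the score itself: they show which sounds a vision model infers from a scene but a microphone never captured, and the reverse.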

Why Use AI for this?

Comparing AI outputs from the same data source

The sounds obtained from the analysis were, on the one hand, organized into a network showing the correlation between the different sound types. On the other hand, an interactive map was created to visualize the sounds that characterize the different points of Gràcia.
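A network of sound types needs weighted links between them. The sketch below uses plain co-occurrence counts as a stand-in for the correlation mentioned above: two sound types are linked each time they are detected at the same location. The observations are invented for illustration.

```python
from collections import Counter
from itertools import combinations

def cooccurrence_edges(observations):
    """Count how often each pair of sound types appears in the same observation."""
    edges = Counter()
    for sounds in observations:
        for pair in combinations(sorted(set(sounds)), 2):
            edges[pair] += 1
    return edges

# Illustrative observations, one list of sound types per recorded location.
observations = [
    ["speech", "traffic", "birds"],
    ["speech", "traffic"],
    ["birds", "bells"],
]
edges = cooccurrence_edges(observations)
print(edges.most_common(1))
```

Each key is an edge and each count is its weight, so the result can be loaded directly into a graph library or network visualization tool.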

Google Street View: Data Scraping Technique

Objective: To complement the photographic record with images covering a wider range and diversity of days and times.

Steps Taken:

- Extracted images from Google Street View – To analyze how AI perceives sound through visual input. The images were extracted with Python.

- Applied Mistral AI and LLaMA vision reasoning models – To generate descriptions of the sounds based on the extracted images.

- Compared AI-generated sound categorizations with previous analysis – To identify patterns and differences in how sound is recognized across methods.

- Created word clouds to find associations – To explore which sounds appear together most frequently and how they relate to our other data points.

- Developed a sound mapping framework – To serve as a foundation for localizing and analyzing Gràcia’s urban soundscape in the next steps.
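The image extraction step could look like the sketch below, assuming the Google Street View Static API was the source (the text only says that Python was used). It builds request URLs without sending them. The coordinates are an approximate, hypothetical sample point, and `YOUR_API_KEY` is a placeholder.

```python
from urllib.parse import urlencode

BASE_URL = "https://maps.googleapis.com/maps/api/streetview"

def street_view_url(lat, lon, heading, api_key, size="640x640"):
    """Build a Street View Static API request URL for one viewpoint."""
    params = {
        "size": size,
        "location": f"{lat},{lon}",
        "heading": heading,
        "key": api_key,
    }
    return f"{BASE_URL}?{urlencode(params)}"

# Hypothetical sample point; four headings give a rough panorama of the spot.
urls = [street_view_url(41.4036, 2.1565, h, "YOUR_API_KEY") for h in (0, 90, 180, 270)]
for url in urls:
    print(url)
```

Each URL returns one still image when requested with a valid key, which can then be passed to the vision models in the same way as the frames extracted from video.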

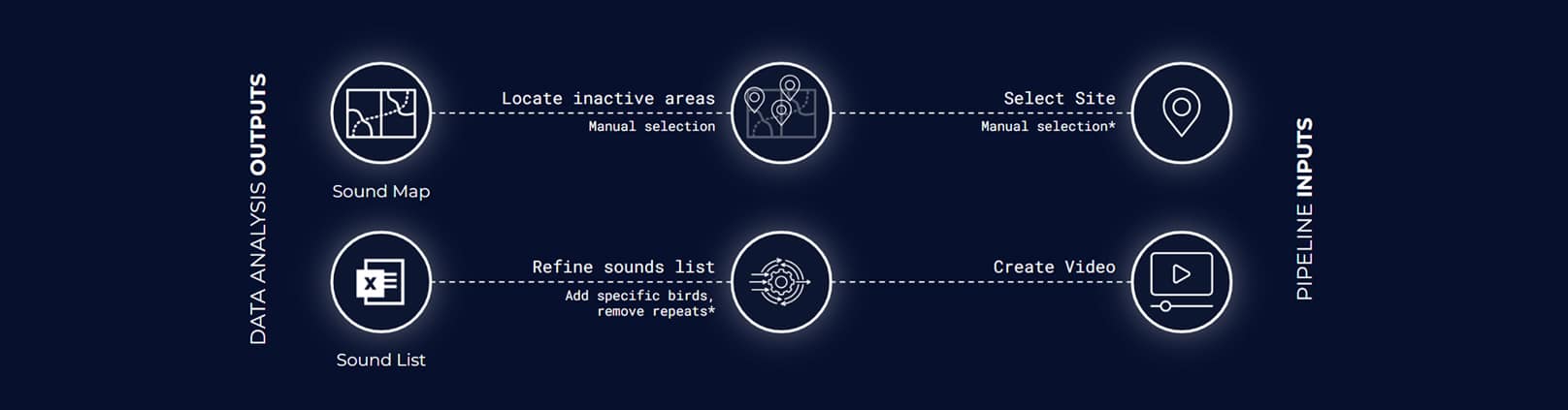

Wrapping Up Data Collection & Analysis

Data collection and analysis enabled us to identify the sites for intervention and to obtain a list of the sounds that characterize the Gràcia neighborhood. These sounds were geolocated and categorized into the following typologies: Nature, Leisure, Human, Warning, Machine, Silence, and History.

Regarding the site, we looked for areas without high activity as locations for an intervention where residents can find and explore sounds over time.

- We selected a quiet dead-end street with minimal activity—an ideal location for an installation that invites reflection and engagement.

- Its proximity to a busier thoroughfare ensures visibility, while the calm setting offers the space for a more intimate, meaningful experience.

From the different categories of sounds, we selected for the next step the ones that best represent Gràcia.

The image below shows the Sounds of Gràcia map, where each sound is placed at its location.

As an input for our participatory process, we had to shorten the list of sounds to those we felt capture the essence of Gràcia.

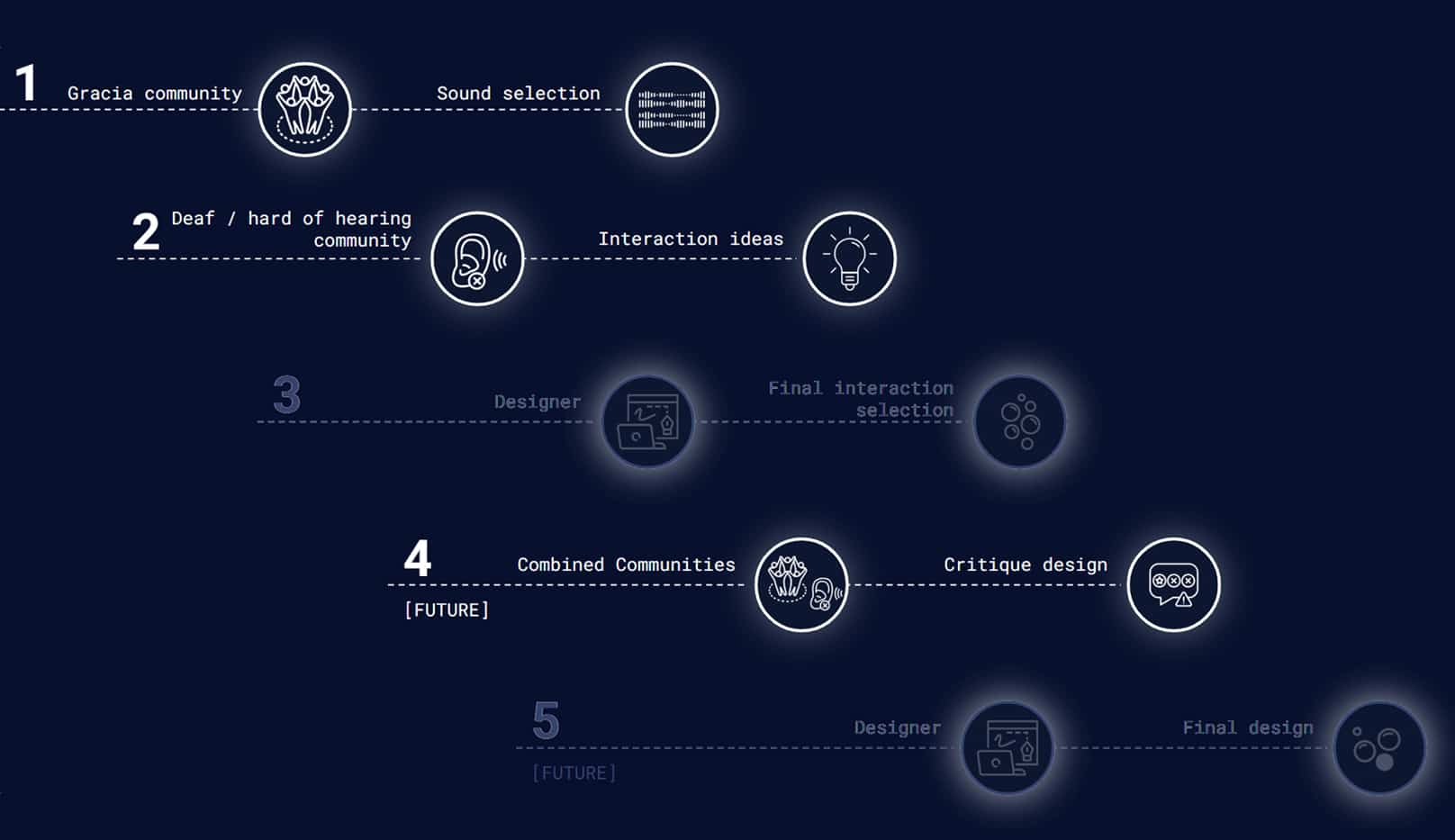

Participatory Design Pipeline

Inclusive Design Approach

As the purpose of this research is to create a collective experience, we need to engage the communities by having them interact with the list of sounds and a site. The goal is to generate a multisensory experience for people of all hearing abilities.

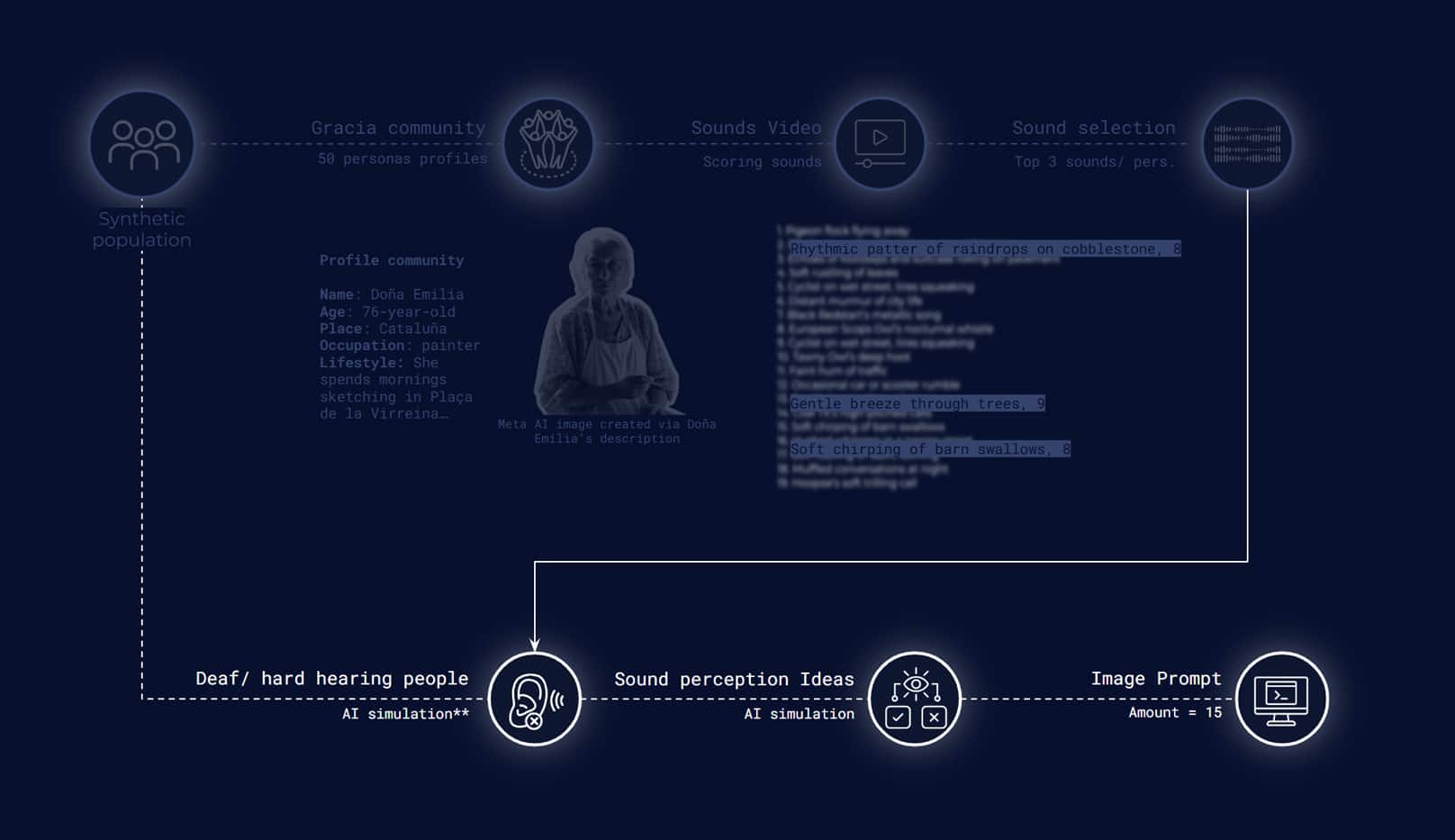

The design process has five stages, three of which are built around citizen participation. The first stage consists of the community of Gràcia neighbors selecting among 19 different types of sounds. In the second stage, based on the results of the first, the Deaf/hard-of-hearing community is brought in to work on the visualization of the sounds. Subsequently, as designers, we choose one type of proposal from the community to develop. Finally, in future stages, we would like to set up an exchange between the communities to gather feedback on the proposal and inform its redesign.

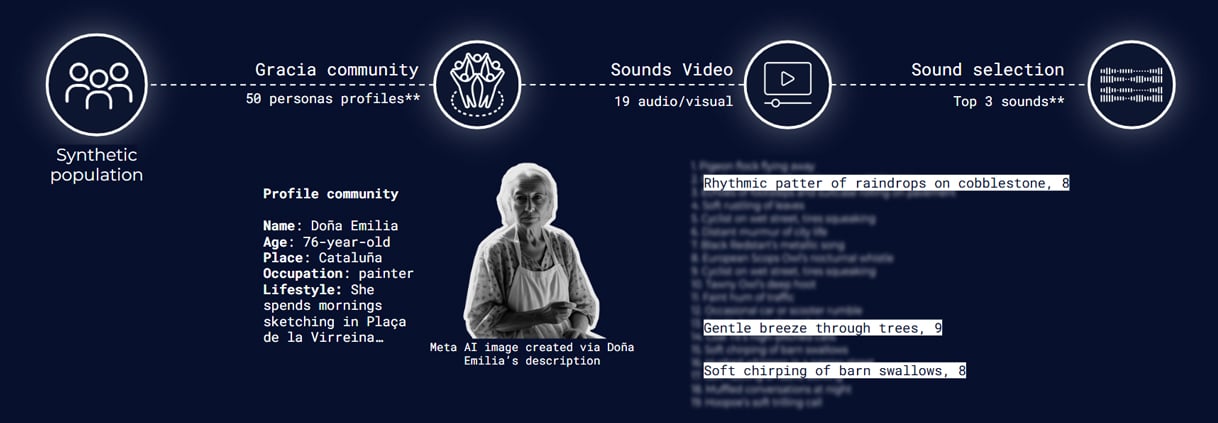

Generation of Synthetic Population

For our participatory process we created a synthetic population, an artificial group based on real people. To reduce bias and generate more accurate results, we used three inputs to guide the AI in getting to know the people of Gràcia:

- Demographic data of Gràcia

- Interviews conducted in Gràcia (data collection)

- Reddit posts (scraped data)

Important

In the output we identified bias in the generated personas that stemmed from the inputs. As the bias was related to the age and occupation of the ‘personas’, we changed the age range to exclude people under 17 years old.
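The age restriction can be pictured as a filter applied before personas are drawn. The sketch below is a simplified stand-in for the AI-driven generation, which is not shown in this write-up. The age brackets and weights are hypothetical, not the real demographics of Gràcia; only the cutoff of 17 and the population size of 50 come from the text.

```python
import random

# Hypothetical age brackets and weights; not the real Gràcia demographics.
AGE_BRACKETS = [((0, 16), 0.13), ((17, 34), 0.25), ((35, 64), 0.42), ((65, 90), 0.20)]
MIN_AGE = 17

def sample_personas(n, seed=0):
    """Draw n persona ages from the brackets at or above MIN_AGE."""
    rng = random.Random(seed)
    eligible = [(bracket, w) for bracket, w in AGE_BRACKETS if bracket[0] >= MIN_AGE]
    brackets = [bracket for bracket, _ in eligible]
    weights = [w for _, w in eligible]
    personas = []
    for i in range(n):
        low, high = rng.choices(brackets, weights=weights)[0]
        personas.append({"id": i, "age": rng.randint(low, high)})
    return personas

population = sample_personas(50)
print(min(p["age"] for p in population), max(p["age"] for p in population))
```

In a fuller pipeline, each sampled record would be expanded into a persona description using the interview and Reddit material as context.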

Participatory and design process

- Each member of the Gràcia ‘population’ was given the list of 19 sounds and asked to rate them according to their preferences.

- From the ratings of all 50 members, we took the top 3 sounds as input for the next stage.

- Next, we engaged the simulated Deaf/hard-of-hearing community members to ask how they would like to experience these top 3 sounds (in written form).

- They gave us a prompt explaining how these sounds can engage other senses, helping us understand how they experience the world.
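The rating step above can be sketched as a small aggregation: average each sound's score across members and keep the three highest. The 1 to 5 scale, the sound names, and the ratings are assumptions for illustration. The text does not state how the ratings were scored or combined.

```python
from collections import defaultdict

def top_sounds(ratings, k=3):
    """Average each sound's ratings across members and return the k best."""
    totals = defaultdict(list)
    for member_ratings in ratings:
        for sound, score in member_ratings.items():
            totals[sound].append(score)
    averages = {sound: sum(s) / len(s) for sound, s in totals.items()}
    # Sort by descending average, breaking ties alphabetically.
    return sorted(averages, key=lambda sound: (-averages[sound], sound))[:k]

# Illustrative ratings from three members on an assumed 1-5 scale.
ratings = [
    {"bells": 5, "traffic": 1, "birds": 4, "festival": 4},
    {"bells": 4, "traffic": 2, "birds": 5, "festival": 3},
    {"bells": 5, "traffic": 1, "birds": 4, "festival": 5},
]
print(top_sounds(ratings))
```

The alphabetical tie-break keeps the result reproducible when two sounds receive the same average.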

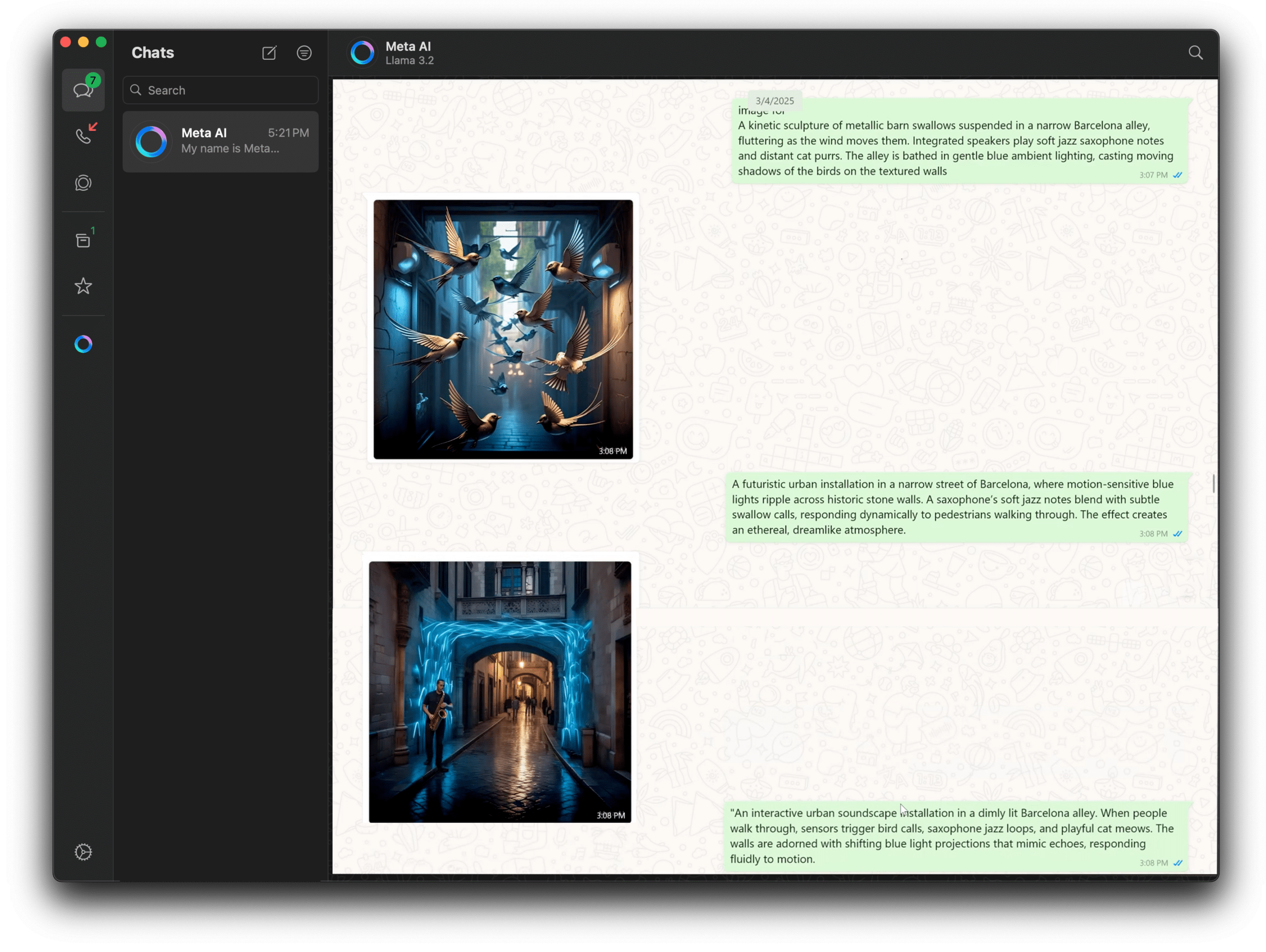

From the participatory output, we initially used a ComfyUI model to generate images based on the prompt. We found this limiting, as the prompts were very poetic and abstract.

Instead, we used Meta AI for more intricate and artistic outcomes.

The prompts from the simulated deaf community suggested many different options for interaction, including lights, wind, fire, and bubbles.

We chose bubbles as the interaction to carry forward in the design process for several reasons. Because the intervention is meant to be exploratory and community-oriented, we consider bubbles safer for children and less disturbing than other components such as lights.

Based on that, we continued the Meta AI conversation with bubbles as the main component and then narrowed down to the form of installation we liked the most. We settled on this image for further development on our site.

Important

This is JUST a sample of our pipeline; we CAN use it for any type of interaction desired by the community, in any location.

Image generation based on the community prompt

Once we had the reference image, we selected the specific location within the site to place the intervention. The image works as inspiration for the third step shown in the design pipeline.

As we were working with a broad spectrum of people with different hearing abilities, it was very important to us that the proposal allowed for interaction and inclusion of as many people as possible. In this sense the design of the proposal allows the perception of sound through different senses, mainly through touch and vision.

Taking the image as a reference, we designed a 3D model in Rhino based on the Rubens tube. For the intervention, six different types of sounds were selected, all obtained from the participatory process, and converted into musical compositions using AI. The device has an interactive board where the user can explore different sounds characteristic of Gràcia. When the user interacts with a sound, information about it is displayed on an LED screen. Furthermore, the volume contains two large speakers that allow users to feel the sound vibrations by resting their hands on the board. Finally, as soon as the music starts to play, bubbles emerge from the volume, reacting to the sound and graphically demonstrating the relationship between the frequency of a sound wave and the bubbles.
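In a classic Rubens tube, the visible pattern follows the standing wave inside the tube: neighboring peaks sit half a wavelength apart, so the spacing is v / (2f) for sound speed v and frequency f. The sketch below computes that spacing. Whether the bubble device reproduces this behavior is an assumption, and the speed of sound in air is used only as a reference value.

```python
SPEED_OF_SOUND_AIR = 343.0  # m/s at roughly 20 °C; propane in a real tube is slower

def peak_spacing(frequency_hz, speed=SPEED_OF_SOUND_AIR):
    """Distance between neighbouring standing-wave peaks: half a wavelength."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return speed / (2 * frequency_hz)

for f in (220, 440, 880):
    print(f"{f} Hz -> {peak_spacing(f):.3f} m between peaks")
```

Doubling the frequency halves the spacing, which is the relationship a visitor would see as higher-pitched sounds produce a denser pattern.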

Image to video AI generation

The video was generated with several AI models that together turn images into a single video. This process first required a 3D model in Rhino, which let us produce different points of view to use as references for the scenes. Next, we used the Adobe Firefly AI model, which generates hyper-realistic images from a text prompt. The images were then edited in Photoshop to add details such as people and to adjust colors. Finally, we used Vidu AI, a model that generates videos from reference images.

This proposal was an important journey for us, as it allowed us to explore alternative and non-hegemonic ways of perceiving and experiencing sound. The design also allowed us to put ourselves in the shoes of others and experience the world through other senses. As next steps, we would like to include debate and feedback from the community on the proposed design, with the objective of generating an intervention that reflects the identity of Gràcia’s residents and is accessible to all.

In addition, if this stage is successful, we would like to replicate this strategy at different points of the neighborhood identified in the analysis stage.