Aim

The main objective is to generate a new point cloud of the new IAAC building, inspired by an artistic or architectural style, using AI and machine learning, and to replace the existing point cloud with the new stylized one.

State of the Art

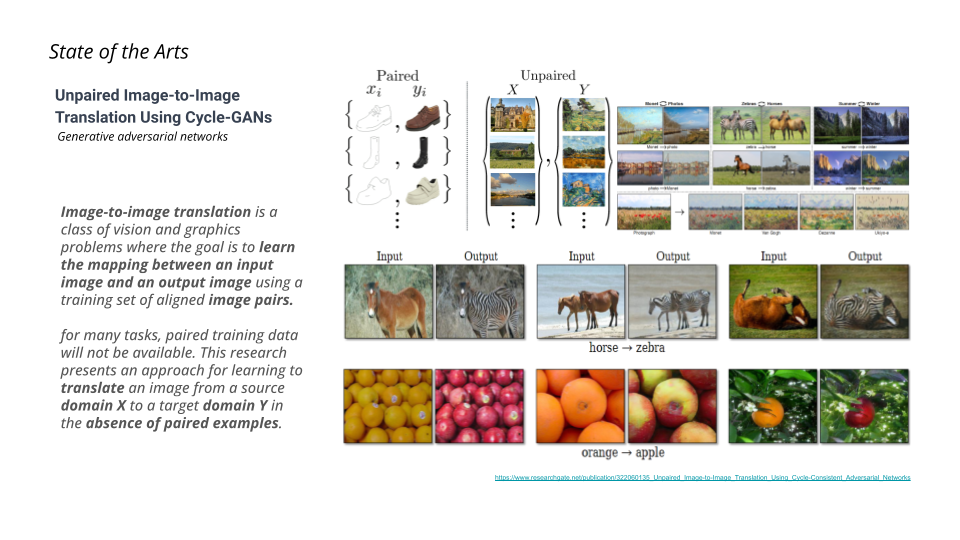

Image-to-image translation is a class of vision and graphics problems where the goal is to learn the mapping between an input image and an output image using a training set of aligned image pairs. For many tasks, however, paired training data is not available. The paper “Unpaired Image-to-Image Translation Using Cycle-Consistent Adversarial Networks” addresses this case with a method that learns to translate an image from a source domain X to a target domain Y in the absence of paired examples, so that the attributes of one set of images can be applied to another.

Zhu, Jun-Yan, Taesung Park, Phillip Isola, and Alexei A. Efros. “Unpaired Image-to-Image Translation Using Cycle-Consistent Adversarial Networks.” Paper presented at the International Conference on Computer Vision (ICCV), Venice, Italy, October 2017. DOI: 10.1109/ICCV.2017.244.
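For reference, the training objective of the cited paper can be written compactly. The symbols G (the mapping from X to Y), F (the reverse mapping from Y to X), the discriminators D_X and D_Y, and the weight λ follow the notation of Zhu et al. and are not defined elsewhere in this post. The cycle-consistency term is what removes the need for paired examples: an image translated to the other domain and back should return to itself.

$$
\mathcal{L}_{\mathrm{GAN}}(G, D_Y, X, Y) = \mathbb{E}_{y \sim p_{\mathrm{data}}(y)}\big[\log D_Y(y)\big] + \mathbb{E}_{x \sim p_{\mathrm{data}}(x)}\big[\log\big(1 - D_Y(G(x))\big)\big]
$$

$$
\mathcal{L}_{\mathrm{cyc}}(G, F) = \mathbb{E}_{x \sim p_{\mathrm{data}}(x)}\big[\lVert F(G(x)) - x \rVert_1\big] + \mathbb{E}_{y \sim p_{\mathrm{data}}(y)}\big[\lVert G(F(y)) - y \rVert_1\big]
$$

$$
\mathcal{L}(G, F, D_X, D_Y) = \mathcal{L}_{\mathrm{GAN}}(G, D_Y, X, Y) + \mathcal{L}_{\mathrm{GAN}}(F, D_X, Y, X) + \lambda\, \mathcal{L}_{\mathrm{cyc}}(G, F)
$$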

In the architectural field, most images are a byproduct of 3D models, produced to humanize a scene and present it to a client. With the rise of Midjourney, generating images and scenes from text prompts has become a useful way to brainstorm ideas in the initial phases of a project and to speed up rendering tasks during the design phase. This work takes a point cloud of a target space and applies an unpaired image-to-image translator, using images from Midjourney, to explore how varied images can contribute to a 3D visualization of that space. The goal is to apply new styles from Midjourney in 2D and then extract different angles of the stylized scene, fostering a better understanding of the proposed architectural space.

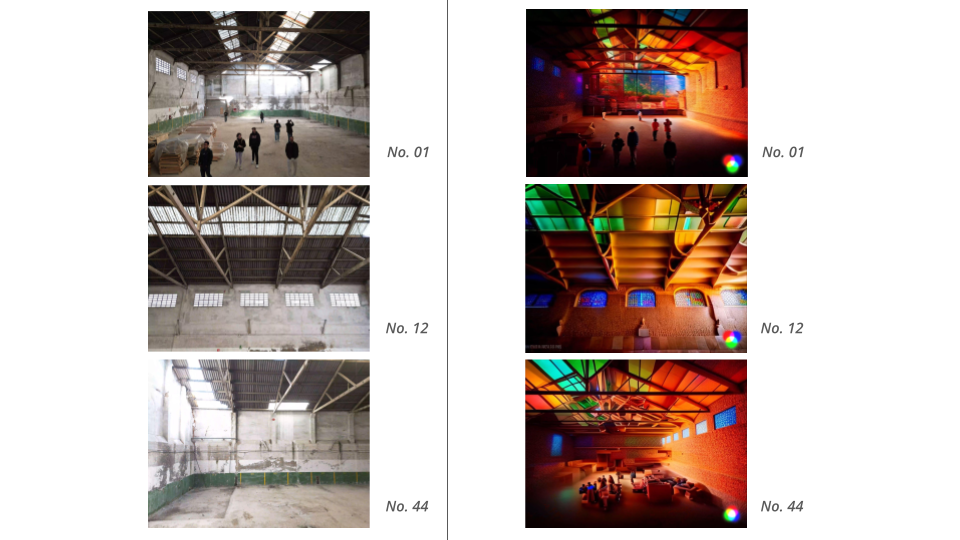

Figure 1 – Stylizing Images with Unpaired Image-to-Image CycleGANs

Methodology

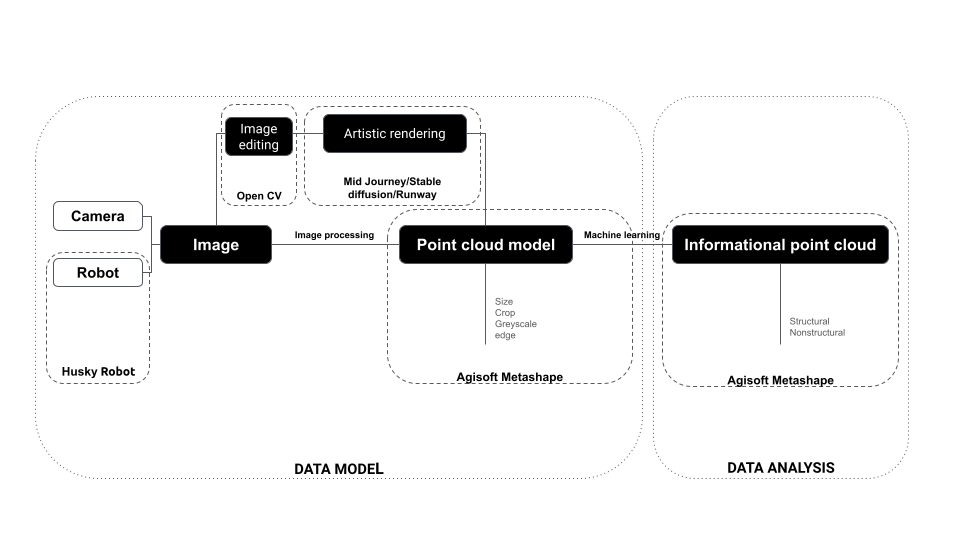

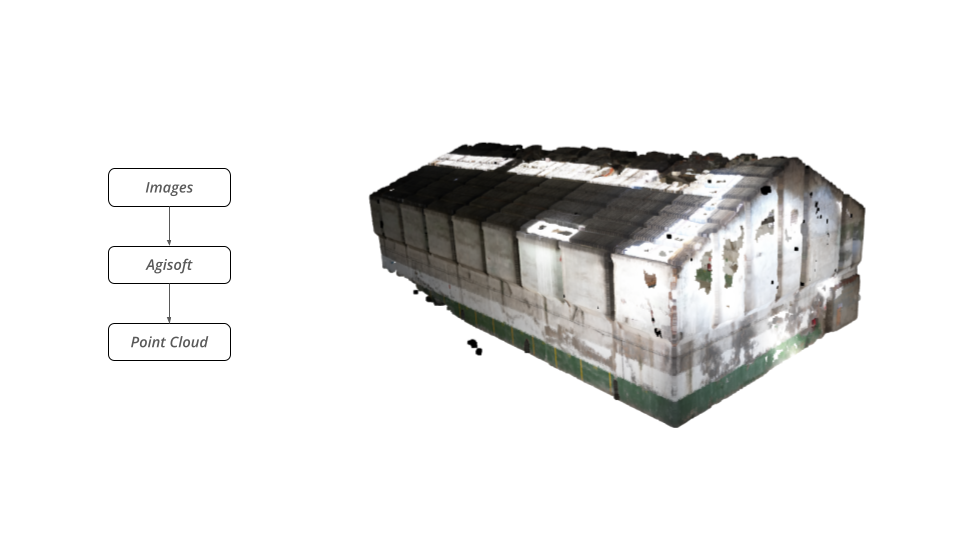

The working methodology is divided into two phases: data model extraction and data analysis. In the first phase, hardware such as conventional cellphone cameras and the cameras of the Husky robots is used to photograph the new IAAC building. These images are then edited and converted into a point cloud using Agisoft Metashape. Images from AI platforms are then fed back into the workflow to produce additional point cloud data. The original and the additional point clouds are then passed through a machine learning filter in Agisoft Metashape so that they can be used to generate 3D spaces or to discretize architectural elements.

Figure 2 – Workflow Methodology

From Images to Architectural Elements

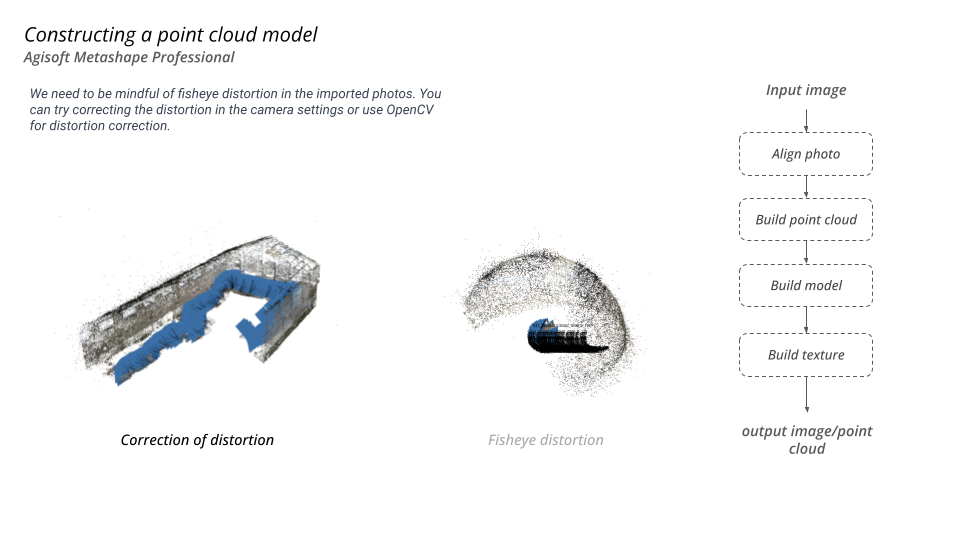

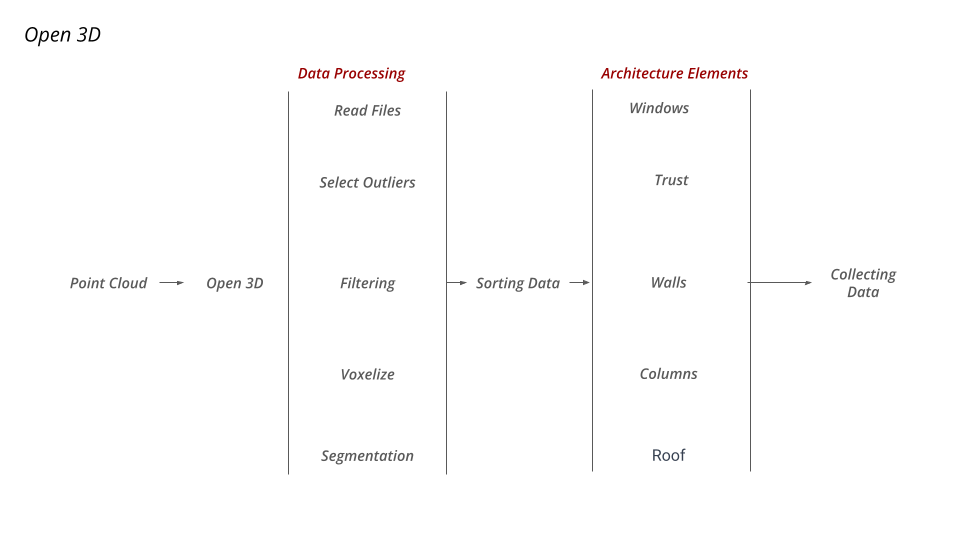

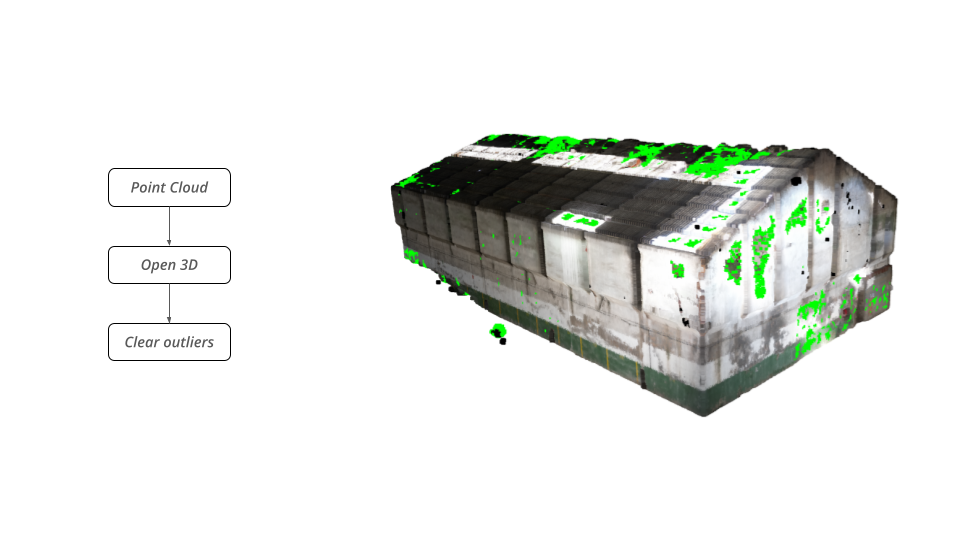

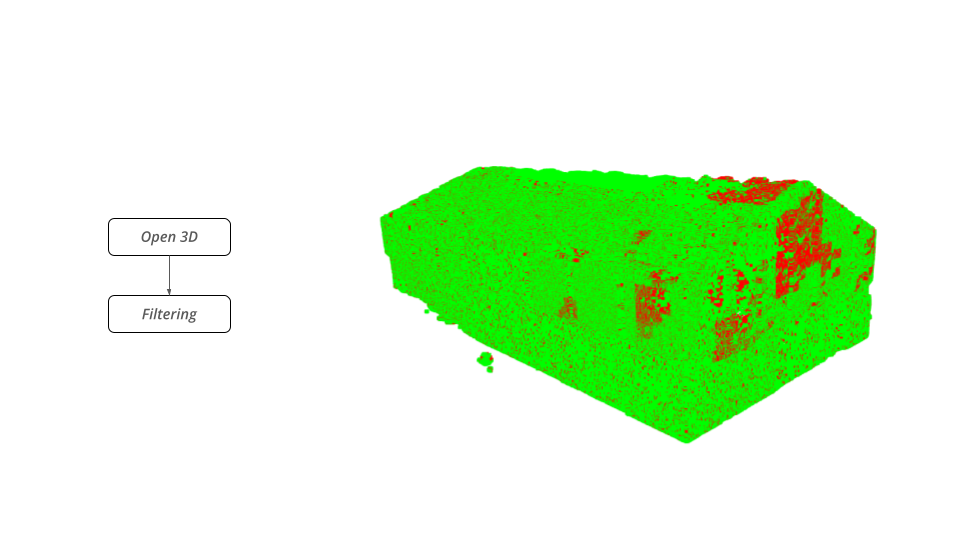

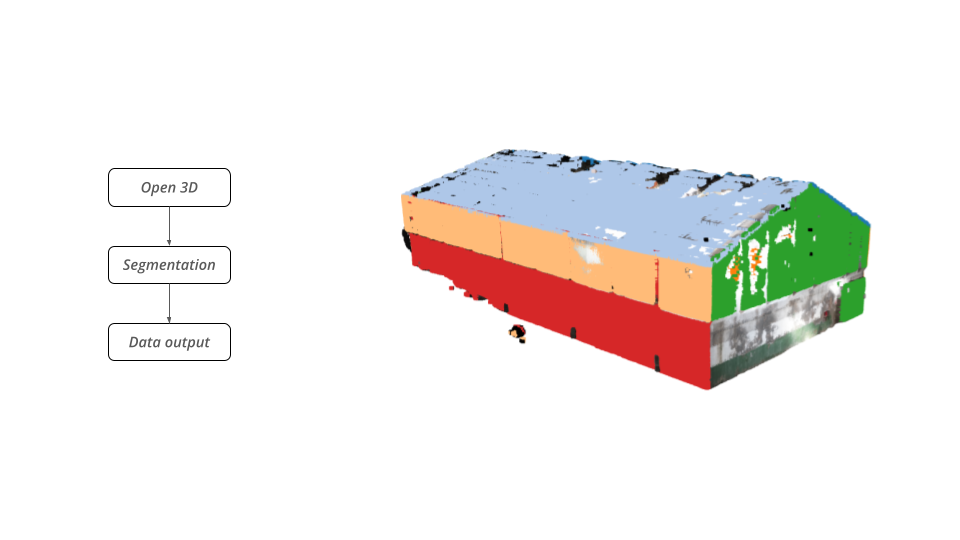

Once the images were taken, they were aligned in Agisoft in order to acquire the points without fisheye distortion. The point cloud was then built into a model, and a texture was built and applied to it. Afterwards, the point cloud was processed in order to filter, segment and voxelize the points and sort them according to architectural elements.

Figure 3 – Construction of Point Cloud Model

Figure 4 – Open3D Process

Figures 5, 6, 7, 8, 9 – Sourcing Process of Architectural Elements using Open3D
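The voxelize and sort steps described above can be illustrated without any library. The sketch below is not the Open3D code used in the project. It is a minimal pure-Python stand-in that shows the idea: points falling in the same cubic cell are merged into their centroid, and the result is then split into groups. The function names, the voxel size and the height thresholds are hypothetical, and sorting by height alone is an assumption made for illustration; the post does not state which criterion was used.

```python
from collections import defaultdict
from math import floor


def voxel_downsample(points, voxel_size):
    """Merge all points that share a cubic voxel into their centroid."""
    buckets = defaultdict(list)
    for x, y, z in points:
        # Integer voxel coordinates identify the cell a point belongs to.
        key = (floor(x / voxel_size), floor(y / voxel_size), floor(z / voxel_size))
        buckets[key].append((x, y, z))

    centroids = []
    for key in sorted(buckets):  # sorted only to make the output order stable
        cell = buckets[key]
        n = len(cell)
        centroids.append((
            sum(p[0] for p in cell) / n,
            sum(p[1] for p in cell) / n,
            sum(p[2] for p in cell) / n,
        ))
    return centroids


def label_by_height(points, floor_max_z, ceiling_min_z):
    """Crude sorting (assumption): tag points as floor, wall or ceiling by z."""
    labels = {"floor": [], "wall": [], "ceiling": []}
    for p in points:
        if p[2] <= floor_max_z:
            labels["floor"].append(p)
        elif p[2] >= ceiling_min_z:
            labels["ceiling"].append(p)
        else:
            labels["wall"].append(p)
    return labels
```

Calling `voxel_downsample(points, 0.5)` on a list of `(x, y, z)` tuples and passing the result to `label_by_height` gives one list of points per element type. A real pipeline would more likely rely on plane fitting or clustering than on fixed height bands.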

Reconstruction Images with AI

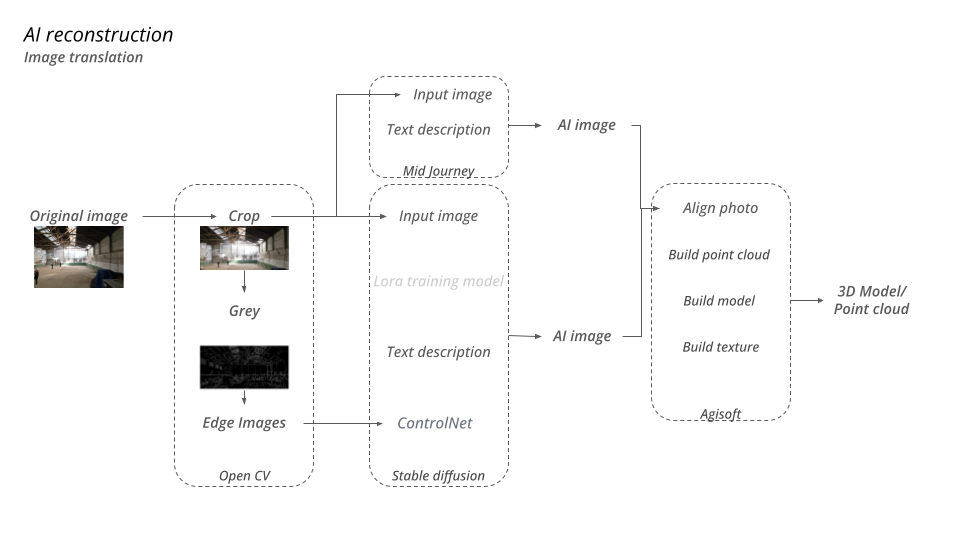

For this part of the work, the photos were resized and processed with OpenCV in order to obtain their outlines. The cropped photos were uploaded to Midjourney and transformed according to a photo style filter controlled by a text description; as many images were generated as there were original camera photos. For Stable Diffusion, the outline images were used as input for ControlNet, and the style filter was likewise controlled by a text description; again, the same number of images was generated as in the original set. From each of the two groups of AI-generated images, a point cloud is then constructed to build a 3D model using Agisoft.

Figure 10 – Workflow for AI Reconstruction Image

Figure 11 – From Original Images to AI Reconstruction

Figure 12 – Processing original images to be used on Stable Diffusion
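To make the outline step concrete, the sketch below marks a pixel as part of an outline when the intensity change around it exceeds a threshold. It is a simplified, library-free illustration and not the OpenCV call used in the project, which the post does not name. The function name `outline`, the nested-list image representation and the threshold are assumptions.

```python
def outline(gray, threshold):
    """Return a binary edge map for a grayscale image given as a list of rows.

    A pixel is set to 1 when the sum of the absolute horizontal and vertical
    central differences exceeds `threshold`. Border pixels are left at 0.
    """
    height, width = len(gray), len(gray[0])
    edges = [[0] * width for _ in range(height)]
    for r in range(1, height - 1):
        for c in range(1, width - 1):
            gx = gray[r][c + 1] - gray[r][c - 1]  # horizontal change
            gy = gray[r + 1][c] - gray[r - 1][c]  # vertical change
            if abs(gx) + abs(gy) > threshold:
                edges[r][c] = 1
    return edges
```

An edge map of this kind, with white lines on a black background, is the sort of image an edge-conditioned ControlNet takes as input, which is why the outlines constrain the generated geometry while the text description controls the style.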

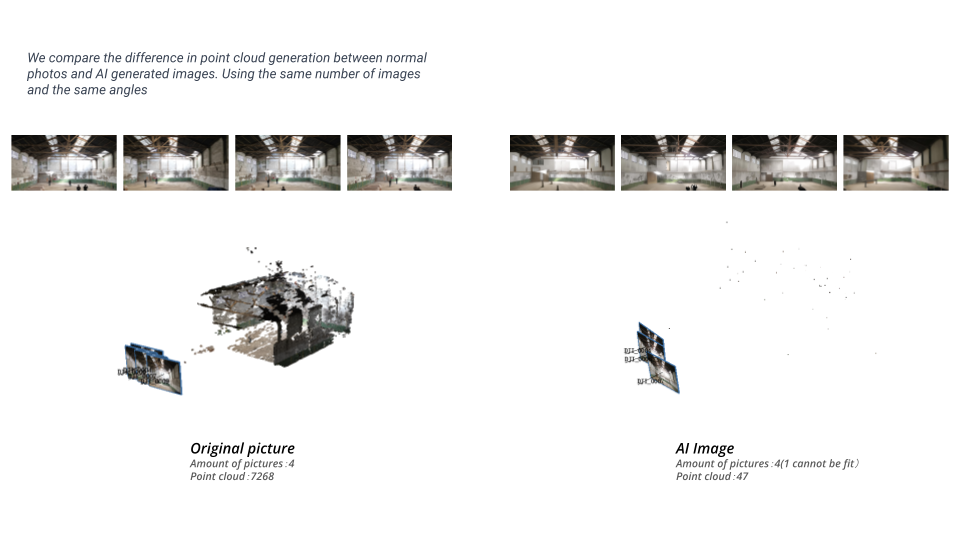

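The point cloud built from the AI images did not have the same number of points as the one built from the original pictures. This is because each AI-generated image is computed individually: even when ControlNet is used for edge control in Stable Diffusion, the generated objects are consistent from one image to the next only at their edges. Photogrammetric reconstruction depends on matching the same features across several images, so this inconsistency leaves fewer points that can be reconstructed.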

Figure 13 – Point Cloud Comparison Original Pictures vs AI Images

Reconstruction Images with AI Videos (Runway)

Tests were also conducted using the software Runway and the original photos. The advantage of Runway is that the prompt can specify the styles the image should be turned into, and the frames of the resulting video can be extracted and used in Agisoft to rebuild the 3D environment. Compared to the point cloud from the original pictures, the frames from the Runway video did not produce as many points as expected. However, control over the frames was better, and the number of points was larger, than in the tests done with Stable Diffusion.

Figure 14 – Video Generated from Original Pictures

Figure 15 – Stylized Configuration of Space Using Runway AI

Figure 16 – Comparison original pictures and AI frames from generated video
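One practical question when extracting frames from a generated video is which frames to keep. The sketch below picks a fixed number of evenly spaced frame indices, for example to match the number of original photos. Even spacing is an assumption made for illustration; the post does not say how the frames were selected, and the function name is hypothetical.

```python
def evenly_spaced_frames(total_frames, wanted):
    """Return `wanted` frame indices spread evenly over a video.

    The first and last frames are always included when wanted >= 2.
    If more frames are requested than exist, every frame is returned.
    """
    if total_frames <= 0 or wanted <= 0:
        return []
    if wanted >= total_frames:
        return list(range(total_frames))
    if wanted == 1:
        return [0]
    last = total_frames - 1
    # Integer arithmetic keeps the result deterministic (no float rounding).
    return [(i * last) // (wanted - 1) for i in range(wanted)]
```

The selected indices can then be passed to whatever tool reads the video, and the saved frames imported into Agisoft like ordinary photos.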