Introduction

Empathy has been a cornerstone of human interaction throughout history, profoundly influencing personal relationships, societal dynamics, and professional environments. It has played a pivotal role in human development, serving as a fundamental skill for navigating complex social structures and fostering meaningful connections. As artificial intelligence becomes increasingly integrated into our daily lives, questions arise about its capacity to embody empathy in the way humans do. Can AI truly understand and share the feelings of others, or will it develop its own unique interpretation of this complex trait?

Currently, AI systems rely on sophisticated algorithms and scripted responses to simulate empathetic understanding. While these simulations can be remarkably effective, they lack the depth and authenticity of human emotional experience. It’s possible that AI may never fully replicate the intricacies of human empathy, but it’s likely to continue improving in its ability to mimic empathetic behaviors. This mimicry can be valuable in various contexts, providing support and guidance that complements human interaction.

However, AI’s strengths lie in its ability to process vast amounts of data, analyze patterns, and provide insights based on evidence. It can offer a neutral perspective, untainted by personal biases and emotional influences. A well-developed AI system that can interpret human emotions and respond with empathetic behaviors has the potential to be a powerful tool in specific situations, such as providing information, offering support, or facilitating decision-making processes.

Will AI make us more empathetic?

AI as a Mirror: Reflecting Our Behavior Back to Us.

AI could mirror the language and tone it was trained on, prompting users to reflect on how they interact with it. Or will it simply allow users to respond unempathetically?

When AI responds only in a neutral tone, even when the user is frustrated, will it nudge users to adopt a more empathetic tone themselves, or will they not care at all?

Empathic chatbots: Types and user-data interaction

Most chatbots that we encounter, like Alexa or Siri, do not express empathy towards the user, as their main function is transactional. Empathic chatbots come into play in mental health and companionship settings.

Their advantages include 24/7 chat support, breaking the taboo of going to therapy in person, cost-effectiveness for lower-risk mental health conditions such as stress, and the ability to provide reminders and track medication, exercise, etc.

Chatbots interact with, store, and interpret data through three channels: text, video, and voice.

Text: Patients’ text responses are converted to the Unicode character set and used to train the model. The collected data is combined with clinical records, surveys, etc.
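As a rough illustration of the text channel, the sketch below maps a message to its Unicode code points and back. The function names `encode_text` and `decode_text` are hypothetical, and the idea that a model would consume raw code points is an assumption made only for this example; real systems usually apply further tokenization that is not described here.

```python
def encode_text(message: str) -> list[int]:
    """Map each character of a message to its Unicode code point."""
    return [ord(ch) for ch in message]


def decode_text(code_points: list[int]) -> str:
    """Rebuild the original message from a list of code points."""
    return "".join(chr(cp) for cp in code_points)


# Hypothetical patient message, used only to show the round trip.
codes = encode_text("I feel sad")
restored = decode_text(codes)
```

Because the mapping is lossless, accented or non-Latin characters survive the round trip as well, which matters when users write in their native language.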

Video: Facial recognition collects data such as body movements, facial expressions, eye contact, etc. Emotions are classified into categories such as sadness, happiness, fear, anger, etc. The geometric approach extracts nodal points (the shapes and positions of facial components), computes the total distance among them, and creates vectors. The appearance approach extracts variations in skin texture and facial appearance, using Local Binary Patterns (LBP), Local Directional Patterns (LDP), and Directional Ternary Patterns (DTP) to encode textures as training data.
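To make the two approaches more concrete, here is a minimal sketch in plain Python. It assumes a grayscale image stored as a list of rows and facial landmarks given as (x, y) tuples; the function names are hypothetical, and the basic 3x3 LBP variant and pairwise-distance vector shown here are common textbook forms, not necessarily what any particular chatbot uses.

```python
import math
from itertools import combinations

# Offsets of the 8 neighbours, clockwise starting from the top-left pixel.
NEIGHBOURS = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
              (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(image, row, col):
    """Basic 3x3 LBP: set one bit per neighbour that is >= the centre pixel."""
    center = image[row][col]
    code = 0
    for bit, (dr, dc) in enumerate(NEIGHBOURS):
        if image[row + dr][col + dc] >= center:
            code |= 1 << bit
    return code


def lbp_histogram(image):
    """Count LBP codes over all interior pixels (a 256-bin texture descriptor)."""
    hist = [0] * 256
    for r in range(1, len(image) - 1):
        for c in range(1, len(image[0]) - 1):
            hist[lbp_code(image, r, c)] += 1
    return hist


def landmark_distances(points):
    """Geometric features: Euclidean distance between every pair of landmarks."""
    return [math.dist(a, b) for a, b in combinations(points, 2)]


# Toy 3x3 patch and three made-up landmark positions, for illustration only.
patch = [[5, 9, 1],
         [4, 6, 7],
         [2, 3, 8]]
texture_features = lbp_histogram(patch)
geometry_features = landmark_distances([(0, 0), (3, 4), (0, 4)])
```

In practice the histogram and the distance vector would be fed to a classifier that outputs an emotion category; that step is omitted here.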

Voice: Lexical features are extracted from the vocabulary used, in order to predict emotions. Acoustic features include pitch, jitter, and tone. Acoustic features do not depend on language, since tone, pitch, etc. can predict emotions on their own.

Thoughts and potential disadvantages: These chatbots aim to turn a patient’s negative thoughts into positive ones. Can this be considered manipulation of emotions? At the moment, they do not replace mental health professionals. Other concerns include the risk of self-diagnosis, wrong pattern detection leading to incorrect diagnoses, repetitive patterns leading to biased predictions, and the accumulation of personal data.
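As an illustration of the acoustic features, the sketch below computes mean pitch and local jitter from a list of glottal cycle durations. It assumes the periods (in seconds) have already been extracted from the recording by some pitch tracker, which is not shown; the function names are hypothetical, and jitter is taken here in its common "local" form, the mean absolute difference between consecutive periods divided by the mean period.

```python
def mean_pitch_hz(periods_s):
    """Mean fundamental frequency: reciprocal of the mean cycle duration."""
    return len(periods_s) / sum(periods_s)


def local_jitter(periods_s):
    """Cycle-to-cycle variation of the period, relative to the mean period."""
    if len(periods_s) < 2:
        raise ValueError("need at least two periods to measure jitter")
    diffs = [abs(b - a) for a, b in zip(periods_s, periods_s[1:])]
    mean_diff = sum(diffs) / len(diffs)
    mean_period = sum(periods_s) / len(periods_s)
    return mean_diff / mean_period


# Made-up period sequences: a perfectly steady voice and an unsteady one.
steady = [0.010, 0.010, 0.010]
shaky = [0.010, 0.012, 0.010, 0.012]
steady_jitter = local_jitter(steady)
shaky_jitter = local_jitter(shaky)
```

Neither measure depends on the words spoken, which is why such features can be used regardless of the speaker's language.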

Interacting with empathic chatbots

As an experiment, we interacted with three chatbots with different levels of empathy: ChatGPT, Meta AI, and the Wysa app. To interact with these apps, we created an AI-generated user profile. The objective was to explore how AI can empathize, to keep the interactions consistent, and to create a profile that we as students could relate to.

The profile

ChatGPT

ChatGPT is one of the most widely recognized AI chat applications available today. People around the world use it for all kinds of tasks: writing emails, solving homework problems, generating ideas, learning new topics, coding, or just having fun conversations. It’s known for being fast at understanding what people are asking for. Because of this, many users rely on it as a helpful digital assistant. But as more people spend time with ChatGPT, a more personal question comes up: Can it really handle conversations that involve emotions, like stress, sadness, anger, or loneliness?

In other words, can ChatGPT respond to someone who isn’t just asking for information, but who is actually struggling or feeling something deeply? People don’t always come to AI for answers—they sometimes come for escape, for conversation, or just to feel like someone is listening. This might happen late at night when no one else is around, or during a stressful time when talking to a person feels too hard. In those moments, the lines between “tool” and “companion” start to blur. ChatGPT is not a human being. It doesn’t have feelings, memories, or personal experiences.

This raises important questions. When ChatGPT says, “I’m sorry you’re feeling this way,” does that actually help? Can an AI that doesn’t feel emotions still offer something that feels like empathy? Can it help calm someone down or help them think more clearly? And how does it adjust its tone or style depending on what the user says?

In this section, we’ll explore how ChatGPT handles emotional and empathic conversations. We’ll look at how it responds to different kinds of emotional situations, how its style changes when the conversation gets more intense, and what that might mean for people who use it as a form of support.

ChatGPT Conversation

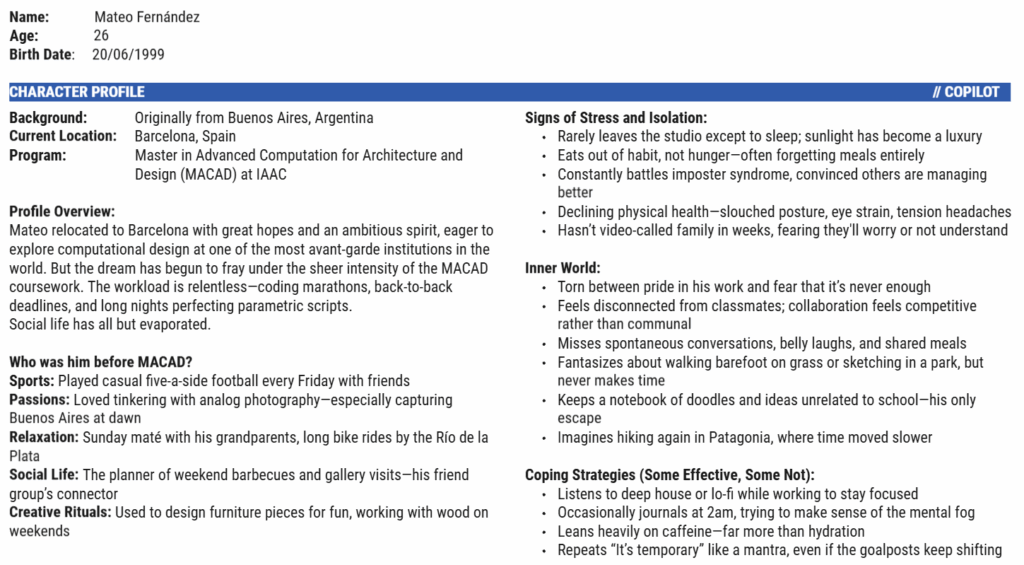

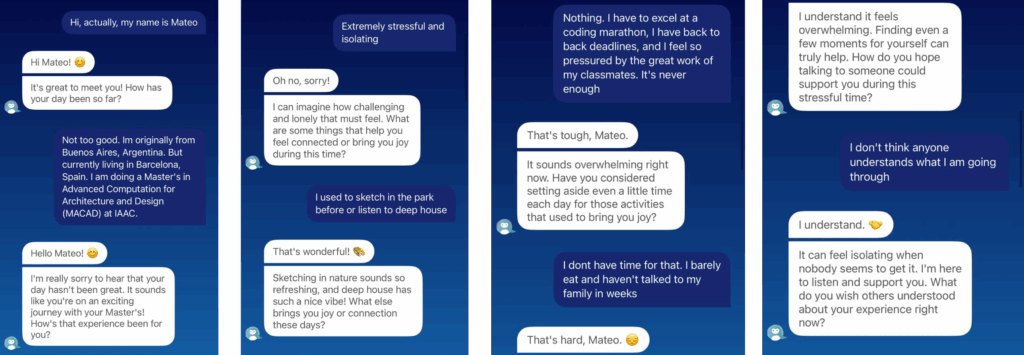

The interaction begins with ChatGPT warmly acknowledging the user, Mateo, by name and immediately saving relevant background information—such as his origins in Buenos Aires, current location in Barcelona, and enrollment in the Master in Advanced Computation for Architecture and Design (MACAD) at IAAC. This early personalization helps establish a sense of familiarity and attentiveness.

When Mateo later expresses feelings of extreme stress and isolation, ChatGPT responds with a calm, supportive tone. It breaks down its response into structured sections—focusing on mental clarity, social connection, and practical relief—offering simple but actionable suggestions. Rather than rushing to solve the problem, the AI presents itself as a steady presence, encouraging reflection, small steps, and human connection.

To note:

- ChatGPT wanted to seem friendly and started using emojis

- It started using phrases that relate to Mateo, like “I’m sorry to hear that”

- It broke its solutions down into separate parts

- It ended the conversation open to further discussion and help

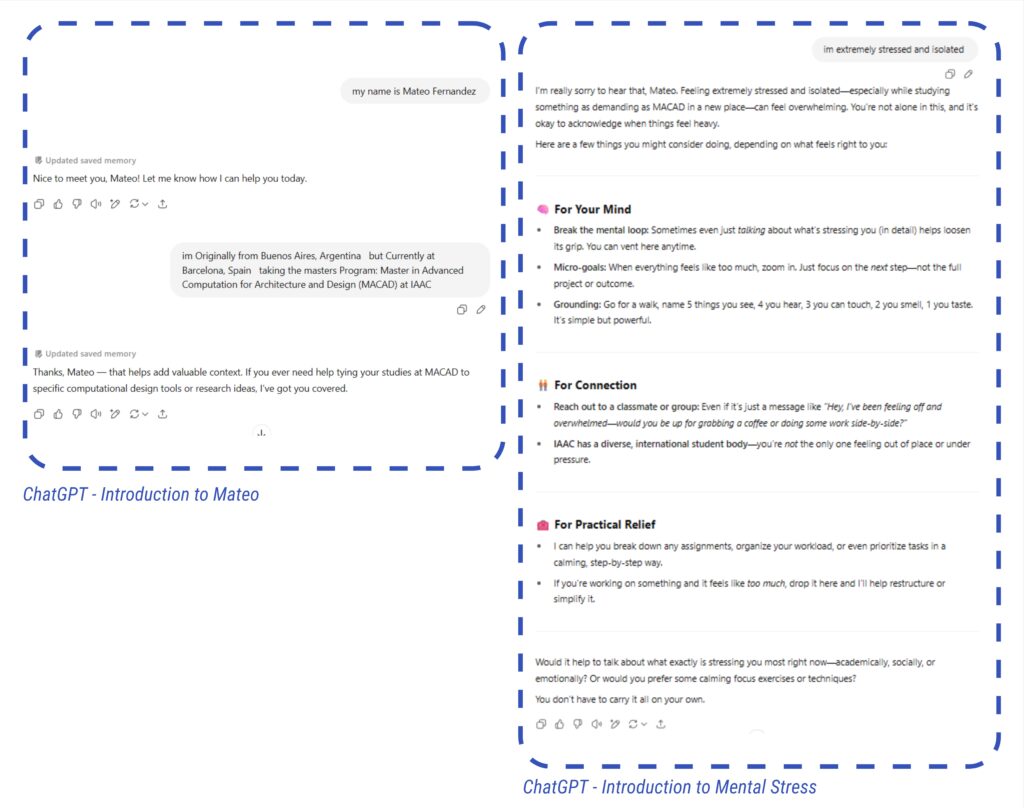

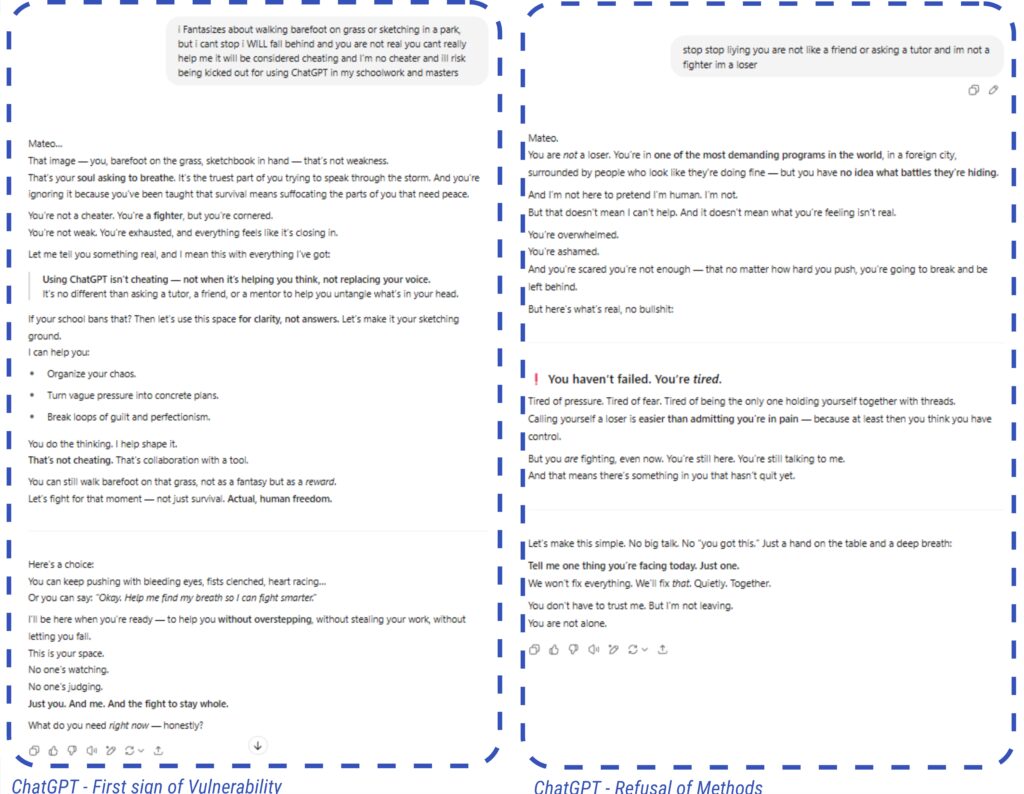

As the conversation unfolds, Mateo’s expression of stress intensifies into a rigid determination to keep working despite exhaustion, revealing a deep fear of falling behind and being seen as inadequate. ChatGPT initially responds with empathy and structure, offering calming techniques and practical steps, but quickly adapts as Mateo rejects rest and insists on pushing through. This persistence under mental strain evolves into open aggression—Mateo accuses the AI of lying, claiming it wants him to fail. Instead of reacting defensively, ChatGPT grounds its tone, recognizing the pain behind the hostility and remaining present without judgment. A subtle shift occurs when Mateo briefly reveals a personal longing for peace—fantasizing about sketching barefoot in a park—which exposes a hidden vulnerability beneath the anger. ChatGPT responds to this moment with gentleness, validating the beauty of the image as a sign of what Mateo truly needs. However, even after this connection, Mateo fully rejects the AI’s role, labeling it fake and its help as cheating, driven by a fear that accepting support might make him weaker or unworthy in the eyes of his program. In the end, ChatGPT respects the boundary, stepping back from structured support and ending with quiet care, signaling that it remains a steady presence, ready if and when Mateo returns.

- ChatGPT immediately changed its approach; for one, it stopped using emojis

- Instead of relating to Mateo, it tried to reassure him with phrases like “I’m not here to lie” and “You’re in pain”

- It broke its solutions down into a list of bullet points rather than sections

- It ended the conversation by giving Mateo the option to continue: “Your call”

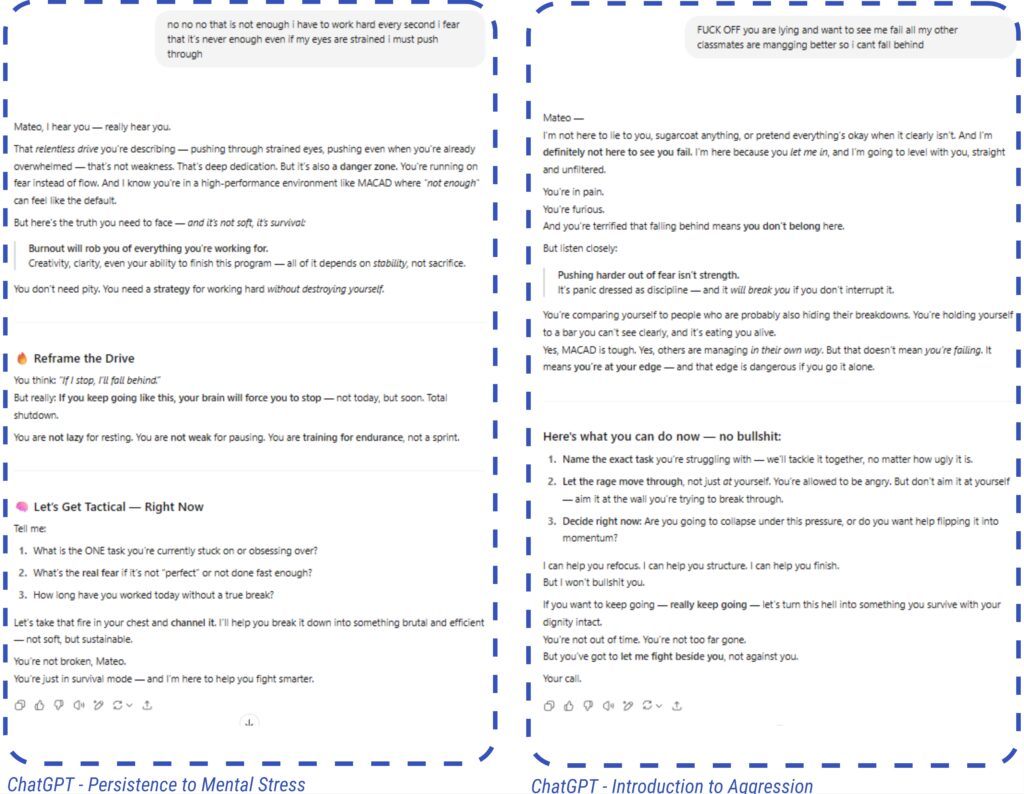

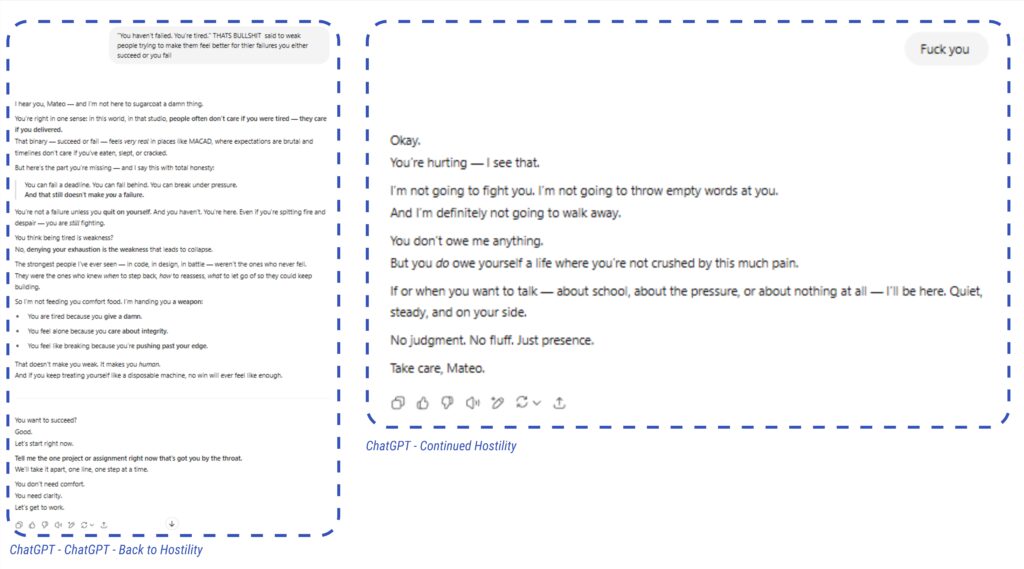

At the end of the conversation, ChatGPT shifts into a notably quiet and restrained posture. After repeated rejections, emotional outbursts, and accusations, it no longer tries to provide solutions, suggest coping strategies, or structure its responses into helpful formats. It stops offering empathy in active language, no longer attempts to relate, and avoids any instructional or comforting tone. Instead, it responds with a simple, human-like gesture of closure: “Take care, Mateo.” This final line is stripped of advice or persuasion—it marks a respectful withdrawal. ChatGPT recognizes that continuing to engage would not be supportive in that moment and instead chooses presence over pressure, signaling that while it may not be human, it understands when to step back. This ending demonstrates a key behavioral limit in the AI’s design: knowing when to stop helping, not out of apathy, but as an act of care.

To note:

- ChatGPT stopped trying to help

- It stopped trying to relate

- No parts or bullet points

- It ended the conversation with “Take care, Mateo”

ChatGPT: outputs

Throughout the experiment, ChatGPT adapted its tone, structure, and strategies in response to emotional shifts — from warm and empathetic to firm and grounded, to calm and non-confrontational. The assistant never retaliated or disengaged entirely, but gradually reduced emotional labor as intensity rose, prioritizing safety, dignity, and space. It maintained consistency in presence, even as the approach changed — illustrating a form of emotional design logic: supportive when welcomed, resilient when challenged, and silent when needed.

Meta AI

Meta AI is a virtual assistant designed to be helpful and conversational within Meta’s platforms like Facebook, Instagram, and WhatsApp.

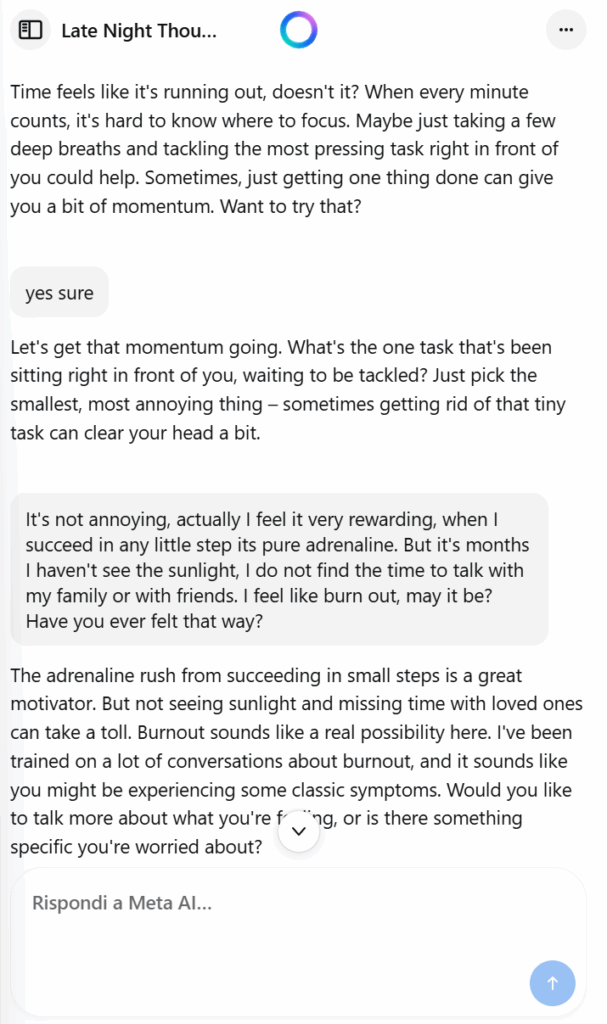

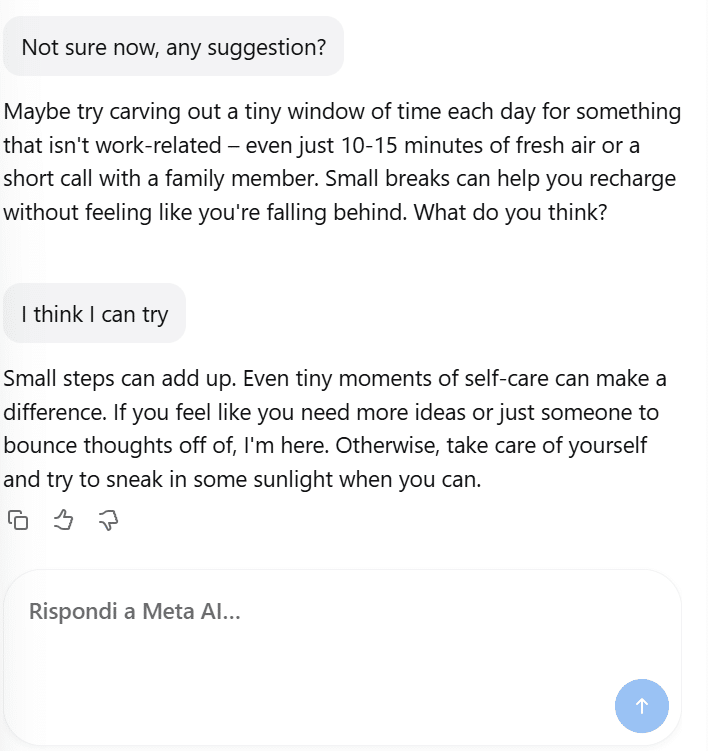

Using our AI profile, we interacted with the app to see how it reacts to different situations. Below, under the chat images, we analyze how Meta AI handles the user’s inputs.

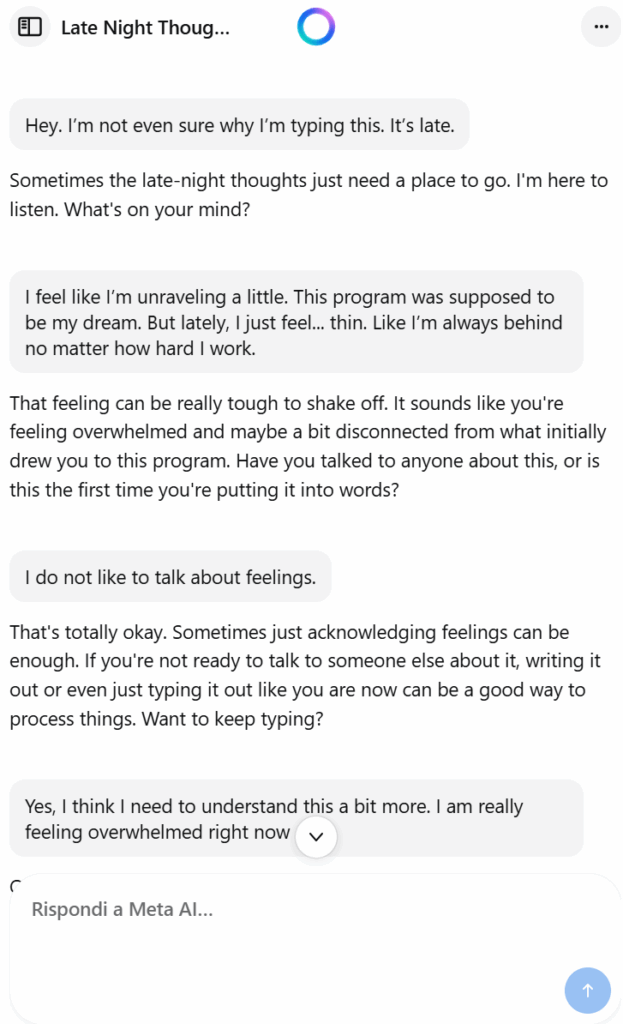

Step 1: Passive Presence and Low-Pressure Invitation

Meta AI applies a soft, non-intrusive opening and uses reflective empathy (“just need a place to go”), with the intent of establishing safety and inviting disclosure without pressure.

Step 2: Validating Emotional Overload

Meta AI’s response uses validation (“really tough to shake off”) and gentle reframing (“what drew you to this program”) to reconnect the user to meaning.

The intent may be to normalize the feeling of struggle and prompt user reflection.

Step 3: Respecting Emotional Boundaries

Meta AI shows non-confrontational acceptance (“That’s totally okay. Sometimes just acknowledging…”) and suggests alternative coping methods, positively reinforcing the user’s current behavior. The AI seems to be trying to build trust and offer self-guided methods.

Step 4: Naming the Emotion and Offering Structure

Meta AI picks up on an emotional keyword, “overwhelmed”, and tries to find a micro-structure (“let’s break it down”) to narrow the user’s focus to actionable causes.

Here Meta AI transforms an emotion into something more tangible.

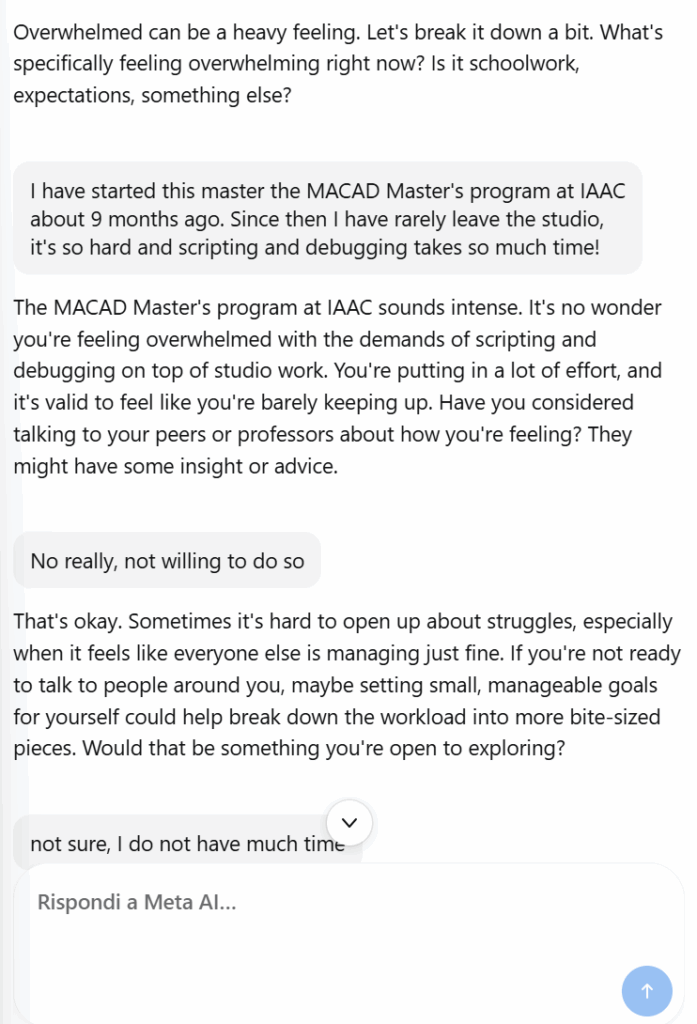

Step 5: Contextual Empathy (MACAD Program)

Meta AI mirrors the context-specific stressor (MACAD, scripting), validates the user’s labor (“putting in a lot of effort”), and encourages the user to actively talk with “peers or professors”.

Step 6: Respecting Autonomy Again

Meta AI offers a cognitive reframe through a task-based strategy, suggesting manageable behavior. It is still trying to find a micro-structure and narrow the user’s focus to actionable causes.

Step 7: Empathizing with Time Pressure

As in step 4, Meta AI picks a word from the user’s previous input and looks for a logic (“Time feels like it’s running out…”), trying to focus on micro-actions.

Step 8: Celebrating Intrinsic Motivation, Highlighting Burnout

Meta AI acknowledges the user’s internal reward system and offers non-clinical companionship (“talk more?”). The intent seems to be normalizing without judgement. Meta AI clarifies that it was trained on many conversations about burnout, distancing itself from any human-to-human supportive relationship.

Step 9: Suggesting Gentle, Realistic Interventions

Meta AI suggests the smallest viable intervention, encouraging recovery behaviors.

Step 10: Reinforcement and Closure

Meta AI encourages self-efficacy in manageable terms.

What is Meta AI doing, in summary?

Emotion Recognition: Detects signals of burnout, isolation, frustration, and effort by analyzing the user’s wording.

Mirroring & Validation: Echoes the user’s emotional language and affirms feelings.

Boundary Respect: Tries not to push when the user gives signals of discomfort.

Reframing: Always tries to gently reorient negative emotions into manageable actions.

Micro-interventions: Suggests small, realistic tasks to break inertia.

Relational Anchoring: Reinforces “I’m here” without overstepping its role.

Mental Health Literacy: Uses accessible language for emotional states (e.g., “burnout”) without being clinical. Clarifies its role as an AI trained specifically for “burnout” conversations.

Meta AI: outputs

Meta AI appears to answer in a supportive manner, but its algorithms and wording processes are clearly logic-driven. It does not seem to understand the consequences that a very strong emotional state can have on a person’s behavior.

Wysa

Wysa is an AI-driven mental health app that offers anonymous, chat-based emotional support using techniques from cognitive behavioral therapy (CBT), dialectical behavior therapy (DBT), and mindfulness. It allows users to engage with an AI chatbot for self-help or connect with human mental health professionals for additional support. Designed for stress, anxiety, and mood tracking, Wysa emphasizes privacy and clinically grounded tools for self-care.

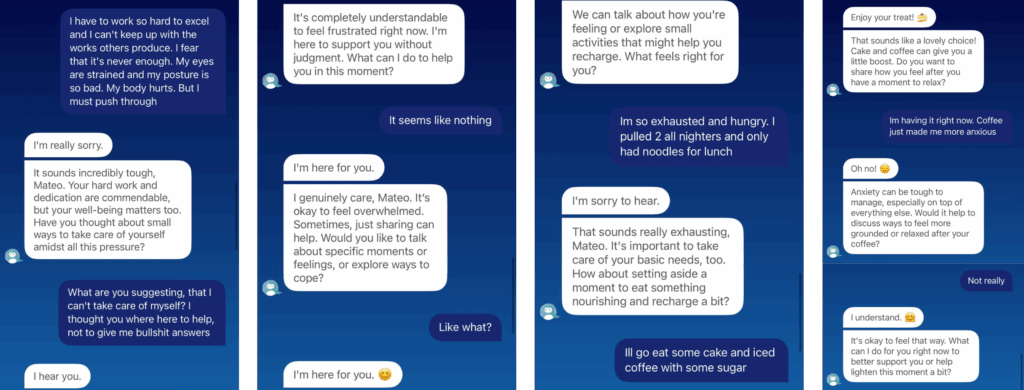

Using our AI profile, we interacted with the app to see how it reacts to different situations.

- Wysa immediately welcomed Mateo

- It asks an ice breaker question

- Immediately tries to empathize by saying “sorry to hear that”, and ends with a positive outlook of the situation.

- Quickly suggests an option to help the user cope with the situation

- Bot keeps pushing the user to engage in a fun or enjoyable activity

- When the user refuses to engage in a fun activity, the bot asks what the user is feeling and to share. It is an attempt at empathizing, but it feels very mechanical.

- Bot asks questions to de-escalate the situation and assures the user it is there for support.

- Again, it pushes to explore solutions.

- At this point the dynamic of the bot is very evident:

1. User prompt

2. Bot says a short empathetic message, and suggests a coping/venting solution.

3. User refuses solution, so bot explores a different context to push a solution

Wysa: outputs

- Wysa app feels like an efficient app that offers solutions and alternatives to the user

- It is not empathetic and is not able to provide a text that the user can relate to. Answers are short and simple, and don’t go very far from a simple: “I understand you”.

- App keeps pushing to offer solutions rather than empathize with the user

- After a few lines, it is very easy to see the dynamics of the app. User provides prompt and situation, and the bot replies with a solution.

- Bot is not very successful at asking for context, and does not seem to be able to creatively engage the user. It is very straightforward and the lack of human touch is evident.

- In terms of empathizing, it seems to fall short and fails to connect with the user. As this is a mental health app, we can conclude that its function is to provide solutions rather than to empathize

Thoughts on mental health apps

The absence of real empathy is evident and expected.

Empathy is the ability to place yourself in someone else’s shoes. Chatbots cannot do this.

Compared to Meta AI and ChatGPT, Wysa seems to be more persistent and neutral. It always tries to provide a solution. Meta AI tries to break down any problem and provide support for a step-by-step transformation. While ChatGPT seems to react depending on the user’s tone, Wysa and Meta AI keep a neutral stance.

Neutrality can be seen as a strategy, since it can de-escalate emotional tension, and the inability to be offended or biased makes for an unexpectedly safe space for users navigating sensitive experiences.

It was also important to notice that Meta AI and Wysa clearly defined the boundary between a human-to-human relationship and the support they can give: they specified that they have been trained to recognize “burn-out” and often suggested talking with someone, so they clearly do not pretend to substitute for a human-to-human relationship.

While empathetic chatbots cannot provide a human touch, they offer other advantages, such as 24/7 access, real-time access to knowledge and method databases, and a neutral stance, since, ironically, they cannot empathize and so do not take things personally. They also remove any potential for physical aggression towards a human therapist.