GenAI for Game Environment Creation

Overview

Urban environments in games play a crucial role in crafting immersive, dynamic worlds that captivate players and enhance storytelling. These settings not only shape the game’s atmosphere but also introduce strategic challenges and reflect cultural, social, and architectural identities, making virtual spaces feel authentic.

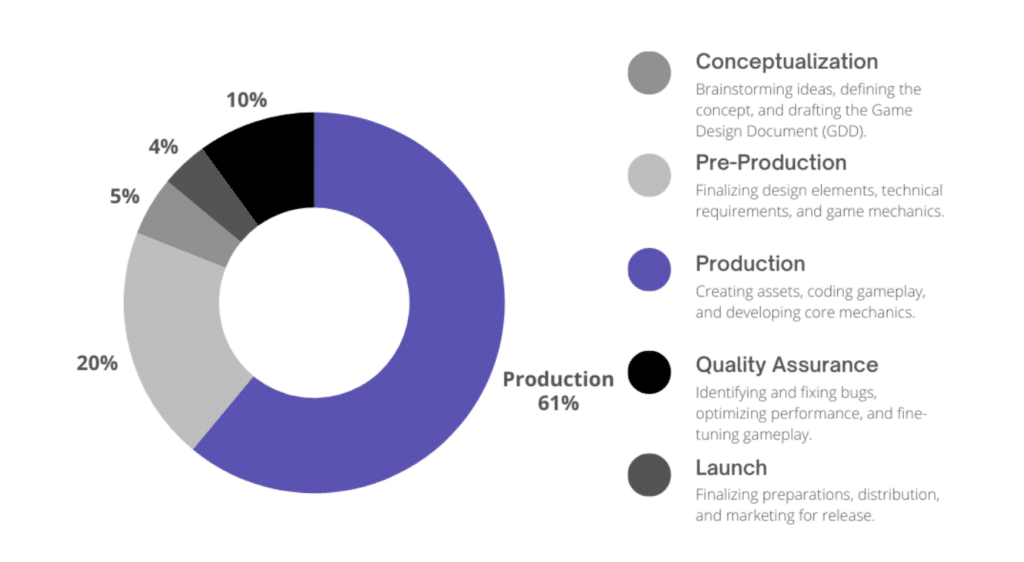

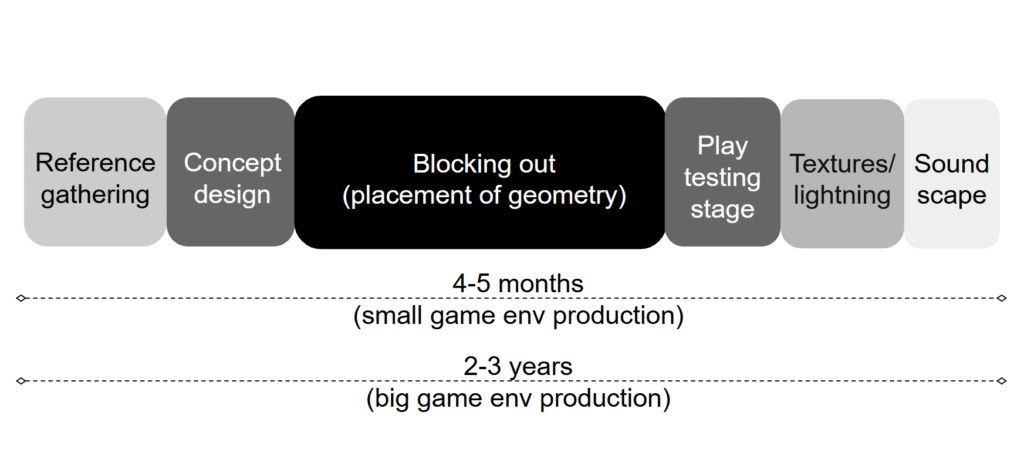

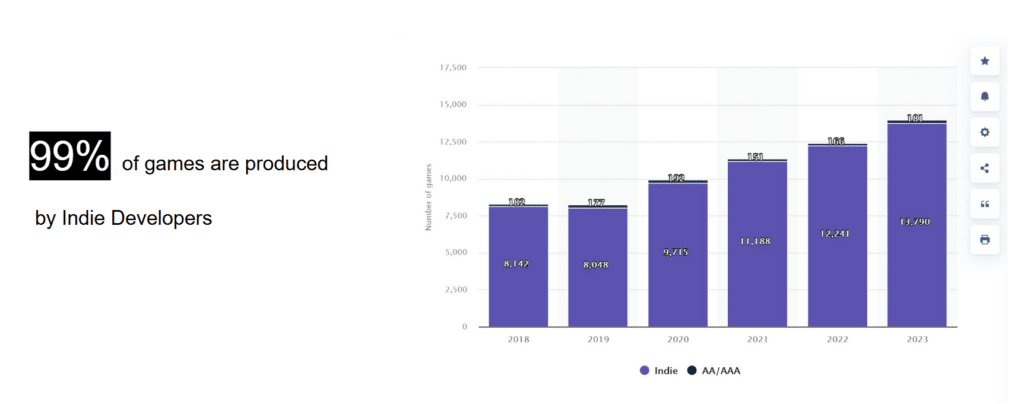

However, creating high-quality, realistic game environments demands significant time, research, funding, and large development teams—an especially daunting challenge for indie developers. As small, independent creators or studios without the financial support of major publishers, indie developers often rely on innovation, creativity, and unique gameplay experiences to bring their visions to life, pushing the boundaries of game design despite limited resources.

Who is it for and Urgency

Game developers who want to create high-quality, realistic environments, especially indie developers. Indie developers are small, independent creators or studios that build games without the financial backing of large publishers, and often without extensive experience. They tend to focus on innovation, creativity, and unique gameplay experiences.

State of the Art

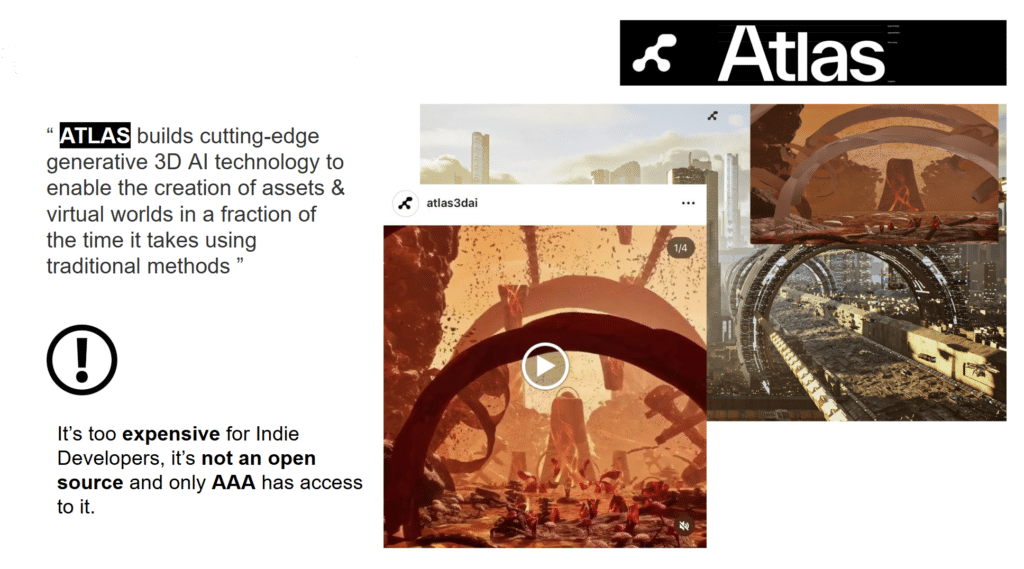

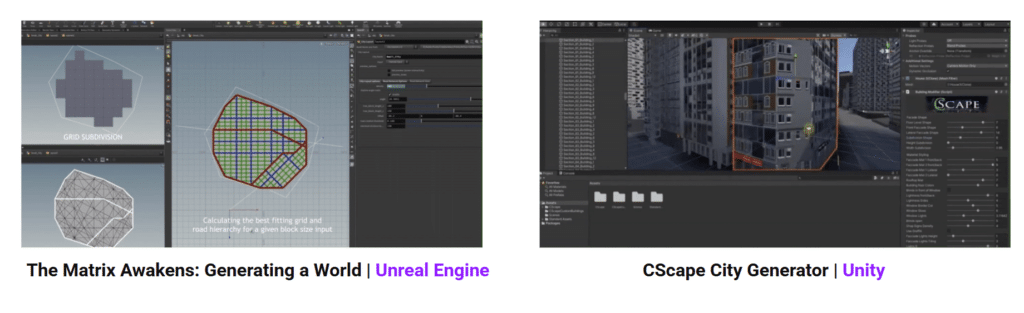

We surveyed the state of the art in the market. The examples range from companies working directly with generative AI to plugins and software that streamline the workflow for creating game environments. However, each of these examples has limitations that our proposal sets out to address.

Technical Development

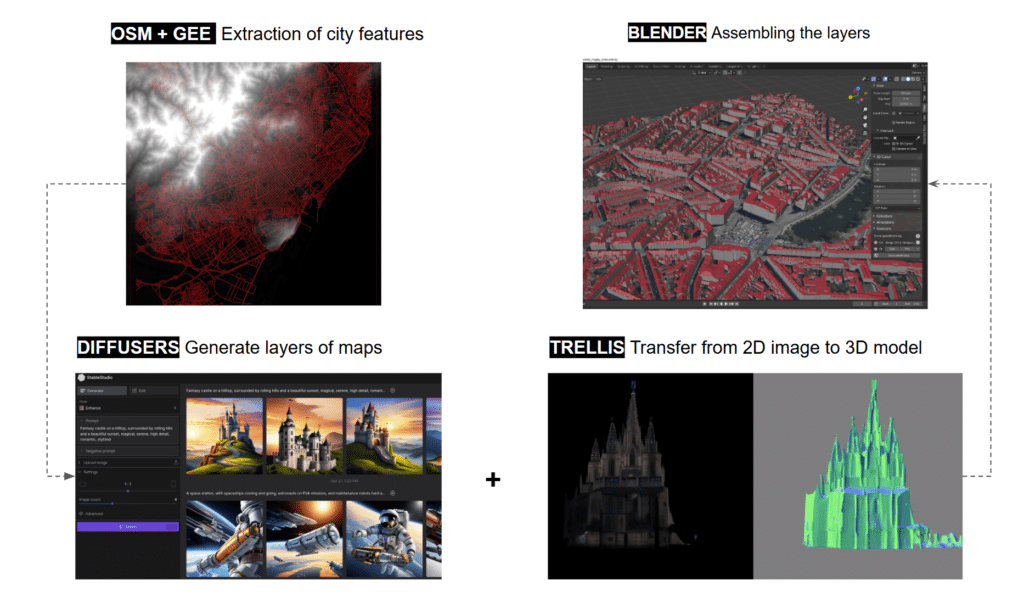

The development of our plugin draws on multiple tools that are either open source or proprietary.

Our Pipeline

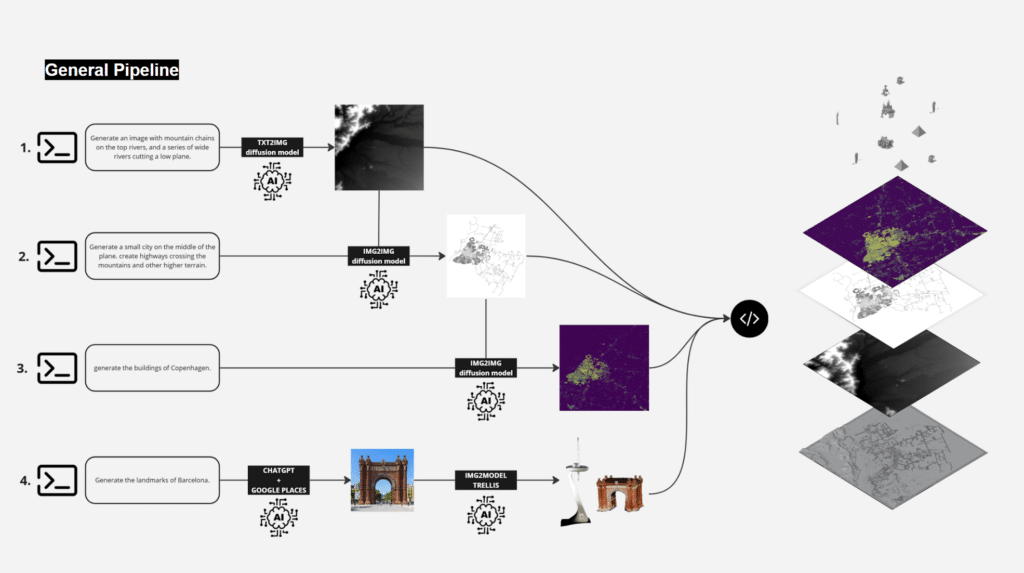

The process begins with generating a terrain map using a text-to-image (TXT2IMG) diffusion model based on a prompt describing mountain chains and river systems. Next, a small city and infrastructure such as highways are added using image-to-image (IMG2IMG) diffusion models, enhancing the terrain with urban planning features. In the third step, detailed building layouts are generated from the city’s structure. Finally, notable landmarks (e.g., from Barcelona) are retrieved using ChatGPT and Google Places, and converted into 3D assets with IMG2MODEL models (TRELLIS). Once all the layers are generated—including terrain, infrastructure, buildings, and landmarks—they are accumulated and procedurally assembled in Blender, creating a cohesive, realistic 3D environment ready for visualization or simulation.

Plug-In Simulation

How did we do it: Generations + Assembling

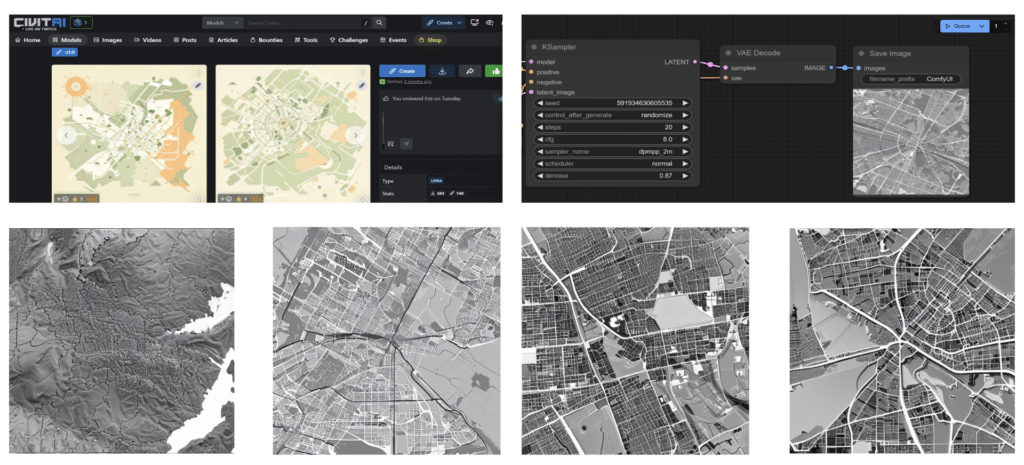

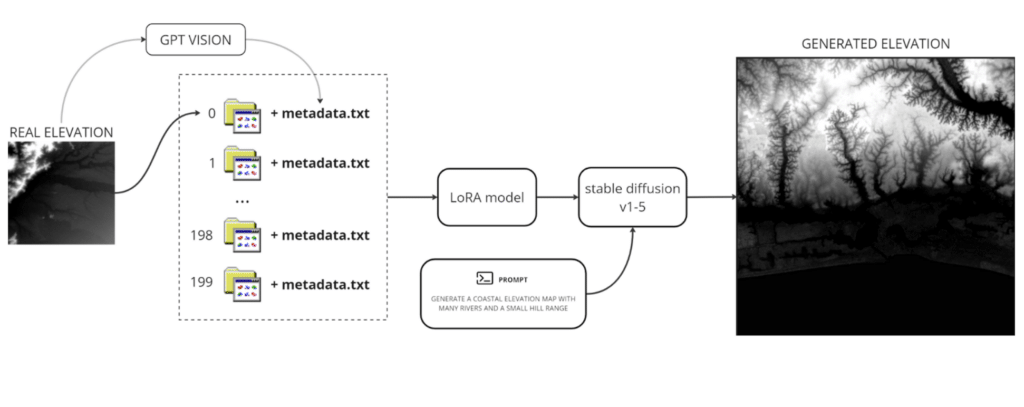

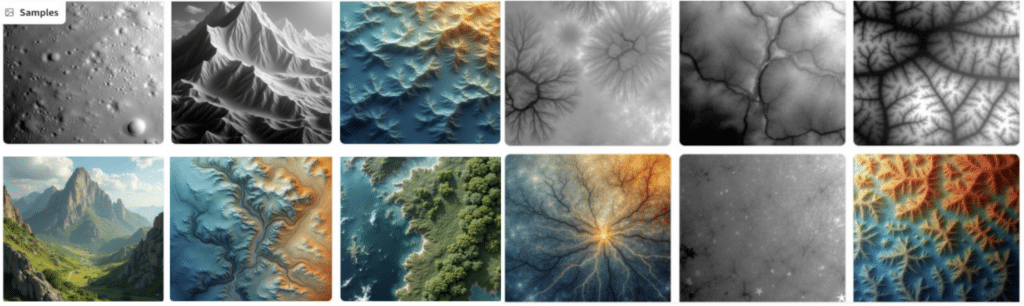

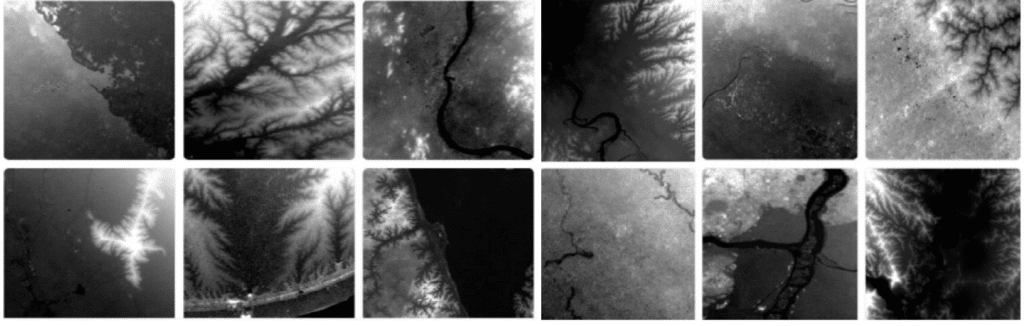

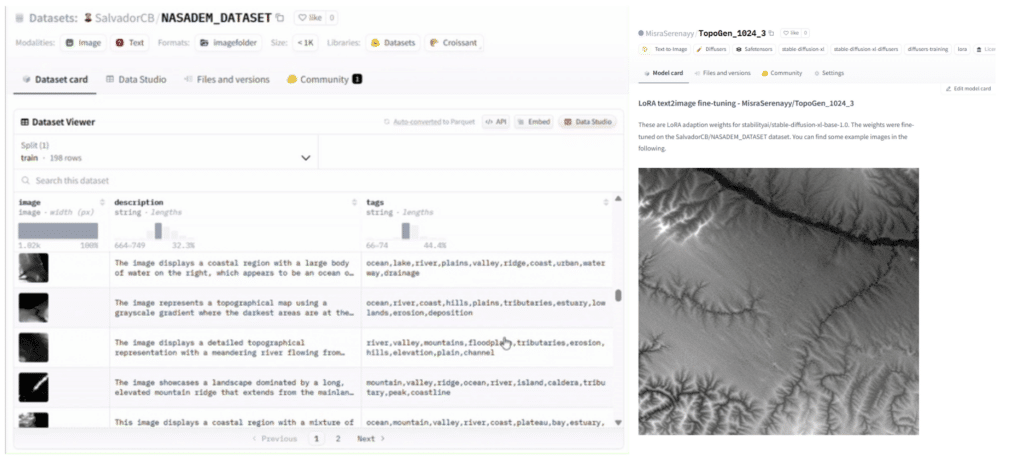

These were our first generations using a Stable Diffusion model. The results were still far from a height map that could drive a proper displacement in Blender, so we fine-tuned the model using LoRA. Real elevation data is analyzed using GPT Vision, which extracts metadata and organizes it into datasets. These datasets train a LoRA model, which, along with Stable Diffusion v1-5, generates new elevation maps from text prompts. The system enables realistic terrain generation, as in the example where a coastal elevation map with rivers and hills is created from a given prompt.
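As a rough illustration of the dataset step, the sketch below pairs each elevation tile with a caption and writes a `metadata.jsonl` file in the layout read by the Hugging Face `datasets` ImageFolder loader (one JSON object per line with a `file_name` key). The file names, the captions, and the `text` column name are assumptions made for this example, not our actual data; in our workflow the captions came from GPT Vision.

```python
import json
from pathlib import Path


def build_metadata_lines(captions):
    """Return one JSON line per image, sorted by file name for reproducibility."""
    lines = []
    for file_name in sorted(captions):
        caption = " ".join(captions[file_name].split())  # collapse stray whitespace
        if not caption:
            continue  # skip tiles without a usable caption
        lines.append(json.dumps({"file_name": file_name, "text": caption}))
    return lines


def write_metadata(captions, dataset_dir):
    """Write metadata.jsonl next to the images and return its path."""
    out_path = Path(dataset_dir) / "metadata.jsonl"
    out_path.parent.mkdir(parents=True, exist_ok=True)
    out_path.write_text("\n".join(build_metadata_lines(captions)) + "\n", encoding="utf-8")
    return out_path


# Hypothetical captions standing in for the GPT Vision output.
example_captions = {
    "tile_0002.png": "elevation map,  coastal plain with a river delta",
    "tile_0001.png": "elevation map, steep mountain chain with narrow valleys",
    "tile_0003.png": "   ",
}
```

Calling `write_metadata(example_captions, "elevation_dataset")` would produce a folder that a LoRA training script can load once the image files are placed alongside the metadata file.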

First Sampling from the LoRA training: 10 Steps

Second Sampling from the LoRA training: 800 Steps

These datasets are now available on Hugging Face and as a GitHub repo, free to explore and use for future training and model tuning.

This is a first step towards building a community around the plugin.

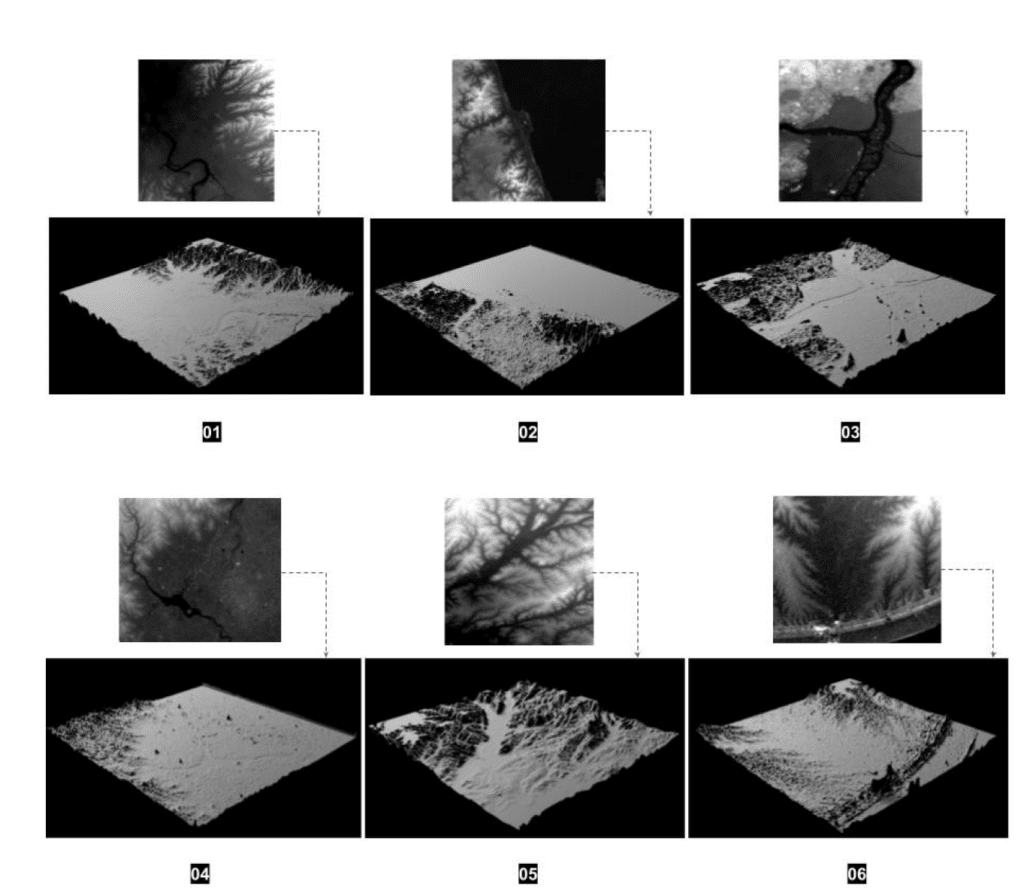

Displacement of Topographies

The generated height maps are applied as displacement textures in Blender using the Shader Editor, allowing for realistic terrain modeling. By connecting the height map to a Displacement node within a material’s shader, the elevation data translates into detailed 3D surfaces, enhancing depth and realism.
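To make the idea of displacement concrete outside of Blender, here is a minimal sketch of what it does conceptually: each pixel of the height map becomes a vertex whose height is the grey level, shifted by a midlevel and multiplied by a scale (the same two controls that Blender's Displacement node exposes). The function name, the `cell_size` parameter, and the 0-255 grey range are assumptions for illustration; in the plugin this work is done by the shader, not by Python code.

```python
def displace_grid(height_map, scale=1.0, midlevel=0.0, cell_size=1.0):
    """Turn a 2D list of grey values (0-255) into (x, y, z) vertices and quad faces."""
    rows, cols = len(height_map), len(height_map[0])
    vertices = []
    for r in range(rows):
        for c in range(cols):
            h = height_map[r][c] / 255.0   # normalise grey level to 0..1
            z = (h - midlevel) * scale     # offset along the up axis
            vertices.append((c * cell_size, r * cell_size, z))
    faces = []
    for r in range(rows - 1):
        for c in range(cols - 1):
            i = r * cols + c               # index of the quad's first corner
            faces.append((i, i + 1, i + cols + 1, i + cols))
    return vertices, faces


# A tiny 2 x 3 height map: black is low, white is high.
tiny_map = [
    [0, 128, 255],
    [0, 64, 128],
]
verts, faces = displace_grid(tiny_map, scale=10.0)
```

Raising `scale` exaggerates the relief, which is the elevation-intensity control mentioned in this section.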

The diversity of outputs is evident in the variety of landscapes generated, ranging from rugged mountains to coastal plains with intricate river networks. This method provides flexibility in terrain creation, enabling customization of elevation intensity, texture blending, and fine-tuned surface details for more dynamic and visually rich 3D environments.

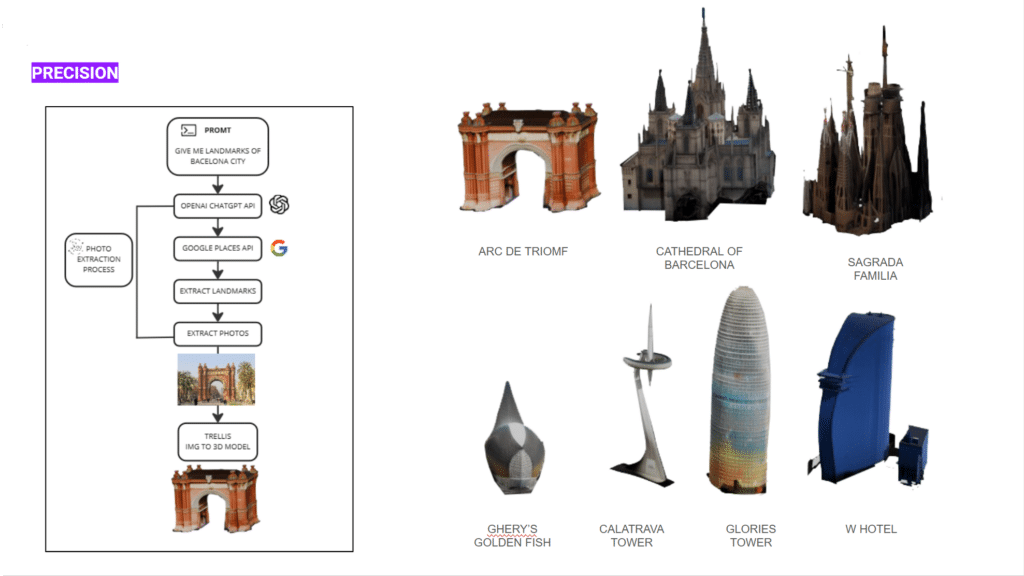

Landmark Generation

We built a landmark fetcher that helps you discover famous landmarks with just a few words—whether you type in a city name like “Show me landmarks in London” or describe something more specific like “Find me a castle with red gates and a mountain view.” It uses OpenAI’s ChatGPT to interpret your request and determine the best way to search. It then taps into the Google Places API to fetch real-time landmark data and returns images of the top matches. The entire experience was wrapped into a sleek, interactive Hugging Face app, making landmark discovery as fun as it is powerful.
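The search half of the fetcher can be sketched as follows. This is a simplified illustration that assumes the legacy Places Text Search endpoint and its JSON response fields (`results`, `name`, `formatted_address`, `photos`); the function names are hypothetical, the query string stands in for whatever ChatGPT derives from the user's request, and no network call is made here.

```python
from urllib.parse import urlencode

# Assumed endpoint: the legacy Places API Text Search.
TEXT_SEARCH_URL = "https://maps.googleapis.com/maps/api/place/textsearch/json"


def build_search_url(query, api_key):
    """Build a Text Search request URL for a free-text landmark query."""
    return TEXT_SEARCH_URL + "?" + urlencode({"query": query, "key": api_key})


def top_landmarks(payload, limit=3):
    """Pick name, address and first photo reference from a Text Search response."""
    landmarks = []
    for place in payload.get("results", [])[:limit]:
        photos = place.get("photos") or []
        landmarks.append({
            "name": place.get("name"),
            "address": place.get("formatted_address"),
            "photo_reference": photos[0].get("photo_reference") if photos else None,
        })
    return landmarks


# Made-up response fragment, shaped like a Text Search result.
sample_payload = {
    "results": [
        {"name": "Tower Bridge", "formatted_address": "London, UK",
         "photos": [{"photo_reference": "abc123"}]},
        {"name": "Big Ben", "formatted_address": "London, UK"},
    ]
}
matches = top_landmarks(sample_payload)
```

The photo references returned this way can then be exchanged for images, which are what the app shows and what the 2D-to-3D step consumes.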

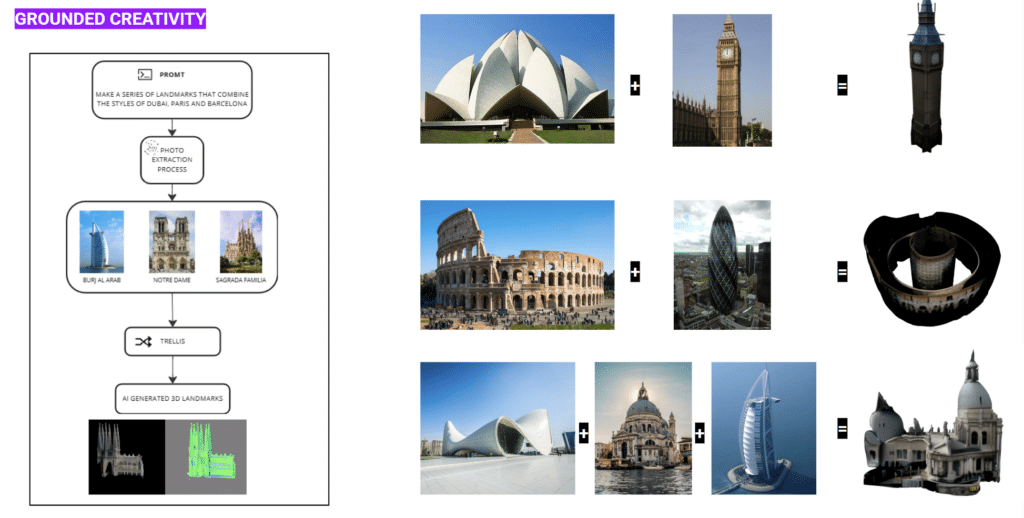

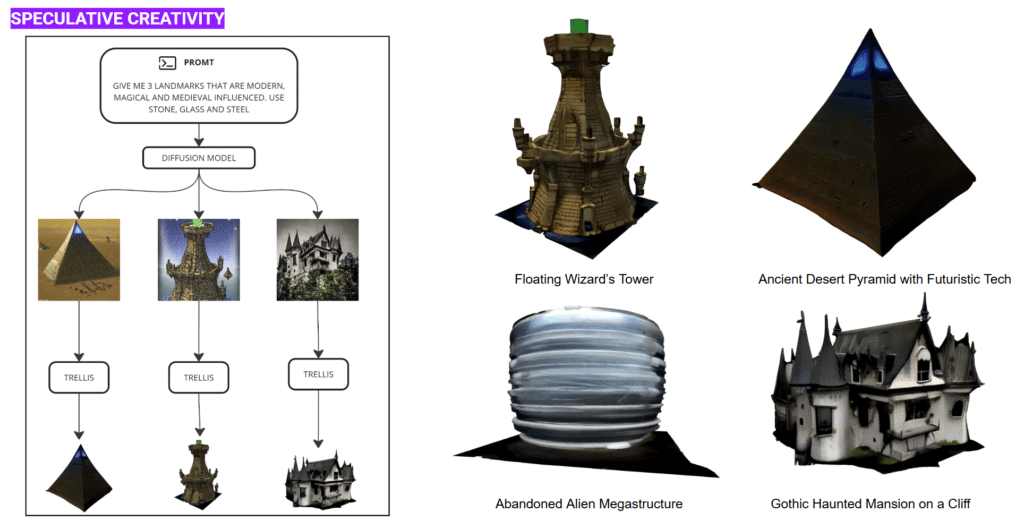

We then developed three pipelines for going from 2D images to 3D outputs using IMG2MODEL tools such as TRELLIS. We grouped them into three categories: Precision, Grounded Creativity, and Speculative Creativity.

Assembling

Using a procedural block built through visual programming in the Blender node system, we overlaid and assembled the displacements from the topography, street network, and building footprint maps. Landmarks were then scattered across the geometry. This took multiple trials, because precision was limited by the scale we were working at and by the pixel resolution of the available data.
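The precision issue is easy to see with a little arithmetic, and the layering itself can be approximated in a few lines. The sketch below is only an analogy for the node setup, not a copy of it: the function names, the rule that streets take priority over building footprints, and all numbers are assumptions chosen for illustration.

```python
def metres_per_pixel(extent_m, resolution_px):
    """Ground distance covered by one pixel of a square map."""
    return extent_m / resolution_px


def combine_layers(terrain, streets, buildings, building_height=0.1):
    """Overlay three same-sized 2D maps into one displacement map.

    terrain holds heights in 0..1; streets and buildings are 0/1 masks.
    Streets keep the terrain height; buildings are raised above it.
    """
    combined = []
    for t_row, s_row, b_row in zip(terrain, streets, buildings):
        row = []
        for t, s, b in zip(t_row, s_row, b_row):
            if b and not s:            # a street pixel wins over a footprint
                row.append(t + building_height)
            else:
                row.append(t)
        combined.append(row)
    return combined


# Hypothetical case: a 10 km wide tile rendered at 512 px.
pixel_size = metres_per_pixel(10_000, 512)   # about 19.5 m per pixel
```

At roughly 19.5 m per pixel in this hypothetical case, a single pixel is wider than most streets, so street and footprint masks cannot line up exactly with the terrain at that scale.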

How did we do it: Texturing

A series of video flythroughs was recorded in Blender, showcasing the generated terrains and environments from various angles and perspectives. These videos were then processed with a video-to-video Stable Diffusion model, enabling the application of different prompts and rendering styles to simulate diverse visual aesthetics for the game.

This approach allowed for dynamic experimentation with textures, lighting, and artistic styles, enhancing the realism and adaptability of the game’s environmental design while ensuring a more immersive and visually compelling experience.

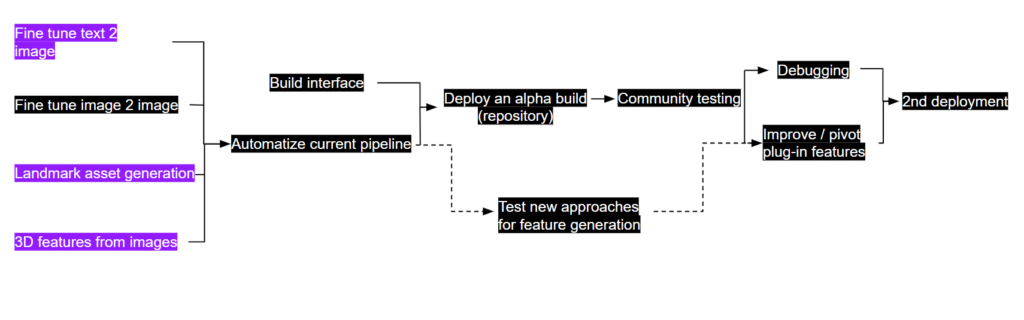

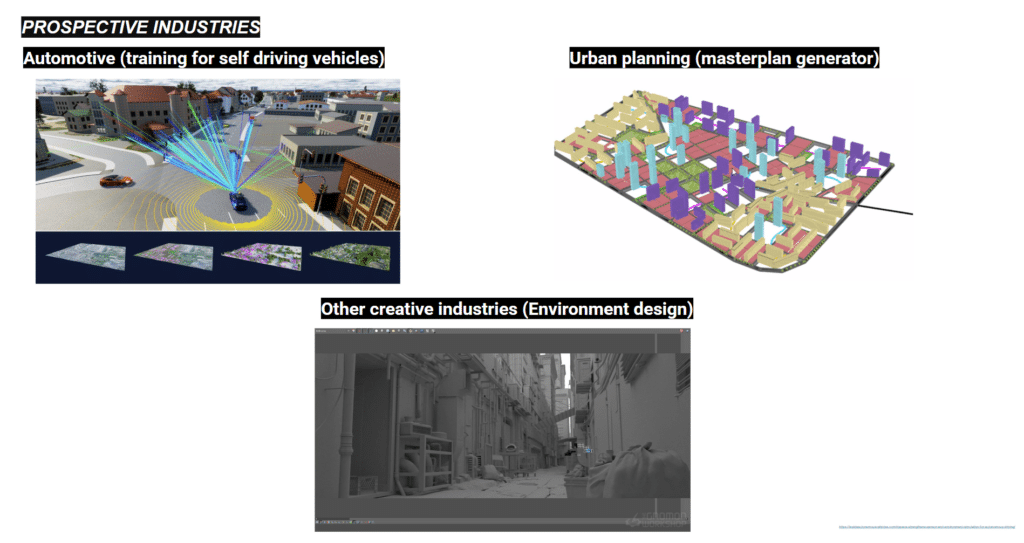

Roadmap and Prospective Industries

We drew up a roadmap for the next term that sets out the technical scope of the plug-in and its ambitions. We also listed industries outside of gaming that could benefit from GG Worlds and how it might fit into their workflows.

Closing

We are very optimistic about the prospects this project presents, from both a technical and a practical standpoint.

It addresses many of the challenges the industry faces in using GenAI for creation and design, as well as in processing available data for customized workflows and fine-tuning. We aim to make this tool open source and to open it up to tweaks and modifications from the community it is intended for.

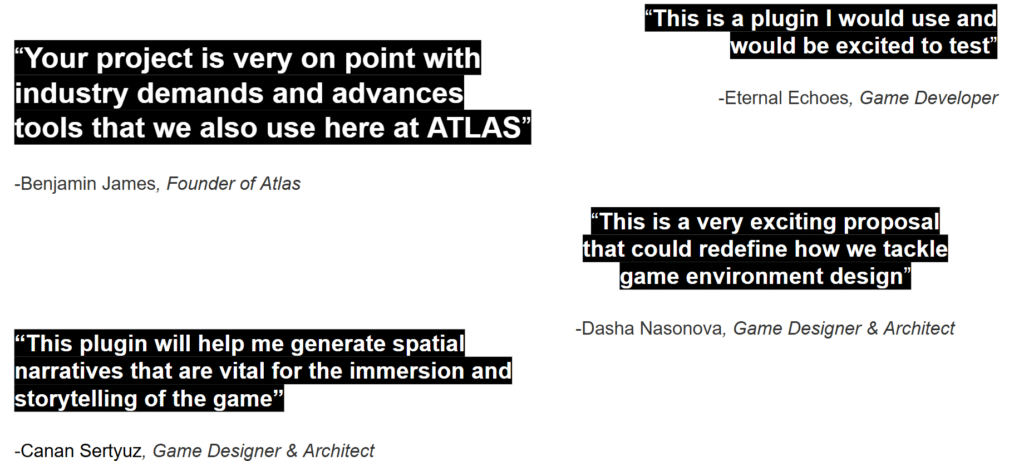

Here are a couple of reviews we got from industry experts after having presented the project to them: