Sketches in Space with AI and LoRA

What if architects could doodle inside their designs, live and in 360°?

ARchitect is a mixed-reality interface I developed for stylizing architectural spaces with expressive, cartoon-like overlays. Powered by LoRA fine-tuning and a custom-trained Flux model, ARchitect reimagines how we interact with our built environment by making architectural visualization playful, intuitive, and immersive.

The Problem

Architects still rely heavily on 2D renders, which often fail to convey spatial atmosphere, especially in immersive or interactive contexts. When it comes to storytelling, there’s a gap between the concept and the space itself.

To make these overlays meaningful in that context, we trained a custom LoRA (Low-Rank Adaptation) model.

First, we created a dataset that combines realistic architectural views with playful, cartoon-like doodles. Then we fine-tuned the Flux model on around 50 of these images, teaching it this distinctive mixed-reality style.
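A LoRA training set like this is often stored as image and caption pairs. The sketch below writes a `metadata.jsonl` file in the layout read by the Hugging Face `datasets` ImageFolder loader (one JSON object per line, with a `file_name` column pointing at an image in the same folder). The folder name, file names, captions, and the trigger phrase `archdoodle style` are all hypothetical; the post does not say which trainer or caption format was actually used.

```python
import json
from pathlib import Path

# Hypothetical trigger phrase prepended to every caption so the LoRA
# learns to associate it with the doodle-over-architecture style.
TRIGGER = "archdoodle style"


def build_manifest(pairs, out_dir):
    """Write metadata.jsonl for (image_file, description) pairs.

    Each line is a JSON object with a `file_name` column (relative to
    out_dir) and a `text` caption, as an ImageFolder-style loader expects.
    """
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    manifest = out_dir / "metadata.jsonl"
    with manifest.open("w", encoding="utf-8") as f:
        for file_name, description in pairs:
            record = {"file_name": file_name, "text": f"{TRIGGER}, {description}"}
            f.write(json.dumps(record) + "\n")
    return manifest


if __name__ == "__main__":
    # Hypothetical examples; the real dataset had around 50 such images.
    pairs = [
        ("atrium_01.png", "glass atrium with hand-drawn clouds and stick-figure visitors"),
        ("facade_02.png", "brick facade with cartoon vines and a doodled balcony"),
    ]
    path = build_manifest(pairs, "lora_dataset")
    print(path.read_text(encoding="utf-8"))
```

Keeping the trigger phrase in every caption is a common convention for style LoRAs: at inference time the same phrase switches the style on.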

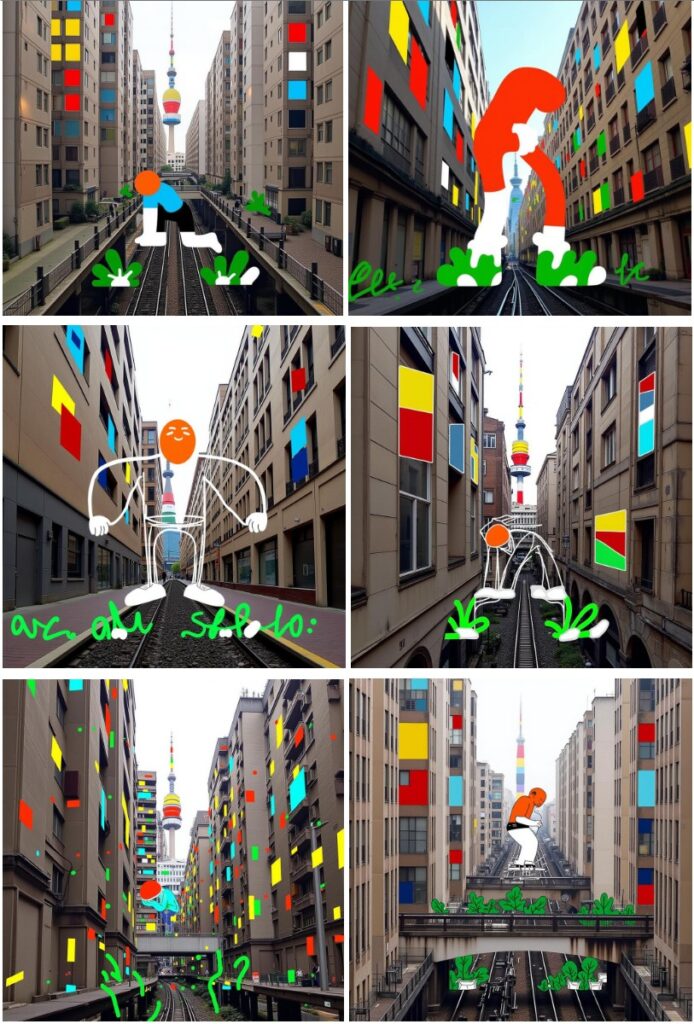
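Part of what makes fine-tuning on roughly 50 images practical is that LoRA does not retrain the full model. It keeps each pretrained weight matrix W frozen and learns a low-rank update, so an adapted layer computes W x + (α/r)·B A x, where B is d_out × r and A is r × d_in. The pure-Python sketch below shows one such layer and counts its trainable parameters. The layer size, rank, and α are illustrative only, not the settings used for the Flux fine-tune.

```python
import random


def matvec(M, x):
    """Multiply matrix M (a list of rows) by vector x."""
    return [sum(m * v for m, v in zip(row, x)) for row in M]


class LoRALinear:
    """A frozen linear layer W plus a trainable low-rank update B @ A."""

    def __init__(self, W, rank, alpha, seed=0):
        rng = random.Random(seed)
        self.W = W  # frozen pretrained weights, d_out x d_in
        d_out, d_in = len(W), len(W[0])
        self.scale = alpha / rank
        # A starts small and random, B starts at zero, so the adapter is a
        # no-op before training and the layer initially matches the base model.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]

    def forward(self, x):
        base = matvec(self.W, x)
        update = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * u for b, u in zip(base, update)]

    def trainable_params(self):
        # Only A and B are trained; W stays frozen.
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])


if __name__ == "__main__":
    d_out, d_in, rank = 64, 64, 4
    W = [[1.0 if i == j else 0.0 for j in range(d_in)] for i in range(d_out)]
    layer = LoRALinear(W, rank=rank, alpha=8)
    x = [float(i) for i in range(d_in)]
    print(layer.forward(x)[:3])  # equals W @ x at initialization
    print(layer.trainable_params(), "vs", d_out * d_in)  # 512 vs 4096
```

With rank 4, this layer trains 512 values instead of 4,096, and the saving grows with layer size.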

Initial Trials


I started with two of my own buildings.

What if I could quickly visualize how facade colors and patterns would look?

What if I wanted a doodle of a funny bridge or connection between my buildings?

You just write your idea, and the system overlays it onto your space.

This was one of our trials to test whether the model could recognize and visualize facade patterns, or even add playful structures such as a bridge connecting the two buildings.
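Because the style lives in the LoRA, the user's text only has to describe the content. Below is a minimal sketch of how such a prompt could be assembled, assuming a hypothetical trigger phrase learned during fine-tuning and a hypothetical optional `target` argument naming the part of the building being edited. `build_prompt` is an illustrative helper, not part of any library.

```python
from typing import Optional

TRIGGER = "archdoodle style"  # hypothetical trigger phrase from the training captions


def build_prompt(idea: str, target: Optional[str] = None) -> str:
    """Combine the user's free-text idea with the style trigger.

    `target` optionally names the element to edit (e.g. "facade" or
    "bridge between the two buildings") to keep the request localized.
    """
    idea = " ".join(idea.split())  # collapse stray whitespace from user input
    if not idea:
        raise ValueError("idea must not be empty")
    parts = [TRIGGER]
    if target:
        parts.append(f"on the {target.strip()}")
    parts.append(idea)
    return ", ".join(parts)


if __name__ == "__main__":
    print(build_prompt("colorful zigzag tile pattern", target="facade"))
    print(build_prompt("a wobbly cartoon rope bridge linking the two buildings"))
```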

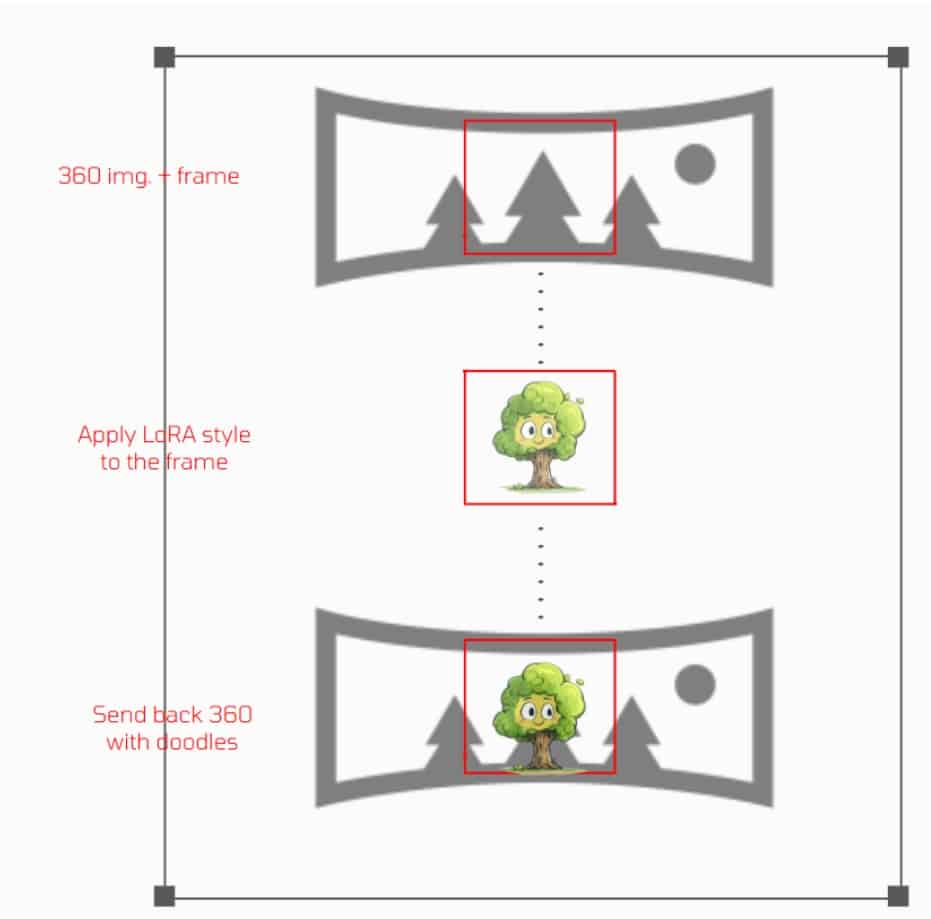

Workflow

Our next step was to turn this image-editing tool into a more immersive AR experience for the user. To do so, we built the following workflow.

1. Prompt → Generate a 360° scene

Starting from a static image, the system expands it into a full 360° panoramic view. This turns editing into an immersive experience, letting users rotate, scroll, and interact with the scene.
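A 360° panorama is commonly stored as an equirectangular image. Its horizontal axis spans 360° of yaw and its vertical axis spans 180° of pitch, so rotating or scrolling the view reduces to converting between pixel coordinates and viewing directions. The post does not say which projection the viewer uses, so the equirectangular layout, the 2:1 image size, and the helper names below are assumptions.

```python
import math


def pixel_to_direction(u, v, width, height):
    """Map an equirectangular pixel (u = column, v = row) to a unit direction.

    Yaw runs from -pi (left edge) to +pi (right edge); pitch runs from
    +pi/2 (top edge) to -pi/2 (bottom edge). Pixel centers are used.
    """
    yaw = ((u + 0.5) / width) * 2.0 * math.pi - math.pi
    pitch = math.pi / 2.0 - ((v + 0.5) / height) * math.pi
    x = math.cos(pitch) * math.sin(yaw)
    y = math.sin(pitch)
    z = math.cos(pitch) * math.cos(yaw)
    return x, y, z


def direction_to_pixel(x, y, z, width, height):
    """Inverse mapping: unit direction -> continuous (column, row)."""
    yaw = math.atan2(x, z)
    pitch = math.asin(max(-1.0, min(1.0, y)))
    u = (yaw + math.pi) / (2.0 * math.pi) * width - 0.5
    v = (math.pi / 2.0 - pitch) / math.pi * height - 0.5
    return u, v


def roll_yaw(image, degrees):
    """Rotate a panorama about the vertical axis by shifting its columns.

    For an equirectangular image, a yaw rotation is a circular horizontal
    shift (rounded to whole pixels here); positive degrees move content right.
    """
    width = len(image[0])
    shift = round(degrees / 360.0 * width) % width
    return [row[-shift:] + row[:-shift] if shift else list(row) for row in image]


if __name__ == "__main__":
    W, H = 2048, 1024  # assumed 2:1 panorama size
    d = pixel_to_direction(1536, 512, W, H)
    print([round(c, 3) for c in d])  # roughly the +x direction
    print(direction_to_pixel(*d, W, H))  # back to about (1536, 512)
```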

2. Select a frame/viewpoint

Once the scene is loaded, the user selects a specific frame. This frame is then sent to our LoRA model, which applies the doodle-style overlay based on the prompt.
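Selecting a frame can be sketched as rendering a pinhole-camera view of the panorama: for each pixel of the output frame, cast a ray through it, rotate the ray by the chosen yaw and pitch, and sample the equirectangular image where the ray lands. This nearest-neighbour version works on nested lists for clarity. The field of view, frame size, and the `extract_view` name are assumptions; a production version would vectorize the loops and interpolate.

```python
import math


def _rotate(x, y, z, yaw, pitch):
    """Rotate a camera-space ray by pitch (about x), then by yaw (about y)."""
    # Pitch: tilt up or down around the camera's horizontal axis.
    y, z = y * math.cos(pitch) + z * math.sin(pitch), -y * math.sin(pitch) + z * math.cos(pitch)
    # Yaw: turn left or right around the vertical axis.
    x, z = x * math.cos(yaw) + z * math.sin(yaw), -x * math.sin(yaw) + z * math.cos(yaw)
    return x, y, z


def extract_view(pano, yaw_deg, pitch_deg, fov_deg=90.0, out_w=64, out_h=64):
    """Render a perspective frame from an equirectangular panorama.

    pano is a list of H rows, each holding W pixel values. yaw/pitch give
    the view direction in degrees; sampling is nearest-neighbour.
    """
    H, W = len(pano), len(pano[0])
    yaw, pitch = math.radians(yaw_deg), math.radians(pitch_deg)
    f = (out_w / 2.0) / math.tan(math.radians(fov_deg) / 2.0)  # focal length in pixels
    frame = []
    for j in range(out_h):
        row = []
        for i in range(out_w):
            # Ray through the pixel center; +y is up, the camera looks along +z.
            x, y, z = i + 0.5 - out_w / 2.0, out_h / 2.0 - (j + 0.5), f
            n = math.sqrt(x * x + y * y + z * z)
            x, y, z = _rotate(x / n, y / n, z / n, yaw, pitch)
            lon = math.atan2(x, z)
            lat = math.asin(max(-1.0, min(1.0, y)))
            # Index of the panorama pixel that contains this direction.
            u = int((lon + math.pi) / (2.0 * math.pi) * W) % W
            v = min(H - 1, int((math.pi / 2.0 - lat) / math.pi * H))
            row.append(pano[v][u])
        frame.append(row)
    return frame
```

The resulting frame is an ordinary flat image, which is what an image model such as the fine-tuned Flux expects as input.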

The modified frame will then be sent back into the 360° environment, giving the user an immersive, stylized AR mockup.
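Reintegration can be sketched as the inverse of frame selection. For every panorama pixel, cast its ray into the same virtual camera, and if the ray lands inside the frame, copy the edited pixel over. The camera model mirrors a pinhole setup with the view rotated by pitch and then yaw. `paste_view` and its parameters are hypothetical, it overwrites only the region covered by the selected view without blending the seam, and the post does not confirm that the project reintegrates frames this way.

```python
import math


def paste_view(pano, frame, yaw_deg, pitch_deg, fov_deg=90.0):
    """Write an edited perspective frame back into an equirectangular panorama.

    Returns a new panorama (a list of rows); pixels outside the frame's
    field of view keep their original values. Sampling is nearest-neighbour.
    """
    H, W = len(pano), len(pano[0])
    out_h, out_w = len(frame), len(frame[0])
    yaw, pitch = math.radians(yaw_deg), math.radians(pitch_deg)
    f = (out_w / 2.0) / math.tan(math.radians(fov_deg) / 2.0)  # focal length in pixels
    cy, sy = math.cos(yaw), math.sin(yaw)
    cp, sp = math.cos(pitch), math.sin(pitch)
    result = [list(row) for row in pano]  # copy so the input is untouched
    for v in range(H):
        lat = math.pi / 2.0 - (v + 0.5) / H * math.pi
        for u in range(W):
            lon = (u + 0.5) / W * 2.0 * math.pi - math.pi
            # World-space ray for this panorama pixel.
            x = math.cos(lat) * math.sin(lon)
            y = math.sin(lat)
            z = math.cos(lat) * math.cos(lon)
            # Undo yaw, then pitch, to express the ray in camera space.
            x, z = x * cy - z * sy, x * sy + z * cy
            y, z = y * cp - z * sp, y * sp + z * cp
            if z <= 0:
                continue  # the ray points behind the camera
            # Project onto the image plane at distance f and find the frame pixel.
            i = math.floor(x / z * f + out_w / 2.0)
            j = math.floor(out_h / 2.0 - y / z * f)
            if 0 <= i < out_w and 0 <= j < out_h:
                result[v][u] = frame[j][i]
    return result
```

Looping over panorama pixels, rather than frame pixels, ensures every covered panorama pixel receives a value, so the pasted region has no holes.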

Next Steps for Interactive Editing

3. Apply LoRA-based doodle styling

4. Reintegrate into the immersive scene