Artificial Inspiration – Dissecting the Creative Process

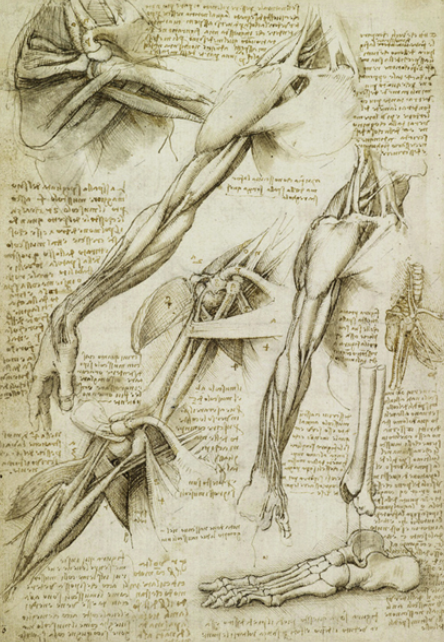

Creativity is often viewed as a uniquely human trait, one that emerges from study, interpretation, replication, and the mixing of variables into new combinations and results.

This project explores whether it is possible to build a framework on these principles that AI can reproduce, and whether doing so would make the AI creative. If creativity comes from study, interpretation, replication, and the mixing of variables in search of new combinations, then these steps can, in principle, be delegated to AI models. We designed a workflow that uses Large Language Models (LLMs) and diffusion models to analyze a given context and produce a creative result derived from what the AI has learned. The workflow starts from a detailed description of a context or theme; the LLM analyzes it and extracts key elements, themes, and styles; the diffusion model then generates visual content based on these insights.

Finally, we assess the generated content for originality and relevance to the provided context. By replicating this workflow, we aim to question whether replacing the steps that make up this version of the creative process with AI yields something that can genuinely be considered “creative.”

Theme: The Chair & The Architect

We chose the chair as the theme of our project. Chairs, as extensions of design from space down to the object, have long fascinated architects. They encapsulate the essence of design, merging utility and aesthetics, and offer a focused study of morphology, materiality, and spatial harmony.

Subject: Design as an Extension of Architecture

In addition, architecture often guides designers in creating product lines that reflect specific styles. Combining architectural principles with furniture design produces cohesive and attractive settings. By focusing on the chair in our AI-driven project, we emphasize the relationship between space and individual objects, and explore how AI can contribute to the design of a chair and, through it, to the overall space.

Digital Process

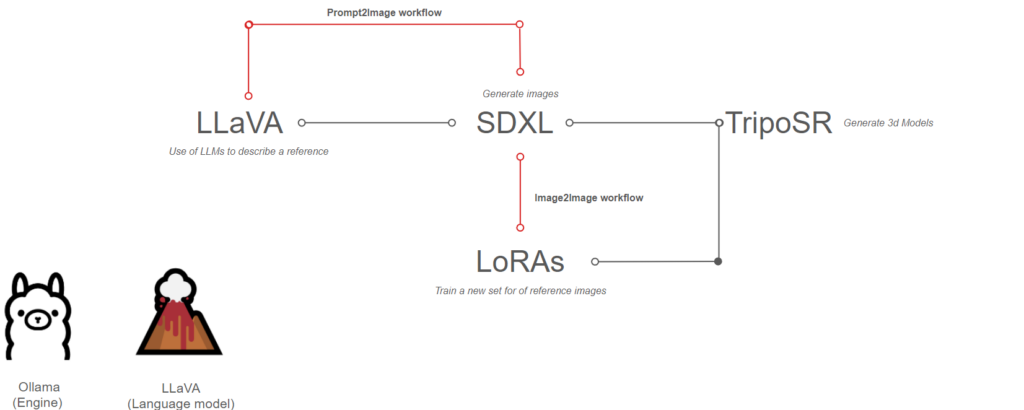

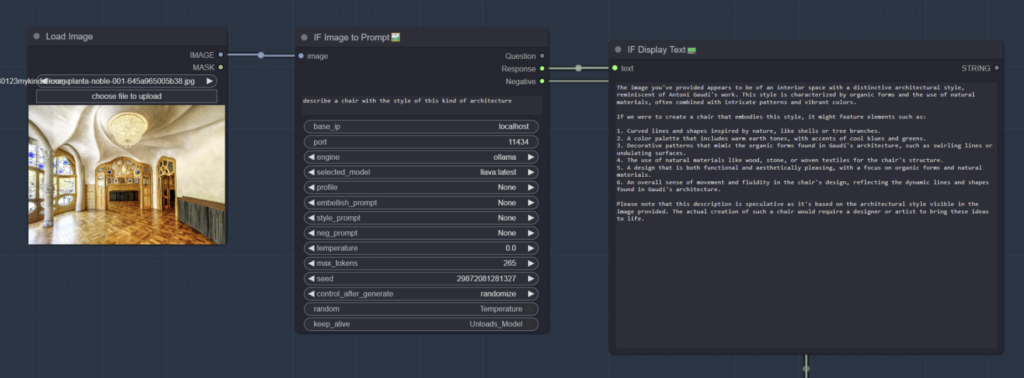

First, we use a Large Language Model (LLM) as an image description engine: specifically, the LLaVA vision-language model, run locally through Ollama. It generates descriptive prompts from reference images, which are then used in the subsequent steps.
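
A minimal sketch of this description step, assuming a local Ollama server on its default port (11434) with the `llava` model already pulled. It calls Ollama's `/api/generate` endpoint, which accepts base64-encoded images for multimodal models. The instruction text in `DESCRIBE_PROMPT` is hypothetical, not the wording we used.

```python
import base64
import json
import urllib.request

# Assumption: default local Ollama endpoint, no authentication.
OLLAMA_URL = "http://localhost:11434/api/generate"

# Hypothetical instruction; replace with your own description prompt.
DESCRIBE_PROMPT = (
    "Describe the architectural space in this image: its forms, materials, "
    "structure, light and ornament."
)


def build_describe_request(image_bytes: bytes, model: str = "llava") -> dict:
    """Build the JSON body for a non-streaming Ollama /api/generate call."""
    return {
        "model": model,
        "prompt": DESCRIBE_PROMPT,
        # Ollama expects images as base64-encoded strings.
        "images": [base64.b64encode(image_bytes).decode("ascii")],
        "stream": False,
    }


def describe_image(image_path: str, url: str = OLLAMA_URL) -> str:
    """Send one reference image to LLaVA via Ollama and return its description."""
    with open(image_path, "rb") as f:
        body = json.dumps(build_describe_request(f.read())).encode("utf-8")
    req = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req) as resp:
        # With "stream": False the server replies with a single JSON object.
        return json.loads(resp.read())["response"]
```

Disabling streaming keeps the sketch simple: the whole description arrives in one response instead of a sequence of partial JSON lines.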

Next, we use these prompts to guide image generation with the Stable Diffusion XL (SDXL) model. In addition, we generate a further set of images using LoRAs trained on the reference images. For this, we use a LoRA trainer together with an image captioner, the GPT Text Sampler.
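
LoRA trainers typically need each training image paired with a caption. The sketch below assumes a common convention (a `.txt` file with the same stem next to each image) and a trigger token prepended to every caption; the captions dictionary stands in for the captioner's output, and `write_lora_captions` and the default trigger `gaudi_style` are hypothetical names, not part of any specific trainer.

```python
from pathlib import Path

IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".webp"}


def write_lora_captions(dataset_dir, captions, trigger="gaudi_style"):
    """Write one caption .txt next to each image, prefixed with a trigger token.

    `captions` maps an image file name to the text produced by the captioner.
    Returns the list of caption files written.
    """
    written = []
    for image in sorted(Path(dataset_dir).iterdir()):
        if image.suffix.lower() not in IMAGE_SUFFIXES:
            continue  # ignore non-image files in the dataset folder
        caption = captions.get(image.name)
        if caption is None:
            continue  # skip images the captioner has not described yet
        txt = image.with_suffix(".txt")
        txt.write_text(f"{trigger}, {caption.strip()}\n", encoding="utf-8")
        written.append(txt)
    return written
```

The trigger token gives the trained LoRA a word to associate with the reference style, so it can be invoked explicitly in later SDXL prompts.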

Lastly, we use TripoSR to generate 3D models from the images produced by SDXL, turning the 2D images into detailed 3D models and extending the project from images to objects.
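
A sketch of invoking TripoSR from Python, assuming a local clone of the TripoSR repository in a folder named `TripoSR` and its example script `run.py`. The flag names (`--output-dir`, `--model-save-format`) are as we recall them from the repository; confirm them with `python run.py --help` in your checkout. The helper names are hypothetical.

```python
import subprocess
import sys
from pathlib import Path


def triposr_command(image_path, output_dir, repo_dir="TripoSR", fmt="obj"):
    """Build the command line for TripoSR's run.py on a single image.

    Assumption: run.py accepts an image path plus --output-dir and
    --model-save-format; verify against your copy of the repository.
    """
    return [
        sys.executable,
        str(Path(repo_dir) / "run.py"),
        str(image_path),
        "--output-dir", str(output_dir),
        "--model-save-format", fmt,
    ]


def run_triposr(image_path, output_dir, repo_dir="TripoSR"):
    """Run the reconstruction; raises CalledProcessError if it fails."""
    subprocess.run(triposr_command(image_path, output_dir, repo_dir), check=True)
```

Building the command separately from running it makes it easy to log or batch the calls for every generated chair image.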

Use of LLMs

As mentioned earlier, we use Ollama to run the LLaVA model within our workflow. This lets us generate descriptions of images of spaces designed by various architects. From these descriptions, we then create prompts for producing furniture pieces with SDXL image generation.
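
One way to turn a description into an SDXL prompt is a fixed template filled with the architect's name and the first few descriptive clauses. This is a minimal sketch; the template wording, `chair_prompt`, and the clause-splitting rule are illustrative assumptions rather than the prompts we actually used.

```python
import re

# Hypothetical template; the wording is illustrative only.
CHAIR_TEMPLATE = (
    "product photo of a single chair inspired by {architect}, {features}, "
    "studio lighting, plain background"
)


def chair_prompt(architect: str, description: str, max_features: int = 6) -> str:
    """Turn a LLaVA description of a space into an SDXL prompt for a chair."""
    # Split the description into short clauses and keep the first few.
    clauses = [c.strip() for c in re.split(r"[.;\n]", description) if c.strip()]
    features = ", ".join(c.lower() for c in clauses[:max_features])
    return CHAIR_TEMPLATE.format(architect=architect, features=features)
```

For example, `chair_prompt("Antoni Gaudí", "Curved stone columns. Colourful mosaic tiles; organic forms.")` returns a prompt containing "curved stone columns, colourful mosaic tiles, organic forms". Asking for a plain background is a deliberate choice here: it makes the later background-removal step easier.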

Generating a New Result

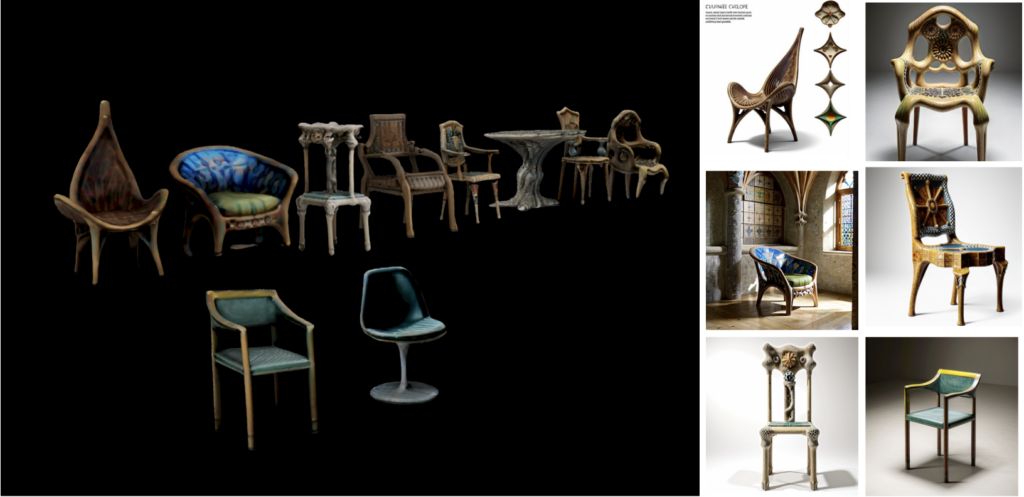

Antoni Gaudí

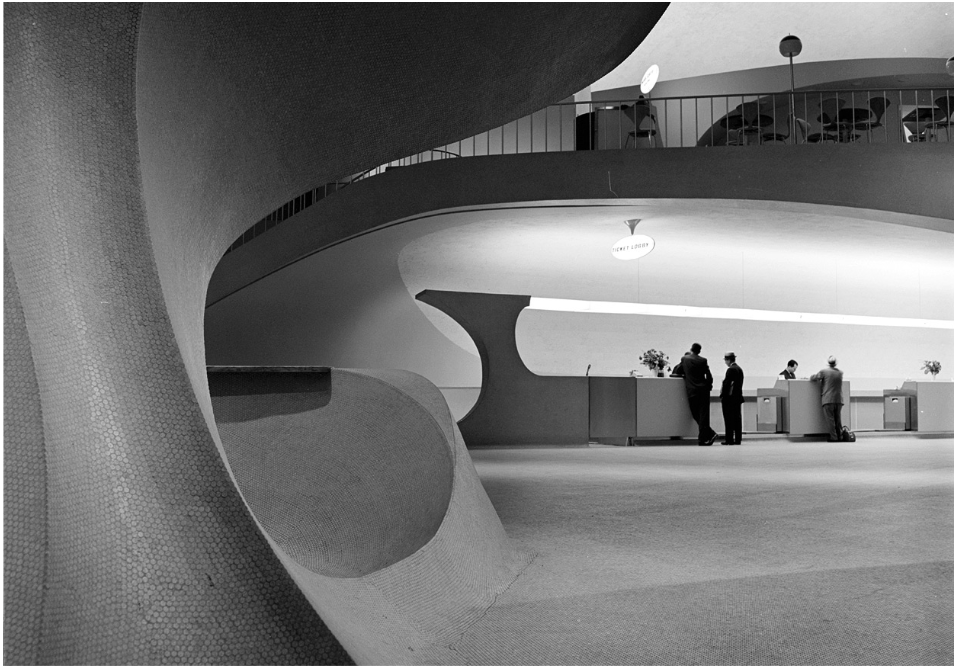

Eero Saarinen

Carlo Scarpa

Generating 3D Models

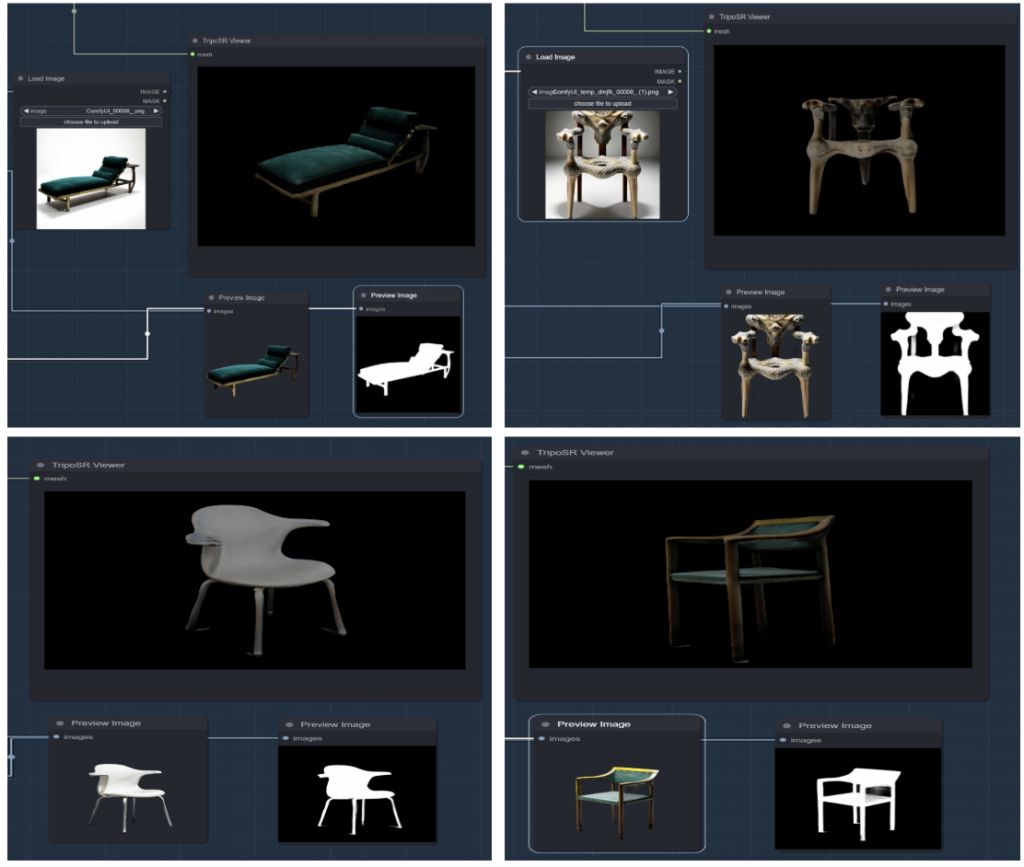

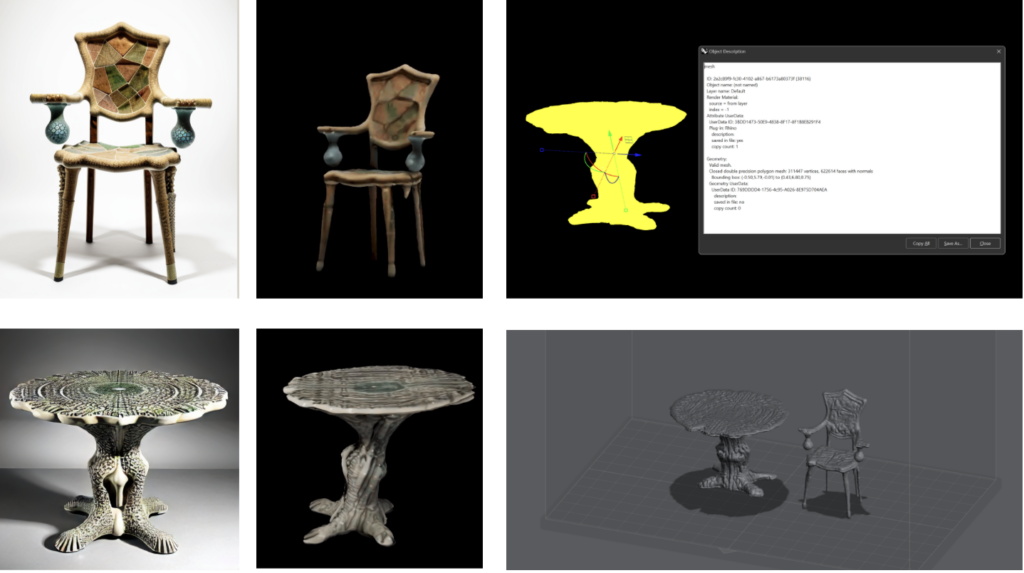

Once the furniture images had been generated, we could begin turning the designs into 3D models. This requires some preparation of the generated images to achieve the best results: we removed the background from each image using masks. TripoSR, the final step in our digital process, then generates detailed 3D models from these prepared images.
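
The masking step can be sketched as: composite the object onto a flat background using a soft mask, crop to the object's bounding box, and pad to a centred square. This is a dependency-free sketch on nested lists of RGB tuples (a real pipeline would use an image library); the neutral grey background and square framing are assumptions on our part, broadly similar to the preprocessing TripoSR's example script performs as far as we understand it.

```python
def composite_on_grey(pixels, mask, grey=128):
    """Replace background pixels with flat grey using a soft mask in [0, 1]."""
    out = []
    for row_px, row_m in zip(pixels, mask):
        out.append([
            tuple(round(a * c + (1 - a) * grey) for c in px)
            for px, a in zip(row_px, row_m)
        ])
    return out


def crop_to_mask(pixels, mask, threshold=0.5):
    """Crop the image to the bounding box of mask values above `threshold`."""
    rows = [y for y, r in enumerate(mask) if any(a > threshold for a in r)]
    if not rows:
        raise ValueError("mask is empty")
    cols = [x for x in range(len(mask[0])) if any(r[x] > threshold for r in mask)]
    return [r[cols[0]:cols[-1] + 1] for r in pixels[rows[0]:rows[-1] + 1]]


def pad_to_square(pixels, fill=(128, 128, 128)):
    """Pad with a fill colour so the object sits centred in a square image."""
    h, w = len(pixels), len(pixels[0])
    side = max(h, w)
    top, left = (side - h) // 2, (side - w) // 2
    canvas = [[fill] * side for _ in range(side)]
    for y, row in enumerate(pixels):
        canvas[top + y][left:left + w] = row
    return canvas
```

Cropping before padding keeps the chair as large as possible in the frame, which gives the reconstruction model more pixels of the object itself.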

Finally, we made sure these models could be exported as closed, double-precision polygon meshes suitable for 3D printing.
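
A basic test for a closed mesh is that every edge is shared by exactly two faces. The sketch below checks this on a list of faces given as vertex-index tuples; `is_closed_mesh` is a hypothetical helper, and mesh libraries such as trimesh expose a comparable check through the `is_watertight` property.

```python
from collections import Counter


def is_closed_mesh(faces):
    """Return True if every edge is shared by exactly two faces.

    `faces` is a list of vertex-index tuples (triangles or polygons). This is a
    necessary condition for a watertight, printable mesh, but it does not
    detect self-intersections or inconsistent face orientation.
    """
    edges = Counter()
    for face in faces:
        for i in range(len(face)):
            a, b = face[i], face[(i + 1) % len(face)]
            # Store edges undirected so (a, b) and (b, a) count together.
            edges[(min(a, b), max(a, b))] += 1
    return bool(edges) and all(n == 2 for n in edges.values())
```

For a tetrahedron, `[(0, 1, 2), (0, 3, 1), (0, 2, 3), (1, 3, 2)]` passes; removing any one face leaves three boundary edges, and the check fails.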

This completes our workflow from a reference image to a physical object. The workflow alone is not enough to determine whether AI can be considered truly creative; nonetheless, what AI accomplishes in this process makes it a strong basis for debate on the topic.