DF Predictor aims to revolutionize daylight prediction in architectural design by using the conditional generative adversarial network (cGAN) Pix2Pix to predict daylight factors, motivated by the need for more efficient and accurate daylight analysis. Daylight factor analysis is an integral part of the early design stages and is mandated by building codes, but it is typically time-consuming because it relies on ray-tracing simulations. DF Predictor addresses this by providing fast daylight factor analysis in Revit via Rhino.Inside, so that results can keep up with changes to the model.

We chose to predict the daylight factor rather than other daylight metrics because of its simplicity: it ignores direct sunlight and does not depend on latitude, orientation, or climate. Because it represents a worst-case scenario under an overcast sky, it is particularly suitable for evaluating daylighting, and its effect on health and well-being, in countries with limited winter daylight. This makes it ideal for high-latitude regions such as the Nordic countries and the UK.
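For reference, the daylight factor at a point is the ratio of the indoor illuminance to the simultaneous outdoor horizontal illuminance under an unobstructed overcast sky, expressed as a percentage. The minimal sketch below applies that definition to a few sensor points; the function name and sample values are illustrative only.

```python
def daylight_factor(indoor_lux, outdoor_lux):
    """Return the daylight factor (%) for each indoor illuminance value.

    indoor_lux:  illuminance at interior sensor points (lux)
    outdoor_lux: simultaneous horizontal illuminance outdoors under an
                 unobstructed overcast sky (lux)
    """
    if outdoor_lux <= 0:
        raise ValueError("outdoor illuminance must be positive")
    return [100.0 * e_in / outdoor_lux for e_in in indoor_lux]


# Illustrative values: 10,000 lux outside, three sensor points inside.
print(daylight_factor([50.0, 150.0, 400.0], 10_000.0))  # [0.5, 1.5, 4.0]
```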

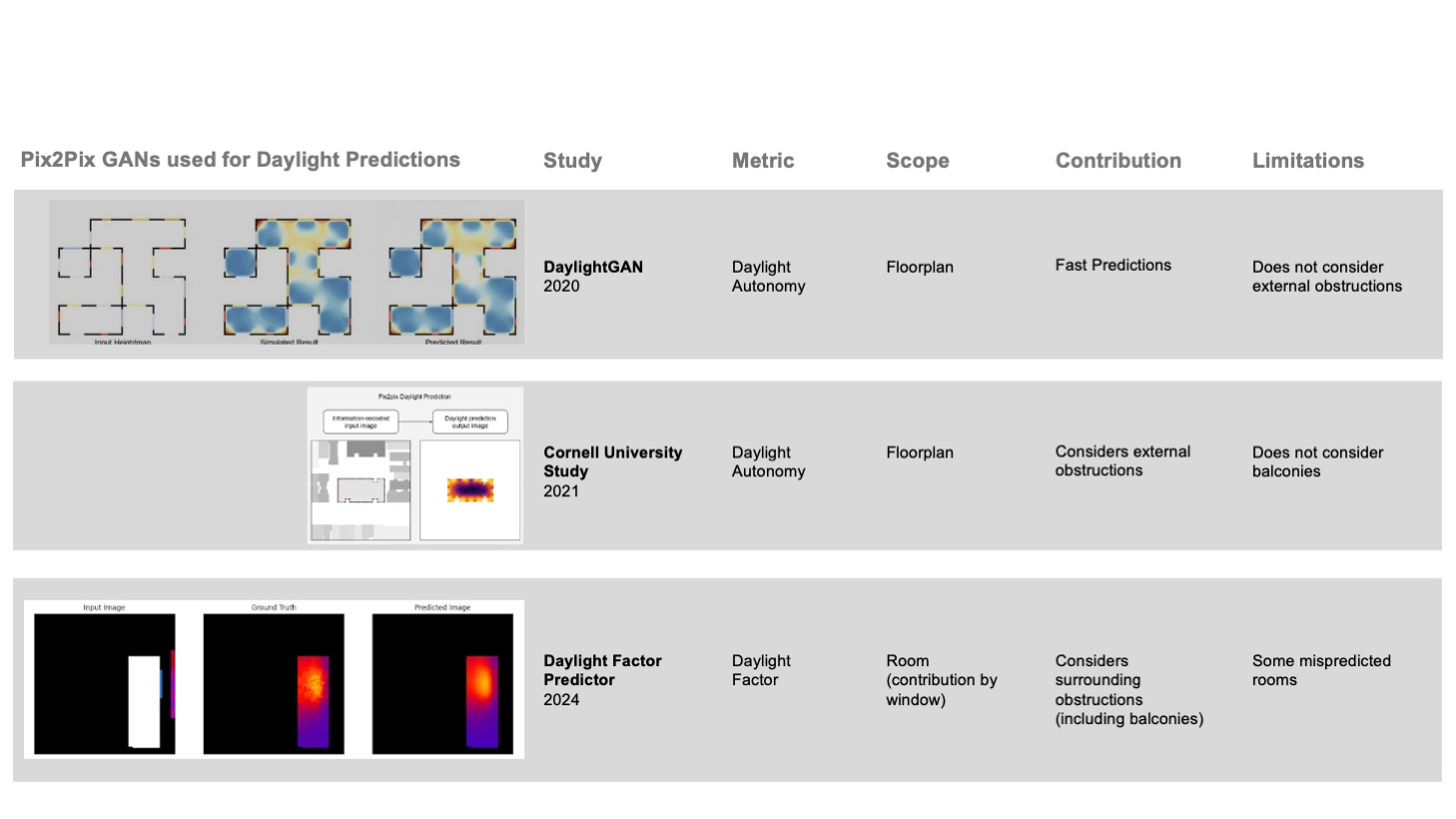

State of the art

In recent years, significant advances have been made in daylight prediction using machine learning. DaylightGAN predicts daylight autonomy for entire floor plans, providing fast predictions without considering external obstructions. A study from Cornell University used Pix2Pix GANs for entire floor plans, considering external obstructions but not balconies. Our project takes a more detailed approach by predicting daylight factors room by room. We encode each window’s contribution separately and consider all surrounding obstructions, including balconies, with the aim of achieving higher efficiency and accuracy. Although the final model is accurate overall, it still mispredicts some rooms (see Validation).

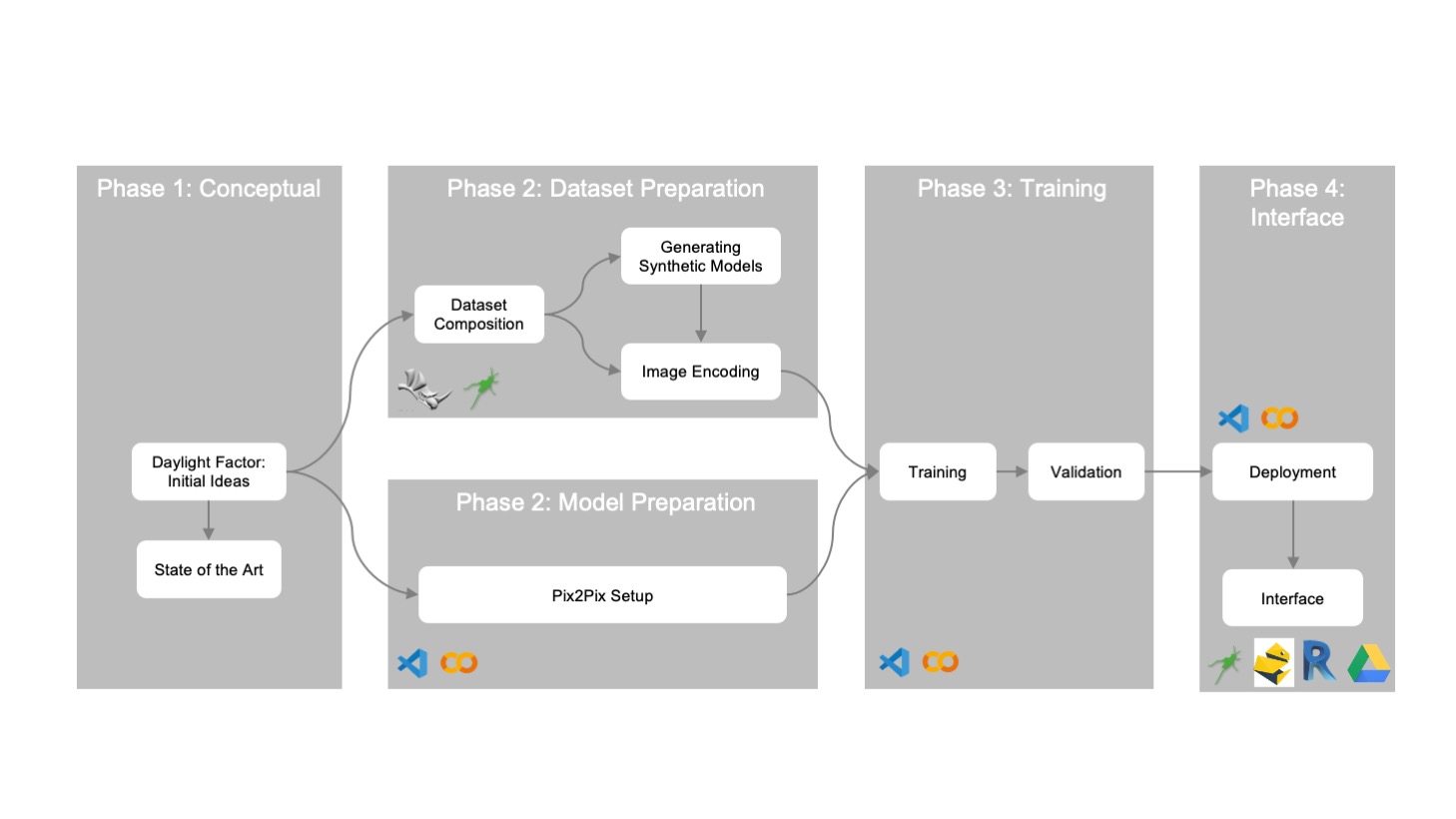

Methodology

Our workflow started with initial ideas on daylight factor analysis: reviewing precedent projects and evaluating which inputs affect the result. We then worked on dataset preparation and the Pix2Pix model in parallel, which taught us a lot about what Pix2Pix requires and what kinds of images we could feed it. Next, we prepared the dataset accordingly, generating input and ground-truth images. After training and validating the model, we moved on to the final stage of the project: deploying the model and building a user-friendly interface for our target users.

Features

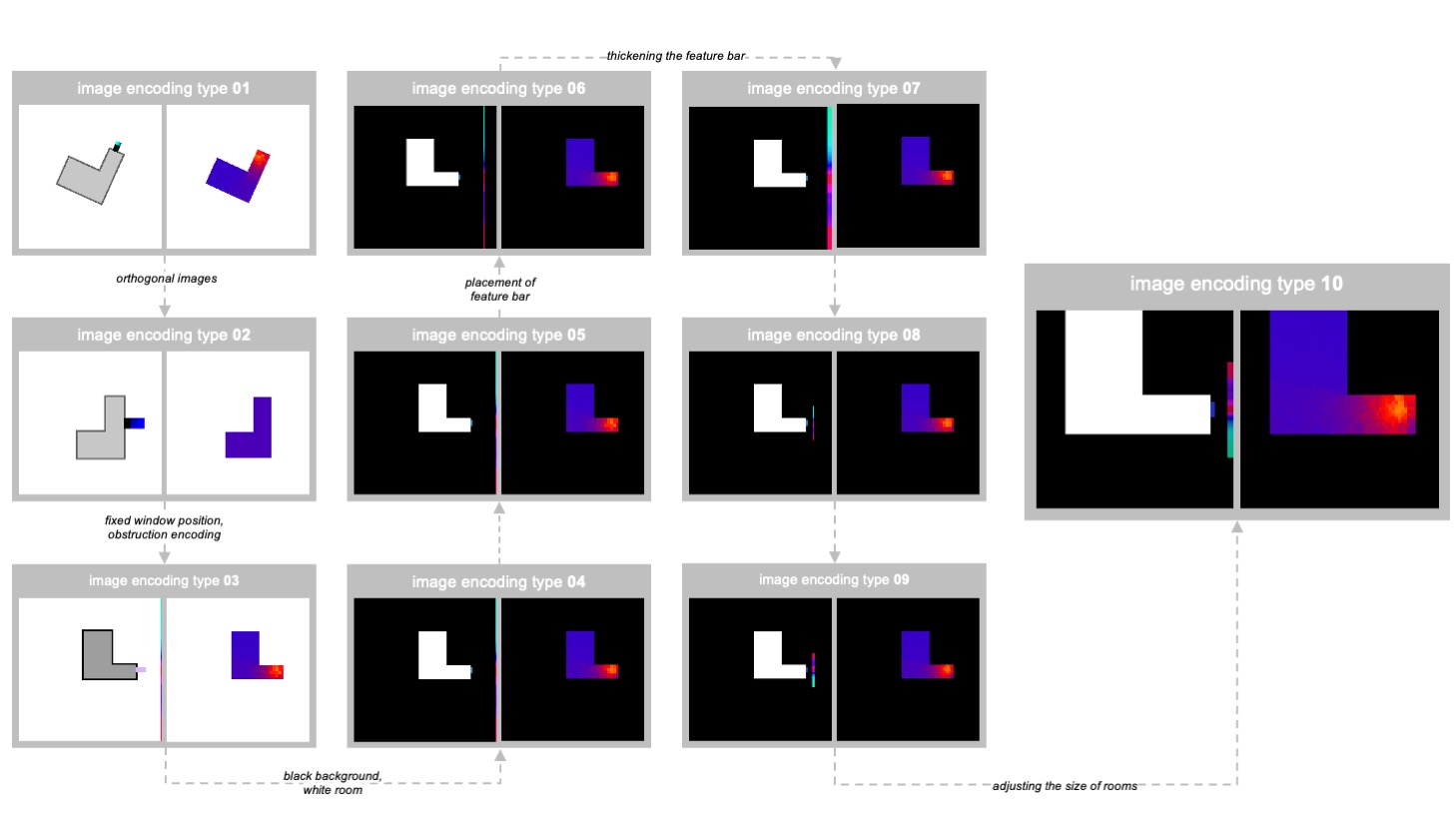

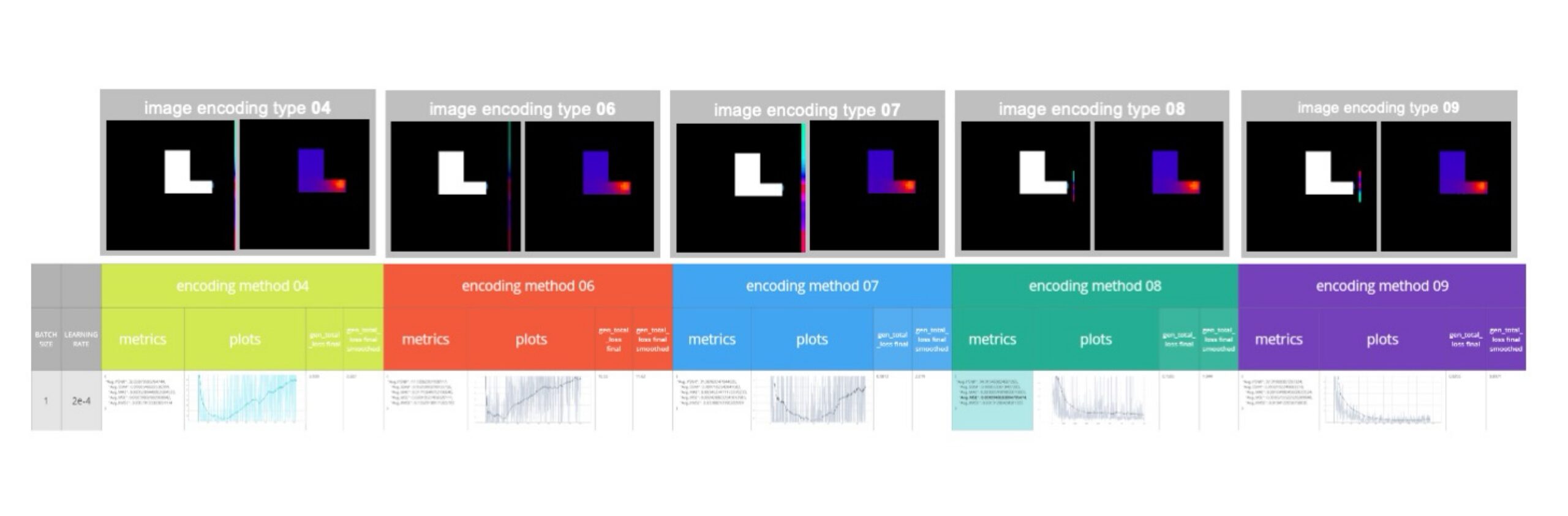

Given that the daylight factor is a purely geometric metric, the most important thing our encoding must describe is the view of the sky from different parts of the room. To simplify the model, we encode each room with one window at a time for training. Fortunately, the daylight factor for multiple windows is obtained simply by summing the contributions of the individual windows. In the first stage, we encoded the room as it is in the Rhino model, with the façade width and a simple encoding of the façade. Then, by trial and error, we added or edited one feature at a time. Among other changes, we applied orthogonal rotation, fixed the window position, introduced band encoding for the obstructions, tested different variations of the obstruction bar, and increased the room size.
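Because the contributions of separate windows add up, per-window predictions can be combined point by point. A minimal NumPy sketch, assuming each window's prediction has already been decoded into a DF grid of the same shape and aligned to the same room coordinates (undoing any rotation applied during encoding is not shown), with cells outside the room marked as NaN:

```python
import numpy as np


def combine_window_predictions(per_window_df):
    """Sum per-window DF grids (%) into a single room DF grid.

    per_window_df: list of 2D arrays, one per window, all the same shape.
    Cells outside the room are expected to be NaN and stay NaN in the sum.
    """
    stack = np.stack(per_window_df, axis=0)  # shape: (n_windows, rows, cols)
    return stack.sum(axis=0)                 # NaN propagates for masked cells


window_a = np.array([[2.0, 1.0], [0.5, np.nan]])
window_b = np.array([[0.3, 0.6], [0.9, np.nan]])
room = combine_window_predictions([window_a, window_b])
```

Keeping the outside-of-room cells as NaN (rather than 0) means a later median or average over the room can use `np.nanmedian` / `np.nanmean` without the background skewing it.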

Our resulting encoded features are:

- room shape;
- window width;
- window height, encoded in the lightness channel;
- sill height, encoded in the hue channel;
- frame factor, encoded in the saturation channel;
- façade width, also made explicit by the window sill;
- obstruction angles from below, obtained by intersecting rays cast from the window with the surrounding buildings and encoded in the hue channel of the band on the right of the image;
- obstructions from above, that is, from balconies, encoded in the lightness channel.
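To illustrate how three window properties can share one pixel colour, here is a minimal sketch using Python's `colorsys` HLS conversion. The normalisation ranges, the hue cap, and the lightness band are hypothetical choices for illustration; the project's actual ranges are not stated here.

```python
import colorsys

# Hypothetical normalisation ranges (not the project's documented values).
SILL_RANGE_M = (0.0, 1.5)     # sill height (m)   -> hue
HEIGHT_RANGE_M = (0.5, 3.0)   # window height (m) -> lightness
MAX_HUE = 0.8                 # stay below 1.0 so the hue does not wrap back to red


def _normalise(value, lo, hi):
    """Clamp value to [lo, hi] and rescale it to [0, 1]."""
    return min(max((value - lo) / (hi - lo), 0.0), 1.0)


def encode_window_colour(sill_m, height_m, frame_factor):
    """Encode sill height, window height and frame factor as one RGB colour."""
    hue = MAX_HUE * _normalise(sill_m, *SILL_RANGE_M)
    # Keep lightness in [0.2, 0.8]: at 0 or 1 every hue collapses to black or
    # white, which would erase the sill-height information.
    lightness = 0.2 + 0.6 * _normalise(height_m, *HEIGHT_RANGE_M)
    saturation = min(max(frame_factor, 0.0), 1.0)  # assumes frame factor in 0..1
    r, g, b = colorsys.hls_to_rgb(hue, lightness, saturation)
    return tuple(round(c * 255) for c in (r, g, b))
```

The same caveat applies to saturation: at 0 the hue becomes unrecoverable (the colour is grey), so a real encoding would need a saturation floor if frame factors near 0 can occur.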

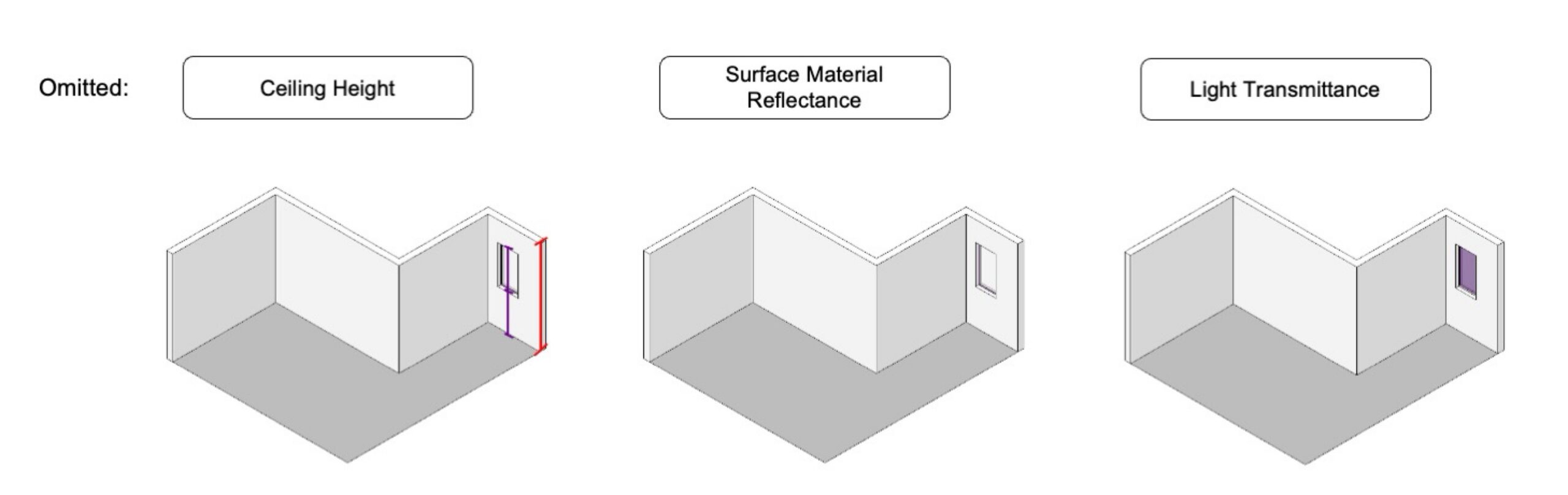

Ceiling height is omitted because the height that matters is the window head height, which is encoded implicitly as the sum of sill height and window height.
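The obstruction angles from below can be illustrated with a short sketch. It assumes the ray casting against surrounding buildings has already been done in Rhino, so that each horizontal ray reports the distance to, and the height above the window reference point of, the highest obstruction it hits. The linear 0 to 90 degree to hue mapping is a hypothetical scale, not the project's documented one.

```python
import math


def obstruction_angles(hits):
    """Return the obstruction elevation angle (degrees) for each ray.

    hits: one entry per horizontal ray cast from the window reference point.
          Each entry is (distance_m, height_above_reference_m) of the highest
          intersection with surrounding buildings, or None when nothing is hit.
    """
    angles = []
    for hit in hits:
        if hit is None:
            angles.append(0.0)  # unobstructed view down to the horizon
            continue
        distance, height = hit
        # Elevation of the obstruction's top edge; obstructions lying below
        # the reference point are treated as no obstruction.
        angles.append(max(math.degrees(math.atan2(height, distance)), 0.0))
    return angles


def angle_to_hue(angle_deg, max_hue=0.8):
    """Map 0..90 degrees linearly to a hue in 0..max_hue (hypothetical scale)."""
    return max_hue * min(max(angle_deg, 0.0), 90.0) / 90.0


angles = obstruction_angles([None, (10.0, 10.0), (20.0, -2.0)])
hues = [angle_to_hue(a) for a in angles]
```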

Dataset

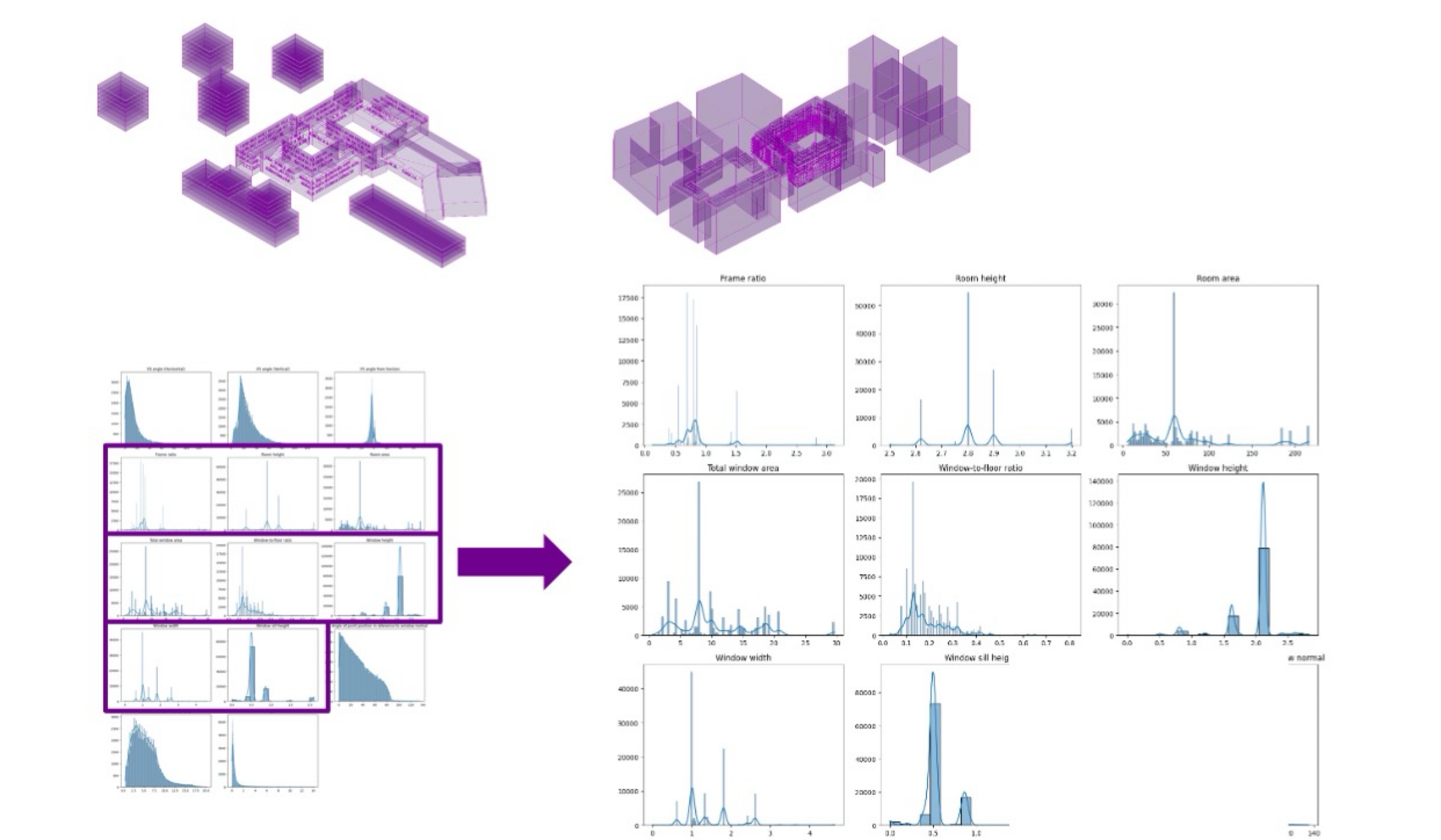

Initially, we collected data from a model of real buildings. However, histograms of the dataset showed that the window geometry features did not vary enough. We therefore created synthetic models with a wide variety of geometric features, ending up with 30 simulation models and approximately 14,000 rooms as input for our ML model.
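A histogram check like this can also be automated. The sketch below flags features whose values pile up in a single bin; the "dominant bin" criterion and its 50% threshold are assumptions for illustration, since the project's check was done by inspecting histograms.

```python
import numpy as np


def low_variation_features(features, bins=10, max_share=0.5):
    """Flag features whose values pile up in a single histogram bin.

    features: dict mapping feature name -> 1D sequence of values (one per room).
    A feature is flagged when more than `max_share` of the rooms fall into its
    most populated bin, which suggests too little variation to learn from.
    """
    flagged = []
    for name, values in features.items():
        counts, _ = np.histogram(np.asarray(values, dtype=float), bins=bins)
        if counts.max() / counts.sum() > max_share:
            flagged.append(name)
    return flagged


# Illustrative data: almost every sill at 0.9 m, widths spread evenly.
sills = np.full(1000, 0.9)
sills[:50] = 0.0
widths = np.linspace(0.6, 3.0, 1000)
print(low_variation_features({"sill_height": sills, "window_width": widths}))
```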

Training

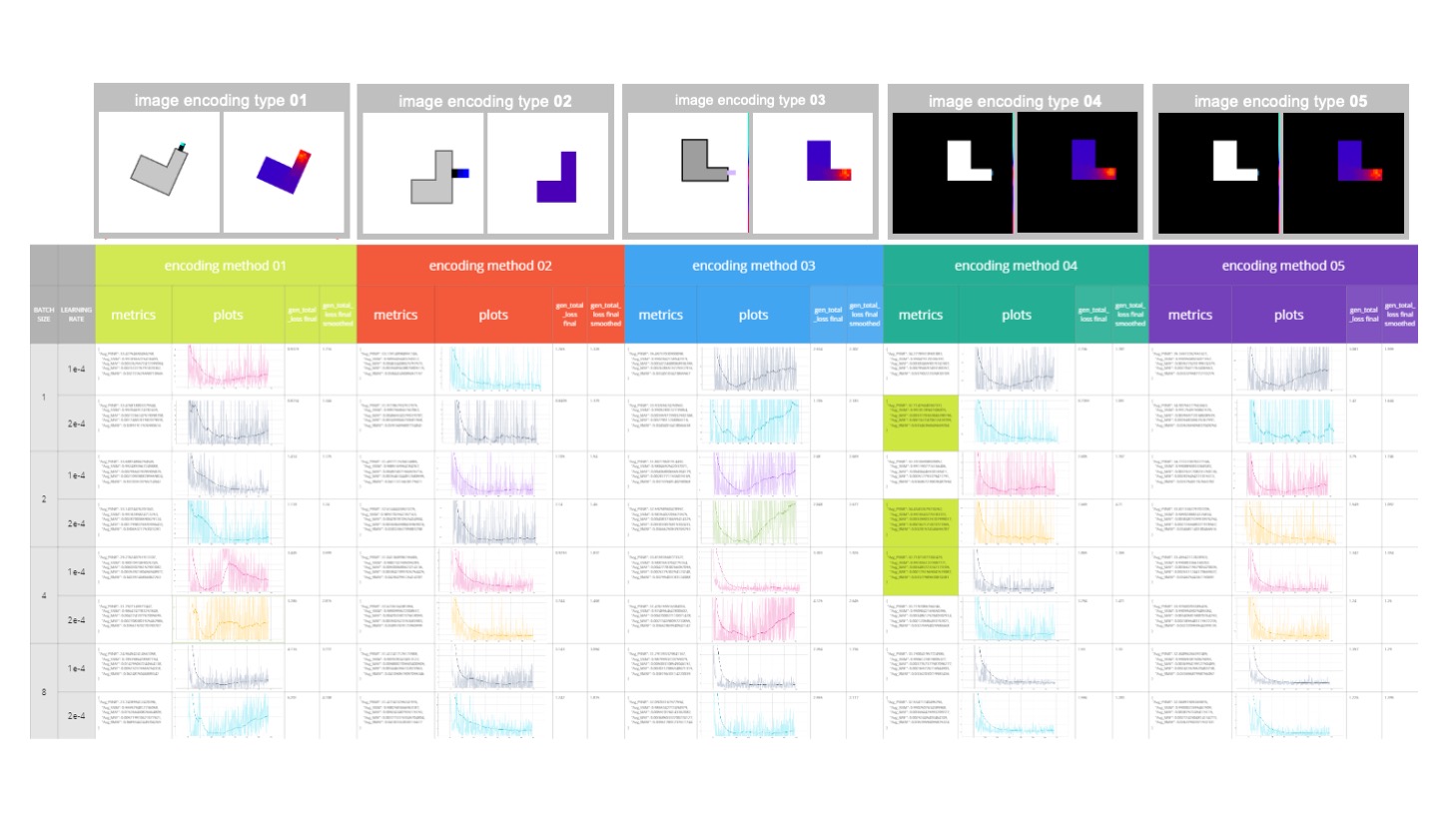

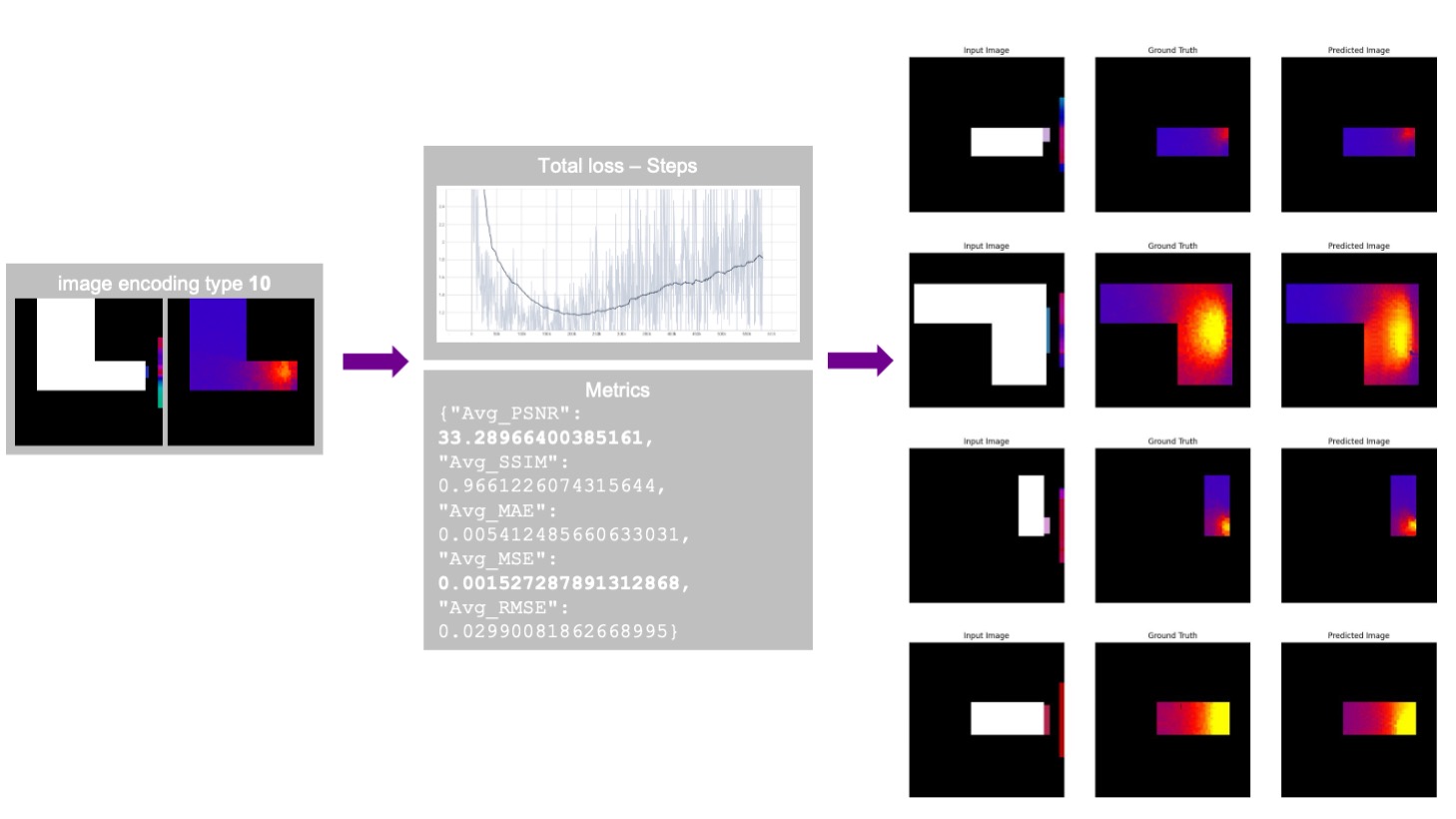

As described under Features, we trained our model on 10 image encoding variations, each changing, removing, or re-expressing a few features. Across many training runs, encoding type 10 gave the best results, judged mainly by mean squared error and by inspecting the corresponding predicted images.
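For readers unfamiliar with the metric, here is a minimal sketch of the mean squared error between a predicted and a ground-truth image, plus a helper that picks the encoding with the lowest score. The optional room mask is an assumption (whether the project excluded background pixels is not stated), and the helper names are hypothetical.

```python
import numpy as np


def image_mse(predicted, ground_truth, mask=None):
    """Mean squared error between two images with values scaled to [0, 1].

    mask: optional boolean array; when given, only pixels inside the room
          (mask == True) contribute, so the empty background does not
          dilute the error.
    """
    pred = np.asarray(predicted, dtype=float)
    true = np.asarray(ground_truth, dtype=float)
    sq_err = (pred - true) ** 2
    if mask is not None:
        sq_err = sq_err[np.asarray(mask, dtype=bool)]
    return float(sq_err.mean())


def best_encoding(mse_by_encoding):
    """Return the encoding id with the lowest validation MSE."""
    return min(mse_by_encoding, key=mse_by_encoding.get)
```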

Validation

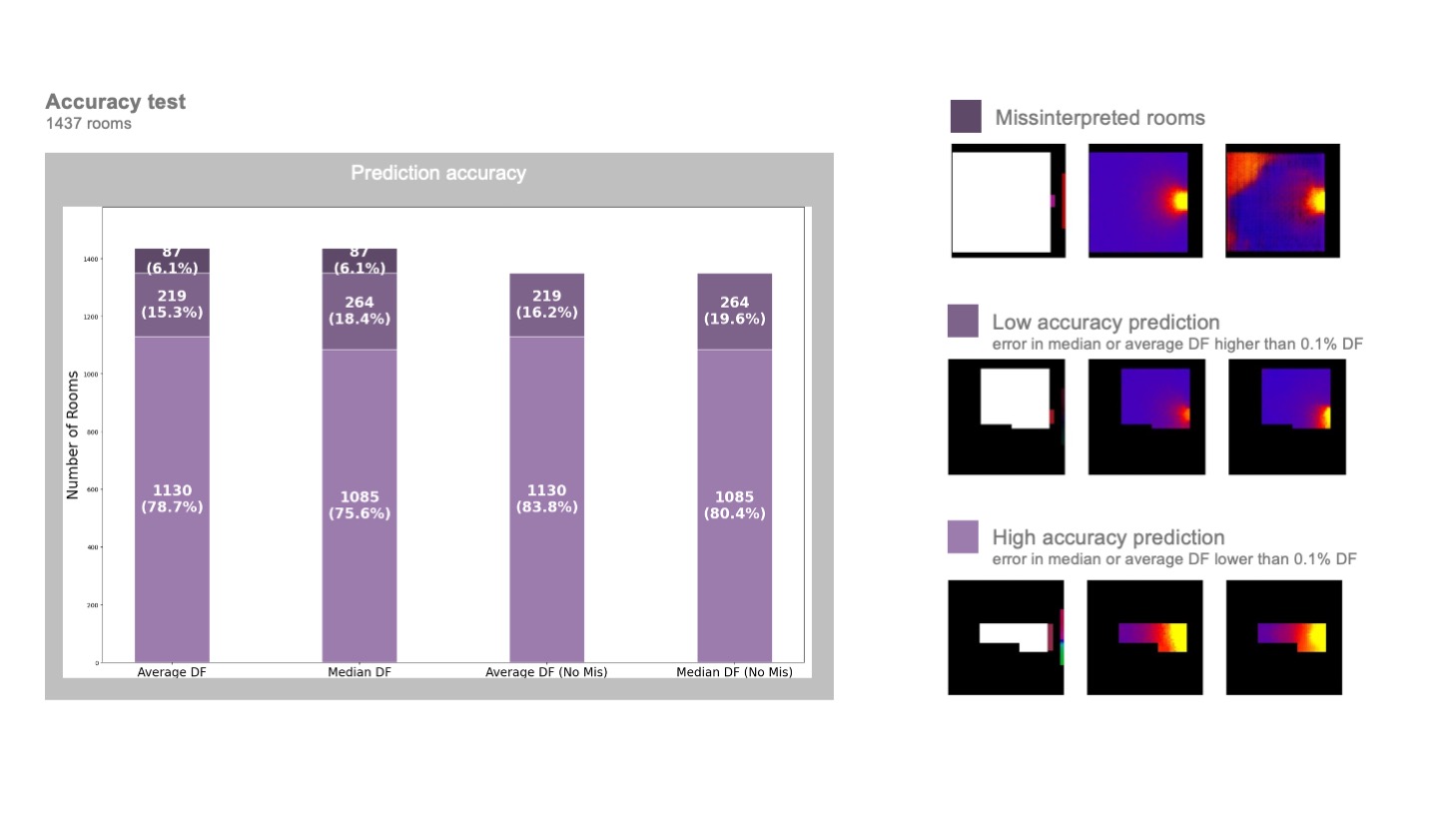

The figure above shows the validation of our Pix2Pix model on a dataset of approximately 1,400 rooms, comparing the average and median daylight factor (DF) of the ground truth and the model predictions. Rooms were split into three groups: accurately predicted (error within 0.1% DF), inaccurately predicted (error above 0.1% DF), and misinterpreted (completely mispredicted, often because the room exceeds the encoding frame). Accurately predicted rooms make up 75-80% of the dataset, rising to 80-85% when misinterpreted rooms are excluded.
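The grouping above can be sketched as follows. This assumes "error within 0.1% DF" means an absolute difference of at most 0.1 percentage points in a room-level DF value, and it represents misinterpreted rooms with a hypothetical boolean field set during review, since that category is not defined numerically.

```python
def categorise_rooms(rooms, tolerance=0.1):
    """Split rooms into accurate / inaccurate / misinterpreted groups.

    rooms: iterable of dicts with keys
        'true_df'        - ground-truth room DF (%)
        'pred_df'        - predicted room DF (%)
        'misinterpreted' - bool flag set during review (hypothetical field)
    tolerance: maximum absolute error in DF percentage points for a room
               to count as accurately predicted.
    """
    groups = {"accurate": [], "inaccurate": [], "misinterpreted": []}
    for room in rooms:
        if room["misinterpreted"]:
            groups["misinterpreted"].append(room)
        elif abs(room["pred_df"] - room["true_df"]) <= tolerance:
            groups["accurate"].append(room)
        else:
            groups["inaccurate"].append(room)
    return groups


def accuracy_shares(groups):
    """Share of accurate rooms, with and without misinterpreted rooms."""
    n_acc = len(groups["accurate"])
    n_mis = len(groups["misinterpreted"])
    n_all = n_acc + len(groups["inaccurate"]) + n_mis
    return n_acc / n_all, n_acc / (n_all - n_mis)
```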

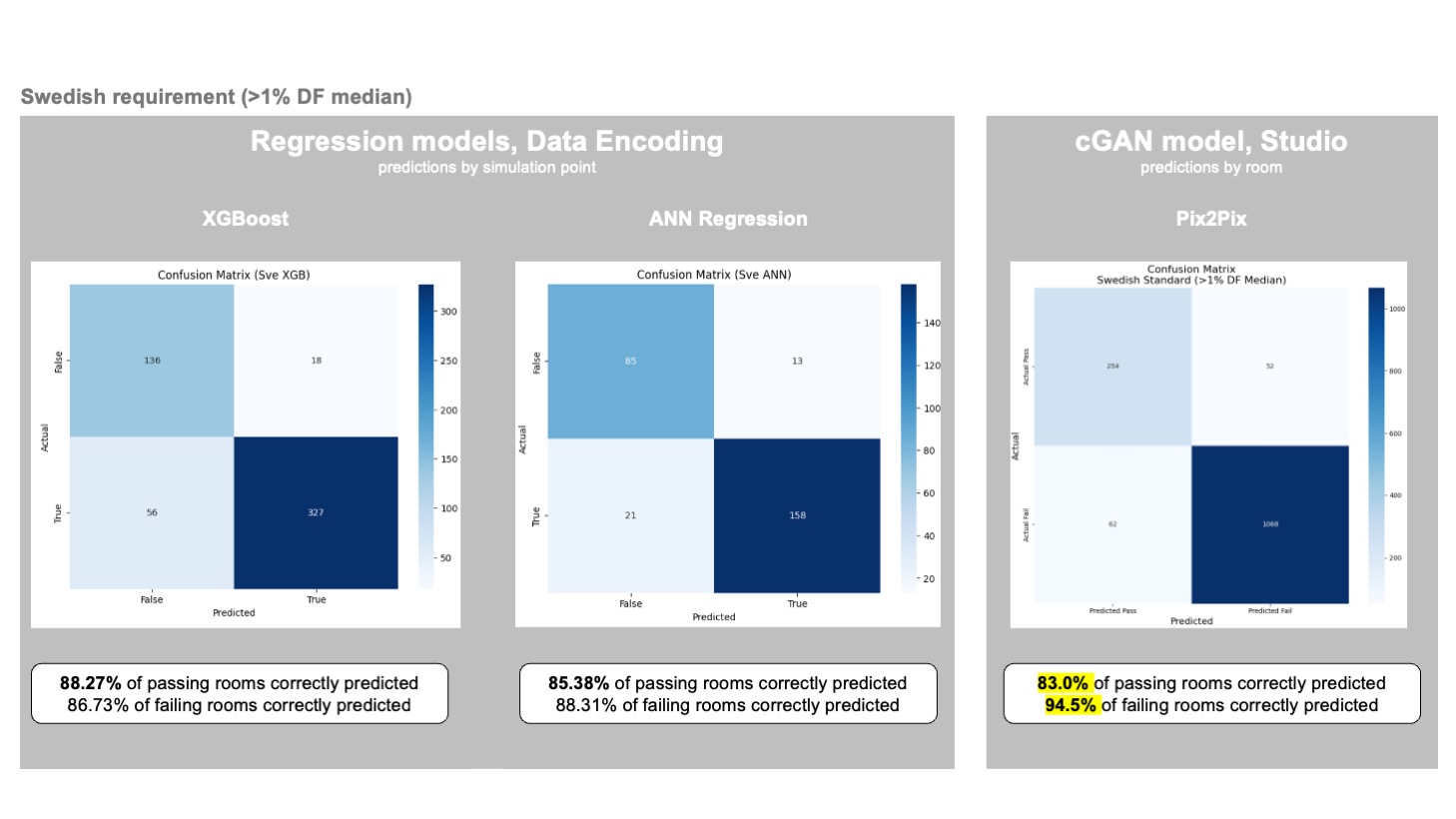

The confusion matrices compare correct and incorrect predictions of failing and passing rooms according to the Swedish daylight requirement (1% median DF). The left matrices show results from two optimized regression models from our Data Encoding seminar, in which we predicted DF at individual simulation points; the right matrix shows results from the Pix2Pix model tested in this studio. Pix2Pix is more accurate at predicting fails than passes, likely because the dataset contains more rooms with low daylight levels, which makes the model more effective under those conditions. Compared with our previous Data Encoding assignment, Pix2Pix has similar overall accuracy but is more accurate for predicting fails and less precise for predicting passes. To improve it, we should create a dataset with a balanced room distribution that peaks around 1% median DF and 2% average DF.

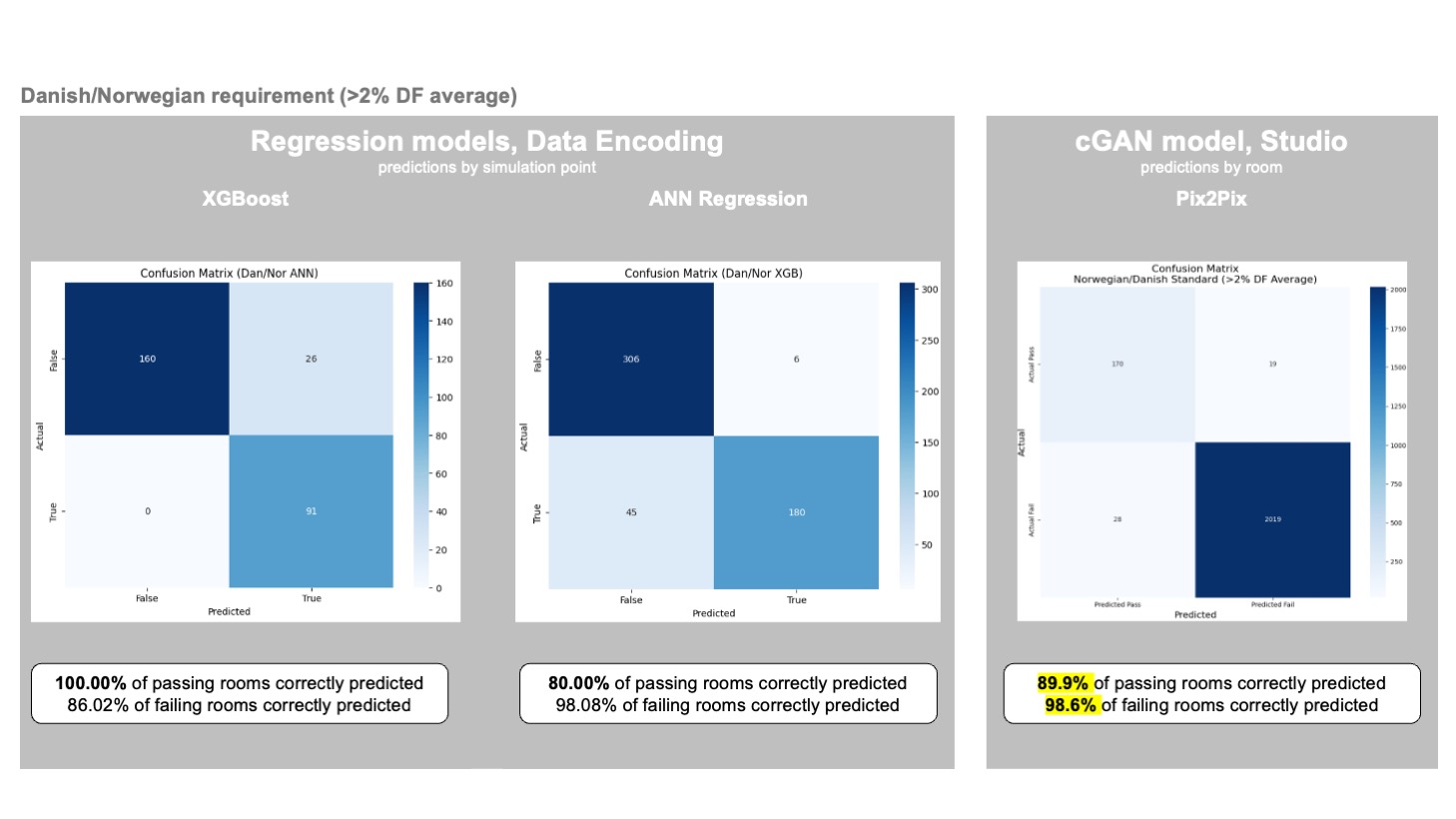

The results are the same when the Danish/Norwegian requirement (2% average DF) is used as the reference. In addition, encoding for Pix2Pix is significantly faster than the numerical encoding we used in the Data Encoding seminar, which makes it more suitable for practical applications.
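Both pass/fail checks and the resulting confusion matrix can be sketched in a few lines. The thresholds follow the requirements as stated in this section (1% median DF; 2% average DF), treating "meets the requirement" as greater than or equal to the threshold, which is an assumption; the standard codes `"SE"` and `"DK_NO"` are hypothetical labels.

```python
import numpy as np


def passes(df_grid, standard):
    """Return True if a room meets the given DF requirement.

    df_grid:  DF values (%) at the room's sensor points.
    standard: 'SE'    -> median DF >= 1 % (Swedish requirement)
              'DK_NO' -> average DF >= 2 % (Danish/Norwegian requirement)
    """
    values = np.asarray(df_grid, dtype=float)
    if standard == "SE":
        return bool(np.median(values) >= 1.0)
    if standard == "DK_NO":
        return bool(values.mean() >= 2.0)
    raise ValueError(f"unknown standard: {standard}")


def pass_fail_confusion(true_grids, pred_grids, standard):
    """2x2 confusion matrix: rows = actual (fail, pass), columns = predicted."""
    matrix = np.zeros((2, 2), dtype=int)
    for true_grid, pred_grid in zip(true_grids, pred_grids):
        actual = int(passes(true_grid, standard))
        predicted = int(passes(pred_grid, standard))
        matrix[actual, predicted] += 1
    return matrix
```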

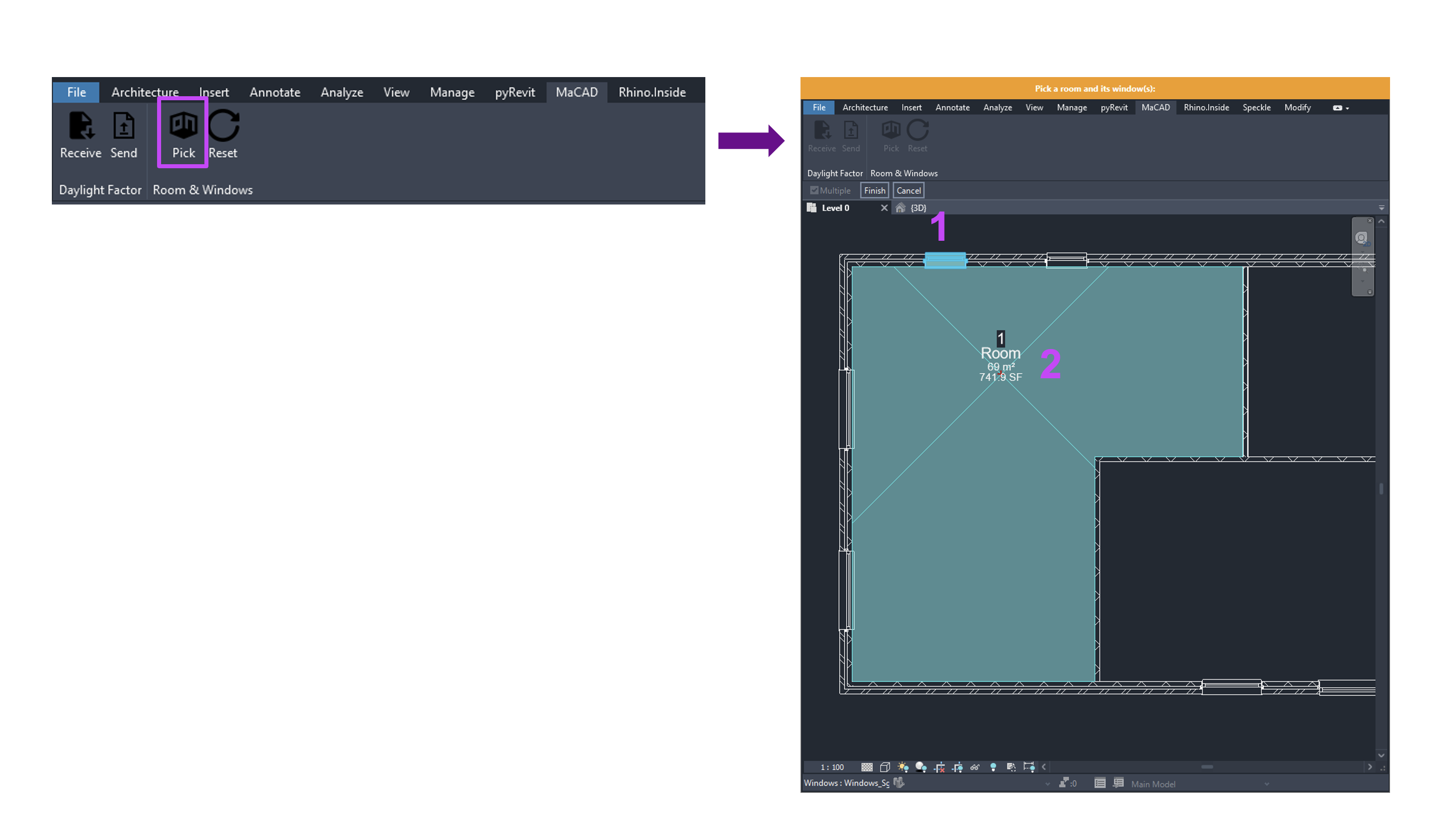

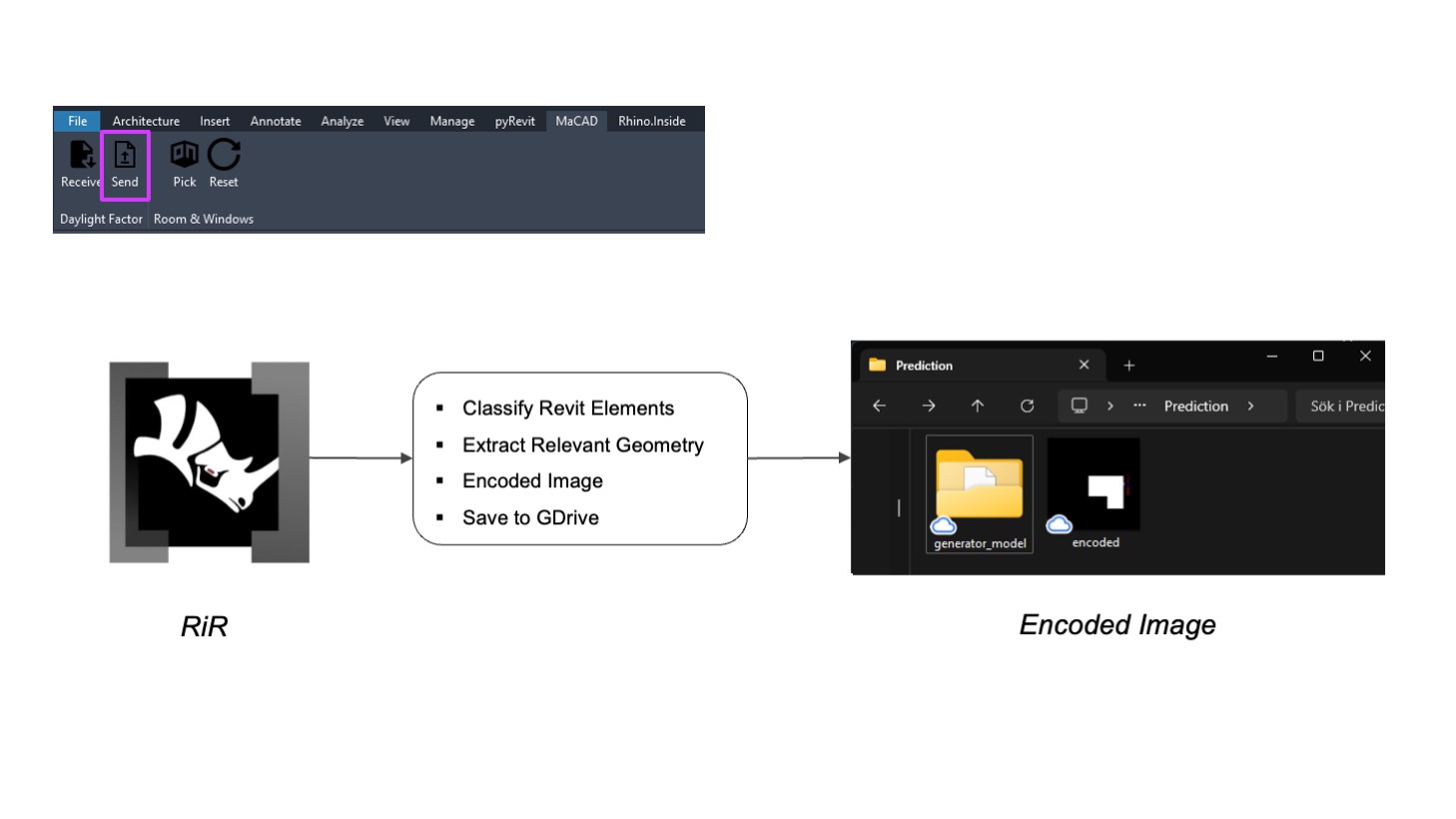

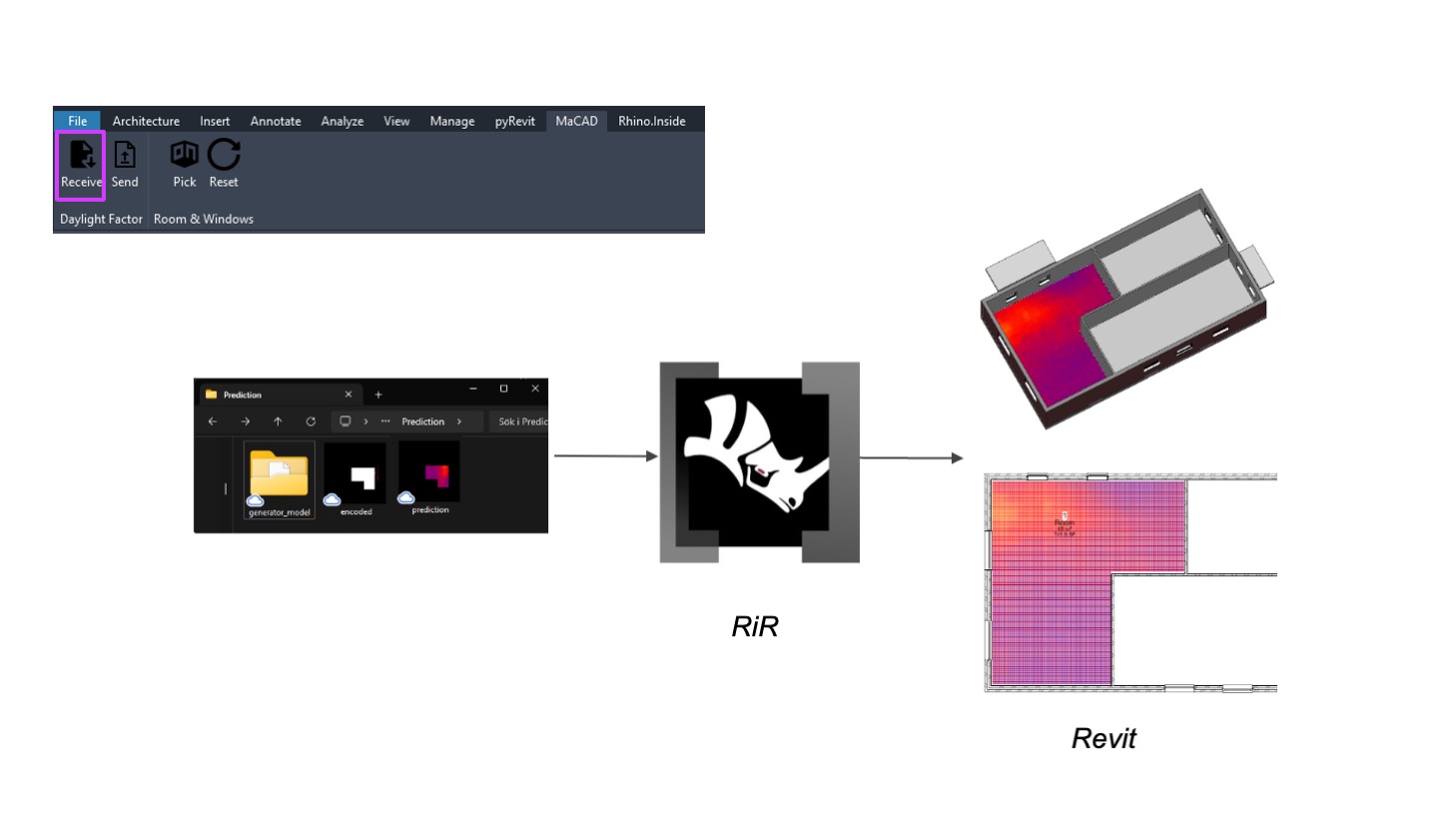

Use Case and App

Our target audience is typical commercial studios, so we assume users are proficient in Rhino and Revit and have both installed. Note that predictions are made at room level.

The workflow has three parts. The first is data collection: the user opens Revit, navigates to the plugin tab (prototyped with pyRevit), and selects the relevant elements, first the window and then the room. Obstructions such as balconies and context buildings are identified and extracted from the model automatically.

The second part encodes the data. The user sends the collected data to a Rhino.Inside.Revit script that runs in the background, encodes everything in the required format, and saves the file to Google Drive.

The third part processes the data and decodes the result back into Revit. A Google Colab script, which at the current stage of development has to be started manually, processes the encoded image and saves the prediction. The user then clicks the receive button, which triggers another Rhino.Inside.Revit script that decodes and displays the result, either as a mesh or as a set of numerical values.
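The decoding step can be sketched as turning the predicted image back into positioned DF values that Revit can display. This is only an illustration: it assumes the prediction is an 8-bit grayscale image mapped linearly to DF, and the scale `MAX_DF`, the pixel size `CELL_SIZE_M`, and the function name are hypothetical, since the source does not describe the output encoding.

```python
import numpy as np

MAX_DF = 10.0        # hypothetical: DF (%) represented by pixel value 255
CELL_SIZE_M = 0.25   # hypothetical: real-world size of one pixel (m)


def decode_prediction(image, room_mask, origin_xy=(0.0, 0.0), quarter_turns=0):
    """Turn a predicted 8-bit grayscale image into (x, y, df) sensor values.

    image:         2D uint8 array from the model (0 -> 0 % DF, 255 -> MAX_DF).
    room_mask:     2D bool array, True for pixels inside the room.
    origin_xy:     world coordinates (m) of the corner of pixel (0, 0).
    quarter_turns: number of 90-degree counter-clockwise rotations applied
                   during encoding; undone here before mapping to world space.
    Assumes image columns run along +x and rows along +y.
    """
    df = np.asarray(image, dtype=float) * (MAX_DF / 255.0)
    df = np.rot90(df, -quarter_turns)
    mask = np.rot90(np.asarray(room_mask, dtype=bool), -quarter_turns)
    rows, cols = np.nonzero(mask)
    xs = origin_xy[0] + (cols + 0.5) * CELL_SIZE_M  # pixel centres
    ys = origin_xy[1] + (rows + 0.5) * CELL_SIZE_M
    return list(zip(xs.tolist(), ys.tolist(), df[rows, cols].tolist()))
```

The resulting list of points can be shown either as numerical tags or used as vertex values for a coloured mesh, matching the two display options described above.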

Outlook

As part of this project’s outlook: we learned too late that Google Cloud Functions are geared towards CPUs, whereas our GPU-accelerated model would benefit from a service such as Vertex AI. Deploying the model there and sending web requests to it directly could simplify our user interface.

For validation, we summed the analyses of all windows in a room. The user interface, however, currently shows one analysis per window rather than the combined result for the room; adding this summation to the app is a possible further development.

For our user interface, we used only native components in our Grasshopper scripts. Porting them to Python and publishing them in a GitHub repository would make it possible to distribute them as a pyRevit extension.

Our next steps are to balance the dataset by focusing on rooms with intermediate daylight levels, targeting around 1% median DF and 2% average DF, to improve the model’s accuracy. We also plan to remove rooms that are too large, as these often lead to mispredictions.
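One possible way to implement both steps is sketched below: drop rooms that do not fit the encoding frame, then randomly undersample every median-DF bin down to the size of the bin around 1% so the distribution no longer peaks at low DF. Undersampling is a swapped-in technique chosen for illustration (generating more synthetic rooms in the target range is the alternative); the field names `median_df` and `extent_px`, the frame size, and the bin edges are hypothetical.

```python
import numpy as np


def balance_dataset(rooms, frame_px=256,
                    edges=(0.0, 0.5, 0.75, 1.25, 2.0, 3.0, float("inf")),
                    target_df=1.0, seed=0):
    """Drop oversized rooms, then cap every median-DF bin at the size of the
    bin containing target_df.

    rooms: list of dicts with 'median_df' (%) and 'extent_px' (largest room
           dimension in pixels at the encoding scale); both hypothetical fields.
    """
    rng = np.random.default_rng(seed)
    fitting = [r for r in rooms if r["extent_px"] <= frame_px]
    bins = np.digitize([r["median_df"] for r in fitting], edges)
    target_bin = int(np.digitize([target_df], edges)[0])
    cap = int(np.sum(bins == target_bin))
    if cap == 0:
        raise ValueError("no rooms near the target DF to balance against")
    kept = []
    for b in np.unique(bins):
        idx = np.flatnonzero(bins == b)
        if len(idx) > cap:
            # Randomly undersample over-represented bins (e.g. very dark rooms).
            idx = rng.choice(idx, size=cap, replace=False)
        kept.extend(fitting[i] for i in sorted(idx))
    return kept
```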

Takeaways

The regression models tested in the Data Encoding course achieved high accuracy despite expert advice, but their relatively time-consuming encoding makes them best suited to quick predictions for a few rooms. The Pix2Pix model shows similar accuracy, with the potential for even higher accuracy once we tweak a few parameters, and thanks to its faster encoding it is better suited to predicting many rooms at once. While both models are compelling, Pix2Pix holds more promise for broad application and high efficiency.