GitHub: https://github.com/elicolds/emotionreader/

Introduction

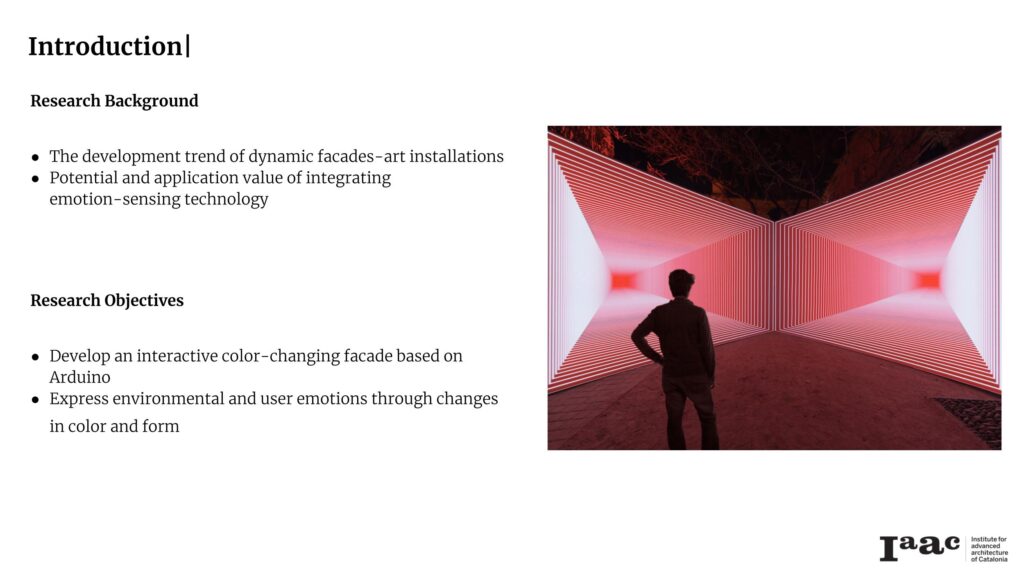

In the realm of architecture and art, installations serve as powerful mediums to explore the intersection of technology, human emotion, and design. Our project reimagines the relationship between viewers and spaces by creating an interactive structure that reacts dynamically to human presence and emotions. Combining hardware, software, and creative ingenuity, we designed a responsive art piece that not only engages but also communicates with its audience.

Concept

At the heart of our design lies the idea of emotional resonance. This installation was conceived as a way to merge physical movement with emotional interpretation, creating a unique dialogue between the viewer and the artwork. By incorporating real-time emotion detection and dynamic physical responses, we aim to evoke curiosity, introspection, and a sense of connection.

The installation is both reactive and reflective: it detects proximity, reads facial expressions, and responds with visual and mechanical changes. It becomes a living, breathing entity that acknowledges the emotions of those who interact with it.

Stages of Development

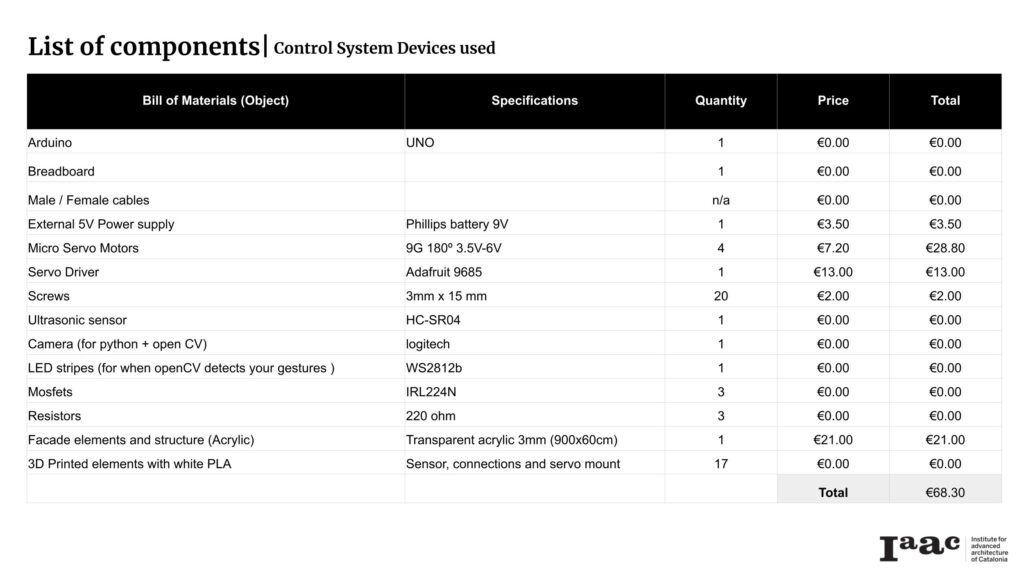

1. List of materials:

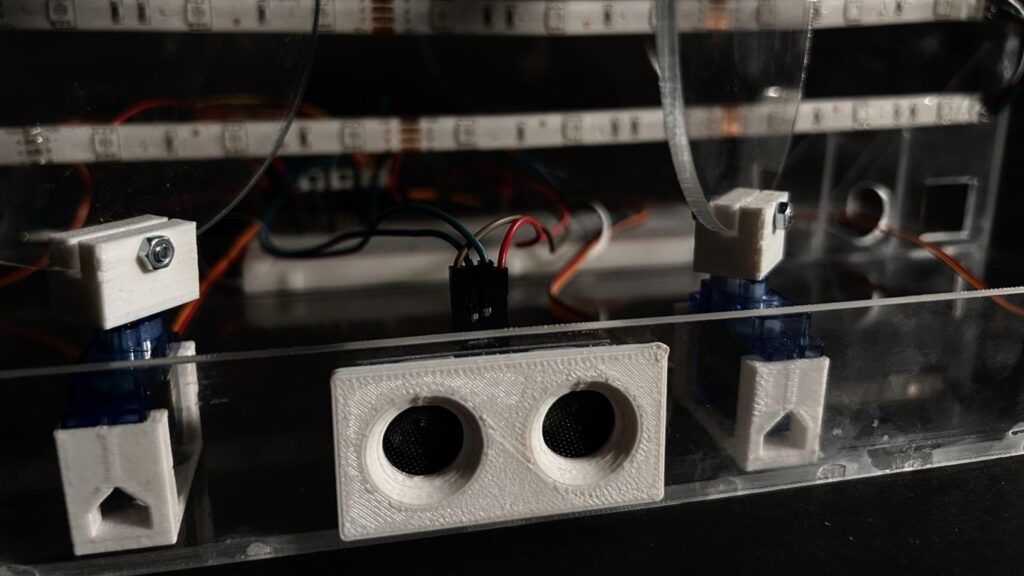

- Ultrasonic Sensors: Detect human proximity, acting as the trigger for the system.

- Servo Motors: Animate physical elements, such as panels or modules, which move in response to the viewer’s approach.

- RGB LED Strips: Express emotions through color (red for anger, green for happiness, blue for sadness).

- Arduino Uno: Orchestrates inputs and outputs, forming the “brain” of the system.

- Webcam: Captures facial expressions for emotion recognition.

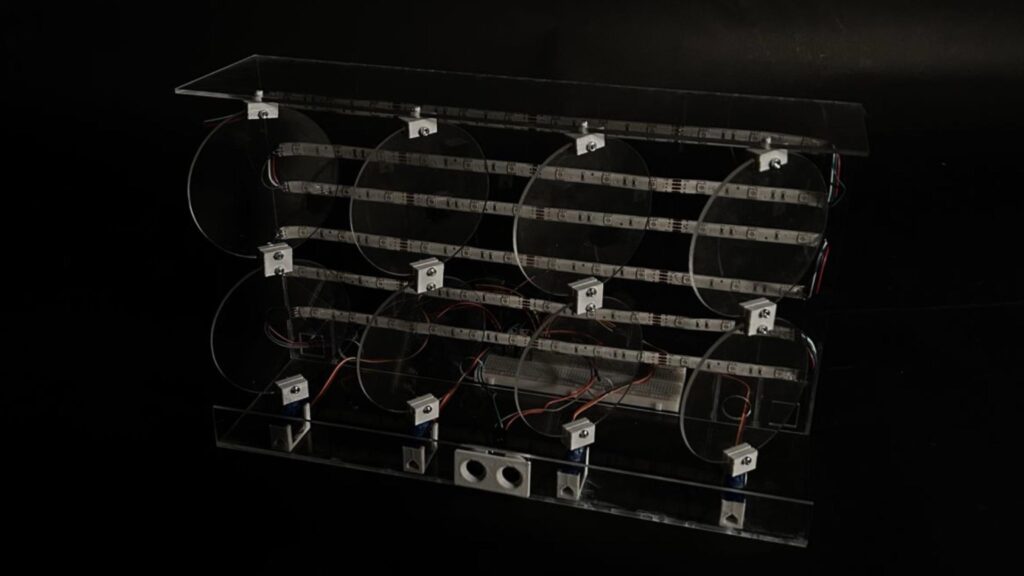

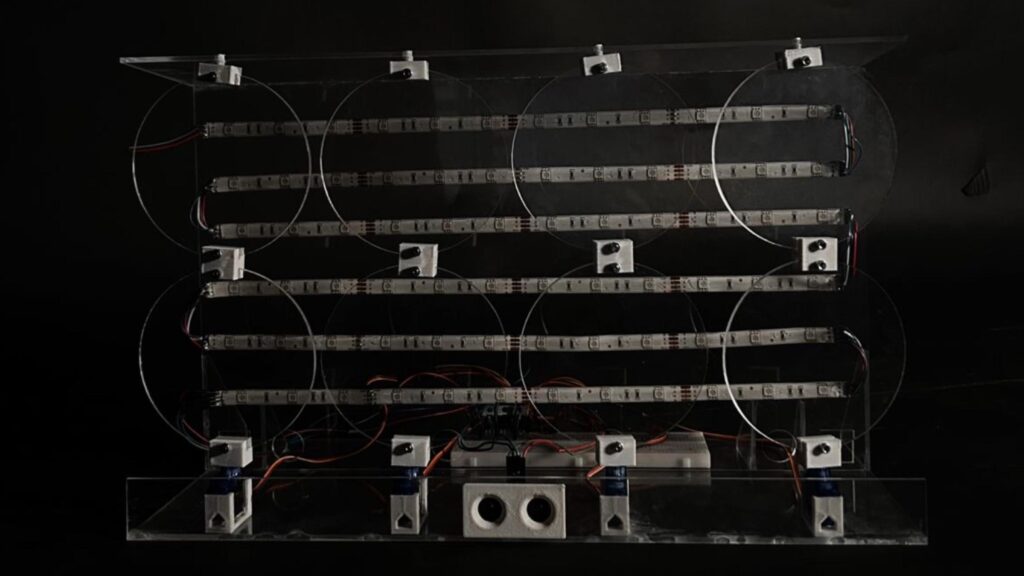

The physical design of the installation incorporates modular panels, arranged to respond dynamically to the viewer’s actions. These panels move, light up, and shift to create a multi-sensory interaction.

2. Workflow overview:

- When a person approaches the installation, the ultrasonic sensors detect their presence.

- The servo motors move the panels to an open position, welcoming the viewer and activating the RGB lighting.

- A webcam captures the viewer’s face, and Python processes the image to detect emotions.

- Depending on the detected emotion:

  - Anger: The lights turn red.

  - Happiness: The lights turn green.

  - Sadness: The lights turn blue.
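The workflow above can be read as a small decision rule: a distance reading decides whether the panels open, and the detected emotion decides the LED color. The sketch below is only an illustration of that logic, not the project's actual code. The trigger distance, the idle and fallback colors, and the emotion label strings are all assumptions, since the write-up does not specify them.

```python
from typing import Optional, Tuple

RGB = Tuple[int, int, int]

# Colors named in the workflow, keyed by assumed emotion labels.
EMOTION_COLORS = {
    "angry": (255, 0, 0),  # anger -> red
    "happy": (0, 255, 0),  # happiness -> green
    "sad": (0, 0, 255),    # sadness -> blue
}

IDLE_COLOR: RGB = (0, 0, 0)           # assumption: lights off when nobody is near
DEFAULT_COLOR: RGB = (255, 255, 255)  # assumption: white until an emotion is mapped
TRIGGER_DISTANCE_CM = 100.0           # assumption: threshold not given in the project


def installation_state(distance_cm: float, emotion: Optional[str]) -> Tuple[bool, RGB]:
    """Return (panels_open, led_color) for one sensor reading."""
    if distance_cm > TRIGGER_DISTANCE_CM:
        # Nobody close enough: panels stay closed and the lights stay idle.
        return False, IDLE_COLOR
    if emotion is None:
        # Viewer detected, but no emotion reading yet.
        return True, DEFAULT_COLOR
    # Viewer detected: pick the mapped color, or fall back for other emotions.
    return True, EMOTION_COLORS.get(emotion, DEFAULT_COLOR)
```

For example, `installation_state(40.0, "angry")` opens the panels and selects red, while any reading beyond the threshold keeps the panels closed regardless of emotion.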

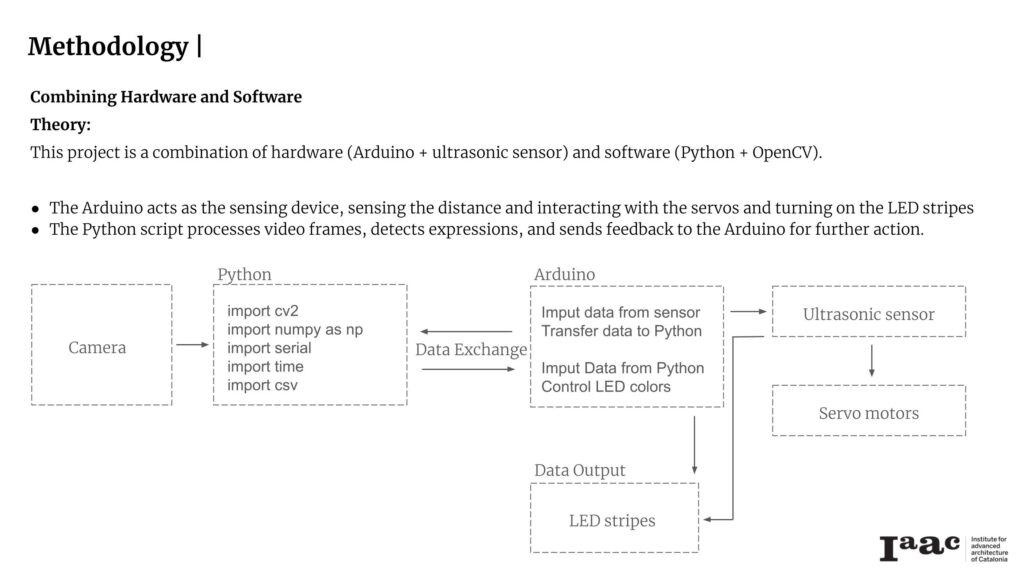

3. Methodology and Software Integration:

- Arduino Programming: The Arduino interprets distance data from ultrasonic sensors to activate servo motors and LED lighting. It also communicates with a Python script for emotion processing.

- OpenCV and DeepFace: Using Python, the webcam captures the viewer’s facial expressions, which are processed by DeepFace to identify emotions. These emotions are mapped to LED color changes.
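A minimal sketch of how the Python side of this integration might look, assuming the `opencv-python`, `deepface`, and `pyserial` packages are installed. The serial port name, the baud rate, the white fallback color, and the newline-terminated "R,G,B" message format are hypothetical choices made for illustration; the Arduino sketch would need to parse whatever format the real project uses.

```python
from typing import Any, Optional

# Colors from the write-up, keyed by DeepFace's emotion labels.
EMOTION_COLORS = {"angry": (255, 0, 0), "happy": (0, 255, 0), "sad": (0, 0, 255)}
FALLBACK_COLOR = (255, 255, 255)  # assumption: white for unmapped or missing emotions


def dominant_emotion(result: Any) -> Optional[str]:
    """Extract the top emotion label from a DeepFace.analyze result.

    Recent DeepFace versions return a list with one dict per detected face,
    older ones a single dict; both shapes are handled here.
    """
    if isinstance(result, list):
        if not result:
            return None
        result = result[0]
    return result.get("dominant_emotion")


def encode_color(emotion: Optional[str]) -> bytes:
    """Encode the LED color as a newline-terminated R,G,B line (hypothetical protocol)."""
    r, g, b = EMOTION_COLORS.get(emotion, FALLBACK_COLOR)
    return f"{r},{g},{b}\n".encode("ascii")


def run(port: str = "/dev/ttyACM0", baud: int = 9600) -> None:
    """Capture webcam frames, detect the emotion, and send a color to the Arduino."""
    # Imported here so the helpers above can be used without the hardware stack.
    import cv2
    import serial  # provided by the pyserial package
    from deepface import DeepFace

    camera = cv2.VideoCapture(0)  # default webcam
    try:
        with serial.Serial(port, baud, timeout=1) as link:
            while True:
                ok, frame = camera.read()
                if not ok:
                    break  # camera unavailable or stream ended
                result = DeepFace.analyze(
                    img_path=frame, actions=["emotion"], enforce_detection=False
                )
                link.write(encode_color(dominant_emotion(result)))
    finally:
        camera.release()
```

Calling `run()` starts the loop. Keeping the parsing and encoding in small pure functions makes the color mapping easy to check without a camera or an Arduino attached.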

Artistic Impact

The installation blurs the boundaries between observer and participant, turning passive onlookers into active contributors. Its purpose is to evoke reflection and awareness of the emotional states we project, demonstrating how art can become an empathetic mirror of our inner selves. This interplay between technology and human interaction creates a powerful commentary on how we engage with the spaces around us.

Challenges and Lessons Learned

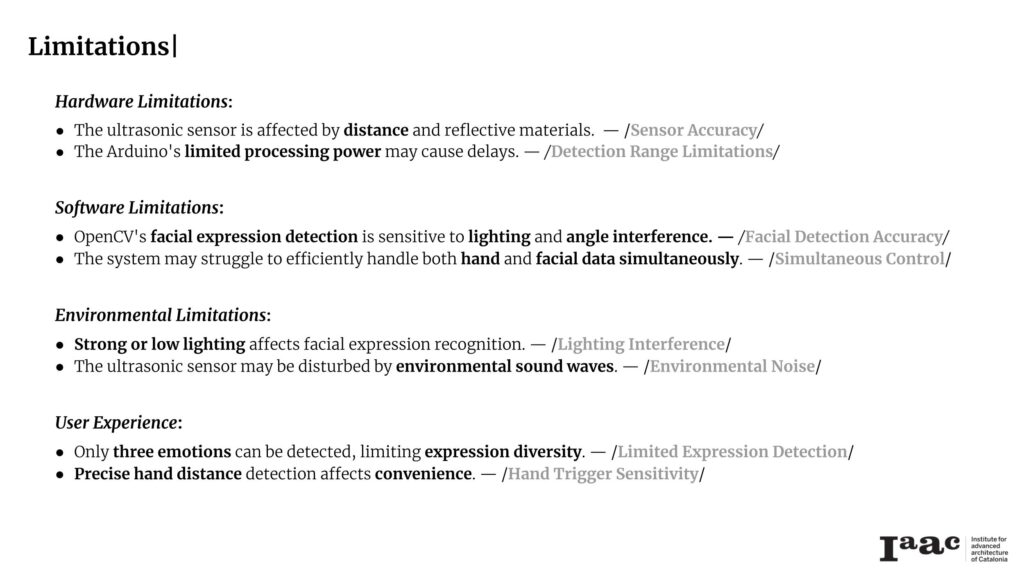

As with any experimental project, we encountered challenges along the way.

Despite these hurdles, the installation serves as a proof of concept for integrating technology, emotion, and design into a cohesive experience.

Conclusion

This project showcases how technology can elevate art into an interactive, responsive medium. By combining sensors, actuators, and emotion sensing, we designed a piece that transforms how audiences experience art. It also underscores the potential of using emotion as a design parameter, encouraging deeper connections between people and the spaces they inhabit.