Introduction

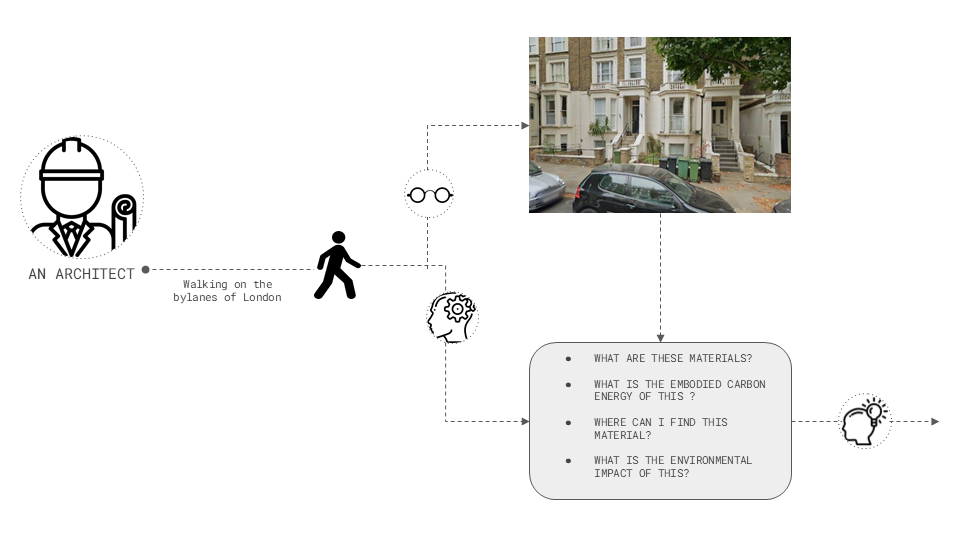

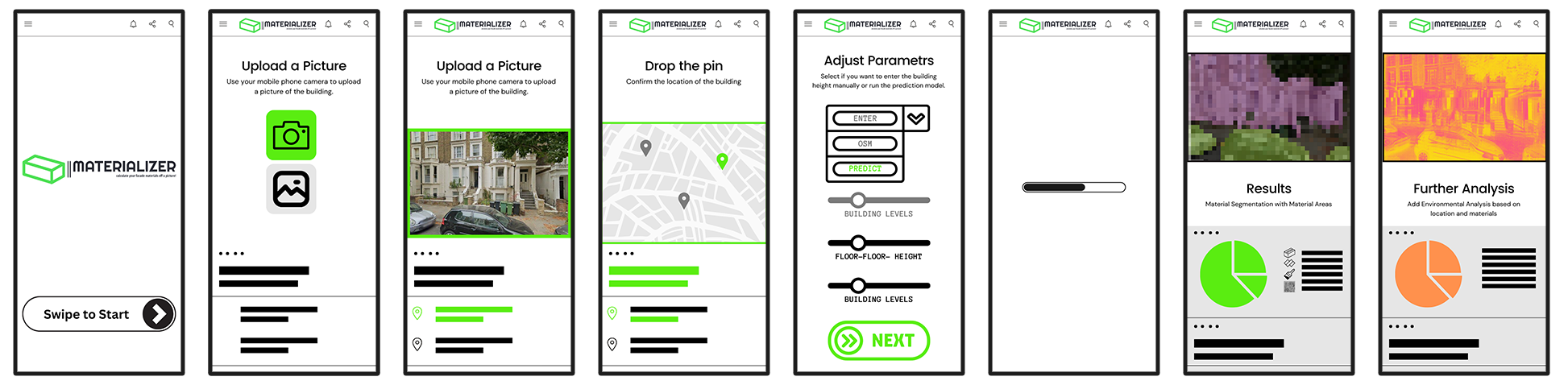

Our project, Materializer, uses several machine learning models that we trained ourselves to predict material quantities from an image uploaded by the user and the building's coordinates. The approach uses image segmentation to isolate buildings, image classification to label material pixels, and a height prediction model for buildings that lack height information in OpenStreetMap (OSM) data. Our vision is to extend this technology into a mobile app, making it more accessible and usable for professionals in architecture, engineering, and construction (AEC) as well as sustainability experts.

Materializer aims to become a mobile application that architects and AEC professionals can use on-site to analyze buildings in real time. By uploading an image and providing the building’s coordinates, users receive a breakdown of material composition and environmental impact data. The tool is intended to support material quantification, embodied carbon analysis, and sustainable material sourcing, and so contribute to better-informed decision-making in the field.

Methodology and Process

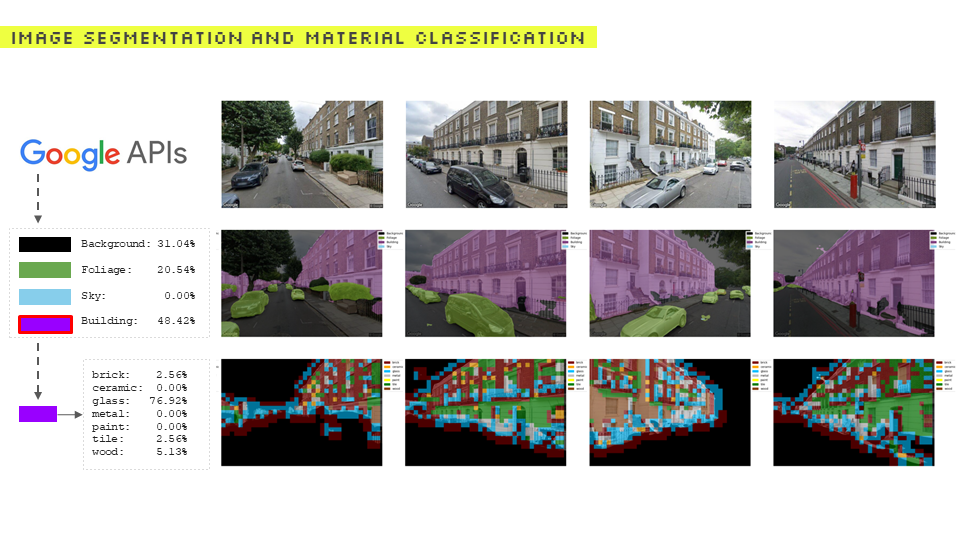

- Image Segmentation:

- We use image segmentation models to isolate buildings from other elements in an image.

- This step is crucial as it ensures that the subsequent material classification is applied only to the relevant portions of the image.

- Image Classification:

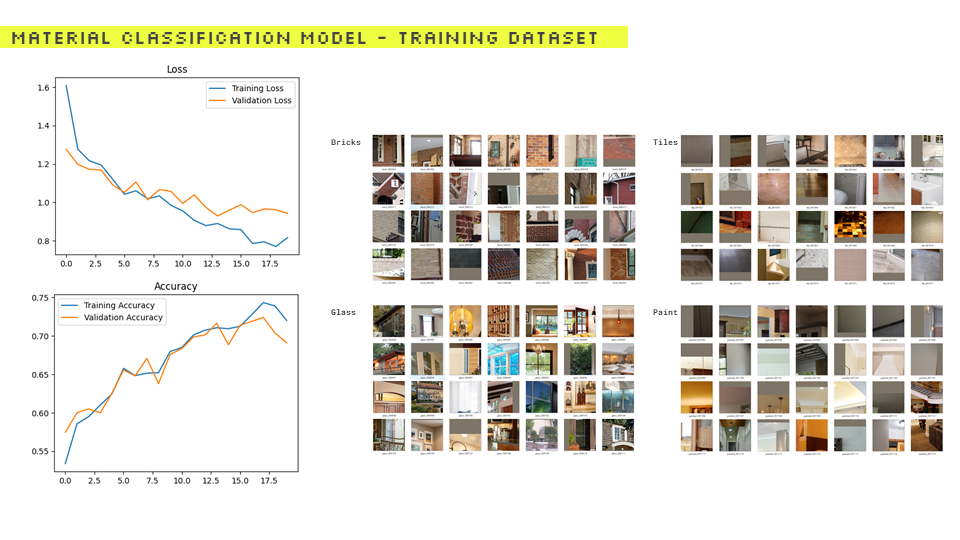

- Our image classification model identifies and quantifies the different materials (such as brick, glass, tile, and paint) within the segmented building image.

- The model was trained on a diverse dataset to improve the accuracy of material identification.

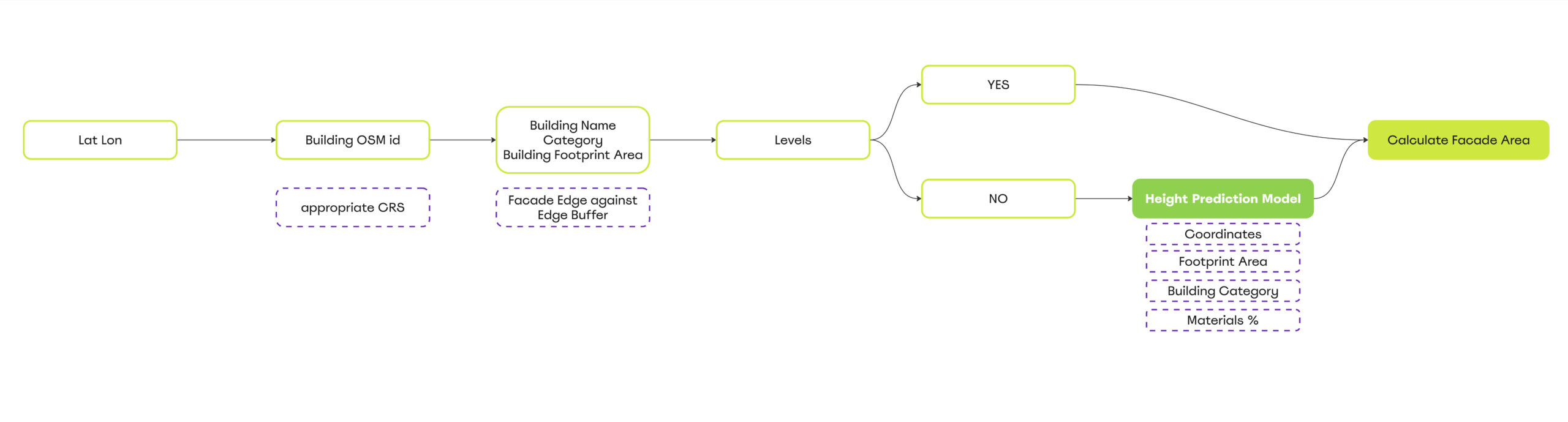

- Height Prediction:

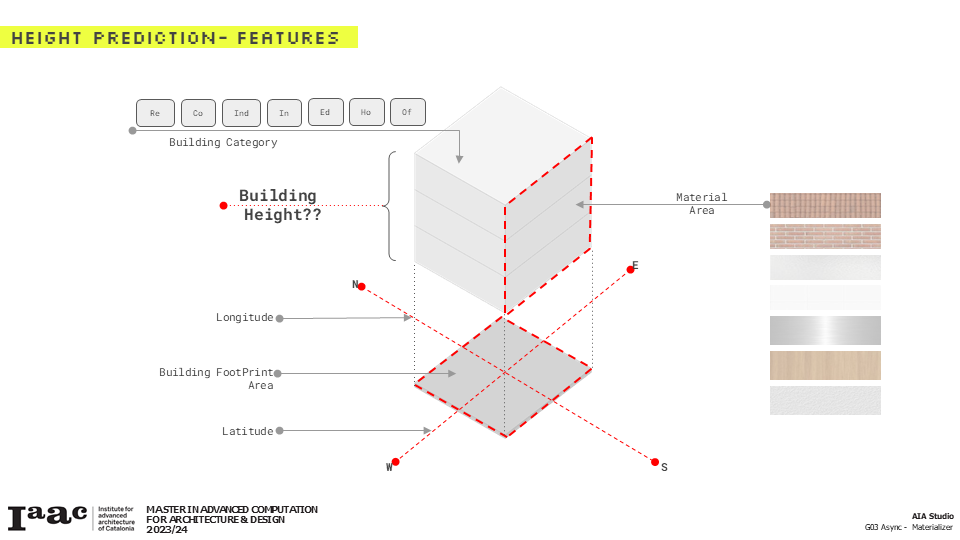

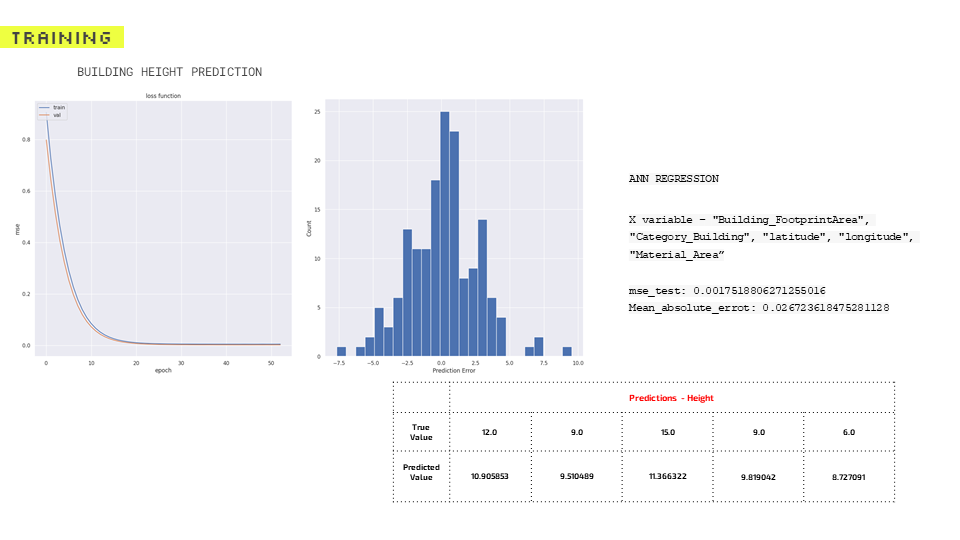

- For buildings lacking height information in OSM data, we developed a height prediction model.

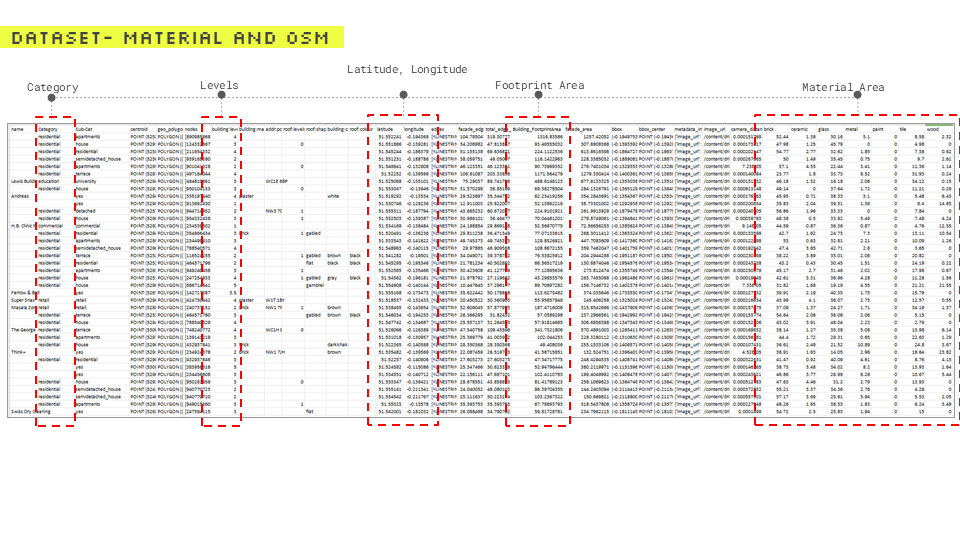

- This model uses inputs such as building footprint area, material percentages, building category, and location coordinates to predict the building’s height.

- The predicted height, combined with the footprint area and material percentages, allows us to calculate the total material quantities.
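The inputs listed for the height prediction model can be pictured as one numeric row per building. The following is a minimal sketch under stated assumptions, not the project's actual feature pipeline: the function name `build_height_features`, the `CATEGORIES` list, and the one-hot encoding are all hypothetical, and the material names are the seven classes described in the workflow section.

```python
# Seven material classes named in the workflow description.
MATERIALS = ["brick", "glass", "tile", "paint", "metal", "wood", "ceramic"]

# Hypothetical category vocabulary; the real set of building categories
# used by the project is not stated in the text.
CATEGORIES = ["residential", "commercial", "industrial"]


def build_height_features(footprint_area_m2, material_pct, category, lat, lon):
    """Flatten one building's attributes into a numeric feature row."""
    row = [float(footprint_area_m2)]
    # Material shares in a fixed order; materials not present count as 0 %.
    row += [float(material_pct.get(m, 0.0)) for m in MATERIALS]
    # One-hot encode the category; unknown categories become all zeros.
    row += [1.0 if category == c else 0.0 for c in CATEGORIES]
    row += [float(lat), float(lon)]
    return row


row = build_height_features(
    footprint_area_m2=120,
    material_pct={"brick": 60.0, "glass": 40.0},
    category="commercial",
    lat=41.39,
    lon=2.17,
)
```

A row like this could be passed to any tabular regressor; which model family the project uses is not specified here.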

Workflow: Detailed Breakdown

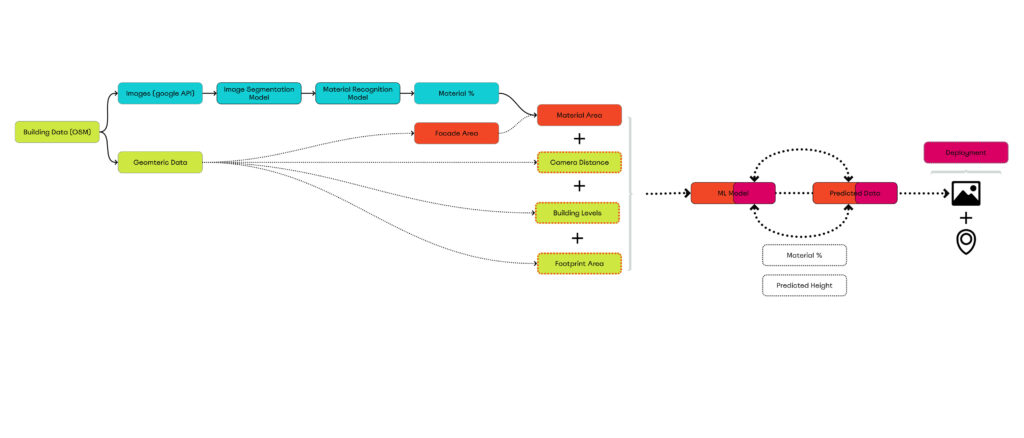

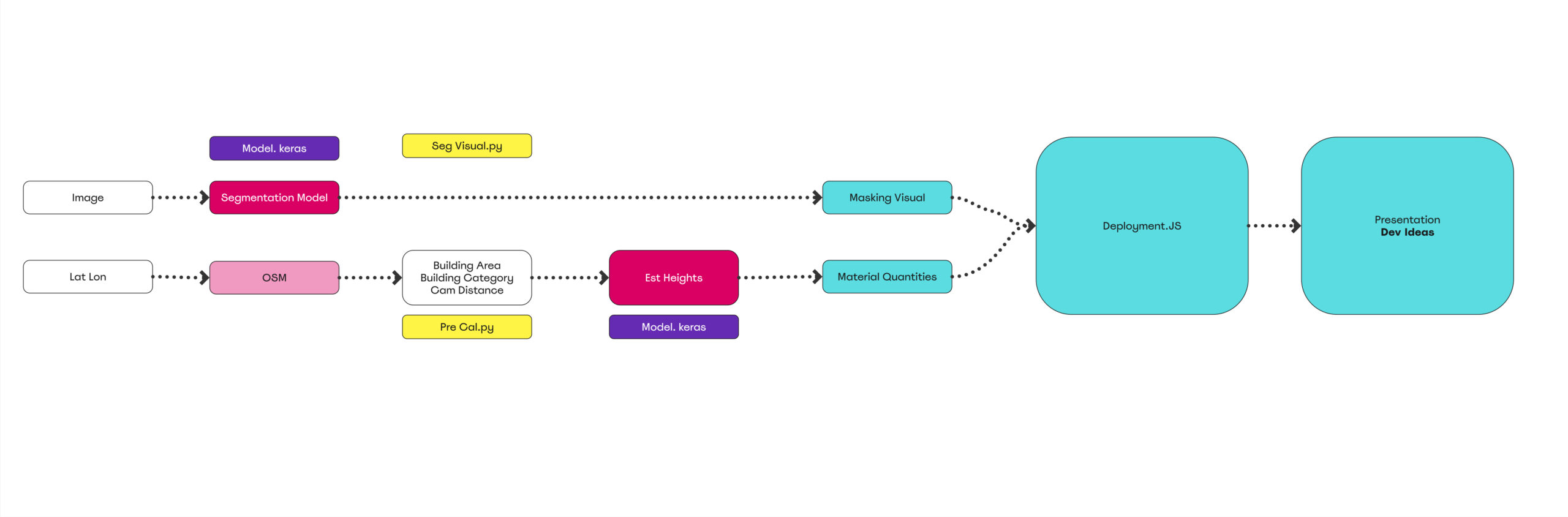

Our Materializer project follows a structured workflow designed to predict material quantities based on user-uploaded images and building coordinates. The workflow is divided into two main parts: Material Recognition and Height Prediction, culminating in the deployment of a web application. Here’s a detailed explanation of each part:

Part A: Material Recognition

- Image Segmentation Model:

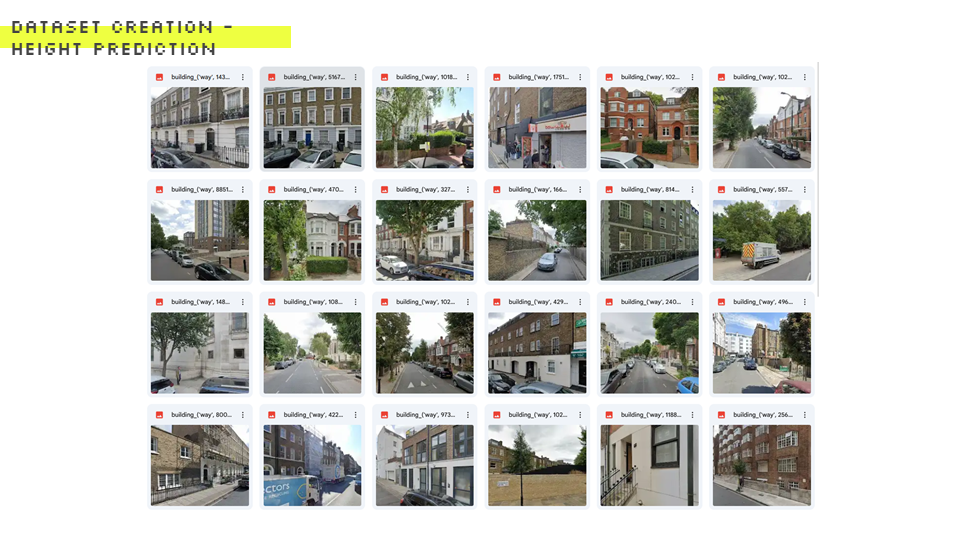

- Input: The process starts with collecting images of buildings using the Google API.

- Segmentation: These images are fed into an image segmentation model trained to isolate buildings from other elements in the image, such as sky, foliage, and background structures.

- Output: The segmented building image serves as the foundation for the next step.

- Material Classification Model:

- Input: The segmented building image is then processed by a material classification model.

- Classification: This model computes the share of building pixels assigned to each of the seven material classes it was trained on: brick, glass, tile, paint, metal, wood, and ceramic.

- Output: The result is a detailed breakdown of the material percentages present in the building image.
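To make the link between the two models concrete, the sketch below counts classified pixels only where the segmentation mask marks the building. It is an illustration under assumptions: it takes the classifier output as a grid of label strings and the mask as a grid of 0/1 values, and the function name `material_percentages` is hypothetical rather than taken from the project.

```python
from collections import Counter


def material_percentages(labels, mask):
    """Return the percentage of masked (building) pixels per material label."""
    counts = Counter()
    for label_row, mask_row in zip(labels, mask):
        for label, inside in zip(label_row, mask_row):
            if inside:  # ignore sky, foliage, and other non-building pixels
                counts[label] += 1
    total = sum(counts.values())
    if total == 0:
        return {}  # no building pixels found in the image
    return {material: 100.0 * n / total for material, n in counts.items()}


# Tiny 2x3 example: one pixel lies outside the building mask.
labels = [["brick", "brick", "glass"],
          ["sky", "brick", "glass"]]
mask = [[1, 1, 1],
        [0, 1, 1]]
shares = material_percentages(labels, mask)
```

In this toy input, three of the five building pixels are brick and two are glass, so the shares are 60 % and 40 %.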

Model Training & Performance

Part B: Height Prediction and Material Quantification

- Geometric Data Collection:

- Input: Building data from OpenStreetMap (OSM) provides essential geometric data, including building footprint area, building category, and coordinates.

- Enhancements: When height data is missing in OSM, we supplement this information using our height prediction model.

- Material Area Calculation:

- Facade Area: Using the material percentages derived from Part A, we calculate the facade area for each material.

- Camera Distance, Building Levels, and Footprint Area: Additional parameters such as camera distance, building levels, and footprint area are integrated to refine the material quantification.
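One simple way to turn material percentages into facade areas is sketched below. It rests on a strong assumption that is not from the project: the footprint is treated as a square, so the perimeter is estimated as four times the square root of the footprint area. Camera distance is not used in this sketch, and the function name `facade_area_by_material` is hypothetical.

```python
import math


def facade_area_by_material(footprint_area_m2, height_m, material_pct):
    """Estimate facade area (m^2) per material from footprint, height, shares."""
    # Assumption: square footprint, so perimeter = 4 * sqrt(area).
    perimeter_m = 4.0 * math.sqrt(footprint_area_m2)
    facade_area_m2 = perimeter_m * height_m
    # Split the total facade area according to the material percentages.
    return {
        material: facade_area_m2 * pct / 100.0
        for material, pct in material_pct.items()
    }


areas = facade_area_by_material(
    footprint_area_m2=100.0,
    height_m=10.0,
    material_pct={"brick": 60.0, "glass": 40.0},
)
```

For a 100 m² footprint and a 10 m height, the estimated facade is 400 m², of which 240 m² is brick and 160 m² is glass. A real footprint polygon from OSM would give a more accurate perimeter than the square approximation.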

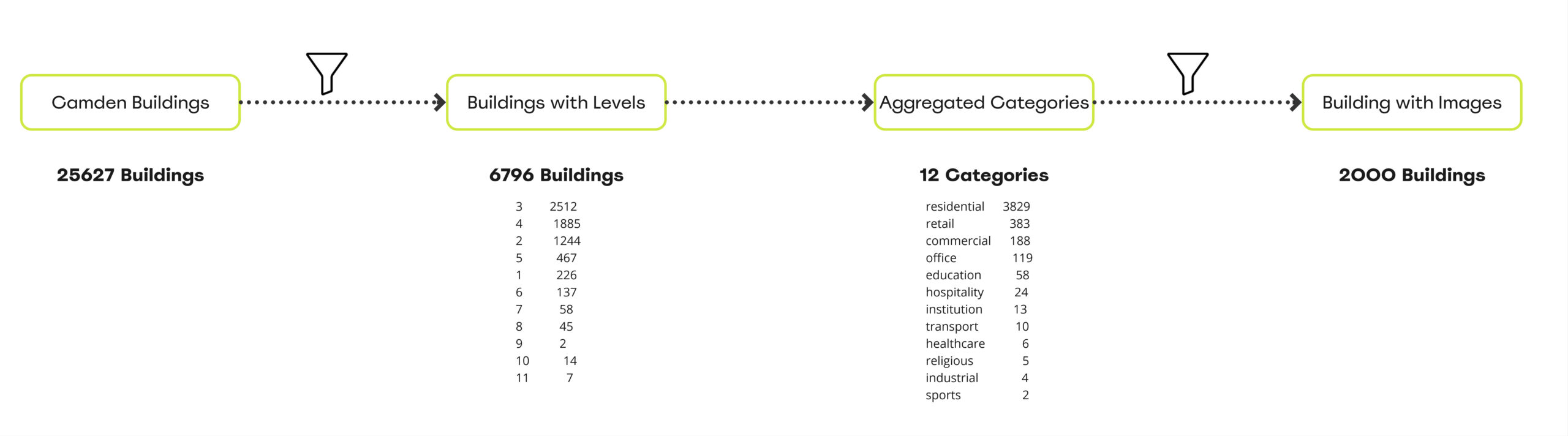

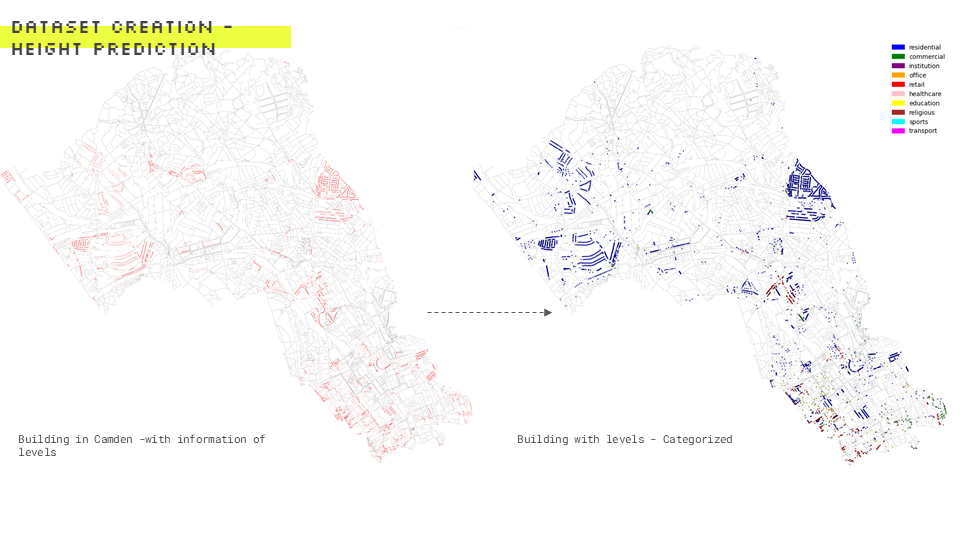

Dataset Creation

Model Training & Performance

Combined Workflow for Deployment

- Integration into ML Model:

- Input Aggregation: All the data from Parts A and B, including material areas, geometric data, and height predictions, are fed into a machine learning model.

- Model Training: The ML model is trained to predict the material quantities based on the aggregated inputs.

- Output: The model outputs predicted data, including material percentages and predicted building height.

- Deployment:

- Web Application: The final step involves deploying the model through a Flask-based web application.

- User Interaction: Users can upload images and provide building coordinates through a user-friendly interface to receive detailed material quantification and analysis.

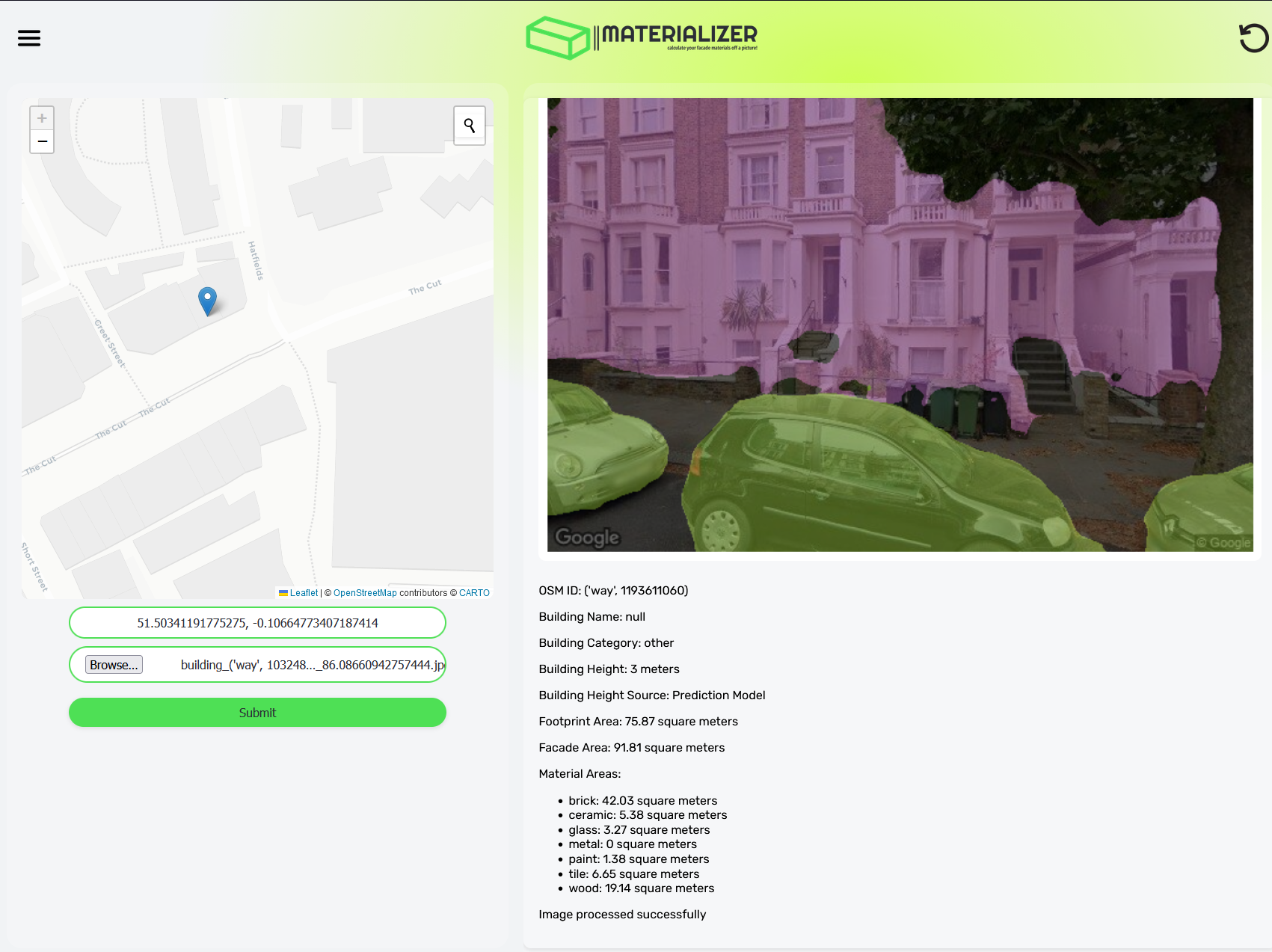

Current Deployment

Our current deployment is a web application built using Flask. Users can upload images and enter building coordinates through a user-friendly interface. The backend processes these inputs using our trained models to deliver material quantity predictions.

Workflow

- Upload Image and Enter Coordinates:

- Users upload an image of the building and provide the coordinates.

- Image Processing:

- The image segmentation model isolates the building.

- The image classification model identifies and quantifies materials in the building.

- Height Prediction:

- If the building height is unavailable, our model predicts it using the provided data.

- Material Quantification:

- The application calculates the total quantities of each material based on the predicted height and identified material percentages.
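The height step in this workflow amounts to a fallback: use the OSM value when it exists, otherwise ask the model. The sketch below illustrates that logic under assumptions. It reads the standard OSM `height` tag, which is given in metres by default; the function name `resolve_height` and the `predict_height` callback are hypothetical stand-ins for the project's own code.

```python
def resolve_height(osm_tags, predict_height):
    """Return (height_m, source), preferring OSM data over the model."""
    raw = osm_tags.get("height")
    if raw is not None:
        try:
            # Accept plain numbers and values with a metre suffix, e.g. "12 m".
            return float(str(raw).replace("m", "").strip()), "osm"
        except ValueError:
            pass  # unparseable value (e.g. feet notation): fall back to model
    # Hypothetical callback wrapping the trained height prediction model.
    return float(predict_height()), "predicted"


from_osm = resolve_height({"height": "12.5"}, lambda: 9.0)
with_unit = resolve_height({"height": "12 m"}, lambda: 9.0)
from_model = resolve_height({"building": "yes"}, lambda: 9.0)
```

Returning the source alongside the value lets the interface tell users whether a height was measured or predicted.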

Conclusion

Materializer brings material quantification and analysis of the built environment into a single workflow. By combining segmentation, classification, and height prediction models, we provide AEC professionals and sustainability experts with the data they need to make informed decisions. As we move towards deploying this technology in a mobile application, we aim to change how material data is accessed and used in the industry.

- Accuracy and Efficiency:

- Our models achieve high accuracy in material identification and height prediction, ensuring reliable results.

- The web application processes inputs swiftly, providing users with quick and actionable data.

- User-Friendly Interface:

- The intuitive design of our web application makes it accessible to users with varying levels of technical expertise.

- Future Potential:

- The transition to a mobile app will further enhance the usability and reach of Materializer, making it an indispensable tool for on-site assessments.

Next Steps

- Improve Image Classification Model:

- Enhance the accuracy and range of materials identified by incorporating more training data and refining algorithms.

- AI Vision Analytics:

- Utilize AI to extract additional information about buildings and materials.

- Sustainability Integration:

- Translate material statistics into sustainability metrics such as embodied carbon.

- Accessibility:

- Develop a mobile app to make Materializer widely accessible to professionals in the field.
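As a rough illustration of the sustainability step, material areas could be multiplied by per-material carbon factors. The sketch below is an assumption about how that might look, not a planned implementation: the function name `embodied_carbon_kg` is hypothetical, and the factor values in the example are placeholders for demonstration, not real emission data.

```python
def embodied_carbon_kg(material_area_m2, factors_kg_per_m2):
    """Sum area * factor per material; report materials without a factor."""
    total = 0.0
    missing = []
    for material, area in material_area_m2.items():
        factor = factors_kg_per_m2.get(material)
        if factor is None:
            missing.append(material)  # no factor available, skip this material
            continue
        total += area * factor
    return total, missing


# Placeholder factors (kg CO2e per m^2), NOT real data.
placeholder_factors = {"brick": 2.0, "glass": 5.0}
total_kg, missing = embodied_carbon_kg(
    {"brick": 240.0, "glass": 160.0, "wood": 10.0},
    placeholder_factors,
)
```

Reporting the materials that lack a factor keeps gaps visible instead of silently undercounting the total.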