Introduction

This project centres on a robotic drawing system that uses a brush to create adaptive visual results. It focuses on turning basic painting actions, such as dipping, stroking, and rotating, into clear, programmable steps. By combining digital design tools with robotic movement, the work explores how changes in brush angle, paint material, and surface conditions shape the patterns that appear. Through repeated testing, the project develops a method for producing complex, non-repetitive drawings. The study aims to expand understanding of how automated systems can work with physical materials and how simple motions can lead to richer forms of mark-making.

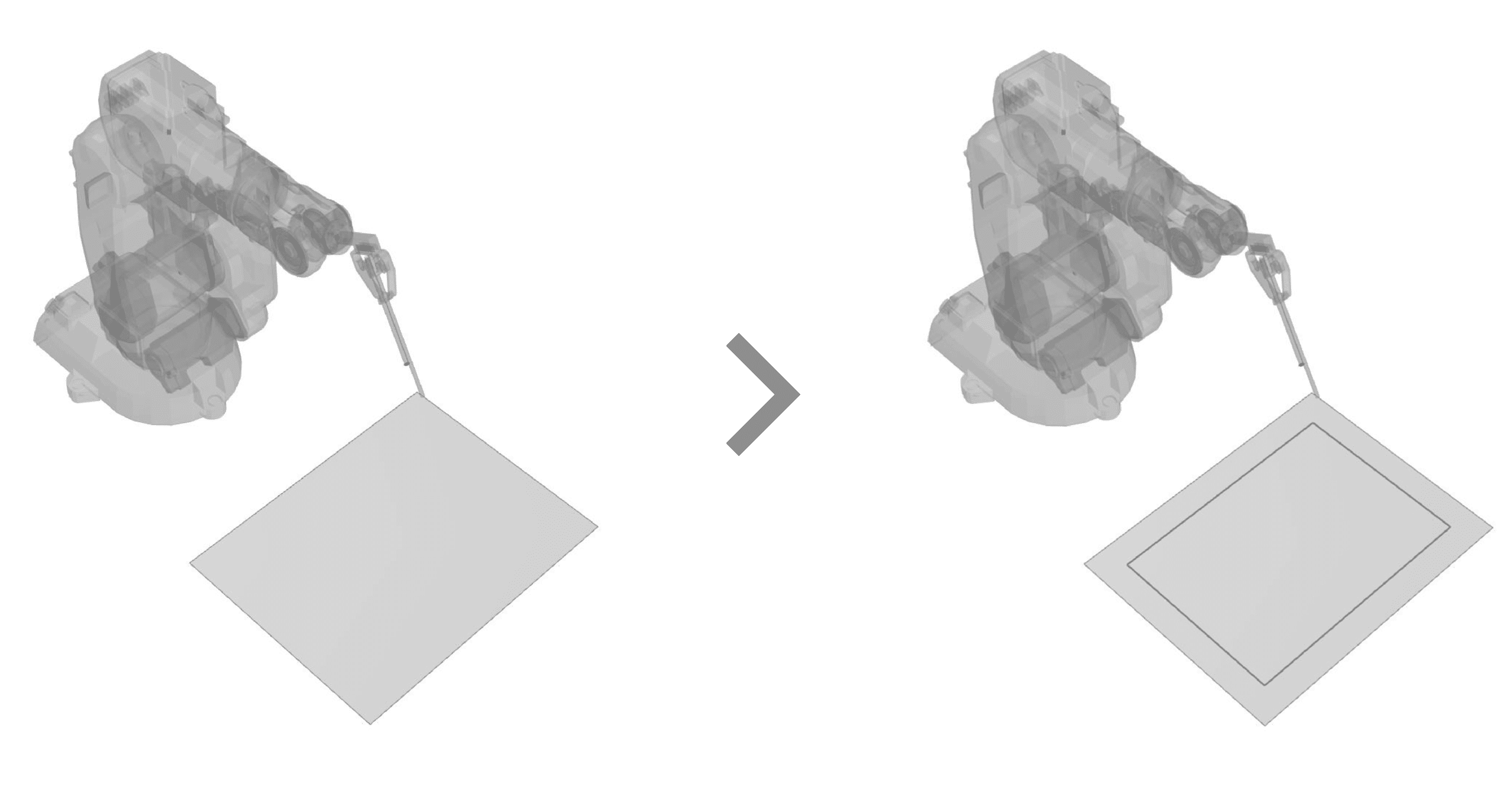

Step 01: Generating Workspace

The workflow begins by defining a workspace for the ABB-140 robot, followed by creating a safer, more controlled zone within that space where the brush and paint operations can take place.
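As a rough illustration of the idea, the workspace and the inner zone can be modelled as two axis-aligned boxes, with the inner one obtained by shrinking the outer one by a fixed margin. This is a minimal sketch in plain Python, not the project's actual Rhino definition: the `Box` class, the dimensions, and the 50 mm margin are all assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned box given by its min and max corners (x, y, z) in mm."""
    lo: tuple
    hi: tuple

    def inset(self, margin):
        """Return a smaller box shrunk by `margin` on every side."""
        lo = tuple(a + margin for a in self.lo)
        hi = tuple(b - margin for b in self.hi)
        if any(a >= b for a, b in zip(lo, hi)):
            raise ValueError("margin too large for this box")
        return Box(lo, hi)

    def contains(self, point):
        """True if the point lies inside the box or on its boundary."""
        return all(a <= c <= b for a, c, b in zip(self.lo, point, self.hi))


# Hypothetical dimensions, not measured from the real robot cell.
workspace = Box((300.0, -300.0, 0.0), (700.0, 300.0, 400.0))
safe_zone = workspace.inset(50.0)
```

A point near the edge of the workspace, such as `(310.0, 0.0, 10.0)`, passes `workspace.contains` but fails `safe_zone.contains`, which is the kind of check that keeps brush and paint operations away from the boundary.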

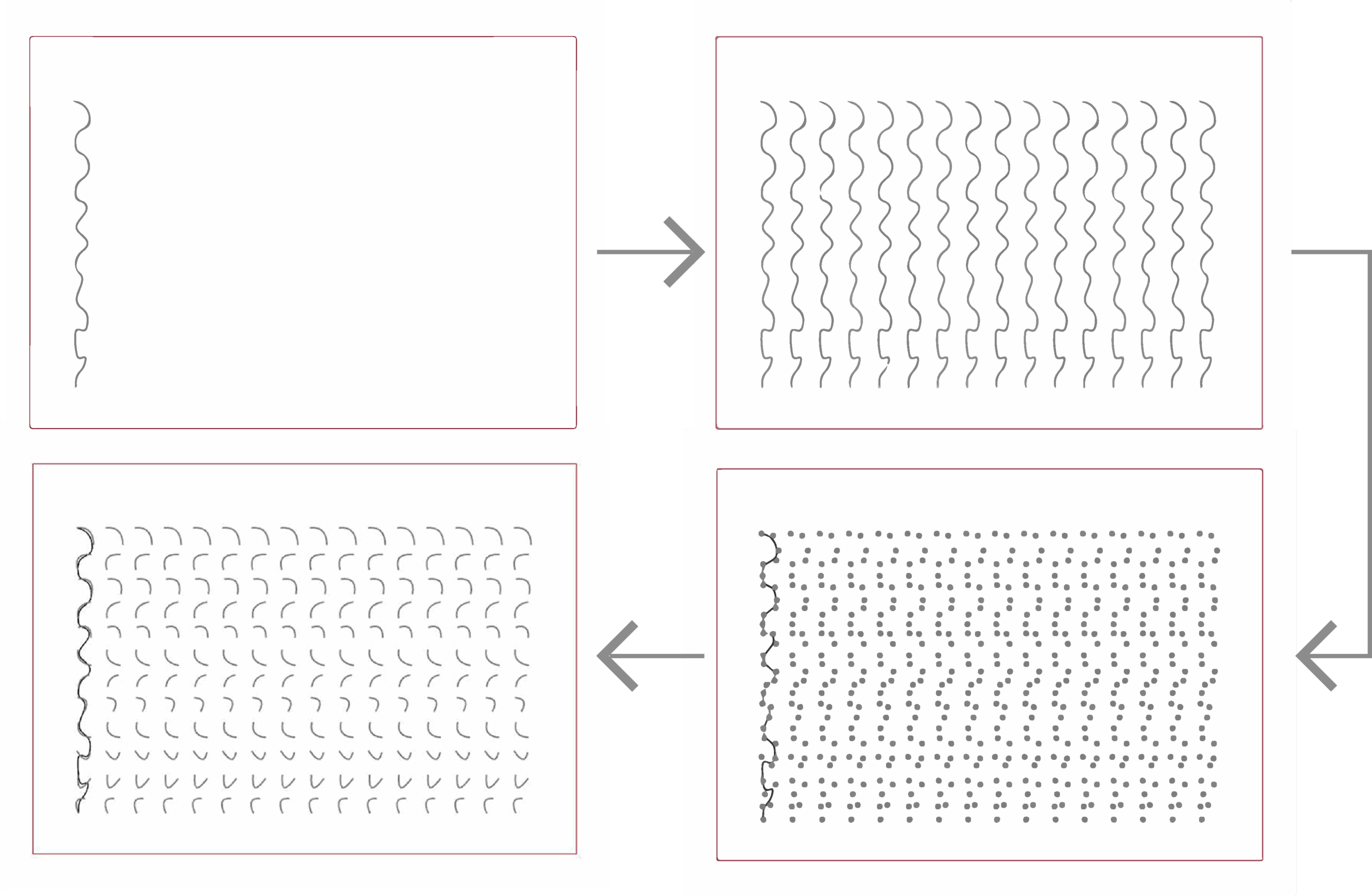

Step 02: Generating Strokes

The workflow continues by creating a base curve in Rhino and repeating it within the workspace to generate the overall layout. The base curve is then subdivided into equal intervals, producing sets of three-point curves used to compute precise stroke trajectories. These trajectories establish the geometric framework that guides the ABB-140’s controlled brush movements.
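The subdivision and grouping described above can be sketched outside Rhino as follows. This is an assumption-laden illustration: the sine-wave `base_curve`, the interval count, and the row spacing are hypothetical, the curve is sampled at equal parameter intervals rather than equal arc lengths, and grouping the points into triples that share end points is only one plausible reading of "three-point curves".

```python
import math


def base_curve(t):
    """Hypothetical base curve: one sine wave, 200 mm long, 30 mm amplitude."""
    return (200.0 * t, 30.0 * math.sin(2.0 * math.pi * t))


def sample_curve(curve, n_intervals):
    """Evaluate curve(t) at n_intervals + 1 evenly spaced parameters in [0, 1]."""
    return [curve(i / n_intervals) for i in range(n_intervals + 1)]


def three_point_sets(points):
    """Group points into (start, mid, end) triples; neighbours share an end point."""
    return [tuple(points[i:i + 3]) for i in range(0, len(points) - 2, 2)]


def repeat(points, count, spacing):
    """Copy a point list `count` times, offsetting each copy in y by `spacing`."""
    return [[(x, y + k * spacing) for x, y in points] for k in range(count)]


points = sample_curve(base_curve, 8)   # 9 points along the base curve
strokes = three_point_sets(points)     # 4 three-point strokes
layout = repeat(points, 5, 40.0)       # 5 rows, 40 mm apart
```

Sharing end points between neighbouring triples keeps consecutive strokes connected; non-overlapping triples would instead leave a gap where the brush could lift.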

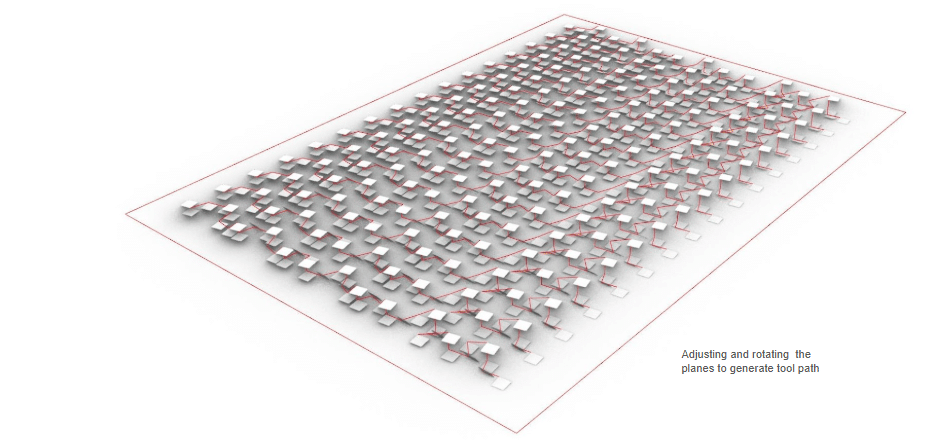

Step 03: Generating Tool Path

Next, construction planes were generated at every point derived from the three-point curves, establishing localised coordinate systems for defining brush orientation. The axes of these planes were then remapped, enabling controlled adjustments in rotation and height. By gradually varying these parameters, a series of distinct trajectory profiles was created, each influencing the brush’s movement behaviour. These planes were subsequently connected and interpolated to form the final continuous tool path. This path provides the geometric and orientation framework needed for the paintbrush to glide smoothly through the workspace under the programmed motion of the robot.
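One way to picture this step is to attach a rotation and a height to every point, vary both gradually along the stroke, and then interpolate between the resulting targets. The sketch below assumes a simplified plane described by a position, a rotation about the world Z axis in degrees, and a height in mm; the function names and the linear ramps are illustrative choices, not the project's Grasshopper definition. Linear interpolation of the angle is only reasonable for small rotation ranges like the one used here.

```python
import math


def lerp(a, b, t):
    """Linear interpolation between a and b for t in [0, 1]."""
    return a + (b - a) * t


def make_targets(points, rot_range, z_range):
    """Attach a rotation (deg about Z) and height (mm) to each (x, y) point,
    ramping both linearly from the first point to the last."""
    n = len(points)
    targets = []
    for i, (x, y) in enumerate(points):
        t = i / (n - 1) if n > 1 else 0.0
        targets.append({"x": x, "y": y,
                        "z": lerp(z_range[0], z_range[1], t),
                        "rot": lerp(rot_range[0], rot_range[1], t)})
    return targets


def densify(targets, steps):
    """Insert linearly interpolated targets, `steps` segments per original pair."""
    path = []
    for a, b in zip(targets, targets[1:]):
        for s in range(steps):
            path.append({k: lerp(a[k], b[k], s / steps) for k in a})
    path.append(dict(targets[-1]))
    return path


def x_axis(target):
    """Unit X axis of the target plane after rotating about world Z."""
    r = math.radians(target["rot"])
    return (math.cos(r), math.sin(r), 0.0)


# Hypothetical stroke: brush turns from 0 to 90 degrees while pressing down 8 mm.
targets = make_targets([(0.0, 0.0), (100.0, 0.0), (200.0, 0.0)], (0.0, 90.0), (10.0, 2.0))
path = densify(targets, 4)
```

Changing `rot_range` and `z_range` per stroke gives a family of trajectory profiles from the same underlying points, which mirrors the gradual parameter variation described above.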

Step 04: Simulation

Finally, a simulation was generated to visualise the robot’s expected output. This preview allowed the team to evaluate how the computed tool path would translate into real brush movements, identify potential errors, and confirm that the planned trajectories, orientations, and material interactions would perform correctly before executing the process on the robot.
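A full simulation needs the robot's kinematic model, but some of the checks mentioned above can be approximated with simple geometry before that stage. The following sketch flags targets that leave a safe zone, exceed a reach radius measured from the robot base at the origin, or jump too far from the previous target. The function, the zone corners, and the reach and step limits are assumed values for illustration, not specifications of the robot used in the project.

```python
import math


def check_path(path, lo, hi, max_reach, max_step):
    """Return (index, reason) pairs for problems in a list of (x, y, z) targets."""
    problems = []
    for i, p in enumerate(path):
        if not all(a <= c <= b for a, c, b in zip(lo, p, hi)):
            problems.append((i, "outside safe zone"))
        if math.dist((0.0, 0.0, 0.0), p) > max_reach:
            problems.append((i, "beyond reach"))
        if i > 0 and math.dist(path[i - 1], p) > max_step:
            problems.append((i, "jump too large"))
    return problems


# Hypothetical limits in mm; the last target is deliberately invalid.
zone_lo, zone_hi = (350.0, -250.0, 0.0), (650.0, 250.0, 350.0)
test_path = [(400.0, 0.0, 50.0), (420.0, 0.0, 50.0), (900.0, 0.0, 50.0)]
problems = check_path(test_path, zone_lo, zone_hi, max_reach=800.0, max_step=50.0)
```

An empty result only means these geometric checks passed; joint limits, singularities, and collisions still have to be confirmed in the actual robot simulation.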

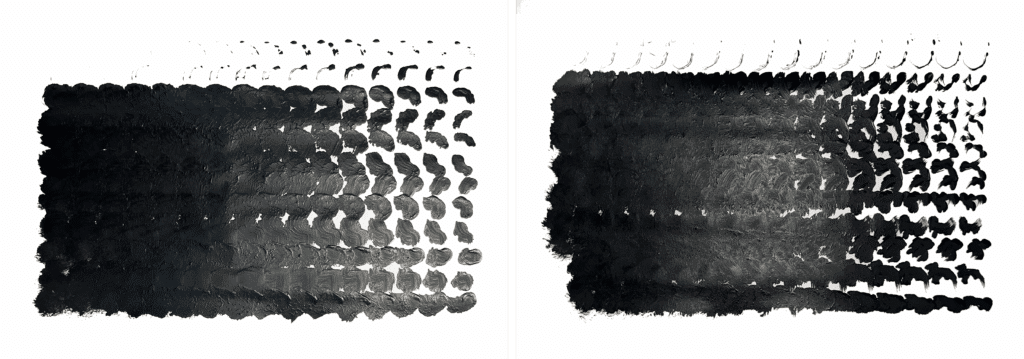

Final Result