GitHub: https://github.com/j-albo/robotic-3d-space-analysis

INTRODUCTION

Scanning irregular terrain with modern 3D scanning technology is crucial for obtaining precise environmental models, which in turn supports better planning and execution of architectural projects. High-resolution capture makes it possible to map complex surfaces and detect floor-level variations that are imperceptible to the human eye, improving efficiency and reducing errors in design and construction.

WHY USE A MOBILE ROBOT?

We chose the Go2 robot dog for its mobility, which lets it adapt easily to uneven terrain, slopes, and obstacles. Its agility allows efficient navigation in complex environments where manual and fixed scanning tools struggle. In addition, its lightweight and cost-effective design improves terrain exploration and operational flexibility.

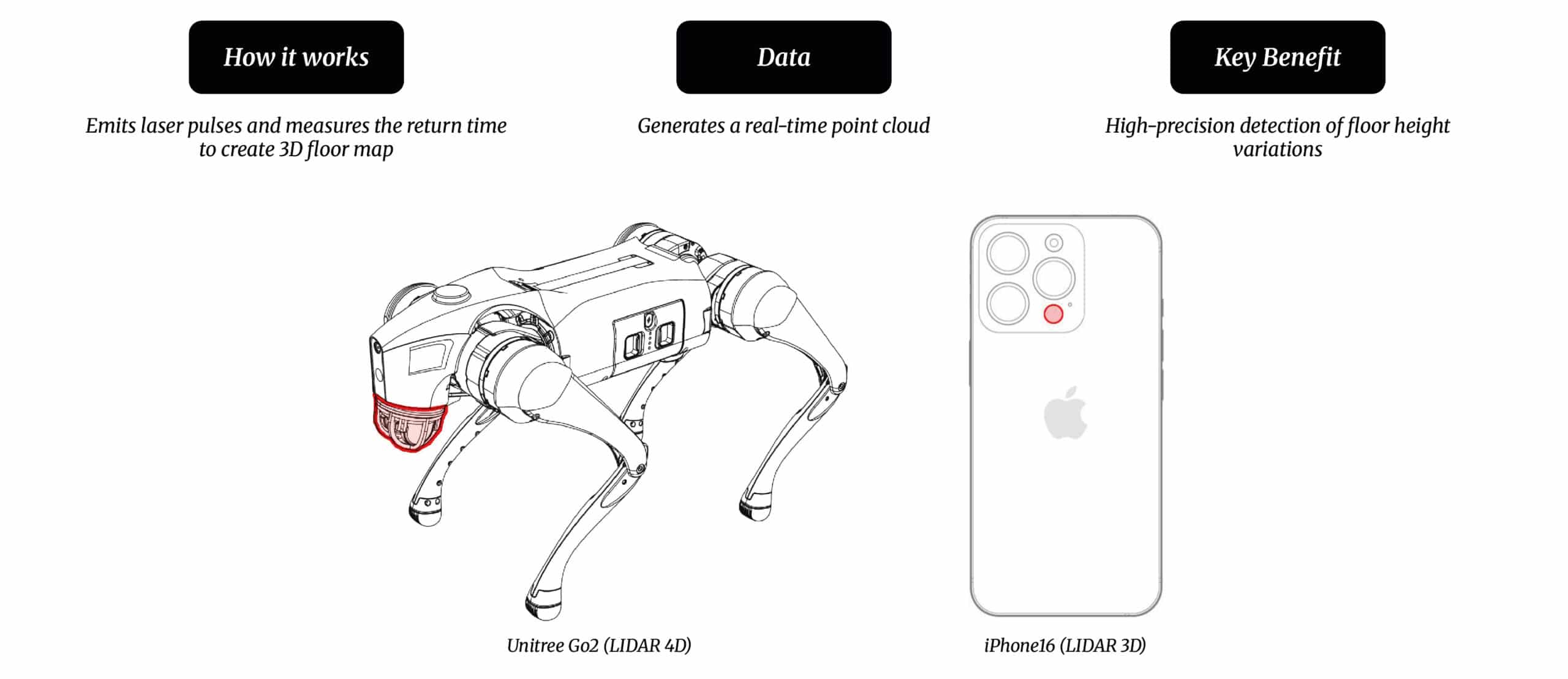

It supports real-time, high-precision scanning with LiDAR, depth cameras, and IMU sensors, accurately detecting slopes, uneven terrain, and obstacles to generate detailed 3D models. Its capacity for autonomous operation could enable large-area mapping with minimal human intervention, optimizing surveying and construction planning.

SENSORS USED DURING THE PROCESS

We used LiDAR sensors for precise floor scanning and terrain mapping. The Unitree Go2's 4D LiDAR provided real-time 3D mapping of height variations, while the iPhone 16's 3D LiDAR, used with the Polycam app, added a second source of depth data for comparison.

PROOF OF CONCEPT

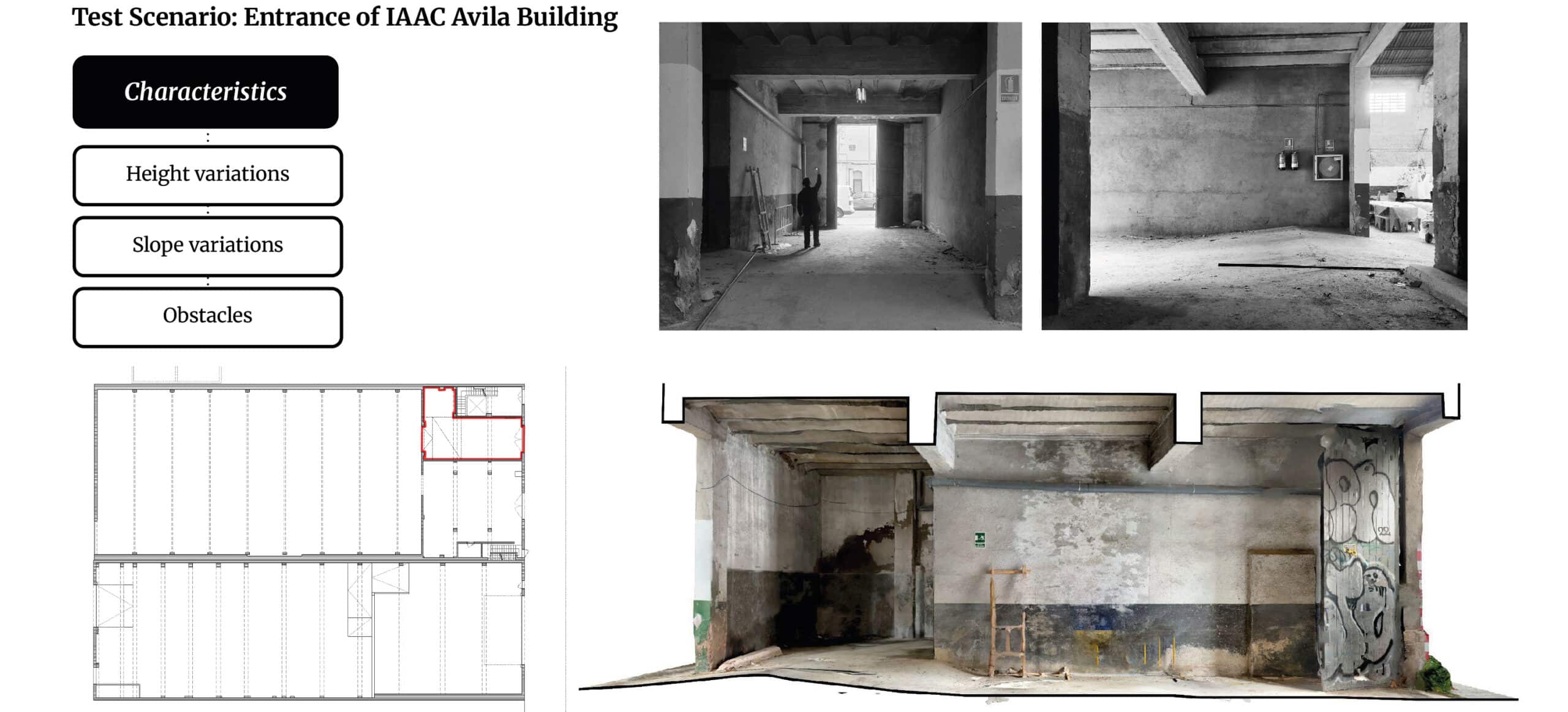

We selected the entrance of the new IAAC Ávila building as our scanning scenario due to its multiple height variations, slope changes, and obstacles. These challenges provided an ideal test environment to evaluate the Go2 robot’s mobility and LiDAR accuracy in mapping complex terrains.

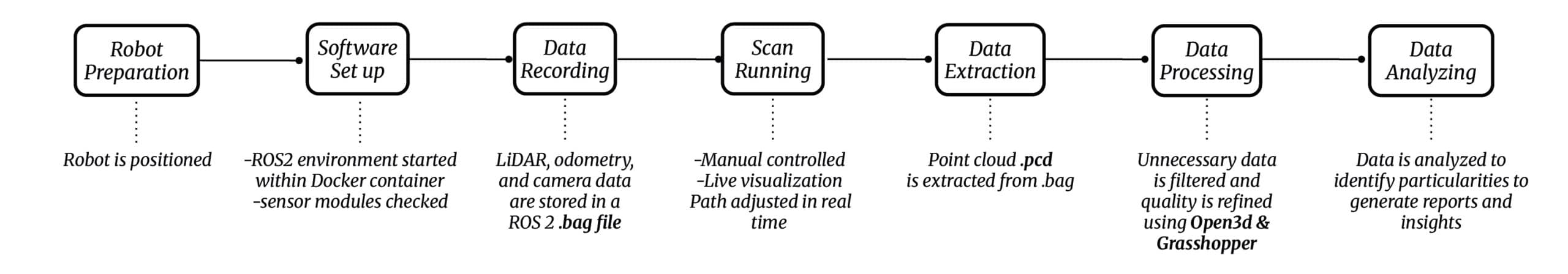

CONCEPT OF OPERATIONS

Our workflow begins with robot positioning and software setup: initializing the ROS2 environment and checking the sensors. LiDAR, odometry, and camera data are then recorded while the robot's path is adjusted manually in real time. Finally, the point cloud is extracted, processed with Open3D and Grasshopper, and analyzed to generate reports and insights.

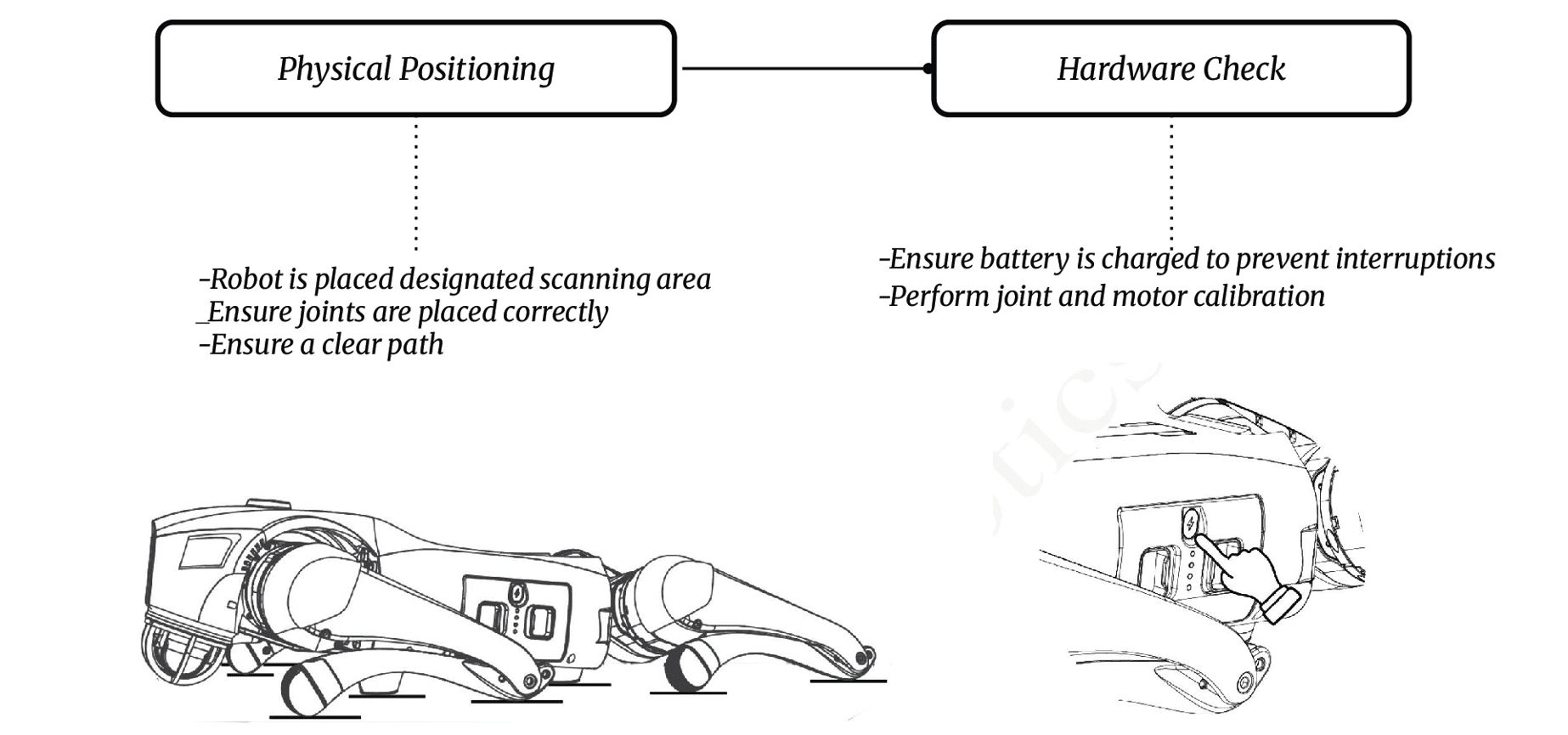

ROBOT PREPARATION

Robot preparation involves positioning the robot in the designated scanning area, checking that its joints are correctly placed, and verifying a clear path. The battery is also charged, and joint and motor calibration is performed to prevent interruptions during the scan.

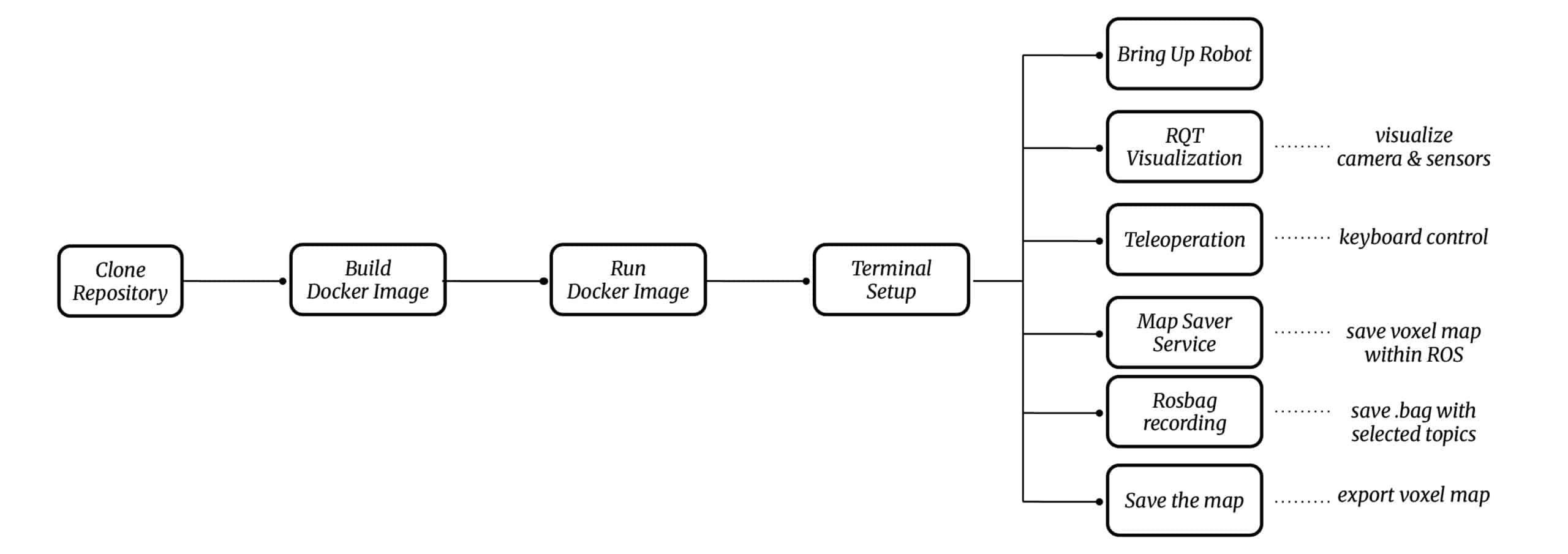

SOFTWARE SETUP

The software setup involves cloning the repository, building and running a Docker image, and configuring the ROS2 environment from the terminal. This setup enables robot activation, sensor visualization, teleoperation, map saving, and data recording for accurate terrain mapping.

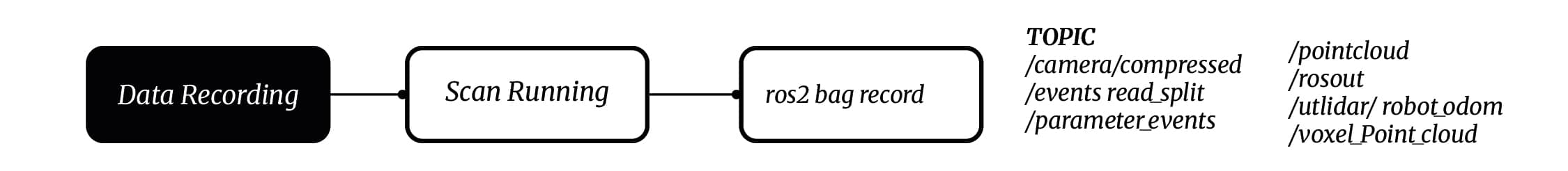

DATA RECORDING

We initiate the scanning process, capturing and storing LiDAR point clouds, odometry measurements, and camera data in a ROS2 bag file. This ensures a structured dataset for precise terrain mapping, localization, and post-processing analysis.
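As a rough illustration of the recording step, the sketch below builds and launches a `ros2 bag record` command for the three data streams. The topic names are hypothetical placeholders (check the real ones on the robot with `ros2 topic list`), and the output folder name is made up for the example.

```python
from __future__ import annotations

import shlex
import subprocess

# Hypothetical topic names, for illustration only: replace with the ones
# reported by `ros2 topic list` on the robot.
TOPICS = [
    "/point_cloud2",       # LiDAR point clouds (sensor_msgs/PointCloud2)
    "/odom",               # odometry (nav_msgs/Odometry)
    "/camera/image_raw",   # camera frames (sensor_msgs/Image)
]


def build_record_command(output_dir: str, topics: list[str]) -> list[str]:
    """Return the argv for `ros2 bag record`, writing the bag to output_dir."""
    if not topics:
        raise ValueError("at least one topic is required")
    return ["ros2", "bag", "record", "-o", output_dir, *topics]


def start_recording(output_dir: str, topics: list[str] = TOPICS) -> subprocess.Popen:
    """Start recording in the background.

    Stop it later with proc.send_signal(signal.SIGINT), the equivalent of
    pressing Ctrl+C, so the bag is closed cleanly.
    """
    return subprocess.Popen(build_record_command(output_dir, topics))


if __name__ == "__main__":
    # Print the command instead of running it, so it can be checked first.
    print(shlex.join(build_record_command("entrance_scan", TOPICS)))
```

Listing topics explicitly, rather than recording everything, keeps the bag limited to the LiDAR, odometry, and camera data the later steps actually use.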

SCAN RUNNING

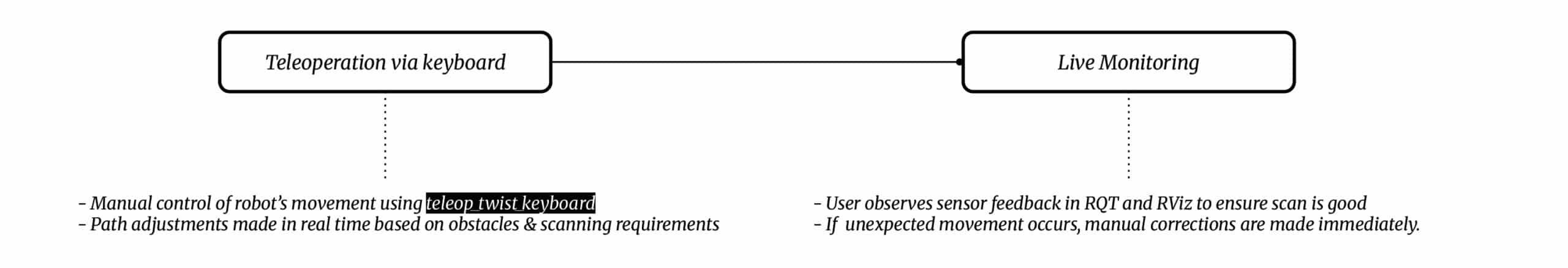

The scan running process involves manual teleoperation via keyboard, with real-time path adjustments based on obstacles and scanning needs. The user monitors sensor feedback in RQT and RViz, making immediate corrections if unexpected movements occur. Future autonomous scanning will enable predefined path planning, obstacle avoidance, and adaptive resolution scanning without human intervention.
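Keyboard teleoperation boils down to mapping key presses to velocity commands. The sketch below shows that mapping for an omnidirectional legged robot. The key bindings and speed limits are hypothetical, and the step that publishes these values to the robot over ROS2 is intentionally left out.

```python
from dataclasses import dataclass

# Hypothetical speed limits, for illustration only.
MAX_LINEAR = 0.5   # m/s
MAX_ANGULAR = 1.0  # rad/s


@dataclass(frozen=True)
class VelocityCommand:
    vx: float = 0.0  # forward (+) / backward (-)
    vy: float = 0.0  # left (+) / right (-), following the ROS axis convention
    wz: float = 0.0  # yaw rate, counterclockwise (+)


# Hypothetical bindings: unit direction (vx, vy, wz) for each key.
KEY_BINDINGS = {
    "w": (1.0, 0.0, 0.0),
    "s": (-1.0, 0.0, 0.0),
    "a": (0.0, 1.0, 0.0),
    "d": (0.0, -1.0, 0.0),
    "q": (0.0, 0.0, 1.0),
    "e": (0.0, 0.0, -1.0),
}


def key_to_command(key: str, speed_scale: float = 0.5) -> VelocityCommand:
    """Map one key press to a velocity command; any unknown key stops the robot."""
    scale = min(max(speed_scale, 0.0), 1.0)  # clamp to [0, 1] for safety
    direction = KEY_BINDINGS.get(key.lower())
    if direction is None:
        return VelocityCommand()  # all zeros: stop
    dx, dy, dyaw = direction
    return VelocityCommand(
        vx=dx * MAX_LINEAR * scale,
        vy=dy * MAX_LINEAR * scale,
        wz=dyaw * MAX_ANGULAR * scale,
    )
```

Treating any unrecognized key as "stop" is a conservative default that fits the immediate corrections described above when the robot moves unexpectedly.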

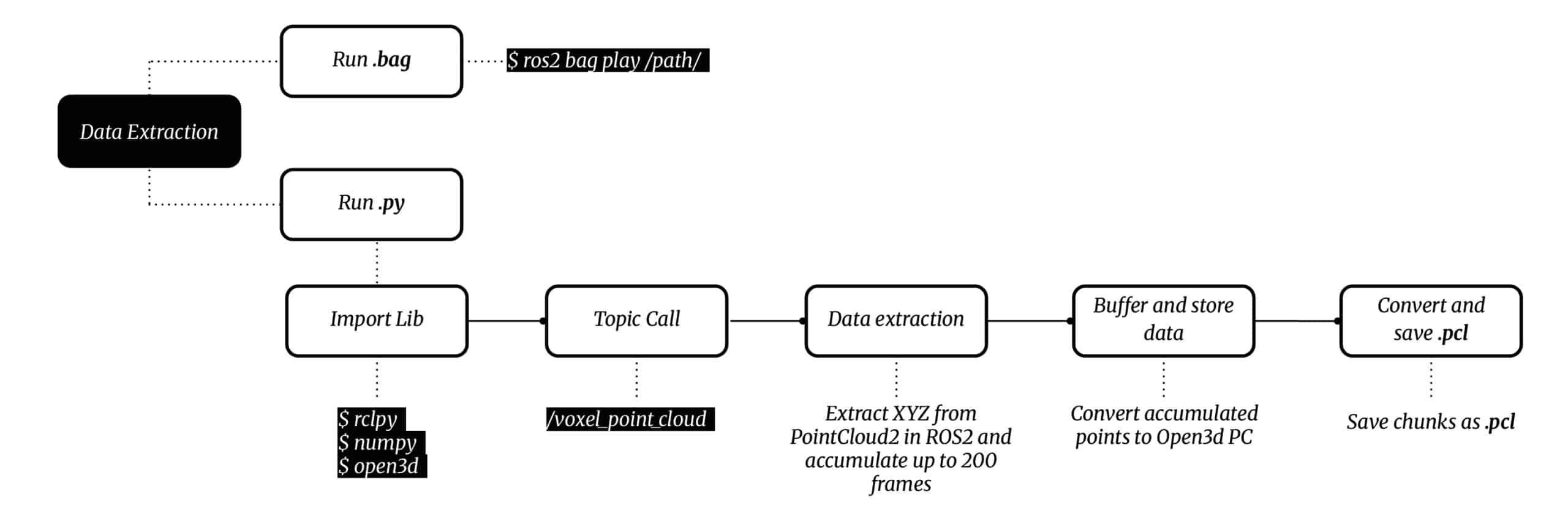

DATA EXTRACTION

The data extraction process begins by playing the ROS2 bag file, running a Python script to extract PointCloud2 data, and accumulating up to 200 frames. The data is then converted into Open3D format, buffered, and saved in .pcl chunks for further processing.
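The extraction step can be sketched in NumPy: decode the x, y, z fields from a raw PointCloud2 data buffer, then group frames into chunks of up to 200. This sketch assumes float32 x/y/z fields at byte offsets 0, 4, and 8; in a real message, the offsets, `point_step`, and endianness should be read from the message's `fields`, `point_step`, and `is_bigendian`. It is not the project's own script.

```python
from __future__ import annotations

import numpy as np

FRAMES_PER_CHUNK = 200  # the write-up accumulates up to 200 frames per chunk


def decode_xyz(data: bytes, point_step: int, offsets=(0, 4, 8), little_endian: bool = True) -> np.ndarray:
    """Decode float32 x, y, z fields from a raw PointCloud2 buffer into an (N, 3) array."""
    endian = "<" if little_endian else ">"
    dtype = np.dtype({
        "names": ["x", "y", "z"],
        "formats": [endian + "f4"] * 3,
        "offsets": list(offsets),
        "itemsize": point_step,  # skip any extra fields (intensity, ring, ...)
    })
    records = np.frombuffer(data, dtype=dtype)
    xyz = np.stack([records["x"], records["y"], records["z"]], axis=1).astype(np.float64)
    return xyz[np.isfinite(xyz).all(axis=1)]  # drop NaN/inf returns


class ChunkBuffer:
    """Accumulate per-frame point arrays and emit one merged chunk per N frames."""

    def __init__(self, frames_per_chunk: int = FRAMES_PER_CHUNK):
        self.frames_per_chunk = frames_per_chunk
        self._frames = []   # frames waiting to be merged
        self.chunks = []    # completed (M, 3) chunks

    def add_frame(self, xyz: np.ndarray) -> None:
        self._frames.append(xyz)
        if len(self._frames) >= self.frames_per_chunk:
            self.flush()

    def flush(self) -> None:
        """Merge pending frames into a chunk (call once more at the end of the bag)."""
        if self._frames:
            self.chunks.append(np.vstack(self._frames))
            self._frames = []
```

Each chunk could then be wrapped as `o3d.geometry.PointCloud(o3d.utility.Vector3dVector(chunk))` and written with `o3d.io.write_point_cloud`. Chunking keeps memory bounded while the bag is replayed.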

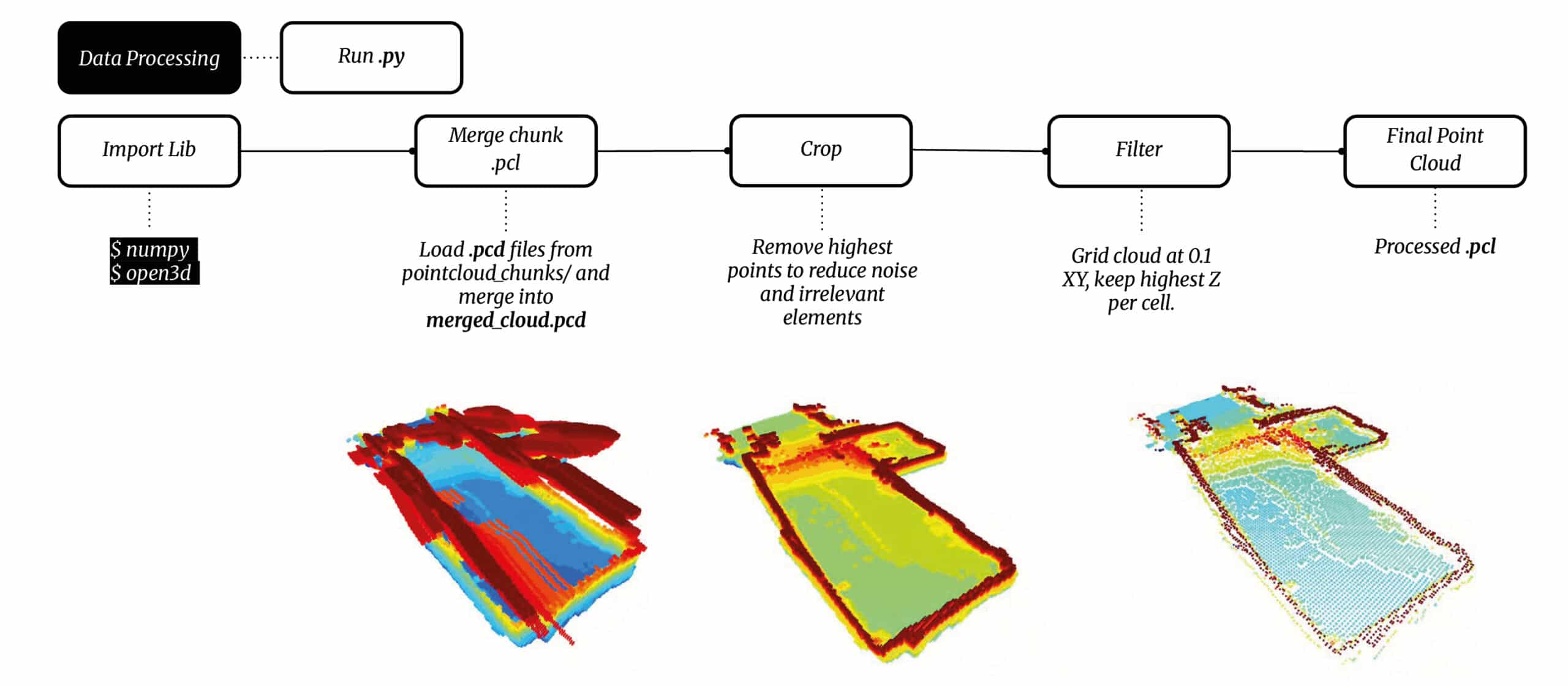

DATA PROCESSING

The data processing phase uses Open3D to refine the point cloud by merging the chunks, cropping out noise, and filtering unnecessary data. The cloud is then gridded at a 0.1 m XY resolution, keeping only the highest Z value in each cell, which produces a cleaned and structured .pcl file for further analysis.
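The merge, crop, and max-Z gridding steps can be sketched with plain NumPy. Open3D's `voxel_down_sample` averages the points in each voxel rather than keeping the highest one, so this sketch does the gridding by hand. The crop bounds are assumed to be an axis-aligned box around the scan area, and coordinates are assumed to be in meters.

```python
import numpy as np


def crop_box(points: np.ndarray, min_bound, max_bound) -> np.ndarray:
    """Keep only points inside an axis-aligned box; everything outside is treated as noise."""
    lo = np.asarray(min_bound, dtype=float)
    hi = np.asarray(max_bound, dtype=float)
    mask = np.all((points >= lo) & (points <= hi), axis=1)
    return points[mask]


def grid_max_z(points: np.ndarray, cell: float = 0.1) -> np.ndarray:
    """Bin points into cell x cell XY squares and keep the highest point in each."""
    ij = np.floor(points[:, :2] / cell).astype(np.int64)
    # Sort by cell index (i, then j), then by descending z, so the first row
    # of each cell's run is its highest point. np.lexsort uses the LAST key as primary.
    order = np.lexsort((-points[:, 2], ij[:, 1], ij[:, 0]))
    ij_sorted = ij[order]
    first = np.ones(len(order), dtype=bool)
    first[1:] = np.any(ij_sorted[1:] != ij_sorted[:-1], axis=1)
    return points[order][first]


def process_chunks(chunks, min_bound, max_bound, cell: float = 0.1) -> np.ndarray:
    """Merge chunks, crop to the scan area, and keep the max-Z point per grid cell."""
    merged = np.vstack(chunks)
    return grid_max_z(crop_box(merged, min_bound, max_bound), cell)
```

Keeping the highest point per cell favors the top surface, which suits floor mapping, but it also keeps isolated high outliers. That is why cropping and filtering happen first.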

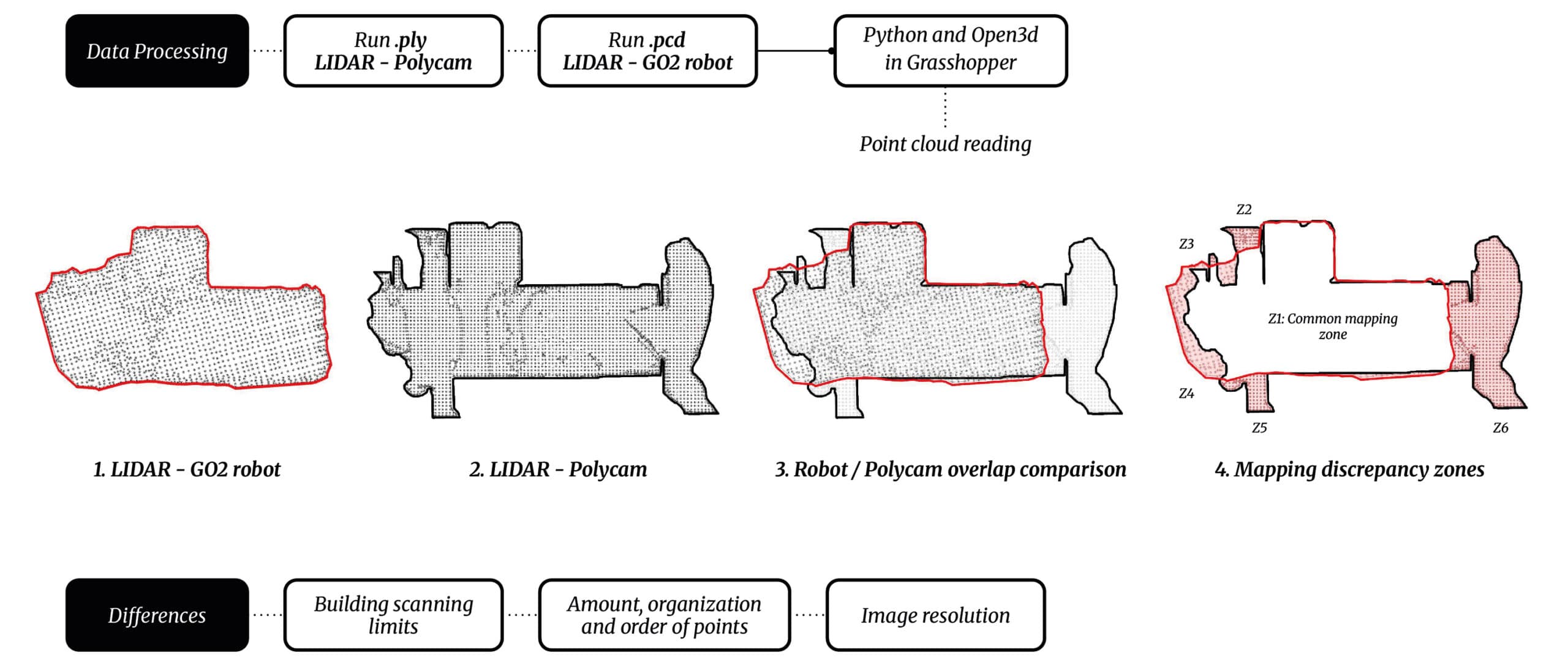

LIDAR DATA COMPARISON

During the process, we compared two scanning systems: the Go2 robot’s LiDAR and Polycam on a mobile phone. By analyzing the point cloud data, mapping discrepancies, and resolution differences, we identified variations in scanning accuracy, point organization, and coverage limitations between the two methods. The robot’s LiDAR provided higher precision and more structured data but produced very heavy point clouds, while Polycam captured denser detail but with less accurate spatial boundaries. Both systems have strengths and weaknesses, and combining their data could improve resolution and mapping reliability for more comprehensive results.
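One simple way to quantify discrepancies between the two scans is a cloud-to-cloud distance: for each phone point, find its distance to the nearest robot point. The sketch below assumes both clouds are already registered in the same frame and units (for example, after ICP alignment), which the raw exports would typically not be. It uses brute force, so it is only practical for downsampled clouds. For full-size clouds, a KD-tree or Open3D's `PointCloud.compute_point_cloud_distance` would be the usual choice.

```python
import numpy as np


def nearest_distances(source: np.ndarray, target: np.ndarray, batch: int = 256) -> np.ndarray:
    """Distance from each source point to its nearest target point (brute force, batched)."""
    if len(target) == 0:
        raise ValueError("target cloud is empty")
    out = np.empty(len(source))
    for start in range(0, len(source), batch):
        block = source[start:start + batch]
        # (B, 1, 3) - (1, M, 3) -> (B, M) squared distances for this batch
        d2 = ((block[:, None, :] - target[None, :, :]) ** 2).sum(axis=2)
        out[start:start + batch] = np.sqrt(d2.min(axis=1))
    return out


def compare_clouds(robot: np.ndarray, phone: np.ndarray) -> dict:
    """Summary statistics of how far the phone scan deviates from the robot scan."""
    d = nearest_distances(phone, robot)
    return {
        "robot_points": len(robot),
        "phone_points": len(phone),
        "mean_distance": float(d.mean()),
        "p95_distance": float(np.percentile(d, 95)),
    }
```

Reporting a high percentile alongside the mean helps separate a generally consistent fit from a few areas, such as the edges of the scan, where the two systems disagree strongly.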

ARCHITECTURAL APPLICATION

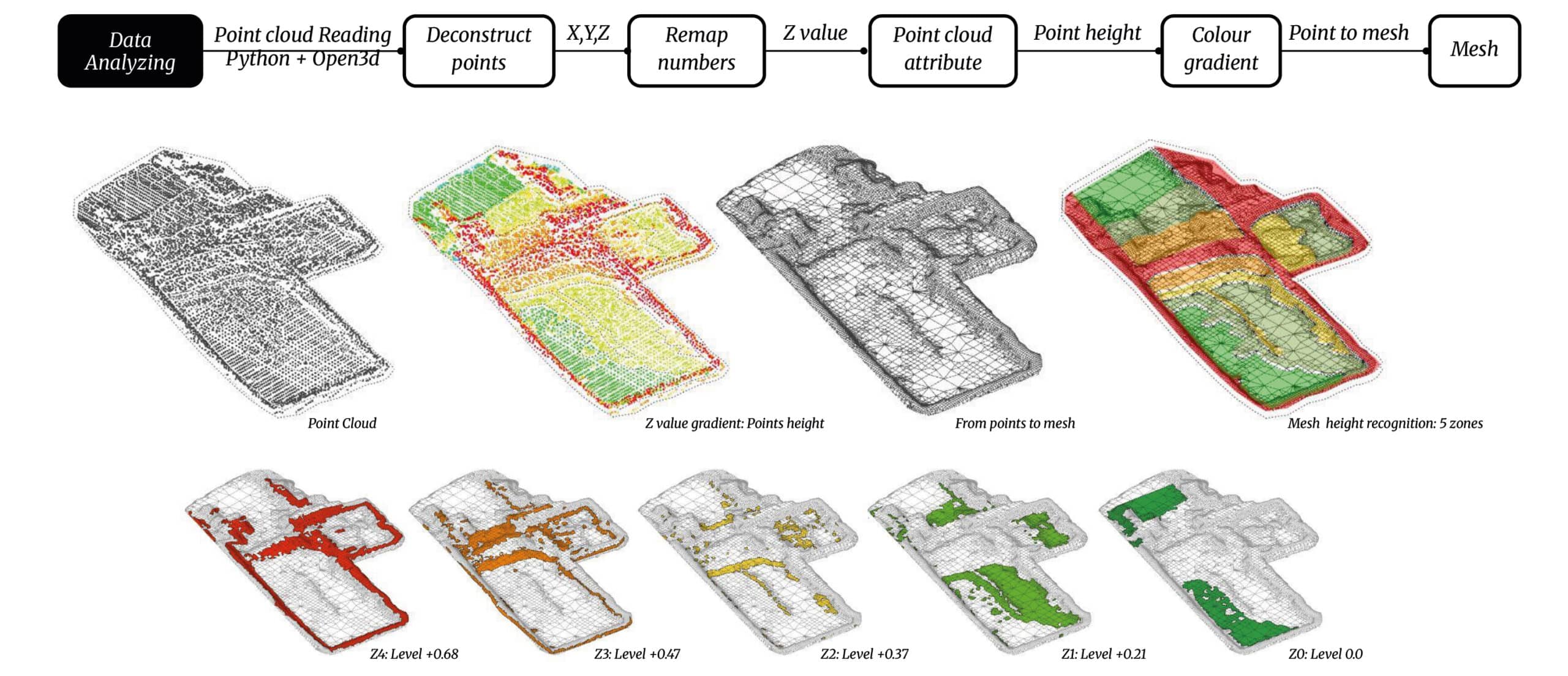

For the architectural application, we processed the point cloud data using Python, Open3D, and Grasshopper, deconstructing the XYZ coordinates to extract height variations. A gradient color mapping was applied to visualize elevation differences, categorizing the terrain into five distinct levels. The processed data was then converted into a mesh representation, allowing for precise floor height segmentation and facilitating architectural refurbishment planning based on existing topography.
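The five-level height classification was done in Grasshopper. A NumPy equivalent might look like the sketch below. It assumes the five levels are equal-width bands between the lowest and highest Z values and that the gradient runs from blue (low) to red (high). The write-up does not specify either choice.

```python
import numpy as np

N_LEVELS = 5


def classify_heights(z, n_levels: int = N_LEVELS) -> np.ndarray:
    """Assign each height to one of n_levels equal-width bands between min(z) and max(z)."""
    z = np.asarray(z, dtype=float)
    lo, hi = z.min(), z.max()
    if hi == lo:
        return np.zeros(len(z), dtype=int)  # perfectly flat: a single level
    edges = np.linspace(lo, hi, n_levels + 1)
    # Digitizing against the inner edges yields 0..n_levels-1; max(z) lands in the top band.
    return np.digitize(z, edges[1:-1])


def level_colors(levels: np.ndarray, n_levels: int = N_LEVELS,
                 low=(0.0, 0.0, 1.0), high=(1.0, 0.0, 0.0)) -> np.ndarray:
    """Linear RGB gradient (values in [0, 1]) from the low color to the high color."""
    t = np.asarray(levels, dtype=float) / max(n_levels - 1, 1)
    return (1.0 - t)[:, None] * np.asarray(low) + t[:, None] * np.asarray(high)
```

The resulting (N, 3) color array is in the 0 to 1 range that Open3D expects for point colors. Equal-width bands are easy to read but sensitive to outliers, so quantile-based bands may be a better fit for a noisy scan.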

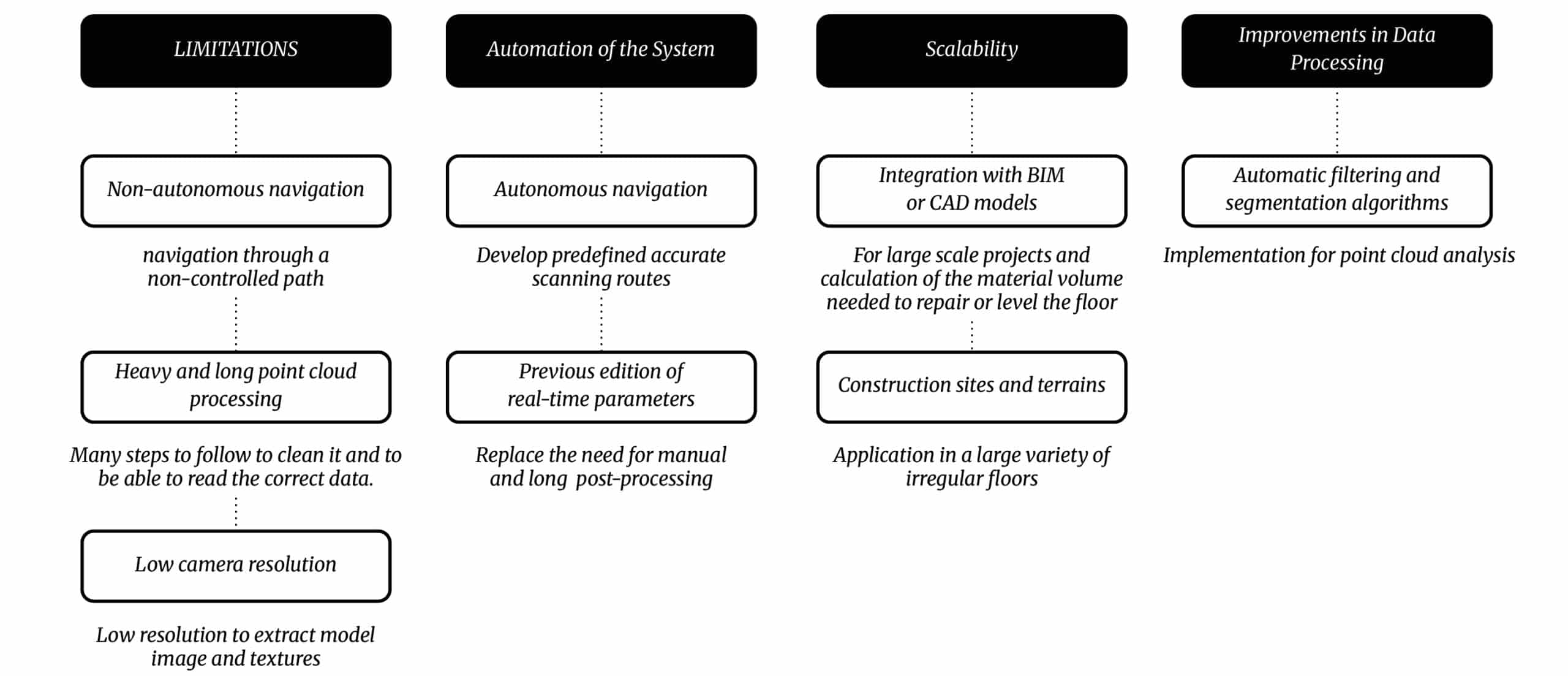

LIMITATIONS AND FURTHER STEPS

The current scanning workflow is effective but limited by manual navigation, time-consuming point cloud processing, and low camera resolution. Future improvements include autonomous navigation, integration with BIM/CAD for large-scale projects, and automatic filtering algorithms to improve efficiency. These advancements would streamline data processing, improve accuracy, and enable broader applications in construction and architectural refurbishment. Another potential application is calculating the volume of material needed to repair or level floors according to renovation requirements.
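A first approximation of that leveling volume could reuse the gridded heights from the data processing step. Each grid cell contributes its height difference from a target floor level multiplied by the cell area. This sketch assumes that the grid covers the floor with no gaps, that each cell's Z is the floor surface, and that units are meters (the 0.1 grid size is taken from the processing step). The target level is a hypothetical input.

```python
import numpy as np


def leveling_volumes(z_cells, target_z: float, cell: float = 0.1) -> tuple:
    """Return (fill, cut) volumes for a grid of cell heights against a target level.

    fill: material needed to raise cells below target_z up to it.
    cut:  material to remove from cells above target_z.
    Units are cubic units of the input (m^3 if heights and cell size are in meters).
    """
    z = np.asarray(z_cells, dtype=float)
    area = cell * cell
    fill = float(np.clip(target_z - z, 0.0, None).sum() * area)
    cut = float(np.clip(z - target_z, 0.0, None).sum() * area)
    return fill, cut
```

For example, three cells at 0.00, 0.05, and 0.12 m leveled to 0.10 m need (0.10 + 0.05) × 0.01 = 0.0015 m³ of fill and 0.02 × 0.01 = 0.0002 m³ of cut.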