GitHub: https://github.com/Clarrainl/UN_LOG-Factory

| INTRODUCTION |

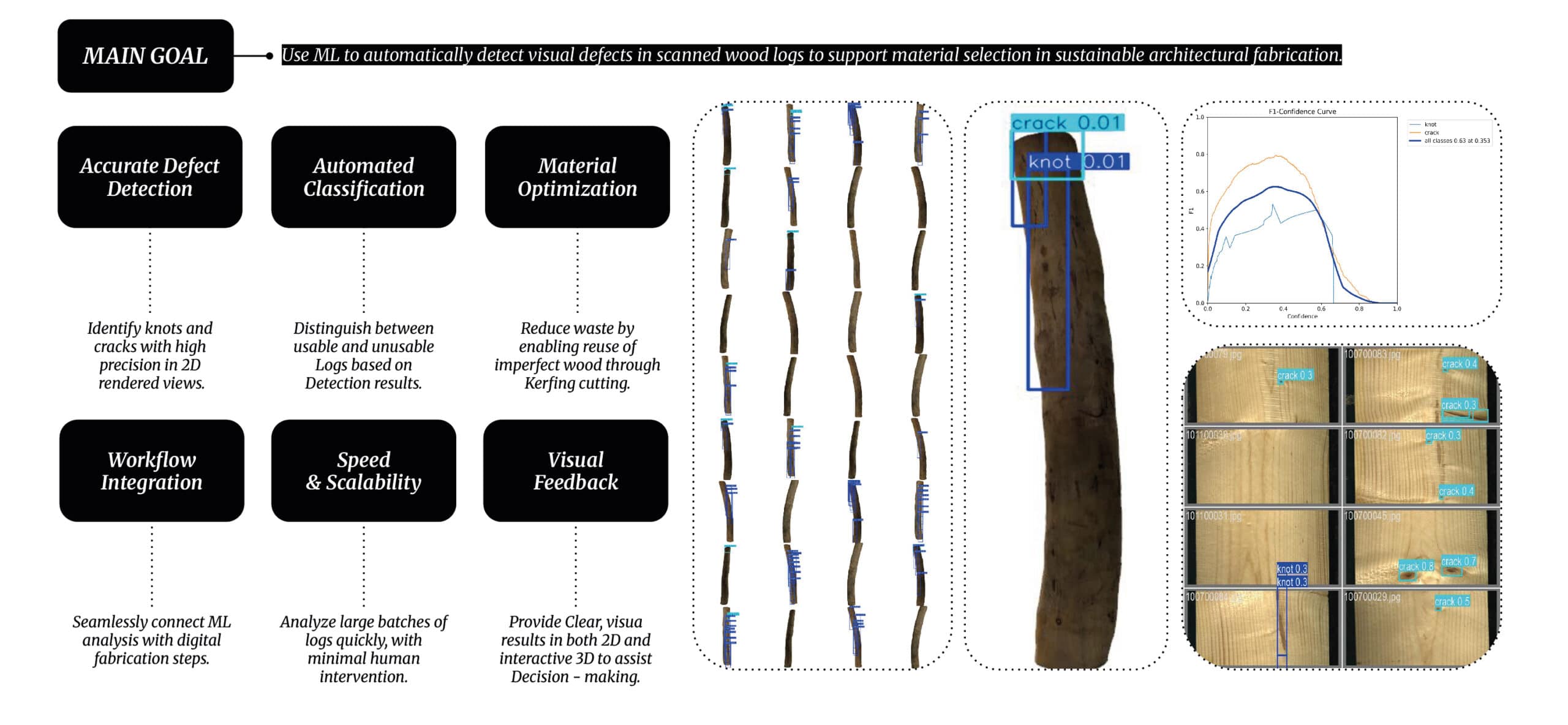

Detecting wood defects in 3D-scanned logs using Machine Learning

In the timber industry, a significant portion of wood gets discarded due to irregularities or defects that make it unusable under standard practices. However, many of these logs can still be used creatively or structurally if properly understood and classified. This project aims to leverage deep learning to detect surface defects—specifically knots and cracks—in 3D-scanned logs, enabling a smarter material reuse pipeline.

We developed a full software pipeline that converts a 3D model of a log into multiple 2D views, processes those views with a trained ML model (YOLOv8), and then generates visual and data outputs: annotated images, CSV files, and a 3D projection of the detections. The result is a tool that helps designers, architects, and fabricators understand wood characteristics and use them intentionally rather than discard them.

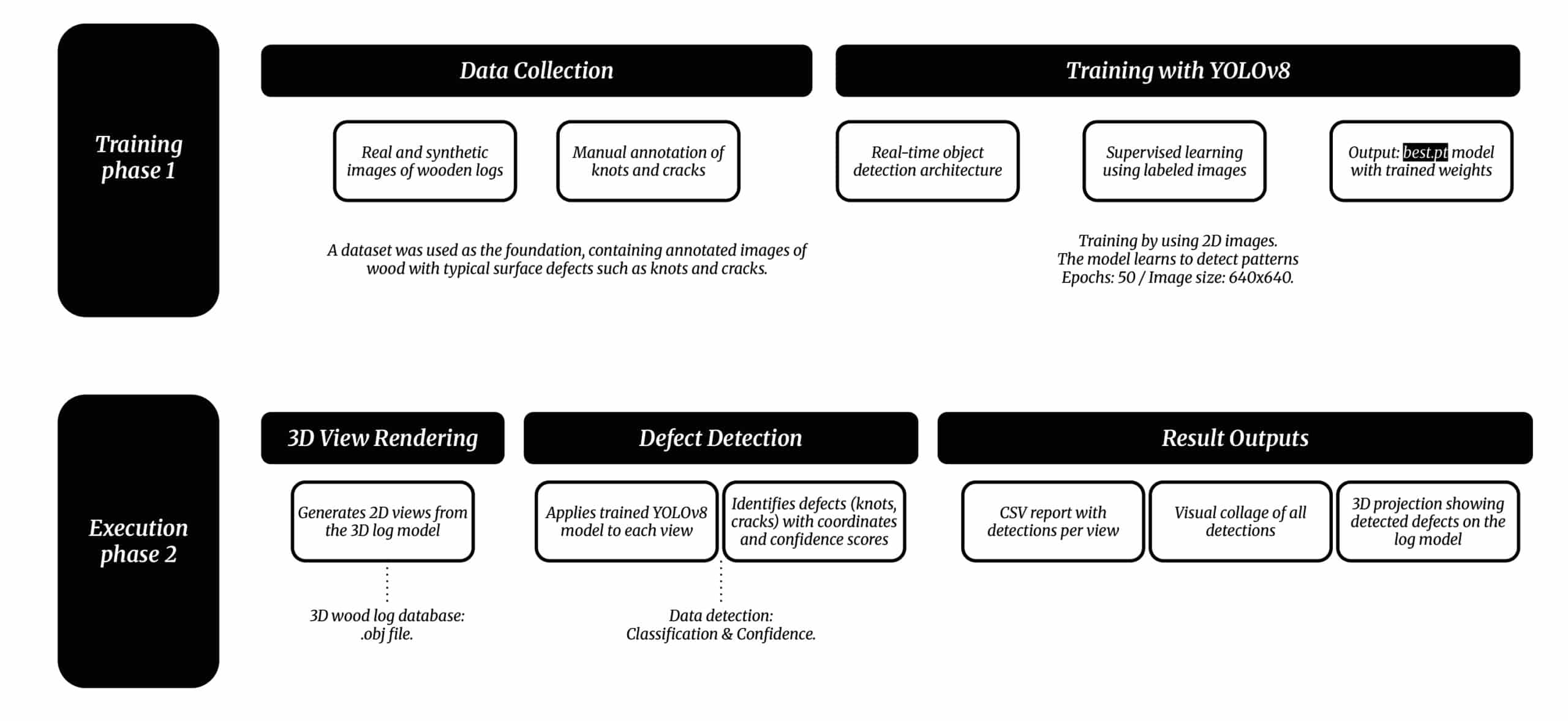

| PRODUCTION LINE WORKFLOW |

From scanned log to intelligent fabrication

Our system simulates a complete production line for handling logs with defects. It integrates digital scanning, machine learning analysis, and fabrication-ready output generation. The main stages are:

1. Scanning & Analysis: The log is 3D-scanned and processed through a trained ML model that detects knots and cracks from multiple angles.

2. Classification: Based on the defect density and location, logs can be categorized as usable, partially usable, or discardable.

3. Fabrication Integration: Logs that are selected for use are passed on to a fabrication system where digital design techniques (e.g., kerfing or reshaping) are applied according to their unique geometry.

This workflow allows for smarter material use, automated processing, and fewer rejected logs.
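
The classification stage could be sketched as a simple threshold rule. This is only an illustration: the threshold values, the `LogSummary` type, and the use of defects per square metre are assumptions, since the post does not state the actual criteria, and defect location is ignored here.

```python
from dataclasses import dataclass

# Hypothetical thresholds: the post does not state the actual cut-off values.
USABLE_MAX_DENSITY = 2.0     # defects per square metre of log surface
DISCARD_MIN_DENSITY = 10.0   # at or above this density the log is rejected


@dataclass
class LogSummary:
    log_id: str
    defect_count: int
    surface_area_m2: float


def classify_log(log: LogSummary) -> str:
    """Return 'usable', 'partially usable', or 'discardable' from defect density."""
    if log.surface_area_m2 <= 0:
        raise ValueError("surface area must be positive")
    density = log.defect_count / log.surface_area_m2
    if density <= USABLE_MAX_DENSITY:
        return "usable"
    if density >= DISCARD_MIN_DENSITY:
        return "discardable"
    return "partially usable"
```

A fuller rule would probably also weigh where the defects sit, for example whether they cluster in the region to be cut.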

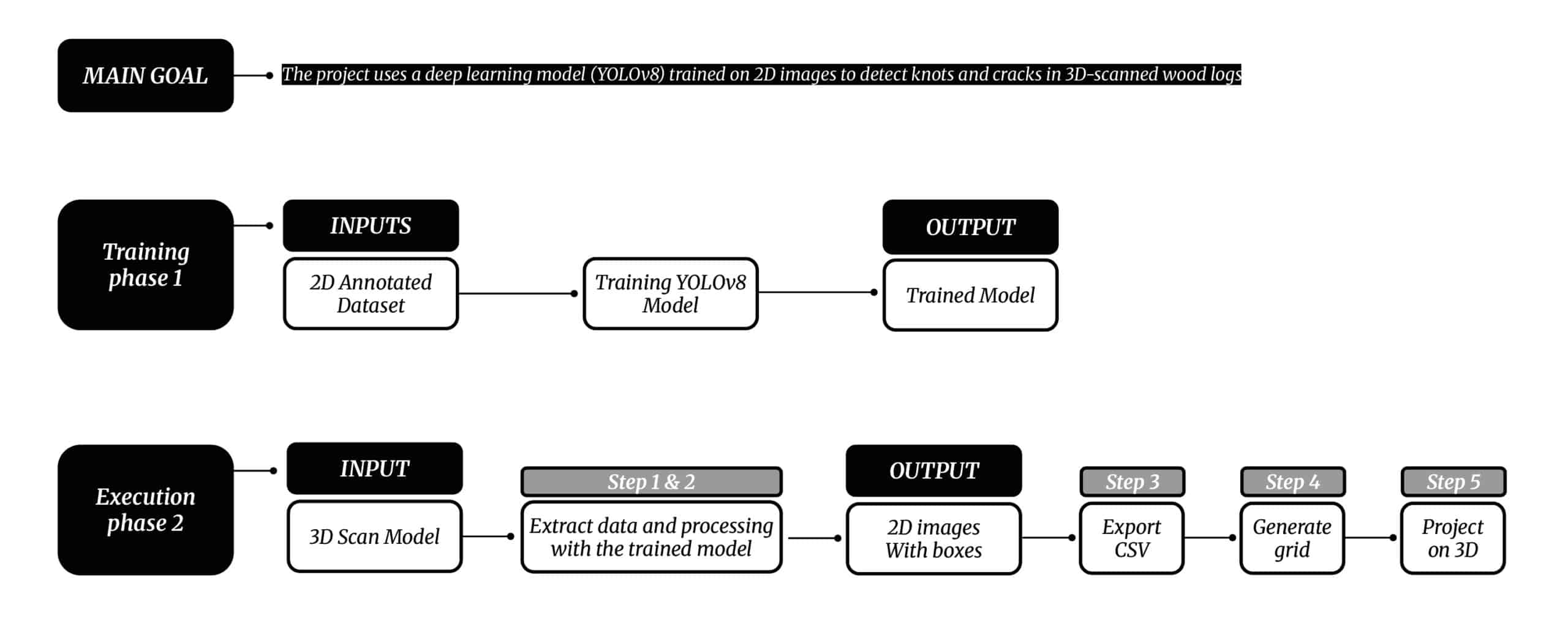

| CONCEPT OF OPERATIONS |

How the ML system operates behind the scenes

To detect defects on irregular surfaces like logs, we had to translate a 3D problem into a format that our 2D deep learning model could understand. We achieved this by rendering the 3D .obj files into 2D images taken from different angles, which simulate how a camera would scan the surface.
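
As a rough sketch of how the viewpoints could be generated, the snippet below places a camera on a circle around the log. It assumes Z is the vertical axis and uses 36 views, the count given in the dataset section; the function names and the radius are illustrative and not taken from the repository.

```python
import math


def view_angles(n_views: int = 36) -> list[float]:
    """Evenly spaced angles in degrees around the vertical axis: 0, 10, ..., 350."""
    step = 360.0 / n_views
    return [i * step for i in range(n_views)]


def camera_position(angle_deg: float, radius: float, height: float = 0.0):
    """Camera location on a circle around the log, assuming Z is the vertical axis."""
    a = math.radians(angle_deg)
    return (radius * math.cos(a), radius * math.sin(a), height)


angles = view_angles()
# The radius of 2.0 is an arbitrary illustrative distance from the log axis.
cameras = [camera_position(a, radius=2.0) for a in angles]
```

Each camera would then look toward the log axis, and one image is rendered per position.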

These views are then analyzed by the YOLOv8 model, trained specifically to detect knots and cracks in wood surfaces. The detections are saved and visualized, including:

- Images with bounding boxes

- CSV files listing detected defects with class and confidence

- A 3D projection of all detections back onto the original mesh

This combination gives users a complete understanding of the log’s condition, both in image form and as actionable data.
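
A minimal sketch of the detection and CSV steps with the Ultralytics Python API is shown below. The CSV column names, the confidence threshold, and the function names are assumptions made for illustration; the repository may organize this differently.

```python
import csv
from pathlib import Path

CLASS_NAMES = {0: "knot", 1: "crack"}  # class ids as listed in the dataset section


def detect_views(weights: str, image_paths: list[str], conf: float = 0.25) -> list[dict]:
    """Run a trained YOLOv8 model on rendered views and return one row per detection."""
    from ultralytics import YOLO  # imported lazily so the CSV helper works without it

    model = YOLO(weights)
    rows = []
    for path in image_paths:
        result = model.predict(source=path, imgsz=640, conf=conf, verbose=False)[0]
        for box in result.boxes:
            x1, y1, x2, y2 = box.xyxyn[0].tolist()  # normalized corner coordinates
            rows.append({
                "image": Path(path).name,
                "class": CLASS_NAMES[int(box.cls[0])],
                "confidence": round(float(box.conf[0]), 4),
                "x1": x1, "y1": y1, "x2": x2, "y2": y2,
            })
    return rows


def write_detections(rows: list[dict], csv_path: str) -> None:
    """Save detection rows to a CSV file with a fixed (assumed) column order."""
    fields = ["image", "class", "confidence", "x1", "y1", "x2", "y2"]
    with open(csv_path, "w", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=fields)
        writer.writeheader()
        writer.writerows(rows)
```

Storing normalized coordinates keeps the rows independent of image resolution, which is convenient when the boxes are later mapped back onto the mesh.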

| DATA SET |

A custom dataset of logs and annotated defects

Our dataset was built using a collection of 15 real-world 3D log scans in .obj format. For each log:

- We rendered 36 views (every 10° around the vertical axis).

- Each view was manually annotated to mark knots and cracks.

- The annotated images were used to train the YOLOv8 model.

Details:

- Training split: 75% of images used for training, 25% for validation

- Image resolution: 640×640 px

- Defect types: knot (class 0) and crack (class 1)

This dataset was foundational for both the model’s learning and the visual results. Because it was built from real scans, it helps adapt the system to real wood textures and imperfections.
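
To make the numbers concrete: if every rendered view was kept, 15 logs × 36 views gives 540 images, so a 75/25 split yields 405 training and 135 validation images. The sketch below shows one way to produce such a split reproducibly. The file naming scheme and the per-image shuffle are assumptions, because the post does not say whether the split was made per image or per log.

```python
import random


def split_dataset(image_names: list[str], train_fraction: float = 0.75, seed: int = 0):
    """Shuffle image names reproducibly and split them into train and validation lists."""
    names = sorted(image_names)
    random.Random(seed).shuffle(names)
    n_train = round(len(names) * train_fraction)
    return names[:n_train], names[n_train:]


# Hypothetical naming scheme: 15 logs x 36 views = 540 images.
images = [
    f"log{log:02d}_view{angle:03d}.jpg"
    for log in range(15)
    for angle in range(0, 360, 10)
]
train, val = split_dataset(images)
```

Note that a per-image split places neighbouring views of the same log in both sets. Splitting by log instead would give a stricter estimate of how the model generalizes to unseen logs.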

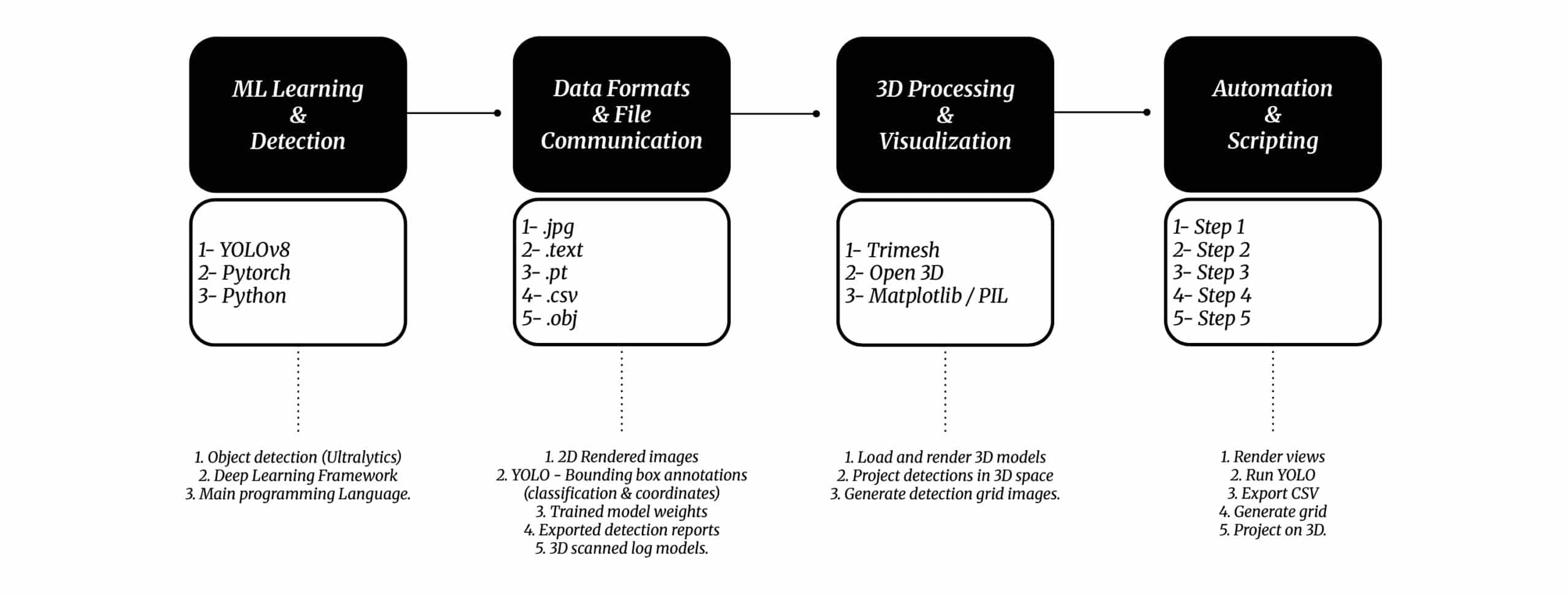

| SOFTWARE STACK |

Tools, languages, and file types

To build and run this pipeline, we used a combination of powerful open-source tools and libraries:

- Language: Python 3.10

- Deep Learning: PyTorch + Ultralytics YOLOv8

- 3D Processing: Trimesh for geometry manipulation, Vedo for visualization

- Computer Vision: OpenCV for image I/O and rendering

- Data Output: CSV for detections, PNG for visual outputs

File Types Used:

- .png: Annotated view grids and 3D projections

- .obj: 3D model input

- .jpg: Texture maps and view renders

- .pt: YOLOv8 trained model

- .csv: Detection results

| ML EXPECTATIONS |

What we aimed to achieve with YOLOv8

Our goal was to create an ML system that could automatically, accurately, and robustly detect defects on complex organic surfaces. Key goals included:

- High accuracy in detecting small wood defects

- Fast inference time to allow batch analysis

- Lightweight model suitable for integration into design workflows

- Visual and structured outputs (images + CSV)

We chose YOLOv8, a real-time object detection model, for its balance of speed and precision, which suits processing logs in a production context.

| TEST & SIMULATION |

Testing on all angles of a log

We tested the trained model by rendering each log into 36 views (0° to 350° in 10° steps) and running detection on each image. For each angle, the model produced:

- Bounding boxes over detected defects

- Confidence scores per detection

- Classes (knot or crack)

Results were stored and summarized in a visual grid and exported as a 3D projection, allowing us to evaluate consistency and coverage across the log’s surface.
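
One way to check consistency and coverage is to group the detections by view angle. The sketch below assumes each detection row carries an `angle`, a `class`, and a `confidence` key; these field names are illustrative and not taken from the repository.

```python
from collections import defaultdict


def summarize_by_angle(rows: list[dict]) -> dict[int, dict]:
    """Group detections by view angle and report counts per class and mean confidence."""
    grouped = defaultdict(list)
    for row in rows:
        grouped[row["angle"]].append(row)
    summary = {}
    for angle, dets in sorted(grouped.items()):
        summary[angle] = {
            "knot": sum(d["class"] == "knot" for d in dets),
            "crack": sum(d["class"] == "crack" for d in dets),
            "mean_confidence": sum(d["confidence"] for d in dets) / len(dets),
        }
    return summary


def empty_views(summary: dict[int, dict], step: int = 10) -> list[int]:
    """Angles with no detections at all, useful for spotting coverage gaps."""
    return [a for a in range(0, 360, step) if a not in summary]
```

A defect that appears in several neighbouring views but is missing from one of them is a useful signal when reviewing the model's consistency.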

| INTERPRETATION & COMPARISON |

Mapping 2D results back into 3D

The most innovative step was projecting the 2D detection data back onto the 3D geometry of the log. This was achieved by using:

- Normalized bounding box coordinates

- Reverse projection through view angles

- Mesh point proximity matching

As a result, we could visualize the defects directly on the log’s mesh, giving a spatially accurate understanding of where issues are located. This has great potential for robotic selection or digital fabrication.
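
The three ingredients above can be illustrated with a simplified sketch. It assumes an orthographic camera at a given angle around a vertical Z axis, an image that spans `half_width` on each side of the axis and `z_min` to `z_max` vertically, and a crude visibility test. All of these, along with the function names, are assumptions for illustration; the actual projection in the repository may differ.

```python
import math


def project_vertex(vertex, angle_deg, half_width, z_min, z_max):
    """Orthographic projection of a vertex into normalized image coordinates.

    Returns (u, v, depth); depth > 0 means the vertex lies on the camera side of the axis.
    """
    x, y, z = vertex
    a = math.radians(angle_deg)
    depth = x * math.cos(a) + y * math.sin(a)    # offset towards the camera
    side = -x * math.sin(a) + y * math.cos(a)    # horizontal offset in the image
    u = (side + half_width) / (2.0 * half_width)
    v = (z_max - z) / (z_max - z_min)            # image v grows downward
    return u, v, depth


def match_detection(vertices, bbox, angle_deg, half_width, z_min, z_max):
    """Index of the camera-facing vertex whose projection is closest to the bbox centre."""
    x1, y1, x2, y2 = bbox                        # normalized corner coordinates
    cu, cv = (x1 + x2) / 2.0, (y1 + y2) / 2.0
    best_index, best_dist = None, float("inf")
    for i, vertex in enumerate(vertices):
        u, v, depth = project_vertex(vertex, angle_deg, half_width, z_min, z_max)
        if depth <= 0:
            continue  # crude visibility test: skip the far side of the log
        dist = math.hypot(u - cu, v - cv)
        if dist < best_dist:
            best_index, best_dist = i, dist
    return best_index
```

On a real mesh, a proper occlusion test (for example ray casting) would replace the `depth > 0` check, since bumps on the surface can hide vertices that still face the camera.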

Beyond projecting 2D detections back onto the 3D mesh, we also explored an alternative comparison method using Grasshopper (a visual scripting tool for Rhino). This approach generates an unrolled surface of the log geometry, a flat “unwrap” of the roughly cylindrical shape, which gave us a cleaner, more continuous texture that could be analyzed in a single image. The advantage of this method is that detection and visualization occur directly in 3D space, without the multi-view rendering step, which allows a more immediate integration between analysis and digital fabrication workflows.
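
The unrolling itself was done in Grasshopper. As a stand-in illustration of the idea only, the Python sketch below maps mesh points to cylindrical coordinates around an assumed vertical axis, so that angle becomes the image column and height becomes the row. The function names and the image size are hypothetical.

```python
import math


def unroll_vertex(vertex, axis_xy=(0.0, 0.0)):
    """Map a 3D point to (angle_deg, height, radius) around a vertical axis."""
    x, y, z = vertex
    dx, dy = x - axis_xy[0], y - axis_xy[1]
    theta = math.degrees(math.atan2(dy, dx)) % 360.0
    radius = math.hypot(dx, dy)
    return theta, z, radius


def unrolled_pixel(vertex, z_min, z_max, width=3600, height=640, axis_xy=(0.0, 0.0)):
    """Pixel position of a vertex in a flat unwrap image (columns = angle, rows = height)."""
    theta, z, _ = unroll_vertex(vertex, axis_xy)
    col = min(int(theta / 360.0 * width), width - 1)
    row = min(int((z_max - z) / (z_max - z_min) * height), height - 1)
    return col, row
```

Because a real log is not a perfect cylinder, the radius returned by `unroll_vertex` could be kept as a third channel to preserve the surface relief that the flat image would otherwise lose.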

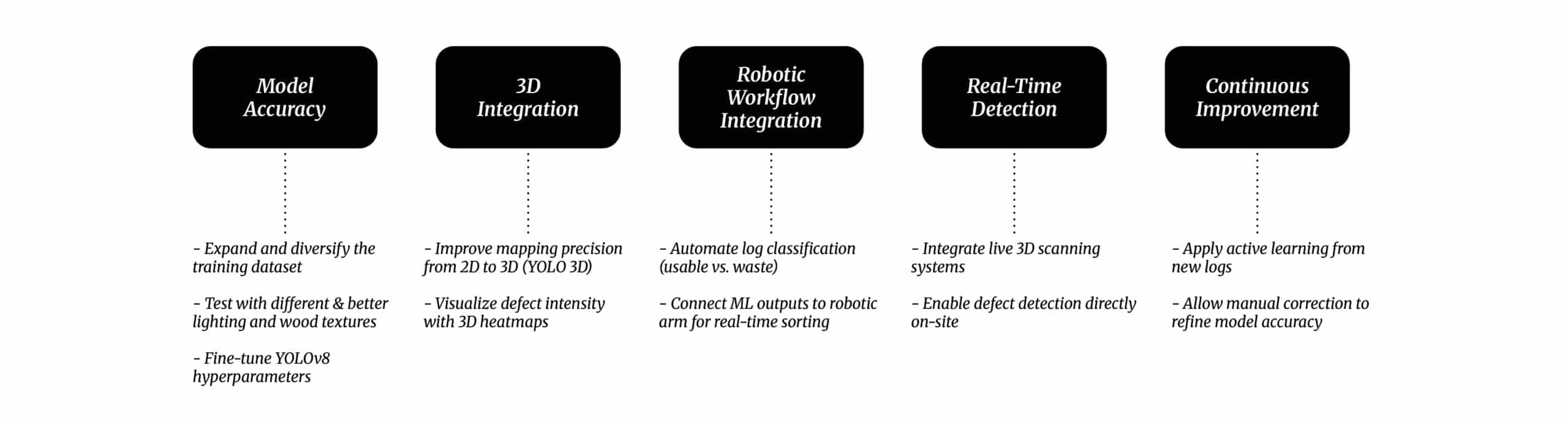

| FURTHER STEPS & IMPROVEMENTS |

Where we want to take this next

There’s significant room for further development in this system. Immediate improvements include:

- More training data: Improve accuracy with a broader dataset

- Live scanning integration: From camera to detection in real time

- Robot integration: Use detection to guide cutting, reshaping, or labeling

- Web interface: Upload a model, get instant analysis and report

- Model refinement: Explore deeper or custom architectures for specific tasks

The system is modular and open enough to expand in multiple directions, depending on the final use case (design, automation, industry, etc.).