Project Concept

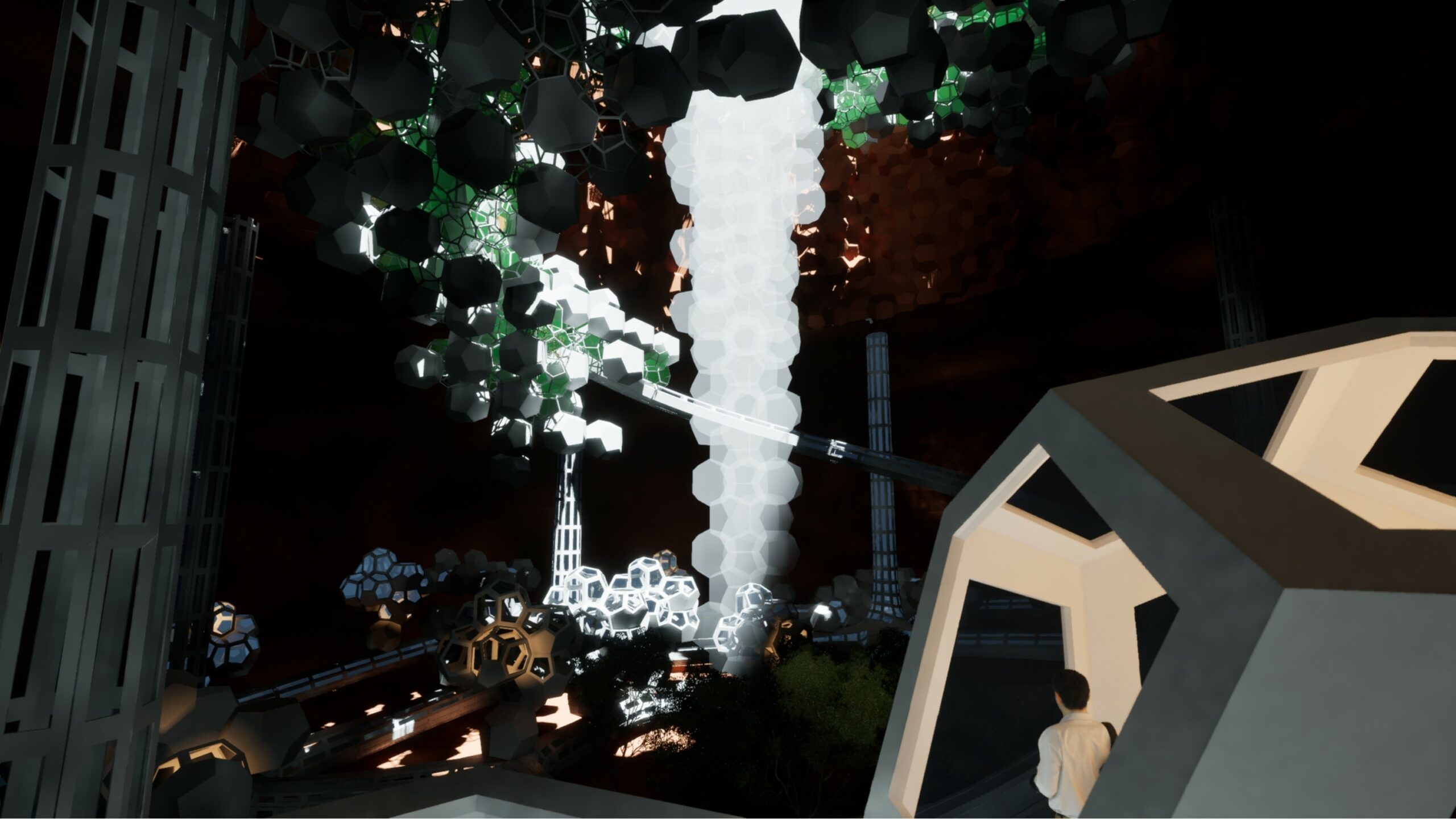

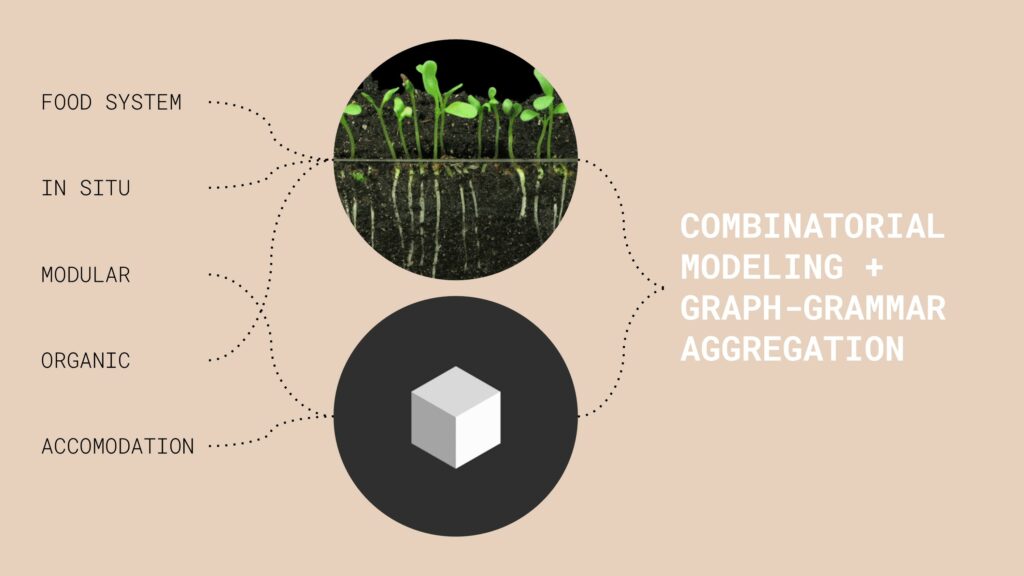

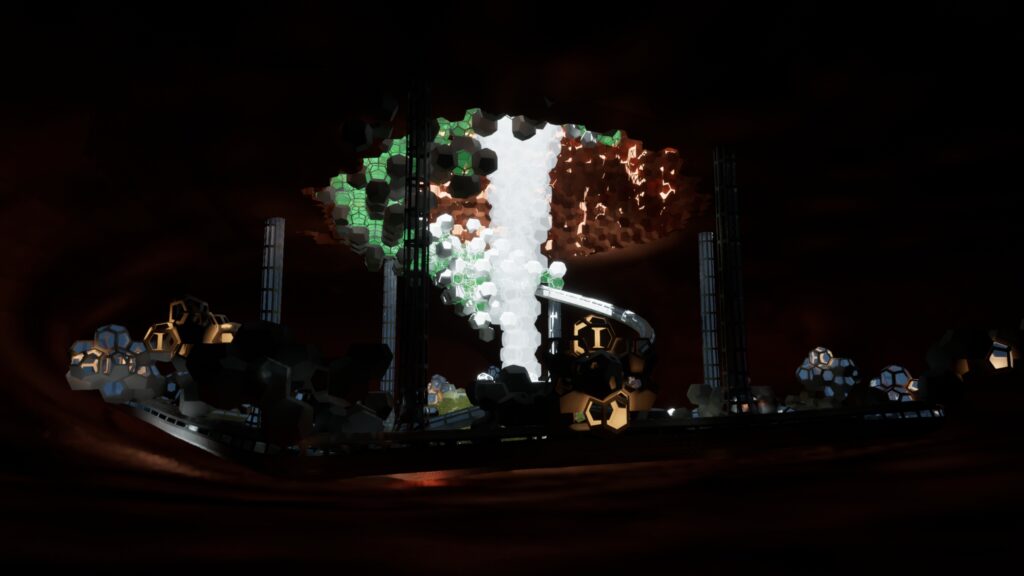

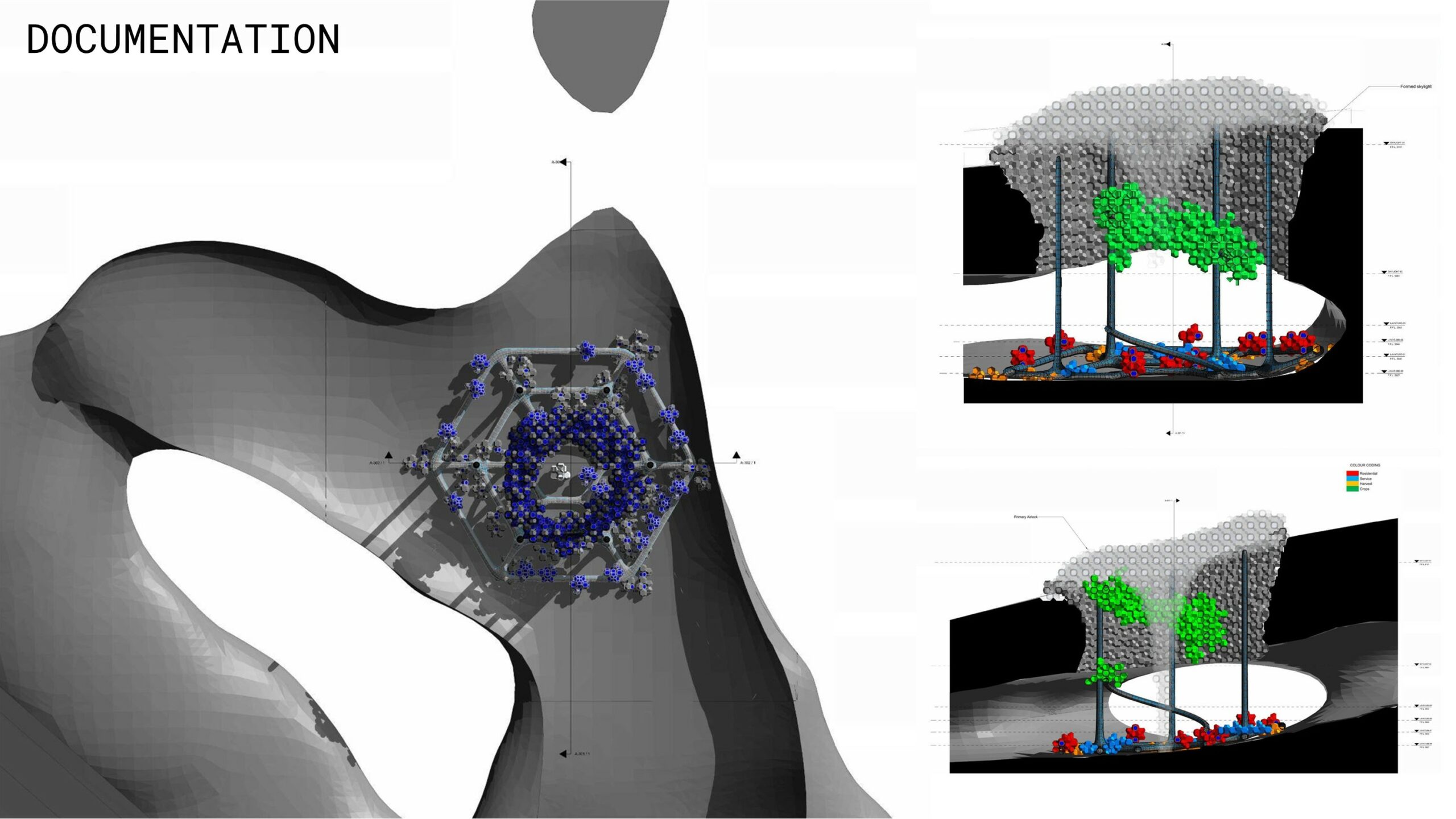

Aggreculture is a Martian colony architectural project focused on food production. The design concept is inspired by the way plant roots grow to find necessary nutrients within soil aggregate. Our project is unique in that it occupies a Martian lava tube. By building in a lava tube we use existing natural resources to provide radiation protection, dust storm protection, and a more regulated temperature. For these reasons, our computational method is focused on combinatorial modeling using aggregation algorithms.

Computational Method

Parametric Approach

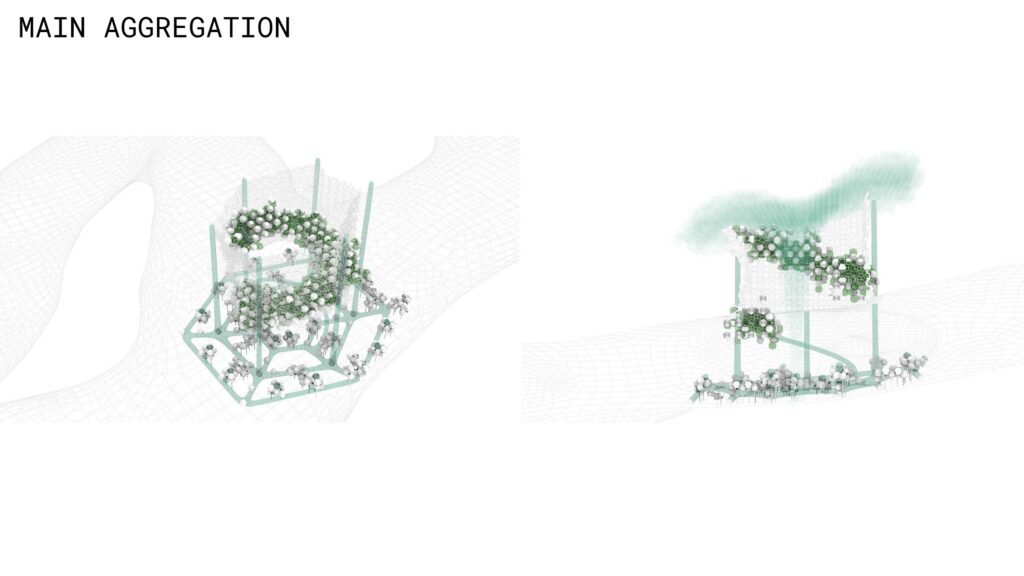

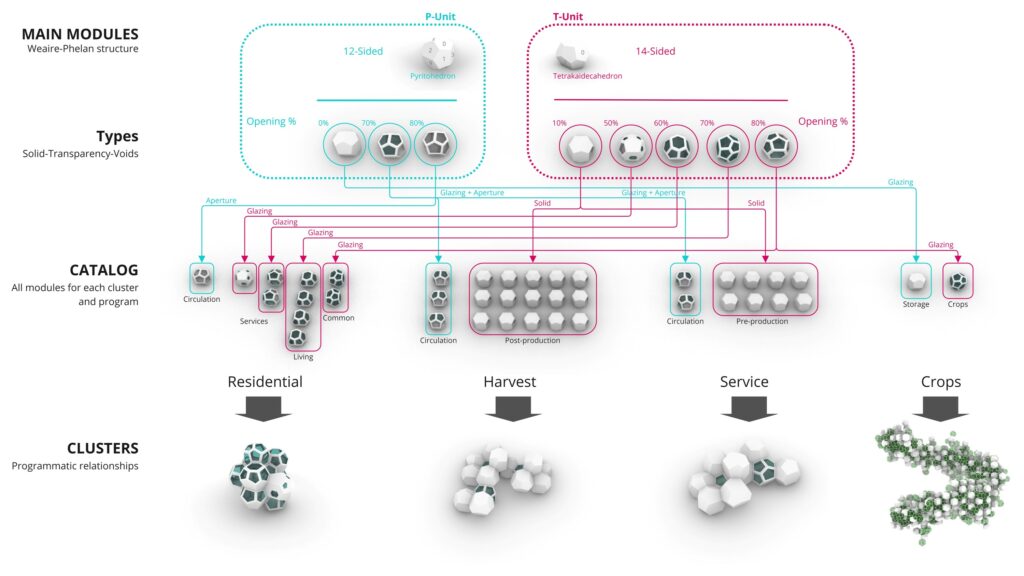

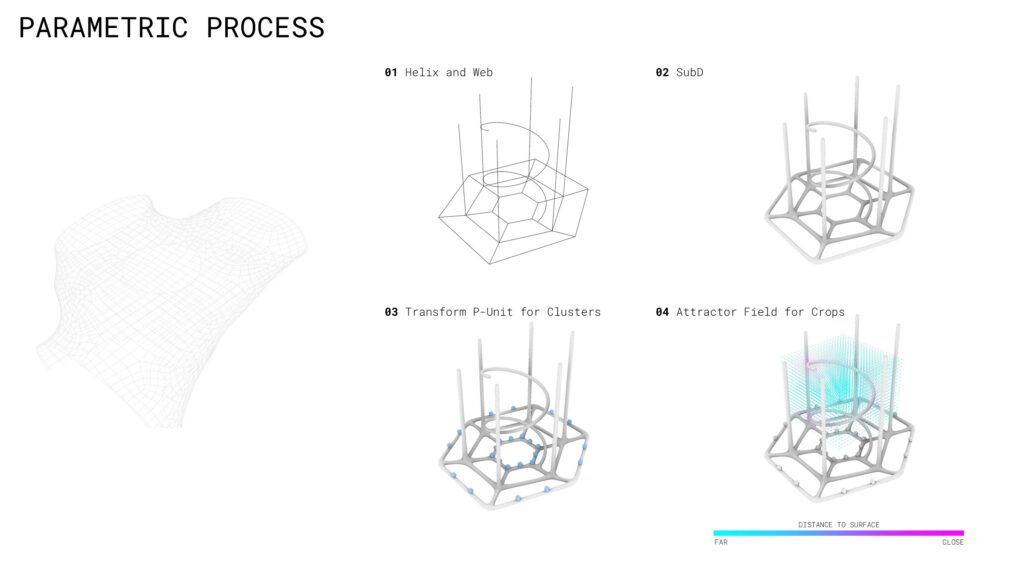

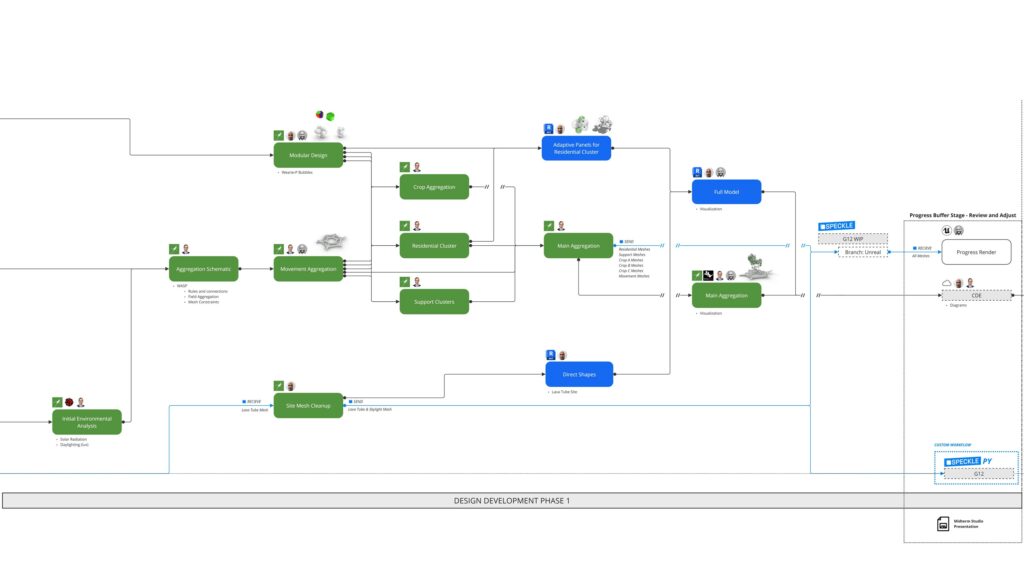

First, the lava tube was created using volumetric subtractive typologies. The Dendro plug-in was used to create a realistic Martian lava tube and skylight. Next, the aggregation was completed using the Wasp plug-in within Rhino and Grasshopper. Graph-grammar rules helped define the three main clusters: residential, service, and harvest. Furthermore, field attractors were employed to stochastically aggregate the crop modules around the central skylight and along a helical movement corridor. The movement corridor was generated as a SubD mesh based on an array of concentric curves and a mathematically defined helix.
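The mathematically defined helix behind the corridor can be illustrated outside Grasshopper. The following is a minimal sketch, assuming a circular helix rising along the Z axis; the function name, radius, pitch, and turn count are hypothetical and are not taken from the project's definition.

```python
import math

def helix_points(radius, pitch, turns, samples_per_turn=24):
    """Sample points on a circular helix rising along the Z axis.

    x = r*cos(t), y = r*sin(t), z = pitch * t / (2*pi)
    """
    count = int(turns * samples_per_turn)
    points = []
    for i in range(count + 1):
        t = 2.0 * math.pi * i / samples_per_turn
        points.append((
            radius * math.cos(t),
            radius * math.sin(t),
            pitch * t / (2.0 * math.pi),
        ))
    return points

# Hypothetical dimensions, for illustration only.
centerline = helix_points(radius=12.0, pitch=4.0, turns=3)
```

In a Grasshopper workflow, points like these would typically be interpolated into a curve before the corridor surface is built around it.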

Importantly, the aggregation logic quickly increased the mesh count within our model. Therefore, we leveraged block definitions through the Elefront plug-in to increase computational speed as well as provide smooth interoperability between Rhino, Grasshopper, and Revit.
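The benefit of block definitions comes from storing each module's mesh once and referencing it from many lightweight instances. The following sketch shows that idea in plain Python; the class names are hypothetical, the instance transform is reduced to a translation, and nothing here uses the Elefront or Rhino APIs.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class BlockDefinition:
    name: str
    vertices: tuple  # tuple of (x, y, z) tuples, stored once

@dataclass(frozen=True)
class BlockInstance:
    definition: BlockDefinition
    translation: tuple  # simplified; a real instance carries a full transform

def stored_vertex_count(instances):
    """Vertices held in memory when each definition is stored only once."""
    unique = {inst.definition.name: inst.definition for inst in instances}
    return sum(len(d.vertices) for d in unique.values())

def exploded_vertex_count(instances):
    """Vertices held if every instance carried its own copy of the mesh."""
    return sum(len(inst.definition.vertices) for inst in instances)

# Hypothetical module: one 500-vertex mesh placed 200 times.
module = BlockDefinition("crop_module", tuple((float(i), 0.0, 0.0) for i in range(500)))
instances = [BlockInstance(module, (float(i), 0.0, 0.0)) for i in range(200)]
```

With these illustrative numbers, the instanced model stores 500 vertices where the exploded model would store 100,000.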

Collaborative Workflow

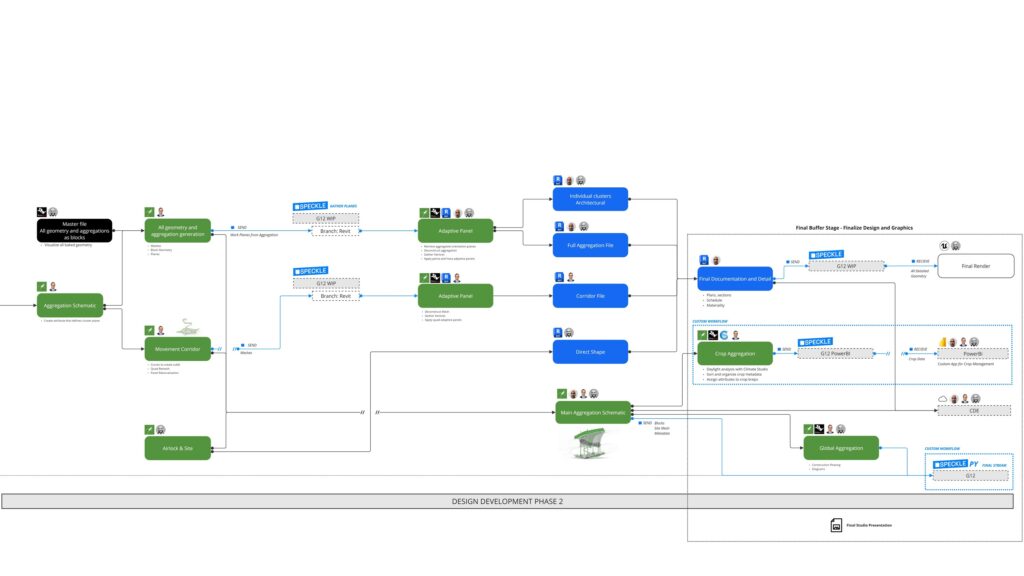

Process Diagram

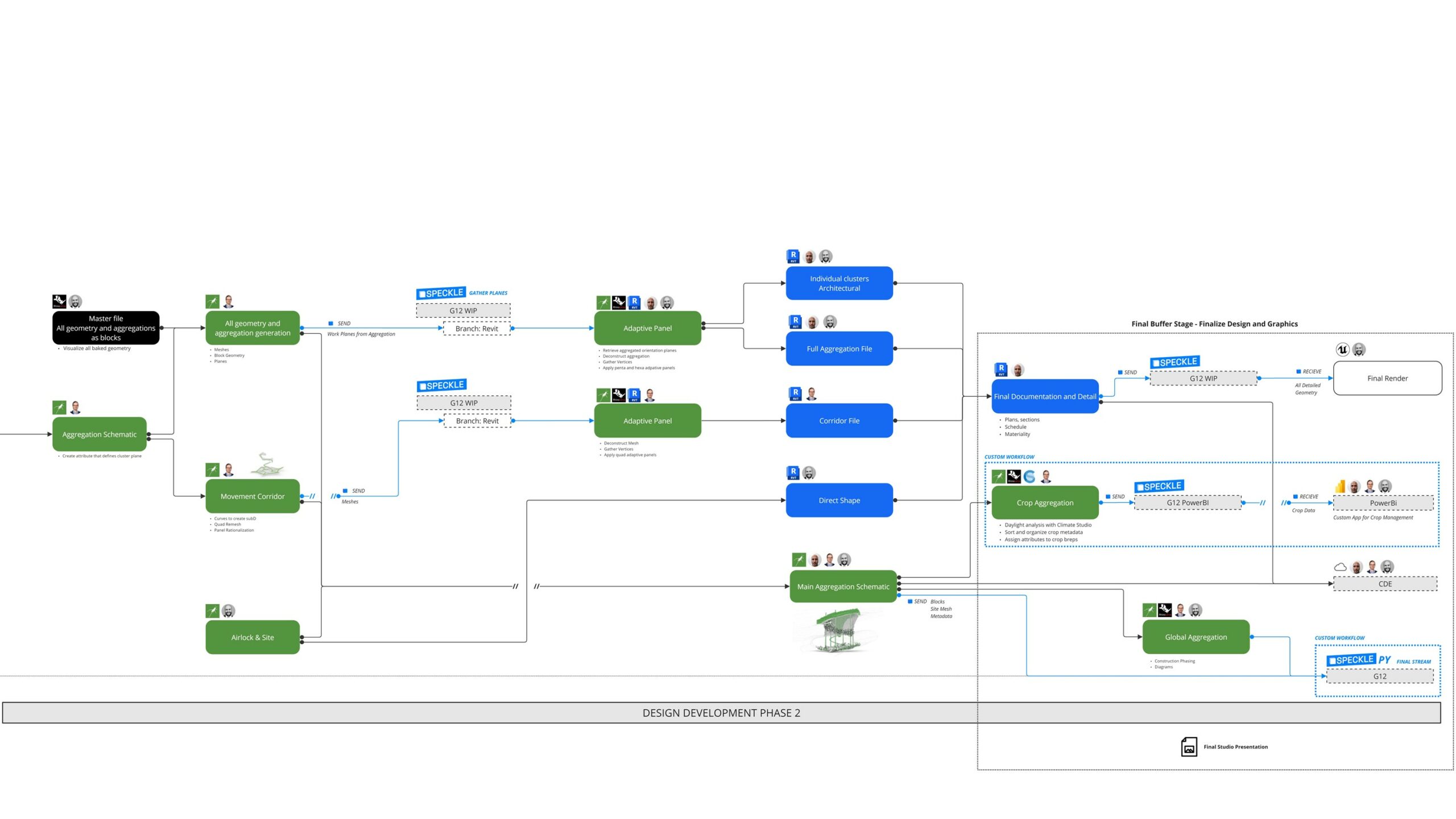

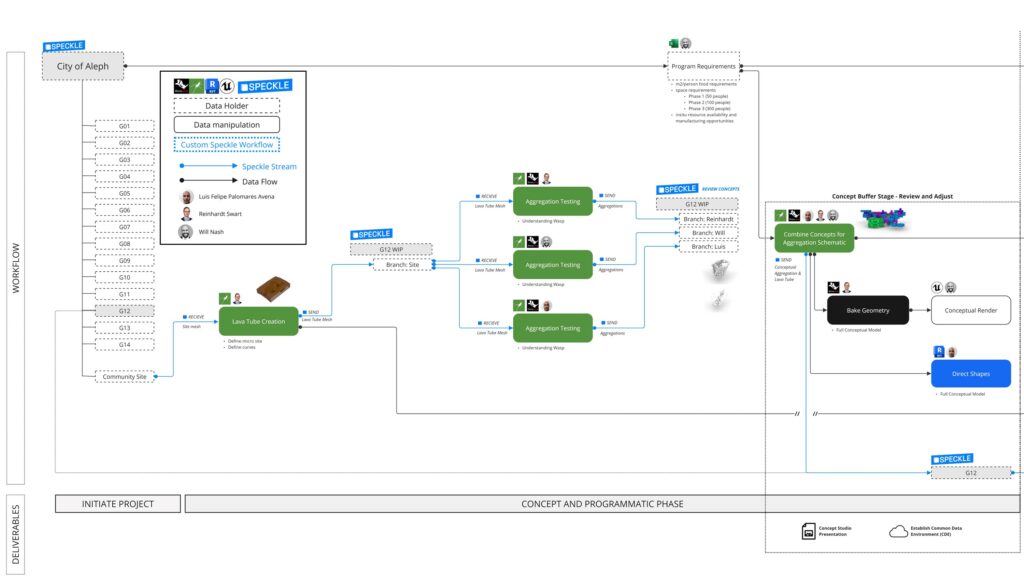

Establishing an effective collaborative workflow was critical to the project’s success. Throughout the project, Speckle was used to share geometry and data between group members. Speckle served primarily as a tool for visual review during buffer stages, an interoperability tool for use with Rhino.Inside Revit, and a data repository for use in Power BI. Our group found that a Common Data Environment (CDE) was effective for sharing raw Grasshopper, Rhino, and Revit files. The CDE provided an accessible, centralized location to save, open, and edit code and scripts. By combining a CDE and Speckle, we leveraged the strengths of both workflows.

Below is a detailed diagram illustrating our group’s collaborative workflow. In the conceptual stage, Speckle was used primarily to share conceptual design iterations. Our group members explored aggregation logic using Wasp in Grasshopper; we shared geometry using Speckle to understand what was working and how we could implement graph-grammar aggregation in the final design. During the first phase of design development, Speckle was used as a data and geometry repository with direct connections to Unreal, our rendering engine. By connecting to Unreal via Speckle, we were able to continue designing and quickly update Unreal as needed for progress renders. Importantly, in the final detailed design phase of the project, Speckle was used to receive and send block orientation planes, which allowed us to gather, sort, and deconstruct our block definitions using Rhino.Inside for documentation. For our final Unreal renders, the Speckle connector was key for rapid and reliable transfer of detailed geometry from Revit to Unreal.

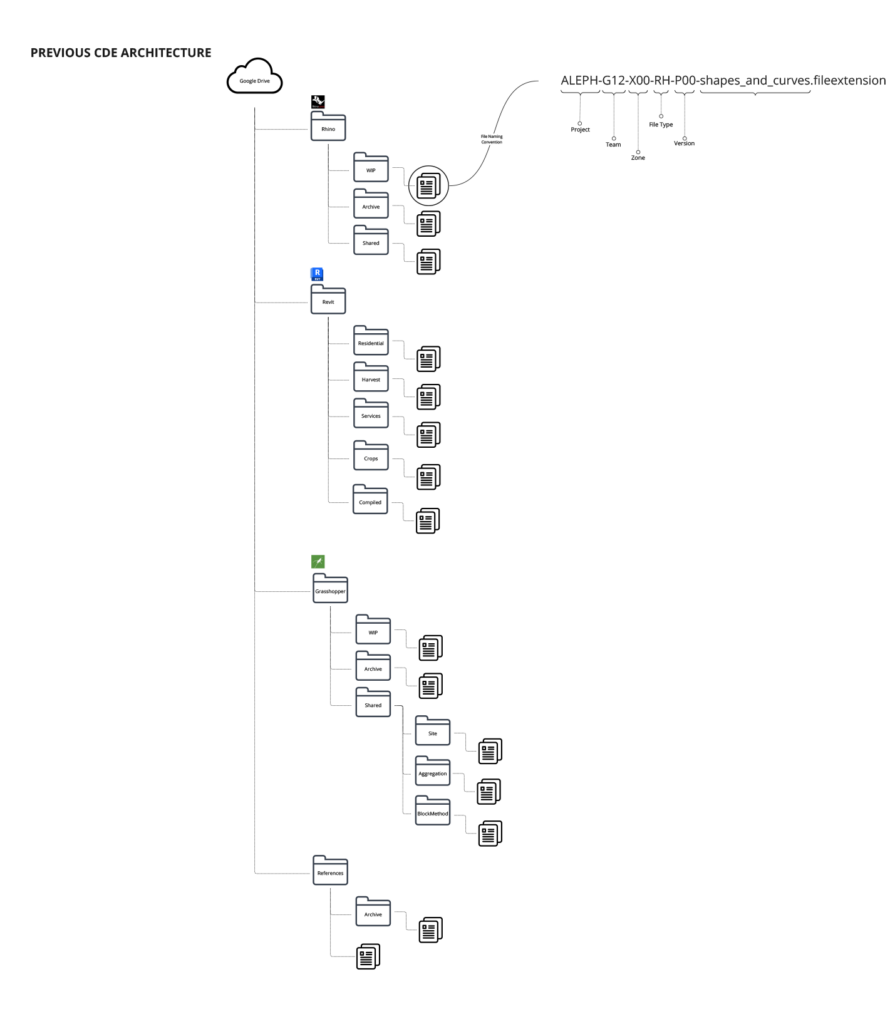

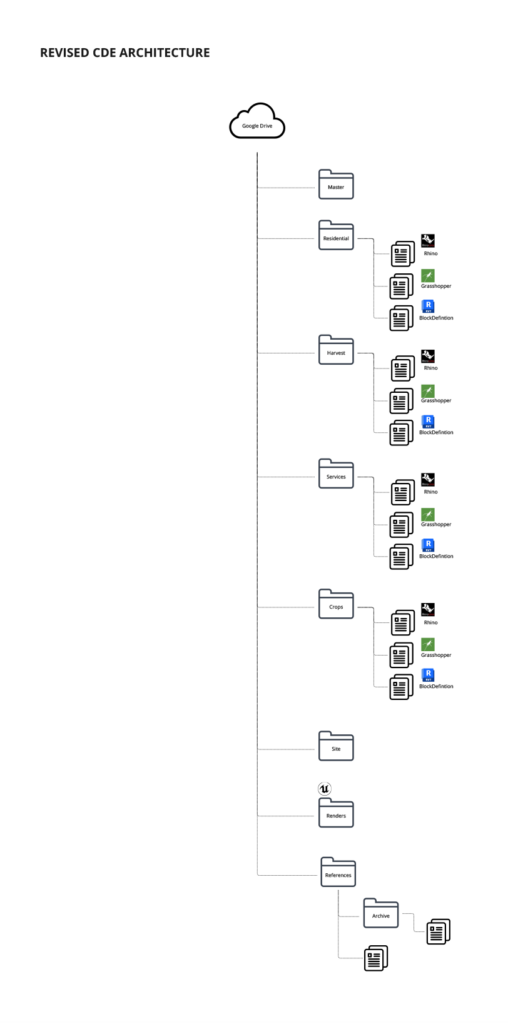

At first, our CDE was organized by software. As the project developed and complexities grew, we found it better to organize our CDE folder structure around the groups of clusters and program first, then software. In either case, we established a standard file naming protocol, seen below, for version control and folder maintenance.

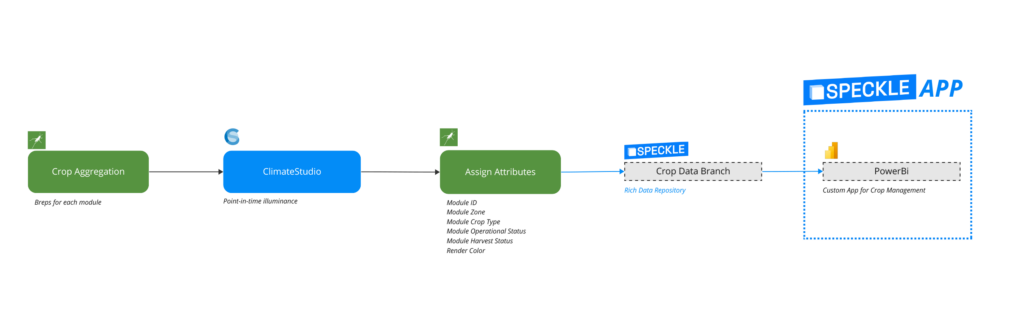

Custom Data Visualization Application

We imagined what people in a Martian food colony might need once crop production is functional. For this reason, we created a custom data visualization tool that Martians could use to track, maintain, and manage their food resources. To develop this, we used the Speckle connection to Power BI, which allows for flexible and customizable dashboards.

First, the main aggregation was generated in Grasshopper. ClimateStudio, an environmental analysis plug-in, was used to study daylight illuminance on the crop aggregation. The crop aggregation is located in the lava tube skylight, which allows natural illumination to reach the crops, facilitating healthy growth with less demand for electricity. We researched the amount of light each type of crop needs, measured as photosynthetic photon flux density (PPFD), and translated it into typical lux values based on daylight’s spectrum. Here, we assumed an Earth atmosphere and used a weather file with a latitude and longitude similar to those of our Martian colony; this does not directly translate sun angles, day length, or atmosphere, but it serves as a proof of concept.
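The PPFD-to-lux translation is a single multiplication once a spectrum-dependent factor is chosen. The sketch below assumes a commonly quoted approximation of about 54 lux per µmol·m⁻²·s⁻¹ for sunlight; this factor is an assumption for illustration and is not a value stated by the project, and the function names are hypothetical.

```python
# Approximate conversion factor for a daylight spectrum.
# This is an assumption; it varies with the light source's spectrum.
LUX_PER_PPFD_SUNLIGHT = 54.0

def ppfd_to_lux(ppfd, lux_per_ppfd=LUX_PER_PPFD_SUNLIGHT):
    """Convert PPFD (µmol·m⁻²·s⁻¹) to illuminance (lux)."""
    return ppfd * lux_per_ppfd

def lux_to_ppfd(lux, lux_per_ppfd=LUX_PER_PPFD_SUNLIGHT):
    """Convert illuminance (lux) back to PPFD (µmol·m⁻²·s⁻¹)."""
    return lux / lux_per_ppfd

# Example: a target of 100 µmol·m⁻²·s⁻¹ expressed as a lux threshold.
threshold_lux = ppfd_to_lux(100.0)
```

A threshold expressed in lux can then be compared directly against the illuminance values reported by a daylight simulation.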

Once the daylight analysis was complete, we used that data to sort and organize the crop modules. For instance, the tomato modules were located where there was the most daylight and the mushroom modules where there was the least. Grasshopper was used to manage this data set as well as to add additional module information, including:

- Daylight Access

- Crop Type

- Crop Module ID

- Crop Module Zone

- Operational Status

- Harvest Status

These attributes were assigned to each respective crop module as a Speckle extended object in Grasshopper. Once the modules were streamed to Speckle, Power BI was used to visualize the 3D geometry and display the above attributes in a compelling and interactive way. In this way, Martian crop managers could quickly understand the location, health, and other important information about the crops.
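The sorting and attribute assignment described above can be sketched as follows, assuming each module has a single illuminance value and that crops are listed from most to least light-demanding. The function name, field names, and default status values are hypothetical; plain dictionaries stand in for Speckle objects.

```python
def assign_crops(illuminance_by_module, crops_by_light_demand):
    """Rank modules by daylight and split them into equal bands, one per crop."""
    ranked = sorted(illuminance_by_module.items(), key=lambda kv: kv[1], reverse=True)
    # Ceiling division so every module falls into a valid band.
    band = -(-len(ranked) // len(crops_by_light_demand))
    records = []
    for rank, (module_id, lux) in enumerate(ranked):
        records.append({
            "crop_module_id": module_id,
            "daylight_access_lux": lux,
            "crop_type": crops_by_light_demand[rank // band],
            "crop_module_zone": None,        # placeholder, set elsewhere
            "operational_status": "active",  # hypothetical default
            "harvest_status": "growing",     # hypothetical default
        })
    return records

# Illustrative values only, not simulation results.
modules = {"M1": 300.0, "M2": 9000.0, "M3": 50.0, "M4": 4000.0}
records = assign_crops(modules, ["tomato", "mushroom"])
```

Here the two brightest modules receive tomatoes and the two darkest receive mushrooms, matching the ordering logic described in the text.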

There are promising opportunities for further development. For one, the entire Grasshopper data management script could be automated using something like a Speckle Bot, which would “listen” for an aggregation to be streamed to Speckle. The bot would then perform the daylight analysis, automatically sort and assign attributes to the respective crops, and stream the data-rich geometry back to Speckle for use and auto-update in Power BI.
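The proposed bot is essentially an event handler that chains three steps. The sketch below shows only that control flow; the event shape and all function names are hypothetical, and the analysis, attribute, and send steps are stand-ins rather than calls to Speckle, ClimateStudio, or Power BI.

```python
def make_bot(analyse_daylight, assign_attributes, send):
    """Build a handler that runs the analyse -> attribute -> send chain."""
    def on_event(event):
        # Ignore anything that is not a new aggregation (hypothetical event shape).
        if event.get("kind") != "aggregation":
            return None
        modules = event["modules"]
        illuminance = analyse_daylight(modules)
        enriched = assign_attributes(modules, illuminance)
        return send(enriched)
    return on_event

# Stand-in steps so the sketch runs on its own.
sent = []

def fake_daylight(modules):
    return {m: 1000.0 * (i + 1) for i, m in enumerate(modules)}

def fake_attributes(modules, illuminance):
    return [{"crop_module_id": m, "daylight_access_lux": illuminance[m]} for m in modules]

def fake_send(records):
    sent.append(records)
    return len(records)

bot = make_bot(fake_daylight, fake_attributes, fake_send)
result = bot({"kind": "aggregation", "modules": ["M1", "M2"]})
```

Passing the steps in as arguments keeps the handler testable without any live connection, which would matter if the real steps were slow or remote.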

Secondly, external third-party data sets could be tied into the Power BI data query and dashboard. For instance, oxygen, H2O, and occupancy sensors in each module could send real-time feedback to the Crop Management app for a real-time understanding of module health. If crops needed to be rotated or modules repurposed for another crop, more data could be built into the app to allow for easy management of complex geometry and layered data sets.
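Tying sensor data to the modules amounts to a join on the module ID. The following sketch keeps the latest reading per module and attaches it to the module record; the field names and sample readings are hypothetical and do not represent real sensor data.

```python
def merge_latest_readings(module_records, sensor_readings):
    """Left-join the most recent sensor reading onto each module record."""
    latest = {}
    for reading in sensor_readings:
        key = reading["crop_module_id"]
        if key not in latest or reading["timestamp"] > latest[key]["timestamp"]:
            latest[key] = reading
    merged = []
    for record in module_records:
        reading = latest.get(record["crop_module_id"], {})
        merged.append({
            **record,
            "oxygen_pct": reading.get("oxygen_pct"),  # None if no reading yet
            "h2o_pct": reading.get("h2o_pct"),
            "occupied": reading.get("occupied"),
        })
    return merged

# Illustrative records only.
modules = [
    {"crop_module_id": "M1", "crop_type": "tomato"},
    {"crop_module_id": "M2", "crop_type": "mushroom"},
]
readings = [
    {"crop_module_id": "M1", "timestamp": 1, "oxygen_pct": 20.5, "h2o_pct": 60.0, "occupied": False},
    {"crop_module_id": "M1", "timestamp": 2, "oxygen_pct": 20.9, "h2o_pct": 58.0, "occupied": True},
]
dashboard_rows = merge_latest_readings(modules, readings)
```

Modules without a reading keep their geometry attributes and show empty sensor fields, so a dashboard can flag them as unmonitored.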

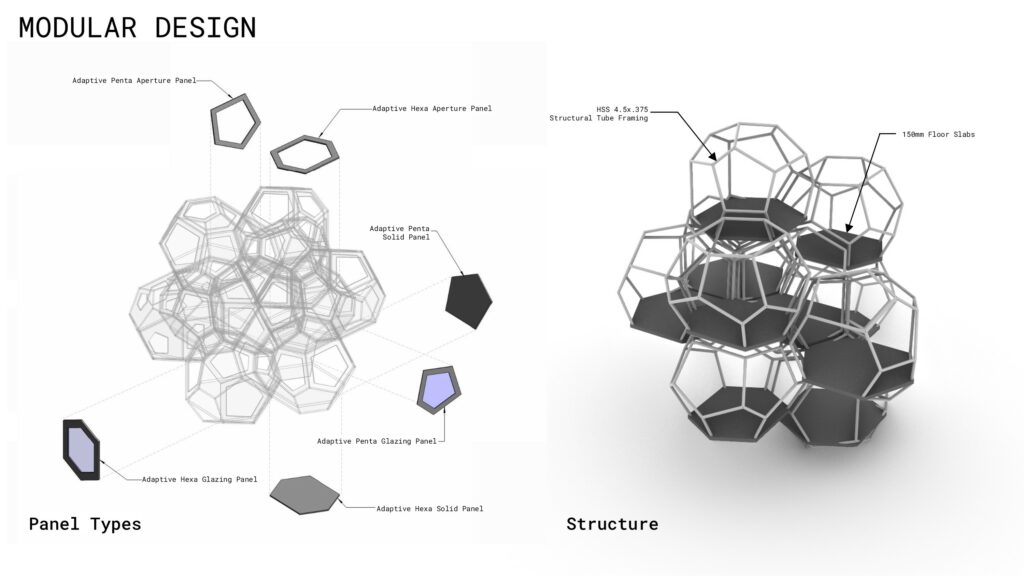

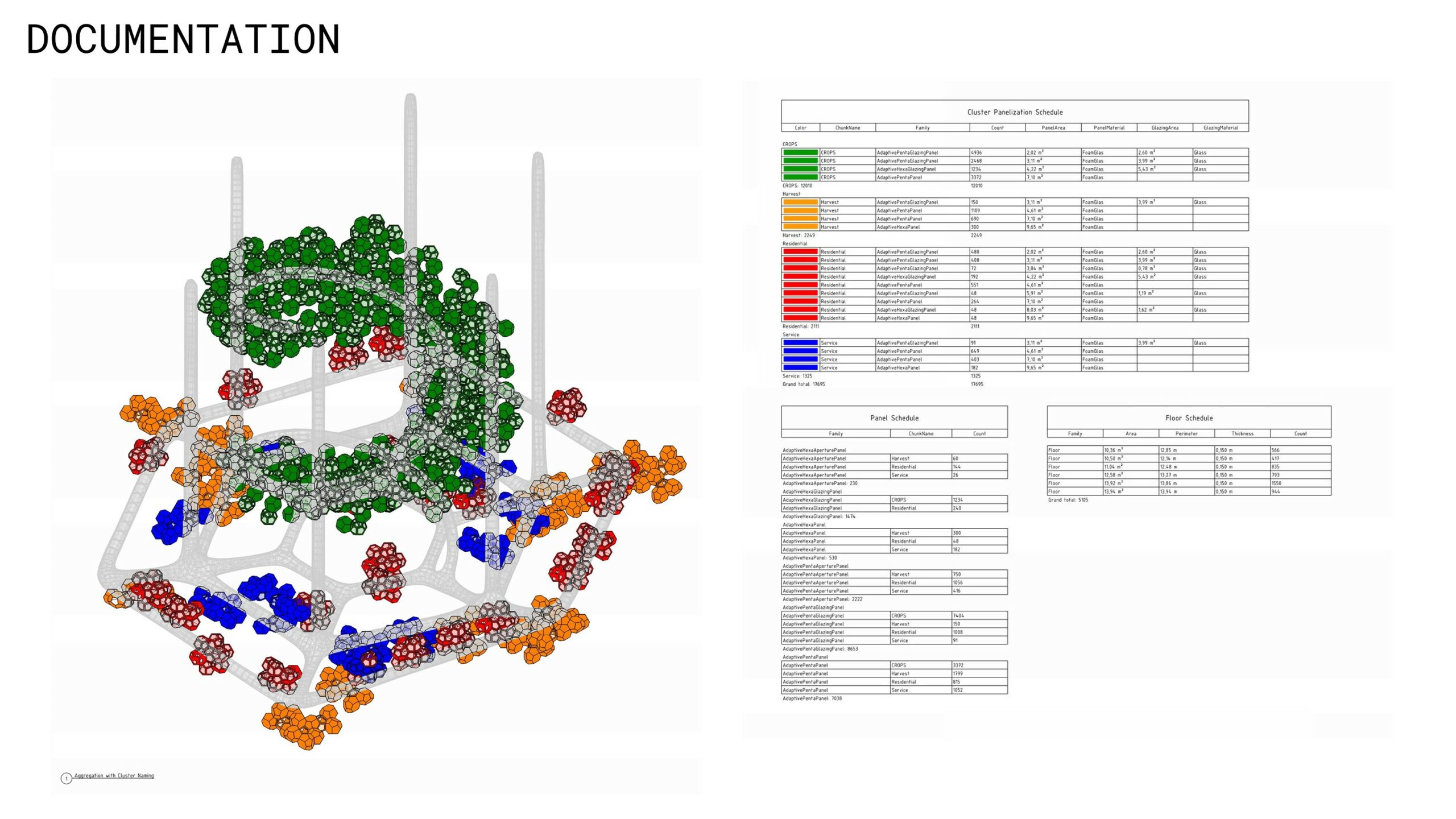

Documentation

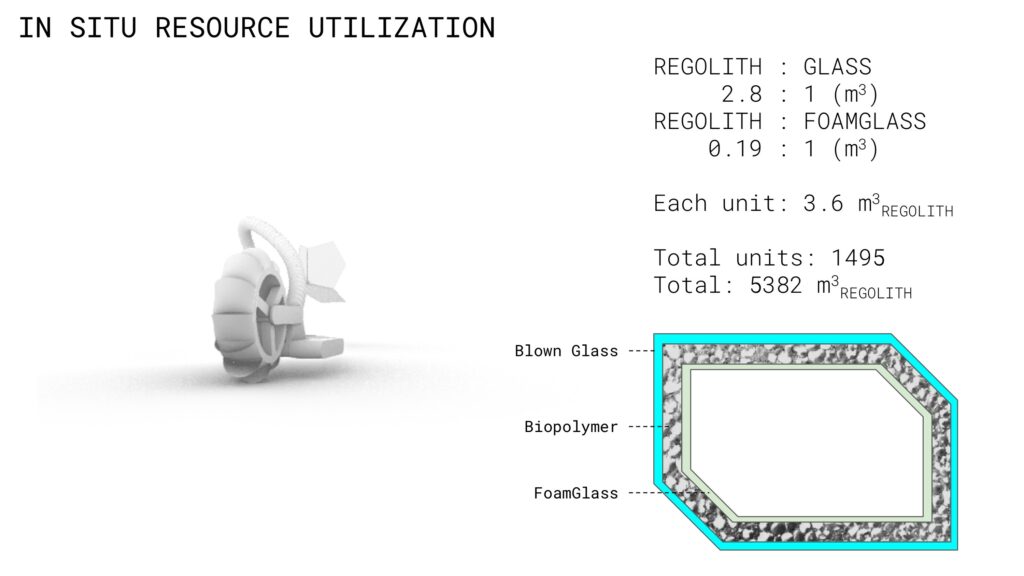

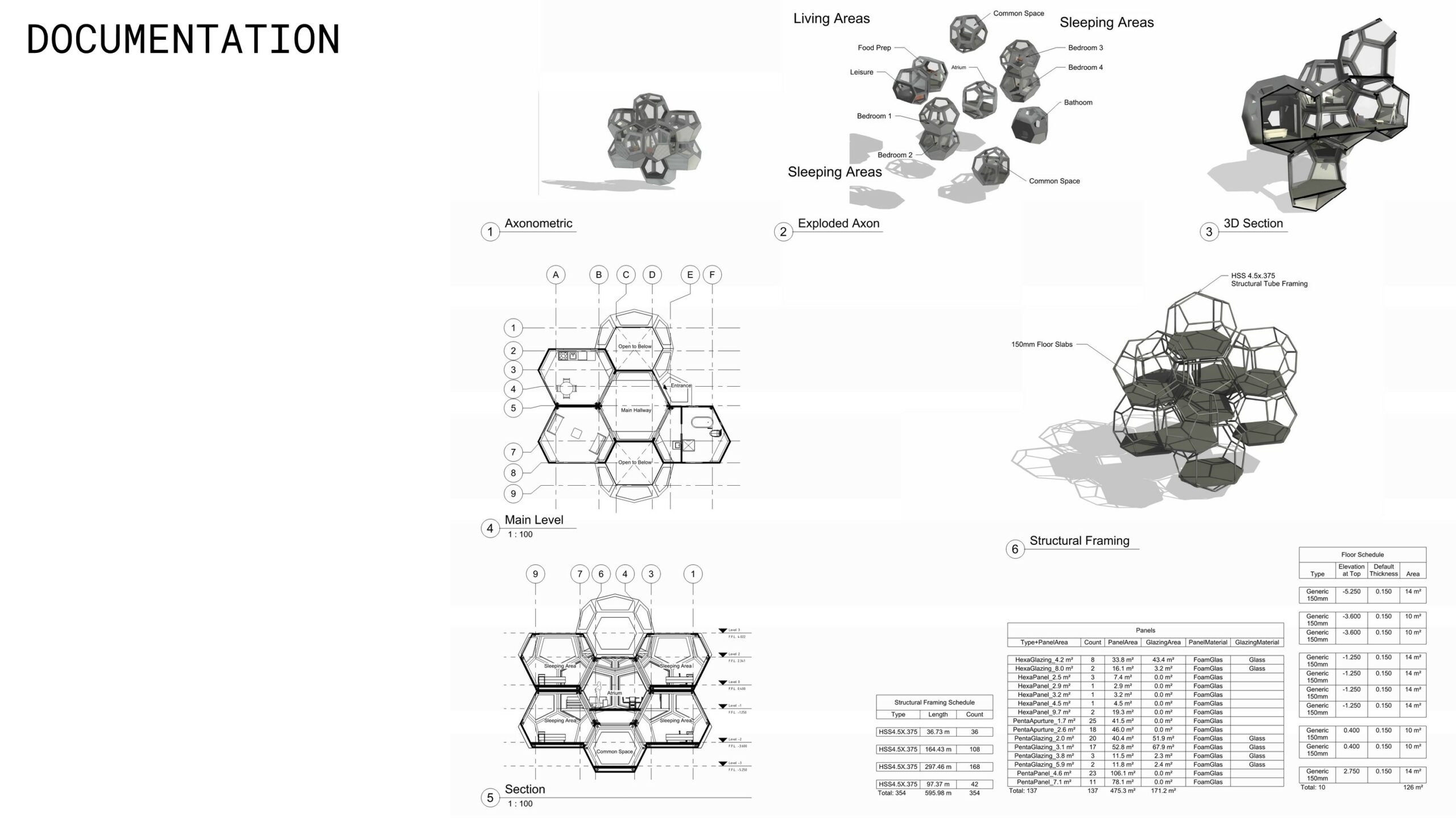

Our group used Rhino.Inside Revit exclusively to document the complex aggregation geometries. Speckle was used to send and receive the aggregated planes so we could recover the geometry’s vertices and centers. Each cluster and crop module is based on six different adaptive families. Our base module shapes allowed us to parametrically design adaptive “penta” and “hexa” panels, both with and without glazing, to construct the geometry. Parameters include cluster name, shape, materials, solid panel area, and glazing area. The panels are envisioned as structural blown glass, made from in situ resources without the need for water.

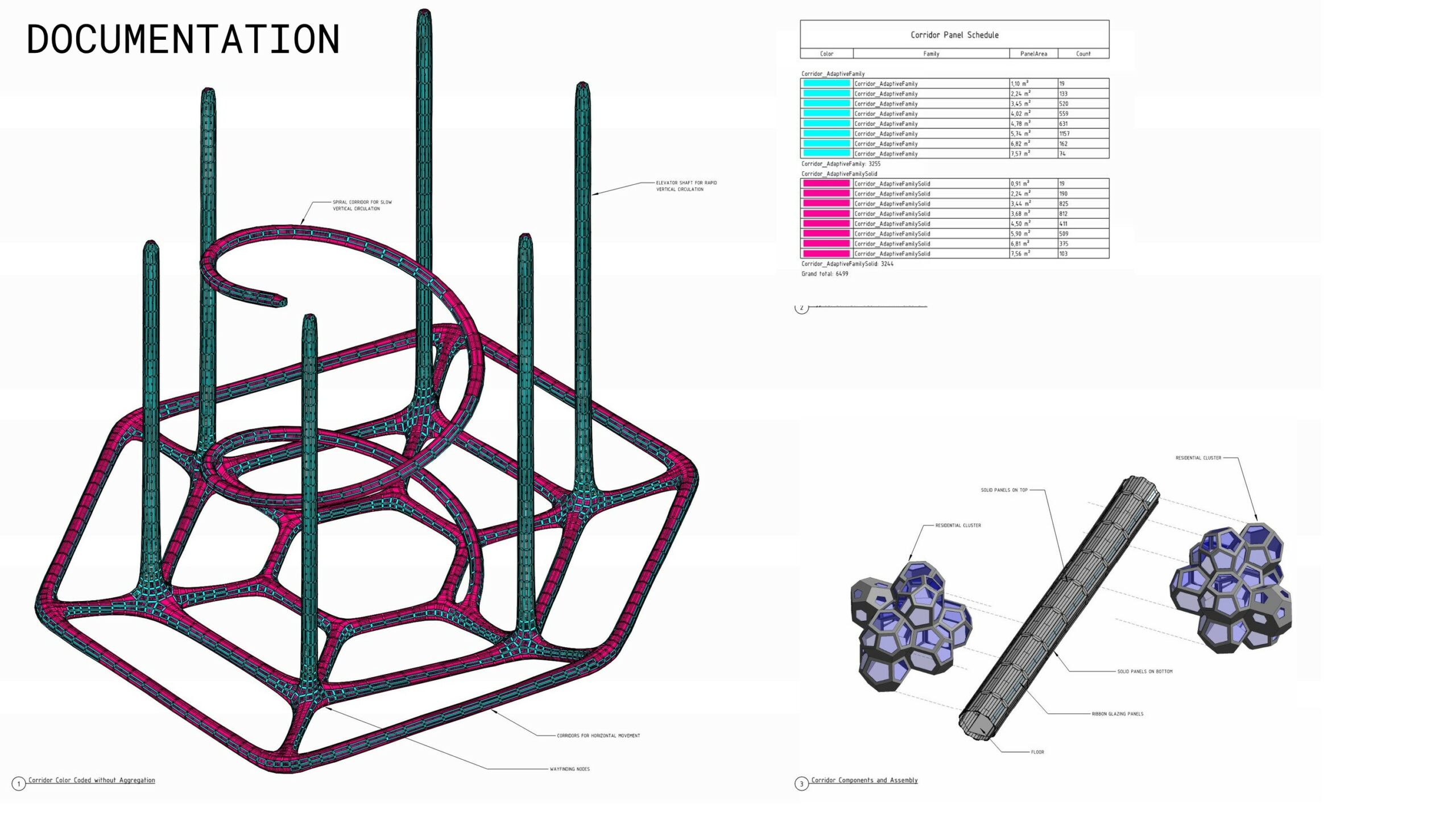

The corridor uses two quad adaptive families, one with glazing and one solid. For both the clusters and the corridor, vertices are extracted from a Speckle stream through a custom Grasshopper script. At first, we tried building each cluster as a Revit file and linking it into a master file. However, we discovered that it is very difficult to orient Revit links, and given our 3D aggregation and complex orientations, this method proved ineffective. Secondly, we attempted to use Model Groups within Revit but found it laborious to extract the shared parameters needed to create meaningful schedules and filtered views. Finally, it is possible to create and use nested families; however, this would have resulted in significant rework of our process. Given these constraints, we documented the project using adaptive families based directly on vertices gathered from our deconstructed block method.
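Recovering panel vertices from a block orientation plane is a change of coordinates from the block's local frame to world space. The sketch below assumes a plane given as an origin plus unit, mutually perpendicular X and Y axes; the function names and sample values are hypothetical and the code does not use the Rhino or Speckle APIs.

```python
def cross(a, b):
    """Cross product of two 3D vectors."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )

def local_to_world(plane, local_points):
    """Map points from a block's local frame to world coordinates.

    plane is (origin, x_axis, y_axis); axes are assumed unit and perpendicular.
    """
    origin, x_axis, y_axis = plane
    z_axis = cross(x_axis, y_axis)
    world = []
    for u, v, w in local_points:
        world.append(tuple(
            origin[i] + u * x_axis[i] + v * y_axis[i] + w * z_axis[i]
            for i in range(3)
        ))
    return world

# Illustrative plane: moved 10 units along X and rotated 90 degrees about Z.
plane = ((10.0, 0.0, 0.0), (0.0, 1.0, 0.0), (-1.0, 0.0, 0.0))
vertices = local_to_world(plane, [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 2.0)])
```

The resulting world-space points are the kind of input an adaptive family's placement points would need, one point per panel vertex.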

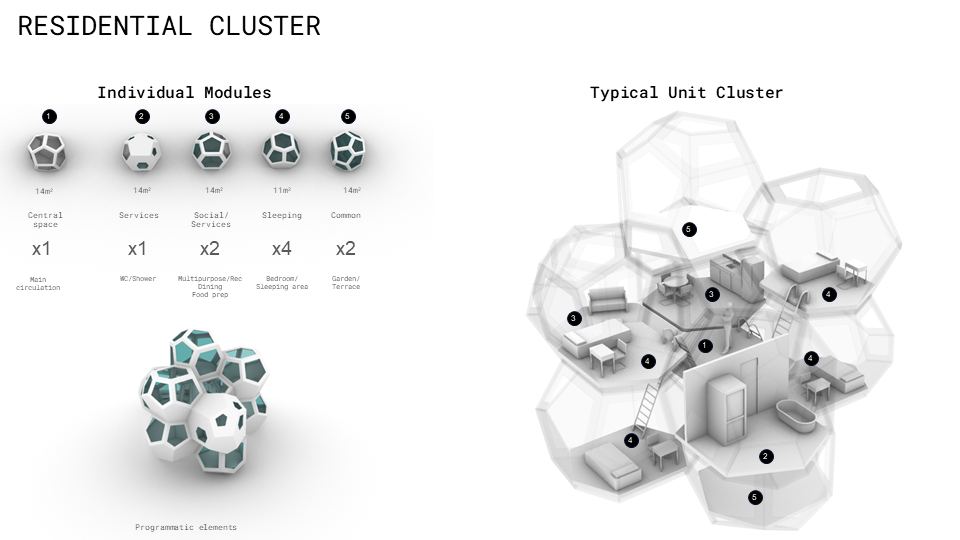

The final documentation includes plan views of the main aggregation within the lava tube. Color-coded sections illustrate the architecture and its programmatic relationship to the site. Furthermore, by using shared parameters within our adaptive families, we were able to create filtered views and schedules. For example, we documented the clusters with specific colors for easy visual organization. A color-coded schedule further breaks out the quantity, material, and panel areas associated with each cluster. A detailed sheet focused on the corridor organizes the quad panels via a color-coded filter and schedule as well as exploded axonometric views. Finally, detailed 3D structural models, annotated exploded axons, plans, and sections thoroughly describe each cluster: residential, service, and harvest.
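A schedule of this kind is a grouped summary over panels keyed by a shared parameter. The following sketch totals panel counts and areas per cluster; the field names and areas are hypothetical, and the code stands apart from Revit's own scheduling tools.

```python
from collections import defaultdict

def panel_schedule(panels):
    """Total panel count, solid area, and glazing area for each cluster."""
    totals = defaultdict(lambda: {"count": 0, "solid_area": 0.0, "glazing_area": 0.0})
    for panel in panels:
        row = totals[panel["cluster"]]
        row["count"] += 1
        row["solid_area"] += panel["solid_area"]
        row["glazing_area"] += panel["glazing_area"]
    return dict(totals)

# Illustrative panels only.
panels = [
    {"cluster": "residential", "shape": "penta", "solid_area": 1.5, "glazing_area": 0.0},
    {"cluster": "residential", "shape": "hexa", "solid_area": 2.5, "glazing_area": 1.0},
    {"cluster": "harvest", "shape": "hexa", "solid_area": 0.5, "glazing_area": 2.0},
]
schedule = panel_schedule(panels)
```

Because every panel carries its cluster name, the same grouping key can drive both the schedule rows and the color filters in views.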

Throughout this process, we learned about Revit best practices. While challenging, this process will certainly help us improve future BIM efforts and create more effective and novel workflows.

Lessons Learned

Wasp aggregation methods are somewhat difficult to control, and because our aggregations were generated sequentially, the division of labour was complicated. In future, it would be beneficial to write a ghPython script to convert the Wasp aggregation to its JSON-encoded structure, which could then be passed to a Hops component, opening up multi-threaded computation and allowing for separation of the workflow.
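The idea of passing an aggregation between processes as JSON can be sketched with the standard library alone. The schema below (a list of parts, each with an ID, a part name, and a 4x4 transform) is hypothetical and is not Wasp's actual serialization format; it only illustrates the round trip.

```python
import json

def aggregation_to_json(parts):
    """Serialize a list of placed parts to a JSON string (hypothetical schema)."""
    payload = {
        "parts": [
            {
                "id": part["id"],
                "name": part["name"],
                "transform": [list(row) for row in part["transform"]],
            }
            for part in parts
        ]
    }
    return json.dumps(payload, sort_keys=True)

def aggregation_from_json(text):
    """Recover the list of parts from a JSON string."""
    return json.loads(text)["parts"]

# Illustrative part: an identity transform translated 3 units along X.
parts = [{
    "id": 0,
    "name": "crop_module",
    "transform": (
        (1.0, 0.0, 0.0, 3.0),
        (0.0, 1.0, 0.0, 0.0),
        (0.0, 0.0, 1.0, 0.0),
        (0.0, 0.0, 0.0, 1.0),
    ),
}]
encoded = aggregation_to_json(parts)
decoded = aggregation_from_json(encoded)
```

Once an aggregation is a plain string, separate team members or separate processes can consume it without sharing a single Grasshopper definition.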

The many restrictions imposed by Revit on component families, groups, and linked files were a frustration for our project, as the simple workflow of using blocks in Rhino could not be easily transferred to Revit. Our final workflow, which used panel vertices as adaptive points for insertion, was cumbersome and prevented us from easily making changes once the panels were in Revit. Other options that might have been more suitable include using nested families (respecting Revit’s rules about which types of families can be nested) or using Dynamo to insert linked files in their correct location and orientation (using shared coordinates).

It is hoped that specklepy and pyRevit will develop further and provide some of the functionality that was required to meet our goals.