This project documents a one-week workshop that introduced students to the COMPAS framework. Our goal was to design a tile mosaic that would be part of a collaborative pick-and-place workflow with the UR5 robot arm, incorporating COMPAS FAB, Kangaroo (Grasshopper), and ROS.

What is COMPAS?

COMPAS FAB is a robotic fabrication package built on the COMPAS framework. It is developed by Gramazio Kohler Research and the wider community to integrate robotic fabrication into computational research in architecture and structures. This project builds on several prior works. The COMPAS framework (Tom van Mele et al., 2017-2024) provides the core tools for computational research in architecture and structures. “Non-planar Upcycled Robotic Tiling” by Aikaterini Toumpetski and Nikolaos Maslarinos (ETH Zürich, MAS 2021/22 Thesis Project) explores robotic tiling with upcycled materials. “COMPAS FAB: Robotic fabrication package” (Gonzalo Casas et al., 2018-2023) integrates robotic systems into the COMPAS ecosystem. The “Mosaic // Sharp edge” workshop (Angel Muñoz and Alexandre Dubor, IAAC, 2021) applies robotic fabrication to detailed mosaic work. Finally, the “Mobile Robotic Tiling” project by Gramazio Kohler Research (2016) demonstrates the potential of mobile robotics in construction. Drawing on these works, this project applies robotic fabrication to a collaborative tile-mosaic workflow.

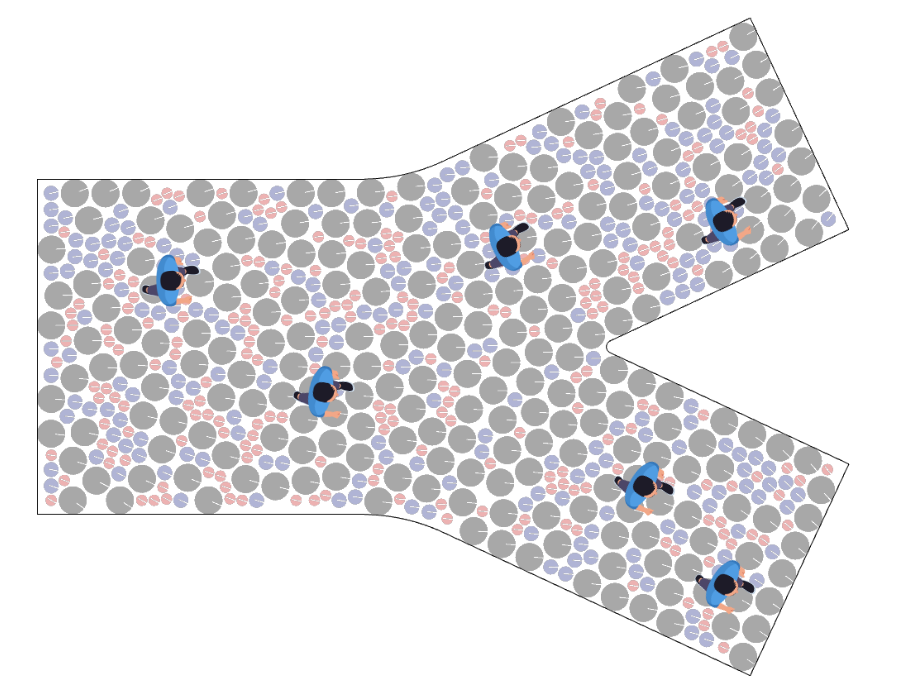

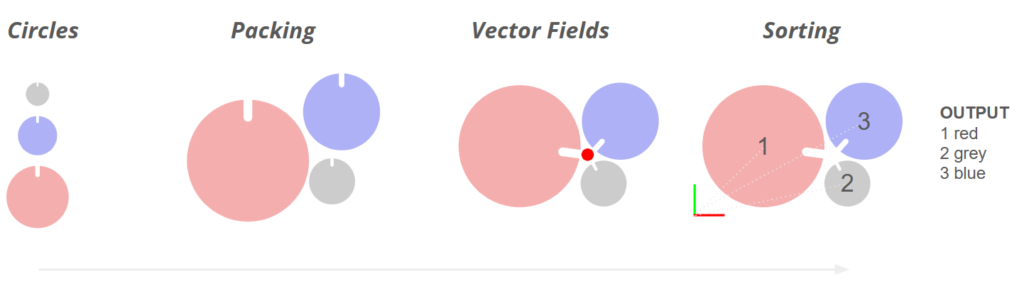

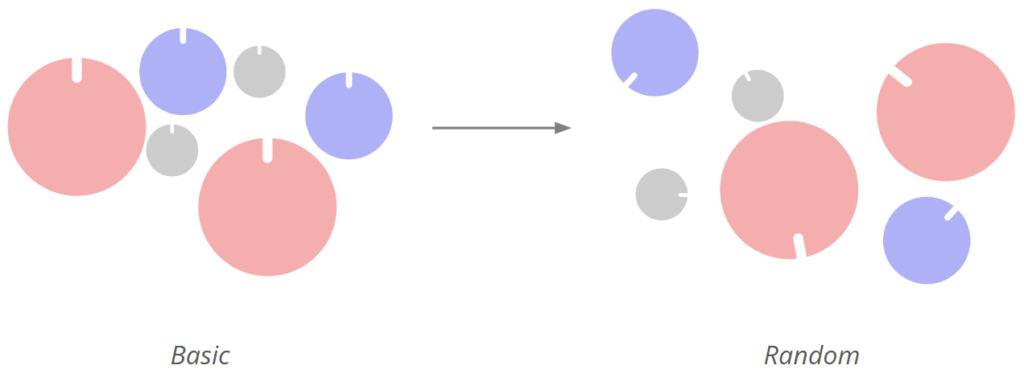

Our design took a bottom-up approach, embracing the randomness of tile size and color, and ultimately finding order through the integration of vector fields to align the tiles in a specific direction. After taking a step back from our work, we saw its value as a potential wayfinding solution for the built environment.

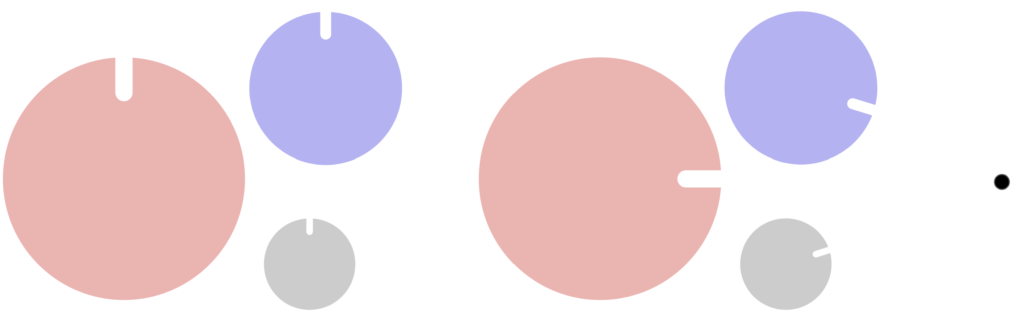

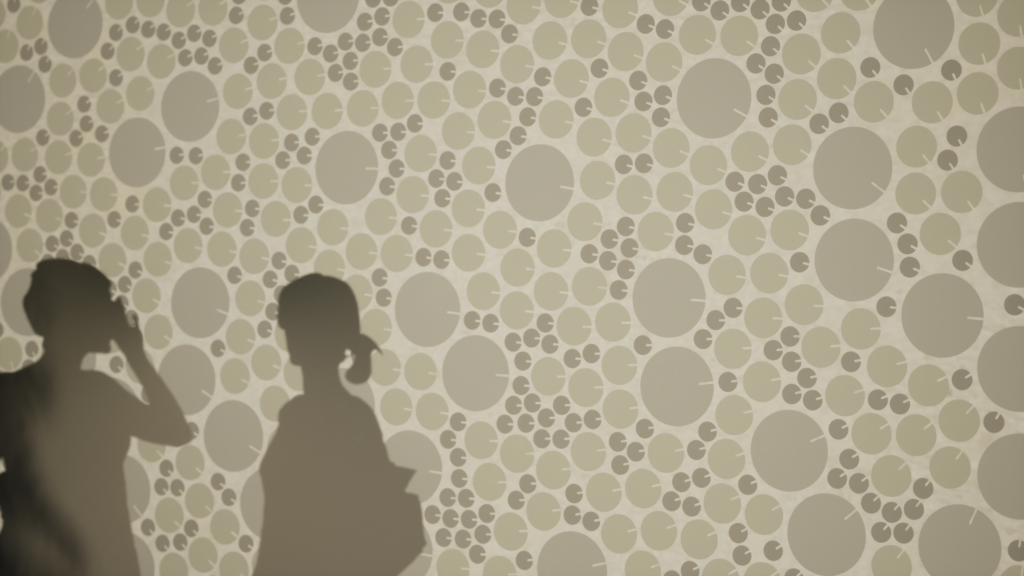

Our design was simple: circles in three different sizes and colors, cut out of Aludibond. Each circle has a small slit cut into it, which we wanted to use to convey information. In the image below, the three tiles we are using are shown on the left. On the right are the same three tiles rotated to point in a common direction, which is what we want to use for wayfinding.

The slits in the circles could always point in a desired direction, helping lead people somewhere or guide the eye to a certain position. It is a subtle cue that can be implemented into many different locations.

Digital Workflow

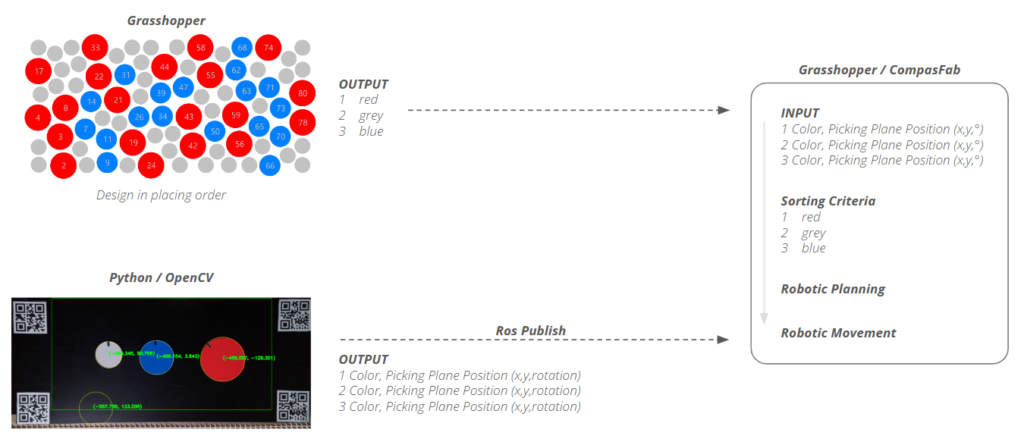

The tiles are packed into a bounding area using the Kangaroo circle-packing simulation. Once the tiles are nested, each tile is rotated so that its slit aligns with the vector field applied over the bounding area. Once the design stage is complete, the tiles are sorted by distance from the origin (0, 0); this sorted order is the placing order in the robotic workflow. Each tile output carries metadata on the tile’s color, position, and orientation.
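The orientation and sorting steps can be sketched in plain Python. This is a minimal illustration, not the Grasshopper definition used in the workshop: the `Tile` class, the attractor-based `field_direction` function, and the color names are all hypothetical stand-ins, and the packed positions are assumed to come from the Kangaroo simulation.

```python
import math
from dataclasses import dataclass


@dataclass
class Tile:
    x: float
    y: float
    radius: float
    color: str            # placeholder color names, not the workshop's palette
    angle: float = 0.0    # slit direction in radians, set by the vector field


def field_direction(x, y, attractor):
    """Hypothetical vector field: unit vector from (x, y) toward an attractor point."""
    dx, dy = attractor[0] - x, attractor[1] - y
    length = math.hypot(dx, dy)
    if length == 0.0:
        return (1.0, 0.0)  # arbitrary fallback when the tile sits on the attractor
    return (dx / length, dy / length)


def orient_and_sort(tiles, attractor):
    """Align each slit with the field, then sort by distance from the origin."""
    for tile in tiles:
        vx, vy = field_direction(tile.x, tile.y, attractor)
        tile.angle = math.atan2(vy, vx)
    # The sorted order doubles as the placing order for the robot.
    return sorted(tiles, key=lambda t: math.hypot(t.x, t.y))


if __name__ == "__main__":
    packed = [
        Tile(3.0, 4.0, 1.0, "red"),
        Tile(1.0, 0.0, 0.5, "blue"),
        Tile(0.0, 2.0, 0.75, "white"),
    ]
    for tile in orient_and_sort(packed, attractor=(10.0, 0.0)):
        print(tile.color, (tile.x, tile.y), round(math.degrees(tile.angle), 1))
```

Any other field (for example, one sampled from curves in Grasshopper) could replace `field_direction` without changing the sorting step.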

Robotic picking approach

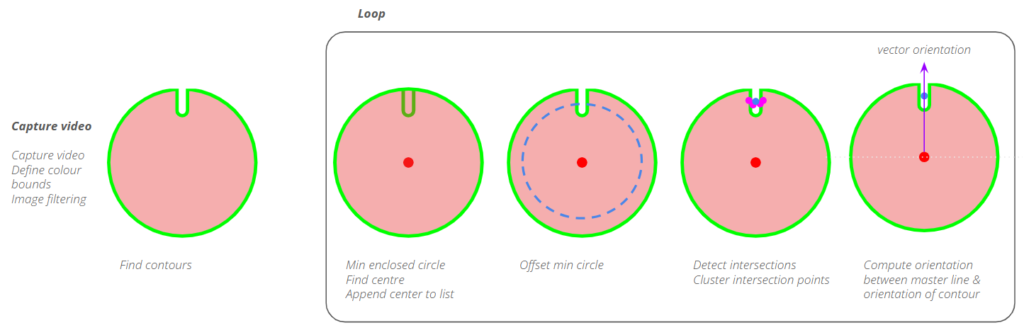

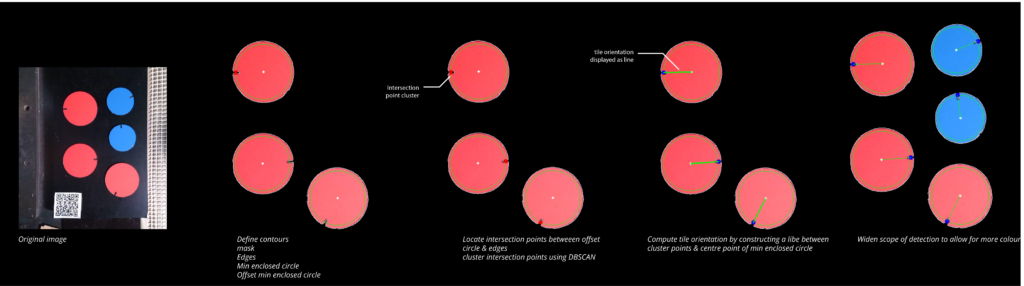

We aimed to enhance the scanning process to compute the orientation of randomly placed tiles at the picking station. This required developing a computer vision methodology capable of feature detection and determining object orientation.

Macro workflow

Design, OpenCV, and COMPAS FAB

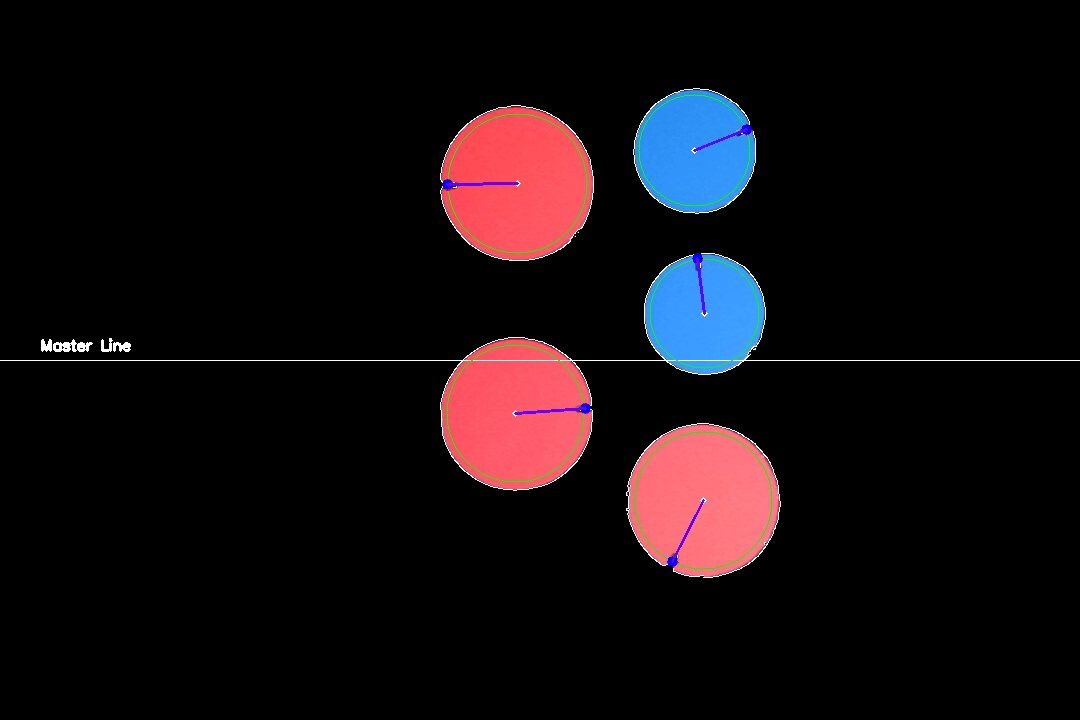

Our overall workflow combines Grasshopper, ROS, Docker, and COMPAS FAB running inside Grasshopper. As shown in the previous step, Grasshopper outputs the number of each circle and its associated color. We feed this information into COMPAS FAB, where it is merged with data from a Python script running OpenCV. The script, detailed later, detects the color, position, and rotation of each circle in the pickup area in front of the robot. This information is sorted, and the color, picking-plane position, and rotation of each circle are sent to COMPAS FAB. COMPAS FAB then identifies the first circle required by the design, finds a matching circle in the OpenCV data, picks it up, and places it at its target position.
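The matching step described above can be sketched as follows. This is an illustrative outline only, assuming that tiles are matched by color alone and that both sides report angles in degrees; the dictionary keys and the `plan_pick_and_place` function are hypothetical and are not part of COMPAS FAB or the workshop code.

```python
def plan_pick_and_place(design_tiles, detected_tiles):
    """Pair each design tile, in placing order, with an unused detected tile of the same color."""
    available = list(detected_tiles)
    plan = []
    for target in design_tiles:
        match = next((d for d in available if d["color"] == target["color"]), None)
        if match is None:
            raise LookupError("no detected tile left with color " + target["color"])
        available.remove(match)  # each physical tile can only be picked once
        # Rotation the gripper must apply between pick and place, wrapped to [-180, 180).
        rotation = (target["angle"] - match["angle"] + 180.0) % 360.0 - 180.0
        plan.append({
            "pick": match["position"],
            "place": target["position"],
            "rotation": rotation,
        })
    return plan


if __name__ == "__main__":
    design = [
        {"color": "red", "position": (0.1, 0.2), "angle": 90.0},
        {"color": "blue", "position": (0.3, 0.2), "angle": 0.0},
    ]
    detected = [
        {"color": "blue", "position": (0.5, 0.0), "angle": 350.0},
        {"color": "red", "position": (0.6, 0.1), "angle": 30.0},
    ]
    for step in plan_pick_and_place(design, detected):
        print(step)
```

Wrapping the rotation keeps the correction to the shorter turn, which is one plausible way to limit wrist motion; the actual robot targets would still need to be built as frames and planned through COMPAS FAB.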

Computer Vision Logic

Exploiting the main feature of our tile, the notch, we were able to determine the orientation of each tile by finding and clustering the intersection points between the detected edge and an offset circle. This allowed us to define a direction vector that Grasshopper and COMPAS FAB can read in the robotic picking process.
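A rough sketch of this idea in plain Python is shown below. It is not the workshop script: it assumes the tile contour, center, and radius have already been detected (in practice these could come from OpenCV calls such as `cv2.findContours` and `cv2.minEnclosingCircle`), and it replaces the clustering step with a simple mean of the crossing points, which is only adequate for a single notch. The `notch_direction` and `synthetic_tile` helpers are hypothetical names.

```python
import math


def notch_direction(contour, center, offset_radius):
    """Estimate the notch angle (degrees) from where the contour crosses an offset circle.

    The offset circle is concentric with the tile and slightly smaller, so the
    outer edge only crosses it where the notch dips inward.
    """
    cx, cy = center
    crossings = []
    n = len(contour)
    for i in range(n):
        x1, y1 = contour[i]
        x2, y2 = contour[(i + 1) % n]  # closed contour: last point connects to first
        r1 = math.hypot(x1 - cx, y1 - cy)
        r2 = math.hypot(x2 - cx, y2 - cy)
        if (r1 - offset_radius) * (r2 - offset_radius) < 0:
            # Approximate crossing point by interpolating along the segment.
            t = (offset_radius - r1) / (r2 - r1)
            crossings.append((x1 + t * (x2 - x1), y1 + t * (y2 - y1)))
    if not crossings:
        return None  # no notch found on this contour
    # Simplification: average the crossings instead of running a clustering step.
    mx = sum(p[0] for p in crossings) / len(crossings)
    my = sum(p[1] for p in crossings) / len(crossings)
    return math.degrees(math.atan2(my - cy, mx - cx)) % 360.0


def synthetic_tile(center, radius, notch_deg, notch_depth, half_width_deg=5.0, samples=360):
    """Build a test contour: a circle whose radius drops by notch_depth around notch_deg."""
    cx, cy = center
    points = []
    for i in range(samples):
        a = 360.0 * i / samples
        diff = (a - notch_deg + 180.0) % 360.0 - 180.0
        r = radius - notch_depth if abs(diff) < half_width_deg else radius
        points.append((cx + r * math.cos(math.radians(a)), cy + r * math.sin(math.radians(a))))
    return points


if __name__ == "__main__":
    contour = synthetic_tile(center=(100.0, 100.0), radius=40.0, notch_deg=60.0, notch_depth=10.0)
    print(notch_direction(contour, (100.0, 100.0), offset_radius=35.0))
```

The angle is expressed in the contour's own coordinate system; with image coordinates, where the y axis points down, it would need to be flipped before being passed to the robot.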

References

This workshop is based on the following prior work:

- Aikaterini Toumpetski, Nikolaos Maslarinos. Non-planar Upcycled Robotic Tiling. ETH Zürich, MAS 2021/22 Thesis Project, October, 2022. https://www.masdfab.arch.ethz.ch/2021-22-t3-work

- Tom van Mele, et al., COMPAS: A framework for computational research in architecture and structures, http://compas.dev (2017-2024). doi:10.5281/zenodo.2594510. URL https://doi.org/10.5281/zenodo.2594510

- Gonzalo Casas et al., COMPAS FAB: Robotic fabrication package for the COMPAS framework, https://github.com/compas-dev/compas_fab, Gramazio Kohler Research, ETH Zurich (2018-2023). doi:10.5281/zenodo.3469478. URL https://doi.org/10.5281/zenodo.3469478

- Angel Muñoz, Alexandre Dubor. Workshop 2.1: Mosaic // Sharp edge. IAAC Blog. February, 2021. https://www.iaacblog.com/programs/workshop-2-1-mosaic-sharp-edge

- Gramazio Kohler Research. Mobile Robotic Tiling. Gramazio Kohler Research (2016). https://gramaziokohler.arch.ethz.ch/web/e/forschung/257.html