Mobile Robotic Scanning & Discrete Element Analysis

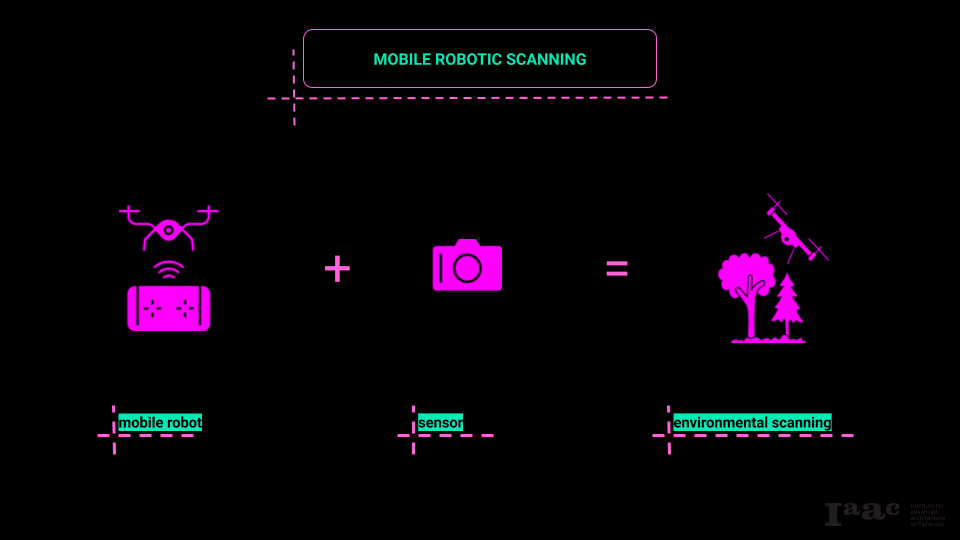

This workshop served as an introduction to mobile robotics for scanning. We explored various types of mobile robots and different kinds of sensors, focusing on how they integrate with one another to enable diverse scanning approaches. Based on these investigations, we designed a specific scanning methodology suited to our application.

The second aspect of the workshop focused on data processing and analysis, ultimately integrating this data into an actionable application.

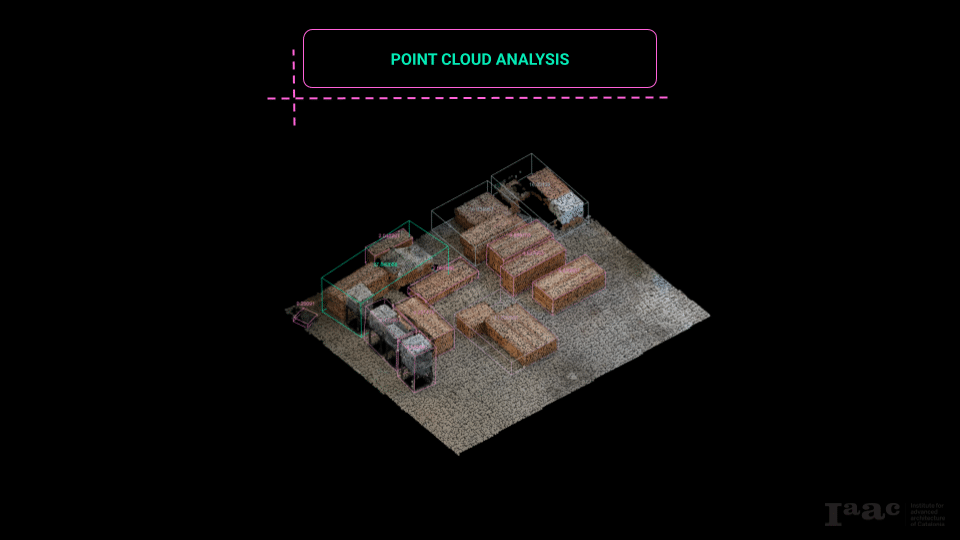

The application we developed centers on the discrete segmentation of point clouds to create accurate volumetric assessments for logistical operations. This objective was the primary driver for the design of our scanning operations.

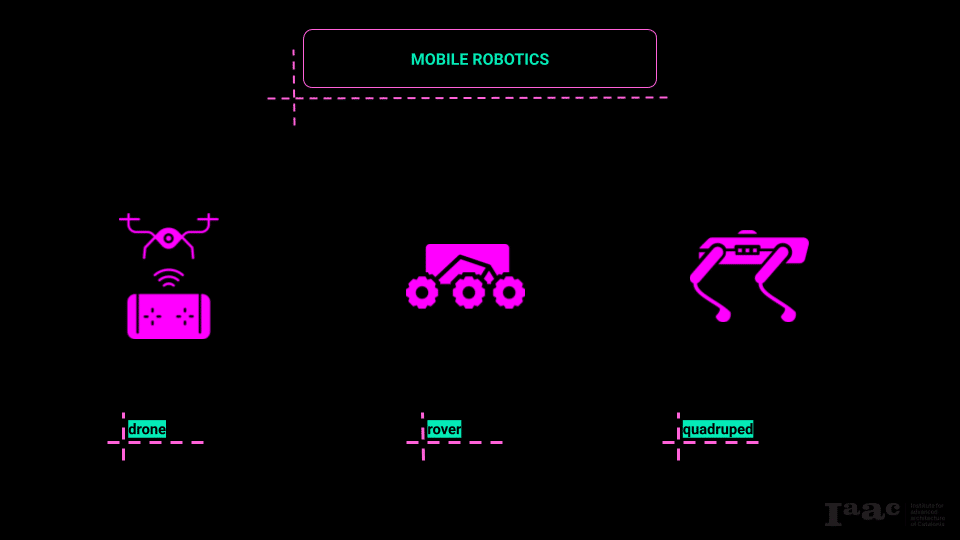

We worked with three distinct types of mobile robots: an aerial drone (Phantom 3), a ground rover (Clearpath Husky), and a quadruped robot (Unitree Go2). Each offers unique advantages and poses specific challenges within a scanning workflow.

- Drone (Phantom 3):

The drone provides aerial mobility, enabling dynamic altitude changes and access to difficult-to-reach areas. Its ability to capture data from varying elevations makes it particularly effective for scanning large objects or areas with complex geometry. However, drones are limited by battery life, flight regulations, and environmental conditions such as wind. Precision at close range can also be a challenge due to stability constraints during flight.

- Rover (Husky):

The Husky rover offers stable ground-based mobility. Its robust platform can carry heavier sensor payloads and navigate relatively uneven terrain. Its slower movement allows for more deliberate and precise scanning, particularly close to ground level. The trade-off lies in its limited ability to traverse highly irregular terrain and its inability to capture elevated perspectives without additional hardware.

- Quadruped (Go2):

The quadruped robot introduces flexibility in navigating challenging environments, including stairs, rubble, or natural landscapes. Its biomimetic locomotion allows it to approach objects at various heights and angles. However, payload limitations and balance constraints can complicate the integration of heavier sensors, and its mobility requires careful planning to maintain stability while scanning.

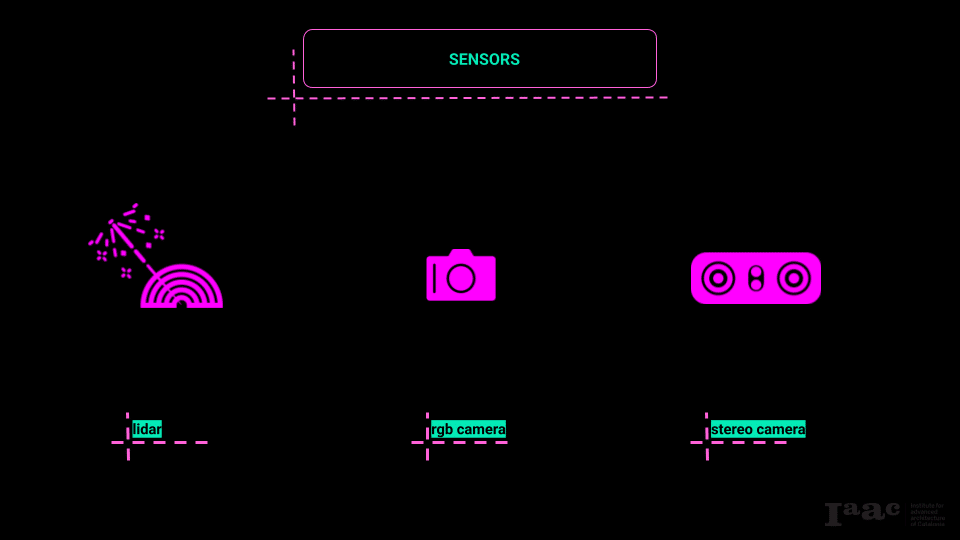

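We worked with three sensing approaches: an RGB camera, photogrammetry, and a stereo camera. Strictly speaking, photogrammetry is a reconstruction technique rather than a sensor, since it derives geometry from ordinary RGB images. Each approach operates on different principles and offers distinct benefits and limitations in point cloud generation.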

- RGB Camera:

A standard RGB camera captures images in red, green, and blue channels. It’s primarily used in photogrammetric workflows, where multiple overlapping photographs are combined to reconstruct 3D models. The main advantages of RGB cameras are their light weight and accessibility. However, they rely heavily on lighting conditions and texture contrast in the environment to generate accurate data.

- Photogrammetry:

Photogrammetry is a process that reconstructs 3D geometry from 2D images by identifying shared points across multiple photographs taken from different perspectives. It leverages computer vision algorithms to generate dense point clouds. The benefit of this method is its low hardware requirement (only a standard camera is needed), but it demands extensive computational resources and high-quality imagery for accurate results.

- Stereo Camera:

Stereo cameras capture two simultaneous images from slightly different viewpoints, mimicking human binocular vision. By analyzing the disparity between these images, they estimate depth information in real time. Stereo cameras are advantageous for immediate depth sensing without extensive post-processing, but they often struggle with accuracy at longer distances or in textureless environments.
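The range limitation of stereo cameras follows from the geometry. For an ideal rectified pair, depth is Z = f·B/d (focal length f in pixels, baseline B in metres, disparity d in pixels), and differentiating gives a depth error of roughly Z²/(f·B) per pixel of disparity error. The sketch below assumes an ideal pinhole model; the focal length and baseline are hypothetical values, not the specification of any camera used in the workshop.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth (m) of a point seen by an ideal, rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive; zero corresponds to a point at infinity")
    return focal_px * baseline_m / disparity_px


def depth_error(focal_px: float, baseline_m: float, depth_m: float, disparity_error_px: float = 1.0) -> float:
    """First-order depth uncertainty for a given disparity error: dZ ~ Z**2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px


# Hypothetical camera: 700 px focal length, 12 cm baseline.
F_PX, BASELINE_M = 700.0, 0.12
for d in (84.0, 8.4):
    z = depth_from_disparity(F_PX, BASELINE_M, d)
    err = depth_error(F_PX, BASELINE_M, z)
    print(f"disparity {d:5.1f} px -> depth {z:5.2f} m, about +/- {err:.3f} m per pixel of matching error")
```

Ten times the distance yields a hundred times the uncertainty for the same one-pixel matching error, which is why accuracy drops off at longer range.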

Scanning Methodology

We chose to employ a drone equipped with an RGB camera as our primary scanning tool. The drone’s capability to adjust elevation allows us to capture data from multiple angles and perspectives, while the RGB camera offers lightweight and efficient image capture suitable for photogrammetric processing. The relatively small data footprint of RGB imagery further streamlined our workflow.

To complement the drone scans, we incorporated a secondary RGB camera operated manually. This allowed us to capture close-up images of areas where the drone struggled, particularly in capturing fine details or occluded geometries. This dual approach was designed to maximize coverage and detail, balancing aerial and ground perspectives within the scanning operation.

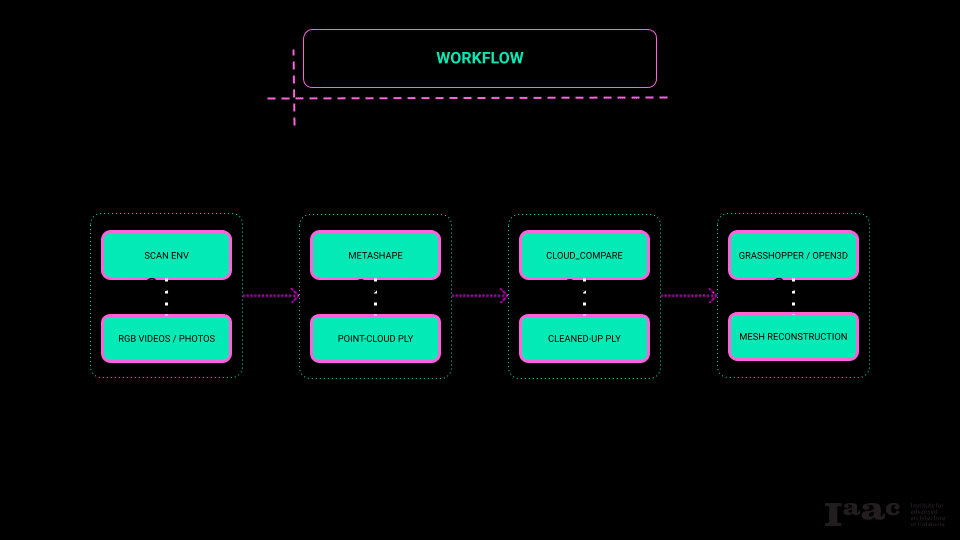

Our workflow followed a structured pipeline from data acquisition to volumetric analysis:

Analysis and Segmentation (Grasshopper/Open3D):

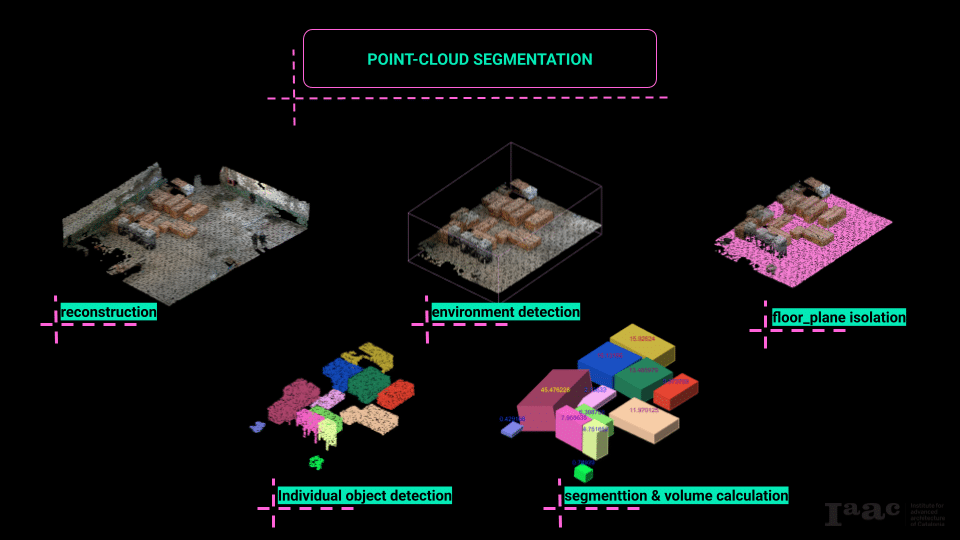

We used Open3D, integrated through Python components in Grasshopper, to analyze the composite point cloud. The steps included:

- Reconstruction

- Environment detection

- Floor plane isolation

- Object detection and segmentation

- Volume calculation

Data Acquisition:

We conducted the scanning process using both the drone (with its onboard RGB camera) and the manually operated RGB camera. The drone captured aerial images while the manual camera focused on close-range details.

Photogrammetric Reconstruction (Agisoft Metashape):

We processed the images from both devices in Metashape to generate separate point clouds. Metashape’s photogrammetric algorithms reconstructed 3D models by identifying common points across the image sets.

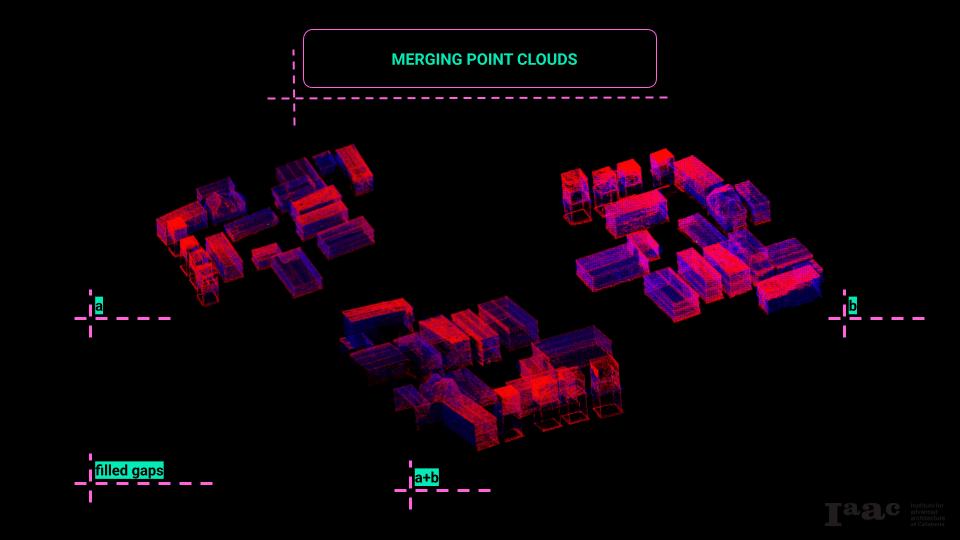

Point Cloud Integration (CloudCompare):

We imported the point clouds into CloudCompare to create a composite dataset. This step required careful alignment and merging of the separate clouds to produce a cohesive and accurate model.

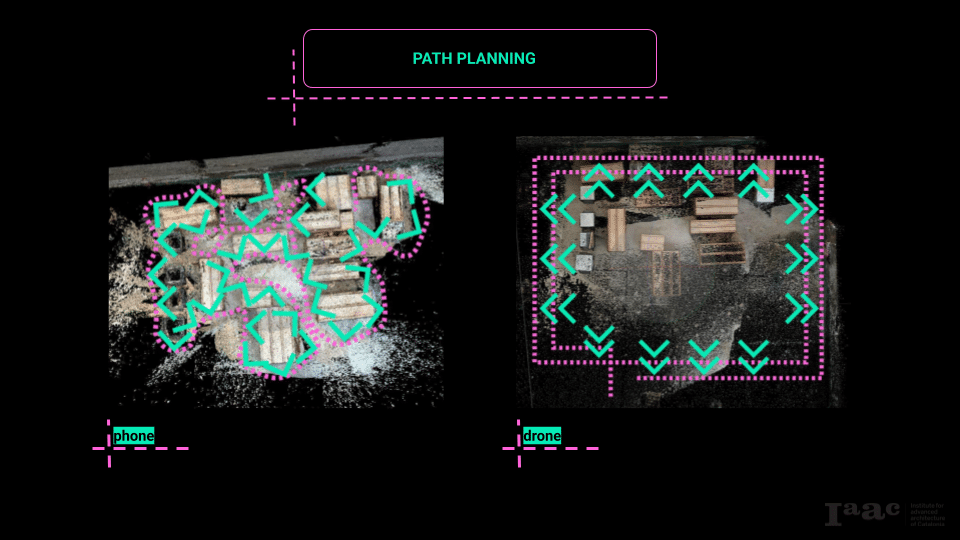

We implemented two distinct scanning paths to maximize point cloud coverage and detail:

- Aerial Path (Drone):

The drone followed a Cartesian, grid-like flight pattern, making two passes over the scanning area. The first pass was at a higher altitude with the camera oriented directly downward to capture top-down views. The second pass was conducted at a lower altitude with the camera tilted diagonally. This approach was intended to capture angularity and fine details from multiple perspectives, improving the quality of the photogrammetric reconstruction. Both passes were manually piloted, not autonomous.

- Ground Path (On Foot):

The manual camera operator followed a perimeter-based path inspired by maze-solving strategies, specifically the “wall-following” method. By consistently following a single wall, the path ensured continuous perimeter coverage, minimizing gaps in close-range scanning and providing additional detail to complement the drone’s aerial data.
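Although the passes were flown manually, a grid (“lawnmower” or boustrophedon) pattern like the aerial path is usually sized from the camera footprint and the desired side overlap. The sketch below assumes a nadir camera over flat ground, where one image covers a ground width of altitude × sensor width / focal length and flight lines are spaced at that footprint × (1 − side overlap). The sensor size, focal length, overlap, altitudes, and survey area are hypothetical, and the oblique pass simply reuses the nadir spacing as a simplification.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Waypoint:
    x: float          # metres across the survey area
    y: float          # metres along the flight lines
    z: float          # altitude above ground (m)
    pitch_deg: float  # camera pitch: -90 is straight down


def line_spacing(altitude_m: float, sensor_width_mm: float, focal_mm: float, side_overlap: float) -> float:
    """Spacing between adjacent flight lines for a nadir camera over flat ground."""
    footprint_m = altitude_m * sensor_width_mm / focal_mm  # ground width covered by one image
    return footprint_m * (1.0 - side_overlap)


def lawnmower(width_m: float, length_m: float, altitude_m: float, pitch_deg: float, spacing_m: float) -> List[Waypoint]:
    """Boustrophedon pattern: parallel lines along y, flown in alternating directions."""
    if spacing_m <= 0:
        raise ValueError("line spacing must be positive")
    n_lines = math.ceil(width_m / spacing_m) + 1  # enough lines that no gap exceeds spacing_m
    step = width_m / (n_lines - 1) if n_lines > 1 else 0.0
    path: List[Waypoint] = []
    for i in range(n_lines):
        x = i * step
        y_start, y_end = (0.0, length_m) if i % 2 == 0 else (length_m, 0.0)
        path.append(Waypoint(x, y_start, altitude_m, pitch_deg))
        path.append(Waypoint(x, y_end, altitude_m, pitch_deg))
    return path


# Hypothetical parameters: 6.17 mm sensor width, 3.61 mm focal length, 70 % side overlap, 40 m x 30 m area.
SENSOR_MM, FOCAL_MM, OVERLAP = 6.17, 3.61, 0.7
high_pass = lawnmower(40.0, 30.0, 30.0, -90.0, line_spacing(30.0, SENSOR_MM, FOCAL_MM, OVERLAP))
# Oblique pass: nadir spacing reused as a simplification; a tilted footprint is larger and uneven.
low_pass = lawnmower(40.0, 30.0, 15.0, -45.0, line_spacing(15.0, SENSOR_MM, FOCAL_MM, OVERLAP))
mission = high_pass + low_pass
```

Halving the altitude halves the footprint, so the lower pass needs roughly twice as many lines for the same overlap.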

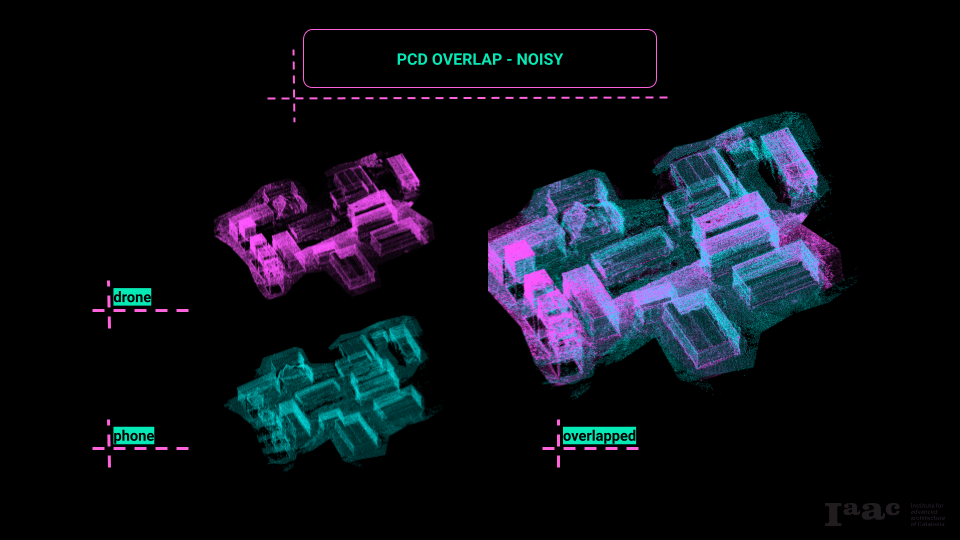

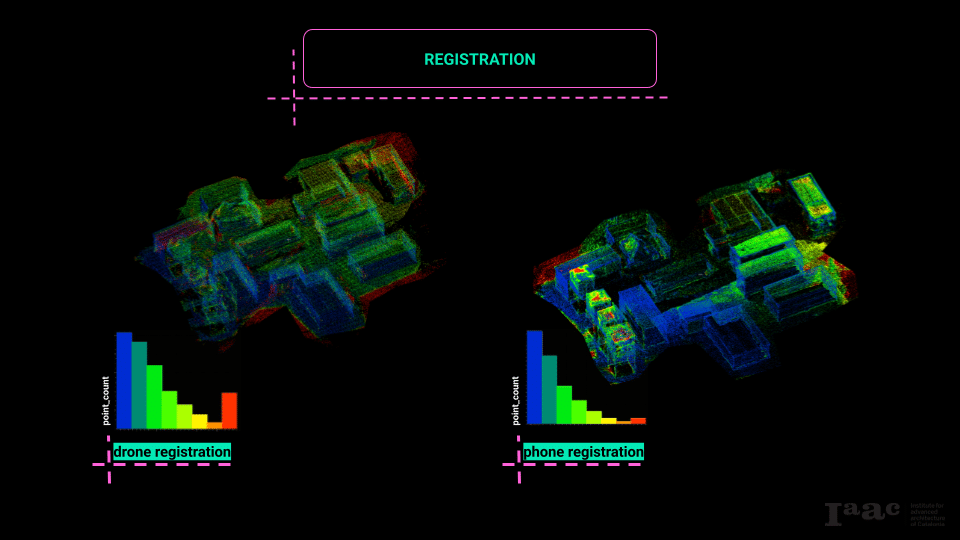

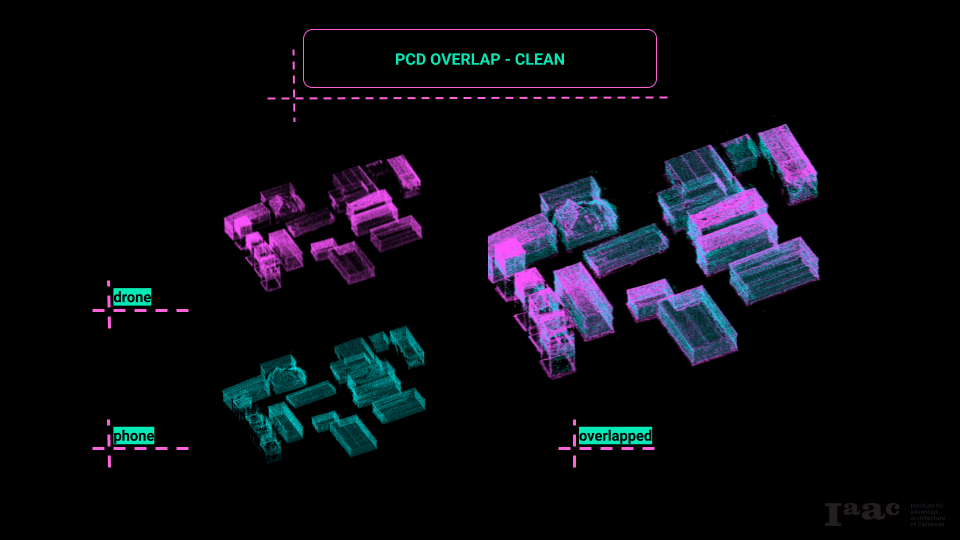

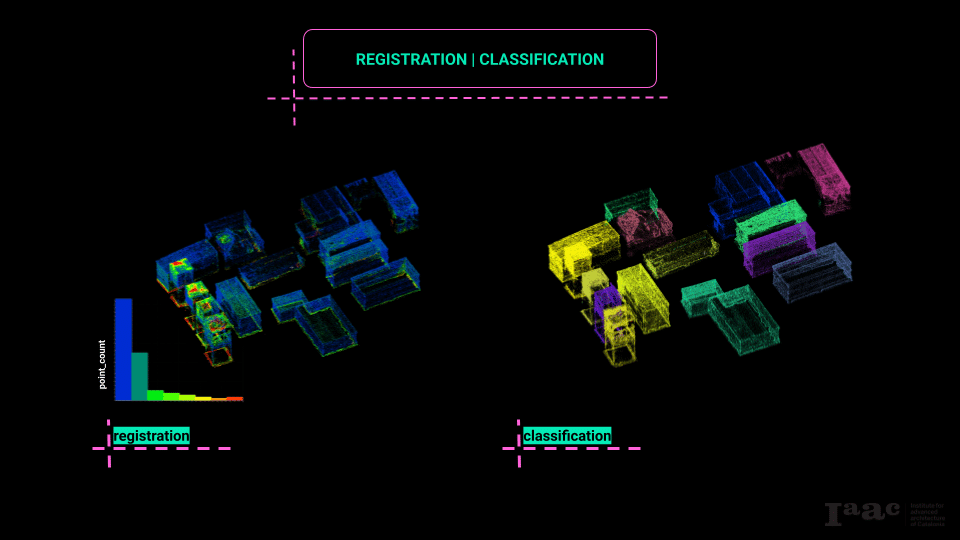

CloudCompare was used to merge the two point clouds (one from the drone and one from the manual camera) into a single composite point cloud. Initially, the merge was hampered by excessive noise and poor registration. In point cloud processing, and in CloudCompare, “registration” refers to aligning one cloud with another by matching its points to corresponding points in the other cloud. When point clouds are noisy or lack sufficient overlap, registration errors occur, resulting in misalignments and gaps.
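One simple way to quantify a registration problem is to measure, for every point in one cloud, the distance to its nearest neighbour in the other, similar in spirit to CloudCompare’s cloud-to-cloud (C2C) distance tool. The brute-force sketch below is O(n·m) and only suited to small samples (a k-d tree is the usual speed-up); the function names, tolerance, and toy data are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def nearest_distances(source: Sequence[Point], target: Sequence[Point]) -> List[float]:
    """Distance from every source point to its closest target point (brute force)."""
    return [min(math.dist(p, q) for q in target) for p in source]


def alignment_report(source: Sequence[Point], target: Sequence[Point], tolerance: float) -> Tuple[float, float]:
    """Return (RMS nearest-neighbour distance, share of source points farther than tolerance)."""
    if not source or not target:
        raise ValueError("both clouds need at least one point")
    d = nearest_distances(source, target)
    rms = math.sqrt(sum(x * x for x in d) / len(d))
    share_outside = sum(1 for x in d if x > tolerance) / len(d)
    return rms, share_outside


# Toy example: a 1 m grid and the same grid shifted 5 cm in x, mimicking a registration offset.
target = [(float(x), float(y), 0.0) for x in range(5) for y in range(5)]
source = [(x + 0.05, y, z) for x, y, z in target]
rms, share = alignment_report(source, target, tolerance=0.02)
```

Areas covered by only one of the two clouds also produce large distances, so the metric is only meaningful over the region where the scans overlap.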

To address this, we performed a cleaning process on both point clouds prior to registration. This involved removing outliers and reducing noise to enhance point correspondence between datasets. By improving data quality, we achieved a cleaner, more accurate composite point cloud, which was better suited for further analysis.
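The cleaning step can be illustrated with statistical outlier removal: compute each point’s mean distance to its k nearest neighbours, then discard points whose mean exceeds the global mean by more than a chosen number of standard deviations. CloudCompare’s SOR filter and Open3D’s `remove_statistical_outlier(nb_neighbors, std_ratio)` follow this idea. The stdlib version below is a brute-force sketch for small clouds; `k`, `std_ratio`, and the toy cloud are illustrative, not the settings used in the workshop.

```python
import math
import statistics
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def statistical_outlier_filter(points: Sequence[Point], k: int = 8, std_ratio: float = 2.0) -> List[Point]:
    """Keep points whose mean k-nearest-neighbour distance is <= mean + std_ratio * std."""
    if len(points) <= k:
        return list(points)  # too few points to estimate neighbourhood statistics
    mean_knn = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        mean_knn.append(sum(dists[:k]) / k)
    mu = statistics.fmean(mean_knn)
    sigma = statistics.pstdev(mean_knn)
    threshold = mu + std_ratio * sigma
    return [p for p, m in zip(points, mean_knn) if m <= threshold]


# Toy cloud: a 5 x 5 patch with 10 cm spacing plus one stray point far away.
grid = [(i * 0.1, j * 0.1, 0.0) for i in range(5) for j in range(5)]
cloud = grid + [(5.0, 5.0, 5.0)]
cleaned = statistical_outlier_filter(cloud, k=4, std_ratio=2.0)
```

Lower `std_ratio` values clean more aggressively but also start trimming legitimate edge points, whose neighbourhoods are naturally sparser.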

Grasshopper, with Open3D integrated through Python components, served as the primary tool for our point cloud analysis. The analytical pipeline consisted of the following stages:

- Reconstruction:

Importing the composite point cloud for processing.

- Environment Detection:

Differentiating the environment (e.g., ground plane) from objects of interest.

- Floor Plane Isolation:

Identifying and isolating the floor plane to establish a reference for object segmentation.

- Object Detection and Segmentation:

Clustering points into discrete objects based on proximity and connectivity criteria.

- Volume Calculation:

Calculating the volumetric data for each segmented object to inform logistical decisions.
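The floor isolation and segmentation stages can be sketched with two standard techniques: RANSAC plane fitting (the approach behind Open3D’s `segment_plane`) and Euclidean clustering by a distance threshold (a simplified relative of the DBSCAN used by Open3D’s `cluster_dbscan`). This stdlib version is illustrative only; the thresholds, iteration count, and toy scene are assumptions, and it is not the Grasshopper script used in the workshop.

```python
import math
import random
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]
Plane = Tuple[float, float, float, float]  # (a, b, c, d): a*x + b*y + c*z + d = 0, unit normal


def plane_from_points(p1: Point, p2: Point, p3: Point) -> Optional[Plane]:
    """Plane through three points, or None if they are (nearly) collinear."""
    u = [p2[i] - p1[i] for i in range(3)]
    v = [p3[i] - p1[i] for i in range(3)]
    n = (u[1] * v[2] - u[2] * v[1], u[2] * v[0] - u[0] * v[2], u[0] * v[1] - u[1] * v[0])
    norm = math.sqrt(sum(comp * comp for comp in n))
    if norm < 1e-12:
        return None
    a, b, c = (comp / norm for comp in n)
    return a, b, c, -(a * p1[0] + b * p1[1] + c * p1[2])


def ransac_plane(points: List[Point], threshold: float = 0.02, iterations: int = 200, seed: int = 0):
    """Return the sampled plane with the most points within `threshold`, plus their indices."""
    rng = random.Random(seed)
    best_plane: Optional[Plane] = None
    best_inliers: List[int] = []
    for _ in range(iterations):
        plane = plane_from_points(*rng.sample(points, 3))
        if plane is None:
            continue
        a, b, c, d = plane
        inliers = [i for i, (x, y, z) in enumerate(points) if abs(a * x + b * y + c * z + d) <= threshold]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = plane, inliers
    return best_plane, best_inliers


def euclidean_clusters(points: Sequence[Point], eps: float = 0.15, min_points: int = 5) -> List[List[Point]]:
    """Group points linked by chains of neighbours within `eps`; drop clusters below min_points."""
    unvisited = set(range(len(points)))
    clusters = []
    while unvisited:
        start = unvisited.pop()
        members, frontier = [start], [start]
        while frontier:
            i = frontier.pop()
            near = [j for j in unvisited if math.dist(points[i], points[j]) <= eps]
            unvisited.difference_update(near)
            members.extend(near)
            frontier.extend(near)
        if len(members) >= min_points:
            clusters.append([points[k] for k in members])
    return clusters


def block(x0: float, y0: float) -> List[Point]:
    """Hypothetical 3 x 3 x 3 object sampled at 10 cm, resting 10 cm above the floor."""
    return [(x0 + i * 0.1, y0 + j * 0.1, 0.1 + k * 0.1) for i in range(3) for j in range(3) for k in range(3)]


# Toy scene: a 10 x 10 floor grid (20 cm spacing) plus two separate objects.
floor = [(i * 0.2, j * 0.2, 0.0) for i in range(10) for j in range(10)]
scene = floor + block(0.2, 0.2) + block(1.2, 1.2)

plane, floor_idx = ransac_plane(scene)
floor_set = set(floor_idx)
objects = euclidean_clusters([p for i, p in enumerate(scene) if i not in floor_set])
```

Under this rule, objects that touch or sit closer than `eps` merge into a single cluster, which is one plausible source of the clumping errors described in the conclusion.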

Based on the volumetric data extracted from the segmented point clouds, we developed a script to calculate optimal transportation strategies. This included determining suitable transport modes (e.g., vehicle types) and calculating the number of trips required to transport the scanned items. Material analysis was also incorporated, allowing us to estimate weights based on object classification and material properties.
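A hedged illustration of the volume-to-logistics step: the sketch approximates each segmented object by its axis-aligned bounding box (a conservative upper bound; a convex hull or voxel count would be tighter), estimates weight from an assumed material density, and takes the number of trips as the larger of the volume-limited and weight-limited counts. The density table, vehicle capacities, and fill factor are hypothetical placeholders, not the values used in the workshop script.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]

# Hypothetical density lookup (kg/m^3) keyed by an object's material class.
DENSITY_KG_M3 = {"timber": 500.0, "concrete": 2400.0, "steel": 7850.0}


@dataclass
class Vehicle:
    name: str
    cargo_volume_m3: float
    payload_kg: float
    fill_factor: float = 0.7  # assumed usable share of cargo volume for irregular items


def bbox_volume(points: Sequence[Point]) -> float:
    """Axis-aligned bounding-box volume: an upper bound on the object's true volume."""
    spans = [max(p[i] for p in points) - min(p[i] for p in points) for i in range(3)]
    return spans[0] * spans[1] * spans[2]


def trips_needed(objects: List[Tuple[Sequence[Point], str]], vehicle: Vehicle) -> Tuple[float, float, int]:
    """Return (total volume m^3, estimated weight kg, trips) for one vehicle type."""
    volumes = [bbox_volume(pts) for pts, _ in objects]
    total_volume = sum(volumes)
    # Treating each bounding box as solid material overestimates weight (conservative).
    total_weight = sum(v * DENSITY_KG_M3[mat] for v, (_, mat) in zip(volumes, objects))
    by_volume = math.ceil(total_volume / (vehicle.cargo_volume_m3 * vehicle.fill_factor))
    by_weight = math.ceil(total_weight / vehicle.payload_kg)
    return total_volume, total_weight, max(by_volume, by_weight)


# Two hypothetical 1 m concrete blocks (only the extreme points matter for the bounding box).
blocks = [([(0.0, 0.0, 0.0), (1.0, 1.0, 1.0)], "concrete"),
          ([(3.0, 0.0, 0.0), (4.0, 1.0, 1.0)], "concrete")]
truck = Vehicle("flatbed (hypothetical)", cargo_volume_m3=10.0, payload_kg=3500.0)
volume, weight, trips = trips_needed(blocks, truck)
```

Here weight rather than volume drives the trip count; evaluating several `Vehicle` entries the same way is one way to compare transport modes.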

Additionally, we implemented a basic nesting strategy to optimize how objects could be packed into a container volume for transport. This system accounted for irregular geometries, flagged items that did not fit, and generated warnings to prompt alternative strategies or additional trips.
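A basic version of this check can be sketched as first-fit decreasing: each object’s bounding-box dimensions are compared with the container’s under 90-degree rotations (by matching sorted dimensions), oversized items are flagged with a warning, and the rest are assigned, largest first, to the first load with enough remaining volume. This is a volume-only heuristic that ignores actual 3D placement, so it is an assumption-laden stand-in for the workshop’s nesting strategy, not a reproduction of it; item names and dimensions are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Dims = Tuple[float, float, float]  # bounding-box length, width, height in metres


def fits(item: Dims, container: Dims) -> bool:
    """True if the box fits under some 90-degree rotation: compare sorted dimensions."""
    return all(i <= c for i, c in zip(sorted(item), sorted(container)))


def first_fit_decreasing(items: Dict[str, Dims], container: Dims, fill_factor: float = 0.8):
    """Assign items to loads by volume, largest first; flag items that cannot fit at all."""
    usable = math.prod(container) * fill_factor  # assumed usable volume per load
    loads: List[List[str]] = []
    free: List[float] = []
    flagged: List[str] = []
    for name, dims in sorted(items.items(), key=lambda kv: math.prod(kv[1]), reverse=True):
        if not fits(dims, container):
            flagged.append(f"{name} {dims} exceeds container dimensions: needs another strategy")
            continue
        volume = math.prod(dims)
        for k in range(len(loads)):
            if volume <= free[k]:
                loads[k].append(name)
                free[k] -= volume
                break
        else:
            # No existing load has room: start a new one, i.e. an additional trip.
            loads.append([name])
            free.append(usable - volume)
    return loads, flagged


container = (2.4, 2.0, 2.0)
items = {"crate_a": (1.2, 1.0, 1.0), "crate_b": (2.0, 1.5, 1.0), "crate_c": (2.0, 2.0, 1.5),
         "slab": (2.2, 1.9, 0.5), "drum": (0.6, 0.6, 0.9), "beam": (6.0, 0.3, 0.3)}
loads, flagged = first_fit_decreasing(items, container)
for message in flagged:
    print("WARNING:", message)
```

The number of loads doubles as a trip count for that container, and every flagged item signals that a different vehicle or strategy is needed.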

Conclusion

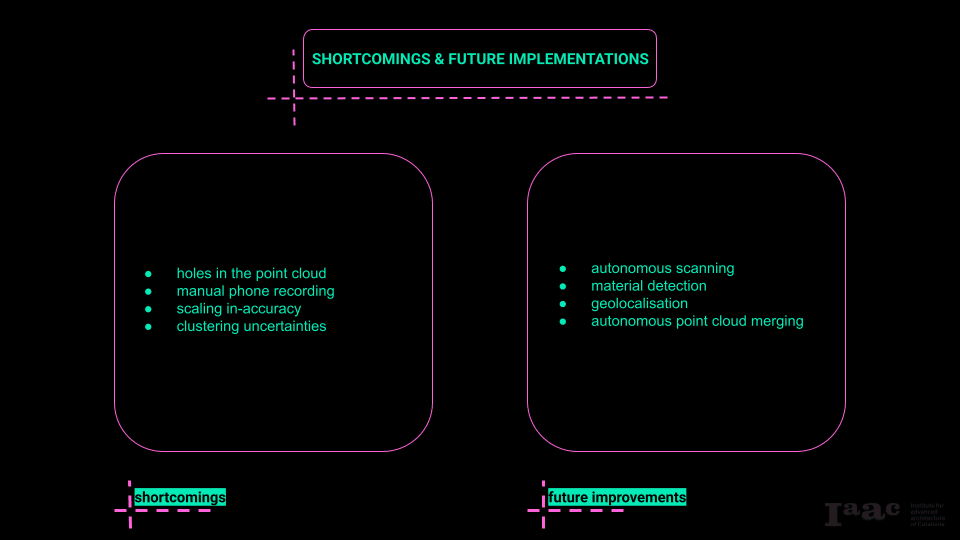

Despite the overall success of the scanning workflow and its integration into a logistical application, several shortcomings became evident throughout the process. One of the most persistent issues was the presence of gaps and holes in the point cloud data. These typically resulted from incomplete coverage during scanning, particularly in areas with complex or occluded geometries that the drone or handheld camera struggled to capture effectively.

Additionally, the manual data acquisition with the phone camera, while helpful in filling some of these gaps, introduced inconsistencies in the dataset. This manual intervention ran counter to the overarching goal of establishing an autonomous robotic scanning system, and it introduced variables such as inconsistent movement, image angles, and varying distances that impacted data quality.

Another significant limitation was the accuracy of the point cloud scaling, which directly affected the reliability of the volumetric calculations. Without calibrated scaling references or ground control points, the precision of measurements was limited, undermining the accuracy of logistical assessments based on these volumes.

Furthermore, the segmentation and clustering processes occasionally produced errors, with discrete objects being inaccurately grouped together. This clumping complicated object differentiation and skewed volume calculations, reducing the reliability of the dataset in logistical planning scenarios.
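The scaling limitation is worth quantifying because the error compounds: if a reconstruction is off by a linear factor s, every length is off by s and every volume by s³, so a 5 % scale error becomes roughly a 16 % volume error. A common remedy is to measure a reference of known length in the cloud and rescale. The sketch below assumes such a reference exists (for example, a tape-measured edge or a pair of markers); the names and numbers are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def scale_factor(ref_a: Point, ref_b: Point, true_length_m: float) -> float:
    """Factor that maps the measured reference distance onto its known real length."""
    measured = math.dist(ref_a, ref_b)
    if measured == 0:
        raise ValueError("reference points coincide")
    return true_length_m / measured


def rescale(points: Sequence[Point], s: float, origin: Point = (0.0, 0.0, 0.0)) -> List[Point]:
    """Uniformly scale a cloud about `origin`."""
    return [tuple(o + s * (c - o) for c, o in zip(p, origin)) for p in points]


# Hypothetical: a 2.00 m reference edge measures 1.90 units in the uncalibrated cloud.
s = scale_factor((0.0, 0.0, 0.0), (1.9, 0.0, 0.0), 2.0)
raw_volume = 3.0                        # volume computed from the unscaled cloud (hypothetical)
corrected_volume = raw_volume * s ** 3  # volumes scale with the cube of the linear factor
```

With a 1.90 versus 2.00 discrepancy, the linear correction is about 5 %, but the volume correction is about 17 %, which is why scale references matter so much for volume-based logistics.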

Looking forward, we identified several areas for improvement that could significantly enhance both the accuracy and autonomy of the system. The most pressing is the implementation of fully autonomous path planning and robotic mobility. Removing the need for manual piloting and handheld scanning would ensure consistent data acquisition, standardize scanning procedures, and reduce human error.

Equally important is the advancement of automated material detection. Integrating machine learning algorithms with sensor data would allow for real-time classification of materials, enabling more accurate weight estimations and improving the precision of logistical calculations.

In addition, incorporating geo-location and spatial referencing, such as GPS or other localization systems, would embed spatial data directly within point clouds. This would not only enhance the utility of the dataset for logistical planning but also open up possibilities for geo-referenced operations and integration with broader site management systems.

Finally, developing autonomous point cloud compositing workflows would address current bottlenecks in post-processing. Automating registration and merging processes would improve point cloud alignment, reduce processing time, and increase the accuracy of the final dataset, ultimately supporting a more robust and scalable scanning-to-logistics pipeline.