Turning Natural Language into Architecture

Designing a house is never just about walls and windows—it’s about translating human stories into spaces that feel alive. Clients describe routines, aspirations, and cultural practices. Architects then face the challenge of turning those narratives into coherent layouts that also respond to site, climate, and context.

Most computational tools fall short. They either generate geometry without meaning or create visuals that look good but don’t actually work. That’s where ThinkSpaceAI comes in.

Why We Built It

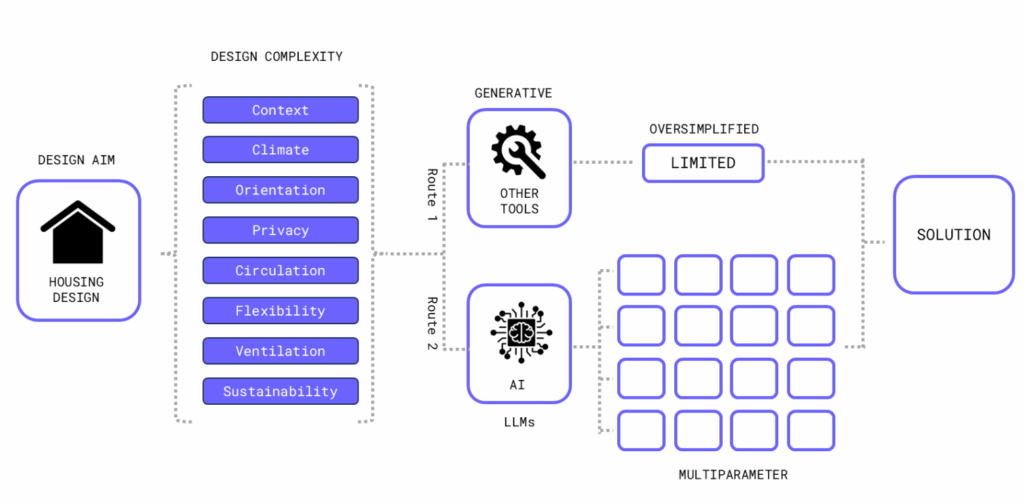

Early-stage housing design is complex. Architects must balance spatial logic, user needs, climate, culture, and site constraints—all from vague conversations. Large Language Models (LLMs) offer a way to bridge this gap: they can interpret natural language, extract spatial programs, and map them into architectural logic.

Our goal with ThinkSpaceAI was simple: to turn narratives into design options that are both meaningful and verifiable.

Motivation

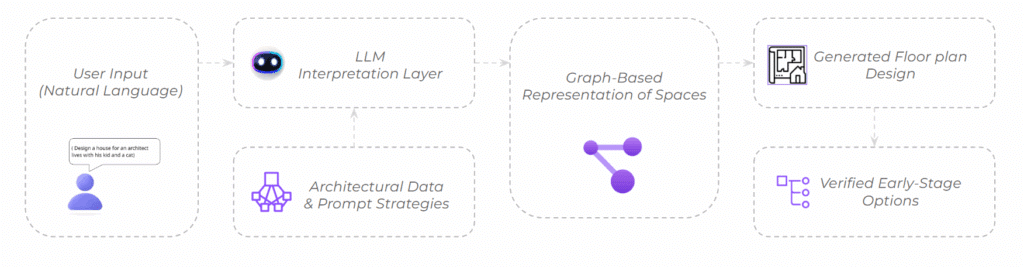

The motivation of this thesis is to explore how natural language can be transformed into structured architectural knowledge for house design. We propose a workflow where user prompts are translated into graph-based spatial representations, which are then converted into floorplans enriched with architectural data. By testing large language models (LLMs) and prompt strategies, this work aims to establish guidelines that bridge free-form input with structured design logic, offering architects meaningful options in the early stages of design.

Problem Statement

- Early-stage house design is complex, requiring architects to balance spatial logic, user needs, and design intent. Existing computational tools either automate geometry or fail to interpret unstructured input, making them less intuitive for design exploration.

- Large language models (LLMs) offer potential to bridge natural language and architectural design, but there is no established workflow that translates prompts into graph-based representations and meaningful floorplans.

- This gap limits the use of LLMs as effective design partners in the conceptual phase.

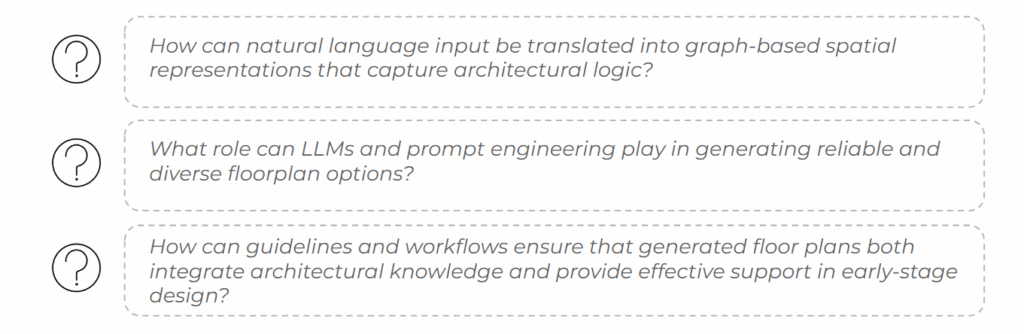

Research Questions

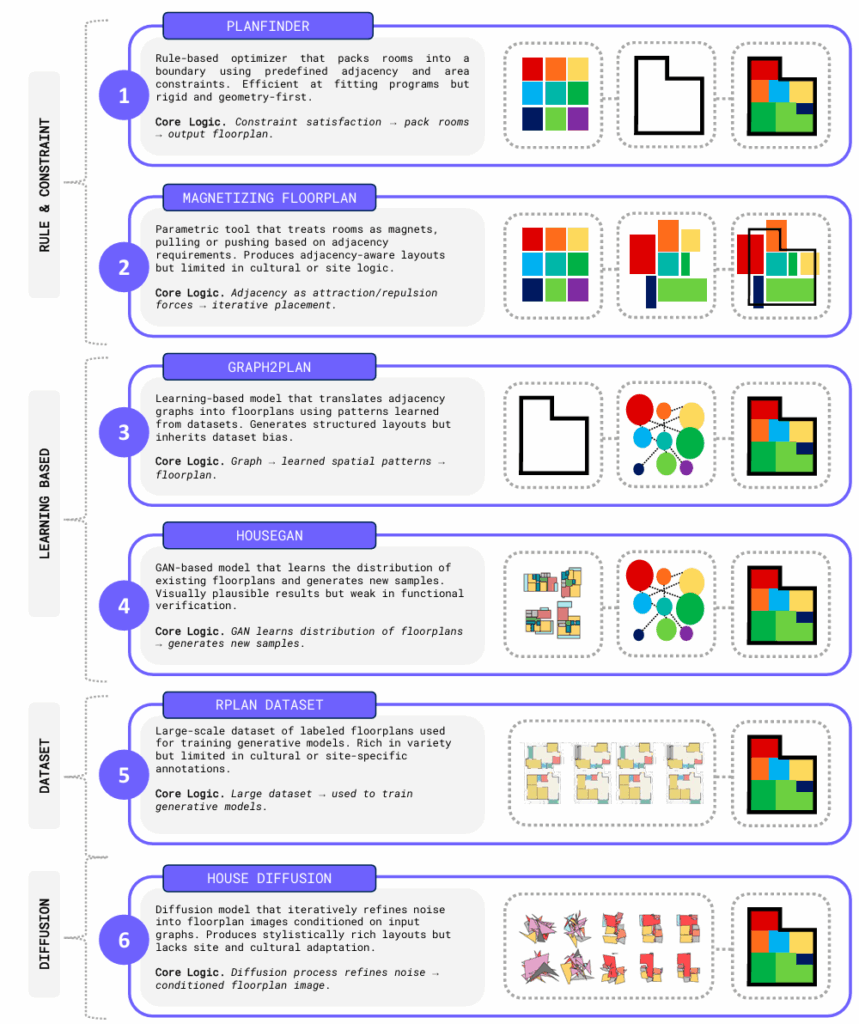

Background & State of the Art

Computational tools have long promised to augment architectural practice by accelerating design exploration and automating repetitive tasks. Early-stage housing design, however, poses unique challenges: it requires balancing user needs, site-specific constraints, and cultural expectations, all while maintaining architectural coherence.

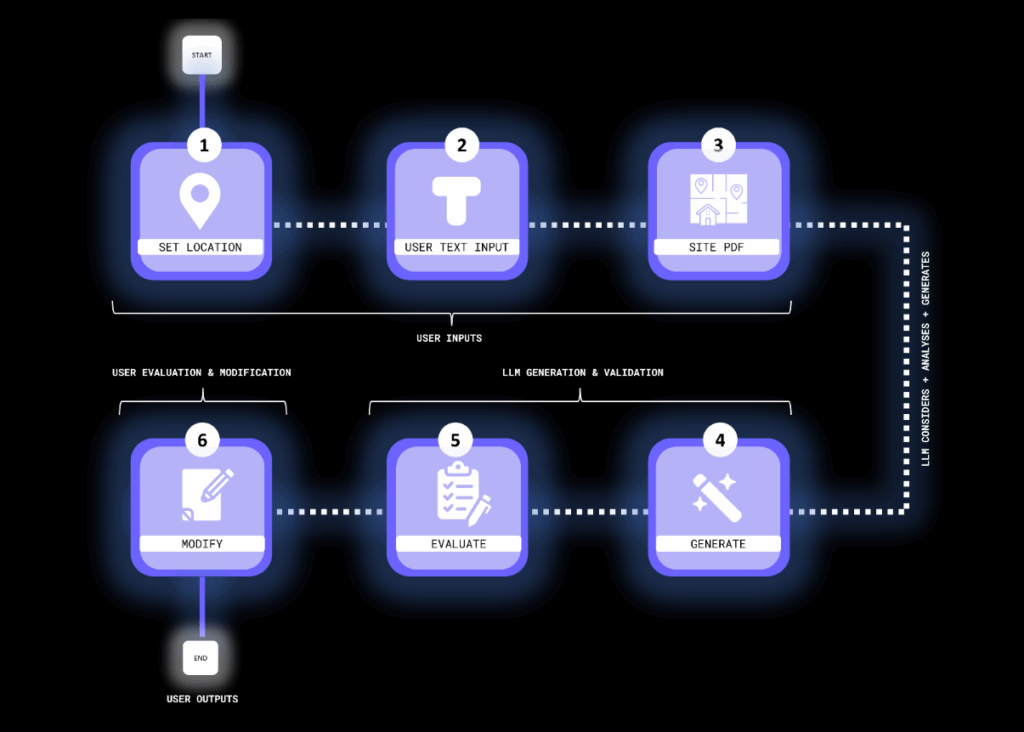

How It Works

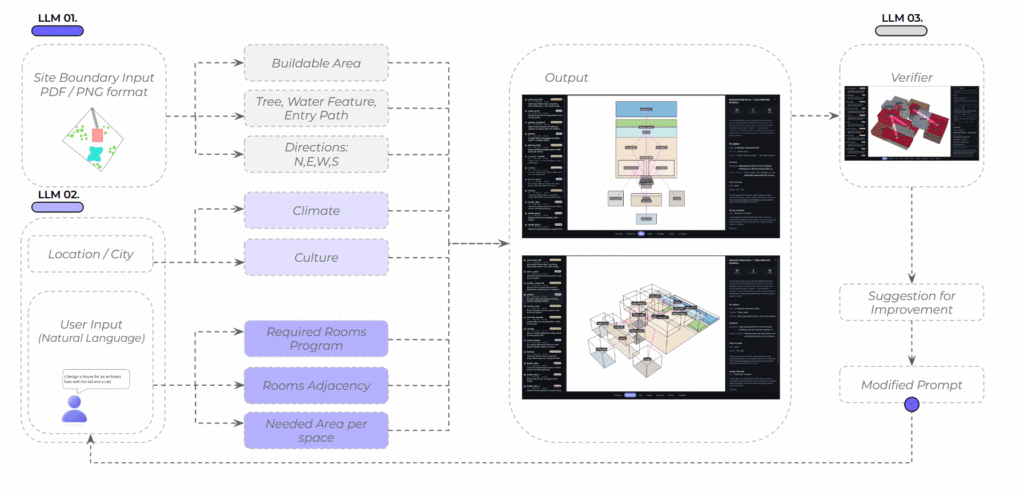

We developed a multi-agent pipeline that unfolds in layers:

- Site Analyst – Reads site boundaries, climate, and environmental data.

- Program Architect – Translates user stories into a graph of rooms, adjacencies, and circulation.

- Design Generator – Produces floorplans and 3D massing.

- Verifier – Uses graph analysis to check circulation, privacy, and coherence.

The result is a system that doesn’t just “draw plans” but reasons about them.
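The four stages above can be read as a chain in which each agent adds to a shared design state. The sketch below is a minimal illustration under that assumption, not the ThinkSpaceAI implementation: the `DesignState` fields, the stage functions, and the hard-coded room graph are hypothetical stand-ins for what the LLM agents would produce. The verifier step shows one concrete graph check, a breadth-first search that confirms every room can be reached from the entry.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class DesignState:
    """Shared record that each stage reads from and adds to (hypothetical)."""
    brief: str
    site: dict = field(default_factory=dict)
    adjacency: dict = field(default_factory=dict)  # room -> set of neighbours
    rooms_to_place: list = field(default_factory=list)
    issues: list = field(default_factory=list)


def site_analyst(state):
    # Stand-in for reading a site boundary file and climate data.
    state.site = {"entry_side": "south", "climate": "temperate"}
    return state


def program_architect(state):
    # Stand-in for an LLM call that turns the brief into a room graph.
    edges = [("entry", "living"), ("living", "kitchen"),
             ("living", "hall"), ("hall", "bedroom"), ("hall", "bathroom")]
    for a, b in edges:
        state.adjacency.setdefault(a, set()).add(b)
        state.adjacency.setdefault(b, set()).add(a)
    return state


def design_generator(state):
    # Stand-in for floorplan and massing generation; it only lists the rooms.
    state.rooms_to_place = sorted(state.adjacency)
    return state


def reachable_rooms(adjacency, start):
    """Breadth-first search over the room graph."""
    seen, queue = {start}, deque([start])
    while queue:
        room = queue.popleft()
        for neighbour in adjacency.get(room, ()):
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append(neighbour)
    return seen


def verifier(state):
    # Circulation check: every room must be reachable from the entry.
    unreachable = set(state.adjacency) - reachable_rooms(state.adjacency, "entry")
    state.issues = [f"{room} is not reachable from entry"
                    for room in sorted(unreachable)]
    return state


def run_pipeline(brief):
    state = DesignState(brief=brief)
    for stage in (site_analyst, program_architect, design_generator, verifier):
        state = stage(state)
    return state
```

Keeping the room graph as a plain adjacency dictionary means the verifier needs nothing beyond the standard library; privacy or coherence checks could be added as further functions over the same structure.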

Testing the Framework

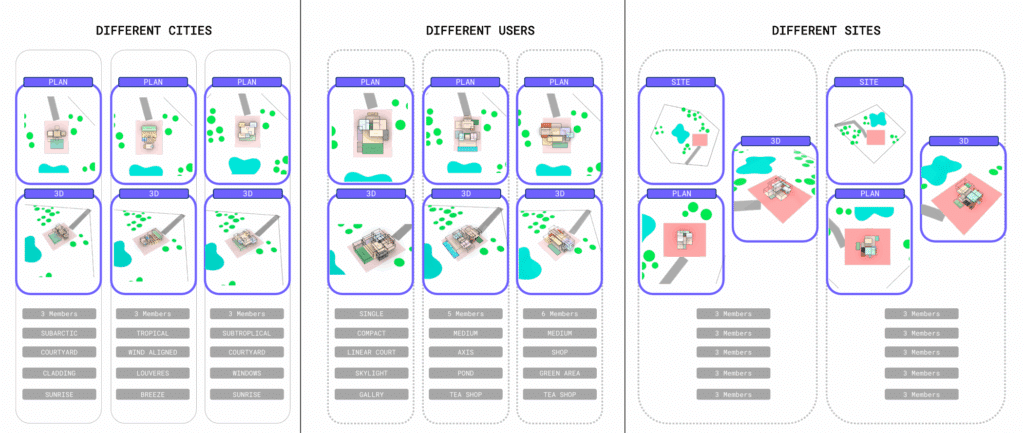

We tested ThinkSpaceAI across 400 housing scenarios:

- 21 cities (different climates and cultures)

- 21 user archetypes (from single professionals to large families)

- 10 site typologies (compact lots, flag lots, courtyards, and more)

The results? Layouts that adapt meaningfully to both context and culture, as the following comparisons show.

Adapting to Climate, Users, and Sites

We tested ThinkSpaceAI’s adaptability across climates, users, and site conditions.

- Climate: In Helsinki, designs emphasized insulation, winter sun courtyards, and fire pits. In Singapore, layouts prioritized ventilation, shading, and rainwater harvesting. In Mexico City, passive solar gain and cultural courtyards dominated. The same program produced entirely different, context-driven results.

- Users: In Istanbul, a single artist’s home became a compact linear layout with skylights and work-live integration, while larger families generated more expansive, community-centered plans. The framework adapts to professional roles, family structures, and cultural practices.

- Sites: In Beijing, two identical users on different plots produced distinct results. A south-entry site created a layered courtyard sequence, while a west-entry site shifted adjacencies and thresholds. Identical requirements yielded different spatial organizations, demonstrating the system’s sensitivity to orientation and site geometry.

Together, these tests show how the framework doesn’t just generate layouts—it thinks contextually, producing designs shaped by climate, people, and place.

What We Learned

- Do’s: Layer inputs gradually—site + user + culture + climate—to enrich outputs.

- Don’ts: Don’t let cultural prompts dominate (they bias results), don’t force layout shapes too early, and don’t trust material suggestions blindly.

- Takeaway: LLMs are powerful, but they need structured workflows and verification to be useful architectural partners.

Next, we turn to our gallery output.

Difficulties & Successes

During the process, we faced challenges such as overlapping rooms and disconnected layouts. To address this, we introduced validation and adjacency rules. These checks improved spatial consistency, so that the generated floorplans are both connected and architecturally coherent.
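To make the two kinds of rule concrete, here is a minimal sketch that assumes rooms are axis-aligned rectangles. The `Room` class, the tolerance, and the 0.8 m minimum shared-wall length (roughly one door width) are illustrative assumptions, not values taken from ThinkSpaceAI.

```python
from dataclasses import dataclass
from itertools import combinations

TOL = 1e-6  # tolerance for comparing floating-point coordinates


@dataclass(frozen=True)
class Room:
    name: str
    x: float      # left edge
    y: float      # bottom edge
    width: float
    depth: float

    @property
    def x2(self):
        return self.x + self.width

    @property
    def y2(self):
        return self.y + self.depth


def overlaps(a, b):
    """True if the interiors of two rooms intersect; touching edges are fine."""
    return (a.x < b.x2 - TOL and b.x < a.x2 - TOL and
            a.y < b.y2 - TOL and b.y < a.y2 - TOL)


def shares_wall(a, b, min_length=0.8):
    """True if two rooms touch along an edge at least min_length long."""
    x_overlap = min(a.x2, b.x2) - max(a.x, b.x)
    y_overlap = min(a.y2, b.y2) - max(a.y, b.y)
    side_by_side = abs(a.x2 - b.x) < TOL or abs(b.x2 - a.x) < TOL
    stacked_in_plan = abs(a.y2 - b.y) < TOL or abs(b.y2 - a.y) < TOL
    return ((side_by_side and y_overlap >= min_length) or
            (stacked_in_plan and x_overlap >= min_length))


def validate(rooms, required_adjacencies):
    """Return a list of human-readable rule violations."""
    by_name = {room.name: room for room in rooms}
    issues = [f"{a.name} overlaps {b.name}"
              for a, b in combinations(rooms, 2) if overlaps(a, b)]
    issues += [f"{p} and {q} should share a wall"
               for p, q in required_adjacencies
               if not shares_wall(by_name[p], by_name[q])]
    return issues


rooms = [
    Room("living", 0, 0, 5, 4),
    Room("kitchen", 5, 0, 3, 4),
    Room("bedroom", 4, 3, 3, 3),  # deliberately misplaced
]
print(validate(rooms, [("living", "kitchen"), ("kitchen", "bedroom")]))
```

Returning a list of violations rather than a single pass/fail flag lets the messages be fed back into a regeneration prompt or shown to the architect.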

On the success side, the framework aligned the two-story house in 2D and 3D, connected the two floors properly with the staircase, stacked the two bathrooms on top of each other for plumbing efficiency, and oriented the terrace toward the water feature on site. The workflow therefore makes the generated designs more consistent with architectural standards and real-world site conditions.
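A cross-floor condition such as stacked bathrooms can be expressed as a footprint comparison. The following is a hedged sketch only: the `(x, y, width, depth)` tuple format, the shared plan coordinates, and the 0.8 threshold are assumptions made for illustration.

```python
def footprint_overlap_ratio(lower, upper):
    """Fraction of the smaller footprint that is covered by the other one.

    Each footprint is (x, y, width, depth) in plan coordinates that are
    shared by both floors.
    """
    lx, ly, lw, ld = lower
    ux, uy, uw, ud = upper
    dx = min(lx + lw, ux + uw) - max(lx, ux)
    dy = min(ly + ld, uy + ud) - max(ly, uy)
    if dx <= 0 or dy <= 0:
        return 0.0  # the footprints do not intersect in plan
    return (dx * dy) / min(lw * ld, uw * ud)


def is_stacked(lower, upper, threshold=0.8):
    """True if two rooms on different floors are roughly above each other."""
    return footprint_overlap_ratio(lower, upper) >= threshold


ground_bath = (6.0, 0.0, 2.0, 2.5)
upper_bath = (6.0, 0.0, 2.0, 3.0)
print(is_stacked(ground_bath, upper_bath))
```

Normalizing by the smaller footprint means a compact bathroom sitting fully under a larger one still counts as stacked.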

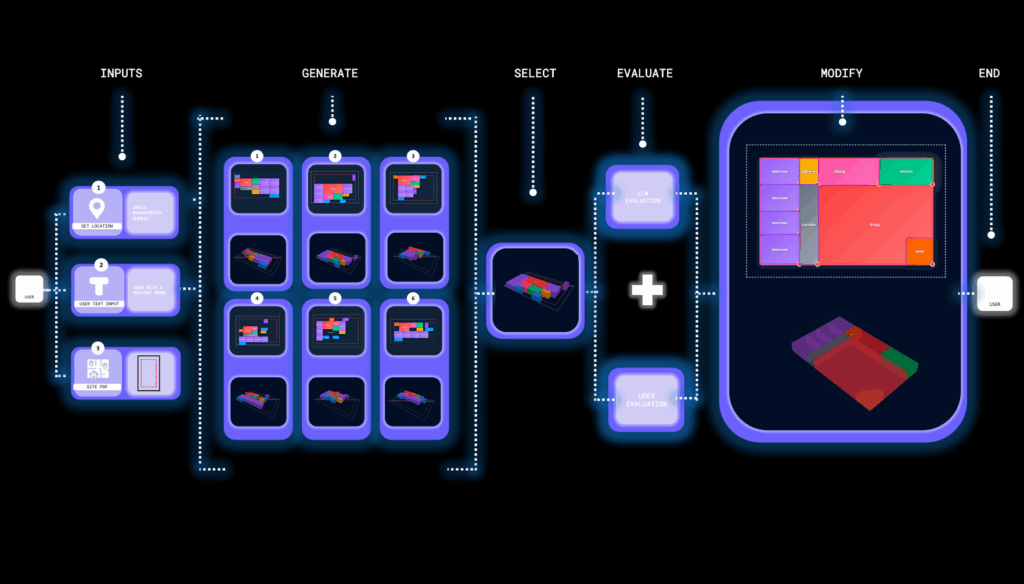

The Tool: ThinkSpaceAI

We wrapped this into an interactive copilot tool for architects:

- Input: location, site file, and natural language description.

- Backend: multi-LLM pipeline generates options and checks them.

- Output: floorplans, massing, adjacency graphs—ready for editing.

Architects can browse design galleries, compare layouts across contexts, and even edit designs directly in 2D and 3D. It’s fast, flexible, and keeps humans in control.

Our copilot tool guides architects through an interactive design workflow. It begins with simple inputs—location, natural language requirements, and a site boundary file. From there, the system generates multiple design options, each combining spatial diagrams and 3D massing. The user selects a preferred option, which is then evaluated collaboratively: the LLM checks adjacency, connectivity, and spatial logic, while the architect reviews functionality and intent. Finally, the layout can be modified directly—adjusting room sizes, moving spaces, or adding new ones—with instant updates in both 2D and 3D. The result is an adaptable, coherent design that reflects architectural rules while keeping the architect in control.

Why It Matters

ThinkSpaceAI isn’t about replacing architects. It’s about giving them a thinking partner in the earliest stages of design—where ideas are fluid, options are endless, and decisions have the biggest impact.

What we’ve seen is that the model can indeed produce plausible and often inspiring outputs, but not with full consistency or precision. Spatial reasoning is improving, yet biases from context—like location, climate, or cultural references—remain, and geometry isn’t always reliable. These tools, therefore, shouldn’t be seen as standalone designers, but as catalysts for exploration. Their real strength lies in speeding up the early stages of design, opening new possibilities, while still requiring validation and refinement by us as architects.

We’d like to close with the thought that design can now begin with something as simple as a sentence: from a single line of text to an entire architectural concept in minutes.

Conclusion & Future Work

ThinkSpaceAI shows how natural language can be transformed into architecturally sound designs using a multi-LLM, graph-driven workflow. The system adapts to climate, culture, site, and user needs while maintaining spatial logic and functional relationships. Comparative tests across cities, users, and sites confirmed its versatility and practical potential.

At its core, the framework combines graph analysis, validation rules, and multi-stage LLM verification, ensuring quality while keeping the process accessible through a natural language interface.

Still, challenges remain—cultural prompts can default to stereotypes, 3D reasoning in multi-story layouts needs improvement, and reliance on predefined site boundaries limits flexibility.

Future work points to five directions:

- Cultural intelligence: richer, contemporary datasets to avoid stereotypes.

- Multi-modal inputs: site analysis from imagery and GIS.

- Spatial reasoning: stronger 3D and BIM integration.

- Performance: real-time energy and comfort optimization.

- Collaboration & compliance: multi-user workflows and automated code checks.

In short, ThinkSpaceAI is a step toward AI as a true design partner—bridging ideas and architecture from a single sentence.